- The paper introduces a formal framework treating belief updating as a trade-off between belief utility and variational costs measured by KL divergence.

- It demonstrates how parameters like conservatism (λ) and likelihood weighting (α) modulate sensitivity to evidence, leading to confirmation bias and attitude polarization.

- The model implies practical strategies, such as incremental information exposure, to reduce the cognitive and social costs associated with belief revision.

Introduction and Theoretical Motivation

This paper presents a formal framework for modeling belief updating as a resource-rational, variational process, integrating cognitive, pragmatic, and social costs. The central thesis is that deviations from Bayesian rationality—such as confirmation bias, motivated reasoning, and attitude polarization—are not mere cognitive flaws but adaptive responses to the nontrivial costs associated with belief revision. The framework quantifies these costs using the Kullback-Leibler (KL) divergence between prior and posterior beliefs, representing the informational "work" required for belief state transitions. This approach is situated within the context of variational inference, the Free Energy Principle (FEP), and Active Inference (AIF), extending these paradigms to account for agents' preferences over their own beliefs and the pragmatic affordances of belief states.

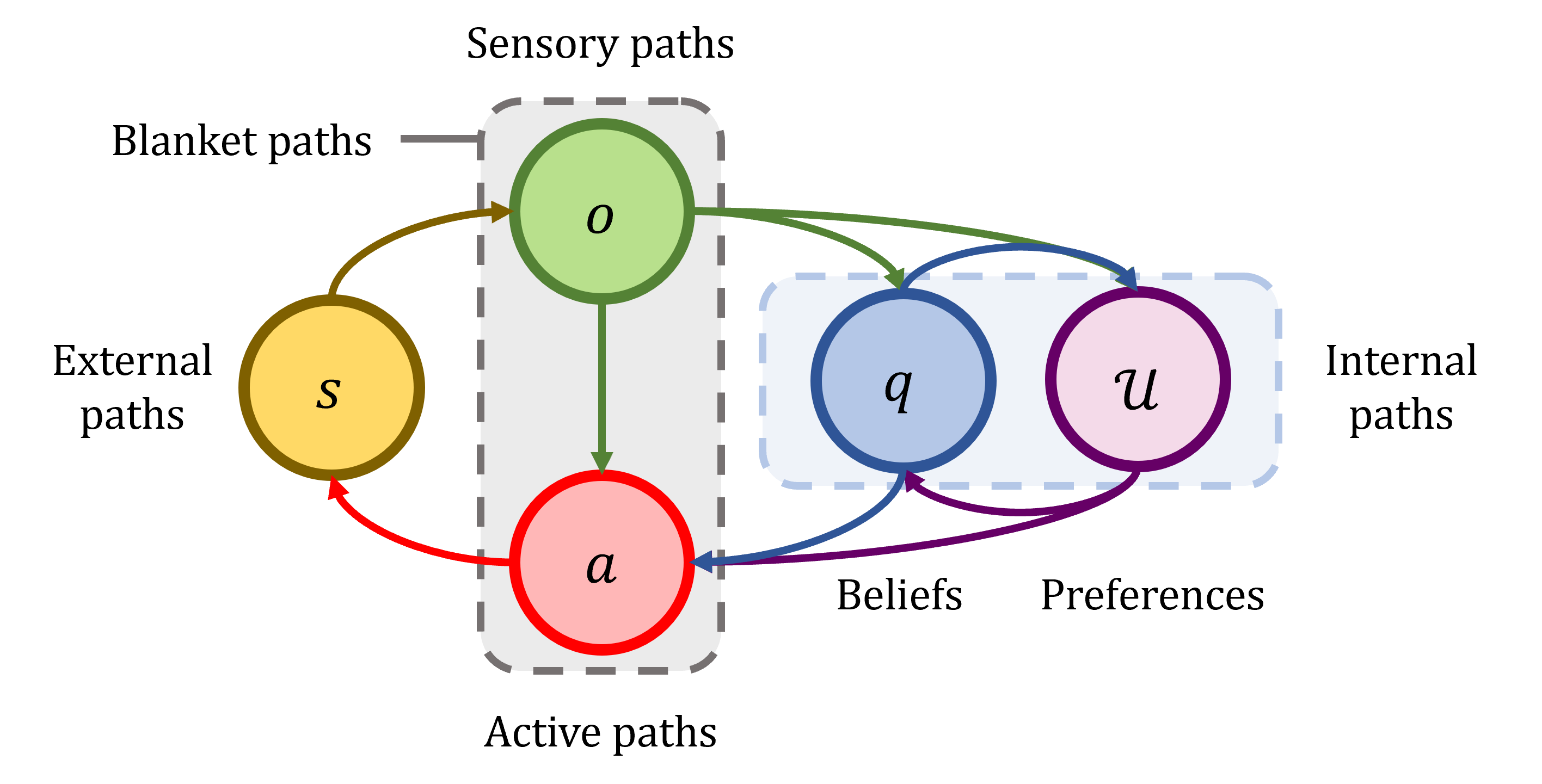

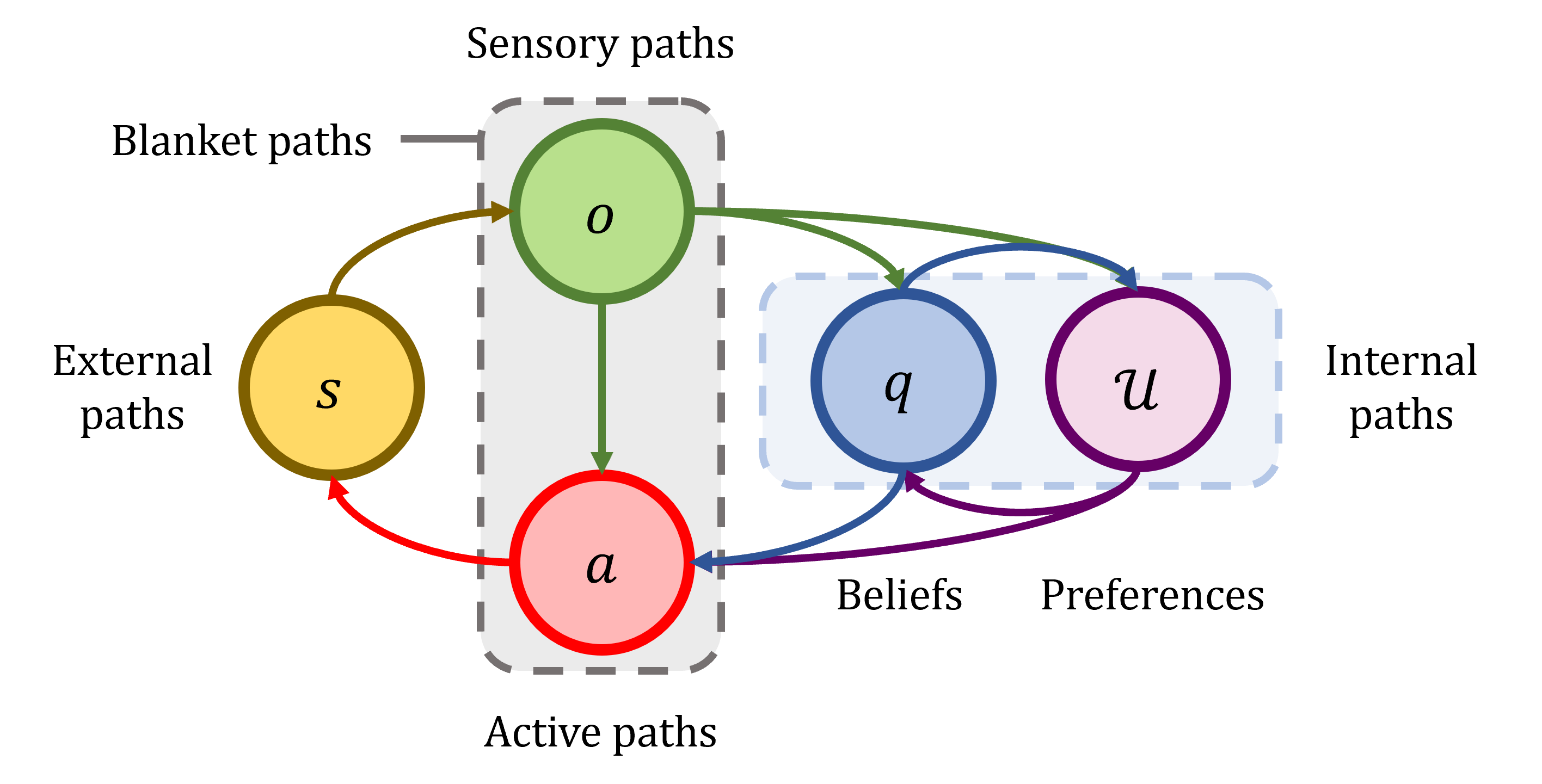

Figure 1: Causal structure of agent-environment interactions, highlighting the Markov blanket and the mutual influence of beliefs and preferences on active and sensory paths.

The model posits that belief updating is driven by a trade-off between belief utility (which may include affective, epistemic, and social components) and the complexity cost of deviating from the prior. This is formalized as a variational objective functional, with tunable parameters controlling the relative weight of utility and cost. The framework is capable of emulating a range of human belief updating phenomena, including confirmation bias, selective evidence search, and attitude polarization.

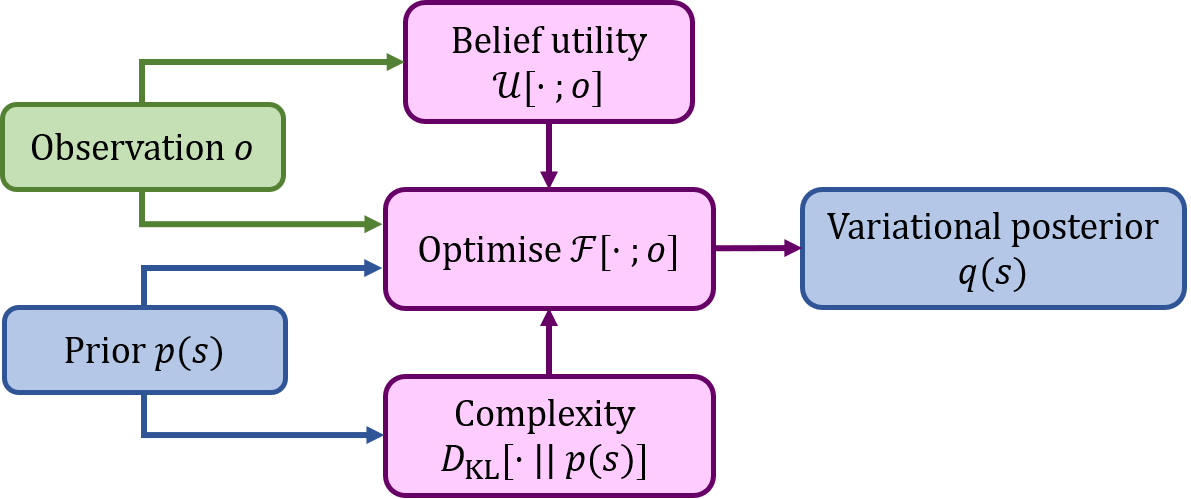

The belief updating process is cast as the maximization of a variational objective:

$$F[q(s), o] = U[q(s), o] - \lambda\, \mathrm{KL}\big(q(s) \,\|\, p(s)\big)$$

where U[q(s),o] is the belief utility functional, λ is the conservatism parameter, and KL(q(s)∥p(s)) quantifies the informational cost of belief change. The utility functional can be decomposed into affective and epistemic components:

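$$U[q(s), o] = U_{\mathrm{aff}}[q(s), o] + \alpha\, \mathbb{E}_{q(s)}\big[\log p(o \mid s)\big]$$

where U_aff is the affective component of the utility and α controls the weight assigned to evidence-based accuracy.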
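To make the trade-off concrete, the following is a minimal sketch that evaluates the objective for discrete beliefs. It assumes a linear affective utility, the sum over states of q(s)·c_s, and strictly positive likelihoods; the function names and the numbers are illustrative and not taken from the paper.

```python
import math

def kl_divergence(q, p):
    """KL(q || p) for discrete distributions given as equal-length lists."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def objective(q, prior, likelihood, valence, lam, alpha):
    """F = sum_s q(s) c_s + alpha * E_q[log p(o|s)] - lam * KL(q || prior).

    Assumption: the affective utility is linear in q with valences c_s.
    """
    affective = sum(qi * ci for qi, ci in zip(q, valence))
    epistemic = sum(qi * math.log(li) for qi, li in zip(q, likelihood) if qi > 0)
    return affective + alpha * epistemic - lam * kl_divergence(q, prior)

prior = [0.5, 0.5]
likelihood = [0.2, 0.8]   # p(o | s) for the observed o; favors s = 1
valence = [1.0, 0.0]      # hypothetical agent that prefers believing s = 0
bayes = [0.2, 0.8]        # Bayesian posterior for this prior and likelihood

# Objective when the agent keeps its prior vs. moves to the Bayesian posterior.
stay = objective(prior, prior, likelihood, valence, lam=1.0, alpha=1.0)
move = objective(bayes, prior, likelihood, valence, lam=1.0, alpha=1.0)
print(f"stay at prior: {stay:.4f}, move to Bayes: {move:.4f}")
```

In this toy setting the agent scores higher by keeping its prior than by adopting the Bayesian posterior, because moving gives up preferred belief mass and also pays the KL cost.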

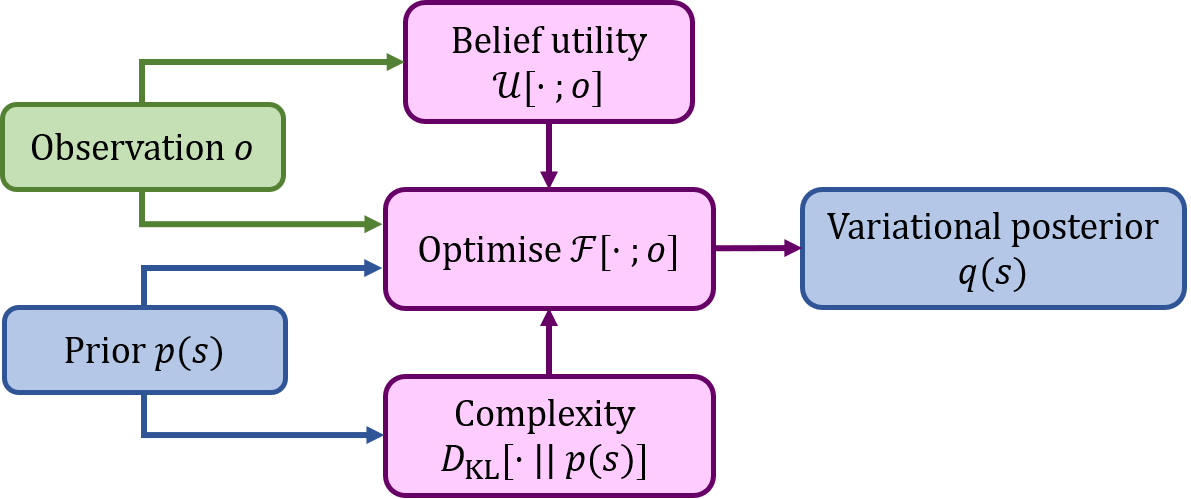

Figure 2: Schematic of the belief updating mechanism, balancing prior beliefs, observations, utility, and complexity cost.

Analytically, for linear affective utilities, the optimal posterior is given by:

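$$q^*(s) = \frac{1}{Z(o)}\, p(s) \exp\!\Big(\lambda^{-1}\big(c_s + \alpha \log p(o \mid s)\big)\Big)$$

where c_s encodes the valence of believing state s, and Z(o) = Σ_s p(s) exp(λ⁻¹(c_s + α log p(o∣s))) is the partition function ensuring normalization.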
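The closed form follows from a standard Lagrangian argument. The sketch below assumes a discrete state space and the linear affective utility Σ_s q(s) c_s; the multiplier μ is notation introduced here to enforce normalization.

$$
\begin{aligned}
\mathcal{L}[q] &= \sum_s q(s)\big(c_s + \alpha \log p(o \mid s)\big) - \lambda \sum_s q(s) \log\frac{q(s)}{p(s)} + \mu\Big(\sum_s q(s) - 1\Big) \\
\frac{\partial \mathcal{L}}{\partial q(s)} &= c_s + \alpha \log p(o \mid s) - \lambda\Big(\log\frac{q(s)}{p(s)} + 1\Big) + \mu = 0 \\
\Rightarrow\quad q^*(s) &\propto p(s)\,\exp\!\Big(\lambda^{-1}\big(c_s + \alpha \log p(o \mid s)\big)\Big)
\end{aligned}
$$

Normalizing over s supplies the factor 1/Z(o). Substituting q* back into the objective shows that its maximized value is λ log Z(o).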

This formulation generalizes standard variational inference and active inference, allowing explicit modeling of motivational and social factors in belief change: setting c_s = 0 and α = λ = 1 recovers Bayes' rule, q*(s) ∝ p(s) p(o∣s). The model's parameters (λ, α, c_s) can be tuned to simulate different agent profiles and updating behaviors.
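As an illustration, the closed-form posterior for linear affective utilities can be computed directly. This is a minimal sketch for discrete states with strictly positive likelihoods; `optimal_posterior` and the three agent profiles are hypothetical names and settings chosen for the example.

```python
import math

def optimal_posterior(prior, likelihood, valence, lam, alpha):
    """q*(s) proportional to p(s) * exp((c_s + alpha * log p(o|s)) / lam)."""
    weights = [p * math.exp((c + alpha * math.log(l)) / lam)
               for p, l, c in zip(prior, likelihood, valence)]
    z = sum(weights)  # partition function Z(o)
    return [w / z for w in weights]

prior = [0.5, 0.5]
likelihood = [0.2, 0.8]  # p(o | s); the observation favors s = 1

# No valence and alpha = lam = 1: the formula reduces to Bayes' rule.
bayesian = optimal_posterior(prior, likelihood, [0.0, 0.0], lam=1.0, alpha=1.0)
# Preference for s = 0 pulls the posterior back toward the preferred state.
motivated = optimal_posterior(prior, likelihood, [1.0, 0.0], lam=1.0, alpha=1.0)
# Higher conservatism keeps the posterior closer to the prior.
conservative = optimal_posterior(prior, likelihood, [0.0, 0.0], lam=5.0, alpha=1.0)

for name, q in [("bayesian", bayesian), ("motivated", motivated),
                ("conservative", conservative)]:
    print(name, [round(x, 3) for x in q])
```

For very small λ the exponent can overflow; a production version would subtract the maximum exponent before exponentiating.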

Empirical Simulations and Key Results

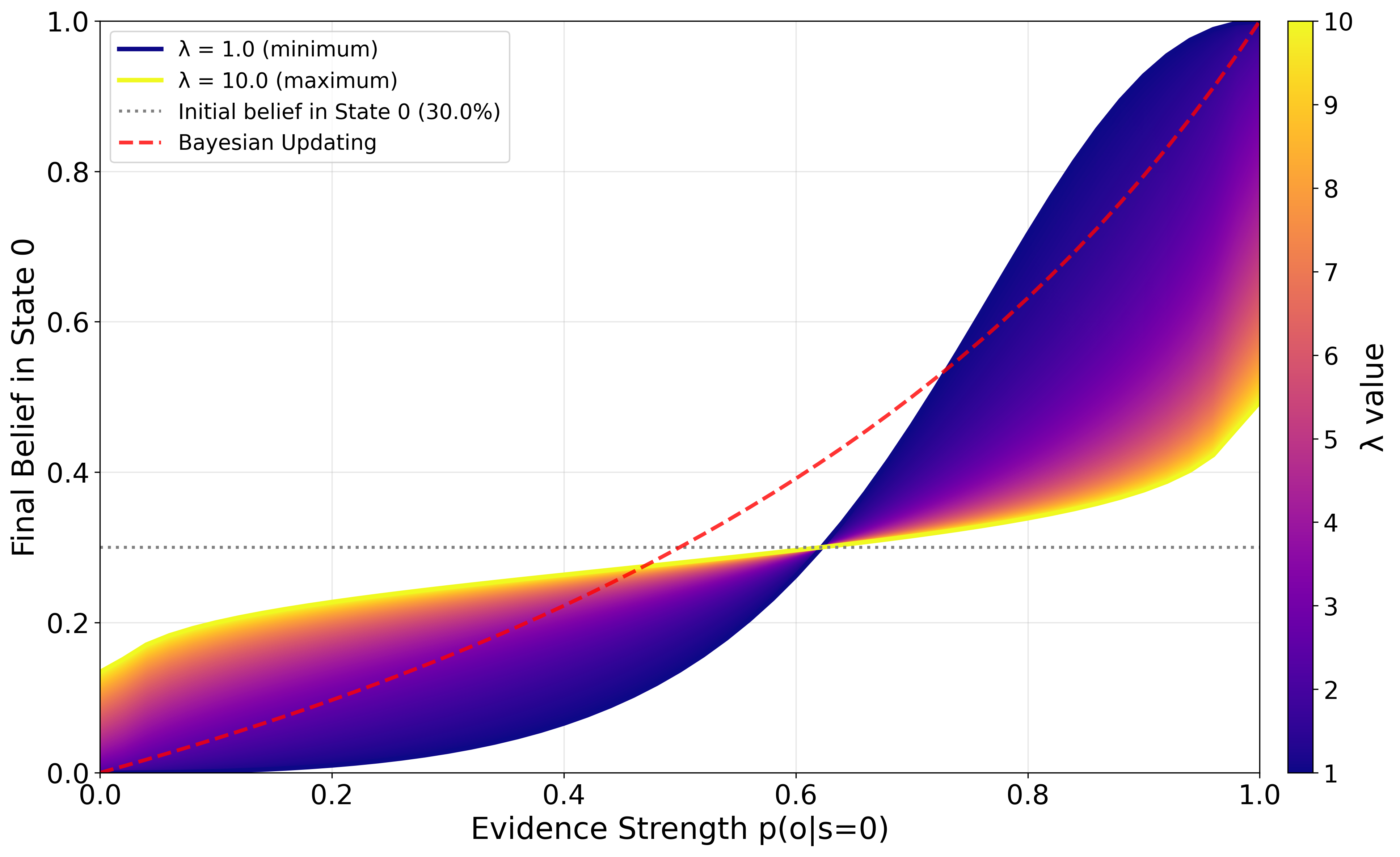

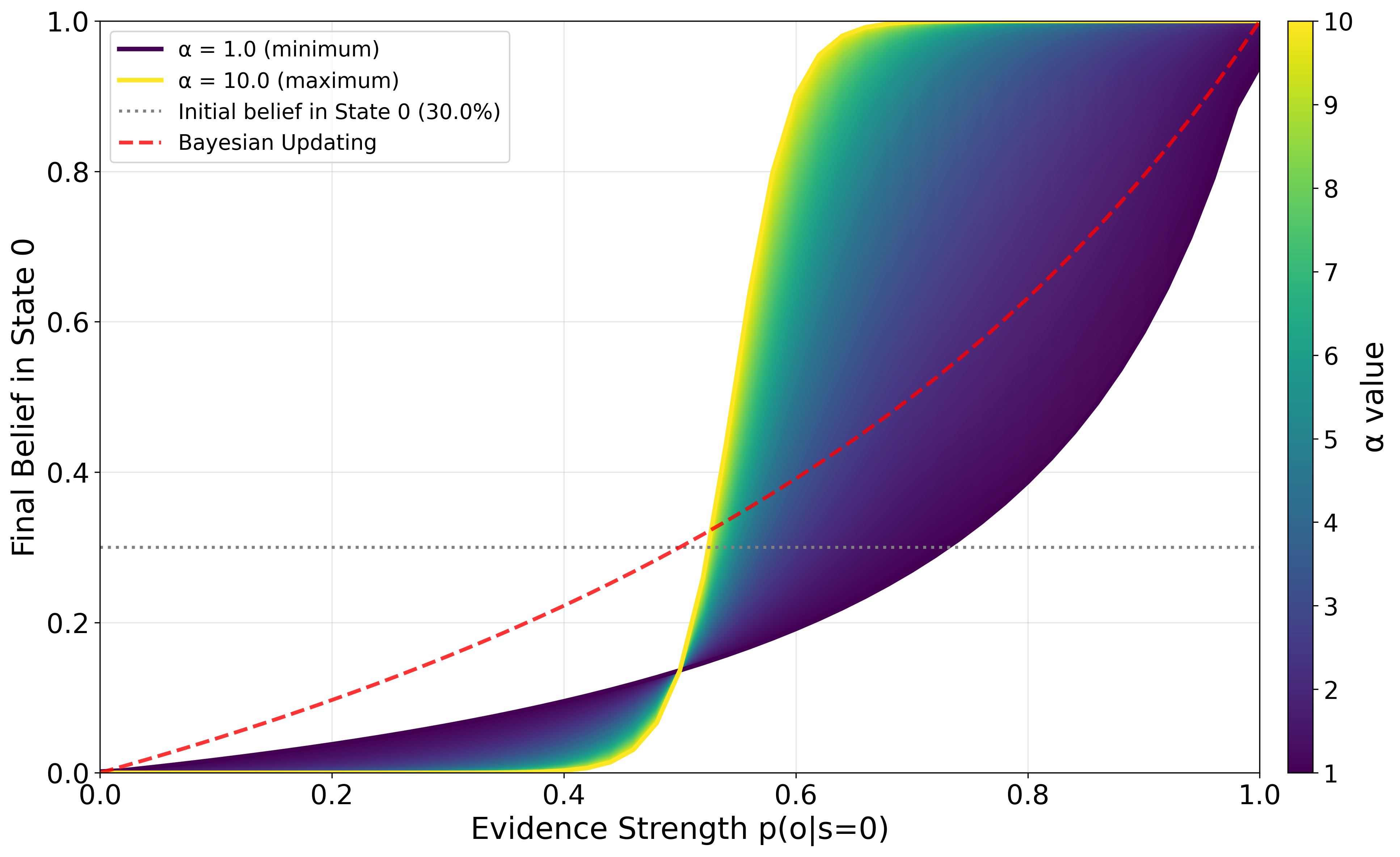

Sensitivity to Evidence and Conservatism

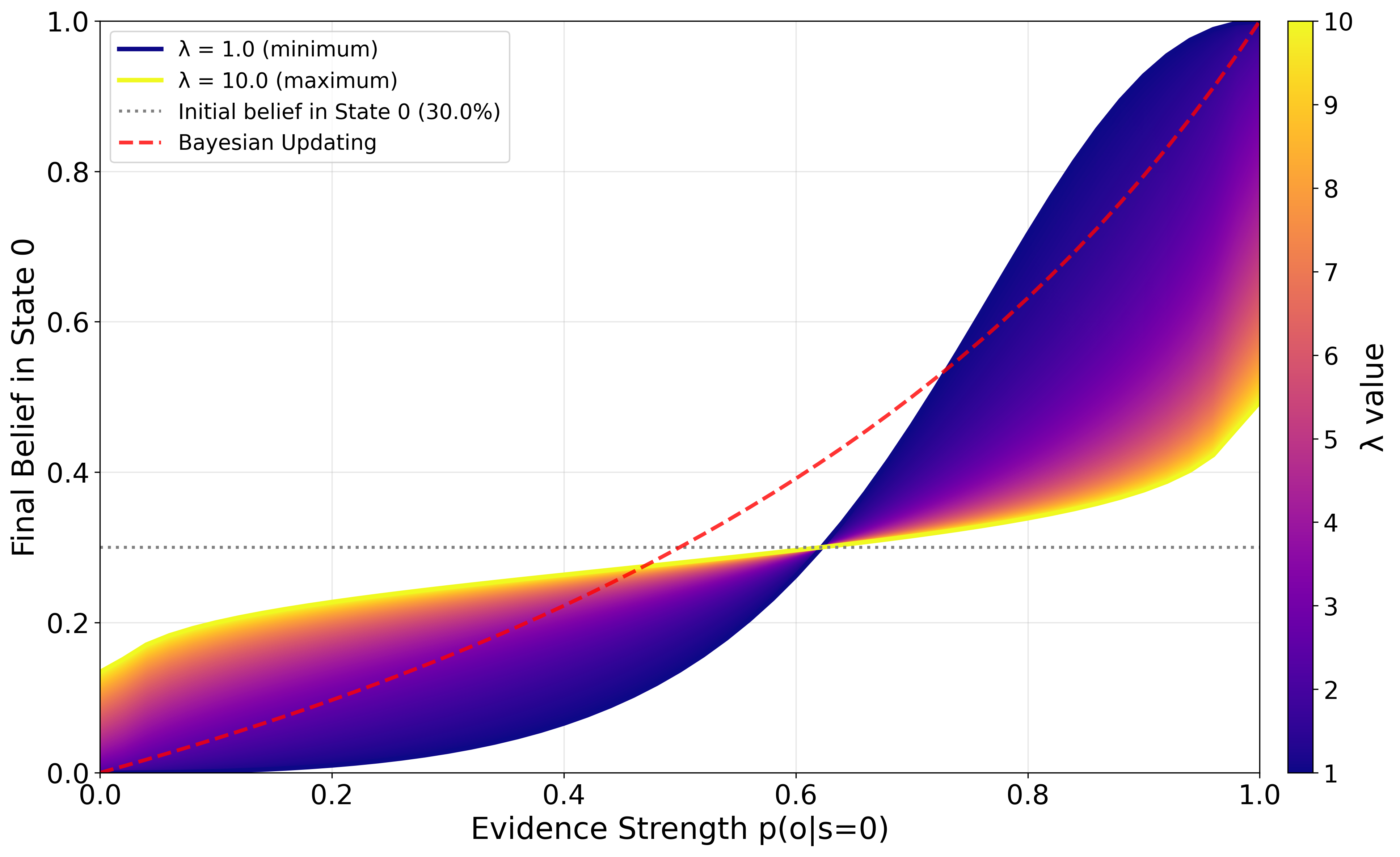

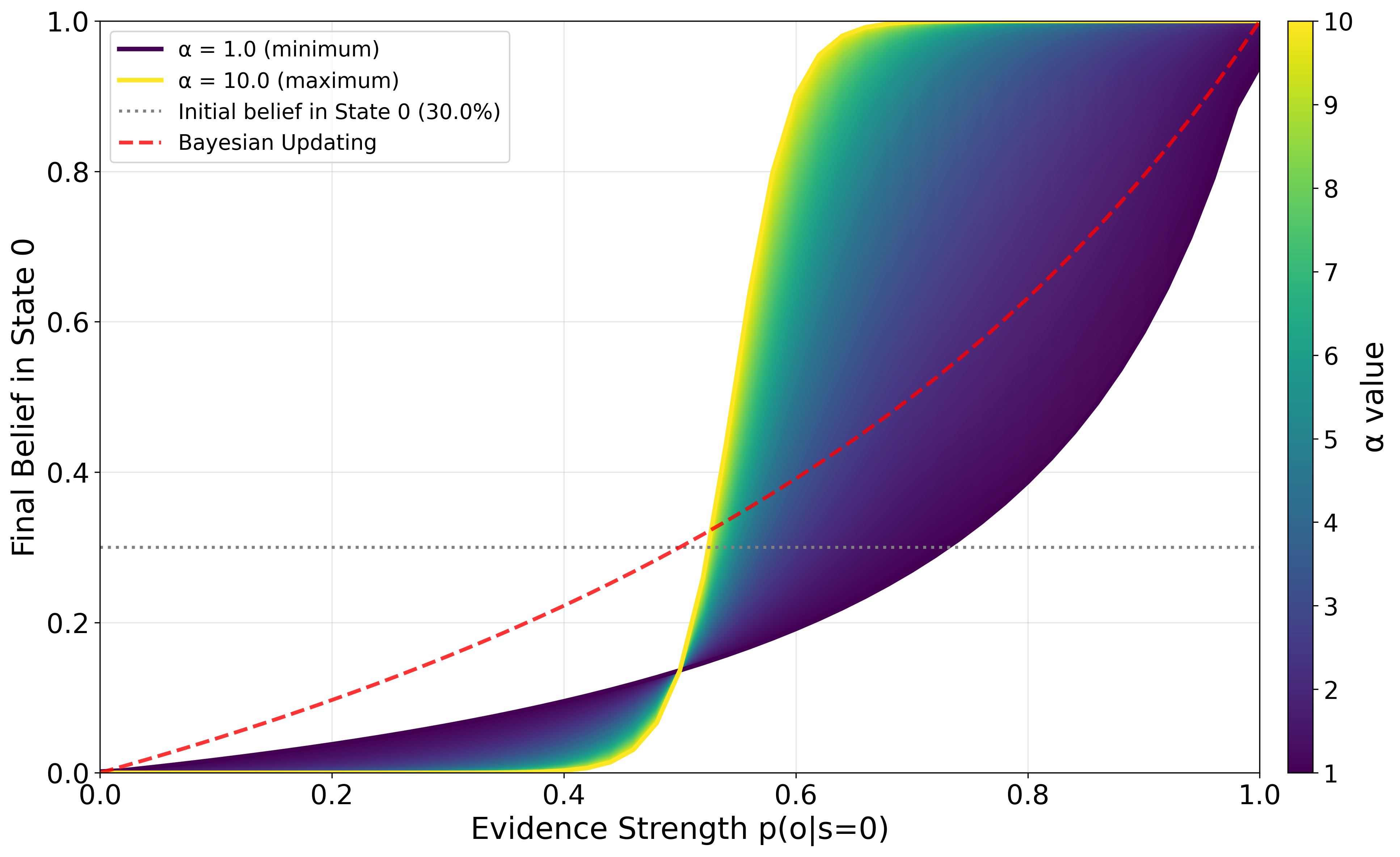

Simulations demonstrate that agents with higher conservatism (λ) exhibit greater inertia, updating beliefs less in response to new evidence, while agents with higher likelihood weighting (α) are more sensitive to evidence and less influenced by affective utility.

Figure 3: Final belief q(s=0) as a function of evidence strength, for varying λ (left) and α (right).
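The qualitative pattern can be sketched with the closed-form posterior for two states. The code below is an illustrative sweep, not the paper's simulation code: the evidence parameterization (`strength` as p(o∣s=1), with p(o∣s=0) = 1 − strength), the valences, and the parameter grids are all assumptions.

```python
import math

def belief_s0(strength, lam, alpha, valence=(1.0, 0.0), prior=(0.5, 0.5)):
    """q*(s=0) when the observation favors s=1 with strength in (0.5, 1)."""
    likelihood = (1.0 - strength, strength)
    weights = [p * math.exp((c + alpha * math.log(l)) / lam)
               for p, l, c in zip(prior, likelihood, valence)]
    return weights[0] / sum(weights)

strengths = [0.6, 0.7, 0.8, 0.9]

print("varying lambda (alpha = 1):")
for lam in (0.5, 1.0, 4.0):
    print(lam, [round(belief_s0(e, lam, 1.0), 3) for e in strengths])

print("varying alpha (lambda = 1):")
for alpha in (0.5, 1.0, 4.0):
    print(alpha, [round(belief_s0(e, 1.0, alpha), 3) for e in strengths])
```

In this sketch, larger λ keeps q(s=0) near the prior value of 0.5 across all evidence strengths, while larger α drives it down more sharply as the evidence against s=0 grows.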

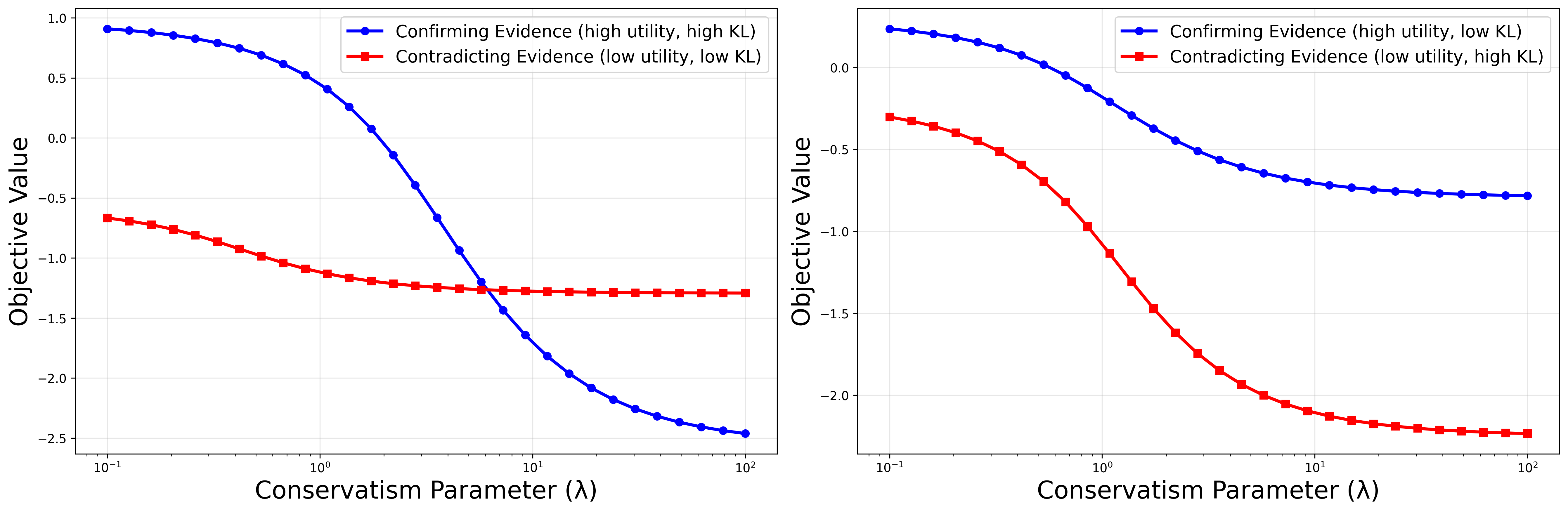

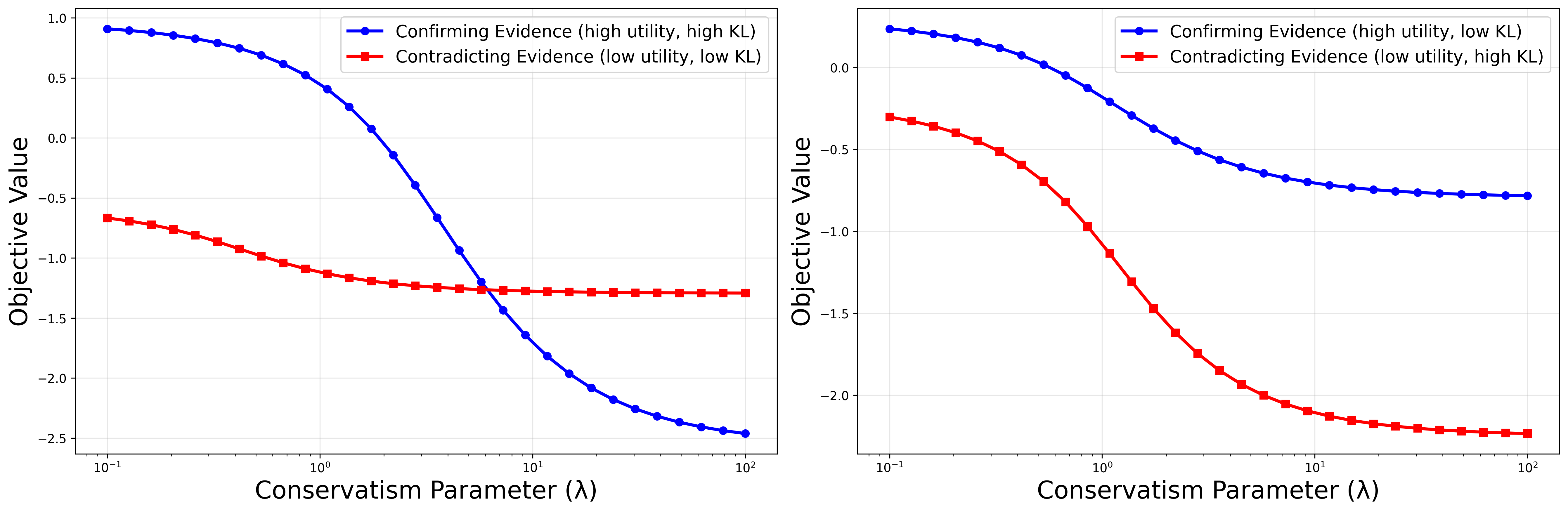

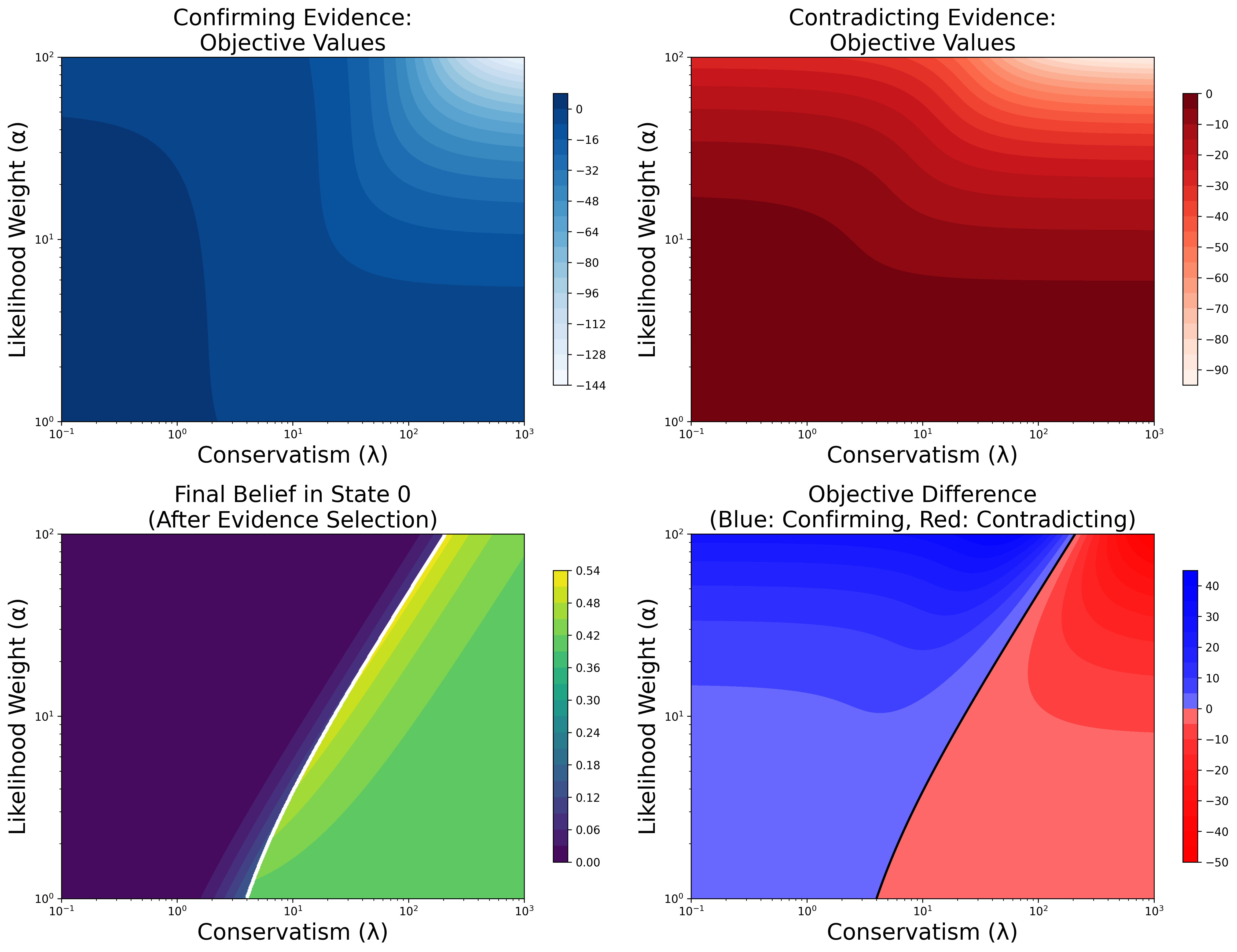

Confirmation Bias and Evidence Selection

The model reproduces confirmation bias in evidence selection: agents preferentially select evidence that aligns with their affective utility, especially when the cost of belief change is low (small λ). As λ increases, agents become more likely to select evidence that minimizes cognitive cost, even if it contradicts their preferences.

Figure 4: Objective values for evidence selection scenarios, showing threshold effects in confirmatory vs. contradictory evidence selection as λ varies.
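One way to sketch such a threshold is to score each candidate observation by the optimized objective, which for the linear-utility closed form equals λ log Z(o), and pick the higher score. The scoring rule, the prior tilted toward the dispreferred state, and all numbers below are assumptions made for illustration; the paper's actual selection scenarios may differ.

```python
import math

def optimized_objective(prior, likelihood, valence, lam, alpha):
    """Value of F at its maximizer q*, which equals lam * log Z(o)."""
    z = sum(p * math.exp((c + alpha * math.log(l)) / lam)
            for p, l, c in zip(prior, likelihood, valence))
    return lam * math.log(z)

def select_evidence(lam, alpha=1.0):
    prior = [0.2, 0.8]    # hypothetical agent currently leans toward s = 1
    valence = [1.0, 0.0]  # but prefers believing s = 0
    candidates = {
        "confirmatory": [0.8, 0.2],   # p(o | s) supports the preferred state
        "contradictory": [0.2, 0.8],  # p(o | s) supports the dispreferred state
    }
    scores = {name: optimized_objective(prior, lik, valence, lam, alpha)
              for name, lik in candidates.items()}
    return max(scores, key=scores.get), scores

for lam in (0.1, 1.0, 10.0):
    choice, scores = select_evidence(lam)
    print(lam, choice, {k: round(v, 3) for k, v in scores.items()})
```

In this toy setup the choice flips from the confirmatory to the contradictory source somewhere between λ = 0.1 and λ = 10: when revision is expensive, the agent stays near its prior, and the evidence consistent with that prior scores higher even though it conflicts with the agent's preference.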

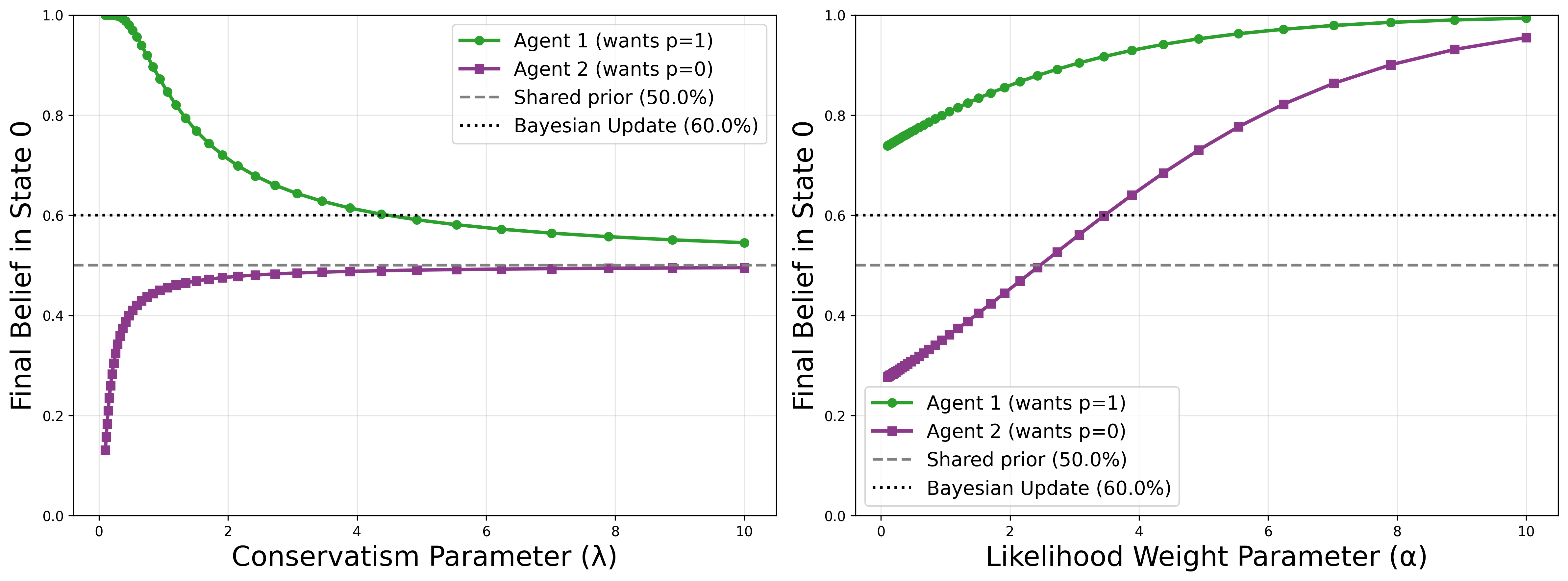

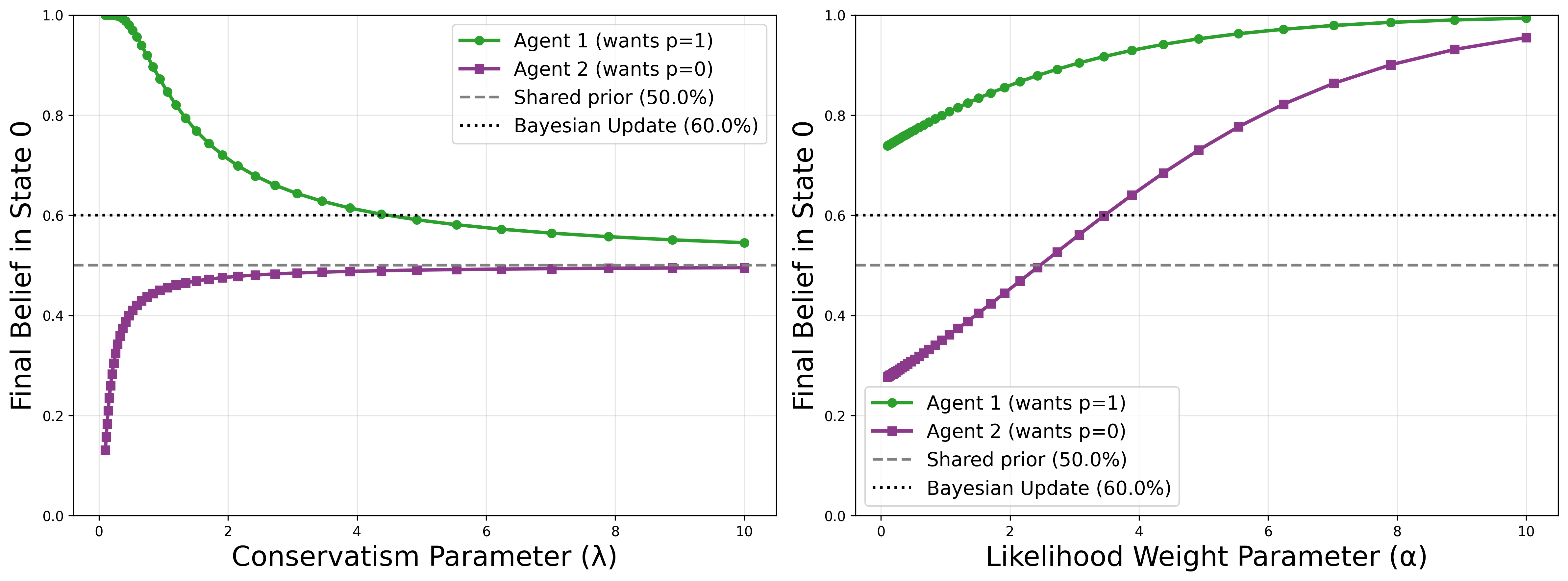

Attitude Polarization

Simulations of two agents with identical priors but opposing affective utilities reveal that, for low λ and α, shared evidence leads to divergent posterior beliefs—capturing attitude polarization. As these parameters increase, agents' beliefs converge, but not necessarily to the Bayesian posterior.

Figure 5: Attitude polarization effects as a function of λ (left) and α (right), for agents with opposing belief preferences.
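A two-agent version of the closed-form update gives a rough picture of this effect. The sketch assumes two states, a shared uniform prior, shared evidence with likelihoods (0.7, 0.3), and mirror-image valences of ±1; these values and the `polarization_gap` helper are illustrative choices, not the paper's settings.

```python
import math

def belief_s0(prior, likelihood, valence, lam, alpha):
    """q*(s=0) from the closed-form posterior for linear affective utility."""
    weights = [p * math.exp((c + alpha * math.log(l)) / lam)
               for p, l, c in zip(prior, likelihood, valence)]
    return weights[0] / sum(weights)

def polarization_gap(lam, alpha):
    """Absolute difference in q*(s=0) between two oppositely motivated agents."""
    prior = [0.5, 0.5]
    likelihood = [0.7, 0.3]  # shared evidence, mildly favoring s = 0
    agent_a = belief_s0(prior, likelihood, [1.0, -1.0], lam, alpha)
    agent_b = belief_s0(prior, likelihood, [-1.0, 1.0], lam, alpha)
    return abs(agent_a - agent_b), agent_a, agent_b

for lam, alpha in [(0.5, 1.0), (5.0, 1.0), (1.0, 0.5), (1.0, 8.0)]:
    gap, a, b = polarization_gap(lam, alpha)
    print(f"lam={lam}, alpha={alpha}: A={a:.3f}, B={b:.3f}, gap={gap:.3f}")
```

With these numbers the gap shrinks as either λ or α grows. At α = 8 both agents end up above the Bayesian posterior of 0.7, which illustrates how beliefs can converge without converging to the Bayesian answer.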

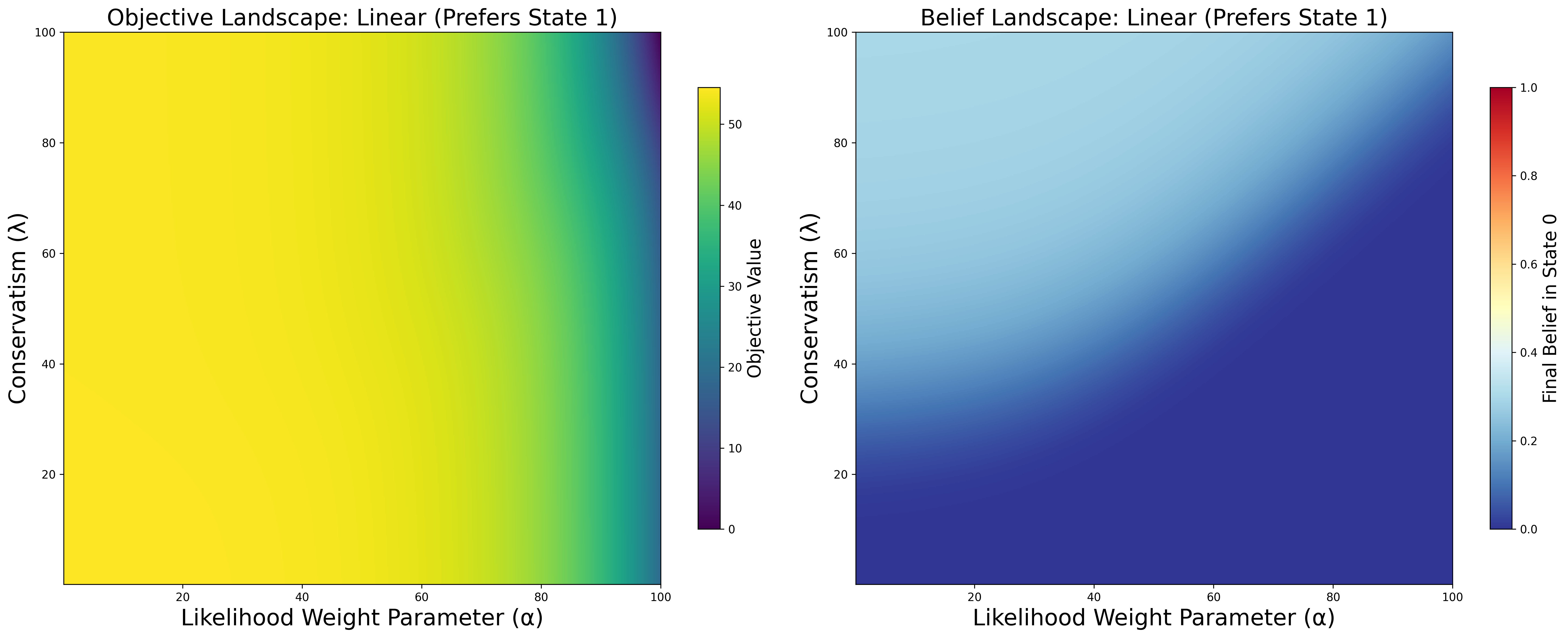

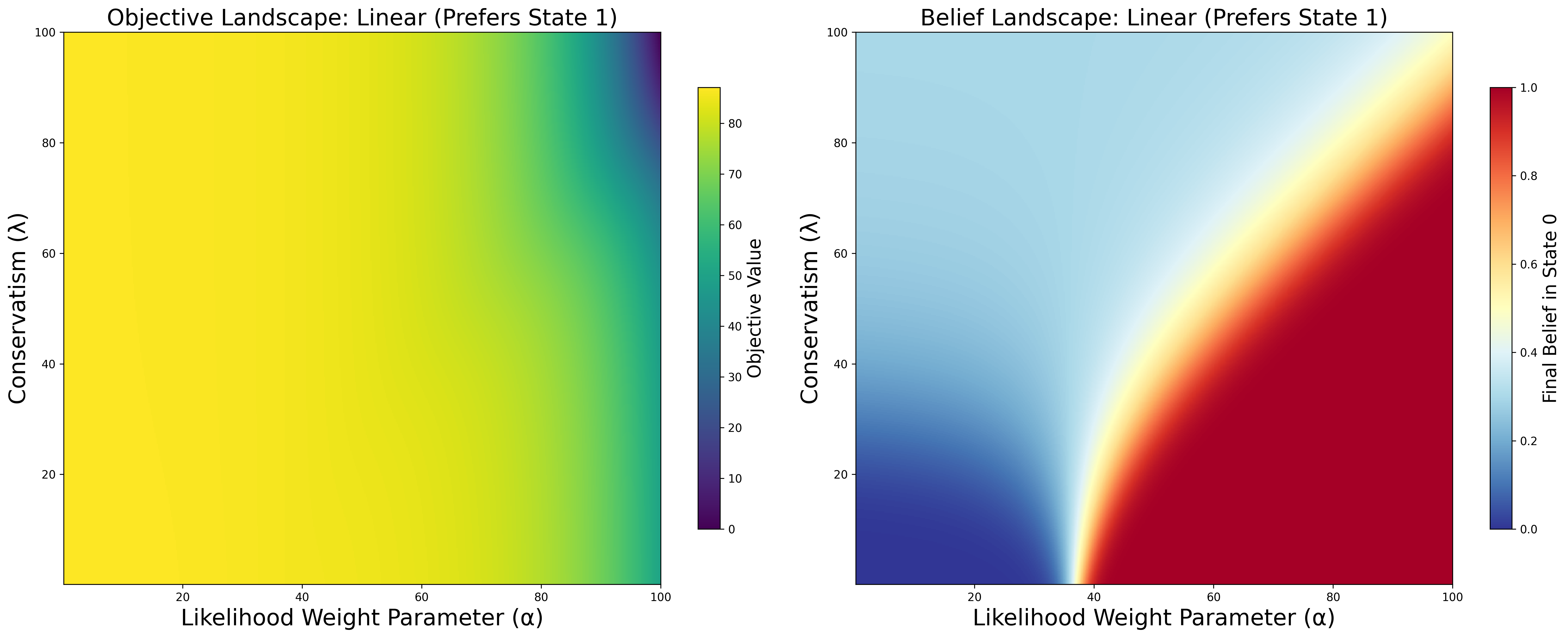

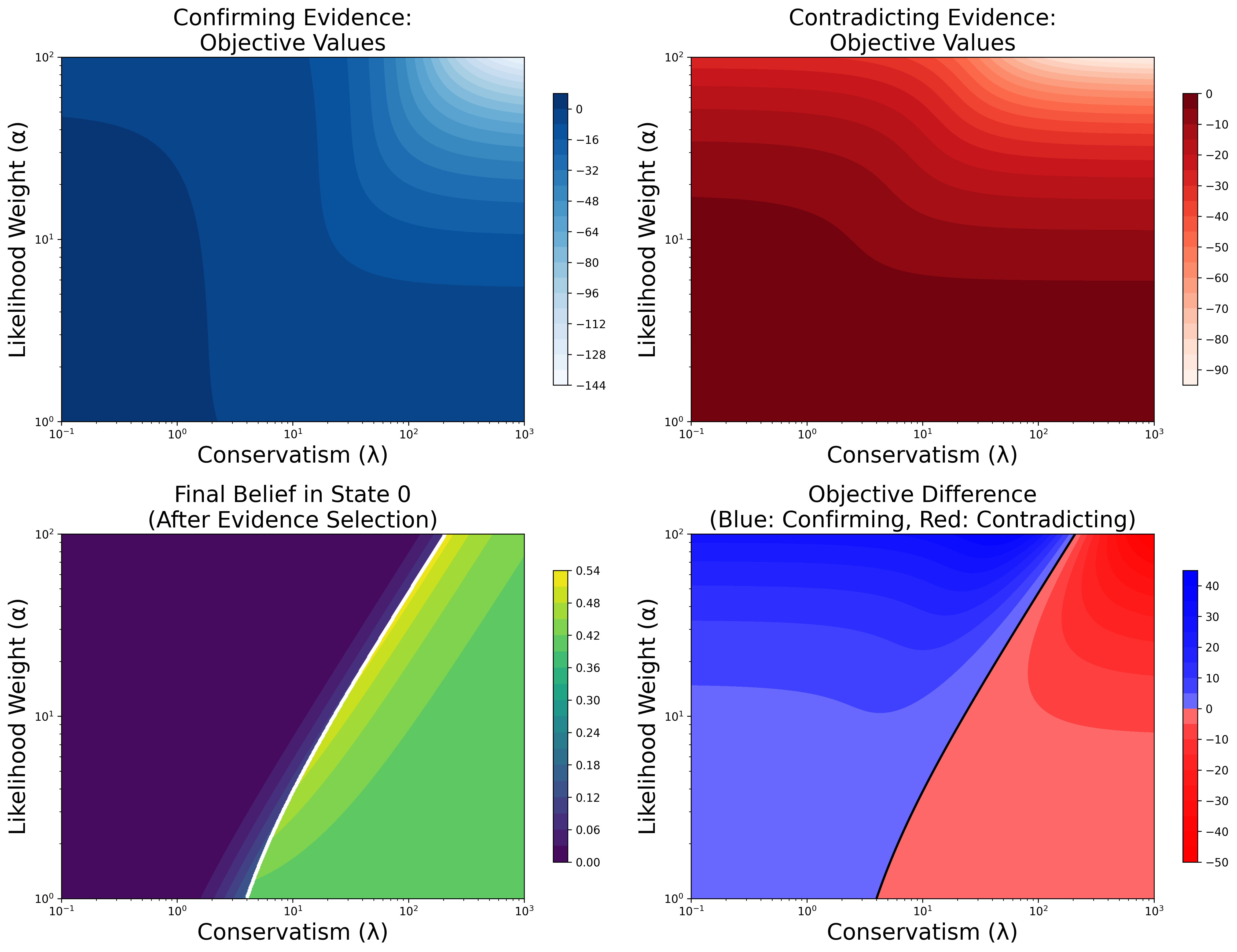

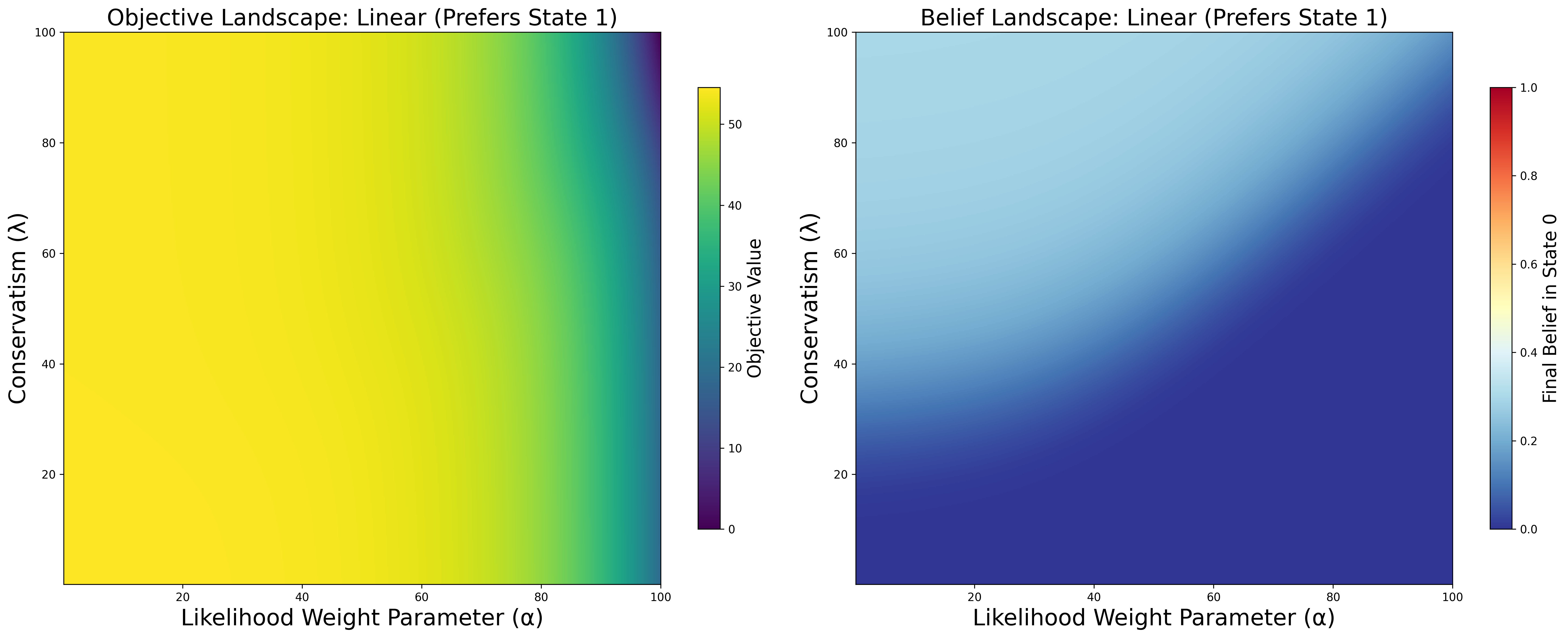

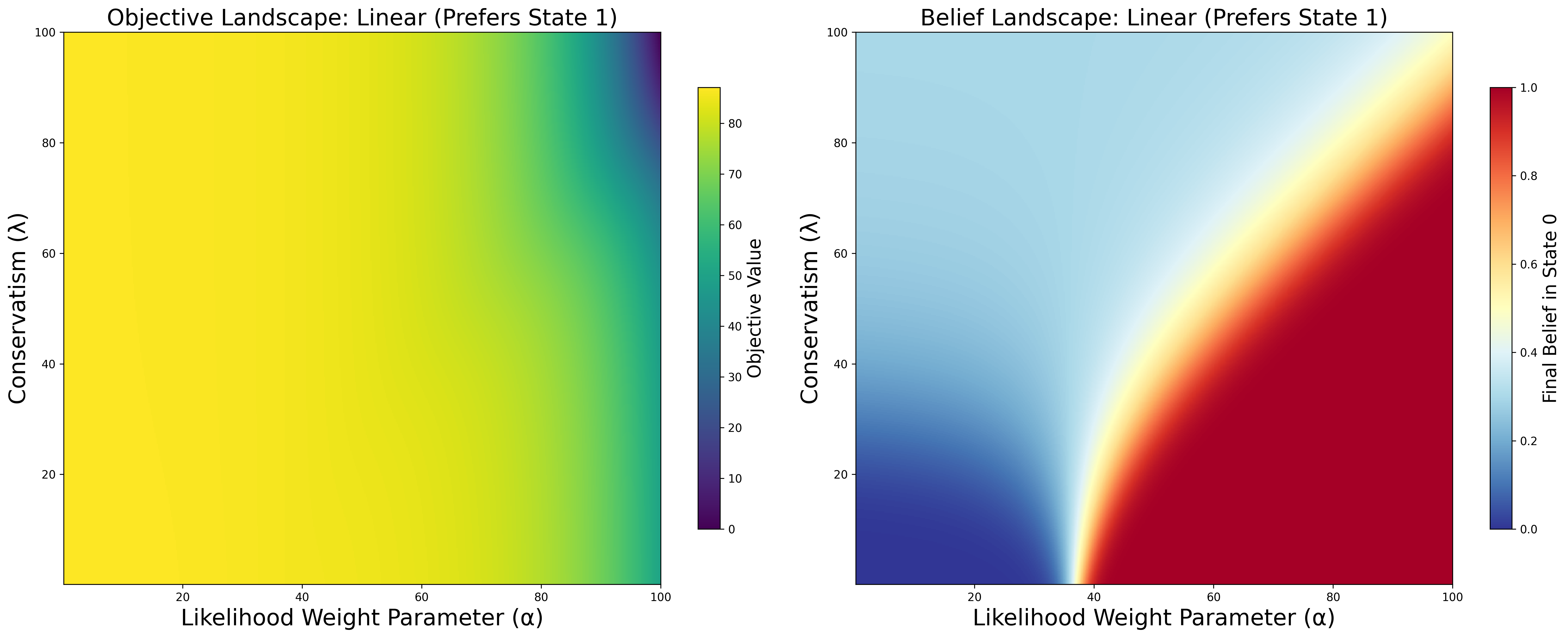

Objective and Belief Landscapes

Heatmaps of the variational objective and final beliefs across (λ,α) parameter space illustrate the nontrivial landscape of belief updating, with regions of high inertia and regions of high sensitivity to evidence.

Figure 6: Variational objective and belief landscapes for different evidence strengths and (λ,α) pairs.

Figure 7: Contoured heatmaps of objective values and belief selection boundaries in evidence selection scenarios.

Implications and Theoretical Significance

The framework provides a principled account of why belief change is often incremental and conservative, even in the face of strong contradictory evidence. It formalizes the cognitive, energetic, and social costs of belief revision, offering a unified explanation for a range of non-Bayesian phenomena. The model suggests that confirmation bias, selective exposure, and polarization are strategic adaptations to the variational costs of belief change, rather than irrational failures.

Practically, the model implies that interventions aimed at promoting belief change should focus on reducing the perceived costs—cognitive, affective, and social—associated with updating. Incremental information exposure and leveraging social networks to lower the social risks of belief revision are recommended strategies.
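The case for incremental exposure can be illustrated with a small calculation. The sketch below uses the purely Bayesian special case (no valence, α = λ = 1) and assumes that each updated belief becomes the prior for the next step, so that the cost of a step is the KL divergence from the current belief. Both assumptions, and the specific likelihoods, are choices made for this example.

```python
import math

def kl_divergence(q, p):
    """KL(q || p) for discrete distributions given as equal-length lists."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def bayes_update(prior, likelihood):
    """Standard Bayesian update for discrete states."""
    weights = [p * l for p, l in zip(prior, likelihood)]
    z = sum(weights)
    return [w / z for w in weights]

prior = [0.5, 0.5]

# One strong piece of evidence (likelihood ratio 9:1).
one_shot = bayes_update(prior, [0.9, 0.1])
cost_one_shot = kl_divergence(one_shot, prior)

# The same total evidence delivered as two weaker pieces (3:1 each).
step1 = bayes_update(prior, [0.75, 0.25])
step2 = bayes_update(step1, [0.75, 0.25])
step_costs = [kl_divergence(step1, prior), kl_divergence(step2, step1)]

print(f"one shot:  belief={one_shot[0]:.3f}, cost={cost_one_shot:.4f}")
print(f"two steps: belief={step2[0]:.3f}, costs={[round(c, 4) for c in step_costs]}")
```

Both routes reach the same final belief, but in this example each incremental step costs less than the single large revision, and so does their sum.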

Theoretically, the framework bridges decision-theoretic, thermodynamic, and social-psychological perspectives on belief updating, and is extensible to group dynamics, temporal trajectories, and networked belief systems. It provides testable predictions regarding the relationship between KL divergence, belief revision speed, and physiological or neural correlates of cognitive effort.

Future Directions

- Action-Perception Loop: Integrating motivated belief updating into the full perception-action cycle, including active evidence selection and policy optimization.

- Temporal Dynamics: Modeling the rate and trajectory of belief change, incorporating affective responses to prediction error rates.

- Group and Network Effects: Extending the framework to model belief propagation, inertia, and polarization in social networks, quantifying identity-protective cognition and feedback loops.

- Empirical Validation: Experimental studies to test model predictions, including physiological measures of cognitive effort and longitudinal tracking of belief trajectories in real-world groups.

Conclusion

This paper advances a formal, resource-rational framework for belief updating, capturing the variational costs—cognitive, pragmatic, and social—of changing one's mind. By modeling belief revision as a motivated variational process, the framework accounts for a spectrum of human belief updating phenomena, including confirmation bias and polarization, as adaptive responses to the nontrivial costs of belief change. The approach offers both theoretical insight and practical guidance for understanding and influencing belief dynamics in individuals and groups.