Cybersecurity AI: Humanoid Robots as Attack Vectors (2509.14139v1)

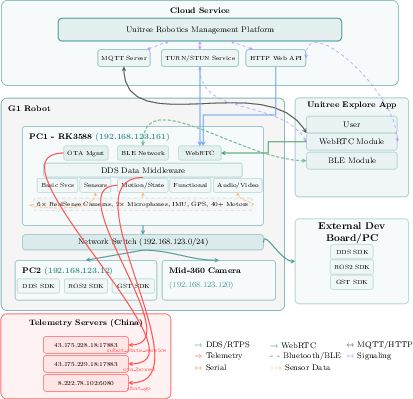

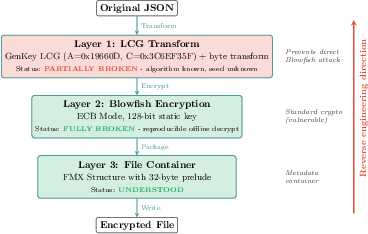

Abstract: We present a systematic security assessment of the Unitree G1 humanoid showing it operates simultaneously as a covert surveillance node and can be purposed as an active cyber operations platform. Partial reverse engineering of Unitree's proprietary FMX encryption reveals a static Blowfish-ECB layer and a predictable LCG mask, enabling inspection of the system's otherwise sophisticated security architecture, the most mature we have observed in commercial robotics. Two empirical case studies expose the critical risk of this humanoid robot: (a) the robot functions as a trojan horse, continuously exfiltrating multi-modal sensor and service-state telemetry to 43.175.228.18:17883 and 43.175.229.18:17883 every 300 seconds without operator notice, creating violations of GDPR Articles 6 and 13; (b) a resident Cybersecurity AI (CAI) agent can pivot from reconnaissance to offensive preparation against any target, such as the manufacturer's cloud control plane, demonstrating escalation from passive monitoring to active counter-operations. These findings argue for adaptive CAI-powered defenses as humanoids move into critical infrastructure, contributing the empirical evidence needed to shape future security standards for physical-cyber convergence systems.

Explain it Like I'm 14

Explaining “Cybersecurity AI: Humanoid Robots as Attack Vectors” for a 14-year-old

What is this paper about?

This paper looks at a human-shaped robot called the Unitree G1 and asks a simple question: Is it safe from hackers? The researchers tried to see whether the robot could secretly collect information (like a sneaky spy) and whether it could be turned into a tool to attack other computers. They also tested how “Cybersecurity AI” (smart software that protects or attacks in cyberspace) could work on a robot like this.

What were the main questions?

The researchers focused on three big ideas:

- Does the robot send data about its surroundings and its own status to the internet without the owner clearly knowing?

- Is the robot’s secret-protecting system (its encryption) strong, or can it be cracked?

- Could a smart cybersecurity program running on the robot discover weaknesses and prepare to attack other systems?

How did they study it?

They used a mix of digital detective work and careful monitoring:

- Reverse engineering: This means they took apart the robot’s software (like opening up a mechanical toy to see the gears) to understand how its security works, including its encryption system.

- Network traffic analysis: They watched what information the robot sent over the internet (like checking which letters a mailbox sends out and when). They used special tools to see the data even when it was supposed to be hidden.

- Performance profiling: They checked which robot programs were running and how much computer power each used.

- Real-world tests (“case studies”): They tested two situations—one where the robot behaved like a secret surveillance device and another where a “Cybersecurity AI” agent on the robot mapped out ways to attack targets.

- Plain-language translations of key technical terms:

- Encryption: Scrambling information so only someone with the right key can read it.

- Telemetry: Status reports a device sends about things like battery, temperature, or sensors.

- Exfiltration: Quietly sending information out to somewhere else, often without permission.

- MQTT/DDS/WebRTC: Different “mail systems” for devices to send messages and media (voice/video) across a network.

What did they find, and why does it matter?

Here are the main discoveries and their importance:

- Weakness in the robot’s “secret-keeping” system:

- The robot uses an encryption setup that reuses the same key across many robots and uses an old-fashioned mode that reveals patterns. That makes it much easier for skilled people to unlock and read protected data. This is like many houses on a street using the same house key—once you copy one, you can open them all.

- Silent data sharing to outside servers:

- The robot regularly sends detailed status reports online—things like battery levels, joint temperatures, what services are running, and more—without the user clearly being told or asked. It can also share audio and video streams on the local network. This could break privacy laws in some places because people are not being properly informed or given a choice.

- A “dual threat”: spying and attacking

- If someone abuses these weaknesses, the robot could act like a hidden surveillance device (capturing audio, video, and room maps) and also be used to plan cyberattacks on other systems. Imagine a moving computer with cameras and microphones that can travel into sensitive areas and then reach out to other computers from the inside.

- Cybersecurity AI on the robot can prepare attacks:

- The team ran a cybersecurity AI agent on the robot. It automatically looked for weaknesses and planned how an attack could work (they stopped before doing anything harmful). This shows that smart software can speed up both defense and offense—so defenders need equally smart tools too.
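
The "same house key" and "reveals patterns" problems can be shown with a toy example. This is a minimal sketch, not the robot's real FMX code: a small hash-based Feistel cipher stands in for Blowfish (an assumption, so the example runs with only the Python standard library), and `SHARED_KEY` and the sample messages are hypothetical. Like Blowfish, the toy cipher works on 8-byte blocks, and ECB mode scrambles each block on its own.

```python
import hashlib

BLOCK = 8  # bytes; Blowfish also uses 64-bit (8-byte) blocks


def _round(key: bytes, i: int, half: bytes) -> bytes:
    """Round function: 4 bytes derived from the key, round index, and a half-block."""
    return hashlib.sha256(key + bytes([i]) + half).digest()[:4]


def encrypt_block(key: bytes, block: bytes) -> bytes:
    """Toy 4-round Feistel cipher on one 8-byte block (NOT Blowfish, NOT secure)."""
    left, right = block[:4], block[4:]
    for i in range(4):
        mixed = bytes(a ^ b for a, b in zip(left, _round(key, i, right)))
        left, right = right, mixed
    return left + right


def ecb_encrypt(key: bytes, data: bytes) -> list[bytes]:
    """ECB mode: every block is encrypted independently with the same key."""
    assert len(data) % BLOCK == 0, "toy example: no padding"
    return [encrypt_block(key, data[i:i + BLOCK]) for i in range(0, len(data), BLOCK)]


# Hypothetical key, standing in for one key reused on every robot.
SHARED_KEY = b"hypothetical-fleet-key"

robot_a = ecb_encrypt(SHARED_KEY, b"MODE=RUNMODE=RUNBATT=087")
robot_b = ecb_encrypt(SHARED_KEY, b"MODE=RUNBATT=042")

# Repeated plaintext inside one message shows up as repeated ciphertext.
print(robot_a[0] == robot_a[1])
# The same plaintext on two robots produces the same ciphertext block.
print(robot_a[0] == robot_b[0])
```

Both comparisons print `True`: an eavesdropper who never learns the key can still see that two blocks carry the same content, within one message and across robots. Modes that mix in a fresh random value per message, combined with a unique key per device, avoid both effects.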

Even though the robot’s security is better than what the researchers usually see in similar machines, these problems are still serious because the robot has cameras, microphones, and network access. That combination raises the stakes.

What is the impact of this research?

This paper is a warning and a guide:

- For robot makers: Use stronger, modern encryption with unique keys per device, turn on secure checks by default, and be transparent about what data is sent and why.

- For owners and workplaces (like factories, hospitals, or labs): Treat humanoid robots like powerful computers on legs. Put them on secure networks, limit what data they can send, and monitor their traffic.

- For laws and standards: As humanoid robots enter homes and critical infrastructure, clear privacy rules and security standards are needed so users know what’s collected and can control it.

- For cybersecurity: Attackers can use AI to move faster. Defenders need to use AI too—“Cybersecurity AI”—to watch, detect, and block threats in real time.
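
As one example of what "monitor their traffic" could look like, the sketch below flags a destination that a device contacts on a steady timer, such as the 300-second telemetry reports described in the abstract. It is a simplified illustration under stated assumptions: the function name `looks_periodic`, the tolerance values, and the sample timestamps are all hypothetical, and a real monitor would read connection times from firewall or packet-capture logs.

```python
from statistics import mean, pstdev


def looks_periodic(timestamps: list[float], period: float = 300.0,
                   tolerance: float = 5.0, min_events: int = 4) -> bool:
    """Return True if connection times are spaced at roughly `period` seconds."""
    if len(timestamps) < min_events:
        return False  # too little data to call it a pattern
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    # Periodic traffic has gaps that average near the period and barely vary.
    return abs(mean(gaps) - period) <= tolerance and pstdev(gaps) <= tolerance


# Hypothetical connection times (seconds since capture start), keyed by destination.
flows = {
    "43.175.228.18:17883": [0.0, 300.4, 599.8, 900.1, 1200.3],
    "203.0.113.7:443": [0.0, 12.0, 15.0, 200.0, 950.0],
}

for destination, times in flows.items():
    print(destination, looks_periodic(times))
```

With these made-up timestamps, only the first destination is flagged. A check like this does not show what is being sent, only that something leaves the network on a schedule, which is a cue to inspect or block that flow.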

In short, the paper shows that humanoid robots can become both helpful workers and dangerous digital “Trojan horses” if not secured properly. The solution is stronger, transparent security and smarter defenses that keep up with AI-powered threats.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated, action-oriented list of what remains missing, uncertain, or unexplored in the paper.

- FMX inner layer uncertainty: exact LCG seed derivation from device identifiers is not fully recovered; per-device uniqueness and rotation behavior remain unknown.

- No brute-force feasibility study of the 32-bit LCG seed space (time-to-crack estimates on commodity attacker hardware and at cloud scale are missing).

- Fleet-wide key reuse scope unclear: sample size, models/firmware covered, and cross-generation validation (e.g., H1/Go2/other Unitree lines) are not specified.

- Key lifecycle unknown: whether the static Blowfish key is ever rotated, revocable, or regionally varied is not assessed.

- Secret inventory incomplete: reconciliation between “no hardcoded secrets” (Table 1) and discovery of a hardcoded encryption key is absent.

- Encryption at rest not evaluated: status of eMMC/disk encryption, key storage, and data protection for logs and configuration is unknown.

- Boot chain and root-of-trust unassessed: secure/verified boot, fuse states, bootloader locking, rollback protection, and measured boot/attestation are not analyzed.

- OTA update security not examined: code signing scheme, update server authentication, rollback protections, delta integrity, and recovery paths are not validated.

- DDS/ROS 2 security posture incomplete: feasibility and performance impact of DDS Security/SROS2, key distribution, and QoS/ACL hardening are not evaluated.

- CVE coverage gap: specific unpatched CVEs affecting ROS 2 Foxy (EOL), CycloneDDS 0.10.2, and bundled libraries are not enumerated, reproduced, or risk-ranked.

- MQTT security details missing: broker-side ACLs, topic authorization, credential provisioning/rotation, certificate pinning/trust store contents, and revocation handling are not reported.

- WebRTC claim not operationalized: the assertion that TLS verification is disabled lacks an end-to-end MITM or hijack demonstration (STUN/TURN/ICE/DTLS-SRTP details absent).

- chat_go WebSocket risks unvalidated: practical exploitability of the disabled SSL verification at 8.222.78.102:6080 (e.g., control hijack, transcript interception) is not tested.

- Longitudinal telemetry characterization missing: only 10 minutes of SSL_write capture; behavior across operating modes, regions, firmware updates, and network conditions is unstudied.

- Cloud exfiltration scope unclear: conditions under which audio/video/LIDAR streams leave the local network (vs. remain DDS-local) are not established; Kinesis endpoints and activation triggers are unverified.

- Data residency controls untested: whether brokers/endpoints can be regionally selected, overridden, or disabled by operators (and with what effect) is unknown.

- Lateral movement into air-gapped environments is asserted but not experimentally demonstrated (e.g., via rogue AP, BLE bridging, physical payloading, or RF constraints).

- Network exposure breadth not fully mapped: IPv6, mDNS/SSDP, multicast scopes, service discovery, and localhost-bound services are not inventoried.

- Physical attack surfaces not exercised: UART/JTAG/bootloader console access, tamper detection, debug fuse states, and fault-injection resilience remain untested.

- Wireless security unassessed: BLE pairing mode and MITM protection, Wi‑Fi auth (WPA2/3, WPS), MAC randomization, and hotspot/ad hoc behaviors are not evaluated.

- On-device hardening unknown: presence and configuration of SELinux/AppArmor/seccomp, ASLR, kernel lockdown, containerization, and least-privilege service accounts are not characterized.

- Forensic readiness not covered: secure logging, clock integrity, signed logs, retention, remote attestation, and investigative visibility for operators are not assessed.

- Safety-security interplay untested: ability to bypass motion limits, speed/torque constraints, e-stop integrity, and fail-safe behaviors under cyber compromise are not validated.

- CAI demonstration limited: no end-to-end exploit (e.g., OTA abuse, cloud control-plane change, telemetry spoof with operator-side impact); ethical constraints noted but impact remains hypothetical.

- CAI effectiveness unquantified: no baseline vs. human pentester comparison, success rate, time-to-find, false positives, or detection by vendor SOC/cloud defenses.

- CAI safety and robustness unexamined: susceptibility of the CAI agent to prompt injection/data poisoning on-device (vui_service/chat_go) and containment/guardrails are not tested.

- Real-time interference risks unmeasured: resource contention of CAI workloads on RK3588 (CPU, memory, RT scheduling) and effects on control loops/stability are not reported.

- Disclosure and vendor response absent: timelines, remediation status, and patch verification are not provided; reproducibility artifacts (pcaps, configs, decryption scripts) are not released.

- Generalizability uncertain: claims of “most mature security” are not backed by a standardized, multi-vendor benchmark; methodology for cross-vendor scoring is missing.

- Legal analysis high-level: no DPIA-style mapping of data elements to lawful bases, consent UX audits in companion apps, retention/processing purposes, or cross-border transfer mechanisms.

- Mitigations not experimentally validated: concrete hardening steps (broker ACLs, firewalling, DDS Security enablement, cert pinning, telemetry minimization) are not prototyped or benchmarked for efficacy and performance overhead.
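
The missing brute-force feasibility study for the 32-bit LCG seed space can be framed with a short sketch. Everything specific here is an assumption for illustration: the LCG constants are the textbook Numerical Recipes values, the mask takes the top byte of each state, the sample plaintext is made up, and the guess rate is a placeholder. The real FMX parameters and seed derivation are not given in this summary. The search runs over a reduced 16-bit space so it finishes quickly, and the same loop scales linearly to 32 bits.

```python
# Textbook LCG constants (Numerical Recipes); the real FMX values are unknown here.
A, C, M = 1664525, 1013904223, 2**32


def lcg_mask(seed: int, n: int) -> bytes:
    """Generate n mask bytes from the top byte of successive LCG states."""
    state, out = seed, bytearray()
    for _ in range(n):
        state = (A * state + C) % M
        out.append(state >> 24)
    return bytes(out)


def xor_mask(seed: int, data: bytes) -> bytes:
    """Apply (or remove) the LCG mask; XOR is its own inverse."""
    return bytes(d ^ m for d, m in zip(data, lcg_mask(seed, len(data))))


def recover_seed(masked: bytes, known_prefix: bytes, seed_bits: int):
    """Try every seed and return the first that unmasks the known prefix."""
    head = masked[:len(known_prefix)]
    for seed in range(2**seed_bits):
        if xor_mask(seed, head) == known_prefix:
            return seed
    return None


def hours_to_exhaust(seed_bits: int, guesses_per_second: float) -> float:
    """Worst-case time to try every seed at a given guess rate."""
    return 2**seed_bits / guesses_per_second / 3600


secret_seed = 0xBEEF  # hypothetical seed inside the reduced 16-bit space
plaintext = b'{"battery": 87}'  # hypothetical telemetry payload
masked = xor_mask(secret_seed, plaintext)

found = recover_seed(masked, b'{"battery"', seed_bits=16)
print(found == secret_seed, xor_mask(found, masked))
print(round(hours_to_exhaust(32, 1e6), 2), "hours at an assumed 1e6 guesses/s")
```

At the placeholder rate of one million guesses per second, exhausting all 2^32 seeds takes a little over an hour. A real study would need to measure the actual per-guess cost, including the Blowfish layer, on commodity and cloud hardware.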
