- The paper’s main contribution is a chain-of-thought tuning framework that trains LLMs to generate and verify detailed, step-by-step symbolic plans.

- It employs a three-phase process (initial instruction tuning, chain-of-thought instruction tuning with external validator feedback, and evaluation), achieving up to 94% plan validity on Blocksworld.

- The approach bridges neural and symbolic AI, enhancing plan interpretability and reliability for applications like robotics and autonomous systems.

Logical Chain-of-Thought Instruction Tuning for Symbolic Planning in LLMs

Introduction and Motivation

The paper "Teaching LLMs to Plan: Logical Chain-of-Thought Instruction Tuning for Symbolic Planning" (2509.13351) addresses a critical limitation in current LLMs: their inability to reliably perform structured symbolic planning, especially in domains requiring formal representations such as the Planning Domain Definition Language (PDDL). While LLMs have demonstrated strong performance in general reasoning and unstructured tasks, their outputs in multi-step, logic-intensive planning tasks are often invalid or suboptimal due to a lack of explicit logical verification and systematic reasoning.

The authors propose PDDL-Instruct, a novel instruction tuning framework that explicitly teaches LLMs to reason about action applicability, state transitions, and plan validity using logical chain-of-thought (CoT) reasoning. The approach decomposes the planning process into atomic, verifiable reasoning steps, enabling LLMs to generate and self-verify plans with a high degree of logical rigor.

PDDL-Instruct Framework

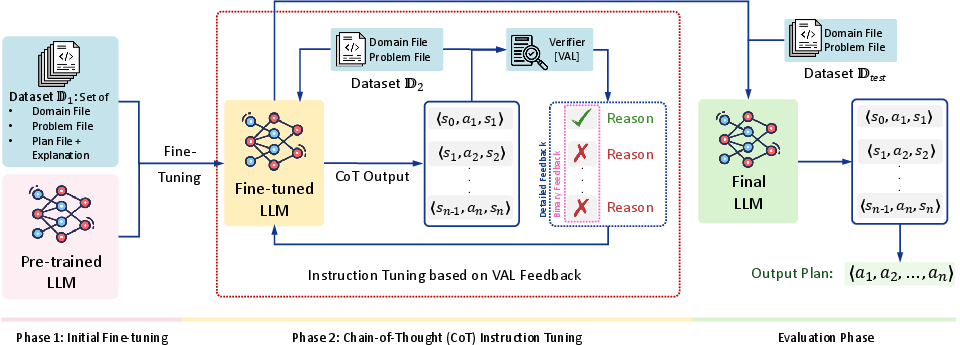

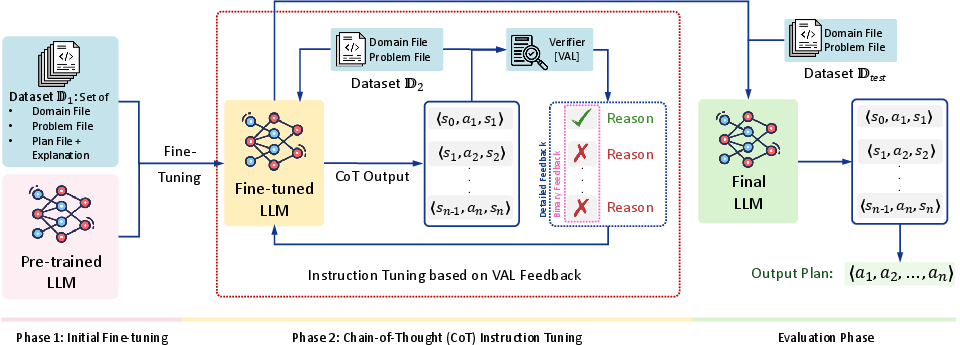

PDDL-Instruct is structured into three phases: Initial Instruction Tuning, Chain-of-Thought (CoT) Instruction Tuning, and Evaluation. The main innovation lies in the second phase, where the model is further trained to produce explicit logical reasoning chains for planning tasks.

Figure 1: The PDDL-Instruct approach consists of three phases: two training phases (Initial and CoT Instruction Tuning) and an evaluation phase. The main innovation lies in the second phase, CoT Instruction Tuning (highlighted by the red boundary). The initially tuned LLM is further trained using a structured instruction process that emphasizes complete logical reasoning chains.

Phase 1: Initial Instruction Tuning

In this phase, a pre-trained LLM is instruction-tuned using a dataset of planning problems and solutions (both valid and invalid) in PDDL. Prompts are crafted to require the model to explain the validity of each action in a plan, focusing on precondition satisfaction and effect application. Exposure to both correct and incorrect plans, with detailed explanations, establishes a foundation for logical verification and error recognition.
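The two checks these explanations target, precondition satisfaction and effect application, can be stated in standard STRIPS-style set semantics. This is the textbook formulation for ground actions with positive preconditions and no conditional effects, not necessarily the paper's own notation; here $I$ is the initial state, $G$ the goal, and $\mathrm{pre}$, $\mathrm{add}$, $\mathrm{del}$ an action's precondition, add, and delete sets.

```latex
\begin{aligned}
\mathrm{applicable}(a, s) &\iff \mathrm{pre}(a) \subseteq s \\
\gamma(s, a) &= \bigl(s \setminus \mathrm{del}(a)\bigr) \cup \mathrm{add}(a) \\
\pi = \langle a_1, \dots, a_n \rangle \text{ is valid} &\iff \mathrm{pre}(a_i) \subseteq s_{i-1} \text{ for all } i, \text{ and } G \subseteq s_n \\
\text{where } s_0 &= I, \quad s_i = \gamma(s_{i-1}, a_i)
\end{aligned}
```

Under this reading, a per-action explanation checks the first line for the current state and then applies the second line to obtain the next state; an invalid plan is one where some step fails the first check or the final state misses part of $G$.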

Phase 2: Chain-of-Thought Instruction Tuning

The core contribution is the CoT instruction tuning phase. Here, the model is trained to generate step-by-step state-action-state sequences, explicitly reasoning about each transition. Each step is externally validated using a formal plan validator (VAL), which provides either binary (valid/invalid) or detailed feedback (specific logical errors). This feedback is used to further tune the model, reinforcing correct logical reasoning and penalizing specific errors such as precondition violations or incorrect effect applications.
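To make the binary versus detailed feedback distinction concrete, here is a minimal sketch of a validator that walks a state-action-state sequence. It is a toy stand-in for VAL, not VAL's interface: the `Action` and `validate` names and the string encoding of facts are assumptions made for illustration, and the example uses two ground Blocksworld actions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset     # facts that must hold before the action
    add: frozenset     # facts made true by the action
    delete: frozenset  # facts made false by the action


def validate(init, plan, goal, detailed=True):
    """Simulate `plan` from `init`; return (is_valid, feedback).

    Binary mode only says valid/invalid; detailed mode names the failing
    step and the unsatisfied preconditions or goals (hypothetical format).
    """
    state = set(init)
    for i, act in enumerate(plan, start=1):
        missing = act.pre - state
        if missing:
            if not detailed:
                return False, "invalid"
            return False, f"step {i} ({act.name}): unsatisfied preconditions {sorted(missing)}"
        state = (state - act.delete) | act.add  # apply effects to get the next state
    unmet = set(goal) - state
    if unmet:
        if not detailed:
            return False, "invalid"
        return False, f"goal not reached: unsatisfied goals {sorted(unmet)}"
    return True, "valid"


pick_up_a = Action("pick-up a",
                   pre=frozenset({"clear a", "ontable a", "handempty"}),
                   add=frozenset({"holding a"}),
                   delete=frozenset({"clear a", "ontable a", "handempty"}))
stack_a_b = Action("stack a b",
                   pre=frozenset({"holding a", "clear b"}),
                   add=frozenset({"on a b", "clear a", "handempty"}),
                   delete=frozenset({"holding a", "clear b"}))
init = {"clear a", "clear b", "ontable a", "ontable b", "handempty"}
goal = {"on a b"}

print(validate(init, [pick_up_a, stack_a_b], goal))           # (True, 'valid')
print(validate(init, [stack_a_b], goal, detailed=False))      # (False, 'invalid')
print(validate(init, [stack_a_b], goal))  # names step 1 and the missing 'holding a'
```

The detailed message carries exactly what the binary one hides (which step failed and why), which is the extra signal the detailed-feedback variant can learn from.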

The CoT phase employs a two-stage optimization process:

- Stage 1: Optimizes the model to generate high-quality reasoning chains, penalizing logical errors at each step.

- Stage 2: Optimizes for end-task performance, ensuring that improvements in reasoning translate to higher plan validity.
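The summary does not give the loss formulas for the two stages, so the following is only a hypothetical scalar rendering of the idea: Stage 1 scores the reasoning chain by the share of steps the validator rejects, and Stage 2 scores only whether the final plan is valid. How such signals are turned into gradients (for example, as weights on the fine-tuning objective) is left open here; the function names are illustrative.

```python
def reasoning_loss(step_valid: list[bool]) -> float:
    """Stage 1 (hypothetical form): fraction of reasoning steps rejected by the validator."""
    if not step_valid:
        return 0.0
    return sum(1 for ok in step_valid if not ok) / len(step_valid)


def plan_loss(plan_valid: bool) -> float:
    """Stage 2 (hypothetical form): 0 for a valid plan, 1 for an invalid one."""
    return 0.0 if plan_valid else 1.0


def stage_loss(stage: int, step_valid: list[bool], plan_valid: bool) -> float:
    """Select the signal for the current optimization stage."""
    if stage == 1:
        return reasoning_loss(step_valid)
    if stage == 2:
        return plan_loss(plan_valid)
    raise ValueError("stage must be 1 or 2")


# One rejected step out of four: a step-level penalty in Stage 1,
# but only the plan-level outcome matters in Stage 2.
print(stage_loss(1, [True, False, True, True], plan_valid=False))  # 0.25
print(stage_loss(2, [True, False, True, True], plan_valid=False))  # 1.0
```

The contrast shows why the stages are complementary: the step-level signal localizes errors inside a chain, while the plan-level signal ties training to the end metric.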

Evaluation Phase

After both training phases, the model is evaluated on unseen planning problems. It must generate complete reasoning chains for new tasks, which are then validated for correctness. No feedback is provided during evaluation, ensuring a fair assessment of generalization.
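The evaluation protocol amounts to a single-shot validity rate. A minimal sketch, assuming a `generate` callable that wraps the tuned model and a `validate` callable that wraps the plan validator (both hypothetical names); the model gets one attempt per problem and never sees the validator's output.

```python
from typing import Callable, Sequence


def plan_validity_rate(problems: Sequence, generate: Callable, validate: Callable) -> float:
    """Share of problems whose single generated plan passes the validator.

    No feedback is returned to the model: validate() only scores the plan.
    """
    if not problems:
        return 0.0
    valid = sum(1 for p in problems if validate(p, generate(p)))
    return valid / len(problems)


# Toy stand-ins: the "plan" is p * 2 and counts as valid when divisible by 4.
print(plan_validity_rate([1, 2, 3, 4], lambda p: p * 2, lambda p, plan: plan % 4 == 0))  # 0.5
```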

Empirical Results

The framework is evaluated on PlanBench, covering three domains: Blocksworld, Mystery Blocksworld (with obfuscated predicates), and Logistics. Experiments are conducted with Llama-3-8B and GPT-4, comparing baseline models, models after Phase 1, and full PDDL-Instruct models with both binary and detailed feedback.
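Mystery Blocksworld keeps the Blocksworld dynamics but hides their meaning behind consistently renamed symbols. The sketch below shows that kind of renaming on PDDL text; the replacement vocabulary is invented for illustration and is not PlanBench's actual mapping.

```python
import re

# Hypothetical renaming; PlanBench's Mystery Blocksworld uses its own fixed vocabulary.
OBFUSCATION = {
    "pick-up": "zorp",
    "put-down": "blick",
    "stack": "fendle",
    "unstack": "quam",
    "on": "wexes",
    "ontable": "grell",
    "clear": "drib",
    "holding": "mosk",
    "handempty": "tavvy",
}


def obfuscate(pddl: str, mapping: dict[str, str] = OBFUSCATION) -> str:
    """Rename action and predicate symbols, matching whole PDDL identifiers only.

    The lookarounds stop "stack" from matching inside "unstack" and "on"
    from matching inside "ontable" or ":precondition".
    """
    names = "|".join(map(re.escape, mapping))
    pattern = re.compile(r"(?<![\w-])(" + names + r")(?![\w-])")
    return pattern.sub(lambda m: mapping[m.group(1)], pddl)


print(obfuscate("(:action unstack :precondition (and (on ?x ?y) (clear ?x) (handempty)))"))
# (:action quam :precondition (and (wexes ?x ?y) (drib ?x) (tavvy)))
```

Because the structure of the domain is unchanged, any drop in validity under renaming suggests the model was leaning on the familiar names rather than on the action definitions.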

Key findings include:

- PDDL-Instruct achieves up to 94% plan validity in Blocksworld, an absolute improvement of 66 percentage points over baseline models.

- Detailed feedback consistently outperforms binary feedback, especially in more complex domains (e.g., a 15-percentage-point improvement in Mystery Blocksworld).

- The approach generalizes across domains, with the largest relative improvements in the most challenging settings (e.g., from 1% to 64% in Mystery Blocksworld for Llama-3).

- Increasing the number of feedback iterations (η) further improves performance, with diminishing returns beyond a certain point.
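The absolute and relative figures above measure different things, and the arithmetic is easy to check. Reading the reported 66-point Blocksworld gain as percentage points, the implied baseline is 94 - 66 = 28%; the Mystery Blocksworld figures are taken directly from the results above.

```python
def gains(baseline_pct: float, tuned_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain as a ratio)."""
    absolute = tuned_pct - baseline_pct
    relative = tuned_pct / baseline_pct
    return absolute, relative


for name, before, after in [("Blocksworld", 94 - 66, 94), ("Mystery Blocksworld, Llama-3", 1, 64)]:
    absolute, relative = gains(before, after)
    print(f"{name}: +{absolute:.0f} pp, {relative:.1f}x")
# Blocksworld: +66 pp, 3.4x
# Mystery Blocksworld, Llama-3: +63 pp, 64.0x
```

The absolute gains are similar, but the relative gain is far larger in Mystery Blocksworld because its baseline starts near zero, which is why the largest relative improvements appear in the hardest setting.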

Implementation Considerations

Data and Prompt Engineering

- Dataset Construction: Requires a diverse set of planning problems, including both valid and invalid plans, with detailed explanations for each.

- Prompt Design: Prompts must elicit explicit reasoning about preconditions, effects, and goal achievement. For CoT tuning, prompts should require the model to output state-action-state triplets and justify each transition.
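One way to make the required state-action-state output machine-checkable is to fix a line-based format in the prompt and parse it back. The template wording, field names (`State`, `Action`, `Check`, `Next`), and parser below are hypothetical, not the paper's prompts.

```python
import re

# Hypothetical CoT output format; not taken from the paper.
PROMPT_TEMPLATE = """Domain:
{domain}
Problem:
{problem}
For every step, write exactly these lines:
State: <facts true before the action, comma-separated>
Action: <ground action>
Check: <why each precondition holds>
Next: <facts true after applying the effects, comma-separated>
"""

STEP_RE = re.compile(
    r"State:\s*(?P<state>.*)\n"
    r"Action:\s*(?P<action>.*)\n"
    r"Check:\s*(?P<check>.*)\n"
    r"Next:\s*(?P<next>.*)"
)


def parse_triplets(text: str) -> list[tuple[frozenset, str, frozenset]]:
    """Extract (state, action, next_state) triplets from a model response."""
    def facts(s: str) -> frozenset:
        return frozenset(f.strip() for f in s.split(",") if f.strip())

    return [(facts(m["state"]), m["action"].strip(), facts(m["next"]))
            for m in STEP_RE.finditer(text)]


response = (
    "State: clear a, clear b, ontable a, ontable b, handempty\n"
    "Action: pick-up a\n"
    "Check: clear a, ontable a, handempty all hold\n"
    "Next: clear b, ontable b, holding a\n"
)
steps = parse_triplets(response)
print(len(steps), steps[0][1])  # 1 pick-up a
```

Parsing each claimed `Next` state into a fact set lets a validator compare it against the state it actually computes, so a wrong effect application can be flagged at the exact step where it occurs.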

Training and Optimization

- Two-Stage Loss Functions: The reasoning loss penalizes step-level logical errors, while the final performance loss penalizes invalid plans at the sequence level.

- External Validation: Integration with a formal plan validator (e.g., VAL) is essential for providing ground-truth feedback during training.

- Resource Requirements: Training is computationally intensive, requiring multiple GPUs, large memory, and extended training times (e.g., 30 hours for full training on two RTX 3080 GPUs).
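Calling an external validator from a training pipeline can be a thin subprocess wrapper. This sketch assumes VAL's `Validate` executable is on `PATH`, that it accepts `-v` (verbose) followed by domain, problem, and plan files, and that its report contains the line `Plan valid` for a valid plan; check all three against your VAL build. The boolean serves as binary feedback and the full report as detailed feedback.

```python
import shutil
import subprocess


def run_val(domain: str, problem: str, plan: str, binary: str = "Validate"):
    """Run VAL on one plan; return (is_valid, report), or None if VAL is not installed."""
    if shutil.which(binary) is None:
        return None
    result = subprocess.run(
        [binary, "-v", domain, problem, plan],
        capture_output=True, text=True, check=False,
    )
    report = result.stdout + result.stderr
    # Assumption: a valid plan's verbose report contains the exact line "Plan valid".
    is_valid = any(line.strip() == "Plan valid" for line in report.splitlines())
    return is_valid, report


# Returns None here unless a binary with this name is installed.
print(run_val("domain.pddl", "problem.pddl", "plan.txt", binary="no-such-validator"))
```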

Scaling and Generalization

- Domain Generalization: The approach improves plan validity in every evaluated domain, but absolute performance still drops as domain complexity increases and when predicates are obfuscated.

- PDDL Feature Coverage: The current framework is limited to a subset of PDDL (no conditional effects, durative actions, etc.). Extending to full PDDL coverage is a non-trivial future direction.
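Because the framework covers only a subset of PDDL, a pipeline can screen domains before using them. A minimal sketch that reads the `:requirements` block and flags out-of-scope features; the set contains the two features named above plus `:adl`, which the PDDL specification defines to include conditional effects. The full list of unsupported features is not given here, so extend the set as needed.

```python
import re

# Out-of-scope requirement flags; ":adl" is listed because it implies conditional effects.
UNSUPPORTED = {":conditional-effects", ":durative-actions", ":adl"}


def unsupported_requirements(domain_pddl: str) -> set[str]:
    """Return the :requirements flags in a domain that fall outside the supported subset."""
    match = re.search(r"\(:requirements([^)]*)\)", domain_pddl, re.IGNORECASE)
    if not match:
        return set()
    flags = {flag.lower() for flag in match.group(1).split()}
    return flags & UNSUPPORTED


print(unsupported_requirements("(define (domain bw) (:requirements :strips :typing))"))  # set()
print(unsupported_requirements("(define (domain x) (:requirements :strips :durative-actions))"))
# {':durative-actions'}
```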

Limitations

- No Optimality Guarantee: The focus is on satisficing planning (finding any valid plan), not optimal planning (finding a minimum-length action sequence).

- Dependence on External Validators: Current LLMs lack reliable self-verification; external tools are required for robust logical validation.

- Fixed Iteration Limits: The number of feedback loops is fixed; dynamic iteration control could improve efficiency.
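The fixed-iteration setting can be pictured as a generate-validate-revise loop with a hard cap of η rounds. This is a generic sketch with hypothetical `generate` and `validate` callables; exiting early once a plan is valid is an assumption of the sketch, and a dynamic scheme would replace the fixed cap with an adaptive stopping rule.

```python
from typing import Callable


def refine_with_feedback(problem, generate: Callable, validate: Callable, eta: int):
    """Generate a plan, then revise it from validator feedback for at most `eta` rounds.

    generate(problem, feedback) -> plan; validate(problem, plan) -> (ok, feedback).
    Returns (plan, is_valid, rounds_used).
    """
    plan = generate(problem, None)        # first attempt, no feedback yet
    ok, feedback = validate(problem, plan)
    rounds = 0
    while not ok and rounds < eta:        # fixed cap on feedback rounds
        plan = generate(problem, feedback)
        ok, feedback = validate(problem, plan)
        rounds += 1
    return plan, ok, rounds


# Toy stand-ins: each attempt is numbered from the previous feedback; attempt 3 is valid.
def toy_generate(problem, feedback):
    return 1 if feedback is None else feedback + 1


def toy_validate(problem, plan):
    return plan >= 3, plan


print(refine_with_feedback("p", toy_generate, toy_validate, eta=5))  # (3, True, 2)
print(refine_with_feedback("p", toy_generate, toy_validate, eta=1))  # (2, False, 1)
```

With a cap of 1 the toy problem is never solved, and with a cap of 5 three rounds go unused; that gap between the cap and the rounds actually needed is the inefficiency dynamic iteration control would target.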

Implications and Future Directions

The PDDL-Instruct framework demonstrates that explicit logical chain-of-thought instruction tuning, combined with external validation, can substantially improve the symbolic planning capabilities of LLMs. This bridges a key gap between neural and symbolic AI, enabling LLMs to generate plans that are not only syntactically correct but also logically valid.

Practical implications include improved reliability and interpretability of LLM-generated plans in domains such as robotics, autonomous systems, and decision support. The approach also provides a template for integrating formal verification into other sequential reasoning tasks, such as theorem proving or complex multi-step problem solving.

On the theoretical side, the results indicate that CoT reasoning, when properly structured and externally validated, can be effective for planning tasks. This qualifies prior claims that CoT is unsuitable for planning, although the gains reported here rely on structured instruction tuning and validator feedback rather than on prompting alone.

Future research should address:

- Extending to optimal planning and richer PDDL features.

- Developing self-verification capabilities within LLMs to reduce reliance on external validators.

- Optimizing instruction tuning data for maximal learning efficiency.

- Expanding to broader domains and more complex sequential decision-making tasks.

Conclusion

PDDL-Instruct provides a rigorous, empirically validated methodology for teaching LLMs to perform symbolic planning via logical chain-of-thought instruction tuning. By decomposing planning into verifiable reasoning steps and leveraging external validation, the approach achieves substantial improvements in plan validity and generalization. This work lays a foundation for more trustworthy, interpretable, and capable AI planning systems, and suggests promising avenues for further integration of neural and symbolic reasoning in large-scale LLMs.