Essay on "LLM+P: Empowering LLMs with Optimal Planning Proficiency"

The paper presents an intriguing approach to enhancing large language models (LLMs) by integrating them with classical planning systems, yielding the LLM+P framework. The motivation is the observation that although LLMs show strong linguistic competence, they have significant limitations in functional competence, particularly in solving long-horizon planning problems. LLM+P addresses this gap by combining the strengths of LLMs and classical planners.

Overview

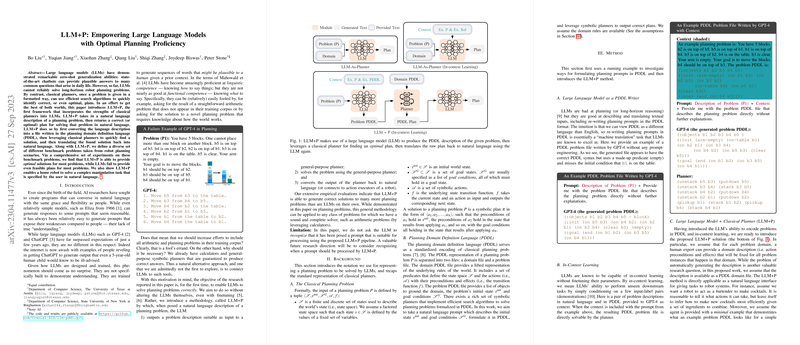

LLMs have demonstrated remarkable abilities in generating plausible natural language responses, yet they struggle with structured problem-solving tasks such as robot planning. Classical planners, in contrast, are adept at solving these tasks once the tasks are described in a suitable formal language such as PDDL (Planning Domain Definition Language). LLM+P combines the two: it translates a natural language problem description into PDDL, uses a classical planner to generate a solution, and converts the resulting plan back into natural language.
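The three-stage flow described above can be sketched as a small pipeline with pluggable stages. This is a minimal illustration, not the authors' code: the class and function names (`LLMPlusPPipeline`, `stub_translator`, `stub_planner`, `verbalize`) are hypothetical, the LLM and the planner are replaced by fixed stubs, and the domain string is a placeholder. A real system would call a language model in the first stage and a planner such as Fast Downward in the second.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical signatures for the three stages (not an API from the paper).
Translator = Callable[[str], str]          # natural language -> PDDL problem text
Planner = Callable[[str, str], List[str]]  # (domain PDDL, problem PDDL) -> actions
Verbalizer = Callable[[List[str]], str]    # actions -> natural language


@dataclass
class LLMPlusPPipeline:
    domain_pddl: str      # assumed to be supplied by a human expert
    to_pddl: Translator   # role played by the LLM in LLM+P
    plan: Planner         # role played by a classical planner
    to_text: Verbalizer   # renders the plan back into prose

    def solve(self, description: str) -> str:
        problem_pddl = self.to_pddl(description)             # step 1: NL -> PDDL
        actions = self.plan(self.domain_pddl, problem_pddl)  # step 2: search
        return self.to_text(actions)                         # step 3: plan -> NL


def stub_translator(description: str) -> str:
    # Stand-in for an LLM call; always returns the same two-block problem.
    return (
        "(define (problem a-on-b) (:domain blocksworld)\n"
        "  (:objects a b)\n"
        "  (:init (clear a) (clear b) (ontable a) (ontable b) (handempty))\n"
        "  (:goal (on a b)))"
    )


def stub_planner(domain_pddl: str, problem_pddl: str) -> List[str]:
    # Stand-in for a real planner; returns a fixed plan in PDDL action syntax.
    return ["(pick-up a)", "(stack a b)"]


def verbalize(actions: List[str]) -> str:
    # Strip parentheses, turn hyphens into spaces, and join the steps.
    steps = [a.strip("()").replace("-", " ") for a in actions]
    return "; then ".join(steps) + "."


# Placeholder domain text; a real run would pass the full domain PDDL file.
pipeline = LLMPlusPPipeline("(define (domain blocksworld) ...)",
                            stub_translator, stub_planner, verbalize)
print(pipeline.solve("Put block a on top of block b."))
# pick up a; then stack a b.
```

Keeping each stage behind a plain callable makes the division of labor explicit: the language model only ever produces PDDL text, and correctness of the plan is the planner's responsibility.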

The framework is applied to seven robot planning domains drawn from past International Planning Competitions. These domains include tasks such as arranging blocks, mixing cocktails, and moving objects between rooms. In the experiments, LLM+P outperforms LLMs used alone, notably solving complex planning tasks that require careful reasoning about state transitions and constraints.

Key Contributions

The paper's key contribution is the LLM+P methodology, which uses in-context learning to employ the LLM as a PDDL translator. Given a single example that pairs a natural language problem description with its PDDL problem file, the LLM produces a more precise PDDL representation of a new problem. This design relies on the LLM's strength in language understanding and translation rather than asking it to predict task outcomes or plans directly.
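The one-shot translation step can be illustrated by how such a prompt might be assembled. This is a sketch under stated assumptions: the function name `build_one_shot_prompt` and the section labels are hypothetical and do not reproduce the paper's exact prompt wording, and including the domain file in the prompt is left optional because that detail is an assumption here.

```python
from typing import Optional


def build_one_shot_prompt(example_nl: str, example_pddl: str, task_nl: str,
                          domain_pddl: Optional[str] = None) -> str:
    """Assemble a one-shot prompt asking an LLM to write a PDDL problem file.

    Section labels are illustrative, not the paper's exact wording; whether
    the domain file belongs in the prompt is left optional here.
    """
    parts = []
    if domain_pddl is not None:
        parts.append("Domain (PDDL):\n" + domain_pddl.strip())
    # The worked example: a natural language problem and its PDDL translation.
    parts.append("Example problem (natural language):\n" + example_nl.strip())
    parts.append("Example problem (PDDL):\n" + example_pddl.strip())
    parts.append("New problem (natural language):\n" + task_nl.strip())
    # End with an open slot so the model continues with the new PDDL file.
    parts.append("New problem (PDDL):")
    return "\n\n".join(parts)


prompt = build_one_shot_prompt(
    example_nl="You have blocks a and b on the table. Put a on b.",
    example_pddl="(define (problem ex) (:domain blocksworld) (:objects a b) "
                 "(:init (ontable a) (ontable b) (clear a) (clear b) (handempty)) "
                 "(:goal (on a b)))",
    task_nl="You have blocks c and d on the table. Put d on c.",
)
print(prompt)
```

Ending the prompt with an empty "New problem (PDDL):" slot mirrors the example pair, so the model's most natural continuation is a PDDL problem file rather than a plan.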

The empirical results demonstrate the method's effectiveness, with high success rates across several domains compared to standard LLM-based planning approaches. The framework's ability to provide optimal solutions, inherited from the classical planner that actually produces its plans, underscores its potential utility in real-world robot applications.
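Why a classical planner can guarantee optimal plans can be illustrated with the simplest complete and optimal search: breadth-first search over STRIPS states, which returns a shortest plan when every action has unit cost. This is a swapped-in toy, not the planner LLM+P uses; the helper names `blocksworld_actions` and `bfs_plan` are hypothetical, and the operators follow the standard four-operator blocks-world formulation.

```python
from collections import deque
from itertools import permutations
from typing import Dict, FrozenSet, List, Optional, Tuple

Atom = Tuple[str, ...]
State = FrozenSet[Atom]
# (name, preconditions, add effects, delete effects), STRIPS style
Action = Tuple[str, State, State, State]


def blocksworld_actions(blocks: List[str]) -> List[Action]:
    """Ground the four standard blocks-world operators for the given blocks."""
    fs = frozenset
    acts: List[Action] = []
    for x in blocks:
        acts.append((f"pick-up {x}",
                     fs({("clear", x), ("ontable", x), ("handempty",)}),
                     fs({("holding", x)}),
                     fs({("clear", x), ("ontable", x), ("handempty",)})))
        acts.append((f"put-down {x}",
                     fs({("holding", x)}),
                     fs({("clear", x), ("ontable", x), ("handempty",)}),
                     fs({("holding", x)})))
    for x, y in permutations(blocks, 2):
        acts.append((f"stack {x} {y}",
                     fs({("holding", x), ("clear", y)}),
                     fs({("on", x, y), ("clear", x), ("handempty",)}),
                     fs({("holding", x), ("clear", y)})))
        acts.append((f"unstack {x} {y}",
                     fs({("on", x, y), ("clear", x), ("handempty",)}),
                     fs({("holding", x), ("clear", y)}),
                     fs({("on", x, y), ("clear", x), ("handempty",)})))
    return acts


def bfs_plan(init: State, goal: State, actions: List[Action]) -> Optional[List[str]]:
    """Return a shortest action sequence reaching the goal, or None."""
    frontier = deque([init])
    parent: Dict[State, Optional[Tuple[State, str]]] = {init: None}
    while frontier:
        state = frontier.popleft()
        if goal <= state:
            # Walk parent links back to the initial state.
            steps: List[str] = []
            while parent[state] is not None:
                prev, name = parent[state]
                steps.append(name)
                state = prev
            return steps[::-1]
        for name, pre, add, delete in actions:
            if pre <= state:
                nxt = (state - delete) | add  # apply deletes, then adds
                if nxt not in parent:
                    parent[nxt] = (state, name)
                    frontier.append(nxt)
    return None


# Sussman anomaly: c sits on a; goal is a on b on c.
init = frozenset({("on", "c", "a"), ("ontable", "a"), ("ontable", "b"),
                  ("clear", "c"), ("clear", "b"), ("handempty",)})
goal = frozenset({("on", "a", "b"), ("on", "b", "c")})
plan = bfs_plan(init, goal, blocksworld_actions(["a", "b", "c"]))
print(plan)
```

Because breadth-first search expands states in order of depth, the first goal state it reaches is at minimal depth, so the plan is optimal under unit action costs. Practical planners reach the same guarantee on much larger problems with informed search and admissible heuristics.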

Strong Numerical Results

A robust set of experiments underscores LLM+P's performance. In the Blocksworld domain, for instance, LLM+P achieved a 90% success rate, significantly outperforming LLM-only methods, which struggled to generate feasible plans. Similarly, in the Grippers domain, LLM+P reached a success rate near 100%, indicating its reliability.

Implications and Future Directions

The implications of LLM+P are considerable, offering practical benefits for robotics and AI applications that require natural language interfaces for complex tasks. By bridging the gap between natural language processing and structured problem-solving, LLM+P can be instrumental in developing more intuitive interfaces for human-robot interaction.

Theoretical implications include advancing the understanding of how LLMs can be effectively integrated with other AI systems to bolster their reasoning capabilities. Future work could focus on reducing the dependency on manually provided domain-specific information and enabling automatic recognition of planning tasks suitable for LLM+P.

Moreover, applying the framework to a broader range of problem domains could widen its applicability. The paper points to having the system autonomously recognize which prompts call for planning as a promising direction, one that could improve the scalability and flexibility of the LLM+P approach.

In summary, LLM+P represents a significant step forward in enhancing LLM capabilities through strategic integration with classical planning systems, offering a robust pathway for solving complex planning problems via natural language.