- The paper introduces ACERL, which leverages contrastive learning and adaptive random masking to achieve minimax optimal convergence rates for edge representation learning.

- The paper details a dual-module approach using augmented network pairs and signal-to-noise ratio-based masking to enhance noise reduction and information preservation.

- The paper demonstrates robust improvements in downstream tasks like classification, edge detection, and community detection compared to methods such as Sparse PCA.

Contrastive Network Representation Learning

Introduction

Network representation learning (NRL) seeks to map network data into a low-dimensional space that preserves structural and semantic properties, improving downstream tasks such as classification, edge identification, and community detection. The paper "Contrastive Network Representation Learning" (2509.11316) introduces a method for analyzing sparse, high-dimensional networks, particularly in settings such as brain connectivity where node or edge covariates are scarce. The proposed method, Adaptive Contrastive Edge Representation Learning (ACERL), combines contrastive learning with an adaptive random masking mechanism and attains the minimax optimal convergence rate for estimating edge representations.

Methodology

The core innovation of ACERL lies in two modules: contrastive learning applied to augmented network pairs, and a data-adaptive random masking mechanism that tailors the augmentation to the signal-to-noise ratio of each edge. By adjusting how likely each edge is to be masked, the mechanism makes the learned representation robust to the heterogeneous noise levels common in brain connectivity analysis.

Construction of Augmented Views

ACERL generates augmented data views using two linear transformations of the original edge data matrix, differing by a random diagonal masking matrix. The masking probability for each element is adaptively estimated from the signal-to-noise ratio, enabling the approach to strike a balance between information preservation and noise reduction.
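A minimal NumPy sketch of the view construction follows. It assumes the two views are A x and (I − A) x for a diagonal 0/1 mask A drawn independently for each observation. The function names and the SNR-to-probability rule in `masking_probabilities` are hypothetical; the paper estimates its masking probabilities from the data, and its exact rule is not reproduced here.

```python
import numpy as np

def masking_probabilities(snr, p_min=0.1, p_max=0.9):
    """Hypothetical rule mapping per-edge SNR to the probability of entering view 1.

    Illustrative only: a monotone squashing of SNR into [p_min, p_max),
    not the estimator used by ACERL.
    """
    snr = np.asarray(snr, dtype=float)
    return p_min + (p_max - p_min) * snr / (1.0 + snr)

def augmented_views(X, probs, rng):
    """Split each row x of X into A x and (I - A) x.

    X: (n, d) edge data matrix, one vectorized network per row.
    probs: (d,) per-edge probability that the diagonal entry of A equals 1.
    A fresh mask is drawn for every row (an assumption of this sketch).
    """
    A = rng.random(X.shape) < probs   # diagonal of A, one row per observation
    view1 = np.where(A, X, 0.0)       # A x
    view2 = np.where(A, 0.0, X)       # (I - A) x
    return view1, view2

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 6))
probs = masking_probabilities([0.1, 0.5, 1.0, 2.0, 5.0, 10.0])
view1, view2 = augmented_views(X, probs, rng)
```

Every edge of an observation lands in exactly one view, so `view1 + view2` recovers `X`; the masking probabilities only control how the edges are split between the two views.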

Contrastive Loss Function

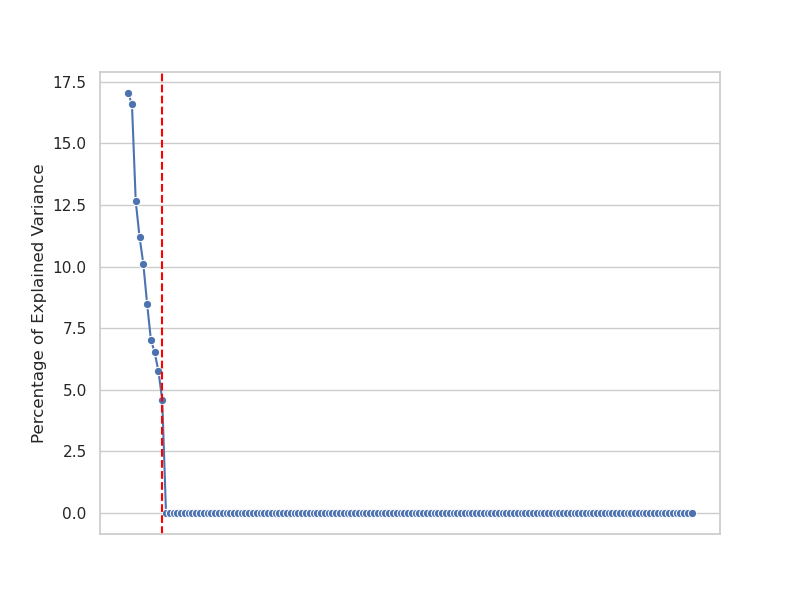

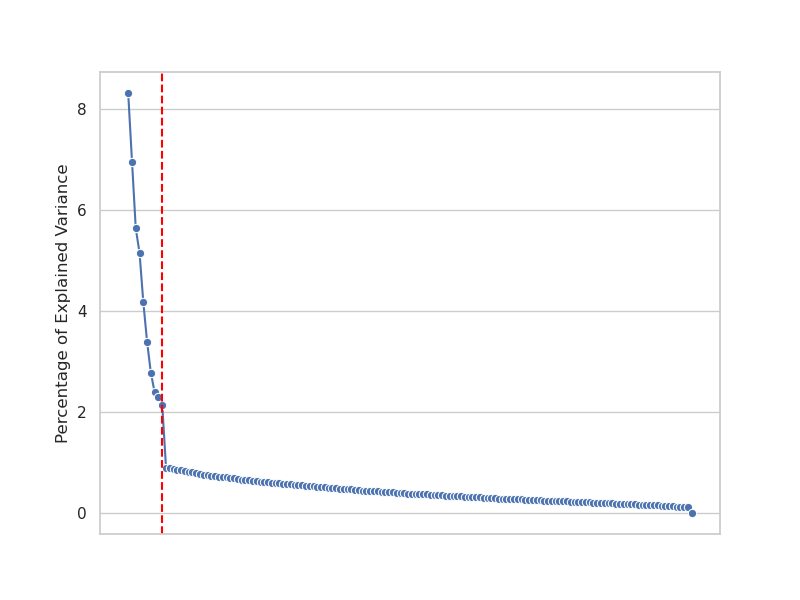

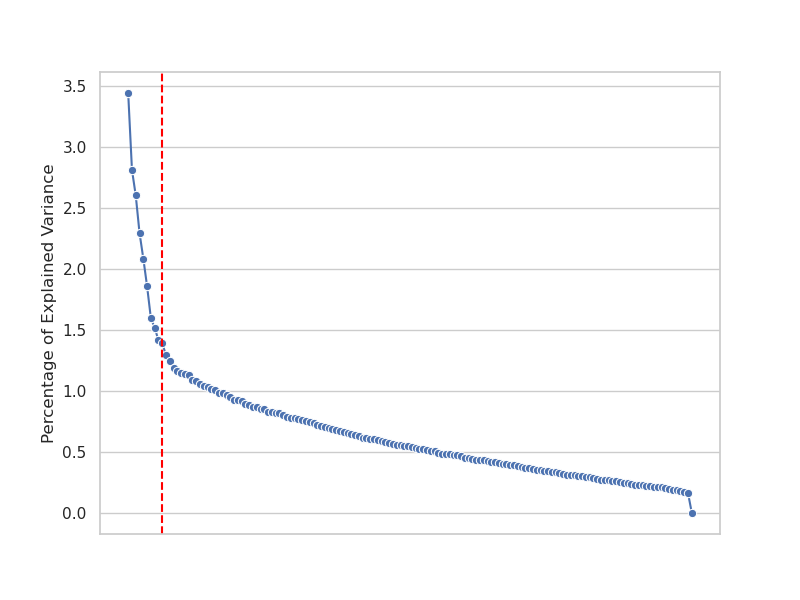

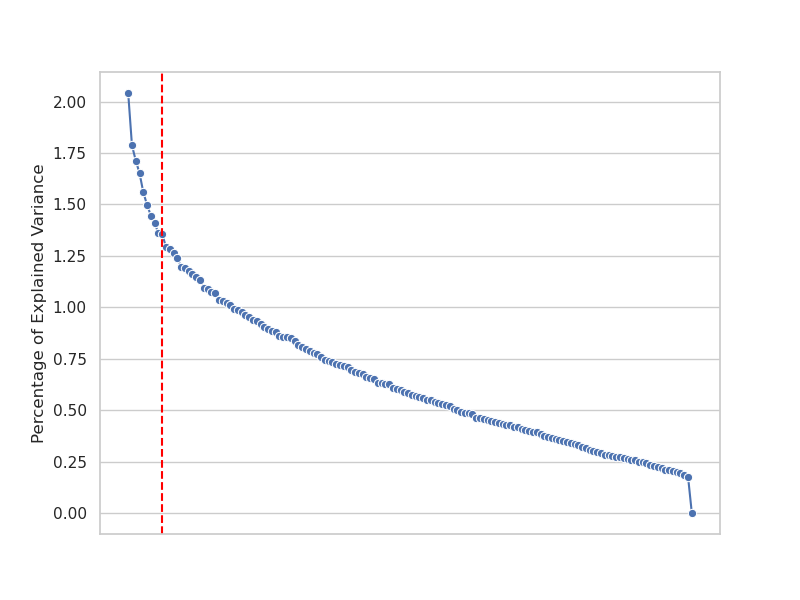

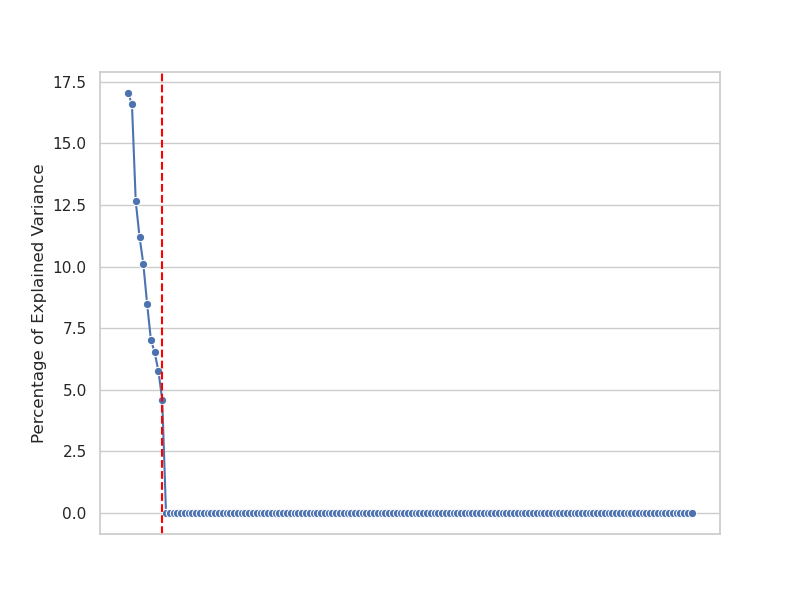

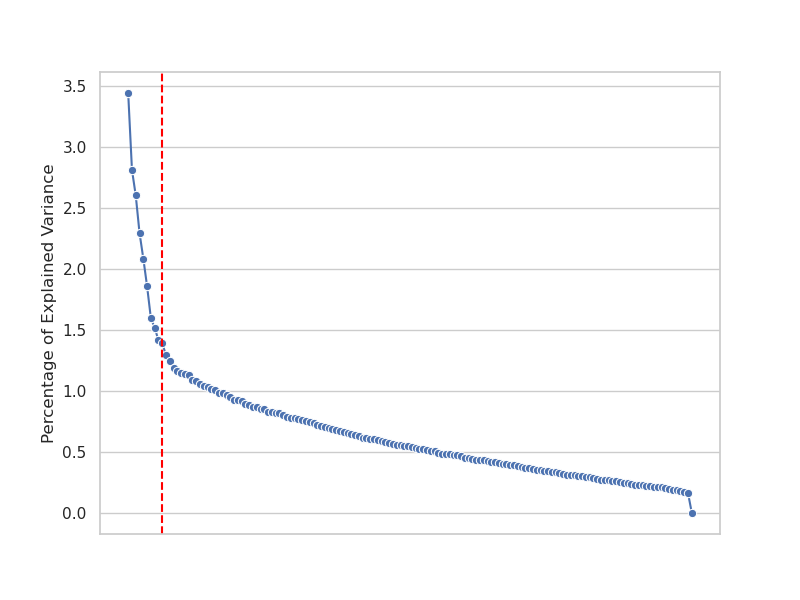

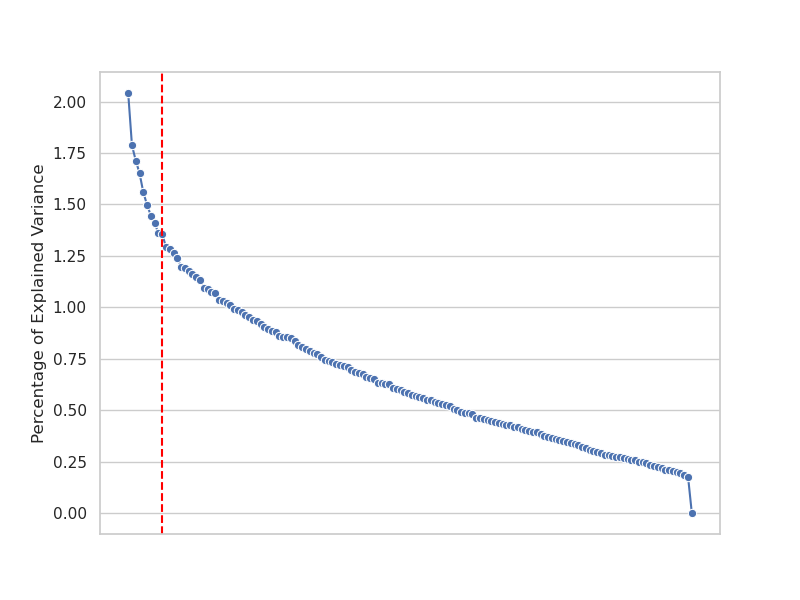

A unique triplet contrastive loss is employed, designed to minimize the distance between similar pairs and maximize the distance between dissimilar pairs. This loss formulation allows learning the edge embedding matrix efficiently, providing theoretical guarantees in terms of non-asymptotic error bounds and minimax optimal rates (Figure 1).

Figure 1: Percentage of explained variance for each component for n=250, d=990, and r=10 under different noise levels.
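To make the triplet loss described above concrete, here is a generic triplet margin loss for a linear encoder z = Wᵀx, written in NumPy. It is a stand-in, not the paper's exact objective: the anchor is the first view of observation i, the positive is its second view, and the negative is the second view of observation i + 1 (cyclically). All of these choices, and the `margin` parameter, are assumptions of this sketch.

```python
import numpy as np

def triplet_contrastive_loss(W, view1, view2, margin=1.0):
    """Generic triplet margin loss for a linear encoder z = W^T x (illustrative).

    Anchor: view1[i]; positive: view2[i] (same observation);
    negative: view2[(i + 1) % n] (a different observation).
    """
    Z1 = view1 @ W                        # (n, r) anchor embeddings
    Z2 = view2 @ W                        # (n, r) positive embeddings
    Zneg = np.roll(Z2, shift=-1, axis=0)  # row i holds Z2[(i + 1) % n]
    d_pos = np.sum((Z1 - Z2) ** 2, axis=1)
    d_neg = np.sum((Z1 - Zneg) ** 2, axis=1)
    return float(np.mean(np.maximum(0.0, d_pos - d_neg + margin)))

rng = np.random.default_rng(0)
W = rng.normal(size=(6, 2))               # d = 6 edges, r = 2 embedding dims
V1, V2 = rng.normal(size=(8, 6)), rng.normal(size=(8, 6))
loss = triplet_contrastive_loss(W, V1, V2)
```

With a linear encoder, margin-based losses depend on the scale of W, so in practice a norm constraint or regularizer is needed; the sketch omits it.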

Iterative Estimation Process

ACERL minimizes the loss with a two-level iterative procedure based on truncated gradient descent, iteratively refining the embeddings while updating the masking parameters. The paper's analysis shows that this procedure converges efficiently and comes with sharp performance guarantees.
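The sketch below illustrates only the inner level of such a scheme: gradient descent on a row-sparse, rank-r factorization, with a hard-thresholding step that keeps the s rows (edges) of largest ℓ2 norm after each update. The objective f(W) = ¼‖M − WWᵀ‖²_F, the spectral initialization, and the names `truncate_rows` and `truncated_gd` are assumptions standing in for ACERL's contrastive loss; the outer level that re-estimates the masking probabilities is omitted.

```python
import numpy as np

def truncate_rows(W, s):
    """Keep the s rows of W with the largest l2 norms and zero out the rest."""
    keep = np.argsort(np.linalg.norm(W, axis=1))[-s:]
    out = np.zeros_like(W)
    out[keep] = W[keep]
    return out

def truncated_gd(M, r, s, step=0.1, n_iter=300):
    """Fit M ~ W W^T with W (d x r) having at most s nonzero rows.

    M is a symmetric (d x d) target matrix. Each iteration takes a gradient
    step on f(W) = 0.25 * ||M - W W^T||_F^2 and then hard-thresholds rows.
    """
    vals, vecs = np.linalg.eigh(M)                              # ascending eigenvalues
    W = vecs[:, -r:] * np.sqrt(np.clip(vals[-r:], 0.0, None))  # spectral init
    W = truncate_rows(W, s)
    for _ in range(n_iter):
        grad = -(M - W @ W.T) @ W                               # gradient of f at W
        W = truncate_rows(W - step * grad, s)
    return W

u = np.zeros(10)
u[:3] = 1 / np.sqrt(3)                    # sparse signal on edges 0, 1, 2
W = truncated_gd(4.0 * np.outer(u, u), r=1, s=3)
```

The truncation step is what makes the estimate row-sparse, so the surviving rows directly identify the selected edges.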

Theoretical Analysis

ACERL's theoretical framework focuses on robust embedding estimation under heterogeneous noise, establishing conditions under which the method achieves minimax optimal convergence. The theoretical results are supported by simulations showing that the adaptive masking handles varying noise levels robustly and that the edge embeddings preserve the underlying network structure.

Downstream Tasks

ACERL demonstrates strong performance across several downstream tasks including:

- Classification: Comparing patient traits from edge embeddings.

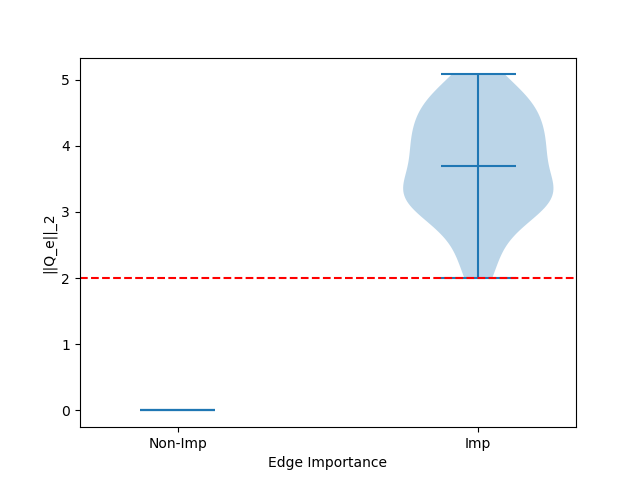

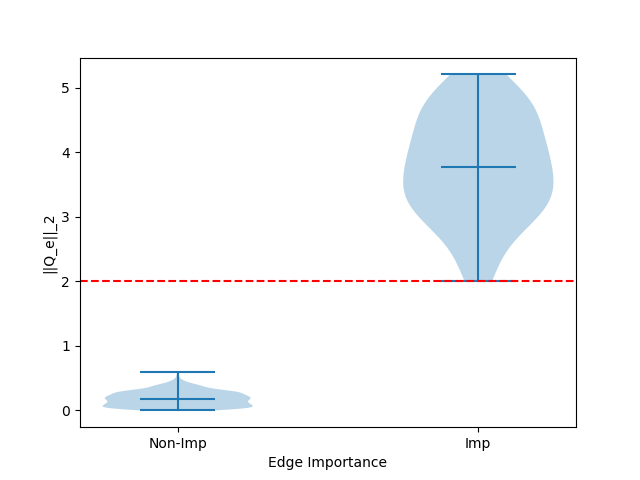

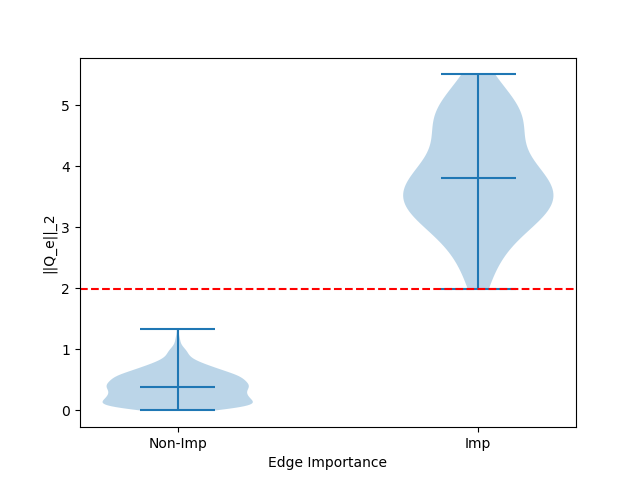

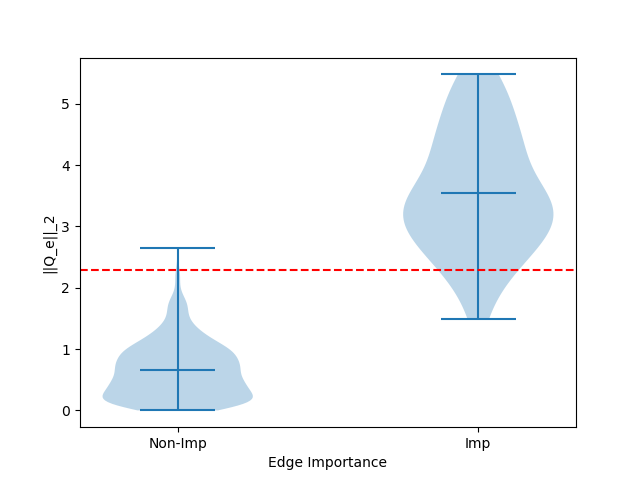

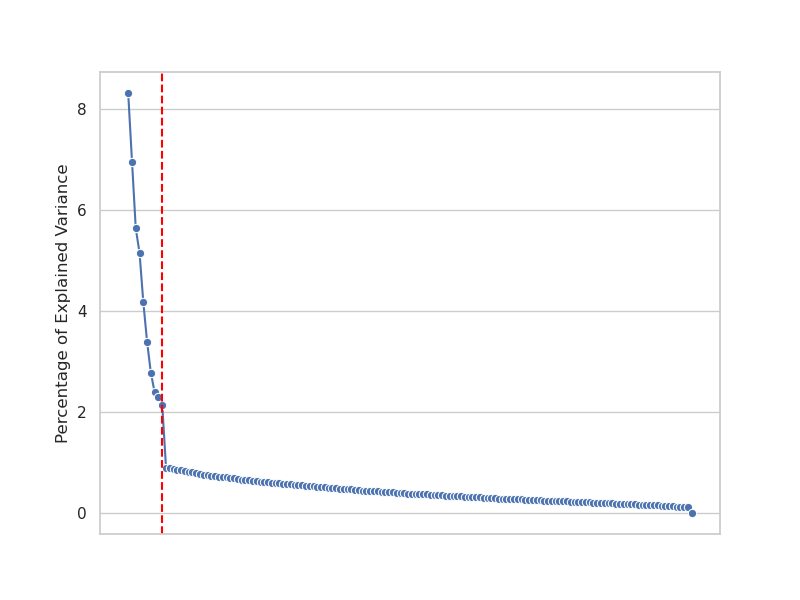

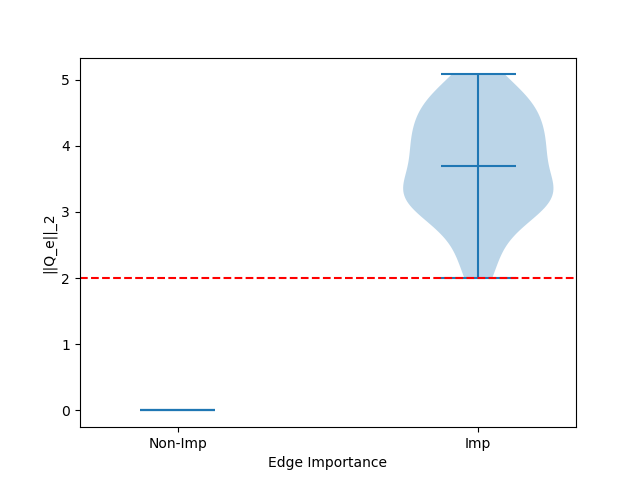

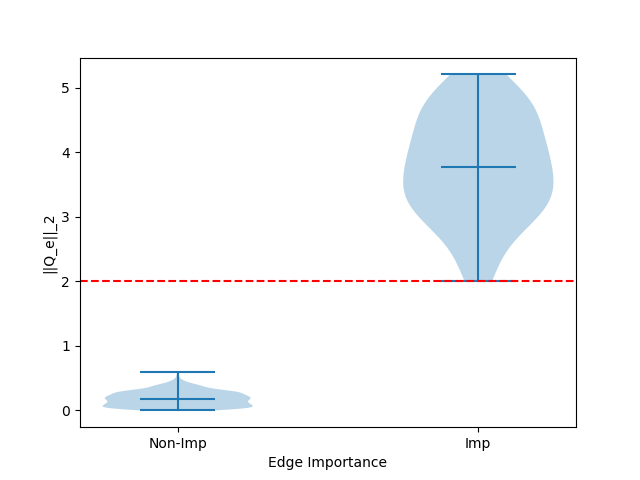

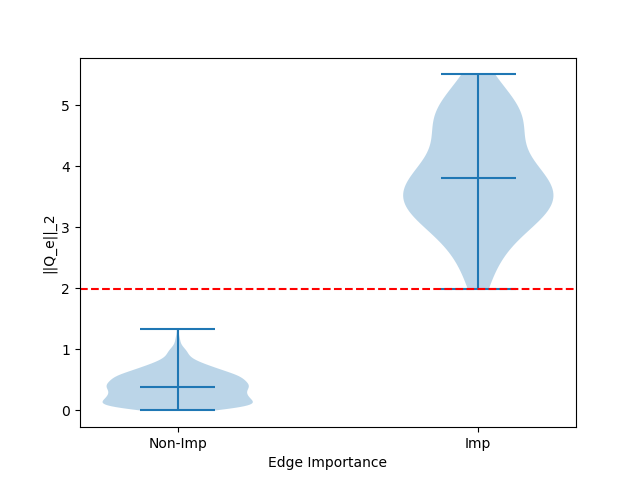

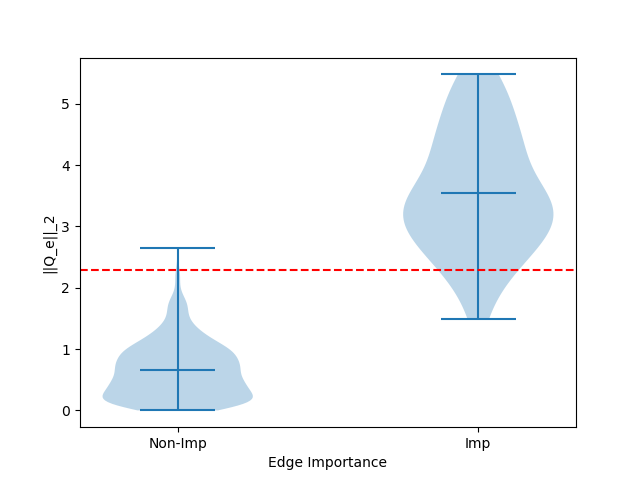

- Edge Selection: Identifying key edges using the norm of the learned embeddings, validated through Violin plots (Figure 2).

- Community Detection: Applying spectral clustering on the learned embeddings reveals coherent community structures in graphs, even under noise.

Figure 2: Violin plot for the ℓ2 norms of the edge embeddings for ACERL. The red line corresponds to the true sparsity level.
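Edge selection by embedding norm can be sketched in a few lines, assuming the learned embeddings are stored as a d × r matrix with one row per edge. `select_edges` is a hypothetical helper, and the sparsity level s is an input the analyst supplies (in the simulations, the red line in Figure 2 marks the true level).

```python
import numpy as np

def select_edges(W, s):
    """Return the indices and l2 norms of the s rows of W with the largest norms.

    W: (d, r) edge embedding matrix, one row per edge.
    Indices are sorted by decreasing norm.
    """
    norms = np.linalg.norm(W, axis=1)
    top = np.argsort(norms)[::-1][:s]   # largest norms first
    return top, norms[top]

W = np.array([[0.0, 0.0], [3.0, 4.0], [1.0, 0.0], [0.0, 2.0]])
idx, vals = select_edges(W, s=2)
```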

Comparison to Sparse PCA

The paper contrasts ACERL with Sparse PCA (sPCA). Under heteroskedastic noise, sPCA struggles because it does not adjust to noise levels that vary across dimensions. ACERL's adaptive masking accounts for this variation, and together with contrastive learning's ability to extract structure from unlabeled, heterogeneous data, it yields substantial gains in classification and edge detection.
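The following toy simulation illustrates why heteroskedastic noise hurts covariance-based methods and how masked views can sidestep it. It assumes the views are A x and (I − A) x with independent 0/1 diagonal masks; then every diagonal entry of the cross-view covariance is exactly zero, so independent noise, whose variances sit on the diagonal, drops out. It uses plain PCA on a one-factor model rather than sPCA or ACERL, and the dimensions, noise levels, and fixed masking probability of 0.5 are arbitrary choices.

```python
import numpy as np

def leading_direction(C):
    """Top eigenvector of the symmetric part of C."""
    _, vecs = np.linalg.eigh((C + C.T) / 2)
    return vecs[:, -1]                    # eigh sorts eigenvalues ascending

def compare_alignment(n=20000, seed=0):
    rng = np.random.default_rng(seed)
    d = 6
    u = np.ones(d) / np.sqrt(d)           # true signal direction
    noise_sd = np.array([0.1, 0.1, 0.1, 3.0, 3.0, 3.0])  # heteroskedastic noise
    X = 3.0 * rng.normal(size=(n, 1)) * u + rng.normal(size=(n, d)) * noise_sd

    # Plain sample covariance: noise variances inflate the diagonal.
    S = X.T @ X / n

    # Masked views A x and (I - A) x, each coordinate kept with probability 0.5.
    A = rng.random((n, d)) < 0.5
    V1, V2 = np.where(A, X, 0.0), np.where(A, 0.0, X)
    C = V1.T @ V2 / n                     # diagonal is exactly zero

    align_pca = abs(leading_direction(S) @ u)
    align_masked = abs(leading_direction(C) @ u)
    return align_pca, align_masked, np.abs(np.diag(C)).max()

a_pca, a_masked, diag_max = compare_alignment()
```

With these settings, the leading direction of the masked cross-covariance aligns closely with u, while the leading direction of the sample covariance is pulled toward the three high-noise coordinates.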

Simulations and Applications

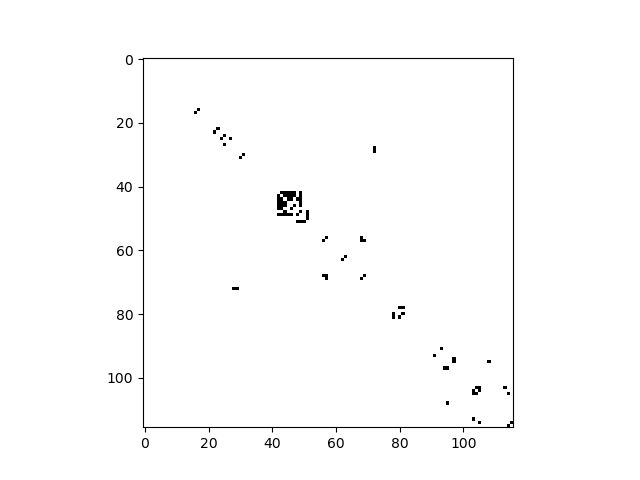

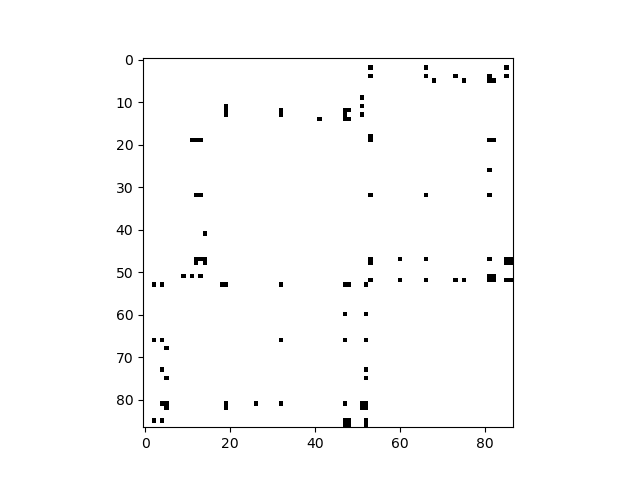

Simulations compare ACERL with baseline methods on subject classification and important-edge identification, where it shows improved accuracy and better handling of sparsity. Improvements are also observed on two real brain connectivity datasets, ABIDE and HCP (Figure 3).

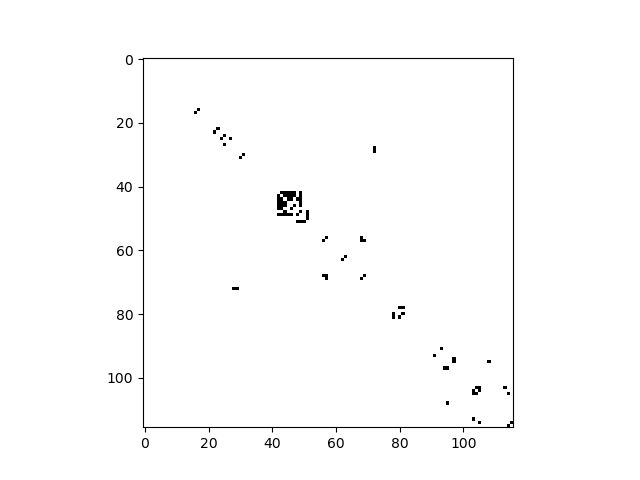

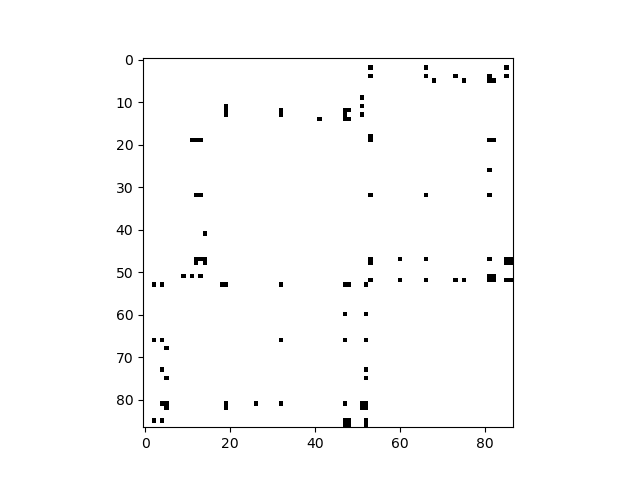

Figure 3: Sparse graph for ABIDE and HCP data at the sparsity level s=100.
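To draw a sparse graph such as the one in Figure 3, selected edge indices must be mapped back to node pairs. The sketch below assumes each network is undirected without self-loops and that edges are vectorized in the row-major upper-triangle order of `np.triu_indices`; the paper's ordering is not given here, and the helper names are hypothetical. Under that assumption d = N(N − 1)/2, so the d = 990 used in Figure 1 would correspond to N = 45 nodes, since 45 · 44 / 2 = 990.

```python
import numpy as np

def num_nodes(d):
    """Solve d = N (N - 1) / 2 for the integer node count N."""
    N = int(round((1 + np.sqrt(1 + 8 * d)) / 2))
    if N * (N - 1) // 2 != d:
        raise ValueError(f"d={d} is not N(N-1)/2 for any integer N")
    return N

def vectorize(conn):
    """Flatten a symmetric (N x N) connectivity matrix to its d upper-triangle edges."""
    rows, cols = np.triu_indices(conn.shape[0], k=1)
    return conn[rows, cols]

def edges_to_adjacency(edge_idx, d):
    """Build a symmetric 0/1 adjacency matrix from selected edge indices."""
    N = num_nodes(d)
    rows, cols = np.triu_indices(N, k=1)
    idx = np.asarray(edge_idx, dtype=int)
    adj = np.zeros((N, N), dtype=int)
    adj[rows[idx], cols[idx]] = 1
    adj[cols[idx], rows[idx]] = 1
    return adj

# Placeholder indices standing in for s = 100 selected edges.
adj = edges_to_adjacency(np.arange(100), d=990)
```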

Conclusion

The ACERL framework offers a principled approach to the sparse, heterogeneously noisy data typically encountered in brain connectivity analysis. Its theoretical guarantees and empirical robustness suggest applicability beyond neuroscience to other domains with complex network structures. Future work might explore further personalization of masking strategies and adaptation to network types beyond brain connectivity.