- The paper presents InternScenes, a dataset that combines real-world scans, procedurally generated scenes, and designer-created scenes into approximately 40,000 realistic indoor environments.

- It details a robust data processing pipeline that uses real-to-sim transformations and physics-based optimization to ensure collision-free, simulation-ready scene layouts.

- Benchmark evaluations reveal that current generative and navigation models struggle with high-density, cluttered indoor scenes, highlighting the need for new algorithmic approaches.

InternScenes: A Large-scale Simulatable Indoor Scene Dataset with Realistic Layouts

Motivation and Dataset Construction

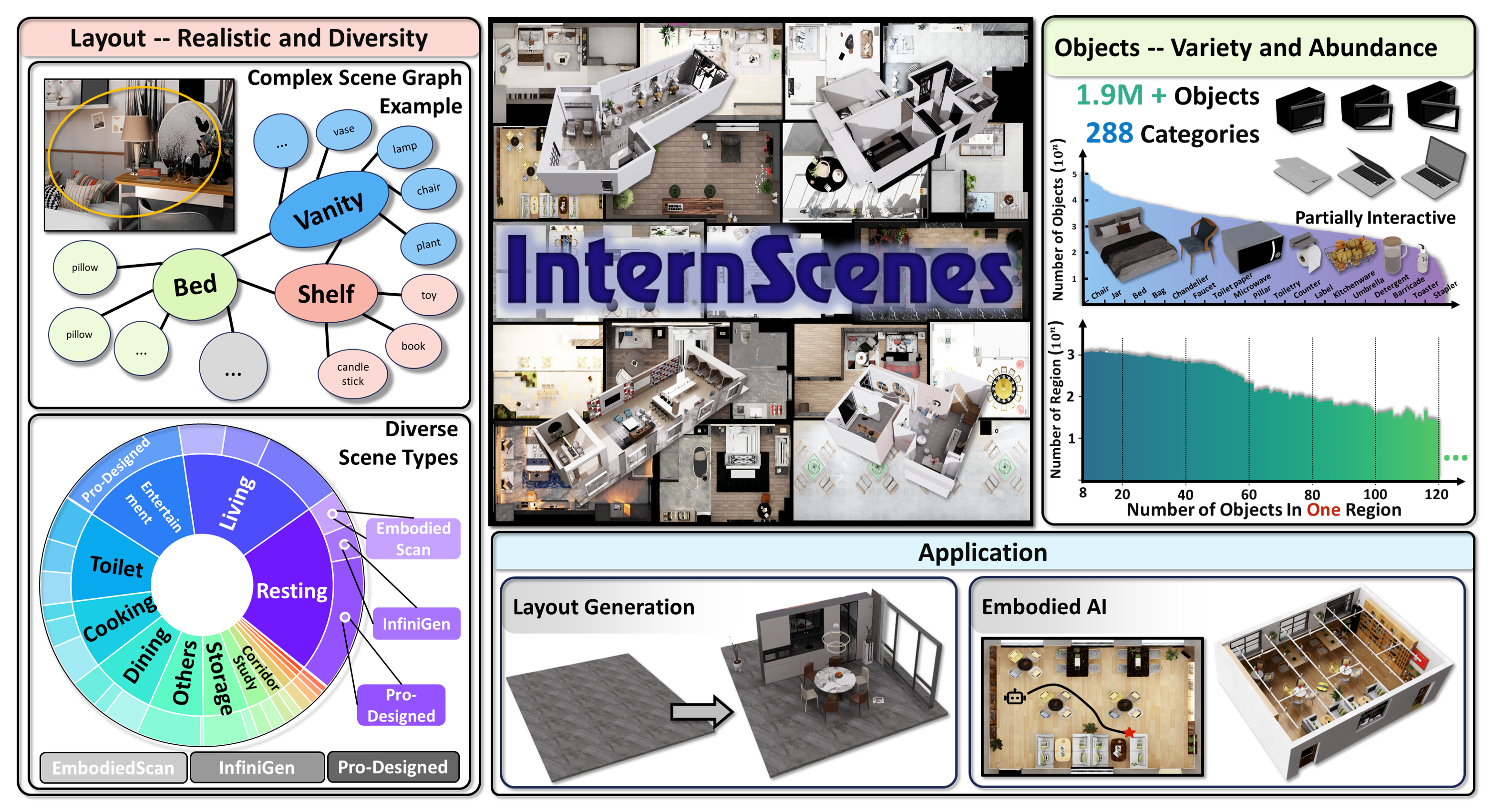

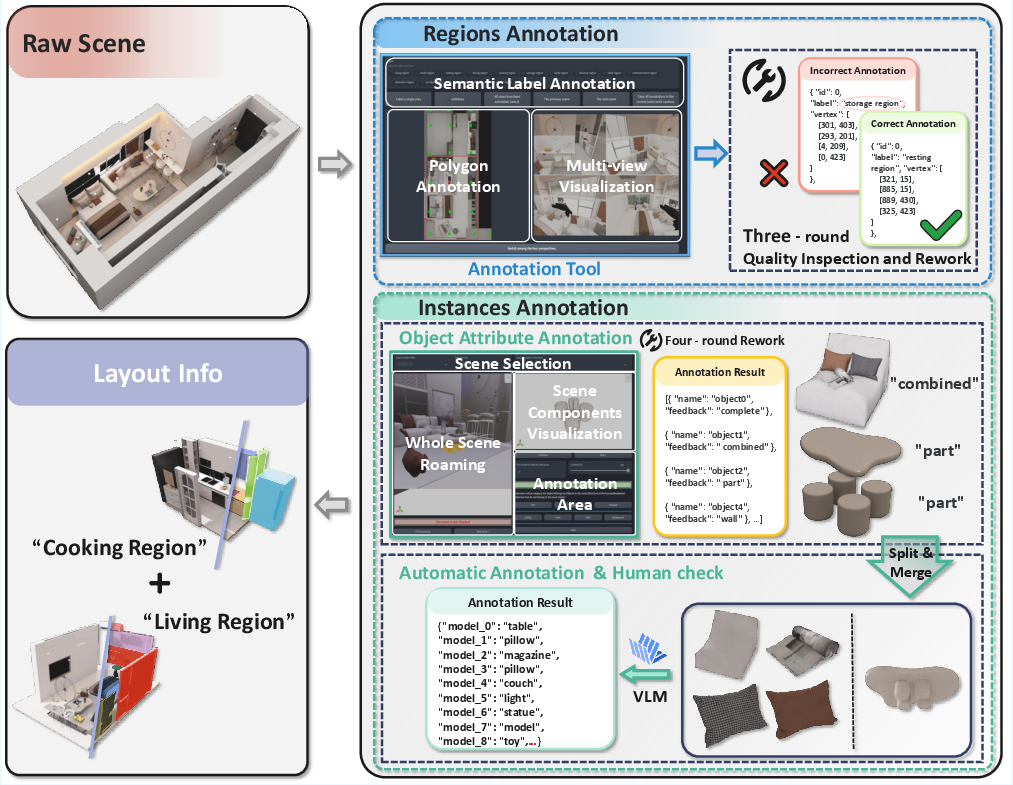

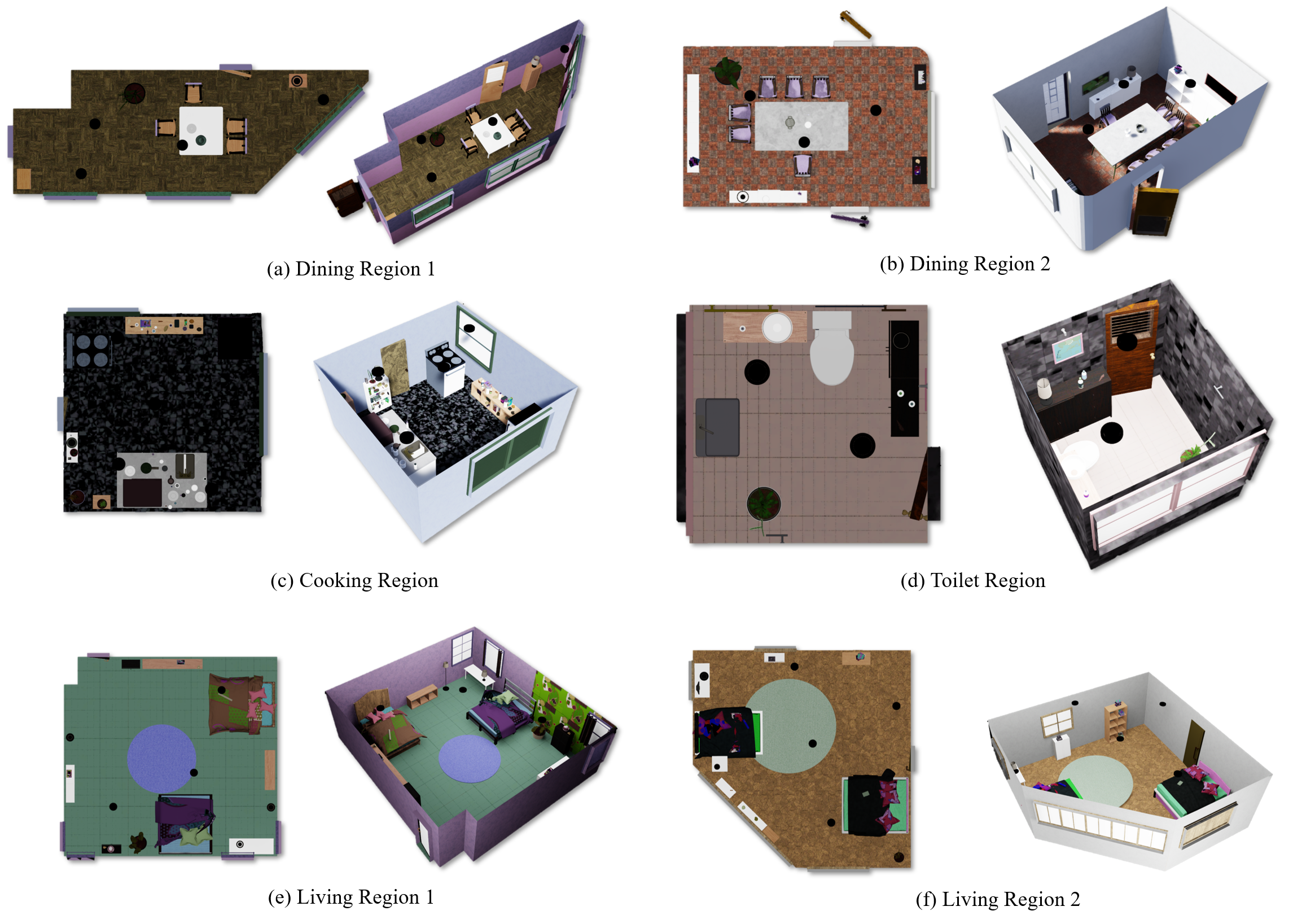

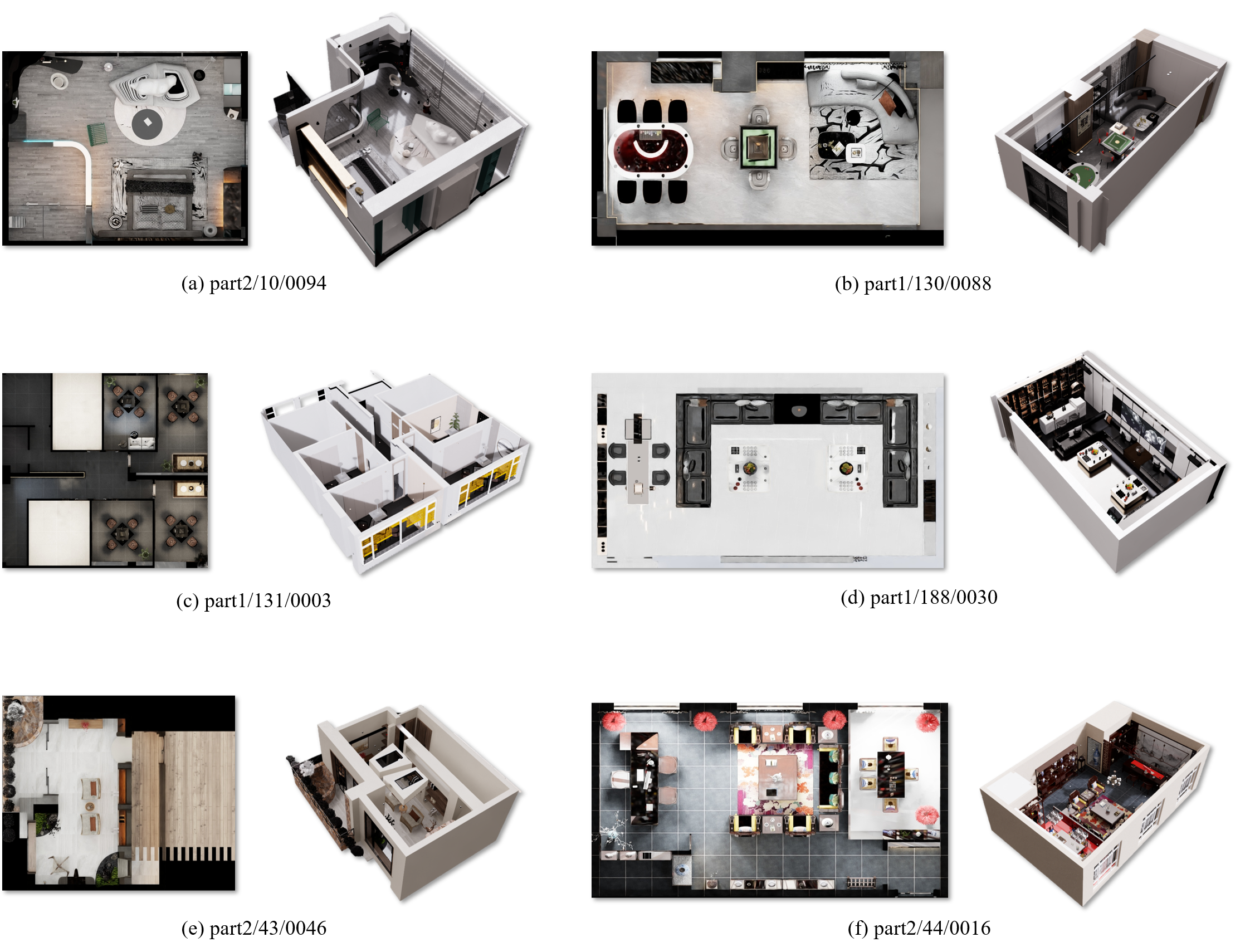

InternScenes addresses critical limitations in existing 3D indoor scene datasets for Embodied AI, including insufficient scale, lack of diversity, sanitized layouts, and poor simulatability. The dataset integrates three complementary sources: real-world scans (EmbodiedScan), procedurally generated scenes (Infinigen Indoors), and designer-created synthetic scenes. This multi-source approach yields approximately 40,000 scenes, 48,381 regions, and 1.96 million 3D objects spanning 288 object classes and 15 region types, with a high average object density (41.5 objects/region).

InternScenes preserves small items, which are typically omitted in other datasets, resulting in complex, realistic layouts. The pipeline ensures simulatability by transforming real scans into simulation-ready assets, annotating and processing designer scenes for precise layout extraction, and applying physics-based optimization to resolve object collisions and ensure physical plausibility.

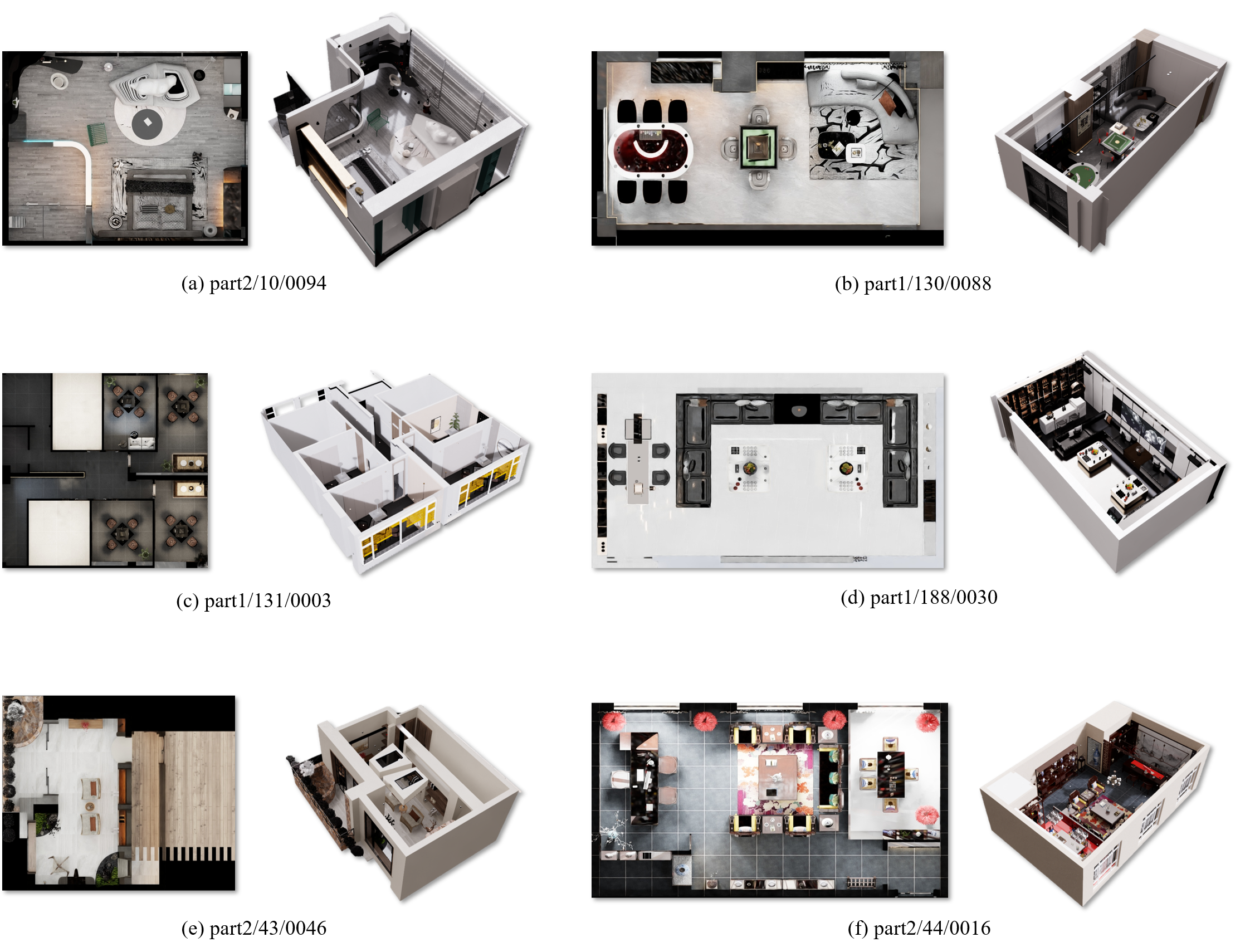

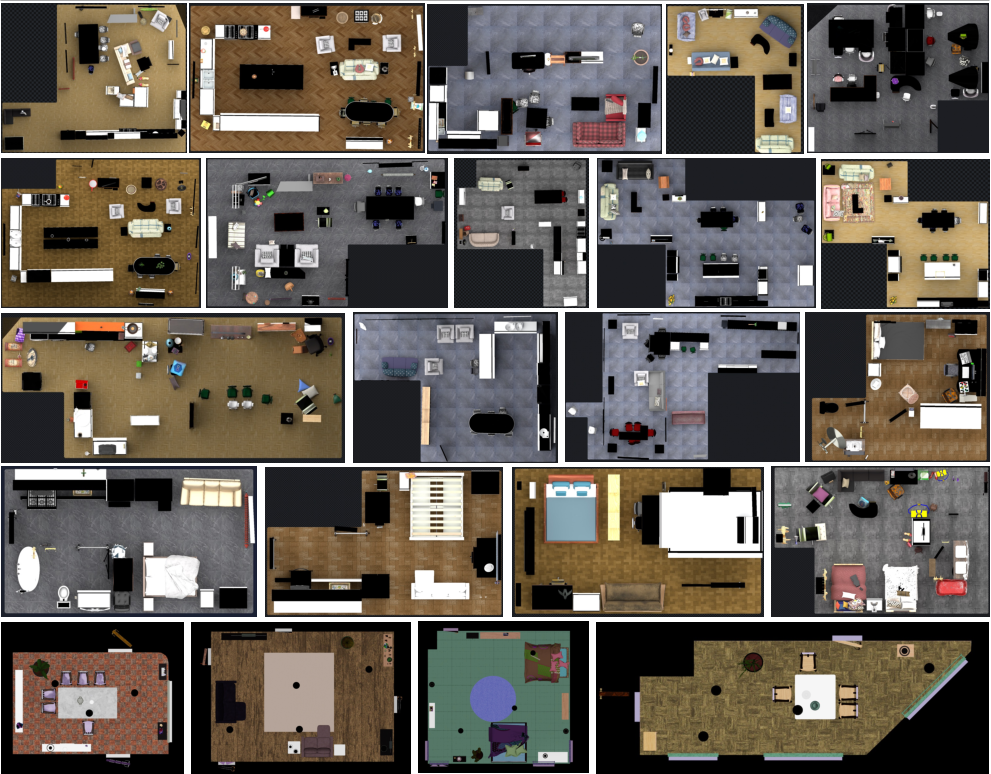

Figure 1: InternScenes is a large-scale simulatable indoor scene dataset with diverse layouts and a wide variety of 3D objects. It supports tasks such as layout generation and visual navigation.

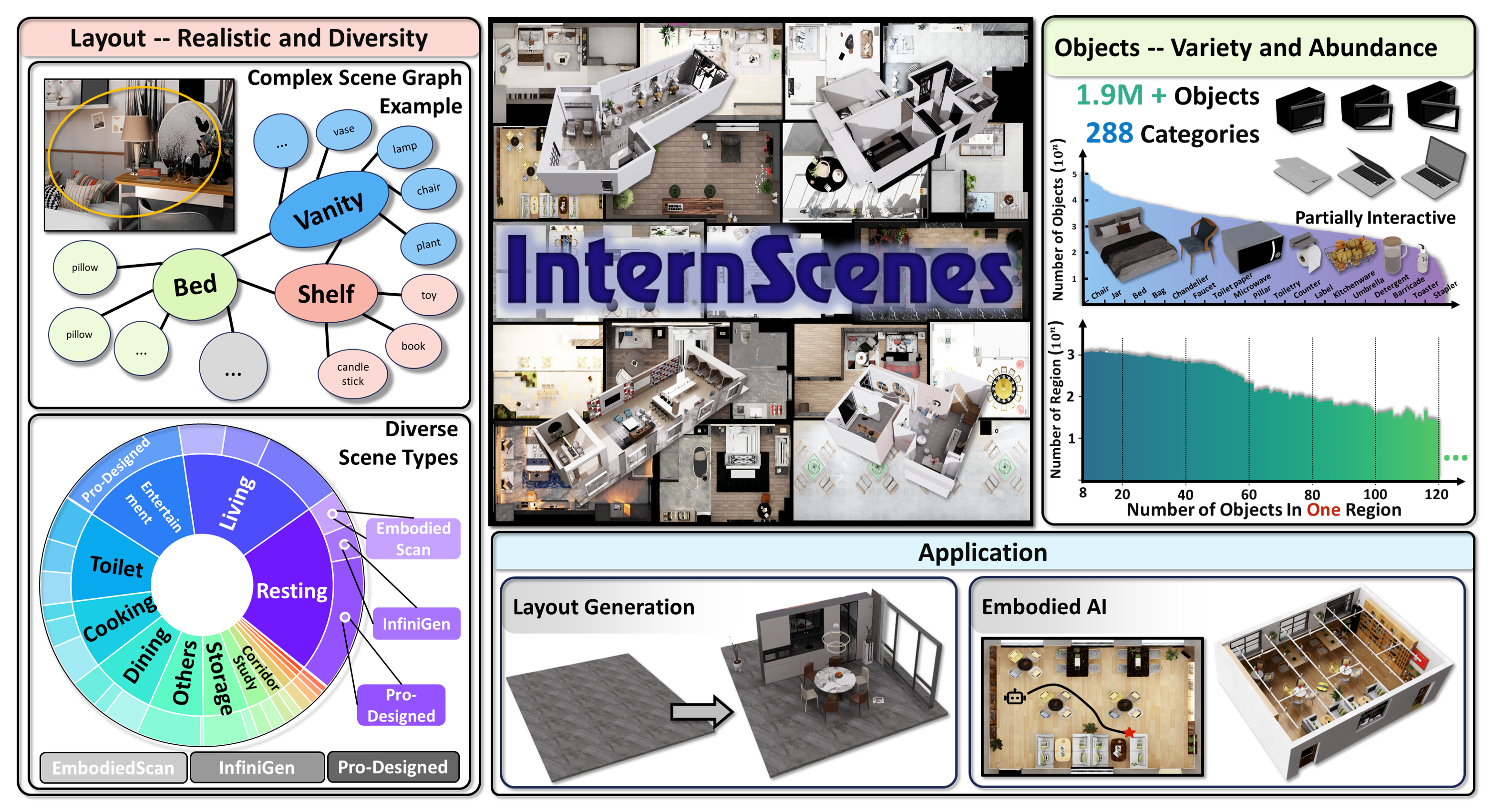

Data Processing Pipeline

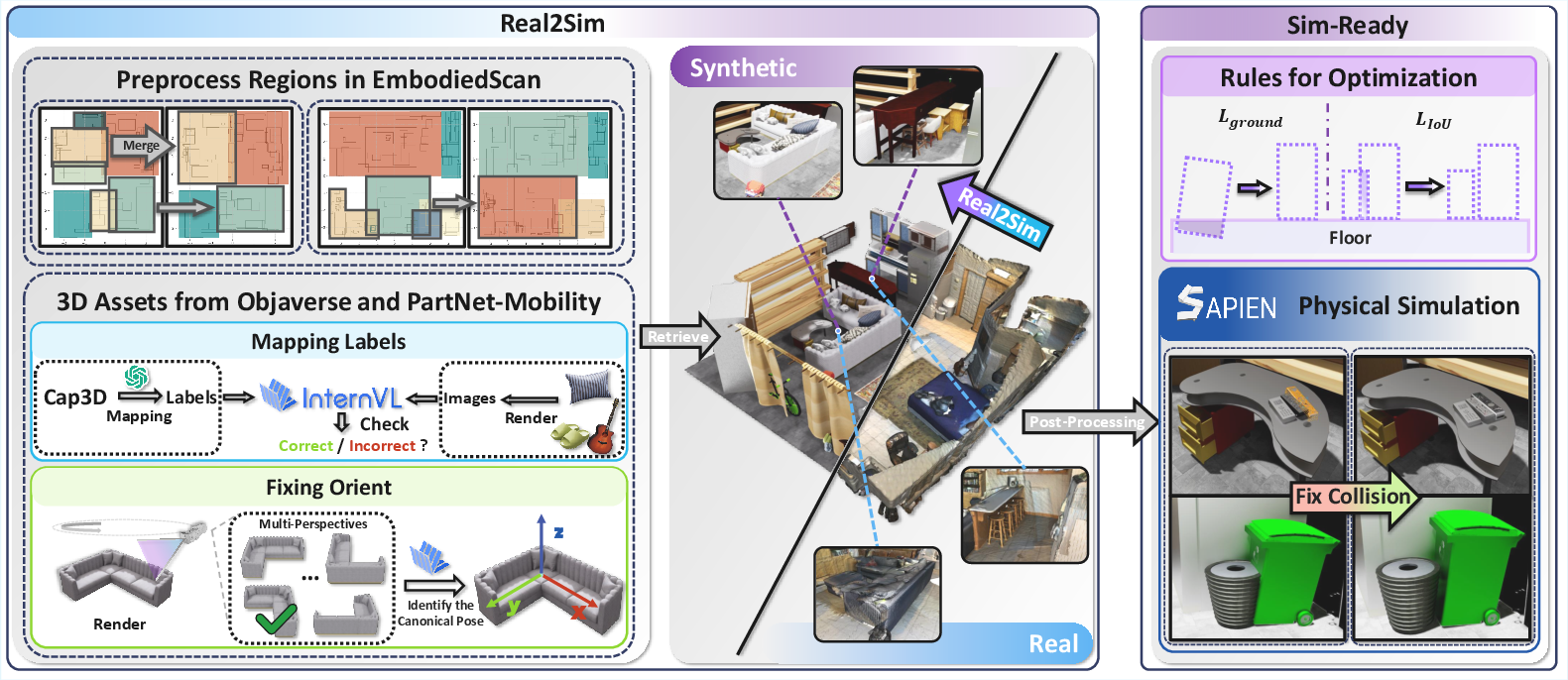

For real-world scans, the pipeline retrieves suitable 3D assets from Objaverse and PartNet-Mobility, using GPT-4o and InternVL for semantic label mapping and verification. Canonical pose correction is performed for orientation-constrained objects, and ambiguous categories are resolved via context-driven label replacement. Bounding box similarity is used for asset selection, and spatial transformations ensure accurate placement.
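To make the asset-selection step concrete, here is a minimal sketch of choosing a replacement asset by bounding-box similarity. The summary does not say which similarity measure the pipeline uses, so the scale-normalized extent ratio below is an assumption, and `bbox_similarity`, `select_asset`, and the candidate names are hypothetical.

```python
import numpy as np

def bbox_similarity(target_size, asset_size):
    """Similarity in (0, 1] between two box extents (x, y, z), ignoring absolute scale.

    Assumed measure for illustration only; extents must be strictly positive.
    """
    t = np.asarray(target_size, dtype=float)
    a = np.asarray(asset_size, dtype=float)
    # Normalize each box by its longest edge so only proportions are compared.
    t = t / t.max()
    a = a / a.max()
    # Mean per-axis ratio of the smaller to the larger normalized extent.
    return float(np.mean(np.minimum(t, a) / np.maximum(t, a)))

def select_asset(target_size, candidates):
    """Return the name of the candidate whose proportions best match the target box."""
    return max(candidates, key=lambda name: bbox_similarity(target_size, candidates[name]))

# Hypothetical candidate assets, already filtered to the right semantic category.
candidates = {
    "chair_a": (0.5, 0.5, 0.9),
    "stool_b": (0.4, 0.4, 0.45),
    "bench_c": (1.5, 0.4, 0.45),
}
best = select_asset((0.55, 0.5, 1.0), candidates)
print(best)
```

Because only proportions are compared, the selected asset would still need to be rescaled and posed to match the scanned box, which corresponds to the spatial-transformation step described above.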

Figure 2: Pipeline for retrieving synthetic scenes from real scan scenes.

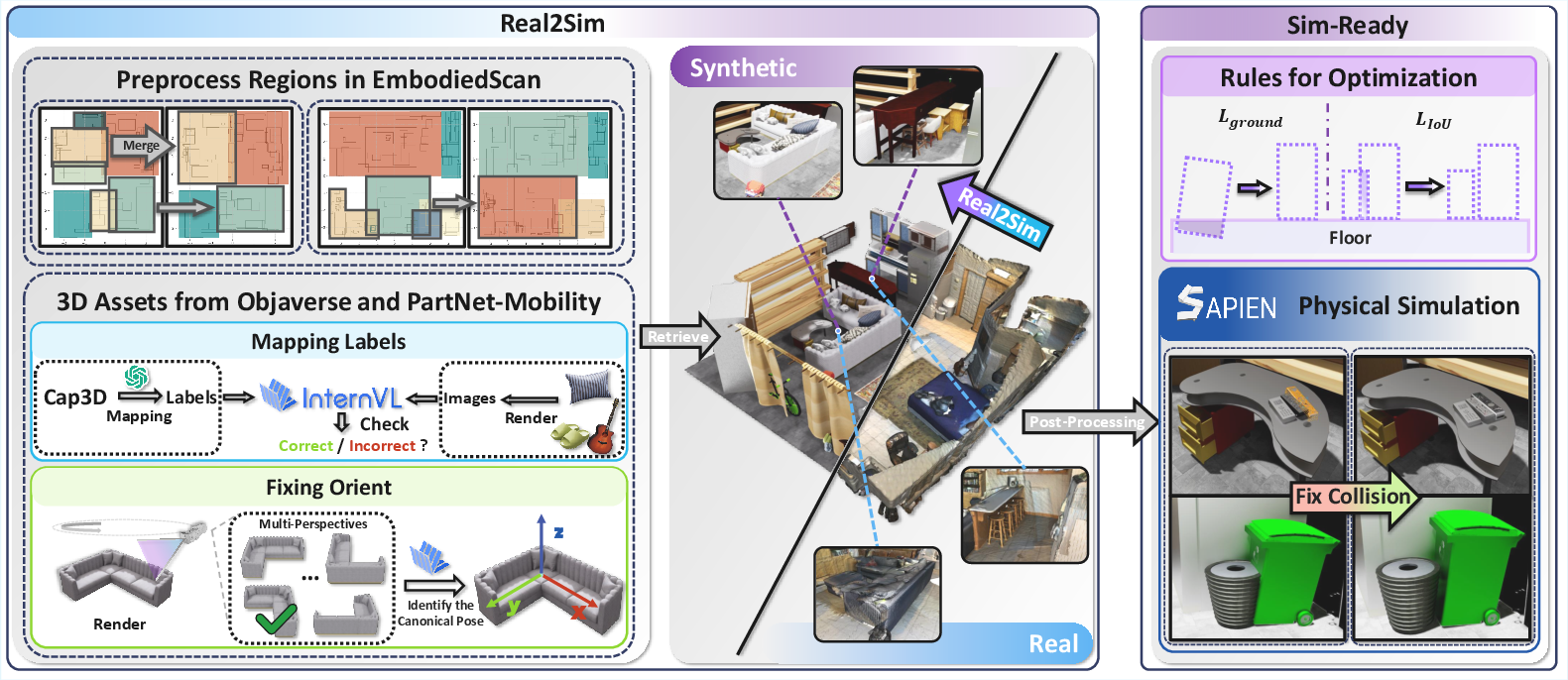

Procedural and Designer Scene Processing

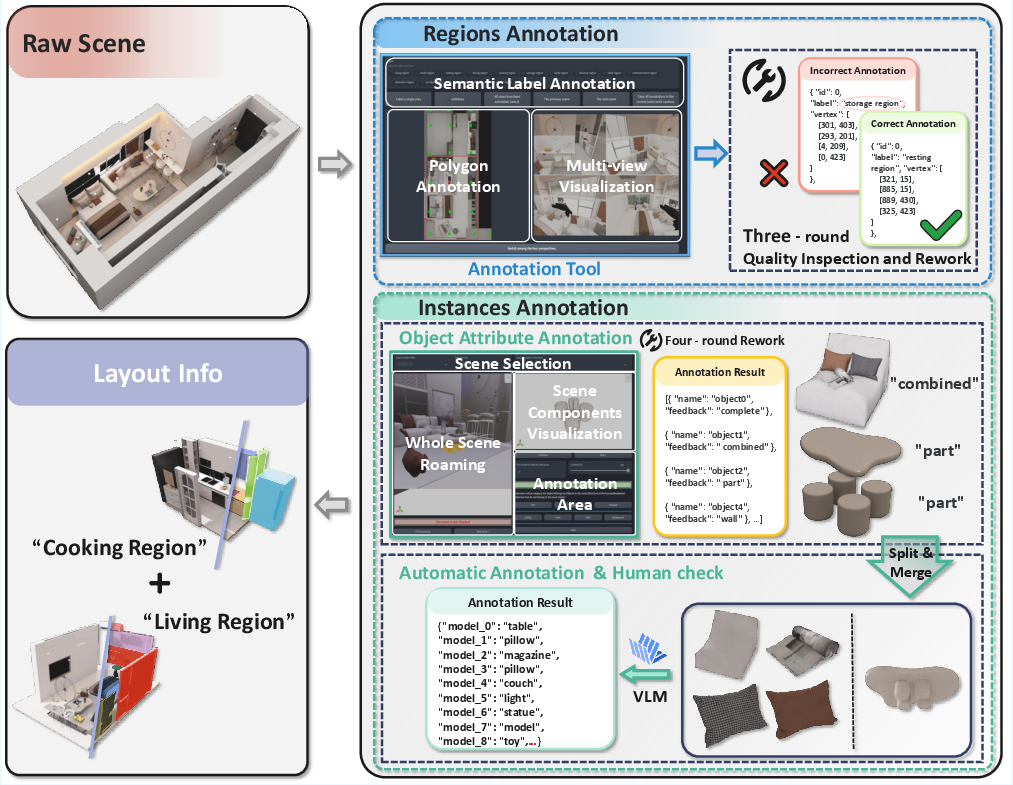

Procedural scenes are generated using Infinigen Indoors, which employs constraint-based arrangement systems for photorealistic, semantically plausible layouts. Designer-created scenes undergo manual region and instance annotation, supported by custom tools for BEV map visualization and multi-view rendering. Disordered object hierarchies and annotation gaps in the raw scenes are resolved by splitting or merging instances and refining region definitions.

Figure 3: Pipeline for annotating and processing raw scenes to extract precise layout information.

Physics-Aware Scene Composition

Bounding box optimization is performed for large furniture using a composite loss function:

$$
L = \lambda_{\text{IoU}} L_{\text{IoU}} + \lambda_{\text{ground}} L_{\text{ground}} + \lambda_{\text{reg}} L_{\text{reg}}
$$

where $L_{\text{IoU}}$ penalizes overlaps, $L_{\text{ground}}$ enforces ground alignment, and $L_{\text{reg}}$ restricts deviation from original positions. Small objects are refined via physics simulation in SAPIEN, with convex decomposition (CoACD) for accurate collision geometry, including cavity segmentation for realistic containment.
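The three terms of the composite loss above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: boxes are taken to be axis-aligned, the floor is at z = 0, the ground and regularization terms are squared distances, and the weights `w_iou`, `w_ground`, and `w_reg` are placeholder values.

```python
import numpy as np

def pairwise_iou(centers, sizes):
    """IoU for every pair of axis-aligned 3D boxes given as centers and full extents."""
    lo = centers - sizes / 2.0
    hi = centers + sizes / 2.0
    # Overlap length per axis for each pair (i, j), clipped at zero.
    overlap = np.clip(
        np.minimum(hi[:, None, :], hi[None, :, :]) - np.maximum(lo[:, None, :], lo[None, :, :]),
        0.0,
        None,
    )
    inter = overlap.prod(axis=-1)
    vol = sizes.prod(axis=-1)
    union = vol[:, None] + vol[None, :] - inter
    return inter / union

def composite_loss(centers, sizes, original_centers, w_iou=1.0, w_ground=1.0, w_reg=0.1):
    """Weighted sum of overlap, ground-alignment, and displacement penalties (assumed forms)."""
    # Overlap term: sum IoU over unordered pairs i < j.
    l_iou = np.triu(pairwise_iou(centers, sizes), k=1).sum()
    # Ground term: squared height of each box bottom above or below the floor at z = 0.
    bottom_z = centers[:, 2] - sizes[:, 2] / 2.0
    l_ground = np.sum(bottom_z ** 2)
    # Regularization term: squared displacement from the original layout.
    l_reg = np.sum((centers - original_centers) ** 2)
    return w_iou * l_iou + w_ground * l_ground + w_reg * l_reg

# Two unit cubes resting on the floor and overlapping along x.
sizes = np.ones((2, 3))
original = np.array([[0.0, 0.0, 0.5], [0.5, 0.0, 0.5]])
loss_before = composite_loss(original, sizes, original)

# Sliding the second cube so the boxes just touch removes the overlap
# at the cost of a small displacement penalty.
moved = np.array([[0.0, 0.0, 0.5], [1.0, 0.0, 0.5]])
loss_after = composite_loss(moved, sizes, original)
print(loss_before, loss_after)
```

In this toy case the loss drops once the overlap is removed, which is the trade-off the regularization weight controls: a larger `w_reg` keeps furniture closer to its annotated position but tolerates more residual overlap.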

Dataset Statistics and Analysis

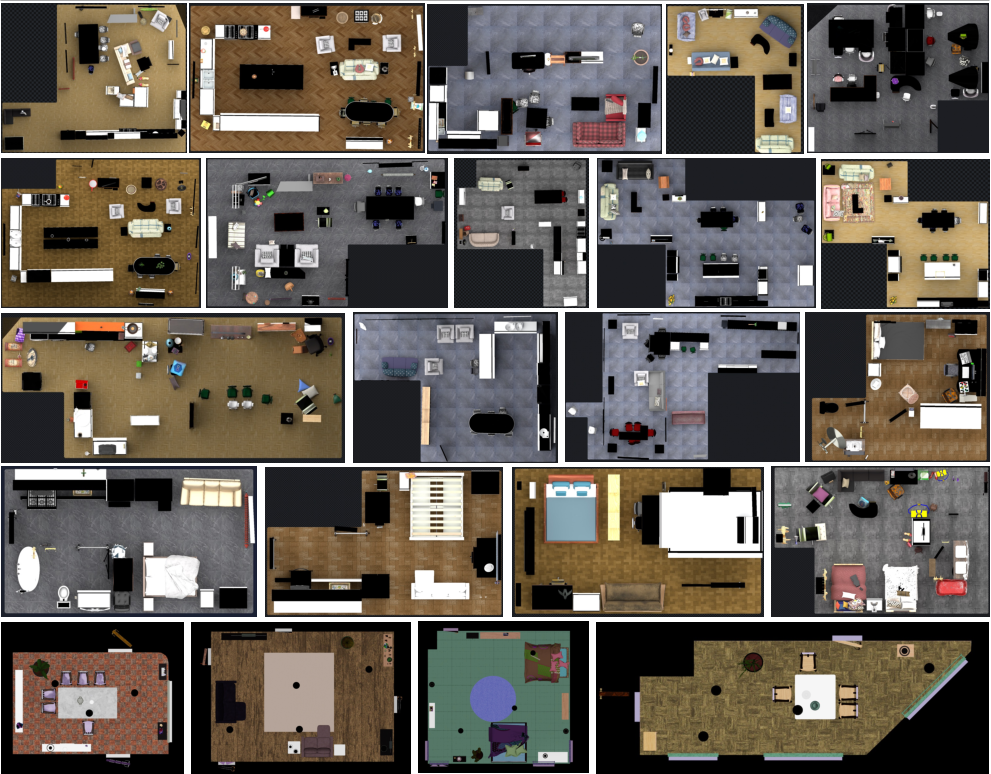

InternScenes comprises three subsets: Real2Sim (9,833 regions), Gen (11,454 regions), and Synthetic (27,094 regions). The asset library contains 80 million CAD models. The dataset provides region-level layout information and mesh assets for full 3D reconstruction.
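As a quick consistency check, the three subset sizes quoted here can be summed and compared with the 48,381 regions reported for the full dataset. The snippet below only uses numbers already stated in this summary.

```python
# Region counts per subset, as quoted in the text.
regions = {"Real2Sim": 9_833, "Gen": 11_454, "Synthetic": 27_094}

total = sum(regions.values())
# Share of each subset in percent, rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in regions.items()}

print(total)   # 48381, matching the overall region count
print(shares)
```

The designer-created Synthetic subset accounts for more than half of all regions, so aggregate statistics are weighted toward that source.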

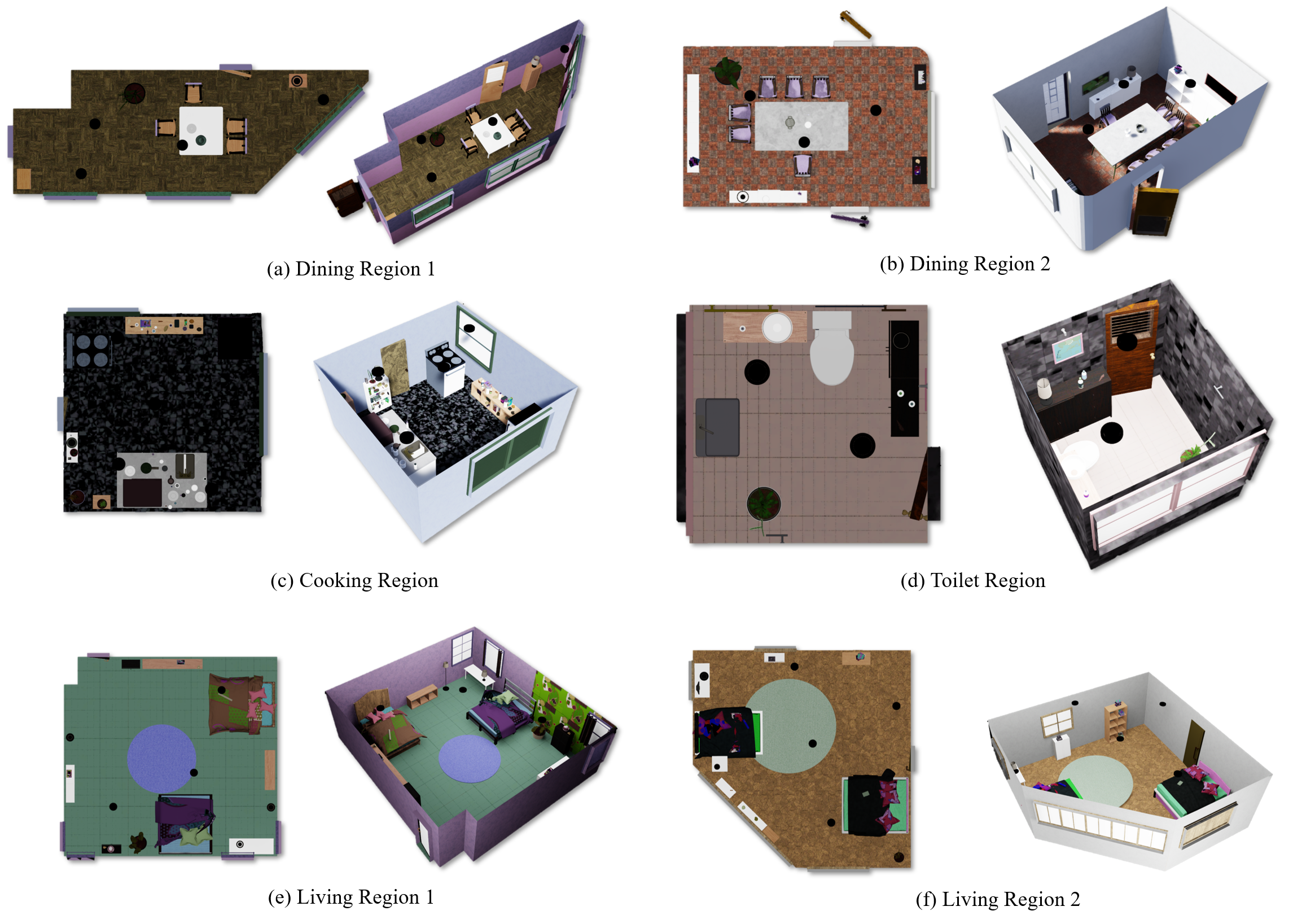

Figure 4: Examples from InternScenes-Real2Sim. Each scene shows its BEV map and isometric view.

Figure 5: Examples from InternScenes-Gen. The BEV map and one isometric view are shown.

Figure 6: Examples from InternScenes-Synthetic. The BEV map and one isometric view are shown.

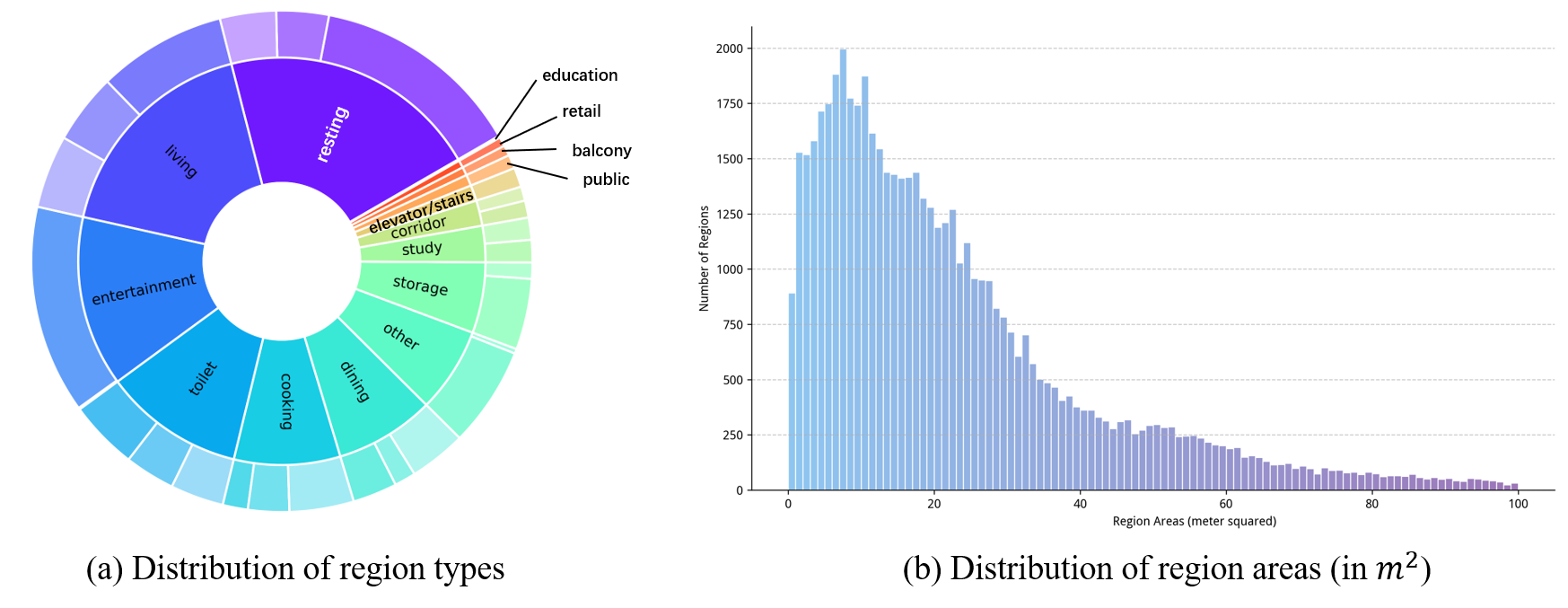

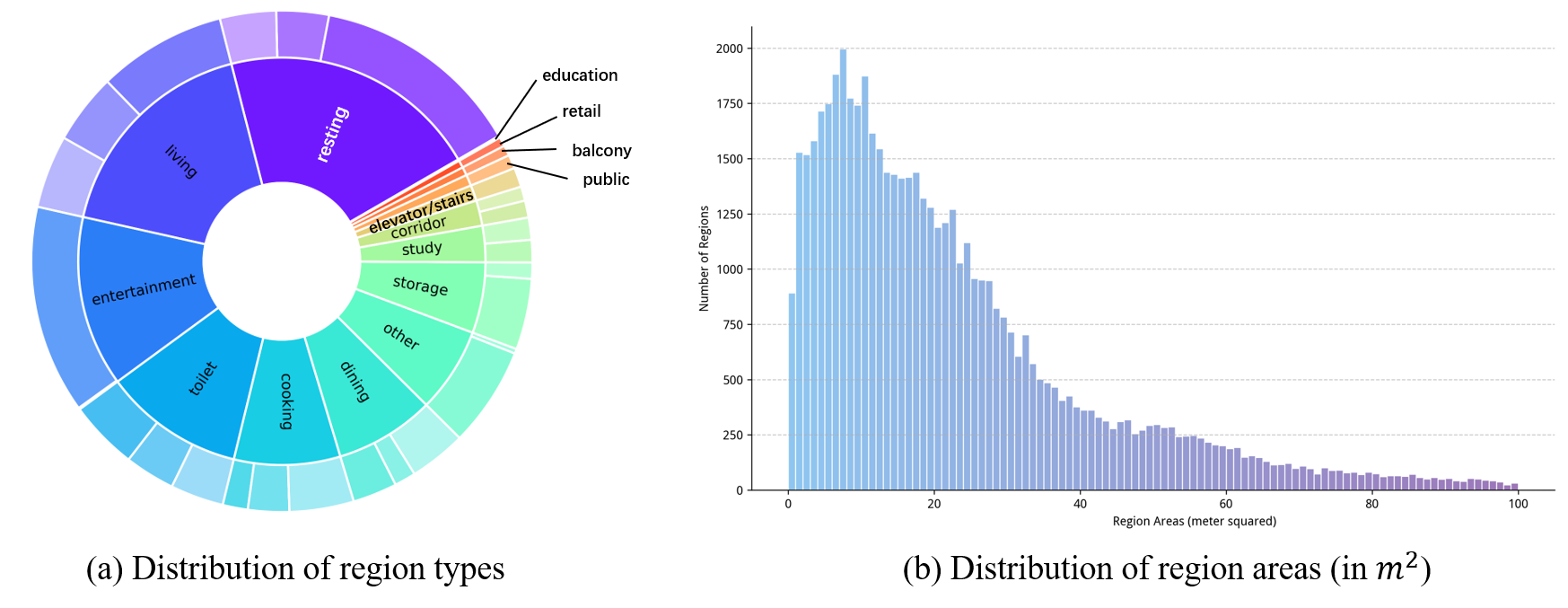

Region statistics show coverage of 15 region types with diverse area distributions.

Figure 7: Region statistics.

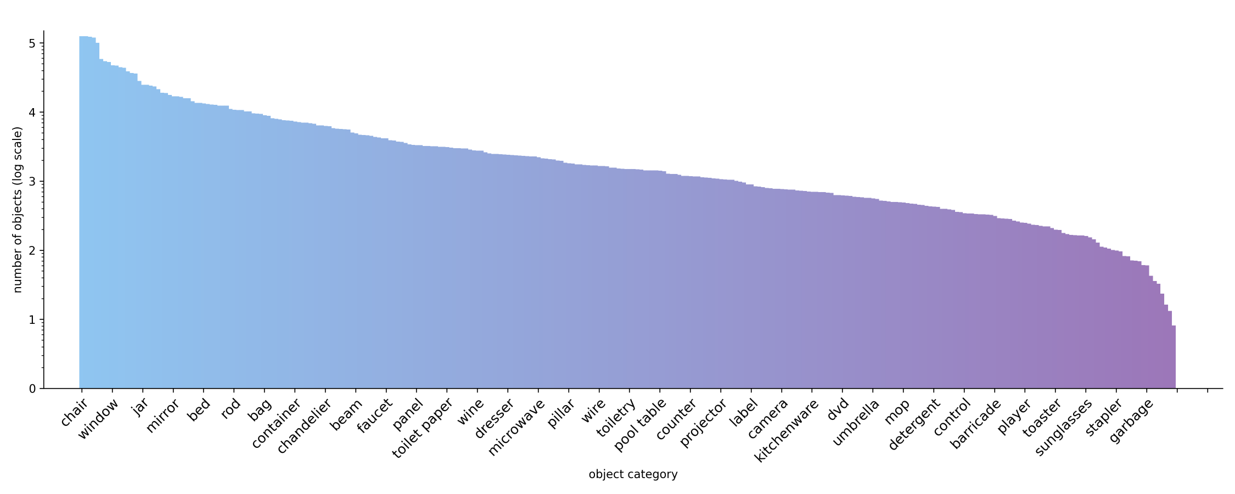

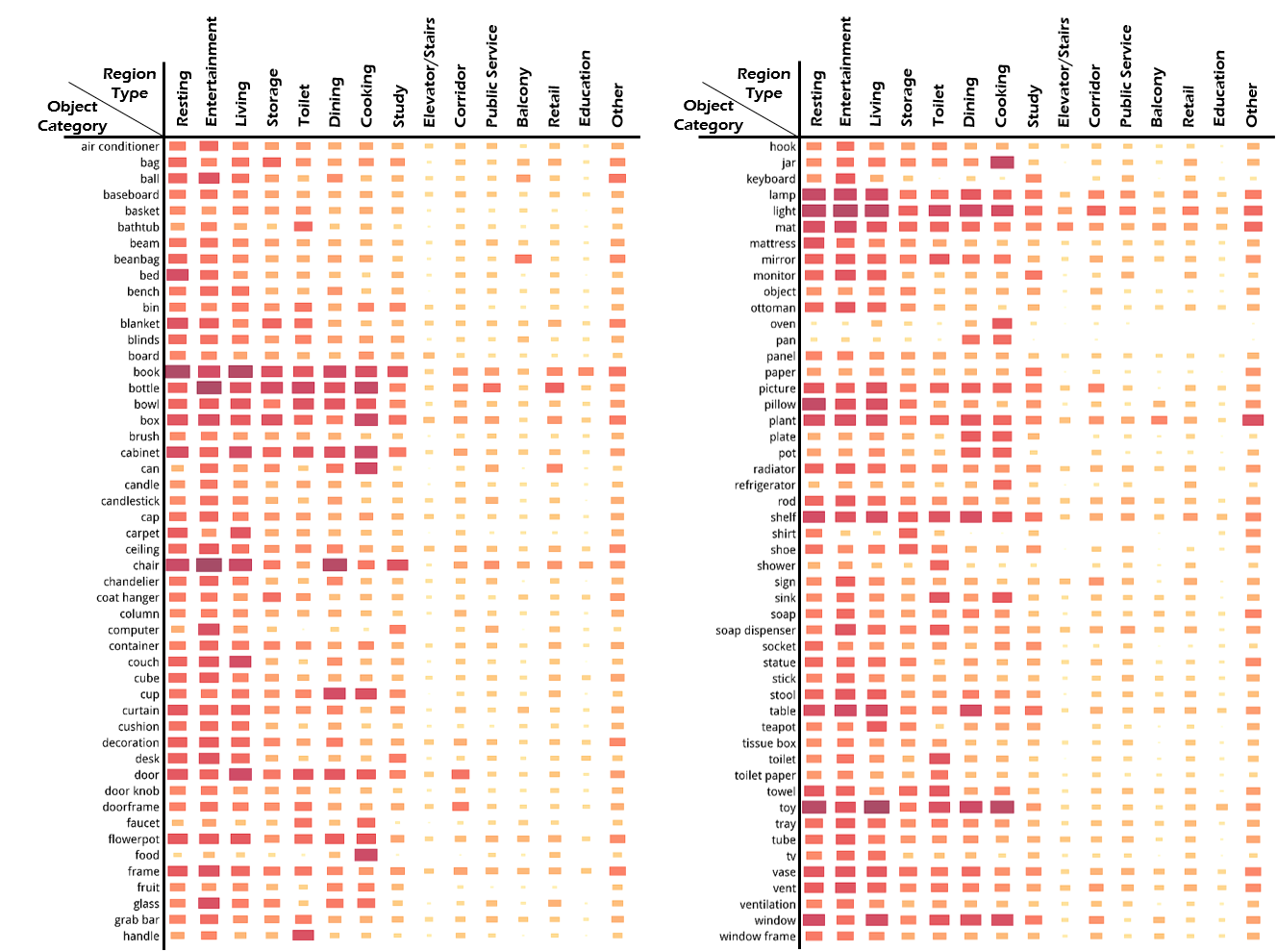

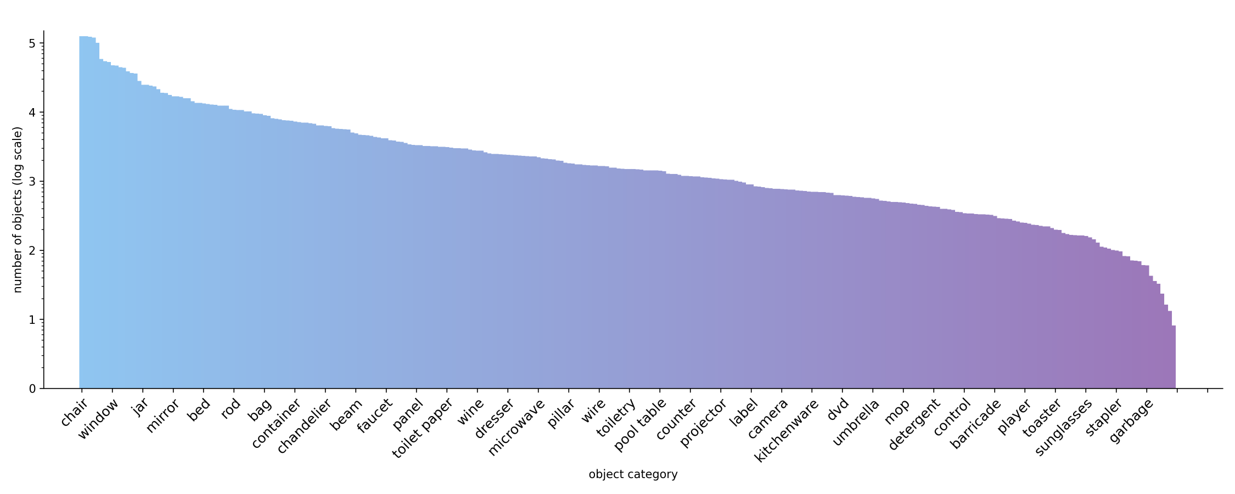

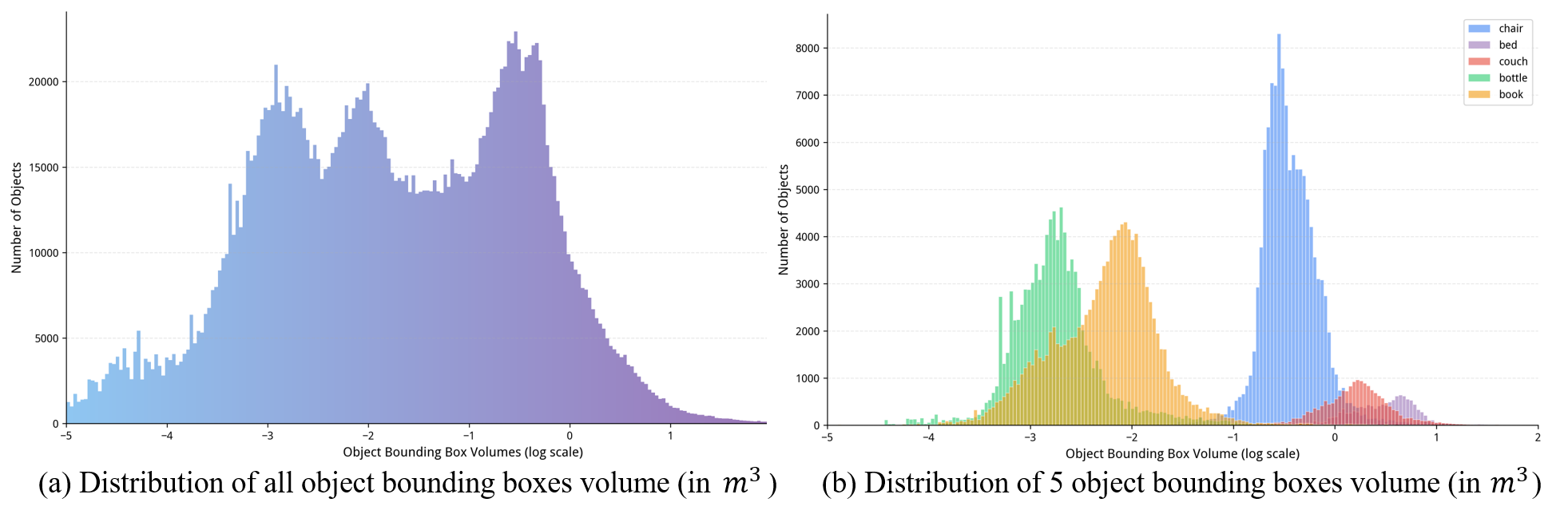

Object statistics reveal a broad distribution across 288 categories, with high frequency for chairs, toys, books, lights, and bottles.

Figure 8: Distribution of objects across 288 categories.

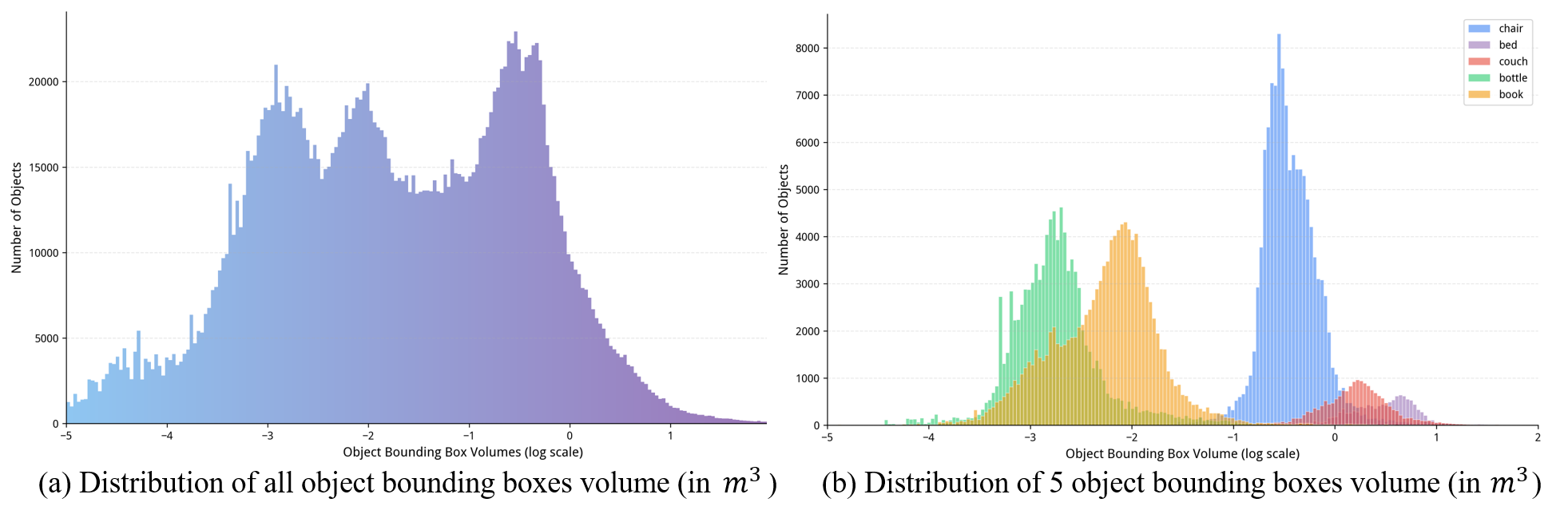

Bounding box volume statistics demonstrate coverage from large furniture to small items.

Figure 9: Object bounding-box volume statistics.

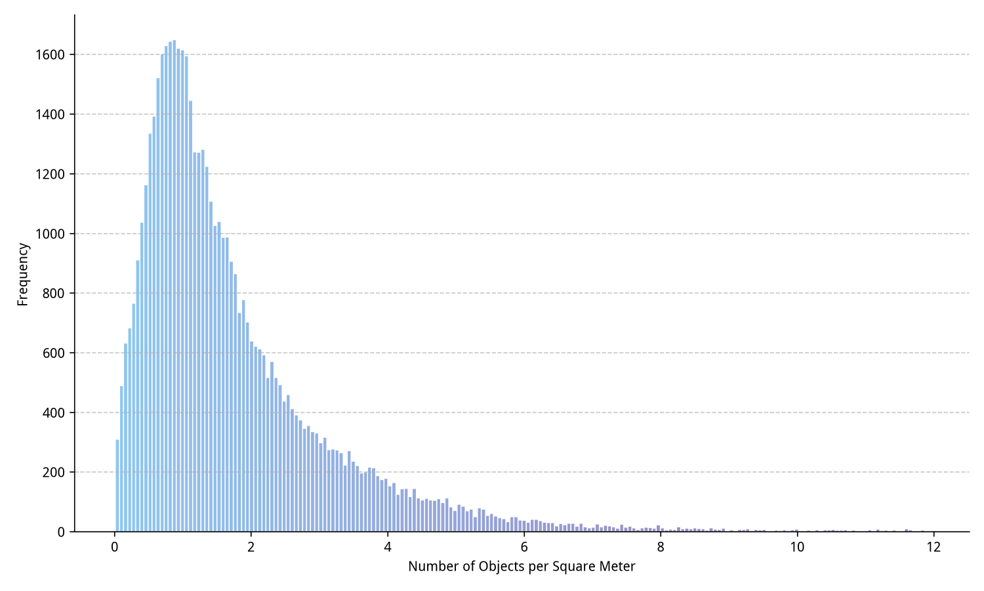

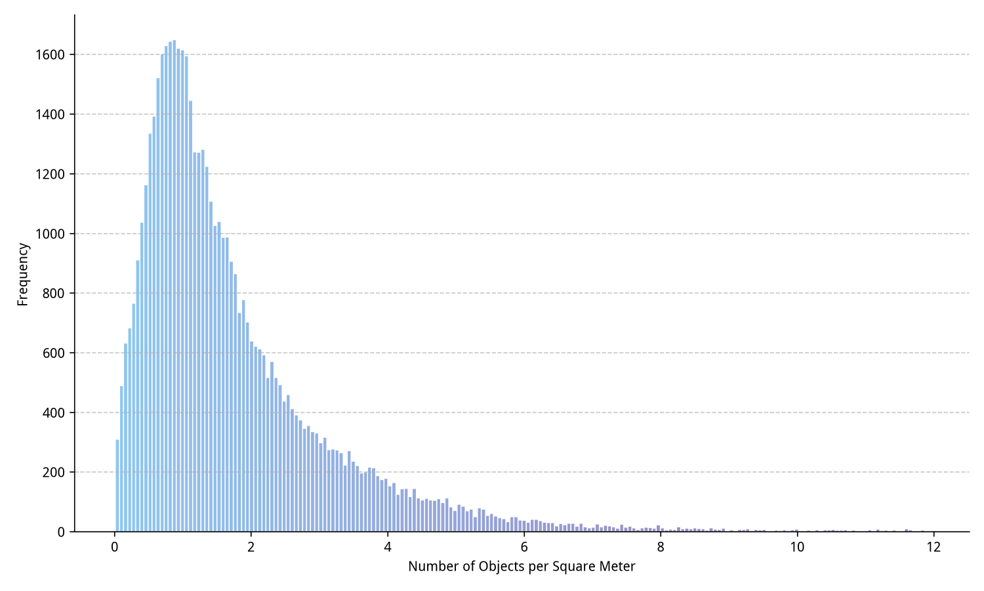

Object density averages 1.296 objects/m², with significant variation across regions.

Figure 10: Distribution of object density (number of objects per m²) across different regions.

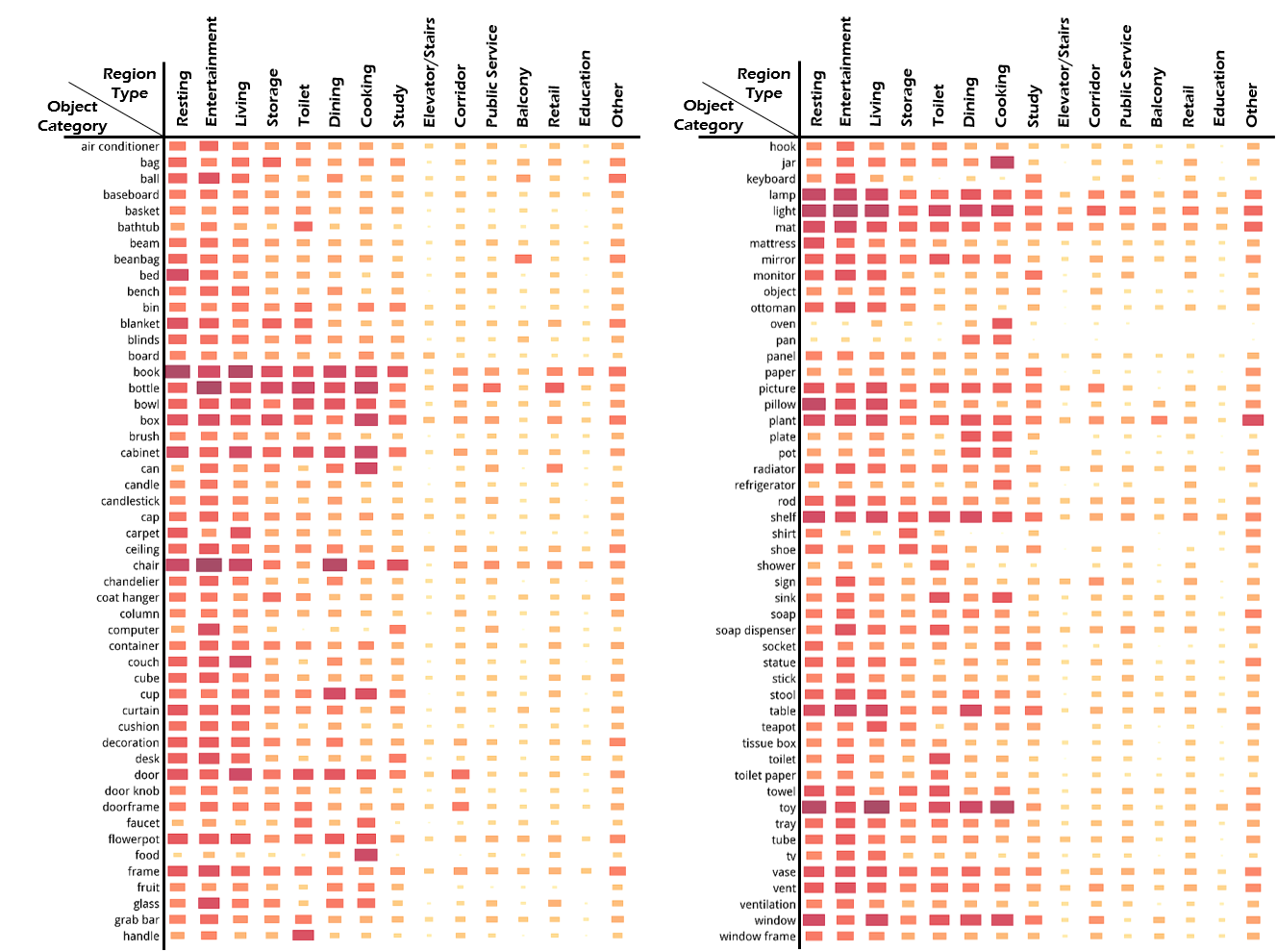

Joint region-object statistics show strong correlation between object types and region functions.

Figure 11: Distribution of 100 object categories conditioned on 15 different region types.

Containment/support relationships average 3.45 per object, increasing to 5.57 for supporting/containing objects, indicating high structural complexity.

Benchmark Applications

Scene Layout Generation

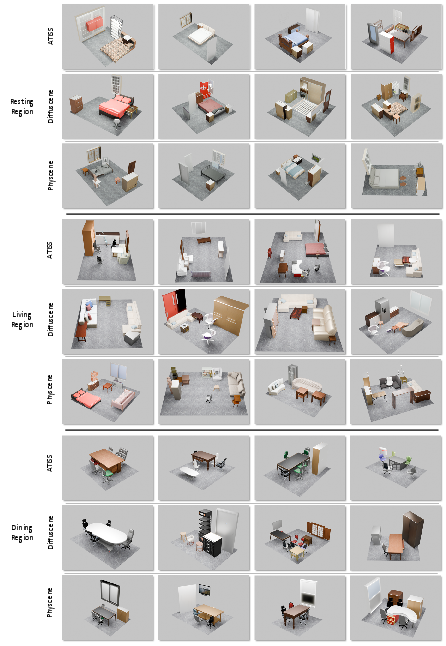

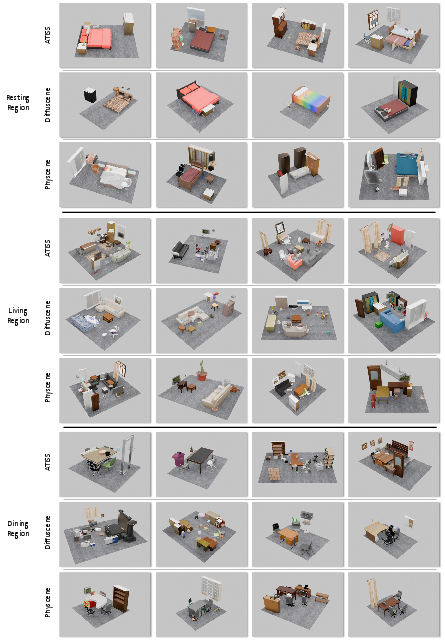

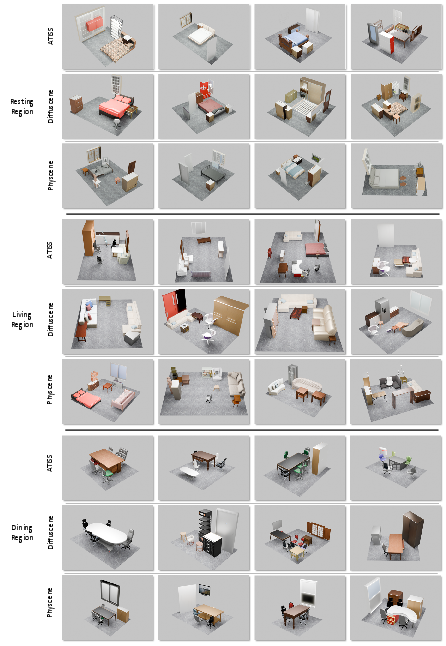

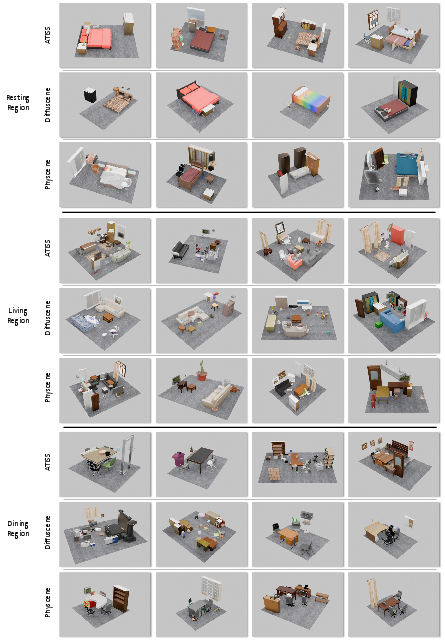

InternScenes enables rigorous benchmarking for interior scene generation. Experiments use both full and simplified versions (with/without small objects) across three region types (Resting, Living, Dining). Baselines include ATISS, DiffuScene, and PhyScene, evaluated on FID, KID, SCA, and CKL metrics.
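Of the metrics listed, CKL (category KL divergence) is the simplest to illustrate: it compares how often each object category appears in real versus generated scenes. The sketch below is an assumption-laden illustration rather than the benchmark's evaluation code; the direction of the divergence (real relative to generated), the smoothing constant `eps`, and the function name `category_kl` are all choices made here.

```python
from collections import Counter

import numpy as np

def category_kl(real_labels, generated_labels, eps=1e-6):
    """KL divergence between object-category frequencies of real and generated scenes."""
    categories = sorted(set(real_labels) | set(generated_labels))
    real_counts = Counter(real_labels)
    gen_counts = Counter(generated_labels)
    # Smooth with eps so categories missing from one side do not produce log(0).
    p = np.array([real_counts[c] for c in categories], dtype=float) + eps
    q = np.array([gen_counts[c] for c in categories], dtype=float) + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))

# Toy labels: the generated set drops small items such as books and bottles.
real = ["chair", "chair", "table", "book", "book", "bottle"]
generated = ["chair", "chair", "chair", "table", "table", "table"]

print(category_kl(real, real))       # identical distributions give 0
print(category_kl(real, generated))  # missing small items give a large value
```

A model that omits small objects entirely is penalized heavily under this measure, which is one way the full version of the dataset can be harder than the simplified one.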

Results show that all baselines perform well on simplified scenes but exhibit significant performance degradation on the full dataset, especially in handling small objects and complex layouts. PhyScene demonstrates relative robustness due to physics-based guidance, outperforming others in physically plausible scene synthesis.

Key finding: Current state-of-the-art generative models are insufficient for realistic, cluttered scene generation, highlighting the need for new paradigms capable of modeling high-density, multi-object distributions.

Figure 12: Examples of regions generated by baseline models trained on a simplified version of the InternScenes dataset.

Figure 13: Examples of regions generated by baseline models trained on the full version of the InternScenes dataset.

Point-Goal Navigation

InternScenes supports physically and visually realistic navigation benchmarks using IsaacSim and the ClearPath Dingo robot. Baselines include DD-PPO (RL-based), NavDP (diffusion-based imitation learning), and NavDP-FT (fine-tuned on InternScenes). Metrics are Success Rate and SPL.
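For reference, SPL (Success weighted by Path Length) is commonly defined as the mean over episodes of S_i * l_i / max(p_i, l_i), where S_i is the binary success flag, l_i the shortest-path length to the goal, and p_i the length of the path the agent actually took. The sketch below follows that standard definition; the benchmark's own evaluation code may differ in details, and the episode values are made up.

```python
def success_rate(successes):
    """Fraction of episodes that reached the goal."""
    return sum(successes) / len(successes)

def spl(successes, shortest_lengths, path_lengths):
    """Success weighted by Path Length, averaged over episodes.

    Assumes every shortest-path length is strictly positive.
    """
    total = 0.0
    for s, l, p in zip(successes, shortest_lengths, path_lengths):
        # A successful episode scores 1 only if the agent's path is as short as the optimum.
        total += s * l / max(p, l)
    return total / len(successes)

# Three hypothetical episodes: a detour, a failure, and an optimal run.
successes = [1, 0, 1]
shortest = [5.0, 4.0, 10.0]
taken = [10.0, 6.0, 10.0]

print(success_rate(successes))
print(spl(successes, shortest, taken))  # (0.5 + 0 + 1.0) / 3 = 0.5
```

SPL is never higher than the success rate, so a gap between the two indicates that successful episodes involve detours, for example from collision recovery in cluttered regions.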

DD-PPO generalizes poorly to continuous, cluttered environments (success rate: 23.6% on Real2Sim, 45.0% on Gen). NavDP achieves moderate success (48.3% on Real2Sim, 61.9% on Gen), and fine-tuning on InternScenes improves it slightly (51.0% on Real2Sim, 63.6% on Gen). The diversity and complexity of InternScenes benefit policy generalization, but scaling model capacity remains an open challenge.

Figure 14: Scenes for the navigation evaluation.

Key challenges identified:

- Cluttered layouts require advanced path planning and collision recovery.

- Narrow pathways demand embodiment-aware navigation.

- Tiny obstacles (e.g., chair legs) challenge spatial perception and safe planning.

Implications and Future Directions

InternScenes establishes a new standard for large-scale, realistic, and simulatable indoor scene datasets. Its integration of multi-source data, preservation of small items, and physics-aware optimization enable robust benchmarking for both generative scene synthesis and embodied navigation. The dataset exposes significant limitations in current models, particularly in handling high-density, complex layouts and sim-to-real transfer.

Practical implications:

- Facilitates development and evaluation of next-generation AIGC and embodied AI algorithms.

- Supports research in sim-to-real transfer, multi-object reasoning, and interactive scene understanding.

- Provides open-source assets, pipelines, and benchmarks for reproducibility and community advancement.

Theoretical implications:

- Highlights the need for compositional, context-aware generative models.

- Suggests new directions in physics-guided learning and multi-modal scene representation.

- Motivates research into scalable annotation and asset generation to further increase diversity.

Conclusion

InternScenes is a comprehensive, large-scale indoor scene dataset that overcomes key limitations of prior resources by integrating real, procedural, and synthetic data, preserving small items, and ensuring physical plausibility. Benchmark results demonstrate that current generative and navigation models struggle with the complexity and realism of InternScenes, underscoring the need for new algorithmic approaches. The dataset, tools, and benchmarks are open-sourced to catalyze progress in embodied AI and AIGC. Future work will focus on automating annotation and expanding asset diversity to further enhance the dataset's utility.