- The paper introduces MIMo v2, a simulation platform that models continuous infant body growth and sensory development from birth to 24 months.

- The paper demonstrates that incorporating sensorimotor delays and dynamic actuation strength yields more realistic infant motor capabilities and perceptual learning.

- The paper validates the platform against empirical growth and vision standards, establishing its practical applications in developmental robotics and cognitive research.

MIMo v2: A Simulation Platform for Modeling Infant Body and Sensory Development

Introduction

The paper presents MIMo v2, a significant extension of the Multimodal Infant Model (MIMo), designed to simulate the physical and sensory development of human infants from birth to 24 months. Unlike prior developmental robotics platforms, which typically fix the agent's embodiment to a single age, MIMo v2 models age-dependent changes in body size, mass, and actuation strength, together with developing vision and configurable sensorimotor delays. This enables the study of developmental trajectories, and of how changing constraints affect sensorimotor learning and cognitive development, in silico.

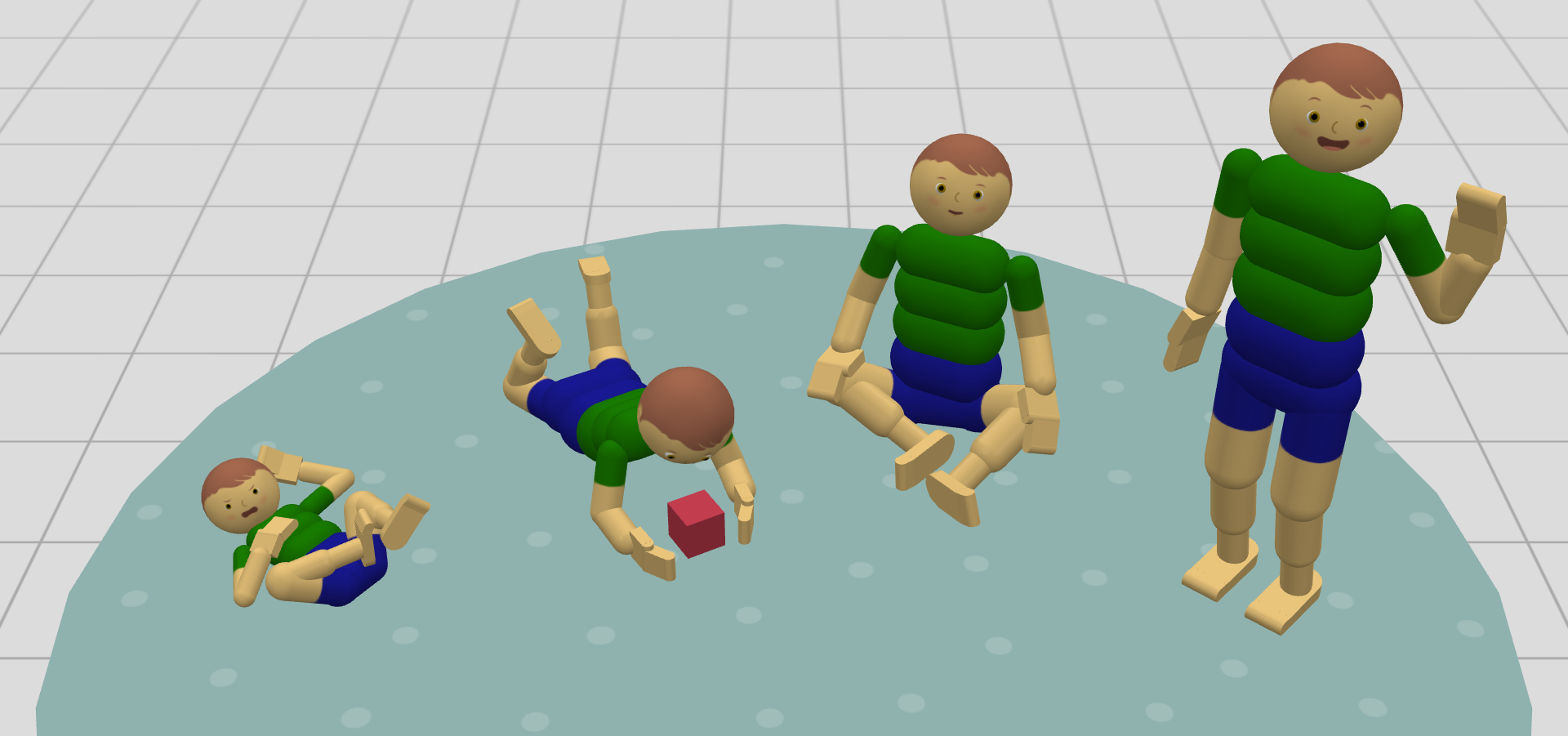

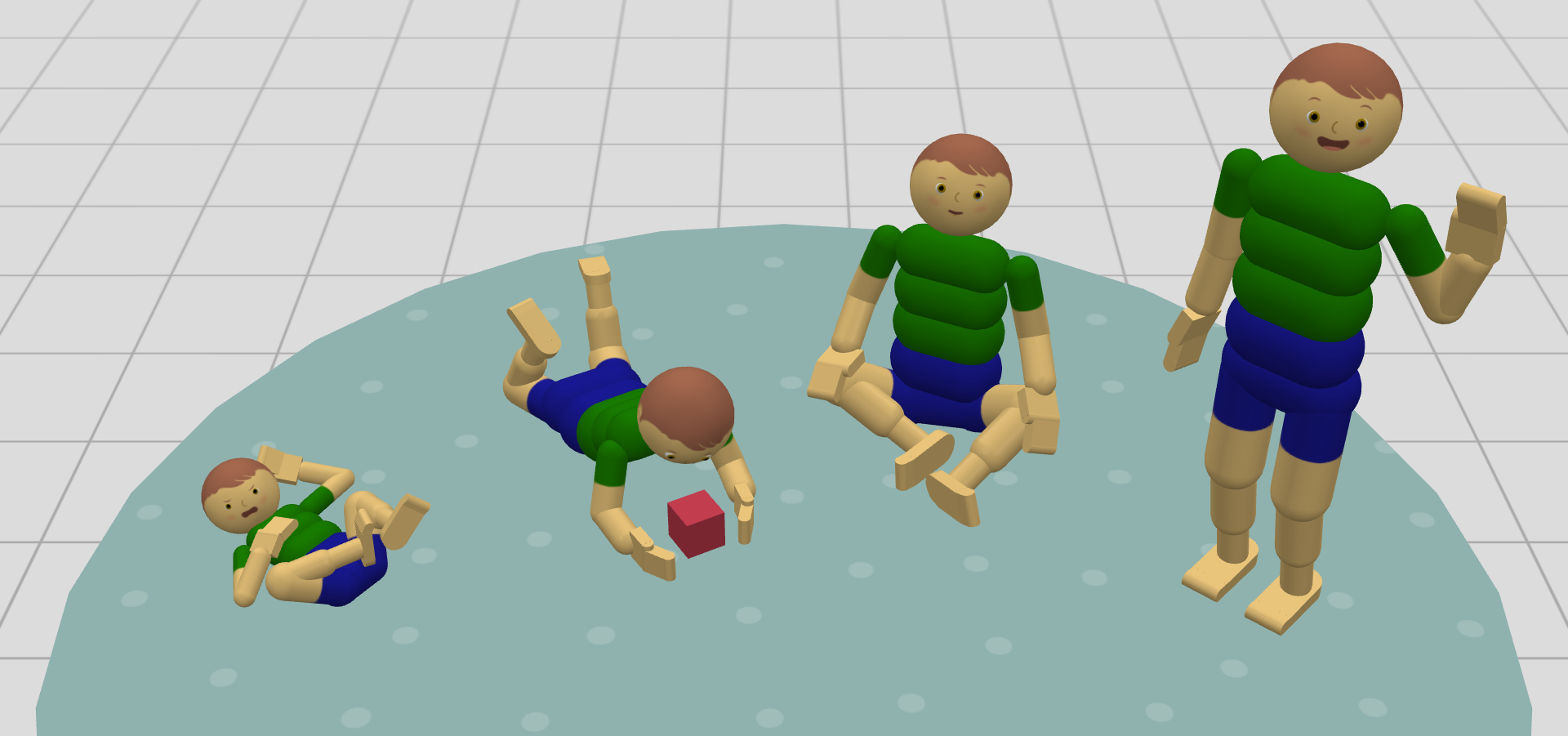

Figure 1: Illustration of MIMo at different ages. From left to right: 0, 6, 18, and 24 months.

Body Growth and Actuation

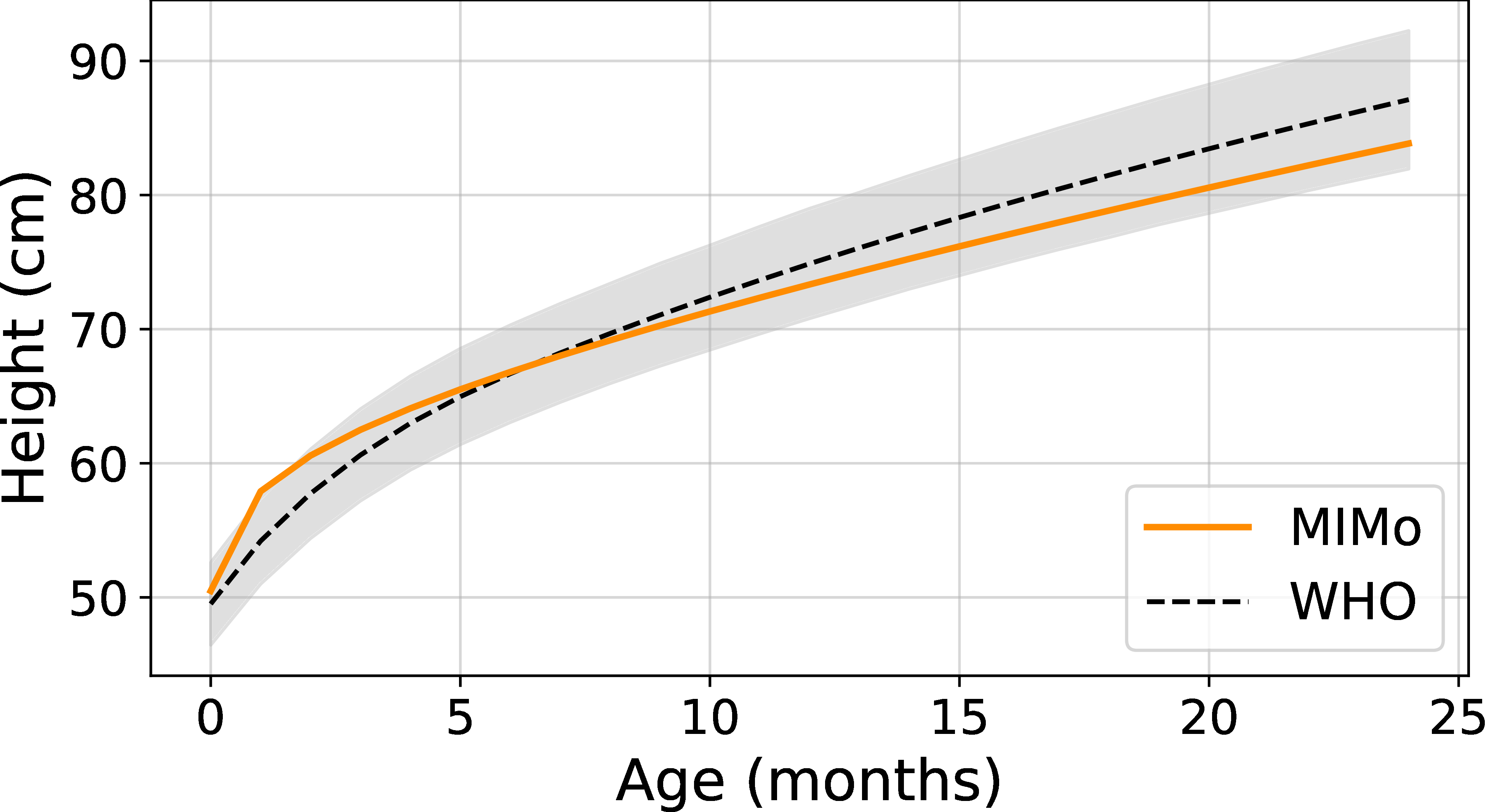

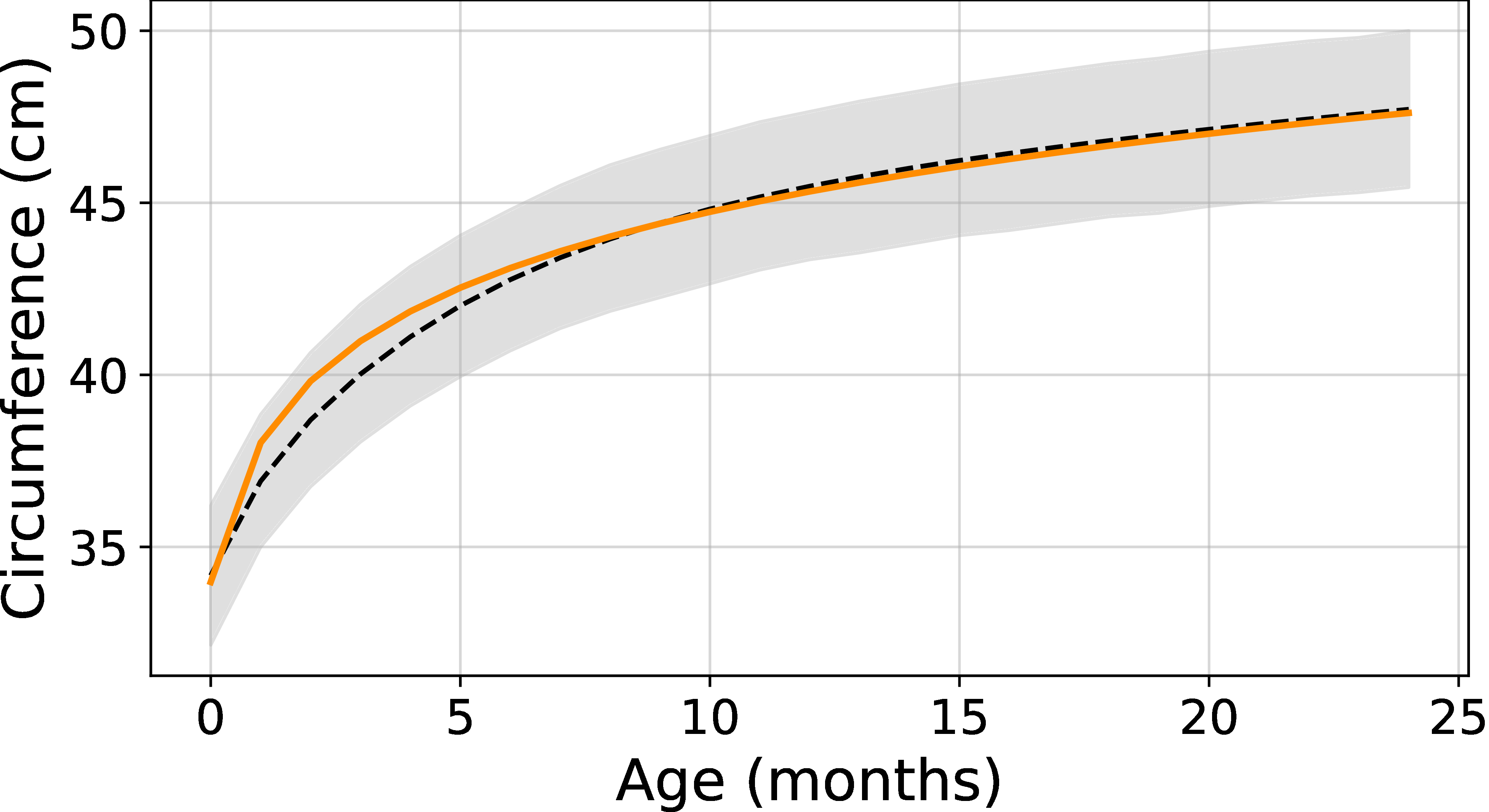

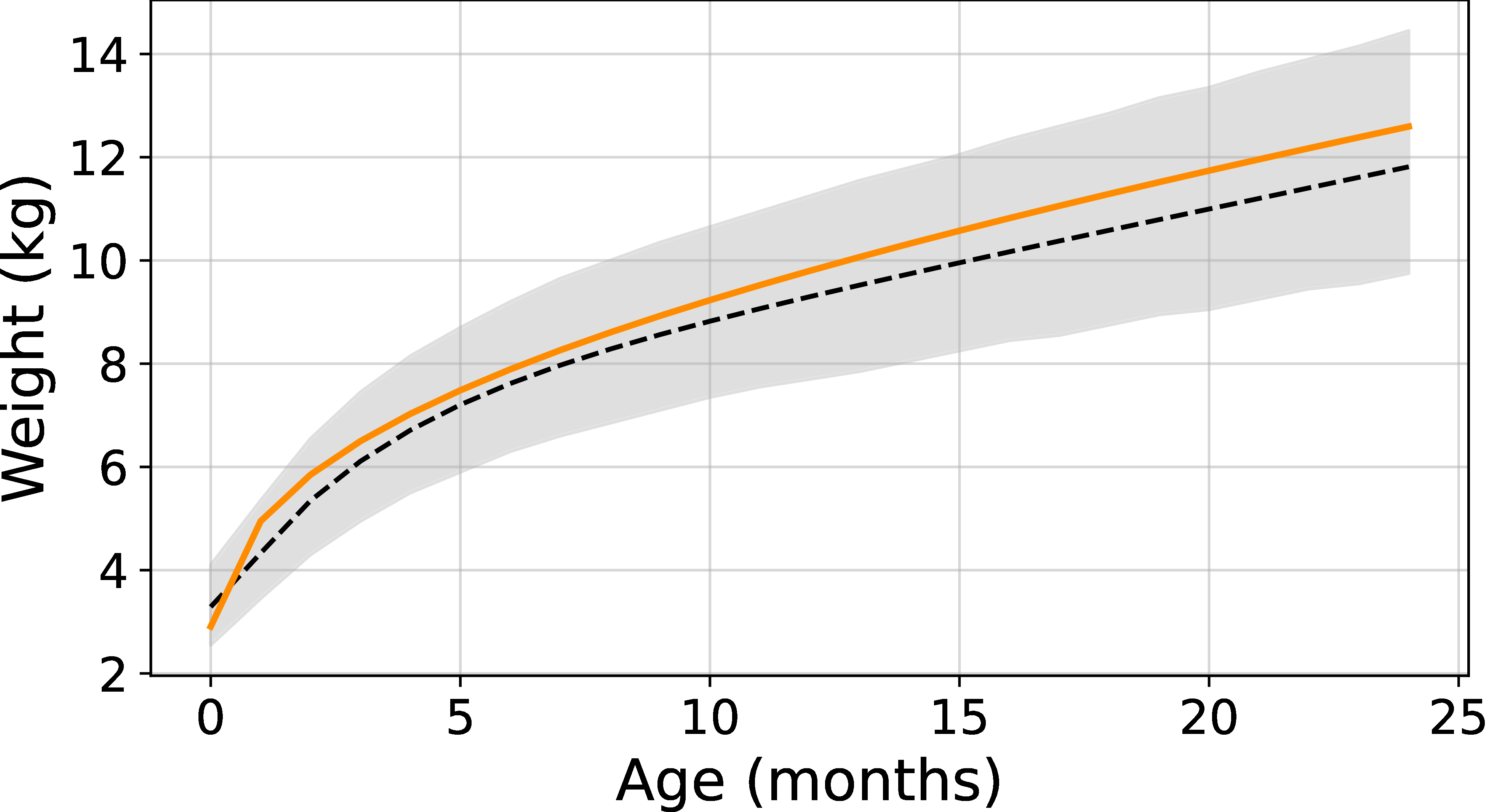

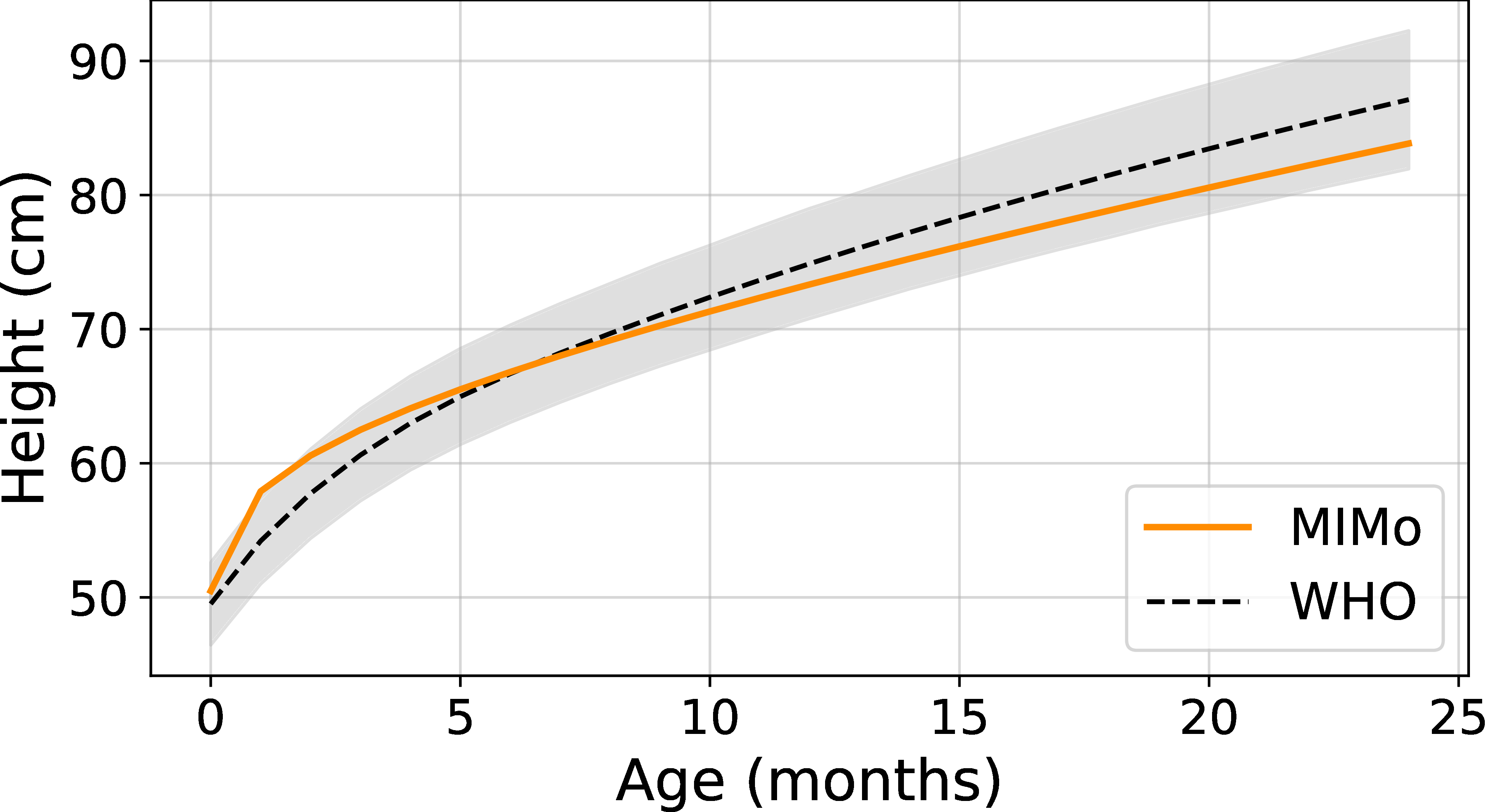

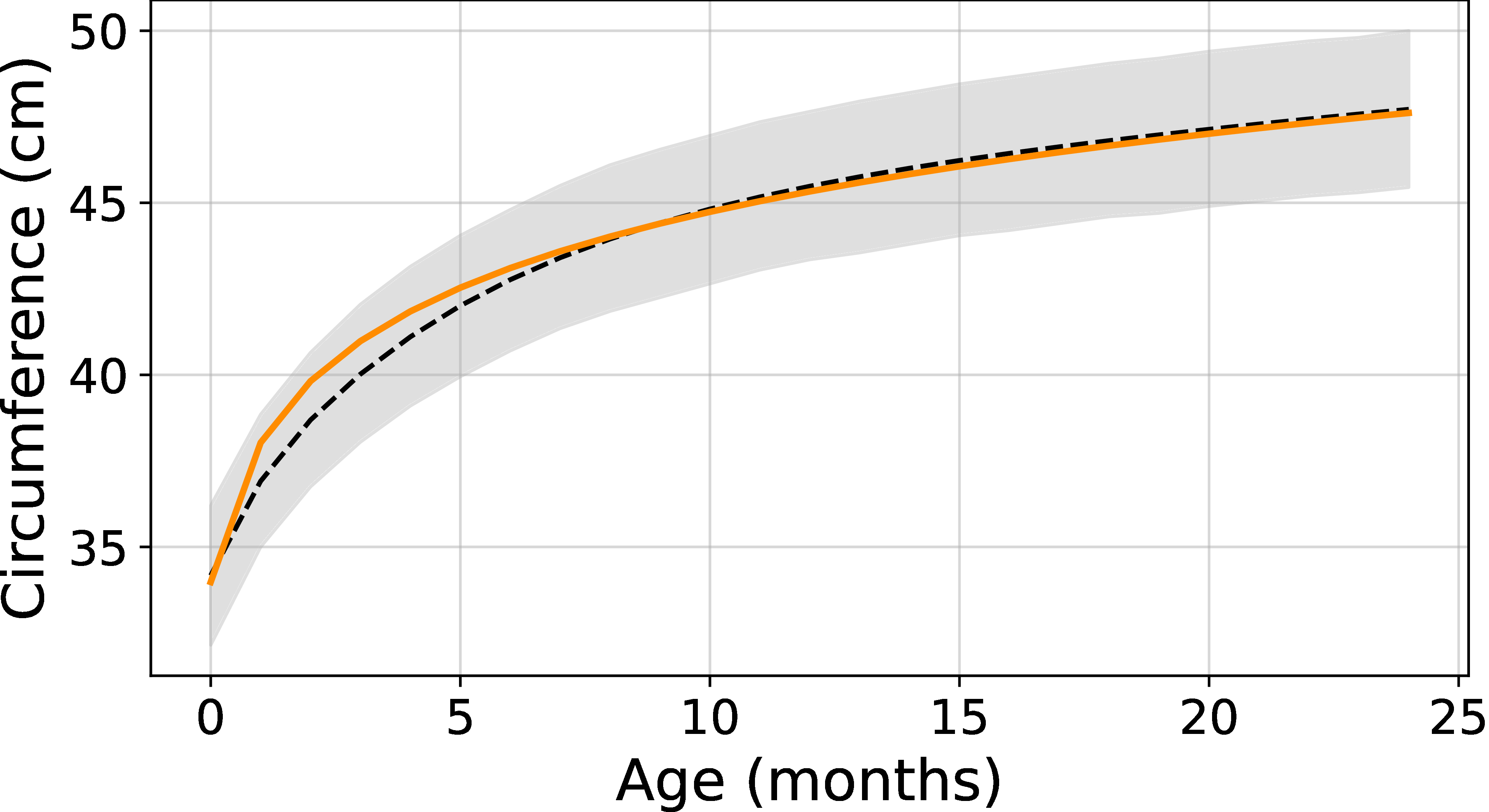

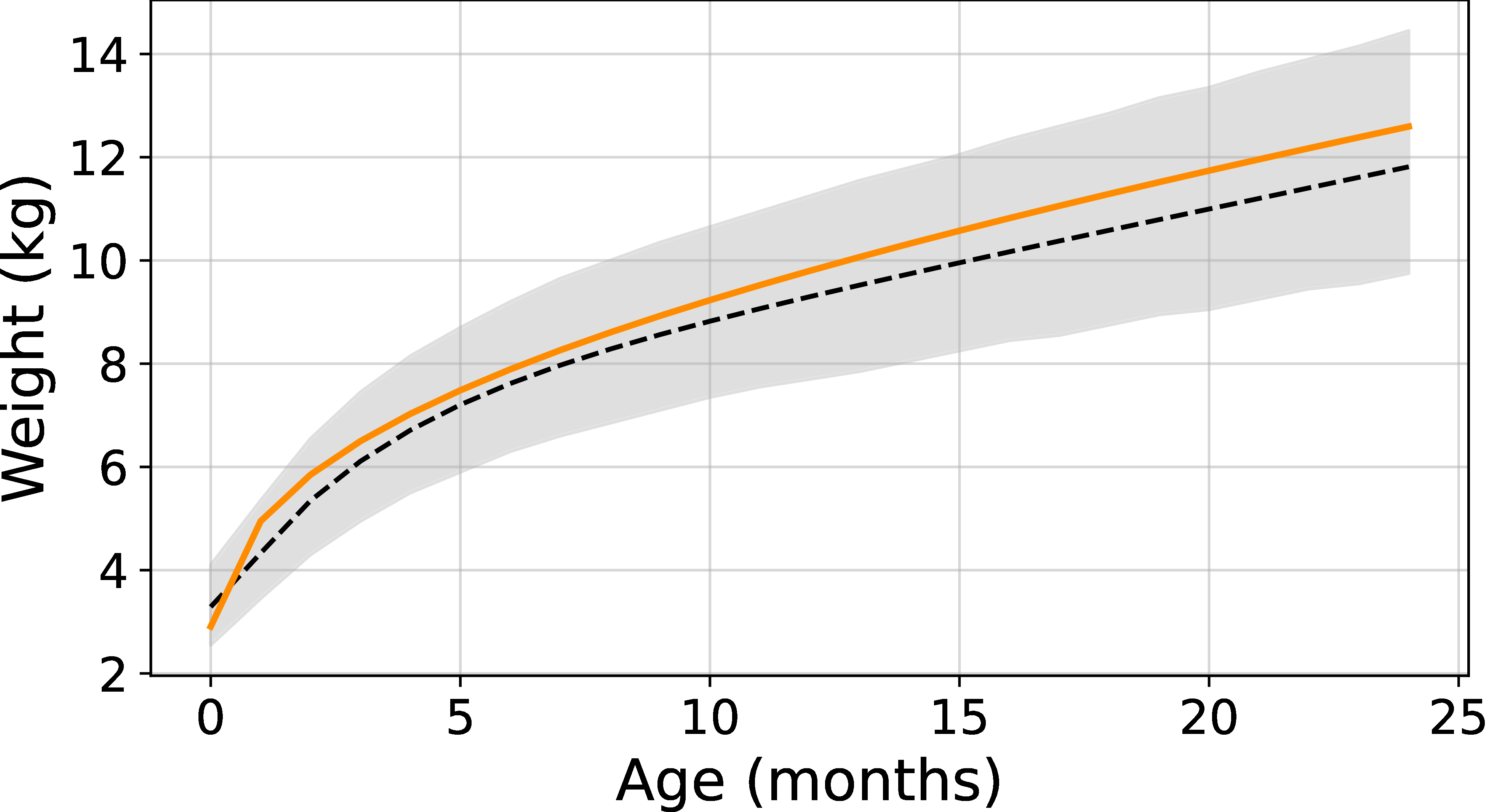

MIMo v2 models continuous physical growth by parameterizing body size, mass, and actuation strength as functions of age, using empirical data from the AnthroKids dataset and WHO growth standards. Each body part's geometry is scaled logarithmically with age, and mass is updated under the assumption of constant density, resulting in realistic age-dependent body proportions and weight.

Figure 2: MIMo's body height as a function of age, closely matching WHO growth curves.
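The growth parameterization described in this section can be illustrated with a minimal sketch. The functional form `a + k * ln(1 + age)`, the function names, and the example sizes are assumptions made for illustration; the summary only states that geometry scales logarithmically with age and that mass follows from constant density.

```python
import math


def log_growth(age_months, size_birth, size_24m, max_age=24.0):
    """Interpolate a body dimension logarithmically between birth and max_age.

    Assumed form: size(a) = size_birth + k * ln(1 + a), with k chosen so that
    size(max_age) == size_24m. This is an illustrative choice, not the
    platform's documented formula.
    """
    if not 0.0 <= age_months <= max_age:
        raise ValueError("age_months must lie in [0, max_age]")
    k = (size_24m - size_birth) / math.log1p(max_age)
    return size_birth + k * math.log1p(age_months)


def mass_at_constant_density(ref_mass, scale_xyz):
    """Constant density means mass scales with volume, i.e. the product of
    the per-axis scale factors applied to the reference geometry."""
    sx, sy, sz = scale_xyz
    return ref_mass * sx * sy * sz


if __name__ == "__main__":
    # Hypothetical body lengths in cm, used only to show the curve's shape.
    for age in (0, 6, 12, 18, 24):
        print(age, round(log_growth(age, 50.0, 87.0), 1))
```

Under this form, most of the growth happens in the first months, which matches the qualitative shape of infant growth curves.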

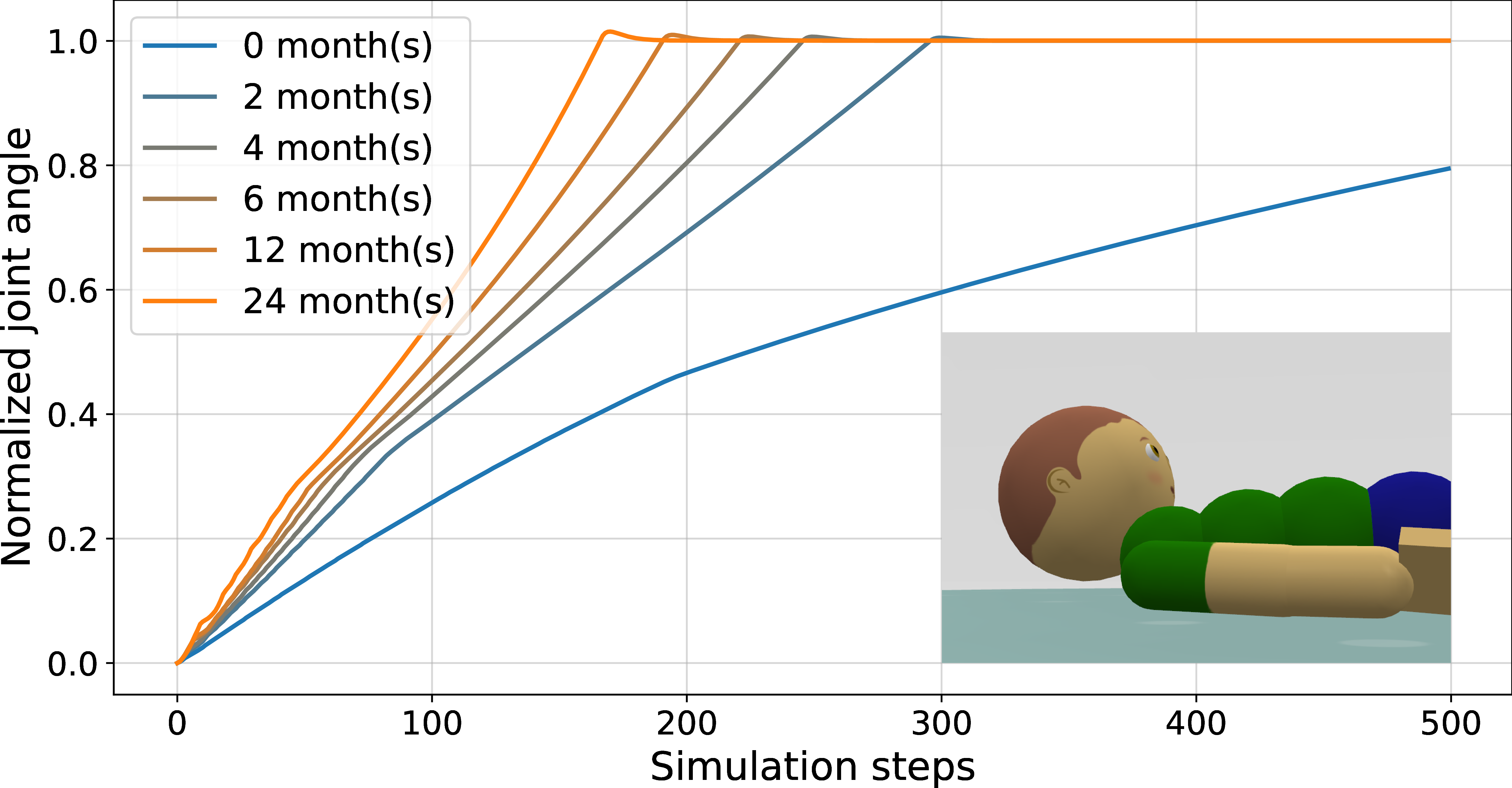

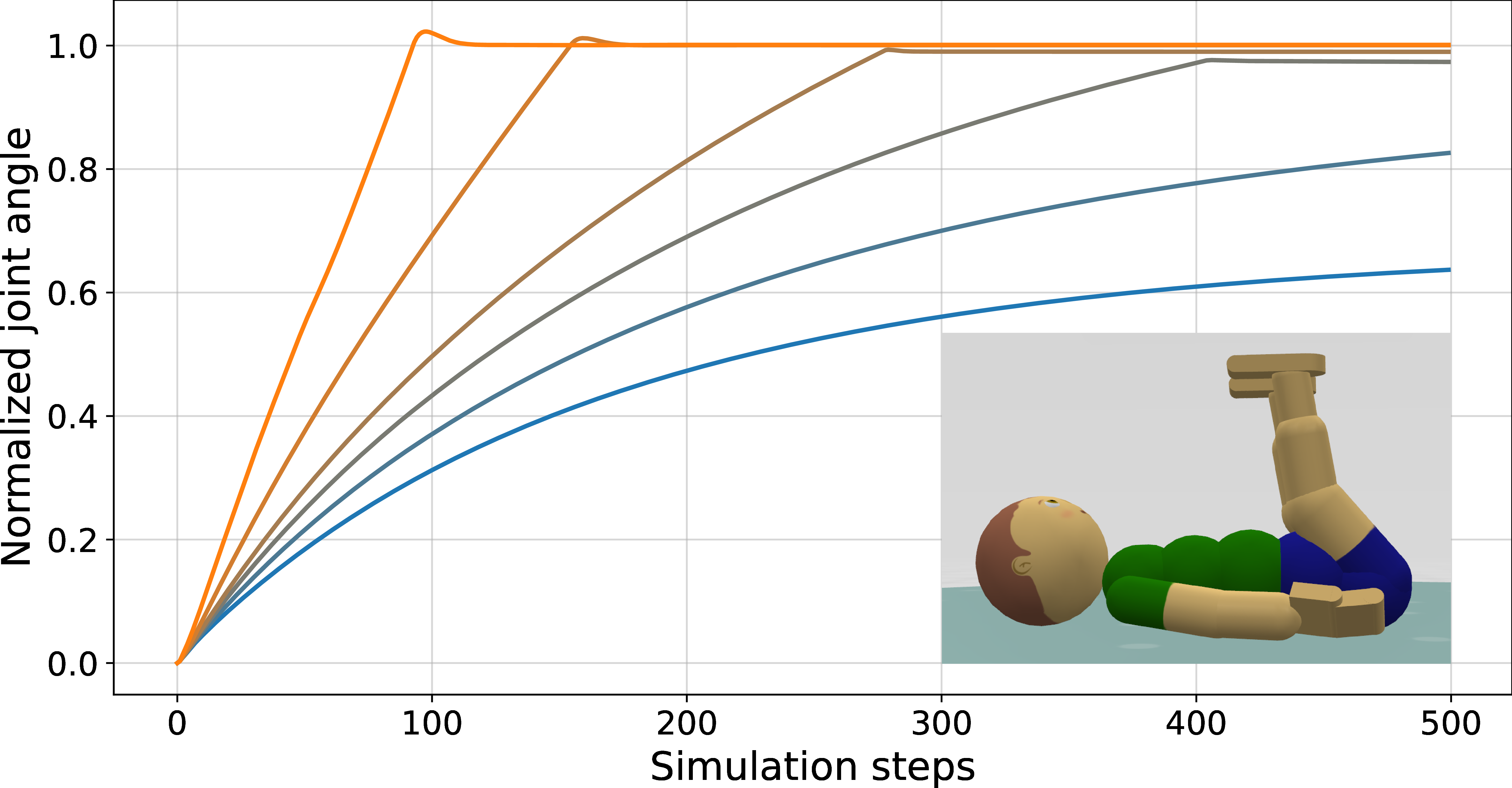

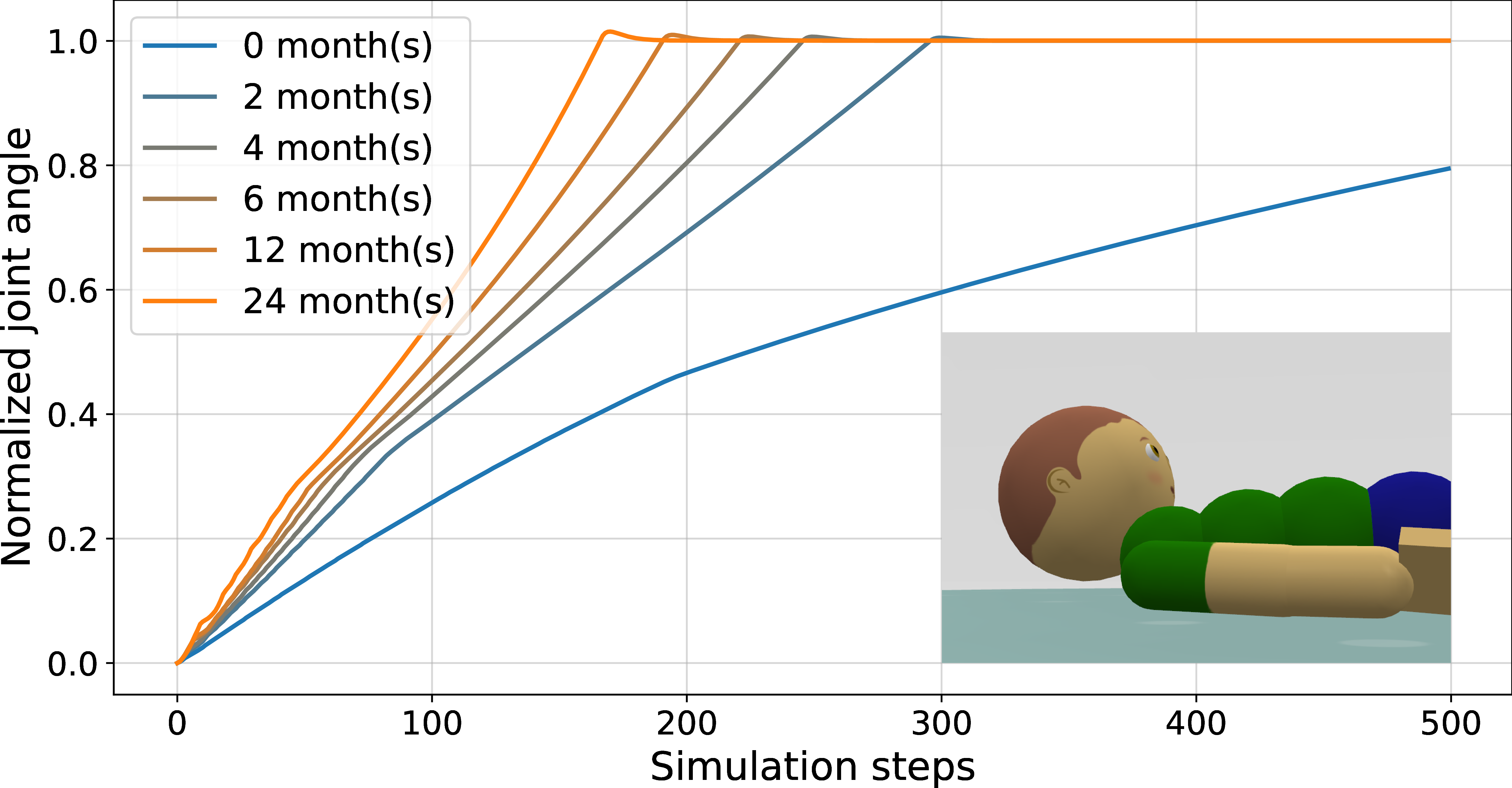

Actuation strength is scaled with the volume of the relevant body part, reflecting the correlation between muscle size and strength. This approach allows MIMo to exhibit age-appropriate motor capabilities, such as head and leg lifts, with performance improving as the simulated infant matures. The model demonstrates that, as in real infants, increased strength enables faster and more robust execution of motor tasks.
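As a rough sketch of volume-proportional strength scaling: the function below assumes a box-like body part described by three dimensions and a reference torque limit. Both the name `scaled_strength` and the numbers are hypothetical.

```python
def scaled_strength(ref_strength, ref_dims, dims):
    """Scale an actuator's strength limit in proportion to body-part volume.

    ref_dims and dims are (x, y, z) extents of the body part at the reference
    age and at the target age. A box-shaped volume is assumed here purely for
    illustration.
    """
    ref_volume = ref_dims[0] * ref_dims[1] * ref_dims[2]
    volume = dims[0] * dims[1] * dims[2]
    return ref_strength * volume / ref_volume


if __name__ == "__main__":
    # A uniform 10% increase in every dimension raises volume, and therefore
    # strength, by a factor of 1.1 ** 3.
    print(scaled_strength(10.0, (1.0, 1.0, 1.0), (1.1, 1.1, 1.1)))
```

Because volume grows with the cube of linear size, modest growth in limb dimensions produces a comparatively large gain in available strength.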

Sensory Development: Vision and Sensorimotor Delays

Visual Acuity and Foveation

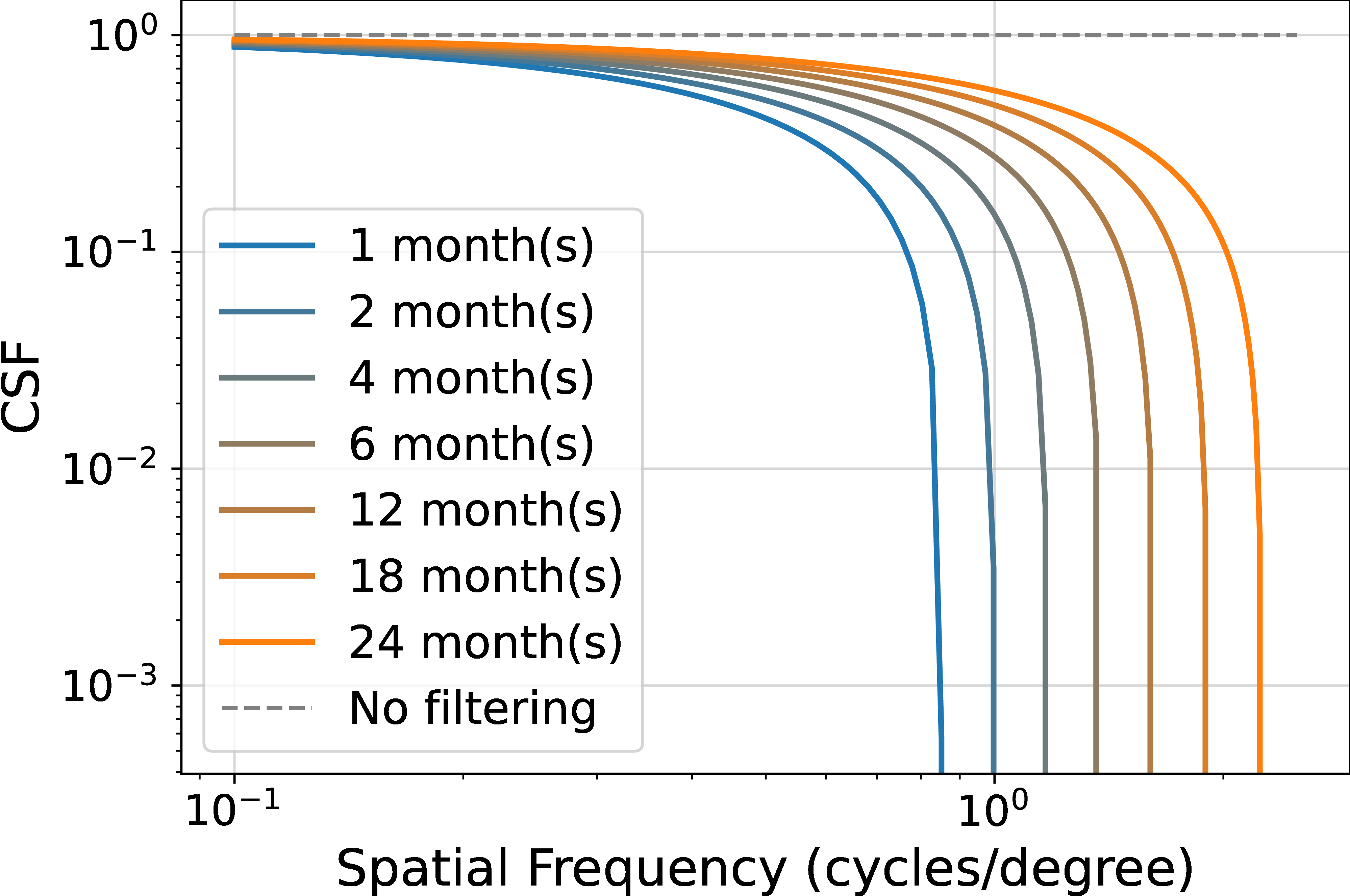

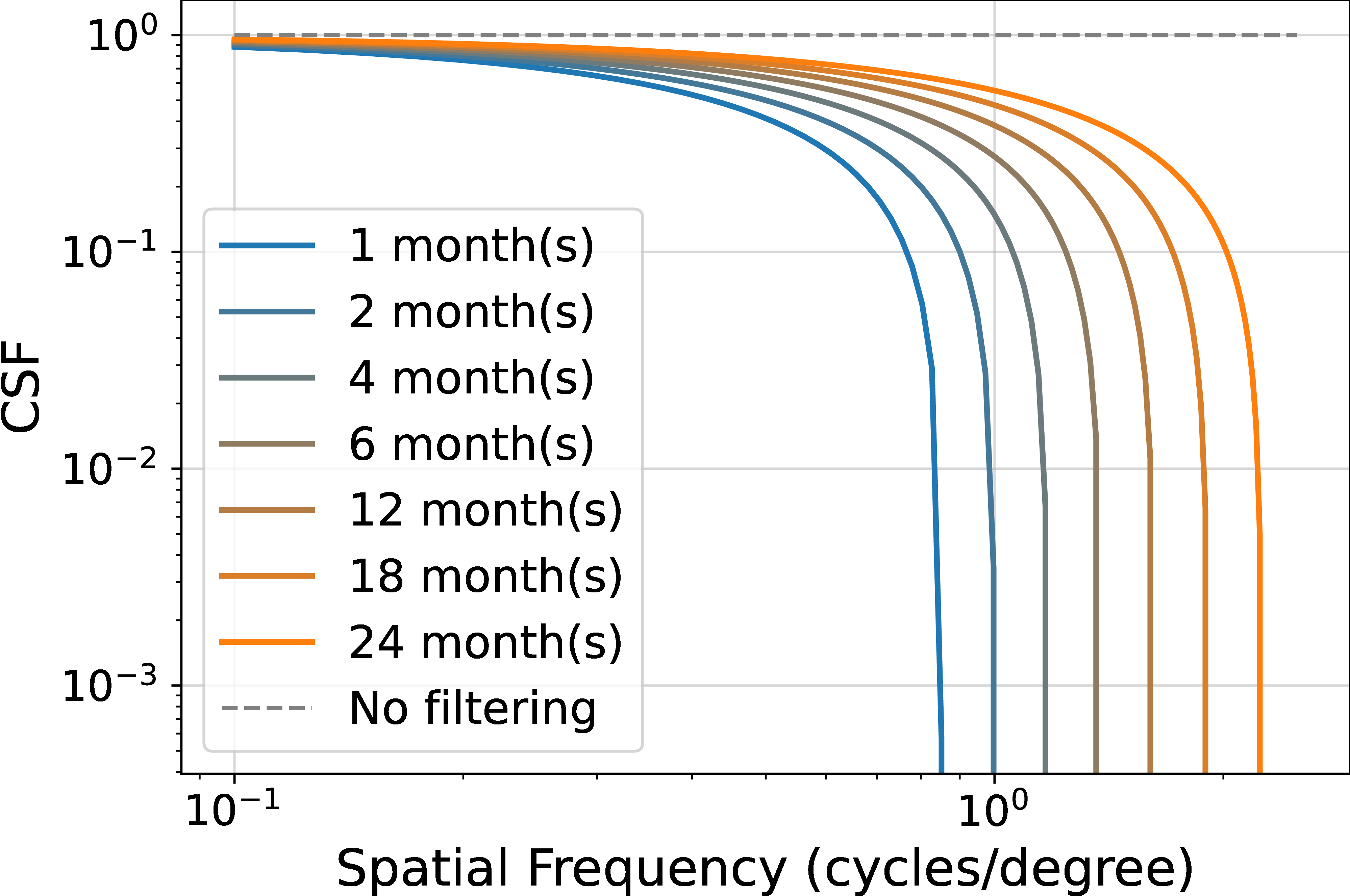

MIMo v2 incorporates a vision module that simulates the development of visual acuity and foveation. Visual acuity is modeled using a contrast sensitivity function (CSF) that acts as a low-pass filter in the frequency domain, with the cutoff frequency (acuity) increasing with age based on empirical measurements. This results in age-appropriate blurring of the visual input, capturing the limited spatial resolution of infant vision.
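The frequency-domain filtering idea can be sketched with NumPy. The Gaussian roll-off below is a stand-in for the contrast sensitivity function, whose exact form is not given in this summary; `acuity_blur` and its cutoff parameter (in cycles per pixel) are hypothetical names.

```python
import numpy as np


def acuity_blur(image, cutoff):
    """Low-pass filter a 2D grayscale image in the frequency domain.

    cutoff is a spatial frequency in cycles per pixel. A Gaussian gain is
    assumed as a simple stand-in for an age-dependent CSF: lower cutoffs
    (younger ages) remove more fine detail.
    """
    h, w = image.shape
    fy = np.fft.fftfreq(h)[:, None]   # vertical frequencies, cycles/pixel
    fx = np.fft.fftfreq(w)[None, :]   # horizontal frequencies, cycles/pixel
    radius = np.sqrt(fx ** 2 + fy ** 2)
    gain = np.exp(-(radius / cutoff) ** 2)  # equals 1 at DC, decays with frequency
    filtered = np.fft.ifft2(np.fft.fft2(image) * gain)
    return np.real(filtered)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((64, 64))
    young = acuity_blur(img, 0.05)  # strong blur
    older = acuity_blur(img, 0.40)  # mild blur
    print(img.std(), older.std(), young.std())
```

Since the gain is 1 at zero frequency, mean brightness is preserved and only fine detail is attenuated.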

Foveation is implemented by transforming rendered images into log-polar space and back, magnifying the central region and compressing the periphery, consistent with the distribution of photoreceptors and cortical magnification in the human visual system.
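A minimal NumPy sketch of the log-polar round trip follows. It uses nearest-neighbor sampling and hypothetical function names and grid sizes; the platform's actual interpolation and parameters are not specified in this summary.

```python
import numpy as np


def to_log_polar(img, n_rho, n_theta):
    """Sample a 2D image on a log-polar grid centered on the image center.

    Rows index log-radius (from 1 pixel out to the corner distance) and
    columns index angle. Nearest-neighbor sampling is assumed for brevity.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    r_max = np.hypot(cy, cx)
    r = np.exp(np.linspace(0.0, np.log(r_max), n_rho))[:, None]
    theta = np.linspace(0.0, 2.0 * np.pi, n_theta, endpoint=False)[None, :]
    ys = np.clip(np.rint(cy + r * np.sin(theta)), 0, h - 1).astype(int)
    xs = np.clip(np.rint(cx + r * np.cos(theta)), 0, w - 1).astype(int)
    return img[ys, xs]


def from_log_polar(lp, shape):
    """Map a log-polar image back to Cartesian coordinates of the given shape."""
    h, w = shape
    n_rho, n_theta = lp.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    r_max = np.hypot(cy, cx)
    yy, xx = np.mgrid[0:h, 0:w]
    r = np.maximum(np.hypot(yy - cy, xx - cx), 1.0)  # innermost ring is r = 1
    theta = np.mod(np.arctan2(yy - cy, xx - cx), 2.0 * np.pi)
    i = np.rint(np.log(r) / np.log(r_max) * (n_rho - 1)).astype(int)
    i = np.clip(i, 0, n_rho - 1)
    j = np.rint(theta / (2.0 * np.pi) * n_theta).astype(int) % n_theta
    return lp[i, j]


def foveate(img, n_rho=32, n_theta=64):
    """Round trip through log-polar space: the center keeps fine detail,
    while the periphery is sampled coarsely."""
    return from_log_polar(to_log_polar(img, n_rho, n_theta), img.shape)
```

Because the radial samples are spaced evenly in log-radius, neighboring samples are a fraction of a pixel apart near the center and several pixels apart near the edges, which produces the resolution falloff.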

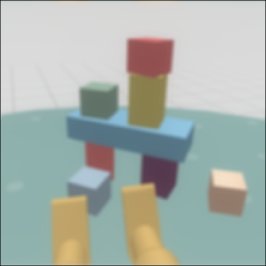

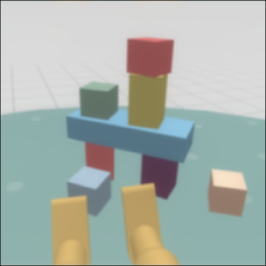

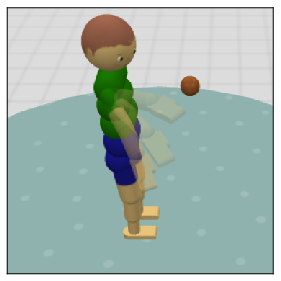

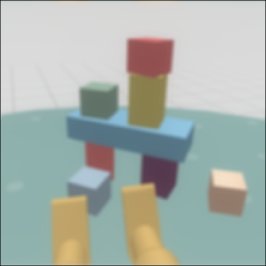

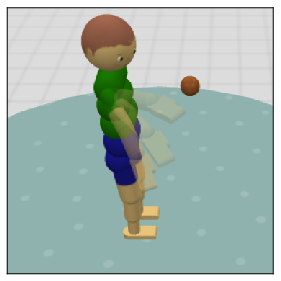

Figure 3: External view of MIMo's environment, illustrating the agent's perspective.

Sensorimotor Delays

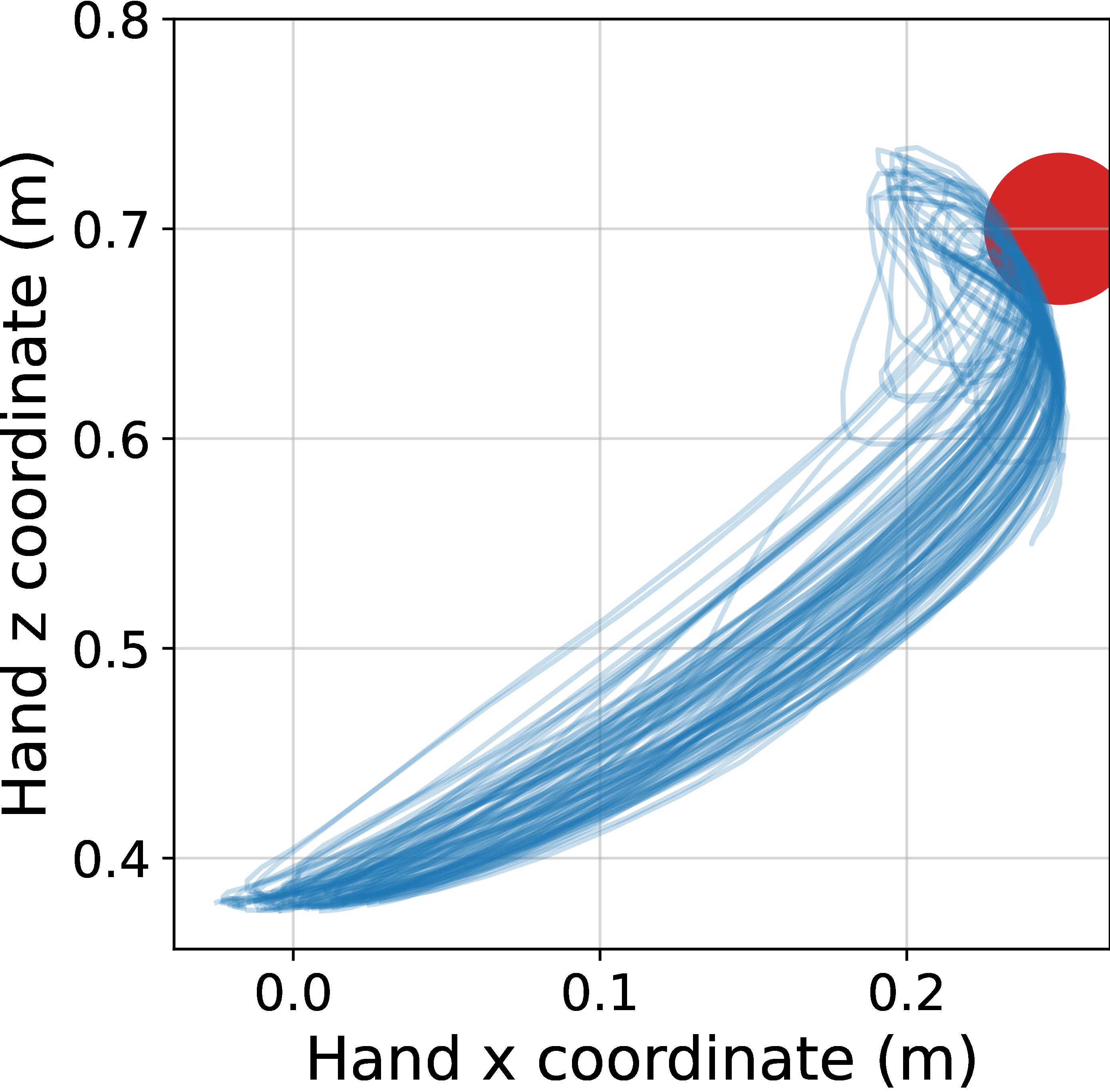

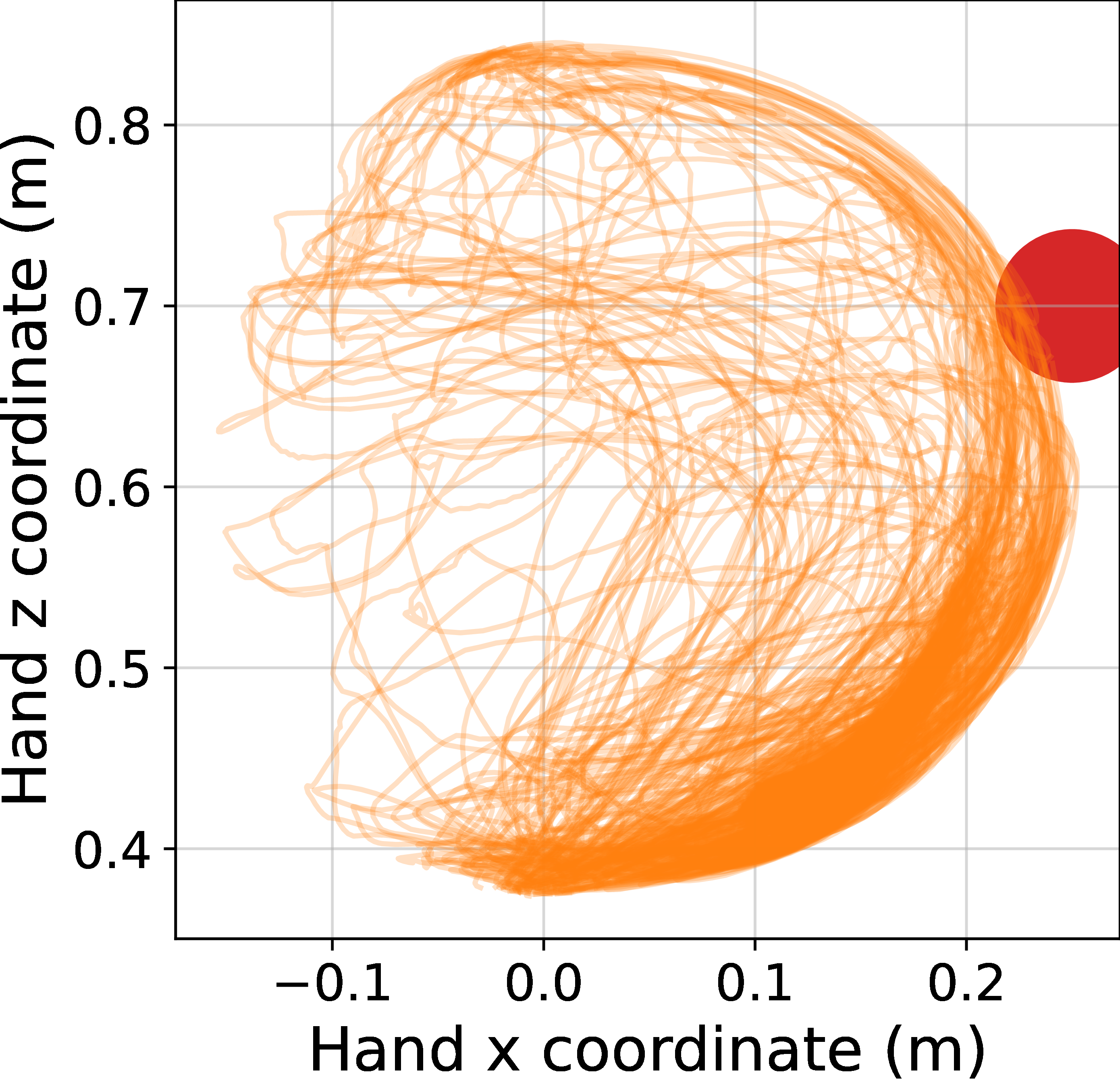

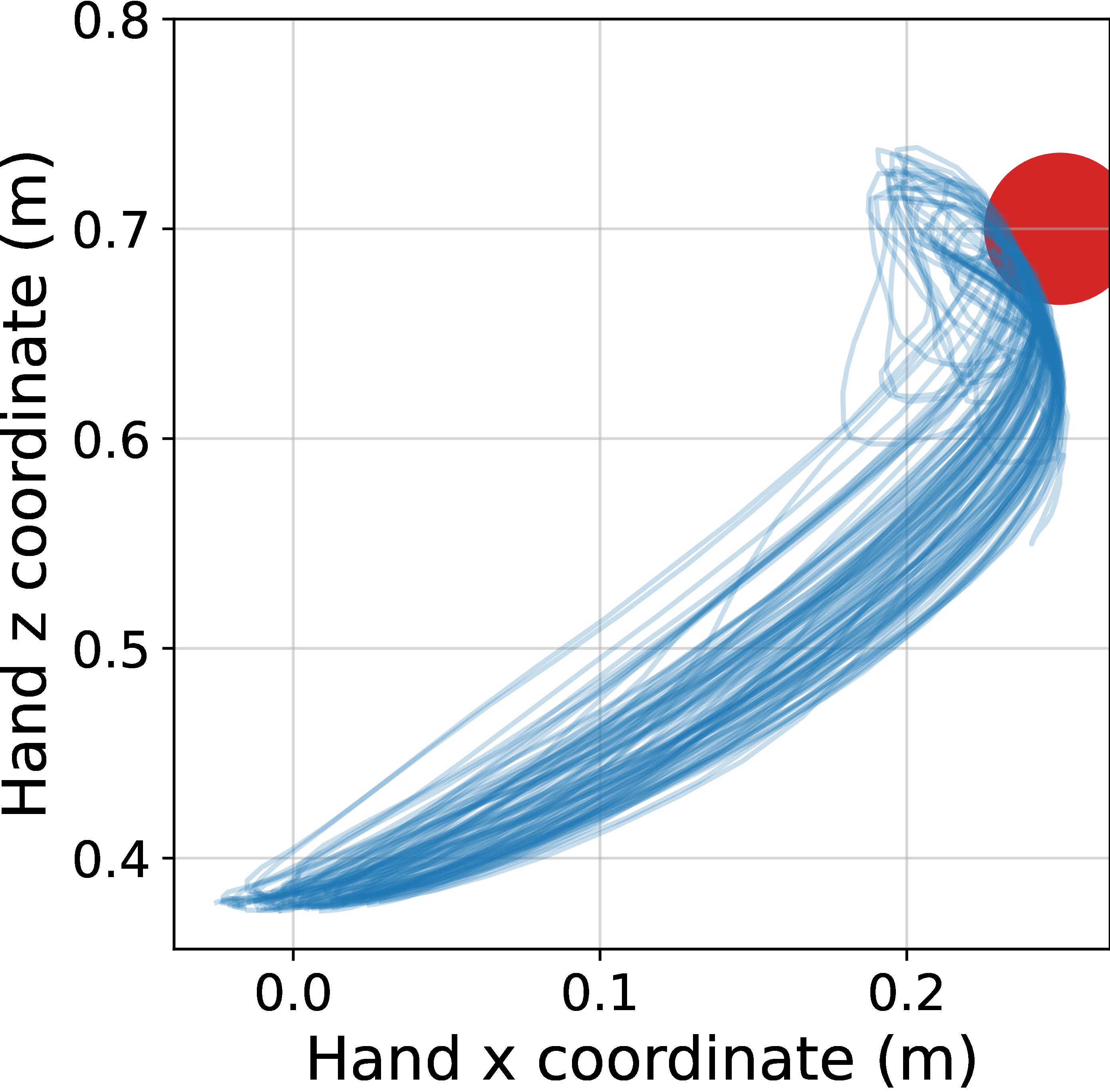

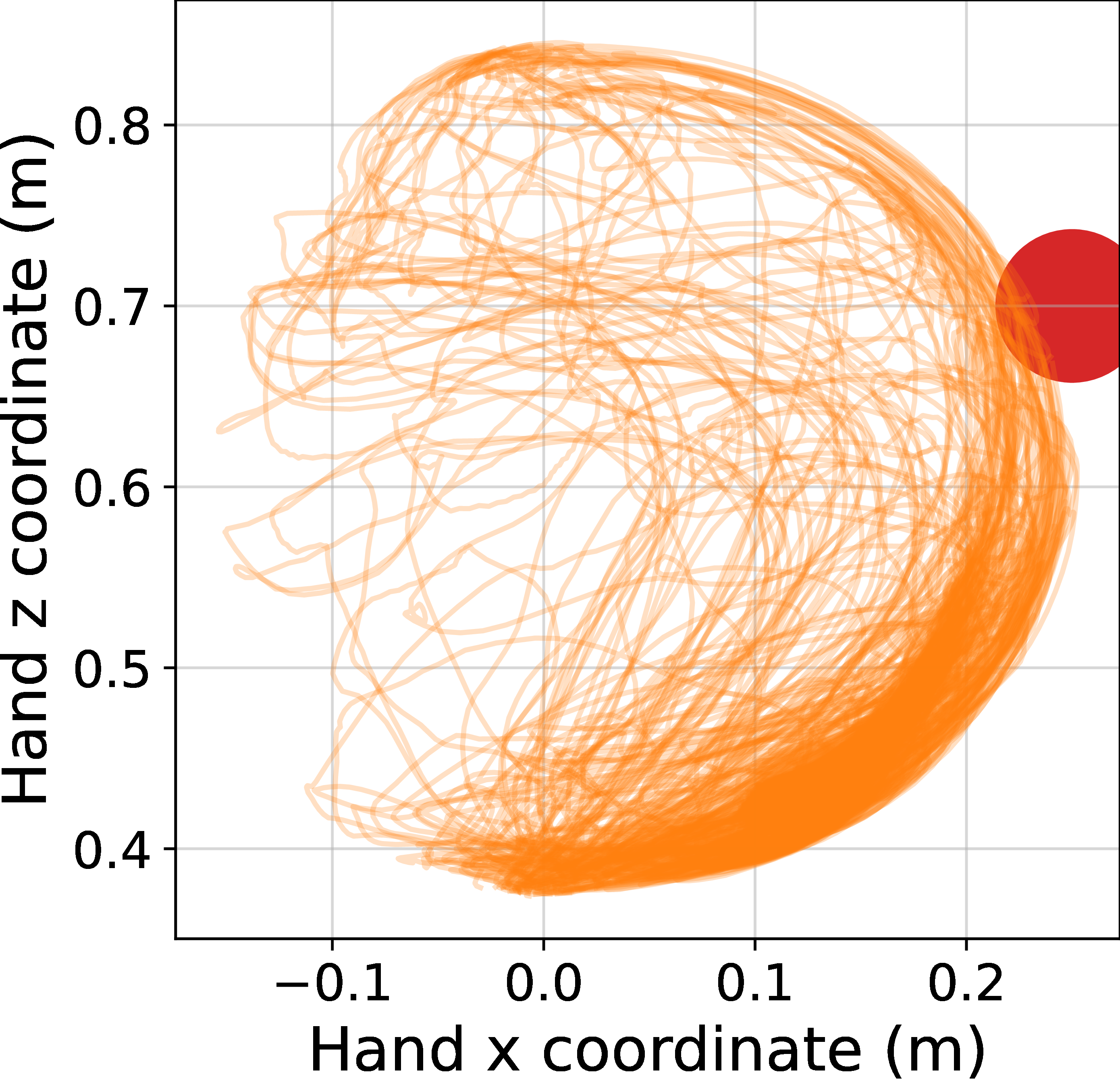

To model the finite conduction velocities of neural signals, MIMo v2 introduces configurable sensorimotor delays via FIFO buffers for both sensory inputs and motor outputs. This allows the simulation of age-dependent reaction times and their impact on learning and control. Experiments demonstrate that increased delays degrade the efficiency and smoothness of reaching movements, consistent with observations in human infants.

Figure 4: MIMo reaching the target in a delayed sensorimotor control experiment.
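The FIFO delay mechanism described in this section can be sketched in a few lines. The class name `DelayLine` and its interface are hypothetical, and delays are expressed in simulation steps rather than milliseconds.

```python
from collections import deque


class DelayLine:
    """Fixed-length FIFO that returns each value `delay_steps` pushes later.

    Until real values have propagated through the buffer, `initial` is
    returned (for example, a zero action or a default observation).
    """

    def __init__(self, delay_steps, initial):
        if delay_steps < 0:
            raise ValueError("delay_steps must be non-negative")
        self._buf = deque([initial] * delay_steps)

    def push(self, value):
        """Insert the newest value and return the one that is now due."""
        self._buf.append(value)
        return self._buf.popleft()


if __name__ == "__main__":
    sensor_delay = DelayLine(delay_steps=2, initial=None)
    for t in range(5):
        print(t, sensor_delay.push(f"obs_{t}"))
```

One buffer of this kind would sit on the observation path and another on the action path, so the controller acts on stale observations and its commands take effect late.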

Inverse Dynamics Control and Procedural Environments

MIMo v2 includes an inverse dynamics controller that enables precise, task-prioritized control of individual joints or groups of joints. This is essential for applications such as motion retargeting from real infant data or generating demonstrations for imitation learning. The controller extends operational space control formulations to underactuated systems and supports multiple error metrics, including translational and orientation errors.
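Task prioritization can be illustrated at the kinematic level with the classic null-space projection scheme. This is a simplified stand-in, not the paper's inverse dynamics controller: it resolves joint velocities rather than torques, and the function name and Jacobians are hypothetical.

```python
import numpy as np


def prioritized_joint_velocity(J1, e1, J2, e2):
    """Resolve two tasks with strict priority using null-space projection.

    J1, J2: task Jacobians of shape (m_i, n); e1, e2: desired task velocities.
    The secondary task is only pursued in directions that do not disturb the
    primary task.
    """
    J1 = np.atleast_2d(J1)
    J2 = np.atleast_2d(J2)
    J1_pinv = np.linalg.pinv(J1)
    dq1 = J1_pinv @ e1                            # least-squares solution for task 1
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1       # projector onto null space of J1
    dq2 = np.linalg.pinv(J2 @ N1) @ (e2 - J2 @ dq1)
    return dq1 + N1 @ dq2


if __name__ == "__main__":
    J1 = np.array([[1.0, 0.0, 0.0, 0.0]])
    J2 = np.array([[1.0, 1.0, 0.0, 0.0]])
    dq = prioritized_joint_velocity(J1, np.array([0.5]), J2, np.array([0.2]))
    print(dq, J1 @ dq, J2 @ dq)
```

When the two tasks conflict, the primary task is still met exactly and the secondary task is satisfied only as far as the remaining degrees of freedom allow.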

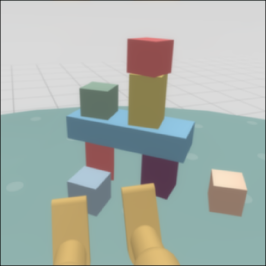

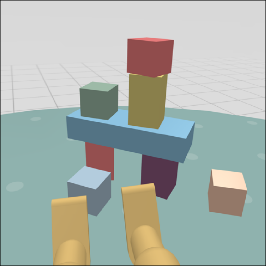

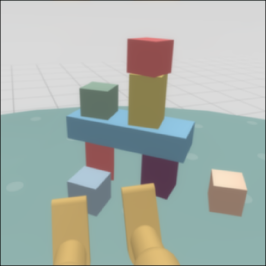

The platform also features a procedural environment generator, producing randomized rooms with variable textures and objects (from the Toys4K dataset), supporting robust generalization and curriculum learning in diverse contexts.

Figure 5: Examples of MIMo's random environment generator with various room textures and objects from Toys4K.
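The idea of seeded procedural room generation can be sketched as follows. The `RoomSpec` structure, the texture names, and the object identifiers are all hypothetical placeholders; the real generator's configuration format is not described in this summary.

```python
import random
from dataclasses import dataclass


@dataclass
class RoomSpec:
    wall_texture: str
    floor_texture: str
    objects: list  # list of (object_id, x, y) placements


def sample_room(seed, textures, object_ids, n_objects, half_extent=2.0):
    """Draw a random room description; the same seed gives the same room."""
    rng = random.Random(seed)
    wall = rng.choice(textures)
    floor = rng.choice(textures)
    objects = []
    for _ in range(n_objects):
        obj = rng.choice(object_ids)
        x = rng.uniform(-half_extent, half_extent)
        y = rng.uniform(-half_extent, half_extent)
        objects.append((obj, x, y))
    return RoomSpec(wall, floor, objects)


if __name__ == "__main__":
    # Placeholder names, not actual asset identifiers.
    room = sample_room(0, ["wood", "tile", "carpet"], ["toy_a", "toy_b"], 3)
    print(room)
```

Seeding each room makes training runs reproducible while still exposing the agent to varied scenes.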

Software Architecture and Compatibility

MIMo v2 is built on MuJoCo and Gymnasium, supporting fast, modular simulation and compatibility with major RL libraries (Stable-Baselines3, TorchRL). The environment is highly configurable, allowing users to select sensory modalities, actuation models, and scene parameters. Distribution via Singularity containers ensures reproducibility and ease of deployment across platforms.

Empirical Results and Implications

The paper provides quantitative validation of MIMo's growth trajectories against WHO standards, and demonstrates the impact of developmental changes on motor performance and learning. Notably, the introduction of sensorimotor delays leads to increased learning times and noisier trajectories in reaching tasks, highlighting the importance of temporal constraints in developmental models. The vision module's fidelity enables the study of how limited acuity and foveation shape early perceptual learning and active exploration.

MIMo v2's extensibility and realism position it as a valuable tool for investigating the interplay between embodiment, sensory development, and cognitive processes. The platform's ability to simulate developmental trajectories enables systematic studies of critical periods, the emergence of sensorimotor coordination, and the effects of atypical development or interventions.

Limitations and Future Directions

While MIMo v2 advances the state of the art in developmental simulation, it remains an approximation of infant physiology and behavior. The current model lacks certain sensory modalities (e.g., audition, olfaction, nociception), and does not yet support social interaction with conspecifics or caregivers. The actuation models, while biologically inspired, do not capture the full complexity of musculoskeletal dynamics or learning processes in real infants.

Future work should focus on integrating additional sensory channels, modeling social and communicative development, and incorporating more sophisticated learning algorithms that exploit the platform's developmental realism. The potential for using MIMo as a testbed for embodied AI, developmental neuroscience, and robotics is substantial, particularly for research on the emergence of agency, self-awareness, and early cognitive functions.

Conclusion

MIMo v2 is a comprehensive simulation platform for modeling the physical and sensory development of human infants. By enabling dynamic changes in embodiment and perception, it provides a resource for studying the constraints and opportunities that shape early learning and behavior. The platform's modularity, empirical grounding, and compatibility with modern RL frameworks make it well-suited for both theoretical and applied research in developmental AI and cognitive science.