- The paper presents an object-relative control strategy that decouples image matching from trajectory prediction to achieve robust navigation.

- It builds a relative 3D scene graph, plans object-level paths over it with Dijkstra's algorithm, and encodes the resulting path lengths in a WayObject Costmap that conditions the controller, enabling cross-embodiment generalization.

- Experiments on the Habitat-Matterport 3D (HM3D) dataset show significant SPL and SSPL improvements over image-relative baselines, especially on the Alt Goal and Reverse tasks and under embodiment changes.

ObjectReact: Learning Object-Relative Control for Visual Navigation

Abstract

The paper "ObjectReact: Learning Object-Relative Control for Visual Navigation" proposes a novel approach to visual navigation using a single camera and a topological map. Traditional methods rely heavily on image-relative representations tied to the robot's pose and embodiment, which limits how well a map recorded along one trajectory, or with one robot, can be reused for other trajectories or robots. This paper introduces an object-relative control paradigm that allows for more flexible and robust navigation by leveraging object-level representations.

Introduction and Background

Visual navigation often relies on dense 3D maps and sensors such as LiDAR, which can be costly and complex. Visual topological navigation offers a simpler alternative that needs only a single camera, inspired by how humans navigate. Earlier approaches of this kind are image-relative: they represent subgoals as images, so the predicted control is tied to the pose and embodiment of the robot that recorded the map. These constraints limit flexibility and scalability, especially across varied environments or different robot embodiments.

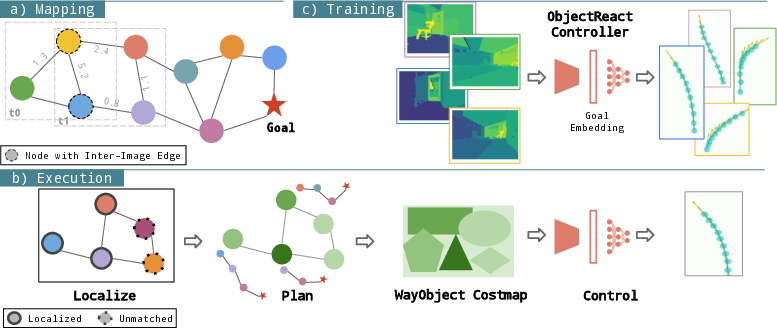

The paper proposes a shift toward object-relative control, decoupling control prediction from image matching and allowing cross-embodiment generalization. This is achieved through a relative 3D scene graph that represents object connectivity within and across images, enabling trajectory-invariant and robust navigation across a variety of tasks and environments.

Methodology

Mapping Phase: Relative 3D Scene Graph

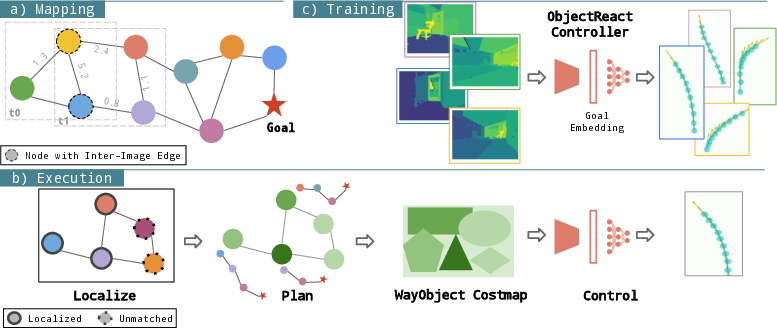

The map is a topometric, relative 3D scene graph: image segments serve as object nodes, linked by 3D Euclidean distances within each image and by object associations across images.
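To make the graph structure concrete, here is a minimal Python sketch under stated assumptions: each map image is given as a dictionary from segment IDs to 3D centroids expressed in that image's own camera frame, every pair of segments in the same image is connected (the paper's exact intra-image connectivity may differ), and association edges get a fixed, hypothetical cost `inter_image_cost`. The function name `build_relative_scene_graph` and the input layout are illustrative, not the authors' code.

```python
import math

def build_relative_scene_graph(images, associations, inter_image_cost=0.0):
    """Build an undirected weighted graph {node: {neighbor: cost}}.

    images: list of {segment_id: (x, y, z)} dicts, one per map image; each
        centroid is in that image's own camera frame (hypothetical layout).
    associations: list of ((img_a, seg_a), (img_b, seg_b)) pairs marking the
        same physical object observed in two images.
    inter_image_cost: hypothetical weight for association edges.
    """
    graph = {}

    def add_edge(u, v, cost):
        graph.setdefault(u, {})[v] = cost
        graph.setdefault(v, {})[u] = cost

    for img_idx, segments in enumerate(images):
        seg_ids = list(segments)
        for seg in seg_ids:
            graph.setdefault((img_idx, seg), {})  # keep isolated segments as nodes
        # Intra-image edges: 3D Euclidean distance between segment centroids.
        for i, a in enumerate(seg_ids):
            for b in seg_ids[i + 1:]:
                add_edge((img_idx, a), (img_idx, b),
                         math.dist(segments[a], segments[b]))

    # Inter-image edges: object associations between images.
    for u, v in associations:
        add_edge(u, v, inter_image_cost)
    return graph


images = [
    {"chair": (0.0, 0.0, 2.0), "door": (3.0, 0.0, 4.0)},
    {"door": (-1.0, 0.0, 2.0), "sofa": (1.0, 0.0, 5.0)},
]
graph = build_relative_scene_graph(images, [((0, "door"), (1, "door"))])
```

The sketch never needs a global pose: each edge weight is either measured inside a single camera frame or comes from an association, so the two images above are joined only through the shared "door" node.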

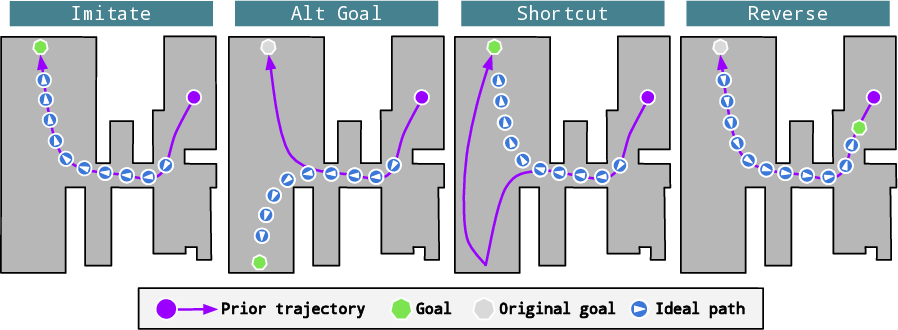

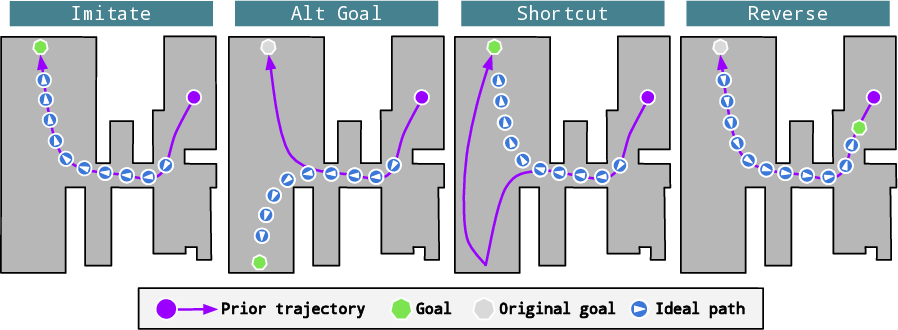

Figure 1: Tasks: Each column shows a top-down view with the prior experience trajectory displayed as a purple path from the purple circle (start) to the green point (goal).

Execution Phase: Object Localizer, Global Planner, and Local Controller

The execution phase localizes the objects segmented in the current (query) image against the precomputed map, then plans object-level paths to the goal with Dijkstra's algorithm. The path length from each localized query object to the goal is encoded in a novel "WayObject Costmap", which the controller uses for control prediction in place of explicit RGB input.
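The planning and costmap steps can be sketched in Python as follows, assuming the graph format `{node: {neighbor: cost}}` and assuming object localization has already produced a hypothetical `matches` dictionary from each query segment to a map node. Running Dijkstra once from the goal gives every node's path length to the goal (valid here because the graph is undirected); each query segment's mask is then filled with its matched node's path length. The function names, the `background` value for pixels without a localized object, and the keep-the-minimum rule for overlapping masks are assumptions of this sketch, not details taken from the paper.

```python
import heapq
import itertools
import math

def path_lengths_to_goal(graph, goal):
    """Dijkstra from the goal; returns {node: shortest path cost to goal}.
    Valid as 'distance to goal' because the graph is undirected."""
    dist = {goal: 0.0}
    tie = itertools.count()  # tiebreaker so the heap never compares nodes
    heap = [(0.0, next(tie), goal)]
    while heap:
        d, _, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale entry: a shorter path to u was already found
        for v, w in graph.get(u, {}).items():
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, next(tie), v))
    return dist

def wayobject_costmap(height, width, query_masks, matches, lengths,
                      background=math.inf):
    """Rasterize path lengths into an image-sized costmap (hypothetical layout).

    query_masks: {query_segment_id: [(row, col), ...]} pixel masks.
    matches: {query_segment_id: map_node or None} from object localization.
    lengths: output of path_lengths_to_goal.
    """
    costmap = [[background] * width for _ in range(height)]
    for seg_id, pixels in query_masks.items():
        node = matches.get(seg_id)
        if node is None or node not in lengths:
            continue  # unlocalized or unreachable segment stays background
        for r, c in pixels:
            # Overlapping masks keep the smaller (closer-to-goal) cost.
            costmap[r][c] = min(costmap[r][c], lengths[node])
    return costmap


graph = {"A": {"B": 1.0, "C": 4.0},
         "B": {"A": 1.0, "C": 2.0},
         "C": {"A": 4.0, "B": 2.0}}
lengths = path_lengths_to_goal(graph, goal="C")
costmap = wayobject_costmap(2, 3, {"q1": [(0, 0), (0, 1)], "q2": [(1, 2)]},
                            {"q1": "A", "q2": "C"}, lengths)
```

Here `q1` is matched to node `A`, whose cheapest route to the goal `C` goes through `B` (1.0 + 2.0 = 3.0 rather than the direct 4.0), so both of its pixels receive 3.0, while the goal object's pixel receives 0.0.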

Figure 2: Object-Relative Navigation Pipeline, illustrating mapping, execution, and training phases.

Training Phase: The ObjectReact Controller

The local controller, dubbed ObjectReact, conditions its trajectory rollouts on the WayObject Costmap. Image-relative controllers predict control from the current RGB image and an image subgoal; ObjectReact instead uses an object-centric representation that encodes where each visible object lies along the planned path to the goal, providing spatial context without taking RGB images as input.
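The point that a costmap alone, with no RGB input, carries enough information to choose a direction can be illustrated with a deliberately simple stand-in. The sketch below is not the learned ObjectReact controller, which predicts trajectories with a trained network; it is a hypothetical hand-written rule that steers toward the image columns holding the lowest finite path length. It assumes the costmap is a list of rows of path lengths with `math.inf` for pixels that carry no object, and a pinhole camera with a hypothetical horizontal field of view `hfov_deg`.

```python
import math

def heading_from_costmap(costmap, hfov_deg=90.0):
    """Hypothetical rule-based stand-in, NOT the learned ObjectReact policy.

    Returns a yaw angle in degrees toward the pixels with the lowest finite
    path length (positive = right of image centre), or None if no pixel
    carries a finite cost.
    """
    finite = [(v, c) for row in costmap for c, v in enumerate(row)
              if math.isfinite(v)]
    if not finite:
        return None  # nothing localized; a real system needs a fallback
    best = min(v for v, _ in finite)
    cols = [c for v, c in finite if v == best]
    centre = (len(costmap[0]) - 1) / 2
    if centre == 0:
        return 0.0  # single-column image: no horizontal information
    # Pinhole model, scaled so the outermost pixel columns map to +/- hfov/2.
    focal = centre / math.tan(math.radians(hfov_deg) / 2)
    mean_col = sum(cols) / len(cols)
    return math.degrees(math.atan((mean_col - centre) / focal))
```

A rule like this only uses the single cheapest object; a learned controller can additionally exploit the layout of several objects and their relative costs.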

Experimental Setup and Evaluation

The approach was evaluated on the Habitat-Matterport 3D (HM3D) dataset across tasks including Imitate, Alt Goal, Shortcut, and Reverse. Performance was measured with Success weighted by Path Length (SPL) and Soft-SPL (SSPL), and additional tests were run with different robot embodiments.
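For reference, a minimal Python sketch of the two metrics, using the standard SPL definition (Anderson et al., 2018), SPL = (1/N) Σ S_i · l_i / max(p_i, l_i), and the Soft-SPL formulation used in habitat-lab, which replaces the binary success S_i with max(0, 1 − d_i / l_i), the fraction of the initial distance to the goal that was covered. The `Episode` field names are hypothetical, and the paper's exact evaluation code may differ, for example in how the success radius is applied.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    success: bool              # S_i: reached the goal within the success radius
    shortest_path: float       # l_i: geodesic start-to-goal distance (> 0)
    agent_path: float          # p_i: length of the path the agent actually took
    final_dist_to_goal: float  # d_i: geodesic distance to the goal at the end

def spl(episodes):
    """Success weighted by Path Length."""
    total = 0.0
    for e in episodes:
        s = 1.0 if e.success else 0.0
        total += s * e.shortest_path / max(e.agent_path, e.shortest_path)
    return total / len(episodes)

def soft_spl(episodes):
    """Soft-SPL: binary success replaced by progress toward the goal."""
    total = 0.0
    for e in episodes:
        soft_success = max(0.0, 1.0 - e.final_dist_to_goal / e.shortest_path)
        total += soft_success * e.shortest_path / max(e.agent_path, e.shortest_path)
    return total / len(episodes)


episodes = [Episode(True, 10.0, 12.5, 0.5), Episode(False, 8.0, 4.0, 6.0)]
print(spl(episodes), soft_spl(episodes))
```

For these two episodes SPL is 0.4 and Soft-SPL is 0.505: the failed episode contributes nothing to SPL but still earns soft credit for covering a quarter of its initial distance to the goal.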

Results and Discussion

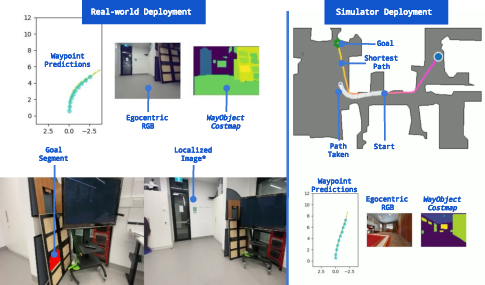

The object-relative controller, ObjectReact, demonstrated significant performance improvements over image-relative baselines, especially in challenging tasks such as Alt Goal and Reverse. Its robustness to embodiment changes, such as a different sensor height between mapping and execution, highlights the approach's flexibility. Overall, the results illustrate the advantages of object-centric representations, particularly when the task or embodiment at execution time differs from the one used to build the map.

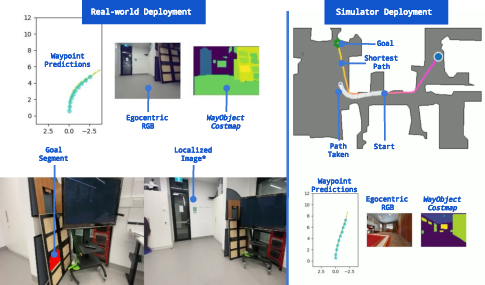

Figure 3: Examples of demonstration videos showing real-world and simulator deployments.

Conclusion

This research provides a compelling case for object-relative navigation, showcasing its potential for more adaptable and efficient robotic path planning in visual navigation tasks. The use of object-level connectivity and the WayObject Costmap allows for efficient generalization across different environments and embodiments, overcoming many limitations faced by traditional image-relative navigation methods. Future work could focus on integrating language-based goals or exploring more complex dynamic environments to further enhance the capabilities of robotic navigation systems.

Overall, ObjectReact shows that a single camera and an object-level map can support navigation that transfers across tasks and embodiments, a step toward practical deployment in real-world applications. Advances in perception models and further exploration of context-oriented navigation strategies could build on this foundation toward more capable autonomous navigation.