- The paper introduces InterAct, a benchmark that consolidates existing datasets and augments them to 30.70 hours of high-fidelity 3D human-object interaction (HOI) data, enabling six diverse generation tasks.

- It employs a marker-based human representation and a three-stage unified optimization to reduce artifacts such as interpenetration and floating contacts and to improve motion fidelity.

- Experiments show gains in contact precision and diversity, and a higher success rate for physics-based imitation (90.7% on corrected data versus 84.4% on raw data).

InterAct: A Large-Scale Benchmark for Versatile 3D Human-Object Interaction Generation

Introduction and Motivation

The paper introduces InterAct, a comprehensive benchmark and dataset for 3D human-object interaction (HOI) generation, addressing critical limitations in existing datasets such as insufficient scale, poor annotation quality, and prevalent physical artifacts. The authors consolidate and standardize data from seven major sources, resulting in 21.81 hours of curated interactions, and further augment the dataset to 30.70 hours using a unified optimization framework. This enables the definition and evaluation of six distinct HOI generation tasks, facilitating robust benchmarking and model development for applications in robotics, animation, and embodied AI.

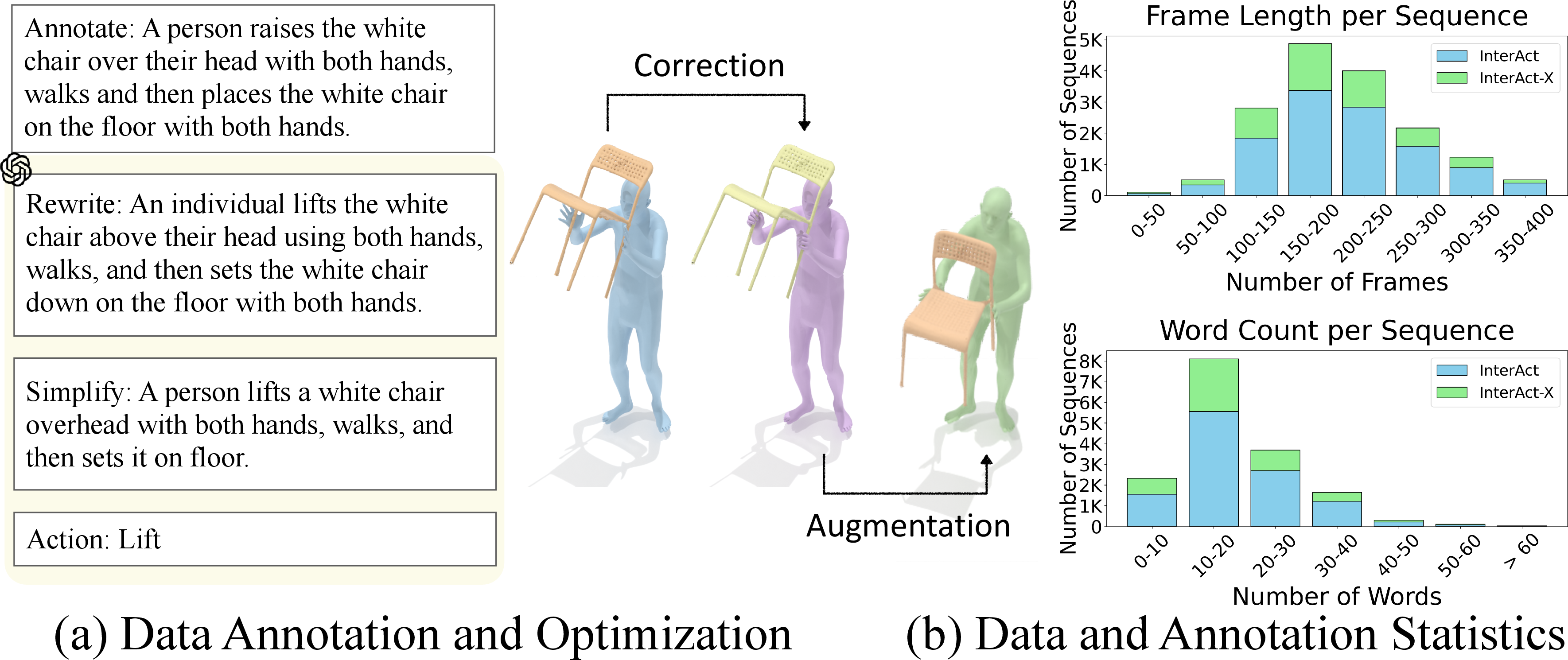

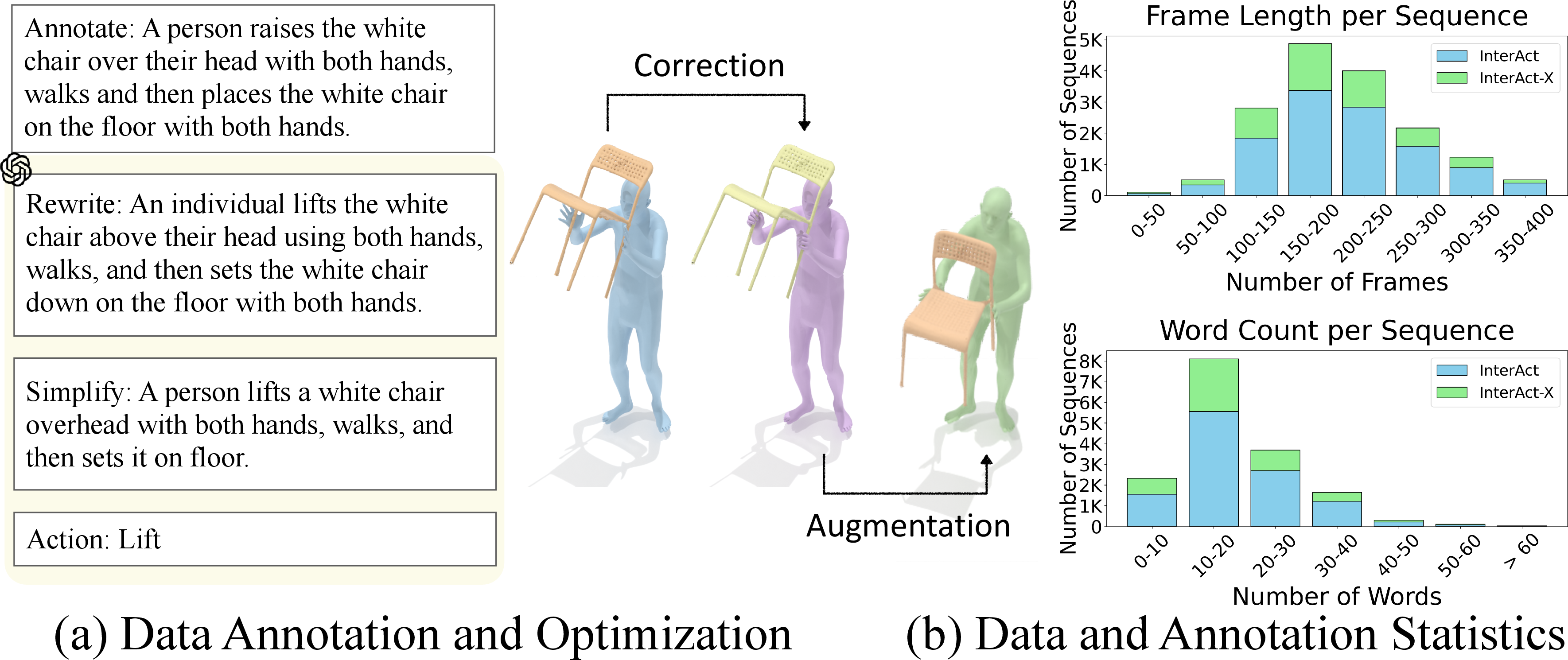

Figure 1: The data processing pipeline for InterAct, including consolidation, annotation via foundation models, correction, and interaction illustration, with statistics on motion and text annotations.

Dataset Construction and Representation

Data Consolidation and Annotation

InterAct unifies heterogeneous datasets by standardizing both textual annotations and human representations. Textual descriptions are generated through a two-phase process involving human annotators and GPT-4, ensuring consistency and granularity. Action labels are assigned using in-context learning, supporting downstream tasks such as action-conditioned generation.

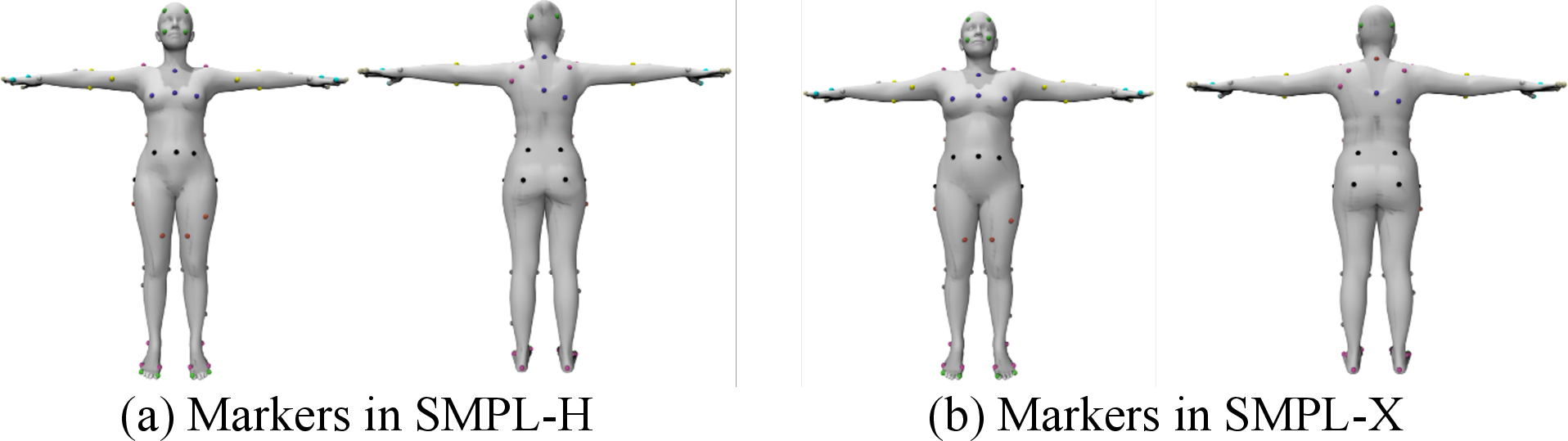

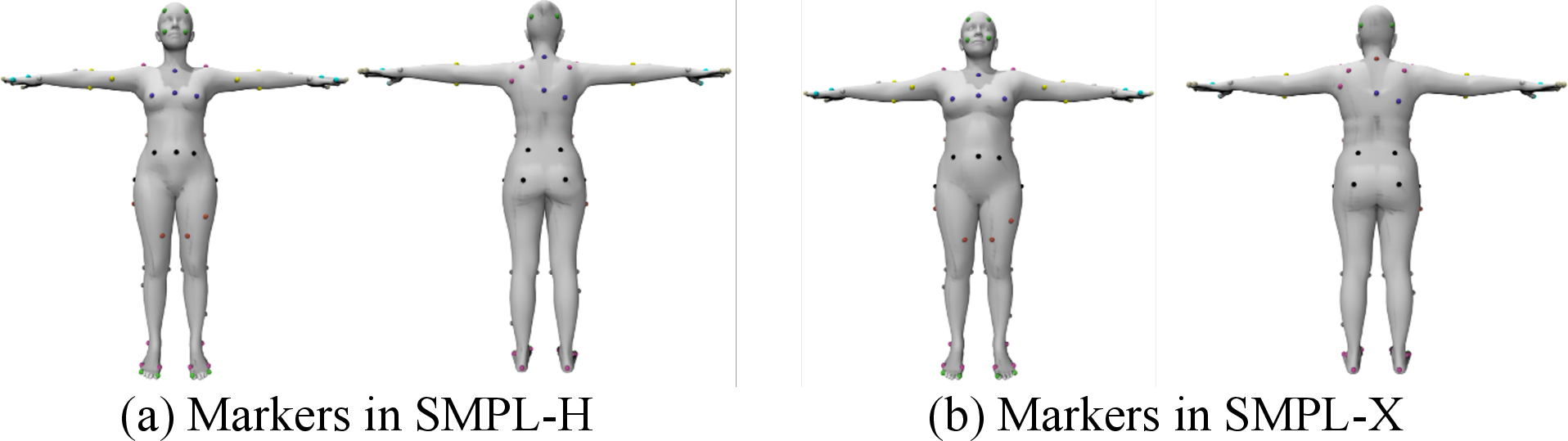

Marker-Based Human Representation

To address inconsistencies between human models (SMPL-H, SMPL-X), the authors employ a marker-based representation, mapping surface vertices across models to achieve sub-centimeter correspondence. The authors argue that markers suit contact modeling better than joint-based or rotation-based representations because they directly encode positions on the body surface, where contact actually occurs.

Figure 2: Marker-based representation for human, illustrating consistent mapping between SMPL-H and SMPL-X models.
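The idea of a model-independent marker set can be illustrated with a minimal sketch. The marker names and vertex indices below are hypothetical and chosen only for illustration; they are not the marker set used by the authors. The point is that once each body model has its own name-to-vertex table, downstream code sees the same ordered list of surface positions regardless of which model produced the mesh.

```python
# Hypothetical marker tables: marker name -> vertex index in each body model.
# The indices are invented for this example and do not match real SMPL-H/SMPL-X meshes.
MARKER_VERTICES = {
    "smplh": {"left_wrist": 2, "right_wrist": 0, "pelvis": 1},
    "smplx": {"left_wrist": 0, "right_wrist": 3, "pelvis": 2},
}


def extract_markers(vertices, model):
    """Return marker positions in a fixed name order, independent of the body model."""
    index_of = MARKER_VERTICES[model]
    # Sorting by marker name gives the same ordering for every model.
    return [vertices[index_of[name]] for name in sorted(index_of)]


# Two toy meshes of the same pose with different vertex layouts.
smplh_vertices = [(0.3, 1.0, 0.0), (0.0, 0.9, 0.0), (-0.3, 1.0, 0.0)]
smplx_vertices = [(-0.3, 1.0, 0.0), (9.0, 9.0, 9.0), (0.0, 0.9, 0.0), (0.3, 1.0, 0.0)]

markers_h = extract_markers(smplh_vertices, "smplh")
markers_x = extract_markers(smplx_vertices, "smplx")
```

In this toy setup both calls return the same three positions, which is the property that lets sequences from different source datasets be trained on together.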

Data Correction and Augmentation

Unified Optimization Framework

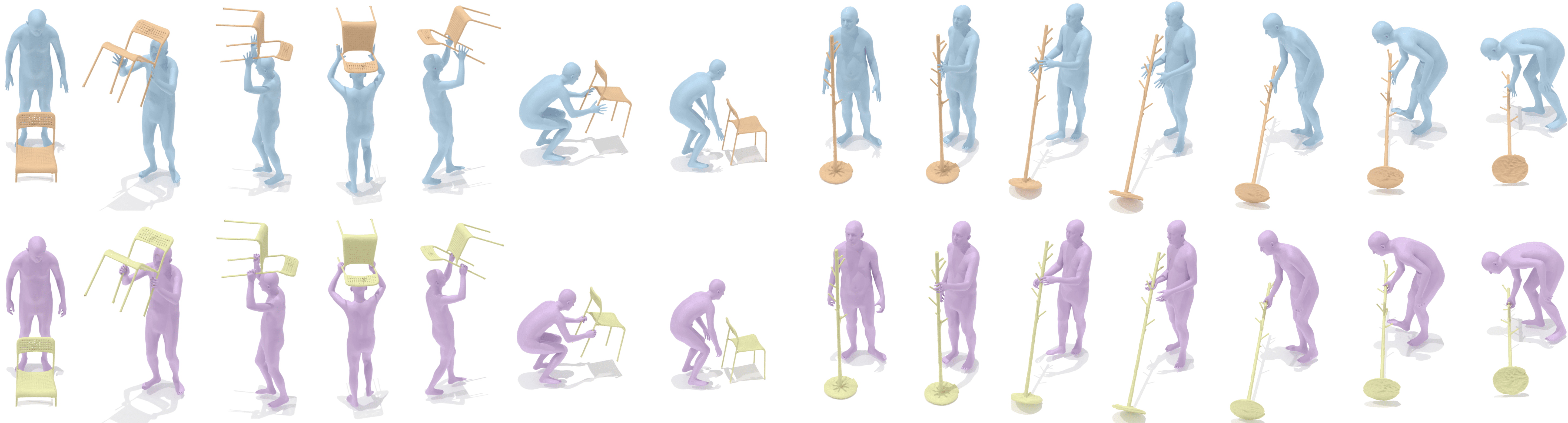

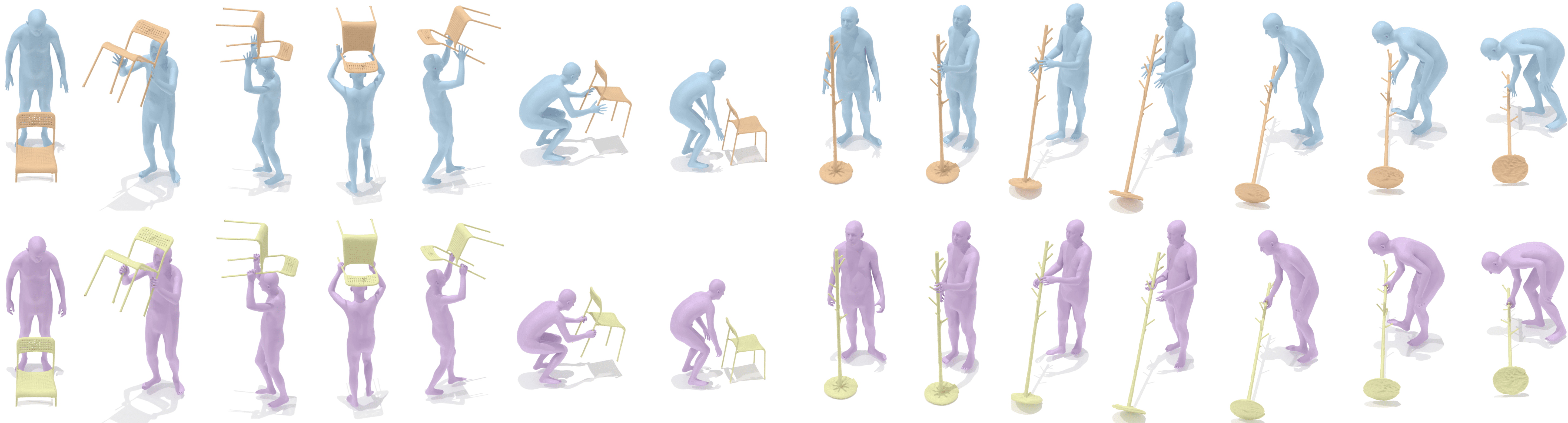

The dataset is refined using a three-stage optimization pipeline: full-body correction, hand correction, and interaction augmentation. Loss functions penalize penetration, promote contact, enforce smoothness, and constrain hand pose realism. The correction process significantly reduces artifacts such as interpenetration and floating contacts, as validated by both quantitative metrics and user studies.

Figure 3: Qualitative evaluation of interaction correction, showing improved hand recovery and contact fidelity compared with the original ground-truth captures.
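The loss terms named in the correction pipeline (penetration, contact, smoothness) can be sketched as follows. This is an illustrative formulation under stated assumptions, not the authors' objective: it assumes a signed distance is available for each marker (negative inside the object), uses a sphere as a stand-in object, picks arbitrary weights, and omits the hand-pose realism term. Plain Python is used for readability; a real pipeline would likely use a differentiable tensor library.

```python
import math


def signed_distance_to_sphere(point, center, radius):
    """Signed distance from a point to a sphere surface (negative inside)."""
    return math.dist(point, center) - radius


def penetration_loss(sdf_values):
    """Penalize markers that lie inside the object (negative signed distance)."""
    return sum(max(0.0, -d) ** 2 for d in sdf_values)


def contact_loss(sdf_values, contact_mask):
    """Pull markers labeled as in contact onto the surface (distance toward zero)."""
    return sum(d ** 2 for d, c in zip(sdf_values, contact_mask) if c)


def smoothness_loss(trajectory):
    """Penalize acceleration of one marker via second finite differences over frames."""
    total = 0.0
    for prev, cur, nxt in zip(trajectory, trajectory[1:], trajectory[2:]):
        total += sum((p - 2.0 * c + n) ** 2 for p, c, n in zip(prev, cur, nxt))
    return total


def total_loss(sdf_values, contact_mask, trajectory,
               w_pen=1.0, w_con=1.0, w_smooth=0.1):
    """Weighted sum of the three terms; the weights are assumed values."""
    return (w_pen * penetration_loss(sdf_values)
            + w_con * contact_loss(sdf_values, contact_mask)
            + w_smooth * smoothness_loss(trajectory))
```

The penetration term is one-sided (only negative distances contribute), while the contact term is two-sided but applied only to markers flagged as contacting, so the two terms do not fight each other on markers that are simply far from the object.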

Leveraging the principle of contact invariance, the authors generate synthetic data by varying human motion while maintaining object contact, expanding the dataset without additional MoCap acquisition. Augmentation is performed via object displacement, interaction alignment, and filtering, resulting in high-quality synthetic sequences.

Figure 4: Qualitative evaluation of interaction augmentation, demonstrating diverse synthetic interactions with consistent contact.
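One way to read the contact-invariance principle is that a contacting marker keeps the same position in the object's local frame when the object is displaced. The sketch below illustrates only that geometric step, under simplifying assumptions that are not from the paper: the object pose is a translation plus a yaw rotation about the z axis, and the function names are hypothetical. The subsequent alignment of the full body to these targets, and the filtering step, are not shown.

```python
import math


def to_object_frame(point, obj_pos, obj_yaw):
    """Express a world-space point in the object's local frame (yaw about z only)."""
    dx, dy, dz = (p - o for p, o in zip(point, obj_pos))
    c, s = math.cos(-obj_yaw), math.sin(-obj_yaw)
    return (c * dx - s * dy, s * dx + c * dy, dz)


def to_world_frame(local, obj_pos, obj_yaw):
    """Map a point from the object's local frame back to world space."""
    c, s = math.cos(obj_yaw), math.sin(obj_yaw)
    x, y, z = local
    return (c * x - s * y + obj_pos[0], s * x + c * y + obj_pos[1], z + obj_pos[2])


def contact_targets_after_displacement(contact_markers, old_pose, new_pose):
    """World-space targets for contacting markers after the object moves to new_pose.

    Each pose is a (position, yaw) pair. The local-frame offset of every
    contacting marker is preserved, which is the invariance being illustrated.
    """
    return [to_world_frame(to_object_frame(m, *old_pose), *new_pose)
            for m in contact_markers]
```

For example, a marker at (1, 0, 1) touching an object at the origin maps to approximately (2, 1, 1) when the object is moved to (2, 0, 0) and rotated by 90 degrees about z.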

Benchmark Tasks and Unified Modeling

Six HOI generation tasks are defined:

- Text-to-Interaction: Generate interaction sequences from textual descriptions.

- Action-to-Interaction: Generate interactions from action labels.

- Object-to-Human: Generate human motion conditioned on object trajectories.

- Human-to-Object: Generate object motion conditioned on human motion.

- Interaction Prediction: Predict future interactions from past sequences.

- Interaction Imitation: Learn physics-based control policies to reproduce interactions in simulation.

A unified multi-task learning framework is proposed, incorporating a contact feature vector η that encodes spatial relationships between human markers and object surfaces. This enables joint modeling of motion and contact, improving generalization and performance across tasks.
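The summary does not spell out how η is computed, so the following is only a plausible sketch of a contact feature: for each human marker, the distance to the nearest sampled object surface point, together with a binary contact flag. The function name, the 2 cm threshold, and the brute-force nearest-point search are assumptions made for illustration.

```python
import math


def contact_features(markers, object_points, threshold=0.02):
    """Per-marker (nearest distance, in-contact flag) relative to sampled object points.

    `threshold` is an assumed contact distance in meters.
    """
    features = []
    for marker in markers:
        # Brute-force nearest neighbor; a spatial index would be used at scale.
        nearest = min(math.dist(marker, p) for p in object_points)
        features.append((nearest, nearest < threshold))
    return features


markers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
object_points = [(0.0, 0.0, 0.01), (0.0, 0.0, 1.0)]
eta = contact_features(markers, object_points)
```

Predicting such a feature alongside the motion gives the model an explicit training signal about where the body touches the object, rather than leaving contact implicit in the poses.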

Experimental Results

Correction and Augmentation

Quantitative evaluation demonstrates that correction reduces penetration depth and increases contact ratio, with user studies confirming improved realism. Augmented data maintains comparable quality to corrected data.

Language-Conditioned HOI Generation

The proposed model combines interaction-aware text and object encoders, a Basis Point Set (BPS) object representation, and multi-task contact modeling. It achieves better FID, R-Precision, and diversity than baselines such as HOI-Diff. Ablation studies indicate that the marker-based representation helps reduce artifacts.
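For readers unfamiliar with BPS, the encoding can be sketched in a few lines: a fixed set of basis points is sampled once, and any object point cloud is described by the distance from each basis point to its nearest object point. The basis count, ball radius, and seed below are assumed values, and the sketch is a generic BPS illustration rather than the configuration used in the paper.

```python
import math
import random


def sample_basis_points(n, radius=1.0, seed=0):
    """Sample n fixed basis points uniformly inside a ball via rejection sampling."""
    rng = random.Random(seed)
    points = []
    while len(points) < n:
        p = tuple(rng.uniform(-radius, radius) for _ in range(3))
        if math.dist(p, (0.0, 0.0, 0.0)) <= radius:
            points.append(p)
    return points


def bps_encode(object_points, basis_points):
    """Fixed-length descriptor: distance from each basis point to the nearest object point."""
    return [min(math.dist(b, p) for p in object_points) for b in basis_points]
```

Because the basis is fixed, the descriptor length depends only on the number of basis points and not on how many points the object has, which makes it convenient as a conditioning input for objects of varying resolution.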

Object-Conditioned and Human-Conditioned Generation

Single-stage multi-task models outperform two-stage baselines (e.g., OMOMO) in MPMPE, contact precision, recall, and F1 score, especially for whole-body interactions and novel objects.
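The metrics in this comparison can be sketched as follows, assuming MPMPE denotes mean per-marker position error (by analogy with the common MPJPE) and that contact is evaluated as a per-marker binary label. The exact averaging and thresholding conventions used in the paper may differ.

```python
import math


def mpmpe(pred, target):
    """Mean per-marker position error, averaged over all frames and markers.

    `pred` and `target` are lists of frames; each frame is a list of 3D points.
    """
    errors = [math.dist(p, t)
              for pred_frame, target_frame in zip(pred, target)
              for p, t in zip(pred_frame, target_frame)]
    return sum(errors) / len(errors)


def contact_prf(pred_contact, true_contact):
    """Precision, recall, and F1 for binary contact labels."""
    pairs = list(zip(pred_contact, true_contact))
    tp = sum(1 for p, t in pairs if p and t)
    fp = sum(1 for p, t in pairs if p and not t)
    fn = sum(1 for p, t in pairs if not p and t)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1
```

Reporting precision and recall separately is useful here: a model that predicts contact everywhere would score high recall but low precision, while a model that rarely commits to contact would show the opposite pattern.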

Interaction Prediction

Scaling the model and training data size yields improved MPMPE and object motion accuracy, demonstrating the benefits of large-scale data for generalization.

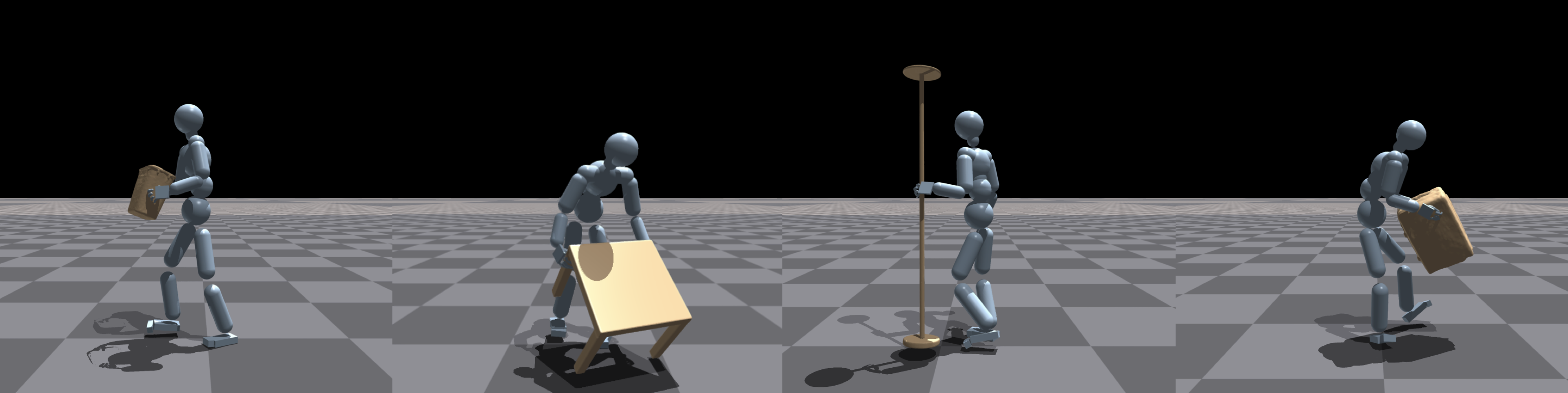

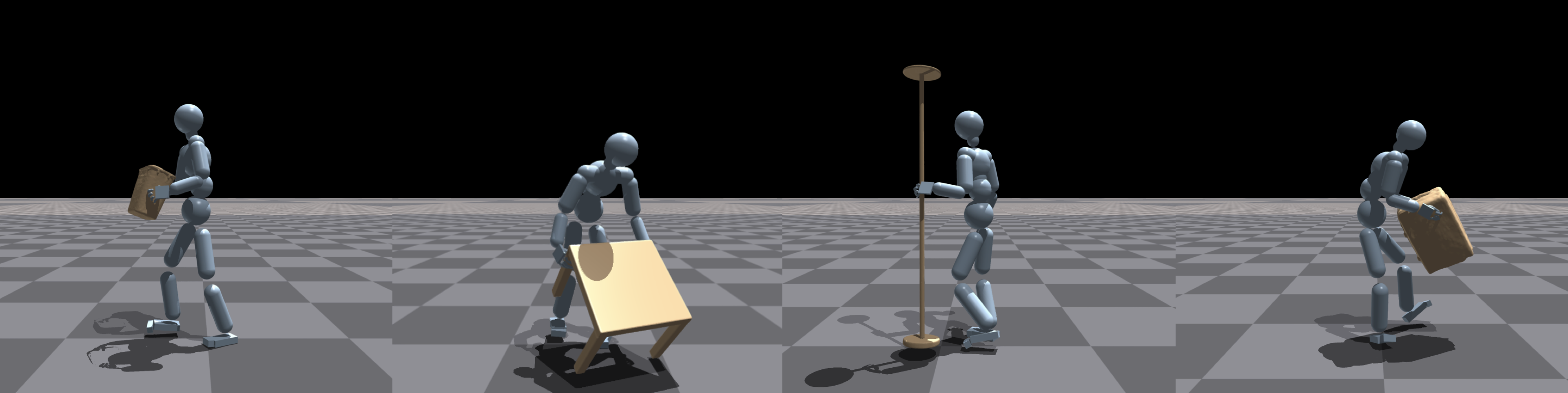

Physics-Based Imitation

Using PhysHOI, imitation policies trained on corrected data achieve a success rate of 90.7%, compared to 84.4% for raw data, indicating that correction enhances downstream control performance.

Figure 5: Qualitative results of successful imitation of corrected data using PhysHOI, demonstrating high-fidelity reproduction in simulation.

Implementation Considerations

- Computational Requirements: Training multi-task transformer-based diffusion models on InterAct requires significant GPU resources (e.g., NVIDIA A40), with training times ranging from hours to days depending on the task.

- Data Licensing: The dataset is released under CC BY-NC-SA, with sub-datasets subject to their original licenses.

- Deployment: The marker-based representation and unified modeling framework facilitate integration into downstream applications such as embodied agents, animation pipelines, and simulation environments.

Limitations and Future Directions

While InterAct substantially increases the scale and diversity of HOI data, coverage of in-the-wild object categories remains limited. Correction and augmentation are constrained by the quality of original data and hyperparameter choices. Future work should focus on expanding object diversity, improving correction robustness, and exploring more expressive generative models.

Conclusion

InterAct provides a large-scale, versatile benchmark for 3D human-object interaction generation. Through unified data consolidation, annotation, correction, and augmentation, it enables robust benchmarking and model development across six HOI tasks. The marker-based representation and multi-task learning framework outperform the compared baselines and support both kinematic and physics-based approaches. The dataset is publicly available and maintained, which should support further research in embodied AI, robotics, and animation.