- The paper presents SMapper, a compact open-hardware platform integrating LiDAR, cameras, and IMUs to overcome limitations in traditional SLAM datasets.

- It details a robust calibration and synchronization pipeline achieving sub-millisecond alignment and reprojection errors below 0.7 pixels.

- Benchmarking on the SMapper-light dataset demonstrates the platform’s utility for enhancing dense mapping and semantic scene understanding in varied environments.

Introduction and Motivation

The SMapper platform addresses persistent limitations in SLAM research related to dataset diversity, sensor modality coverage, and reproducibility of hardware setups. Existing datasets such as KITTI, EuRoC, and TUM RGB-D have advanced SLAM algorithm development but are constrained by fixed sensor configurations, limited environmental variety, and lack of open hardware specifications. SMapper is introduced as a compact, open-hardware, multi-sensor device integrating synchronized LiDAR, multi-camera, and inertial sensing, with a robust calibration and synchronization pipeline. The platform is designed for both handheld and robot-mounted use, facilitating reproducible data collection and extensibility for diverse SLAM scenarios.

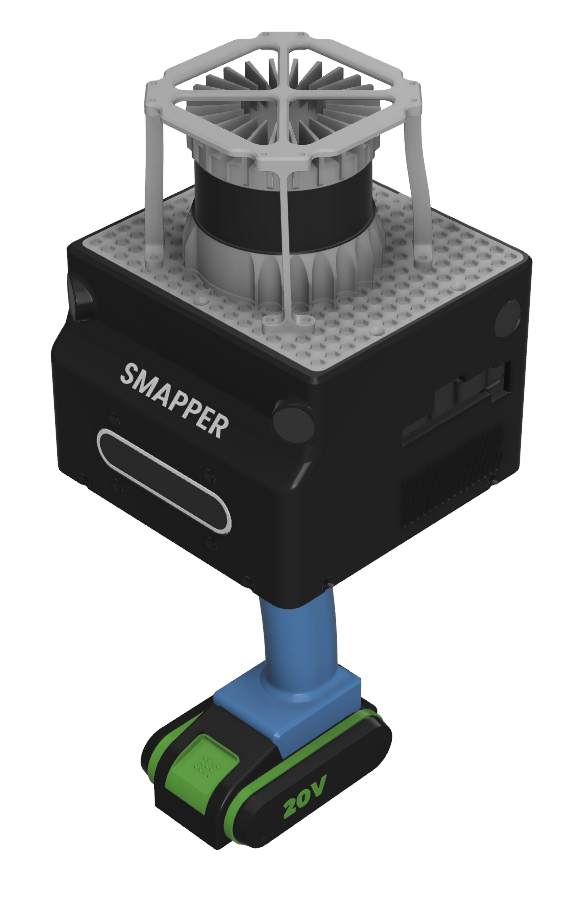

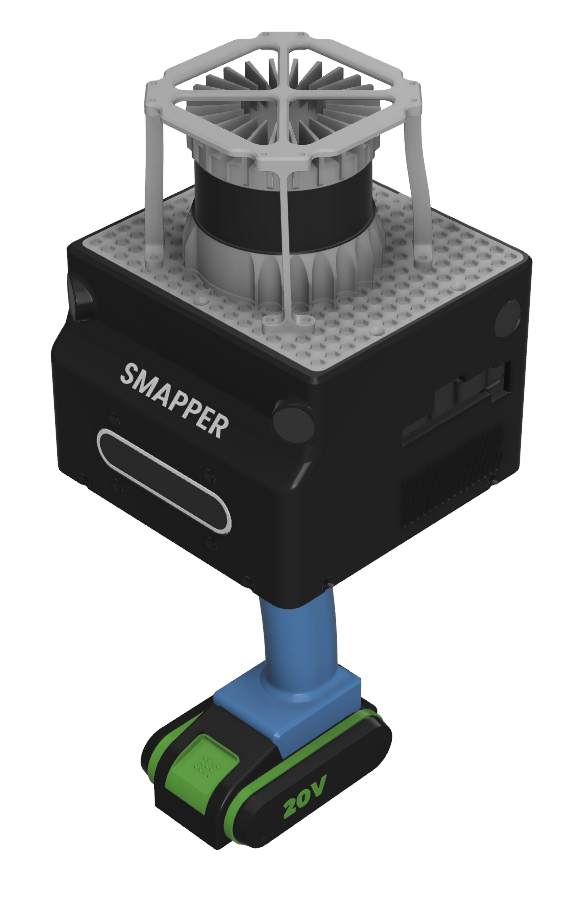

Figure 1: The SMapper platform, from CAD rendering of the final design (left) to the fully assembled physical prototype (right).

System Architecture and Sensor Integration

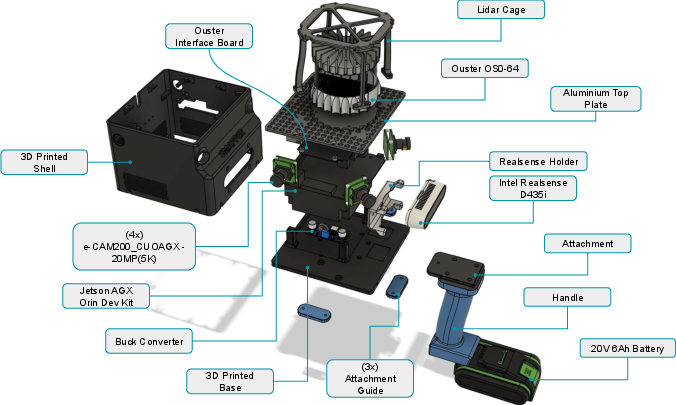

SMapper's modular structure supports rapid reconfiguration between handheld and robot-mounted modes. The device houses a 64-beam Ouster OS0 3D LiDAR, four synchronized e-CAM200 rolling-shutter RGB cameras, an Intel RealSense D435i RGB-D camera, and two IMUs (one integrated in the LiDAR, one in the camera). The onboard NVIDIA Jetson AGX Orin Developer Kit provides real-time data acquisition, synchronization, and processing capabilities, leveraging a 2048-core GPU and 12-core ARM CPU.

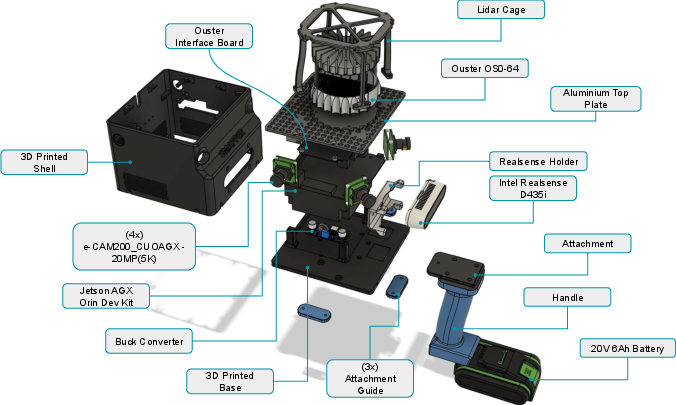

Figure 2: Overview of the SMapper device, depicting its components, sensors, and parts, designed for data collection in various SLAM scenarios.

The sensor configuration yields a wide, overlapping field of view (up to 270°×66°), supporting multi-view geometry and depth-based perception. The LiDAR offers a 100 m range and 360°×90° coverage, while the camera array enables robust visual SLAM and multi-modal fusion.

Synchronization and Calibration Pipeline

Temporal synchronization is achieved via selectable timestamping modes: ROS system clock, hardware timestamp system counter (TSC), and Precision Time Protocol (PTP). PTP mode enables sub-millisecond alignment across modalities, critical for high-fidelity sensor fusion in dynamic environments. SMapper prioritizes accurately timestamped raw sensor streams, maximizing compatibility with modern SLAM frameworks and minimizing storage overhead.
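To make the sub-millisecond alignment requirement concrete, the following minimal sketch pairs each timestamp in one stream with its nearest neighbour in another stream and keeps only pairs within a 1 ms tolerance. It is an illustration only, not part of the SMapper software: the function name `match_nearest`, the tolerance parameter, and the sample timestamps are all assumptions.

```python
from bisect import bisect_left


def match_nearest(ref_stamps, other_stamps, tolerance_s=1e-3):
    """Pair each reference timestamp with the nearest one in another stream.

    Both lists hold timestamps in seconds and must be sorted ascending.
    Pairs further apart than tolerance_s are discarded.
    Returns a list of (ref_index, other_index, offset_s) tuples.
    """
    pairs = []
    for i, t in enumerate(ref_stamps):
        k = bisect_left(other_stamps, t)
        # The nearest stamp is one of the two neighbours of the insertion point.
        candidates = [j for j in (k - 1, k) if 0 <= j < len(other_stamps)]
        if not candidates:
            continue
        j = min(candidates, key=lambda c: abs(other_stamps[c] - t))
        offset = other_stamps[j] - t
        if abs(offset) <= tolerance_s:
            pairs.append((i, j, offset))
    return pairs


# Hypothetical timestamps (seconds) for a LiDAR stream and a camera stream.
lidar = [0.0, 0.1, 0.2]
camera = [0.0004, 0.0337, 0.0671, 0.1003, 0.1338, 0.2021]

matches = match_nearest(lidar, camera)
matched_indices = [(i, j) for i, j, _ in matches]
print(matched_indices)
```

In this made-up example the third LiDAR scan has no camera frame within 1 ms, so it is dropped. A consumer could instead interpolate or widen the tolerance, depending on how dynamic the scene is.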

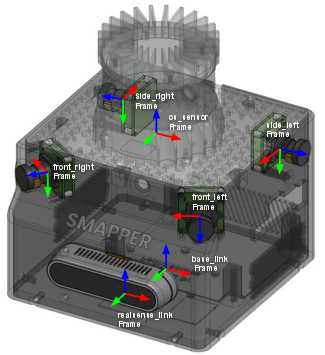

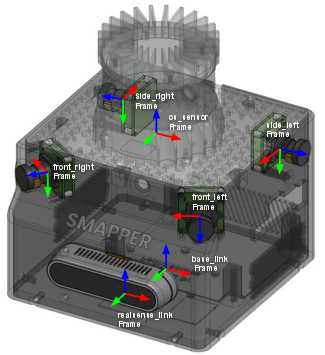

Spatial calibration is performed using an IMU-centric pipeline based on the Kalibr toolbox, with automation provided by the smapper_toolbox. Calibration involves IMU noise characterization, camera intrinsic/extrinsic estimation using AprilTag grids, and assembly of a unified transformation tree. Quantitative validation yields mean reprojection errors below 0.7 pixels for all cameras, and extrinsic parameter deviations from CAD models are within 3.5 cm and 2°.

Figure 3: Coordinate frames of the SMapper device, containing the spatial configuration of the cameras, LiDAR, and IMU sensors.
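The comparison between calibrated extrinsics and CAD values mentioned above can be sketched as follows. This is a hedged illustration, not the Kalibr or smapper_toolbox implementation: the helper names, the choice of the geodesic angle as the rotation deviation, and all numeric values are assumptions made for the example.

```python
import math


def rot_z(deg):
    """3x3 rotation matrix for a rotation of `deg` degrees about the z axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul3(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose3(A):
    """Transpose of a 3x3 matrix stored as nested lists."""
    return [[A[j][i] for j in range(3)] for i in range(3)]


def extrinsic_deviation(R_cad, t_cad, R_cal, t_cal):
    """Return (translation deviation in metres, rotation deviation in degrees)."""
    dt = math.dist(t_cad, t_cal)
    # Relative rotation between the two estimates; its angle is the deviation.
    R_delta = matmul3(transpose3(R_cad), R_cal)
    trace = R_delta[0][0] + R_delta[1][1] + R_delta[2][2]
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return dt, math.degrees(math.acos(cos_angle))


# Hypothetical camera-to-IMU extrinsics: nominal CAD values vs. calibration output.
R_cad, t_cad = rot_z(45.0), (0.10, 0.05, 0.02)
R_cal, t_cal = rot_z(46.5), (0.11, 0.06, 0.02)

dt_m, dangle_deg = extrinsic_deviation(R_cad, t_cad, R_cal, t_cal)
within_bounds = dt_m <= 0.035 and dangle_deg <= 2.0
print(f"translation: {dt_m * 100:.2f} cm, rotation: {dangle_deg:.2f} deg")
```

Here the thresholds 0.035 m and 2° mirror the deviation bounds reported above. A deviation well outside such bounds would usually point to a calibration failure rather than a manufacturing tolerance.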

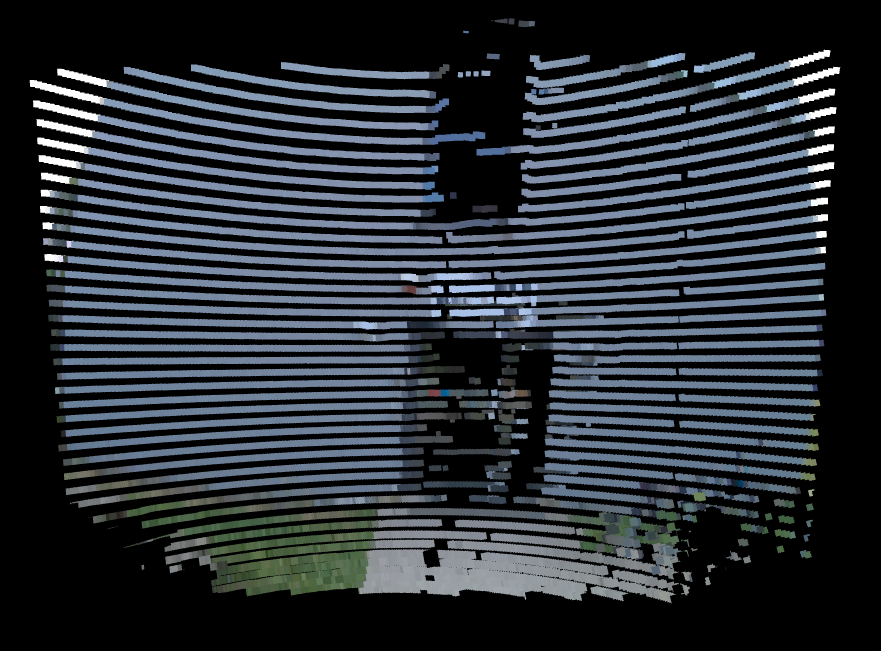

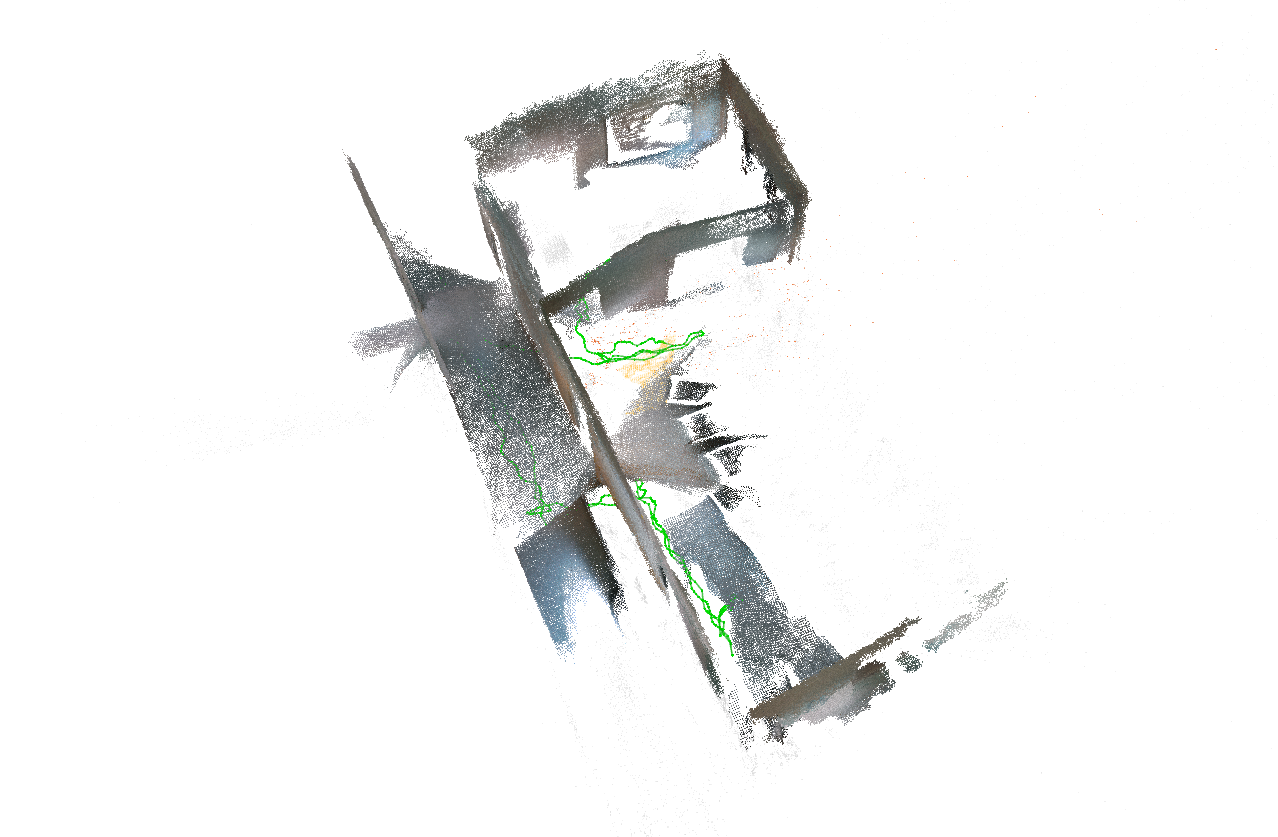

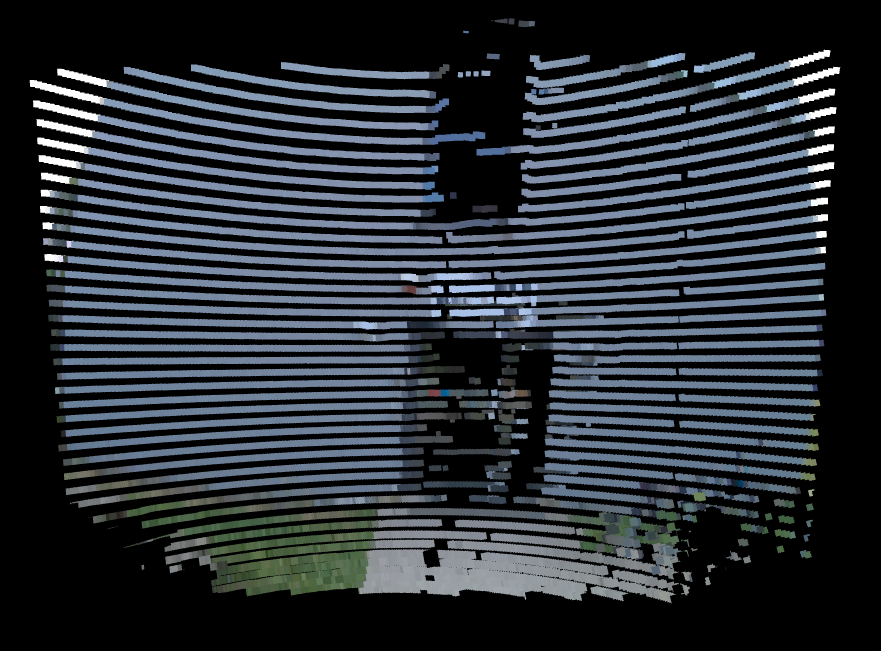

Qualitative validation via point cloud colorization demonstrates strong geometric-visual alignment, with minor residual calibration errors at structural edges.

Figure 4: Qualitative calibration validation using point cloud colorization with the front-right camera. (a) raw camera image; (b) LiDAR point cloud colored by the projected image. While the alignment is generally consistent, minor misalignments are visible at building edges, reflecting residual calibration errors.
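Point cloud colorization of this kind amounts to transforming each LiDAR point into the camera frame, projecting it with the camera intrinsics, and reading the pixel it lands on. The sketch below assumes an ideal pinhole camera with no lens distortion, no rolling-shutter compensation, and no occlusion handling. The function name, parameters, and toy data are hypothetical and not taken from the SMapper code.

```python
def colorize_points(points_lidar, R, t, fx, fy, cx, cy, image):
    """Project LiDAR points into a pinhole camera and look up pixel colours.

    R (3x3 nested list) and t (length-3 sequence) map LiDAR coordinates into
    the camera frame. `image` is a list of rows, each a list of (r, g, b).
    Returns a list of (point, colour) pairs for points that land in the image.
    """
    height, width = len(image), len(image[0])
    colored = []
    for p in points_lidar:
        # Rigid transform from the LiDAR frame into the camera frame.
        x, y, z = (sum(R[i][k] * p[k] for k in range(3)) + t[i]
                   for i in range(3))
        if z <= 0.0:
            continue  # point lies behind the camera
        # Pinhole projection to pixel coordinates.
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            colored.append((p, image[v][u]))
    return colored


# Toy 4x3 image in which every pixel encodes its own (column, row) position.
image = [[(u, v, 0) for u in range(4)] for v in range(3)]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (1.0, 0.0, 1.0)]

colored = colorize_points(points, identity, (0.0, 0.0, 0.0),
                          fx=100.0, fy=100.0, cx=2.0, cy=1.0, image=image)
print(colored)
```

Because the projection depends directly on `R` and `t`, small extrinsic errors shift colours by a few pixels. This is most visible at depth discontinuities such as the building edges noted in Figure 4.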

Dataset Collection: SMapper-light

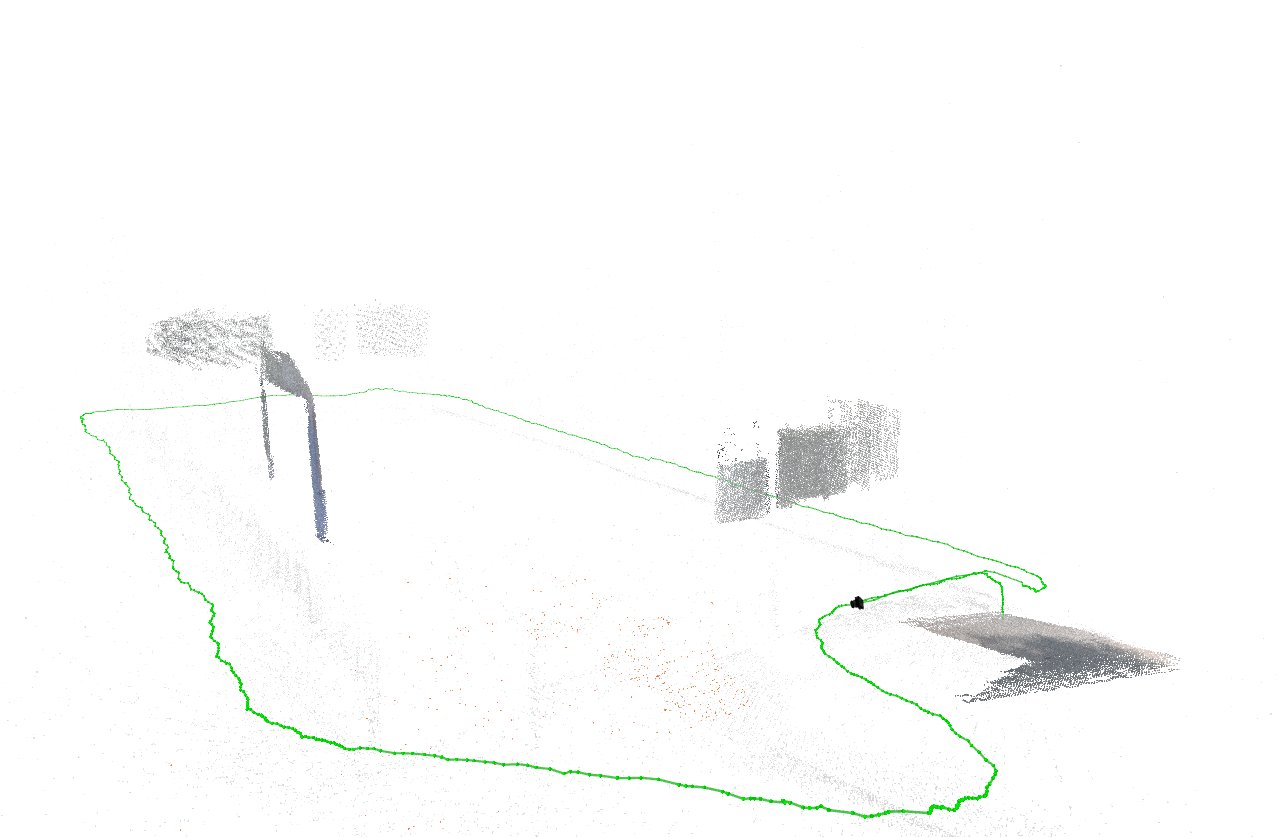

To demonstrate SMapper's utility, the SMapper-light dataset is released, comprising six representative indoor and outdoor sequences (totaling 35 minutes and 164 GB). Data are stored as synchronized ROS bag files in .mcap format, including all sensor streams. Ground-truth trajectories are generated via offline LiDAR-based SLAM, achieving centimeter-level accuracy (<3 cm RMSE), and dense 3D reconstructions are provided.
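As a reminder of what an RMSE figure on a trajectory measures, the sketch below computes the root-mean-square translational error between an estimated and a reference trajectory. It assumes the two trajectories are already time-associated and expressed in the same frame, and it skips the alignment step that a full evaluation would include. The function name and sample positions are hypothetical.

```python
import math


def translation_rmse(estimated, reference):
    """Root-mean-square of per-pose Euclidean position errors, in metres.

    `estimated` and `reference` are equally long sequences of (x, y, z)
    positions that are already time-associated and frame-aligned.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and equally long")
    squared = [math.dist(e, r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical positions in metres: each estimate is off by 2 cm.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
estimated = [(0.0, 0.02, 0.0), (1.0, -0.02, 0.0), (2.0, 0.0, 0.02)]

rmse_m = translation_rmse(estimated, reference)
print(f"RMSE: {rmse_m * 100:.1f} cm")
```

Because the errors are squared before averaging, a few large deviations dominate the result, so a bound such as <3 cm RMSE constrains outliers more strongly than a mean error would.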

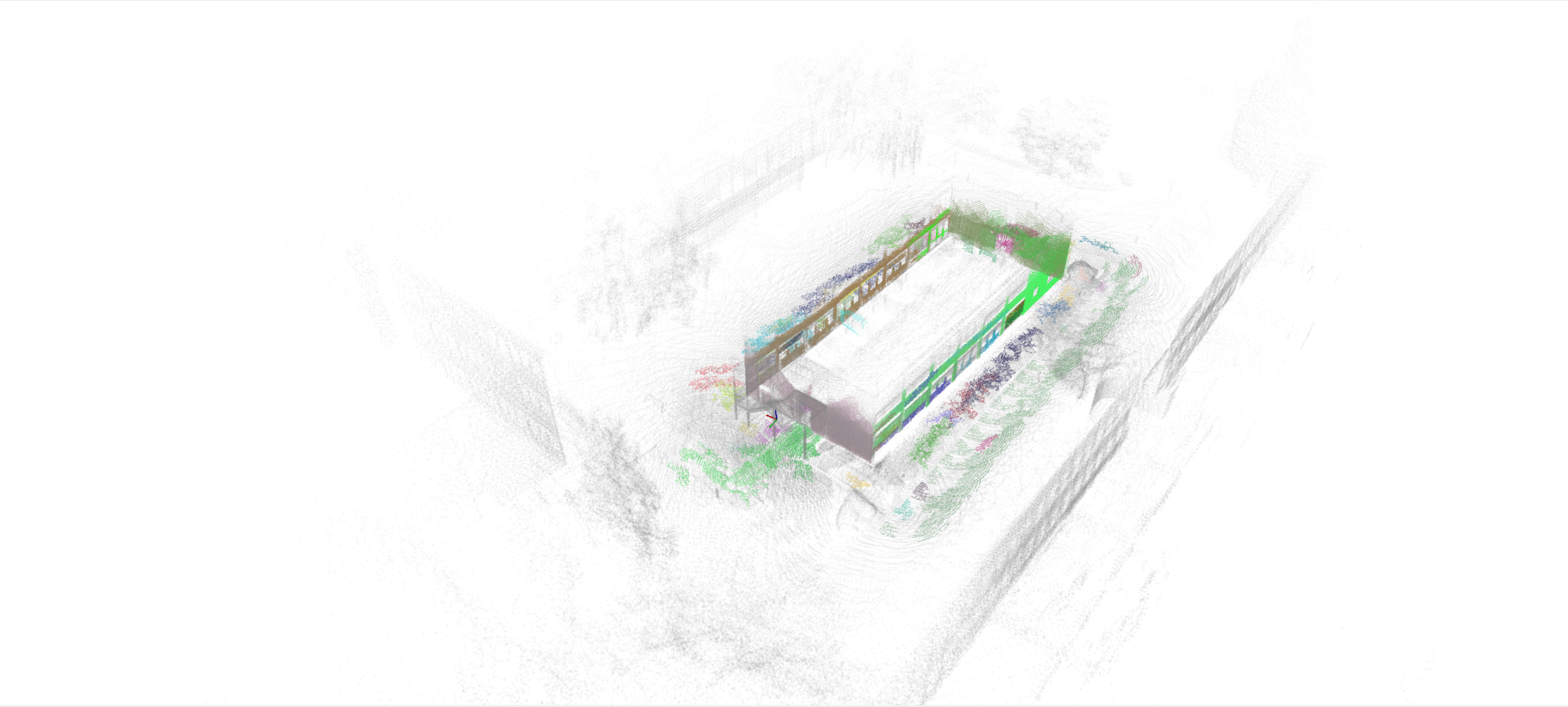

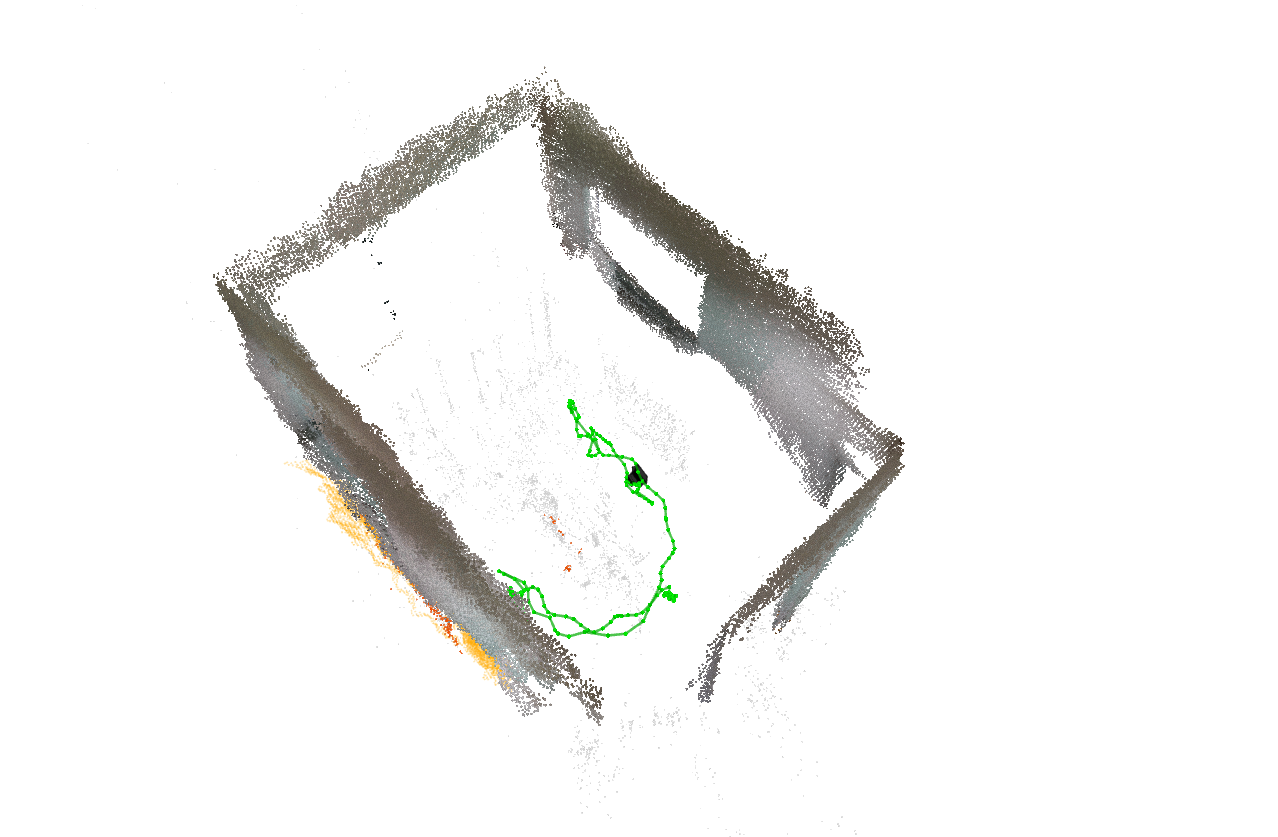

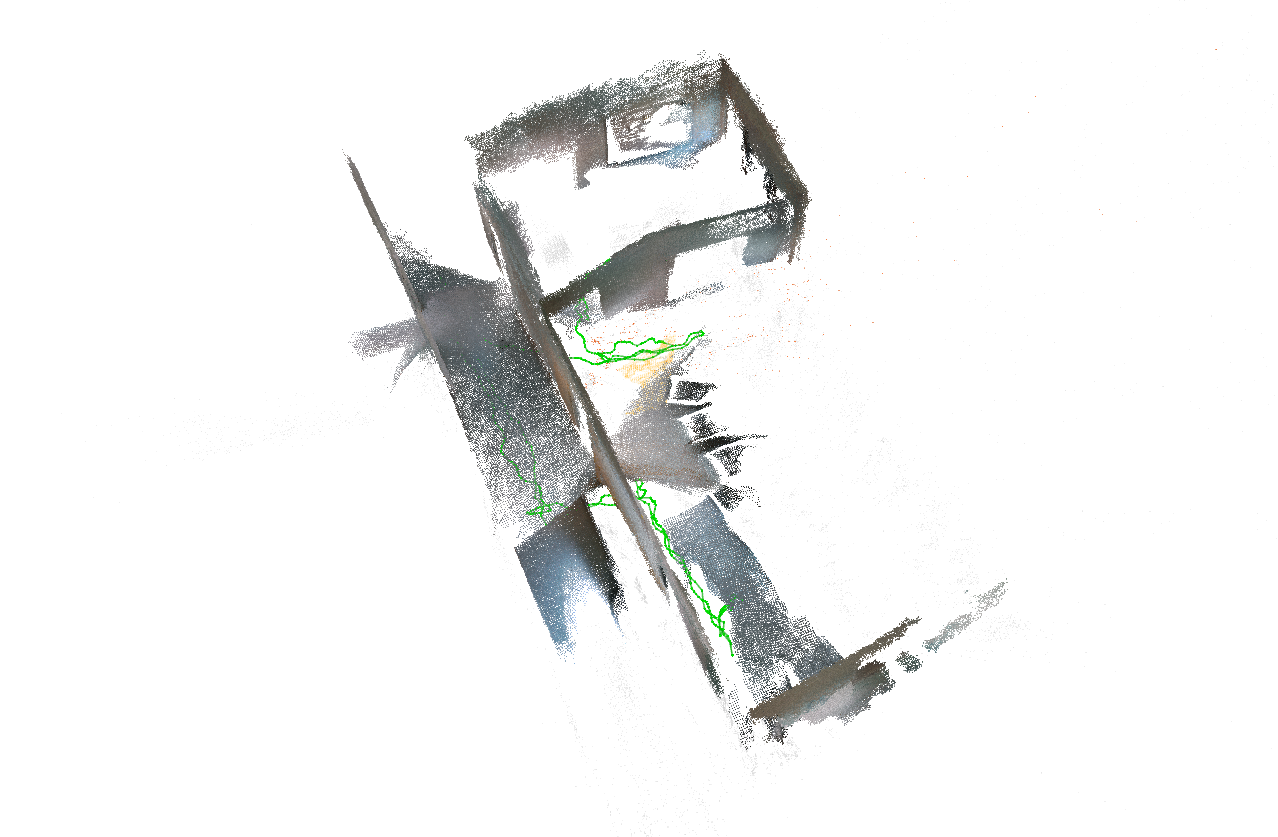

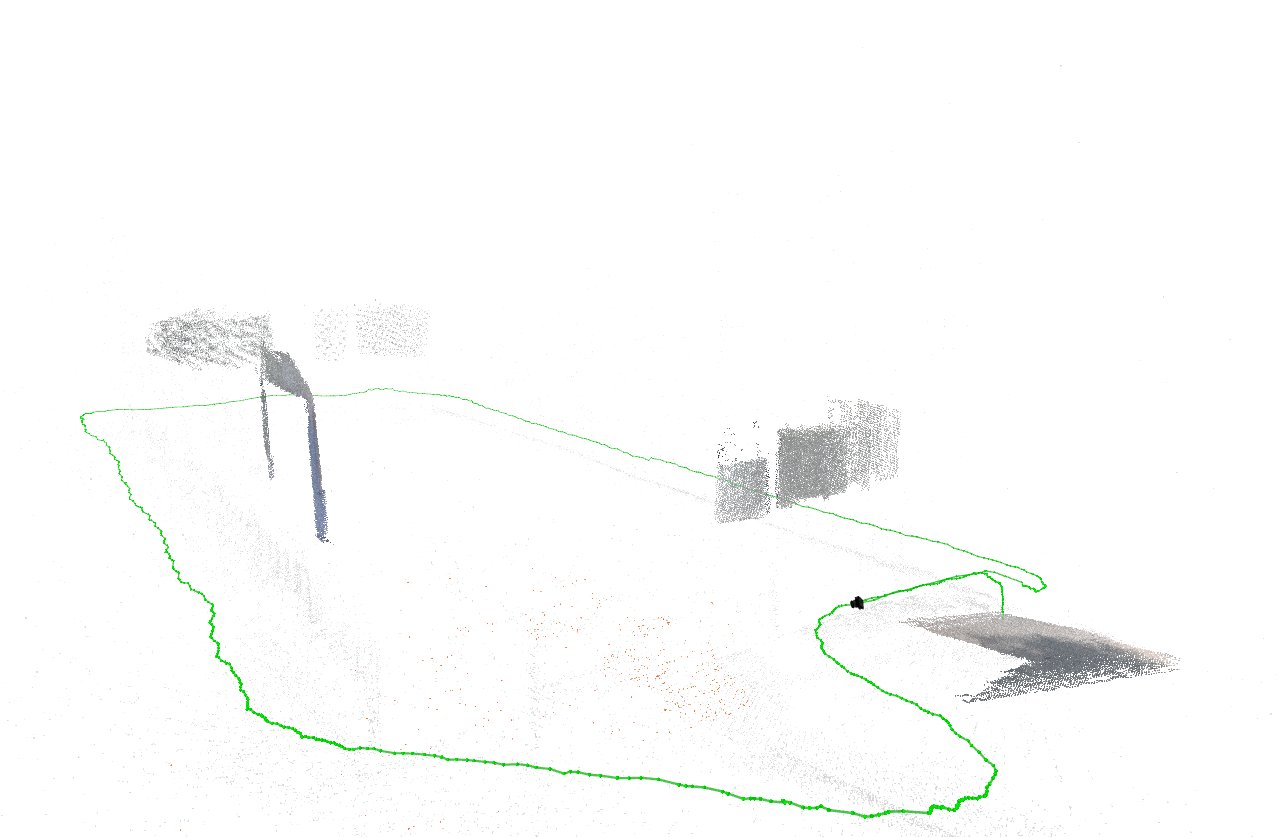

Figure 5: Sample instances of SMapper-light dataset scenarios.

The dataset covers confined single-room, multi-room, and large-scale indoor environments, as well as urban campus outdoor paths. Manual handheld operation introduces realistic motion dynamics and sensor perturbations, enhancing the dataset's relevance for benchmarking robustness and generalization.

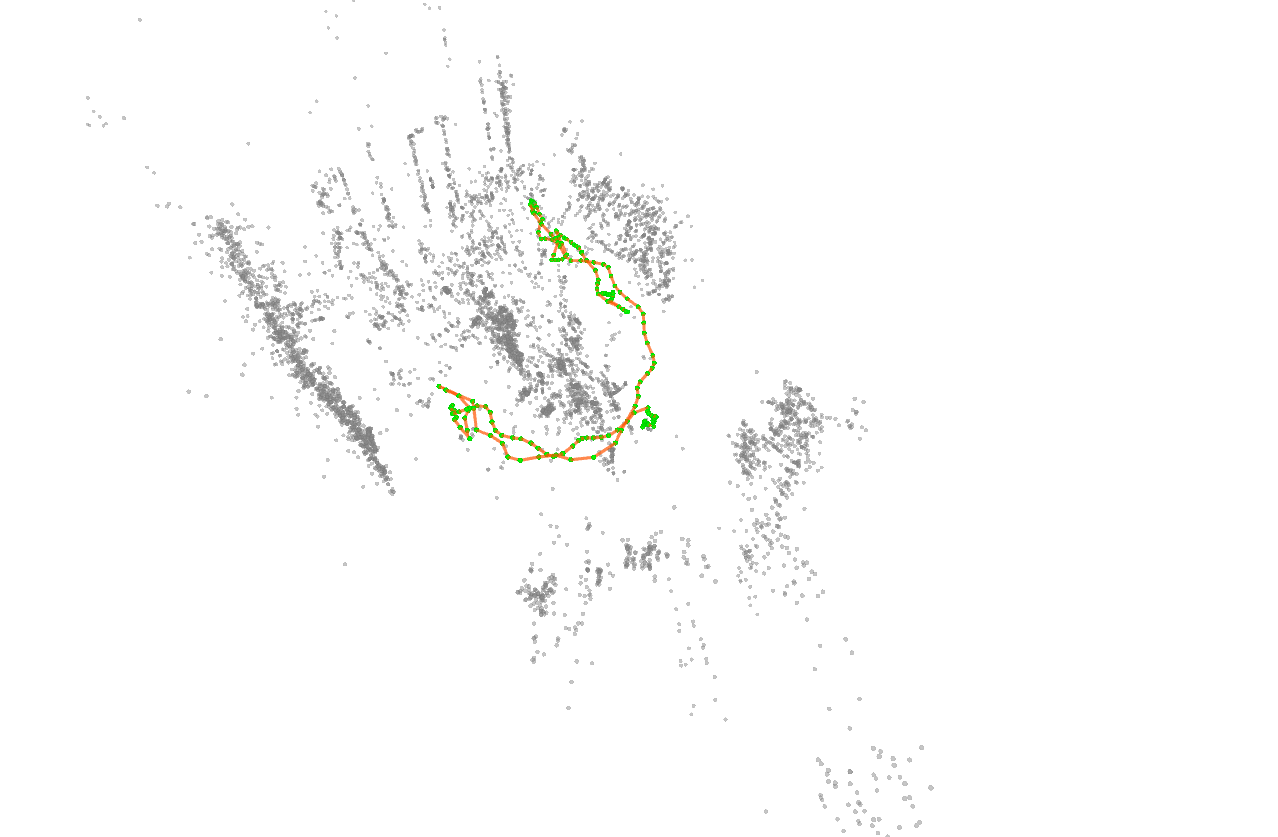

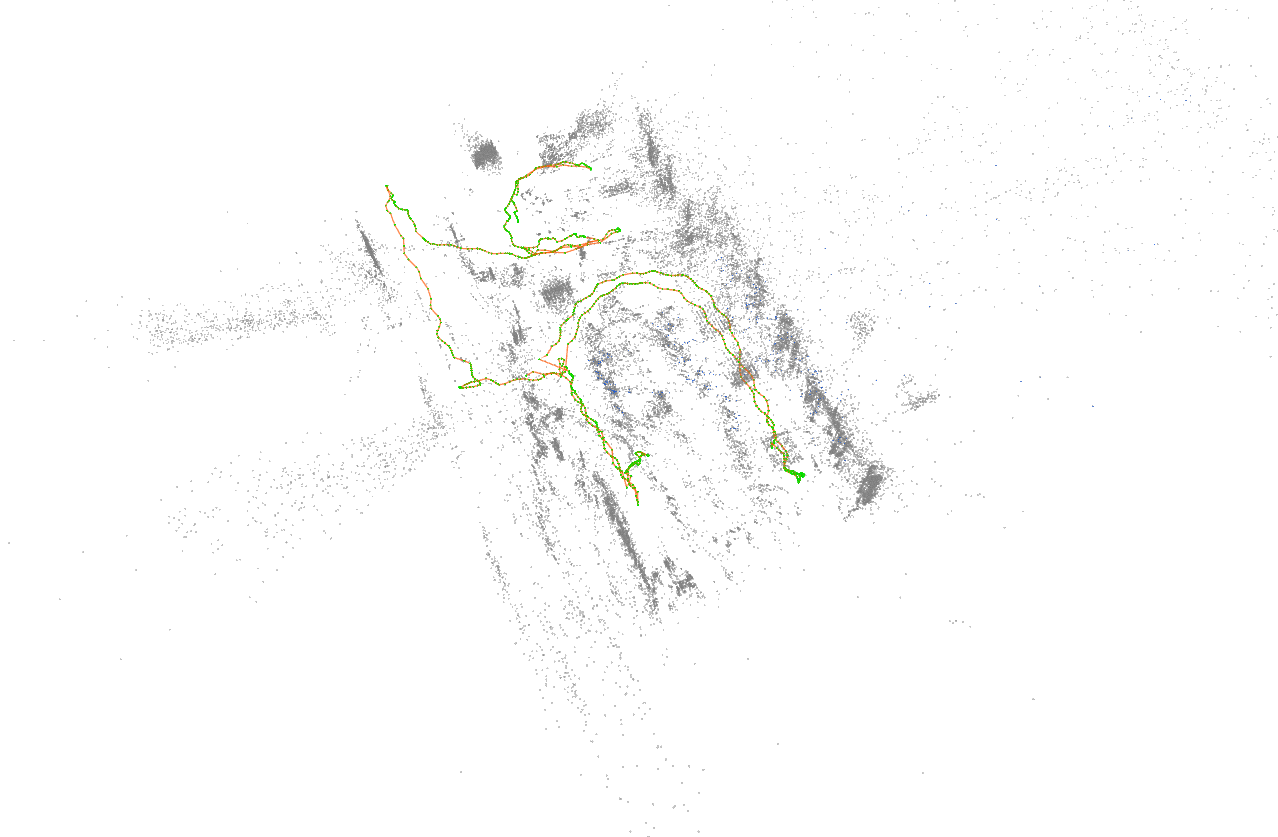

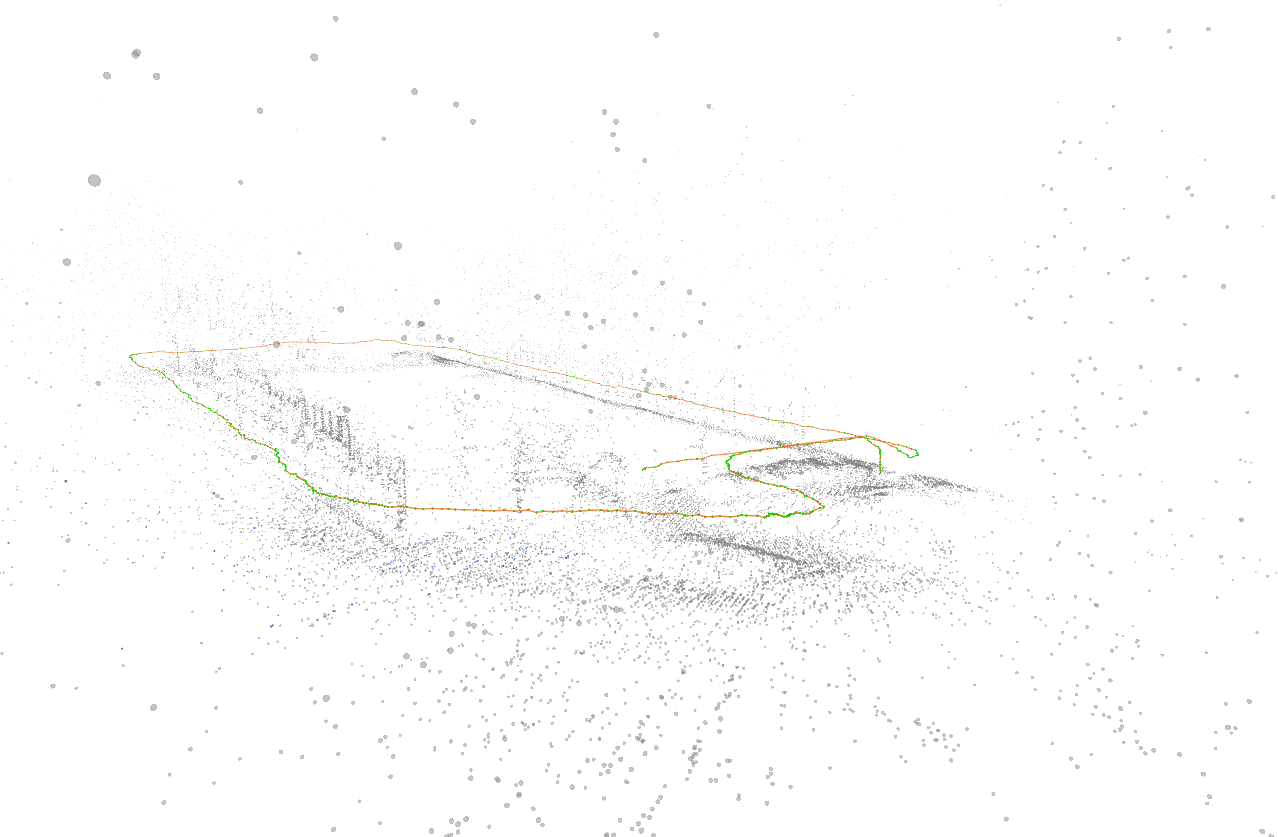

Benchmarking and Experimental Evaluation

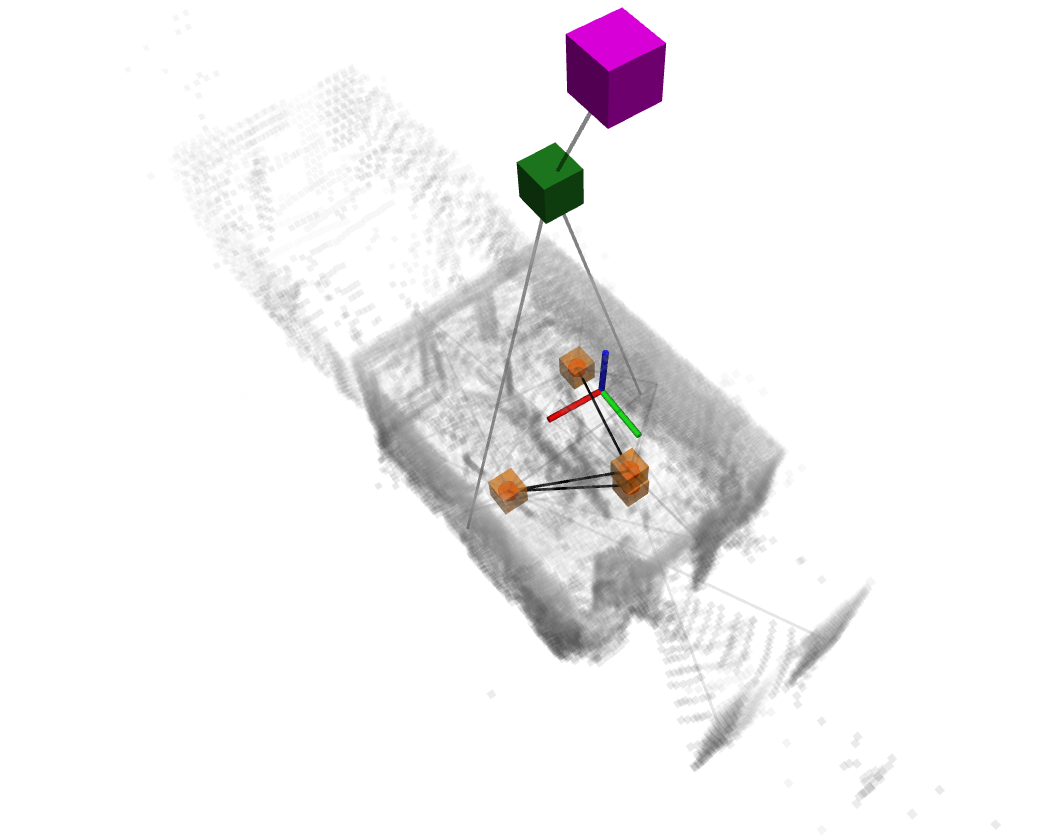

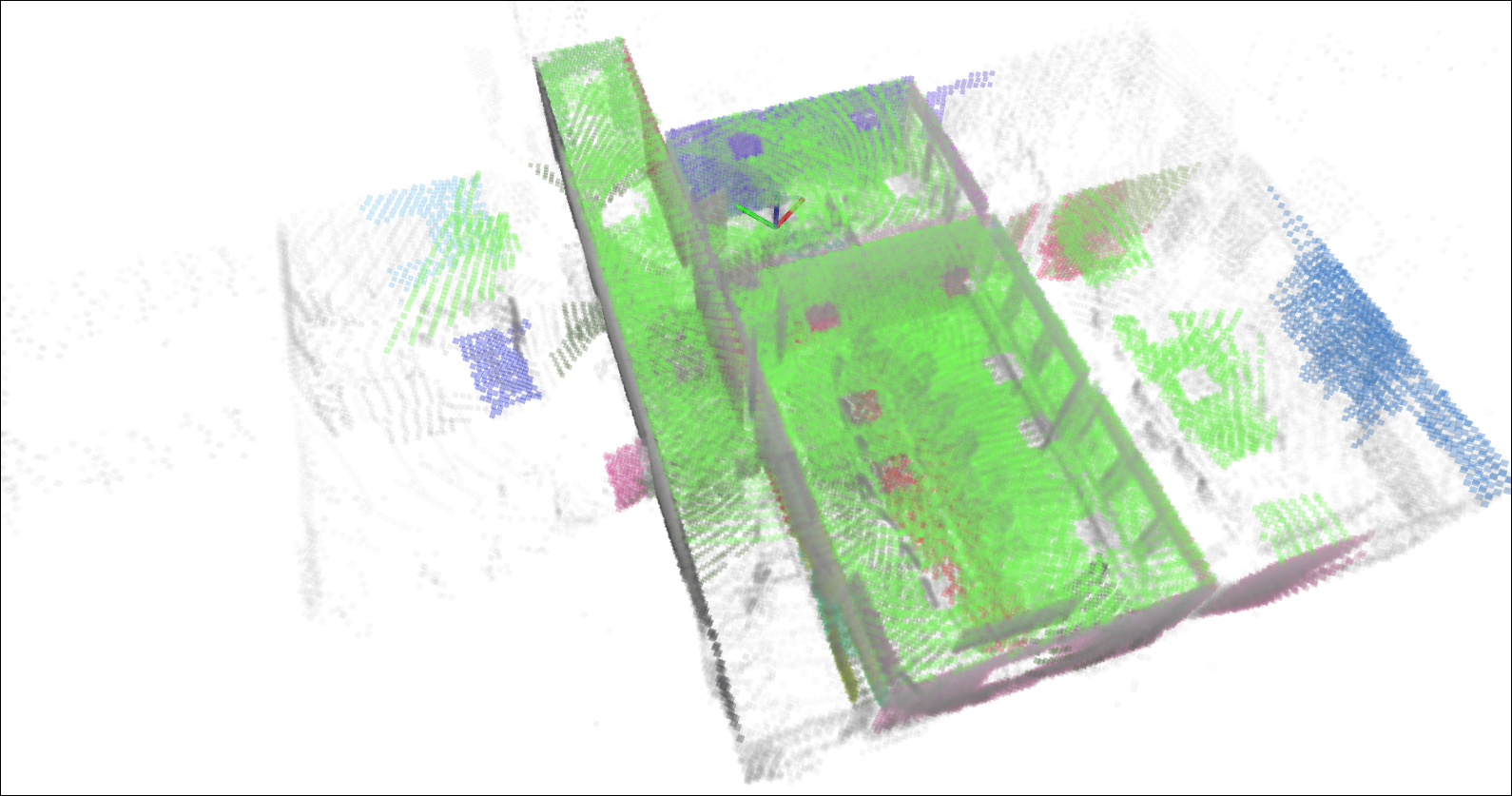

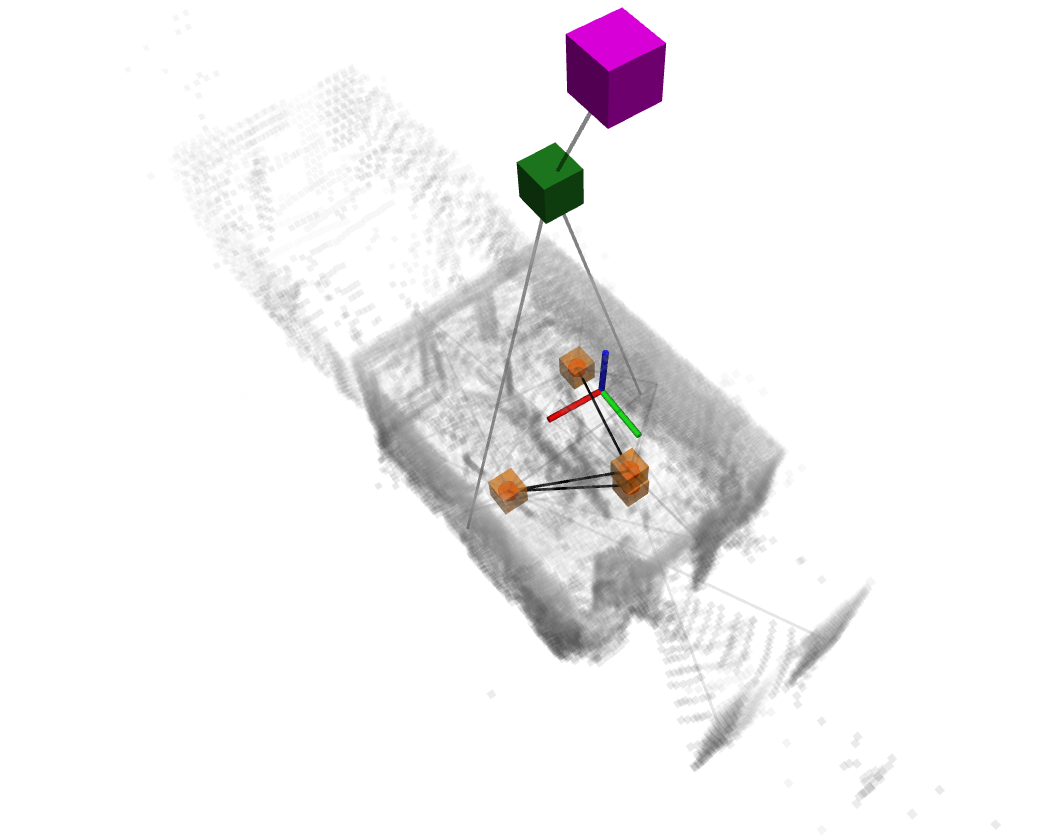

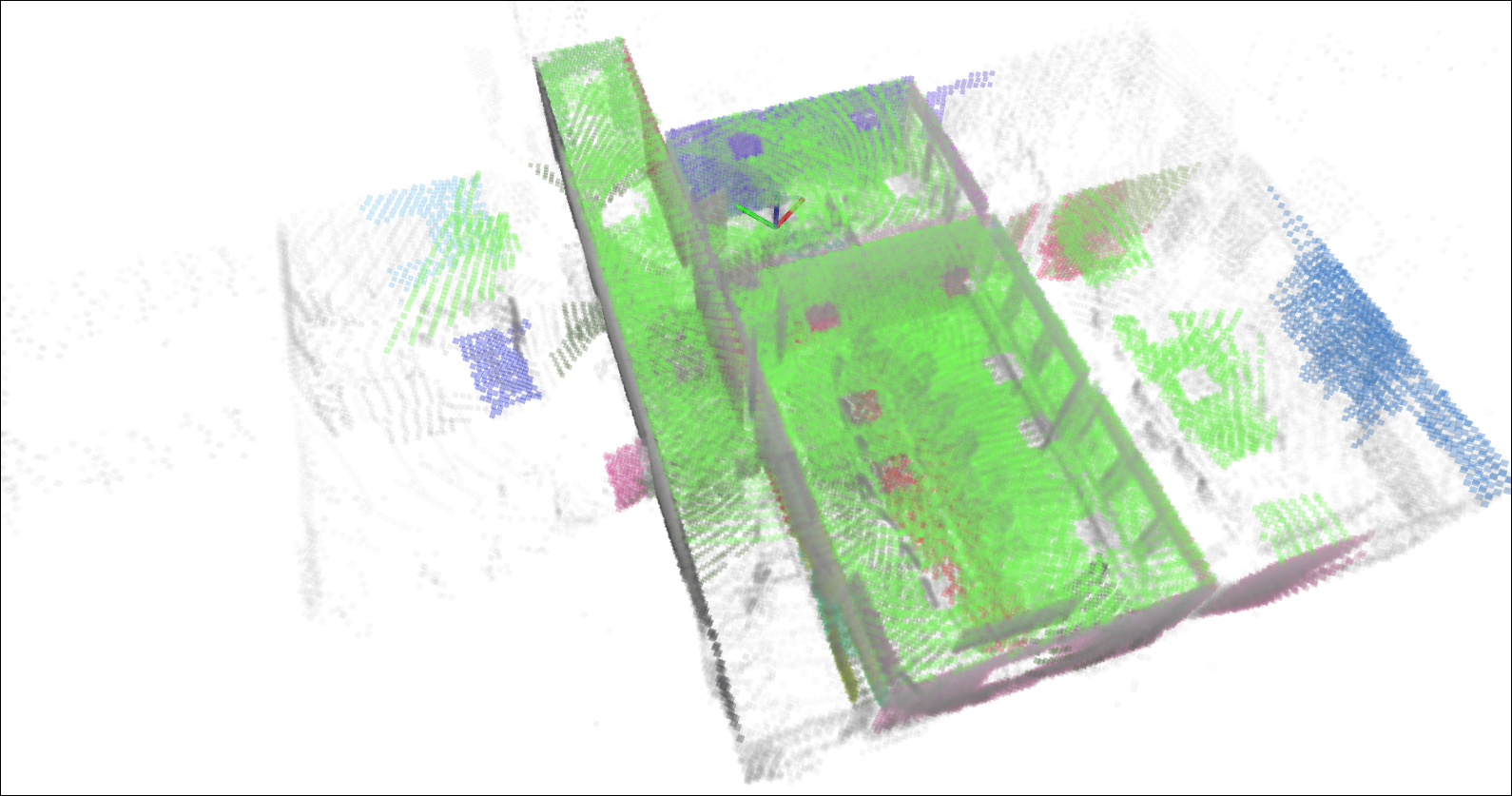

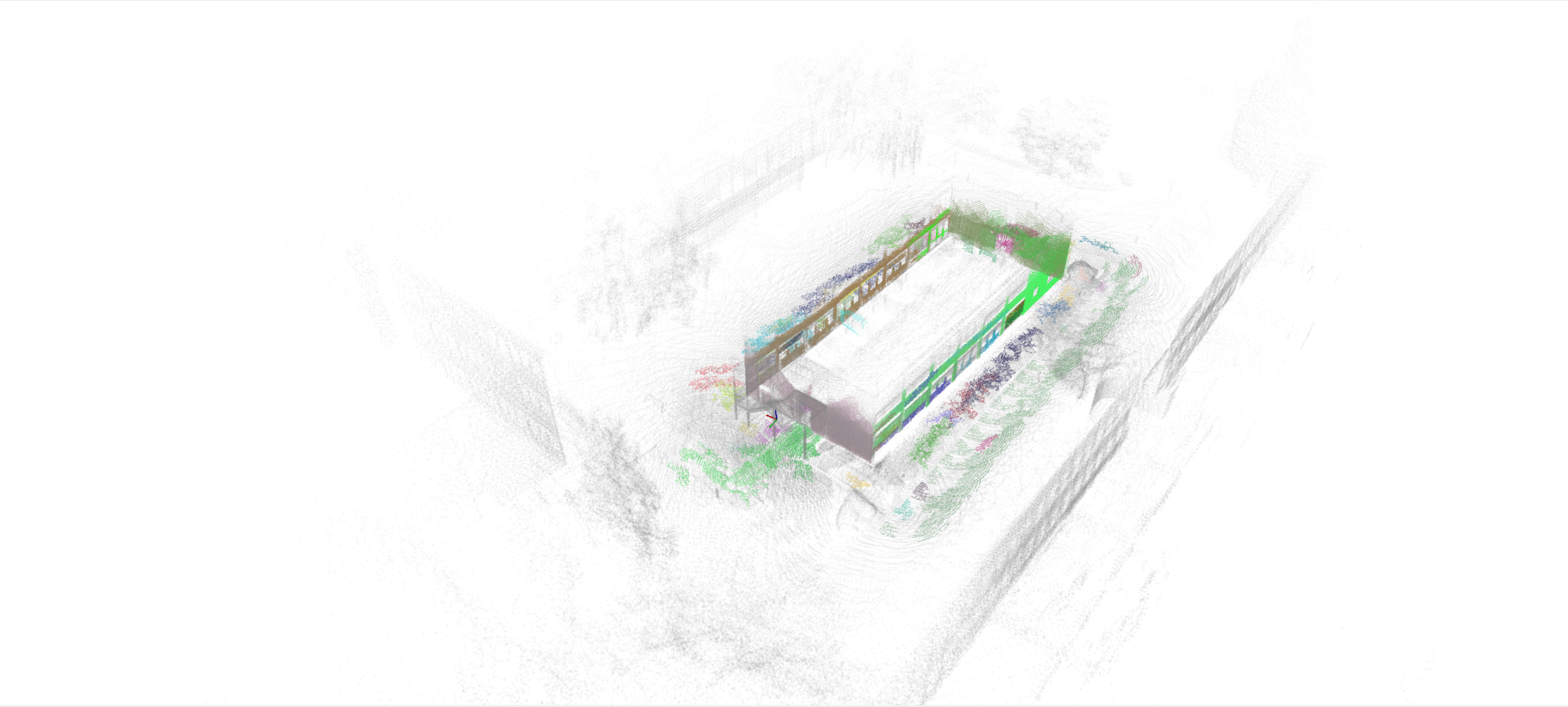

Benchmarking is performed using state-of-the-art SLAM frameworks: GLIM (LiDAR-IMU odometry), S-Graphs (semantic LiDAR SLAM), ORB-SLAM3 (visual/visual-inertial SLAM), and vS-Graphs (visual SLAM with semantic scene graphs). Qualitative results demonstrate that LiDAR-based methods produce denser, geometrically accurate maps, with S-Graphs further extracting semantic structures (walls, rooms). Visual SLAM approaches yield sparser reconstructions but capture appearance-driven information, with vS-Graphs augmenting maps via multi-level scene understanding.

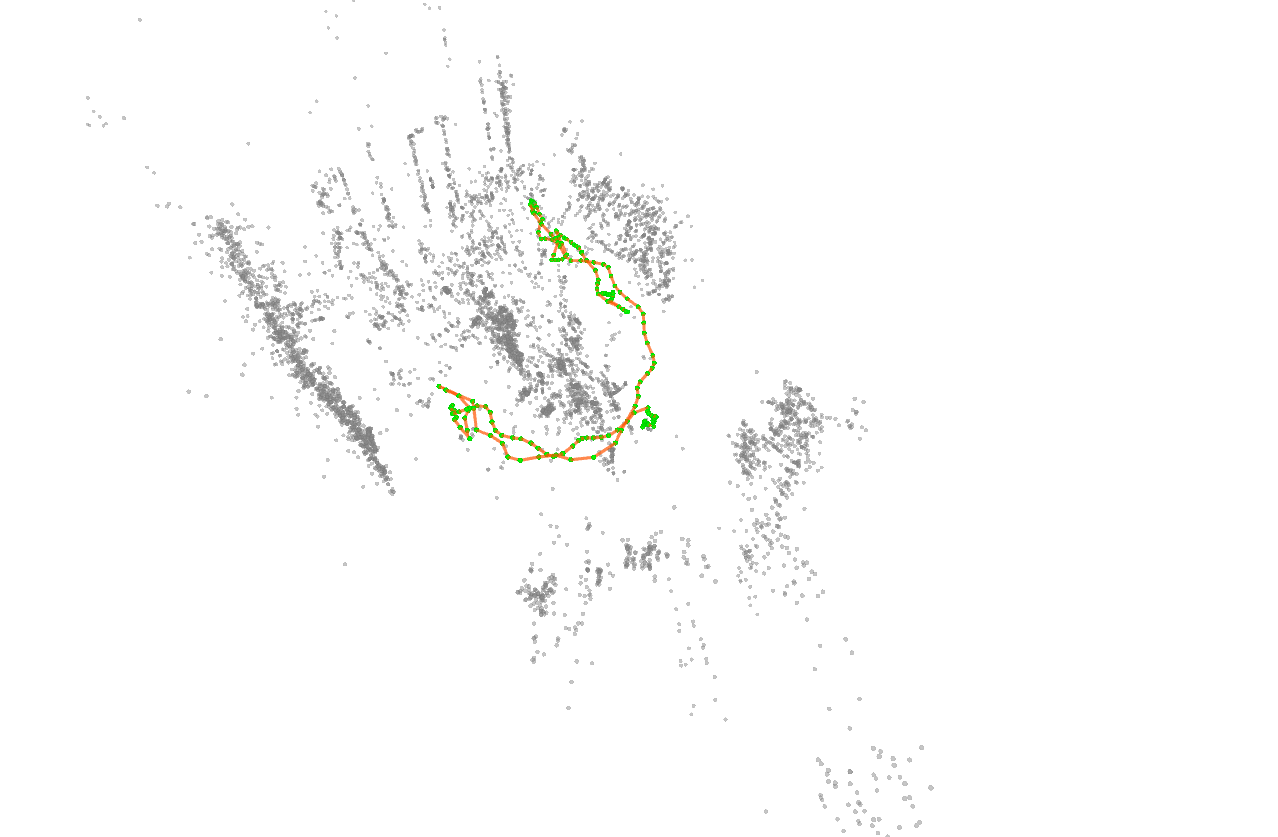

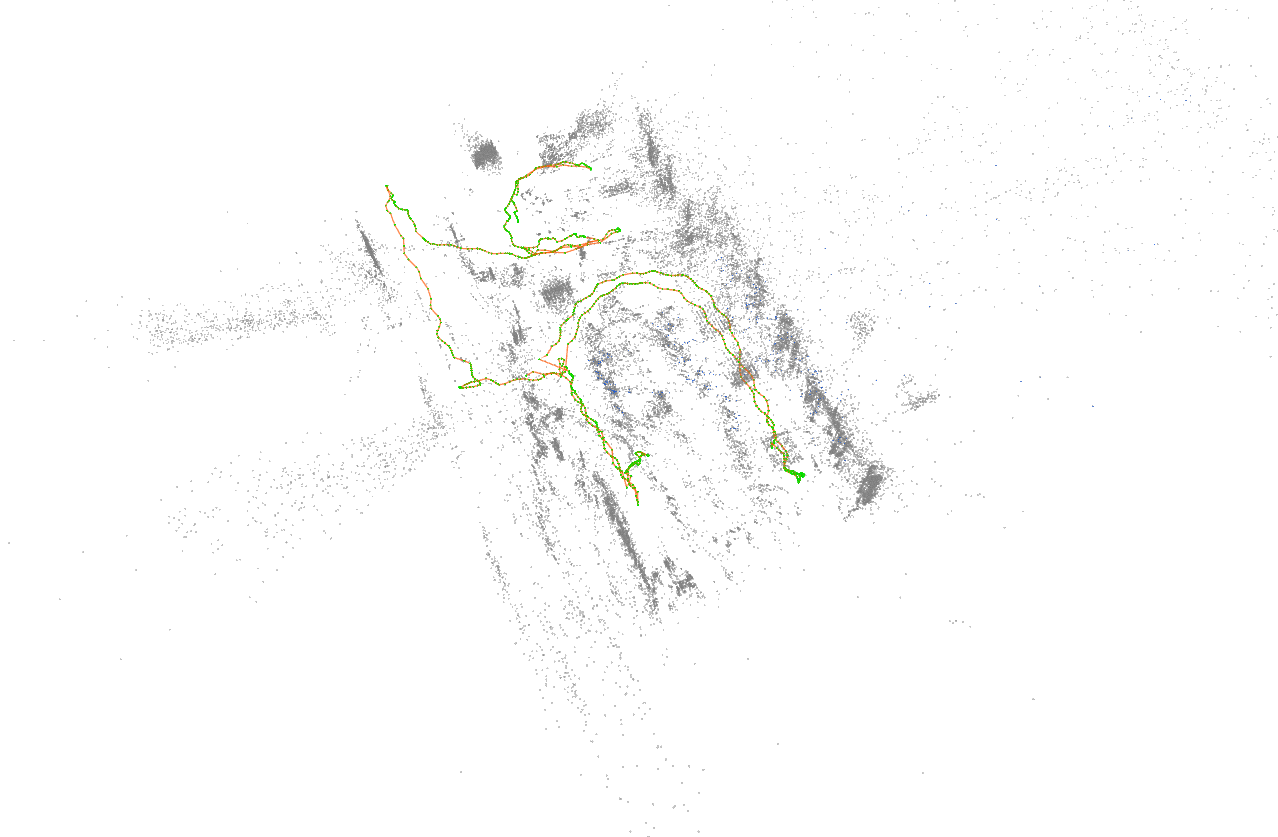

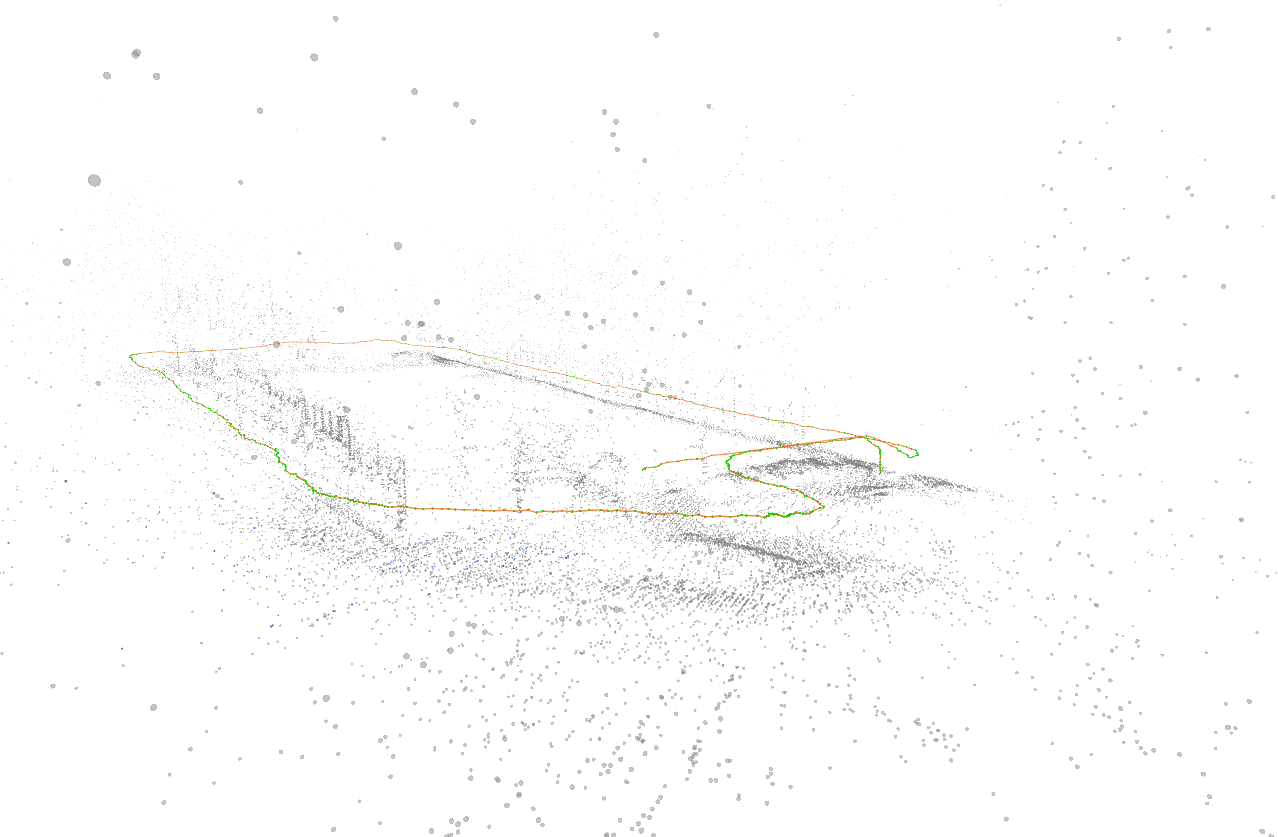

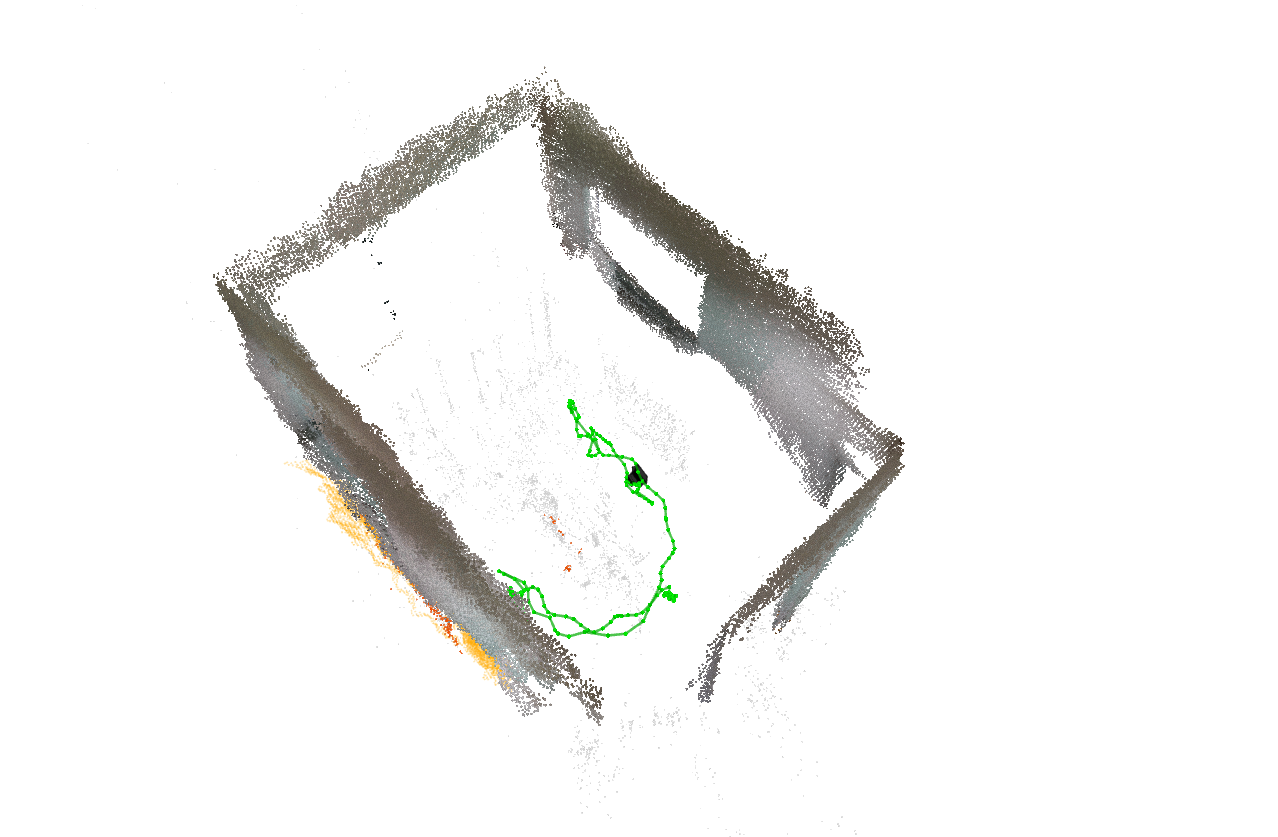

Figure 6: Qualitative results of SLAM benchmarking across selected sequences using various SLAM pipelines, including LiDAR-based (S-Graphs and GLIM) and visual SLAM (ORB-SLAM3 and vS-Graphs).

The dataset enables comparative evaluation of SLAM pipelines under varying sensor configurations and environmental conditions, validating SMapper's suitability for reproducible benchmarking.

Practical and Theoretical Implications

SMapper's open-hardware design and automated calibration pipeline lower the barrier for reproducible SLAM research, enabling rapid deployment and extension to new environments and sensor modalities. The platform's compatibility with both handheld and robot-mounted configurations supports a wide range of use cases, from academic benchmarking to industrial robotics. The release of SMapper-light, with tightly synchronized multimodal data and high-accuracy ground truth, provides a robust foundation for algorithm development, evaluation, and cross-modal learning.

Theoretically, SMapper facilitates research into multi-modal sensor fusion, calibration error propagation, and benchmarking of semantic mapping frameworks. The platform's extensibility supports integration of additional modalities (e.g., event cameras, GNSS), and its calibration/synchronization infrastructure can be adapted for heterogeneous sensor networks.

Future Directions

Potential future developments include expansion of the SMapper-light dataset to cover more diverse environments and robot-mounted scenarios, real-time fusion of multi-camera and LiDAR data for dense colored point cloud generation, and integration of structural priors (e.g., BIMs) for enhanced ground truth generation. The platform's open-source software suite and hardware documentation support community-driven improvements and adaptation to emerging SLAM paradigms.

Conclusion

SMapper establishes a reproducible, extensible, and open-hardware foundation for multi-modal SLAM benchmarking. Its robust sensor integration, calibration, and synchronization pipelines enable high-fidelity data collection and evaluation across diverse environments. The release of SMapper-light and comprehensive benchmarking experiments demonstrate the platform's practical utility and relevance for advancing SLAM algorithm development. SMapper's design and methodology are well-positioned to support future research in multi-modal perception, semantic mapping, and reproducible robotics experimentation.