- The paper demonstrates an integrated ML pipeline that identifies GW231123 with 79.32% (Hanford) and 72.33% (Livingston) confidence and automates glitch classification with a transformer-based classifier.

- It details how ArchGEM uses Gaussian Mixture Modeling and peak-finding to extract physical parameters from scattered light glitches, providing feedback for detector commissioning.

- Waveform reconstruction with AWaRe achieves overlaps above 0.9 with independent cWB, Bilby, and BayesWave reconstructions, and injection studies show that the method remains robust for glitch-contaminated intermediate-mass black hole signals.

Introduction

The paper presents a comprehensive ML analysis of GW231123, the most massive binary black hole (BBH) merger observed to date, with a total mass in the range 190–265 M⊙ and high component spins. The event is significant for probing the lower end of the intermediate-mass black hole (IMBH) regime and the pair-instability mass gap, with implications for black hole formation channels. The analysis addresses two major challenges: waveform-model systematics in the high-mass, high-spin regime, and contamination from non-Gaussian noise artifacts ("glitches") in both LIGO detectors. The authors introduce an integrated ML pipeline comprising GW-Whisper (a transformer-based classifier), ArchGEM (a GMM-based glitch characterizer), and AWaRe (a probabilistic convolutional autoencoder for waveform reconstruction), and demonstrate robust signal identification, glitch characterization, and high-fidelity waveform recovery in the presence of complex noise.

Signal Classification with GW-Whisper

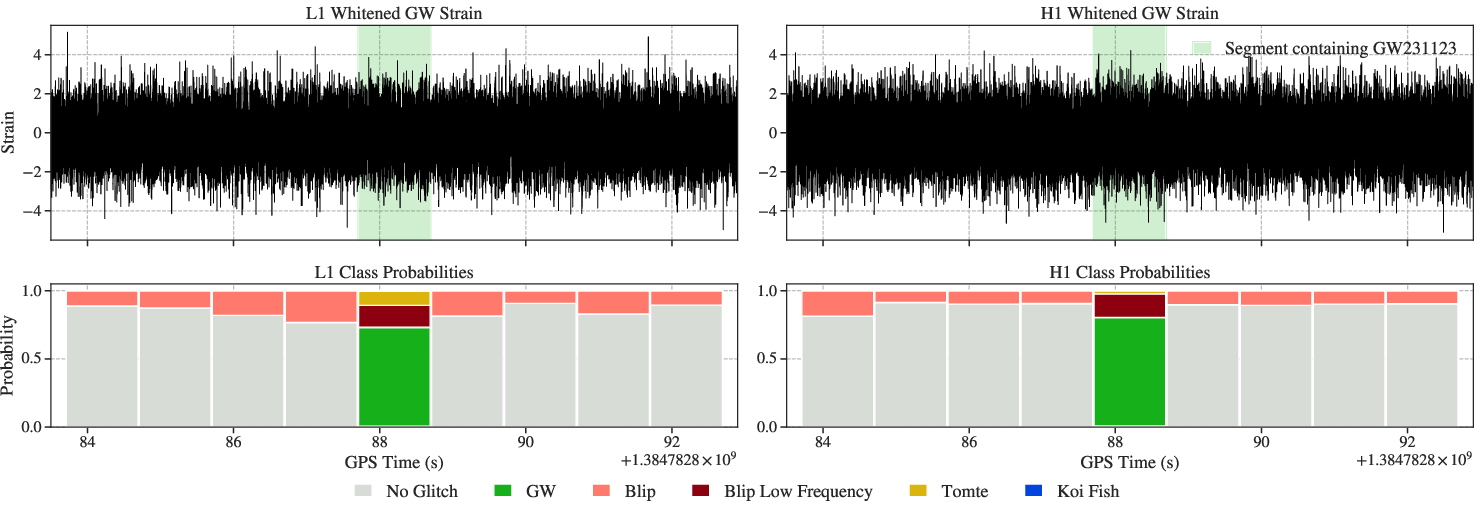

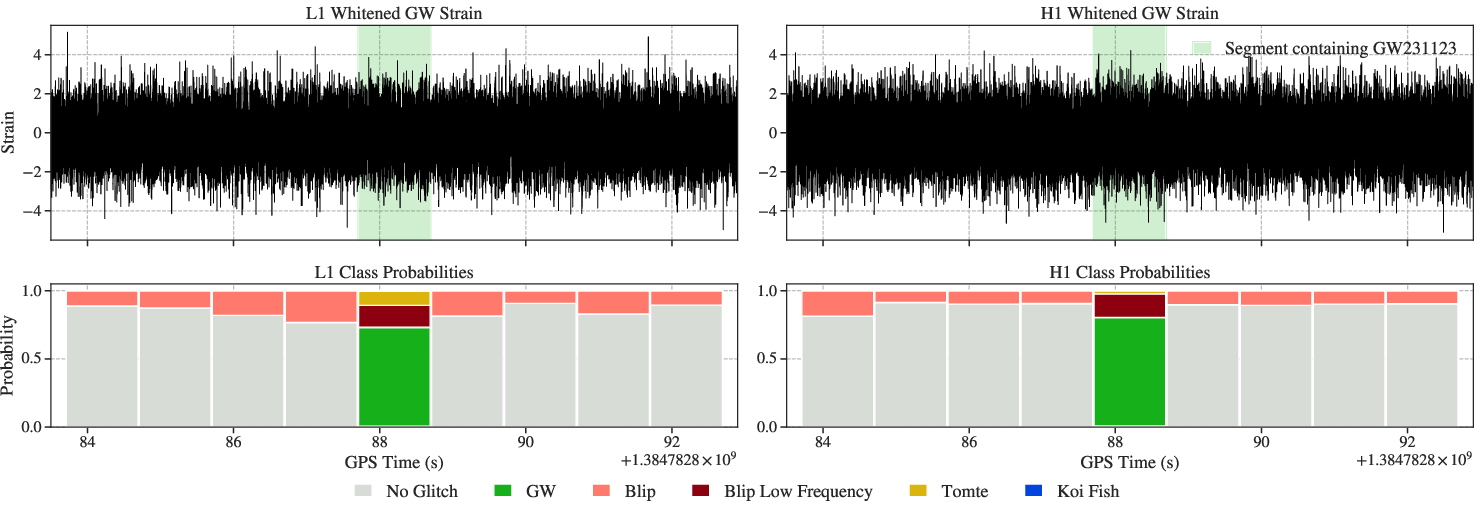

GW-Whisper adapts the OpenAI Whisper audio transformer to gravitational wave (GW) data, using parameter-efficient fine-tuning (weight-decomposed low-rank adaptation, DoRA) on curated glitch and BBH merger datasets. The model processes log-mel spectrograms of whitened strain data in 1 s segments and outputs class probabilities for GW signals and common glitch morphologies. In the analysis of 8 s of data around GW231123, GW-Whisper identifies the segment containing the merger with 79.32% (Hanford) and 72.33% (Livingston) confidence, while all other segments are classified as "No Glitch" with high confidence. The 20 Hz high-pass filter applied during preprocessing suppresses low-frequency glitches, matches the training-data distribution, and focuses the model on GW signals.

Figure 1: Predicted class label probabilities for 8 seconds around GW231123 using GW-Whisper, showing confident detection of the GW event and absence of glitches in surrounding segments.
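DoRA keeps a pretrained weight W₀ frozen and trains only a per-column magnitude vector m and a low-rank update BA, forming W′ = m · (W₀ + BA) / ‖W₀ + BA‖_c, where ‖·‖_c is the column-wise L2 norm. The NumPy sketch below illustrates that decomposition for a single linear layer. It is not GW-Whisper's implementation: the class name `DoRALinear`, the rank, and the initialization scale are illustrative assumptions.

```python
import numpy as np


class DoRALinear:
    """Illustrative DoRA-style linear layer (hypothetical helper, not GW-Whisper code).

    Effective weight: W' = m * (W0 + B @ A) / ||W0 + B @ A||_c,
    where ||.||_c is the L2 norm of each column. W0 stays frozen;
    only m, A and B would be trained.
    """

    def __init__(self, w0: np.ndarray, rank: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        d, k = w0.shape
        self.w0 = w0                                    # frozen pretrained weight, shape (d, k)
        self.m = np.linalg.norm(w0, axis=0)             # trainable magnitude per column, shape (k,)
        self.a = rng.normal(0.0, 0.01, size=(rank, k))  # trainable low-rank factor A, shape (r, k)
        self.b = np.zeros((d, rank))                    # trainable factor B, zero-initialized

    def merged_weight(self) -> np.ndarray:
        direction = self.w0 + self.b @ self.a           # adapted direction before normalization
        # rescale each column of the direction to the learned magnitude m
        return self.m * direction / np.linalg.norm(direction, axis=0)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, k) -> output: (batch, d), i.e. h = W' x for each row of x
        return x @ self.merged_weight().T

    def num_trainable(self) -> int:
        return self.m.size + self.a.size + self.b.size


w0 = np.random.default_rng(1).normal(size=(8, 6))
layer = DoRALinear(w0, rank=2)
out = layer(np.ones((3, 6)))
print(out.shape, layer.num_trainable(), w0.size)  # (3, 8) 34 48
```

Because B starts at zero, W′ equals W₀ before any training, so fine-tuning starts from the pretrained model. In this toy layer 34 of 48 parameters are trainable; for realistically sized layers with a small fixed rank the fraction becomes far smaller.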

This approach demonstrates the feasibility of transformer-based architectures for low-latency, segment-level GW event and glitch classification, providing scalable alternatives to manual inspection and heuristic vetoes.
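The segment-level workflow described above (20 Hz high-pass, 1 s segments, one label per segment) can be sketched as follows, under stated assumptions: an FFT-domain brick-wall filter stands in for the real high-pass, whitening and the log-mel transform are omitted, the 4096 Hz sample rate is assumed, and the class names and logits are hypothetical placeholders for GW-Whisper's outputs.

```python
import numpy as np

FS = 4096          # assumed sample rate in Hz
SEG_SECONDS = 1.0  # segment length stated in the text
F_CUT = 20.0       # high-pass corner frequency stated in the text
CLASSES = ["No Glitch", "Chirp", "Blip"]  # hypothetical class list


def highpass_fft(x, fs=FS, f_cut=F_CUT):
    """Zero all Fourier components below f_cut (brick-wall filter, illustration only)."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spec[freqs < f_cut] = 0.0
    return np.fft.irfft(spec, n=x.size)


def split_segments(x, fs=FS, seg_seconds=SEG_SECONDS):
    """Reshape a 1-D series into non-overlapping segments, dropping any remainder."""
    n = int(fs * seg_seconds)
    n_seg = x.size // n
    return x[: n_seg * n].reshape(n_seg, n)


def label_segments(logits, classes=CLASSES):
    """Softmax each row of logits and return (label, probability) per segment."""
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    probs = e / e.sum(axis=1, keepdims=True)
    best = probs.argmax(axis=1)
    return [(classes[j], float(probs[i, j])) for i, j in enumerate(best)]


rng = np.random.default_rng(0)
t = np.arange(8 * FS) / FS
strain = rng.normal(size=t.size) + 5.0 * np.sin(2 * np.pi * 10.0 * t)  # noise + 10 Hz disturbance
segments = split_segments(highpass_fft(strain))
print(segments.shape)  # (8, 4096)
fake_logits = np.array([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]])  # placeholder classifier output
print(label_segments(fake_logits))
```

In a real pipeline the placeholder logits would come from the classifier applied to each preprocessed segment, giving one label and confidence per second of data.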

Scattered Light Glitch Characterization with ArchGEM

ArchGEM applies Gaussian Mixture Modeling (GMM) and peak-finding algorithms to Q-transform spectrograms to isolate and characterize scattered light glitches in the Livingston detector. The analysis of an 18 s window around GW231123 reveals arch-like features below 40 Hz, typical of scattered light artifacts. The two methods yield physically interpretable parameters: average maximum arch frequencies of 14.36 Hz (peak-finding) and 11.13 Hz (GMM), a scattering recurrence frequency of 0.19 Hz, a surface displacement of 0.11 μm, and an average surface velocity of 0.41 μm/s. The standard deviations of the arch peak frequency (σ_f = 1.55 Hz) and of the time interval between successive arches (σ_Δt = 0.00 s) indicate highly periodic motion of the scattering surface. The extracted parameters are consistent with surface oscillations and provide actionable feedback for detector commissioning.
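The peak-finding branch can be sketched once an arch track is available. Here the track is assumed to be the frequency of maximum energy in each spectrogram time column (an assumption about the preprocessing). The NumPy sketch generates a synthetic track from the standard single-bounce scattered-light relation f(t) = 2|v(t)|/λ, with λ = 1064 nm and sinusoidal scatterer motion, then recovers the arch statistics. The function names, the 64 samples/s track resolution, and the motion amplitude and frequency are hypothetical illustrative values, not the GW231123 parameters, and ArchGEM's GMM branch is not reproduced.

```python
import numpy as np

LAMBDA = 1.064e-6  # LIGO laser wavelength in meters


def fringe_frequency(t, amp, f_motion):
    """Single-bounce fringe frequency f(t) = 2|v(t)|/lambda for x(t) = amp * sin(2*pi*f_motion*t)."""
    v = 2 * np.pi * f_motion * amp * np.cos(2 * np.pi * f_motion * t)
    return 2 * np.abs(v) / LAMBDA


def local_maxima(y, min_height):
    """Interior indices that exceed the left neighbor, are >= the right one, and reach min_height."""
    mid = y[1:-1]
    is_peak = (mid > y[:-2]) & (mid >= y[2:]) & (mid >= min_height)
    return np.flatnonzero(is_peak) + 1


def arch_statistics(t, ridge_freq, min_height=1.0):
    """Summarize arch maxima: mean/std of peak frequency and std/recurrence of arch spacing."""
    idx = local_maxima(ridge_freq, min_height)
    f_peaks = ridge_freq[idx]
    dt = np.diff(t[idx])  # time between successive arch maxima
    return {
        "mean_fmax_hz": float(f_peaks.mean()),
        "sigma_f_hz": float(f_peaks.std()),
        "sigma_dt_s": float(dt.std()),
        "recurrence_hz": float(1.0 / dt.mean()),
    }


fs_track = 64                                          # assumed time resolution of the track
t = np.arange(18 * fs_track) / fs_track                # 18 s window, as in the text
track = fringe_frequency(t, amp=10e-6, f_motion=0.1)   # illustrative 10 um, 0.1 Hz motion
stats = arch_statistics(t, track)
print(stats)
```

For purely sinusoidal motion, |v(t)| peaks twice per oscillation, so the arches in this toy model recur at twice the motion frequency (0.2 Hz for 0.1 Hz motion), and σ_Δt is zero, the signature of strictly periodic arches.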

Waveform Reconstruction with AWaRe

AWaRe is a convolutional U-Net autoencoder with multi-head self-attention, trained on simulated BBH signals (100–1000 M⊙) injected into background data. The network outputs a mean and a variance for each time sample, enabling uncertainty-aware reconstructions. Comparisons with cWB (model-agnostic), Bilby (template-based), and BayesWave (wavelet-based) reconstructions show high overlaps of 0.92–0.97 in Hanford and 0.91–0.98 in Livingston, with slightly stronger agreement with the template-free methods (cWB and BayesWave), indicating robustness to waveform-model systematics.

(Figure 2)

Figure 2: AWaRe reconstructions of GW231123 in Livingston and Hanford, with uncertainty bands and residuals; the reconstructions show high fidelity and the residuals are consistent with Gaussian noise.
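AWaRe outputs a mean and a variance for each time sample, and those variances set the width of the uncertainty bands. A common way to train such a head, assumed here rather than taken from the paper, is to minimize the Gaussian negative log-likelihood. The sketch further assumes the network predicts log-variance for numerical stability and draws a central 90% band (z = 1.645); the helper names are hypothetical.

```python
import numpy as np


def gaussian_nll(y, mu, log_var):
    """Mean negative log-likelihood of targets y under N(mu, exp(log_var)), per time sample."""
    return float(np.mean(0.5 * (np.log(2 * np.pi) + log_var + (y - mu) ** 2 / np.exp(log_var))))


def uncertainty_band(mu, log_var, z=1.645):
    """Pointwise band mu +/- z*sigma; z = 1.645 covers the central 90% of a Gaussian."""
    sigma = np.exp(0.5 * log_var)
    return mu - z * sigma, mu + z * sigma


y = np.ones(100)   # toy target waveform samples
mu = np.zeros(100) # toy predicted mean, off by 1 everywhere
print(gaussian_nll(y, mu, np.zeros(100)))               # variance 1: matches the error size
print(gaussian_nll(y, mu, np.full(100, np.log(0.01))))  # variance 0.01: overconfident, penalized
```

This loss rewards a small predicted variance only where the mean is accurate, so time samples that the network reconstructs poorly are pushed toward wider bands.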

Shapiro-Wilk tests on the residuals yield p-values of 0.671 (Hanford) and 0.454 (Livingston), so normality cannot be rejected at conventional significance levels. This is consistent with the reconstructions capturing the GW signal and leaving residuals compatible with Gaussian noise.
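The residual test can be reproduced with SciPy's `scipy.stats.shapiro`. In the sketch below, the helper name `residual_normality`, the 5% threshold, and the toy data are assumptions. A p-value above the threshold means normality is not rejected, not that it is proven.

```python
import numpy as np
from scipy import stats


def residual_normality(data, reconstruction, alpha=0.05):
    """Shapiro-Wilk test on data - reconstruction; returns (p-value, normality not rejected)."""
    residual = np.asarray(data, dtype=float) - np.asarray(reconstruction, dtype=float)
    result = stats.shapiro(residual)
    return float(result.pvalue), bool(result.pvalue > alpha)


rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 2048)
signal = 5.0 * np.sin(2 * np.pi * 8.0 * t)
data = signal + 0.1 * rng.normal(size=t.size)

print(residual_normality(data, signal))               # good reconstruction: residual is pure noise
print(residual_normality(data, np.zeros_like(data)))  # missing reconstruction: residual keeps the signal
```

The test is sensitive to the shape of the residual distribution, so it complements, rather than replaces, a direct check of residual power.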

Robustness to Glitches and Generalization to IMBH Regime

In injection studies, GW231123-like waveforms generated with multiple waveform approximants were injected into O3 data containing seven common glitch morphologies. AWaRe overlap distributions remain sharply peaked above 0.9, even in the presence of glitches morphologically similar to the signal. Slight broadening for Tomte and Koi-fish glitches reflects increased uncertainty when the glitch overlaps the signal's time-frequency support.

(Figure 3)

Figure 3: Overlap distributions between AWaRe reconstructions and GW231123-like injections in Hanford and Livingston, including robustness tests against various glitch types.
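The overlap quoted throughout is a normalized inner product between two waveforms. For whitened, equally sampled time series the noise-weighted inner product reduces, up to a constant that cancels in the ratio, to a plain dot product. A minimal sketch follows, assuming whitened inputs and no maximization over time or phase shifts; the helper names and the toy waveform are hypothetical.

```python
import numpy as np


def overlap(a, b):
    """Normalized overlap <a, b> / sqrt(<a, a><b, b>) for whitened, equally sampled series."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / np.sqrt(np.dot(a, a) * np.dot(b, b)))


def fraction_above(overlaps, threshold=0.9):
    """Fraction of injections whose overlap exceeds the threshold."""
    return float(np.mean(np.asarray(overlaps) > threshold))


t = np.linspace(0.0, 1.0, 4096)
h = np.sin(2 * np.pi * 50.0 * t) * np.exp(-(((t - 0.5) / 0.05) ** 2))  # toy burst-like waveform
noisy = h + 0.01 * np.random.default_rng(0).normal(size=t.size)          # stand-in "reconstruction"
print(overlap(h, h), overlap(h, 3.0 * h), overlap(h, noisy))
```

The overlap ignores overall amplitude scaling (a reconstruction at three times the amplitude still scores 1), so it measures agreement in shape and phase; amplitude accuracy has to be checked separately.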

Box plots of overlap for BBH signals with total masses of 100–1000 M⊙ show median overlaps above 0.9 up to 500 M⊙, decreasing gradually to ∼0.85 in the highest-mass bins, where the interquartile ranges also broaden. At these masses only the short merger-ringdown portion of the signal lies in the detectors' sensitive band, so the plots locate the onset of accuracy loss for merger-ringdown-dominated signals in noisy data, while showing that substantial fidelity is retained even in the 900–1000 M⊙ bin.

(Figure 4)

Figure 4: Box plots of overlap between AWaRe reconstructions and original waveforms for simulated BBH events across the 100–1000 M⊙ mass range.
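The box-plot summary reduces to the median and interquartile range (IQR) of the overlaps in each total-mass bin. The helper below is a hypothetical sketch: it uses half-open bins [lo, hi), so a mass exactly on the top edge is excluded, and the numbers in the usage lines are toy values, not results from the paper.

```python
import numpy as np


def box_stats_by_mass(total_mass, overlaps, edges):
    """Per-bin (lo, hi, count, median, IQR) of overlap, using half-open mass bins [lo, hi)."""
    total_mass = np.asarray(total_mass, dtype=float)
    overlaps = np.asarray(overlaps, dtype=float)
    rows = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (total_mass >= lo) & (total_mass < hi)
        if not in_bin.any():
            continue  # skip empty bins
        q1, median, q3 = np.percentile(overlaps[in_bin], [25, 50, 75])
        rows.append((float(lo), float(hi), int(in_bin.sum()), float(median), float(q3 - q1)))
    return rows


edges = np.arange(100, 1001, 100)  # 100-1000 solar masses in 100 M_sun bins
toy_mass = [150, 160, 170, 950, 960]
toy_overlap = [0.95, 0.97, 0.93, 0.80, 0.90]
for row in box_stats_by_mass(toy_mass, toy_overlap, edges):
    print(row)
```

A falling median together with a growing IQR across bins is the pattern the text describes for the highest-mass signals.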

Implications and Future Directions

The integrated ML pipeline provides a scalable, automated framework for rapid and robust analysis of massive, glitch-contaminated GW events. GW-Whisper enables low-latency event and glitch classification, ArchGEM delivers physically interpretable glitch diagnostics, and AWaRe achieves high-fidelity, uncertainty-aware waveform reconstructions. The pipeline complements traditional matched filtering and Bayesian analyses, offering enhanced reliability in signal validation and noise characterization, particularly in the IMBH regime where waveform-model systematics and glitch contamination are most severe.

The demonstrated robustness to diverse glitch morphologies and generalization across the IMBH mass range positions this framework as essential for future GW observations, especially as detector sensitivity increases and glitch diversity grows. The ability to extract physically meaningful parameters from noise artifacts and to recover true GW signals in challenging environments will maximize the astrophysical return from the most massive and complex GW sources.

Conclusion

This paper presents the first ML-driven follow-up of GW231123, addressing the dual challenges of waveform-model systematics and non-Gaussian noise transients. The integrated pipeline autonomously identifies the merger, characterizes scattered light glitches, and reconstructs waveforms with high fidelity and uncertainty quantification. Injection studies confirm robustness across glitch types and the IMBH mass regime. As GW detectors advance, such ML frameworks will be indispensable for reliable analysis of massive, glitch-contaminated events, enabling deeper exploration of black hole formation channels and the IMBH population.