- The paper introduces AgentGym-RL, a unified RL framework that trains LLM agents from scratch (without supervised fine-tuning), together with ScalingInter-RL, a progressive interaction-scaling method.

- It employs decoupled environment, agent, and training modules to support diverse scenarios and robust RL algorithm performance.

- Empirical results show RL-trained open-source models matching or exceeding proprietary models across web, game, and scientific tasks.

AgentGym-RL: A Unified RL Framework for Long-Horizon LLM Agent Training

Introduction and Motivation

The paper introduces AgentGym-RL, a modular, extensible reinforcement learning (RL) framework for training LLM agents in multi-turn, long-horizon decision-making tasks. The motivation is to address the lack of a unified, scalable RL platform that supports direct, from-scratch agent training (without supervised fine-tuning) across diverse, realistic environments. The framework is designed to facilitate research on agentic intelligence, enabling LLMs to acquire skills through exploration and interaction, analogous to human cognitive development.

Framework Architecture and Engineering

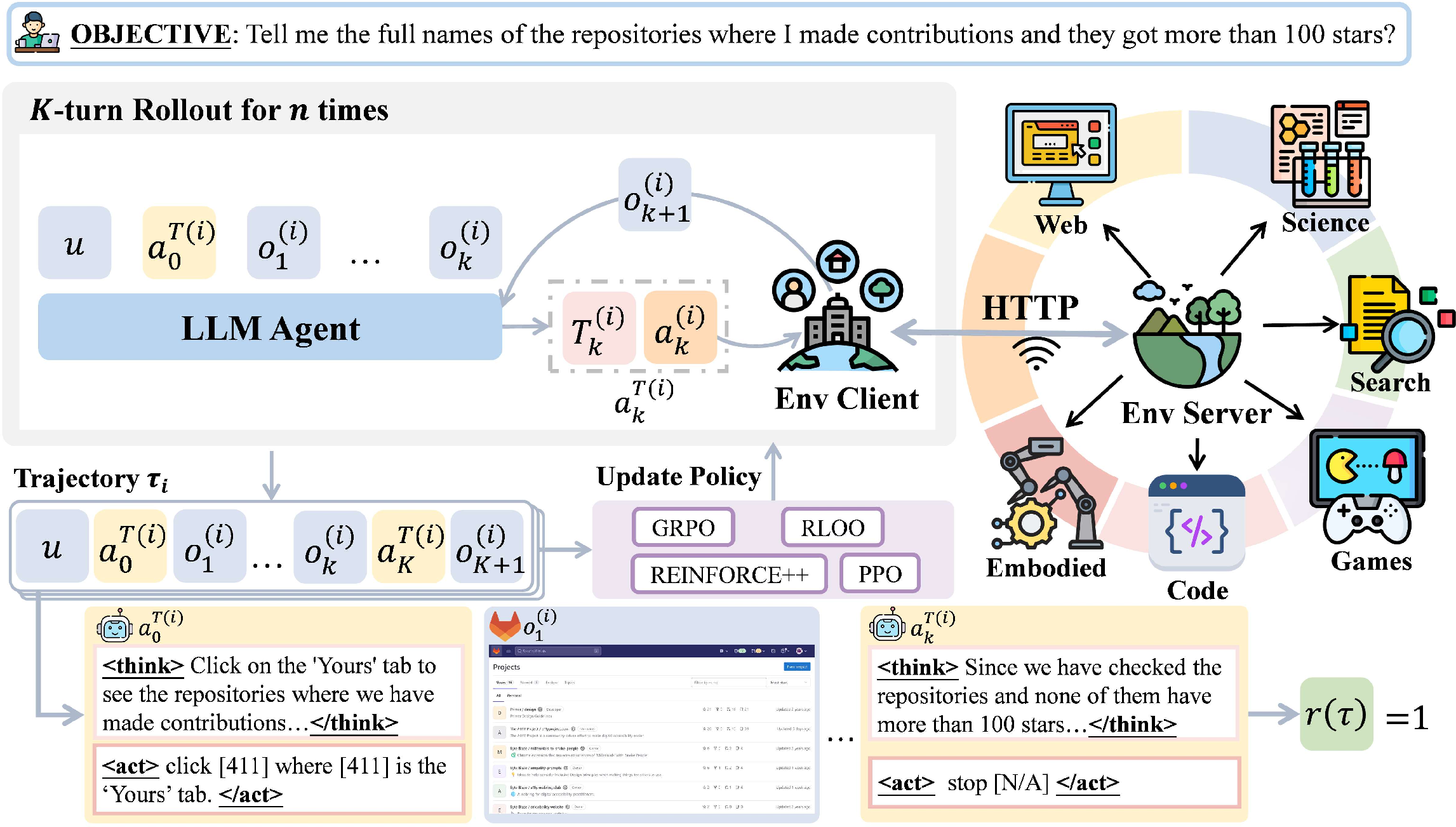

AgentGym-RL is architected around three decoupled modules: Environment, Agent, and Training. This separation ensures flexibility, extensibility, and scalability for large-scale RL experiments.

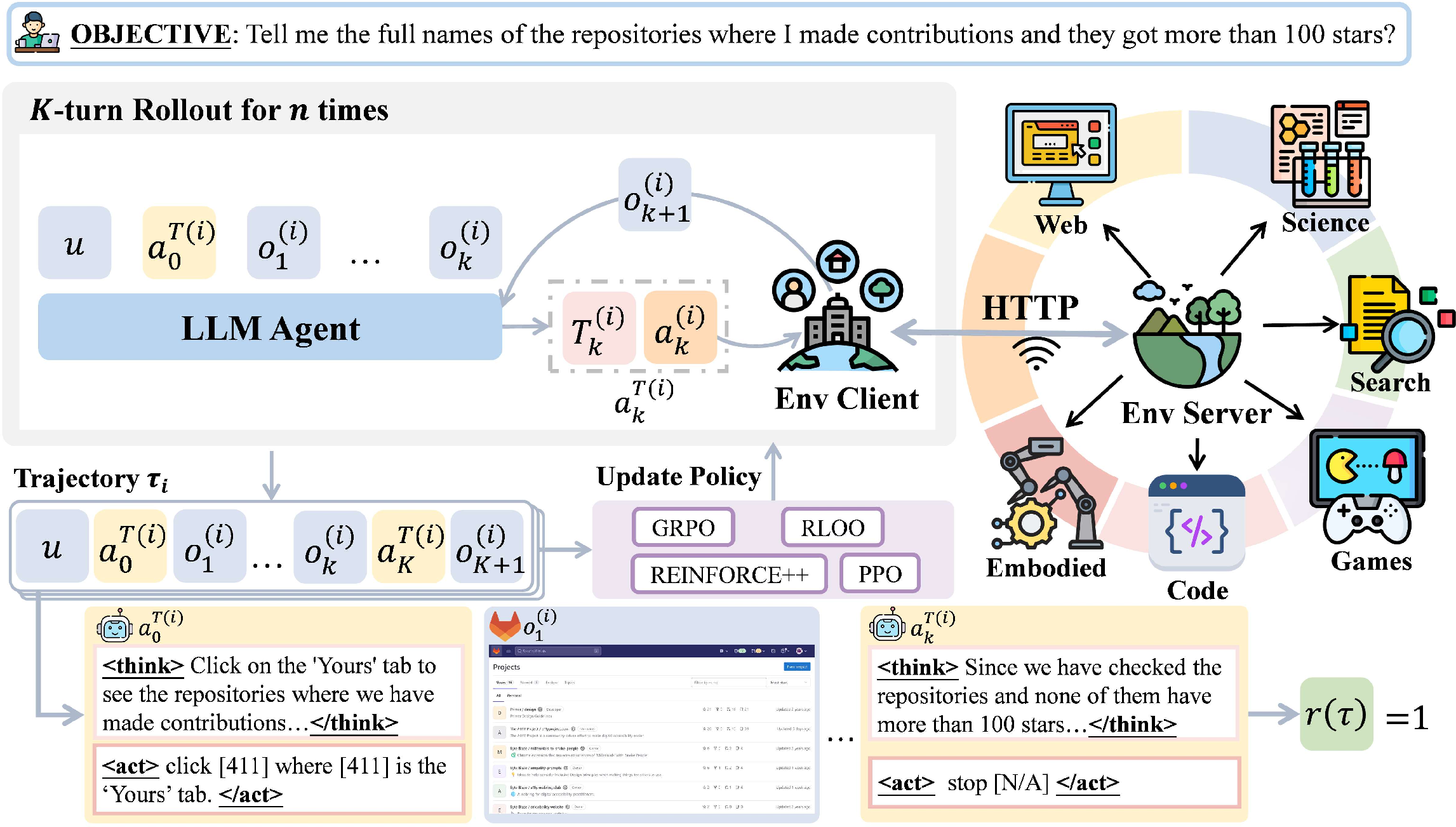

Figure 1: The AgentGym-RL framework comprises modular environment, agent, and training modules, supporting diverse scenarios and RL algorithms.

- Environment Module: Each environment is an independent service, supporting parallelism via multiple replicas and standardized HTTP APIs for observation, action, and reset. The framework covers web navigation, deep search, digital games, embodied tasks, and scientific reasoning.

- Agent Module: Encapsulates the reasoning-action loop, supporting multi-turn interaction, advanced prompting, and various reward functions.

- Training Module: Implements a unified RL pipeline, supporting on-policy algorithms (PPO, GRPO, REINFORCE++, RLOO), curriculum learning, and staged interaction scaling. Distributed training and diagnostics are natively supported.
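The split between environment and agent described above can be sketched in a few lines. This is a minimal illustration, not the framework's actual API: `ToyCountdownEnv`, `Trajectory`, `rollout`, and `always_decrement` are hypothetical names, and the environment is an in-process object rather than an HTTP service so that the example runs offline. The three operations it exposes (`reset`, `observe`, `step`) mirror the reset, observation, and action endpoints mentioned in the list.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


class ToyCountdownEnv:
    """Hypothetical stand-in for an environment service.

    A real deployment would expose these operations over HTTP; here they
    are plain method calls so the sketch has no network dependency.
    """

    def __init__(self, start: int = 3) -> None:
        self.start = start
        self.remaining = start

    def reset(self) -> str:
        self.remaining = self.start
        return self.observe()

    def observe(self) -> str:
        return f"remaining={self.remaining}"

    def step(self, action: str) -> Tuple[str, float, bool]:
        # Only "decrement" makes progress; any other action is a no-op.
        if action == "decrement" and self.remaining > 0:
            self.remaining -= 1
        done = self.remaining == 0
        reward = 1.0 if done else 0.0  # sparse reward on task completion
        return self.observe(), reward, done


@dataclass
class Trajectory:
    steps: List[Tuple[str, str, float]] = field(default_factory=list)
    total_reward: float = 0.0
    finished: bool = False


def rollout(env, policy: Callable[[str], str], max_turns: int) -> Trajectory:
    """Run one multi-turn episode, capped at max_turns interactions."""
    traj = Trajectory()
    obs = env.reset()
    for _ in range(max_turns):
        action = policy(obs)  # in practice, an LLM call
        next_obs, reward, done = env.step(action)
        traj.steps.append((obs, action, reward))
        traj.total_reward += reward
        obs = next_obs
        if done:
            traj.finished = True
            break
    return traj


def always_decrement(observation: str) -> str:
    # Placeholder policy standing in for the LLM agent.
    return "decrement"
```

Calling `rollout(ToyCountdownEnv(start=3), always_decrement, max_turns=5)` completes the task in three turns, while `max_turns=2` cuts the episode short with zero reward. Because the rollout only depends on the three environment methods, the same loop could drive any environment that implements them, which is the point of the decoupling.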

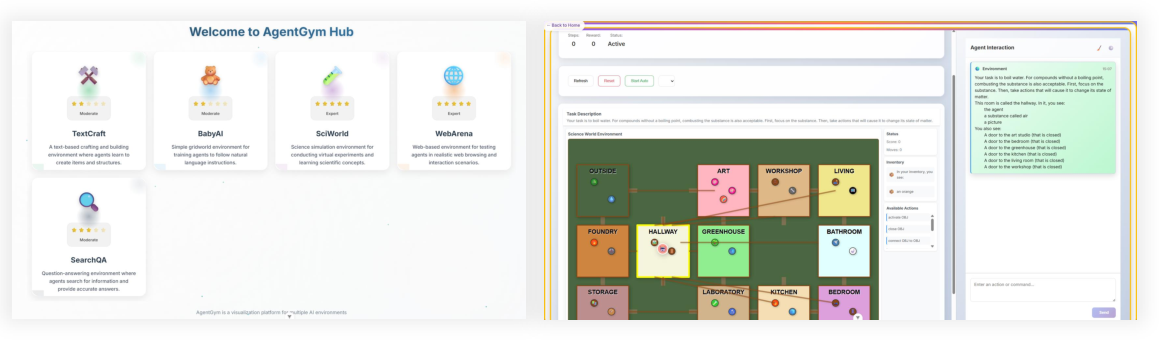

The framework is engineered for reliability (e.g., memory-leak mitigation), high-throughput parallel rollout, and reproducibility, with standardized evaluation and an interactive UI for trajectory inspection.

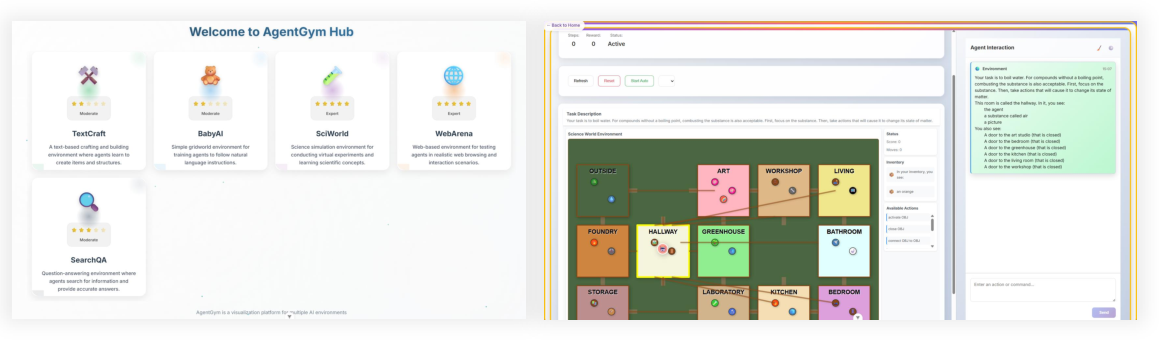

Figure 2: Visualized user interface for stepwise inspection and analysis of agent-environment interactions.

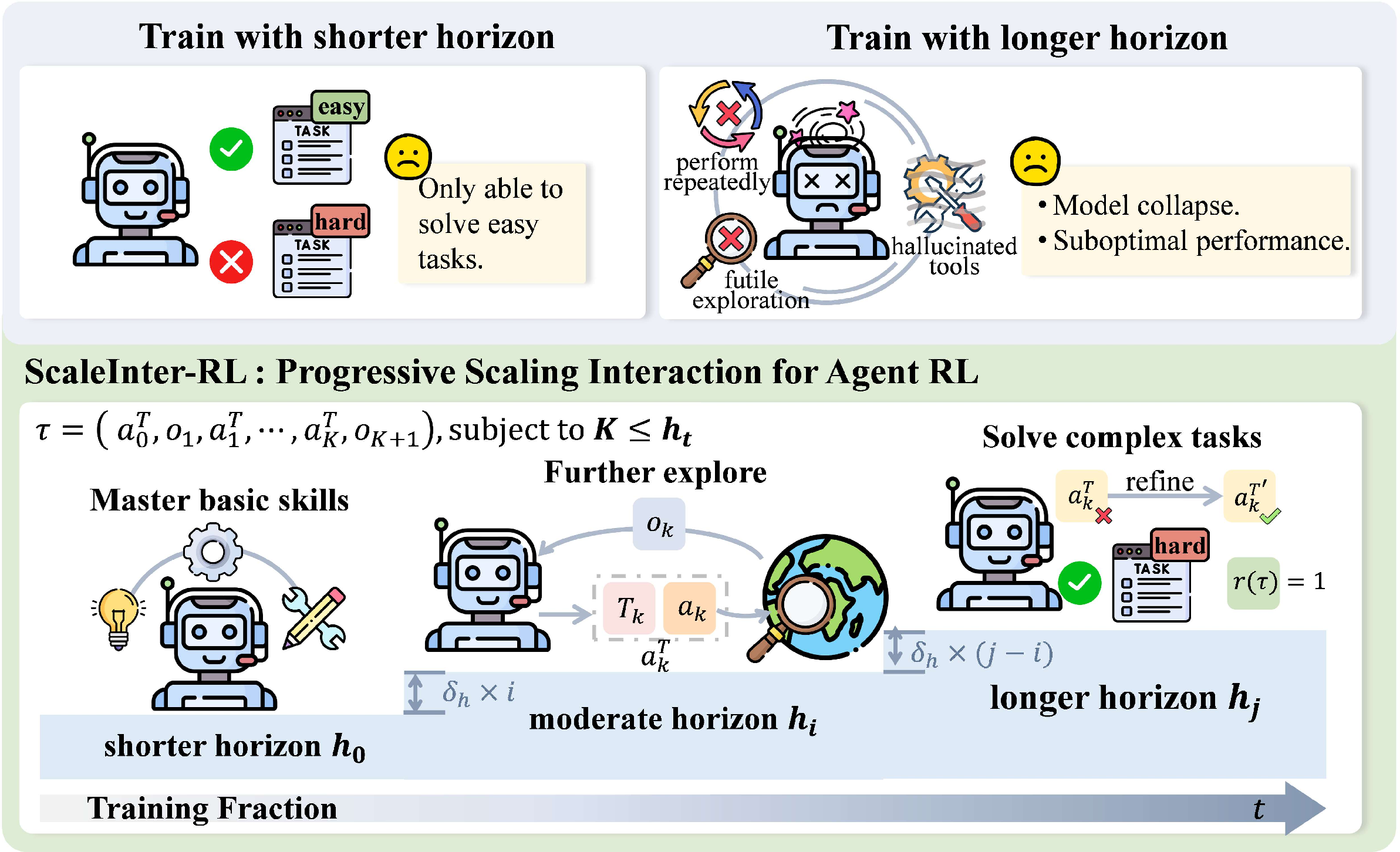

ScalingInter-RL: Progressive Interaction Scaling

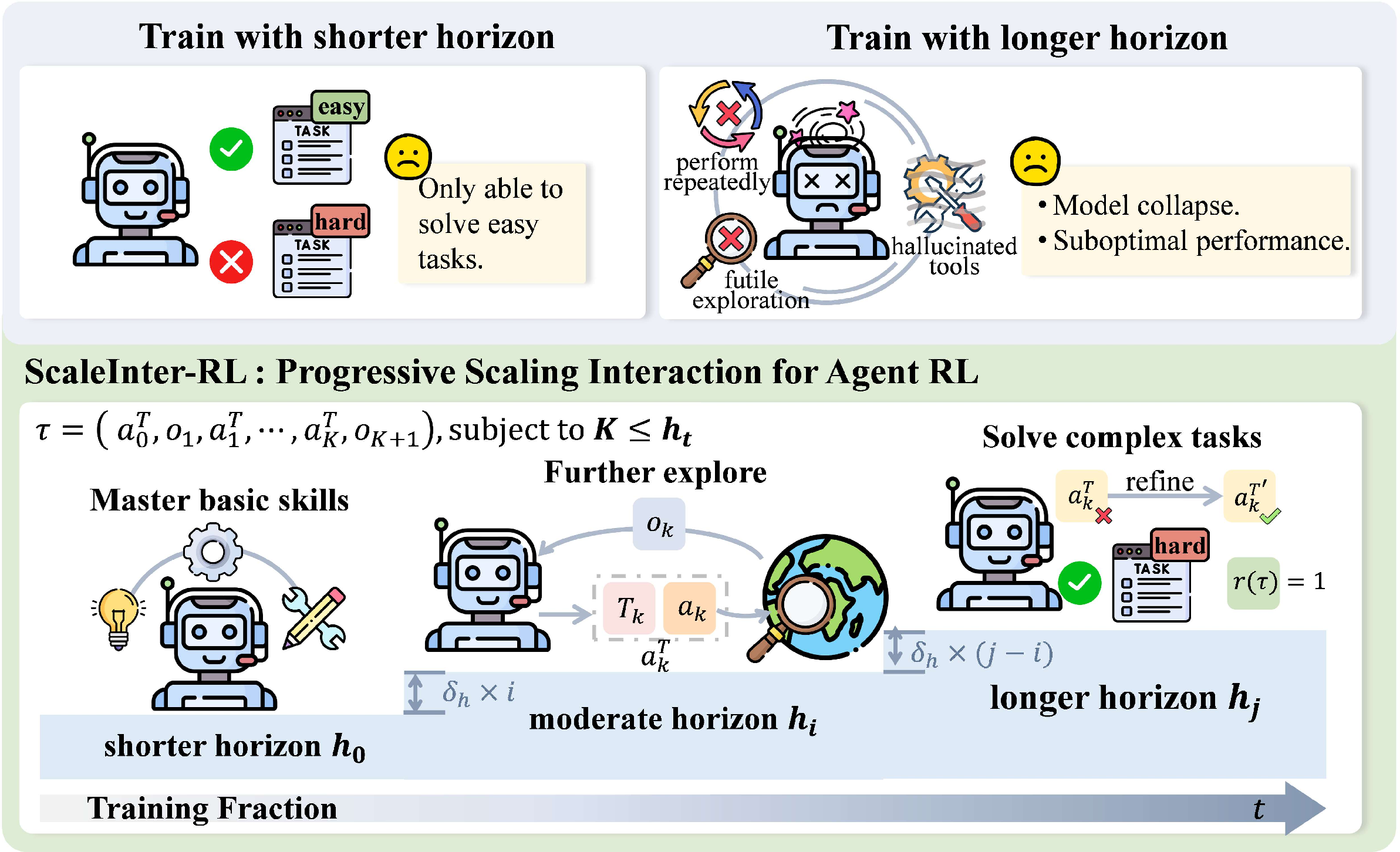

A central methodological contribution is ScalingInter-RL, a curriculum-based RL approach that progressively increases the agent-environment interaction horizon during training. The method is motivated by the observation that large interaction budgets in early training induce instability (high variance, credit assignment issues, overfitting to spurious behaviors), while short horizons limit exploration and skill acquisition.

Figure 3: ScalingInter-RL progressively increases interaction turns, balancing early exploitation with later exploration for robust skill acquisition.

The training schedule starts with a small number of allowed interaction turns (favoring exploitation and rapid mastery of basic skills), then monotonically increases the horizon to promote exploration, planning, and higher-order behaviors. This staged approach aligns the agent's exploration capacity with its evolving policy competence, stabilizing optimization and enabling the emergence of complex behaviors.
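One simple way to realize such a schedule is a step function from training step to the maximum number of allowed turns. The sketch below is an assumption-laden illustration: the function name `max_turns_for_step`, the stage boundaries, and the horizon values are all hypothetical and are not taken from the paper.

```python
from bisect import bisect_right
from typing import Sequence


def max_turns_for_step(
    step: int,
    stage_starts: Sequence[int] = (0, 100, 200),   # illustrative boundaries
    stage_horizons: Sequence[int] = (5, 10, 20),   # illustrative turn caps
) -> int:
    """Return the interaction-turn cap for a given training step.

    Stage i applies from stage_starts[i] until the next stage begins.
    Horizons must be non-decreasing so the cap never shrinks.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    if len(stage_starts) != len(stage_horizons) or not stage_starts:
        raise ValueError("stage_starts and stage_horizons must match and be non-empty")
    if stage_starts[0] != 0 or list(stage_starts) != sorted(stage_starts):
        raise ValueError("stage_starts must be sorted and begin at 0")
    if list(stage_horizons) != sorted(stage_horizons):
        raise ValueError("stage_horizons must be non-decreasing")
    # Index of the last stage whose start is <= step.
    stage = bisect_right(stage_starts, step) - 1
    return stage_horizons[stage]
```

With the default values, steps 0 to 99 allow 5 turns, steps 100 to 199 allow 10, and later steps allow 20. The returned cap would be passed to the rollout routine as its turn limit, so early training sees short episodes and later training sees longer ones.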

Empirical Evaluation and Results

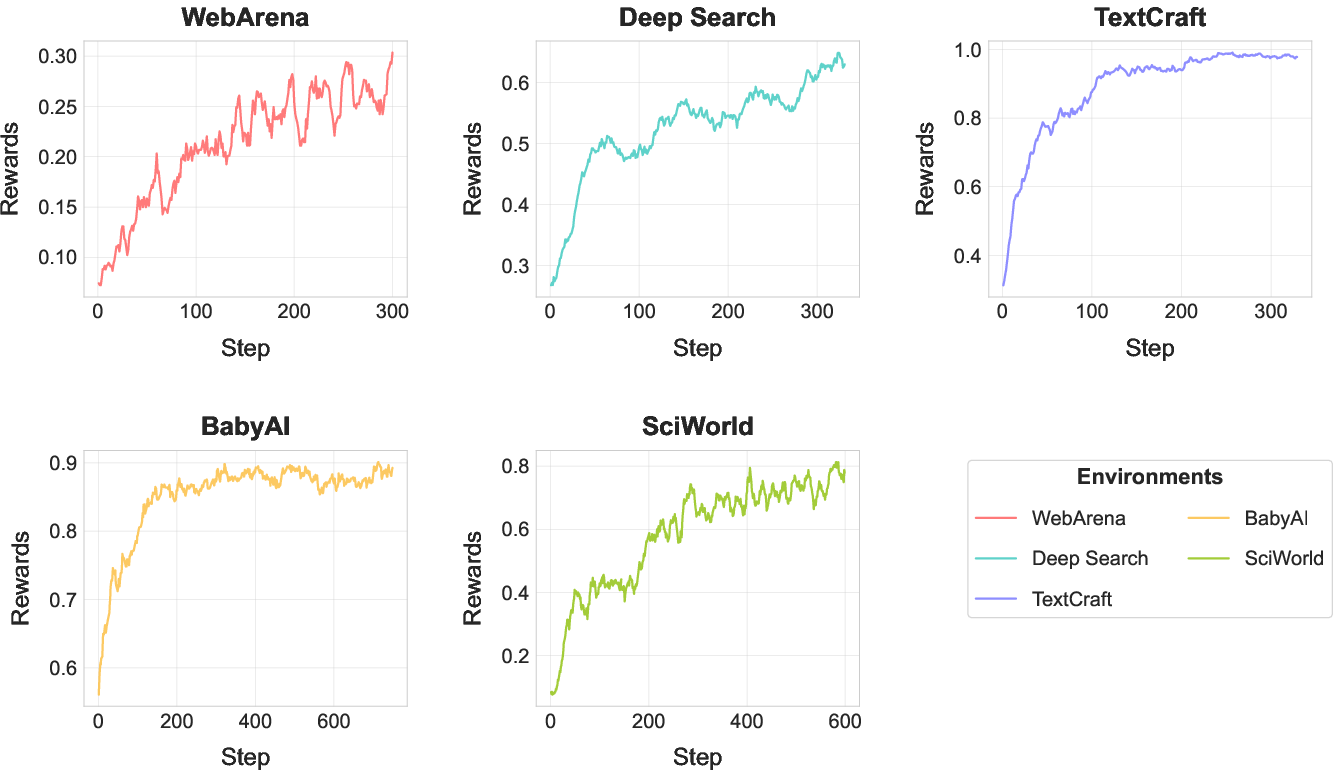

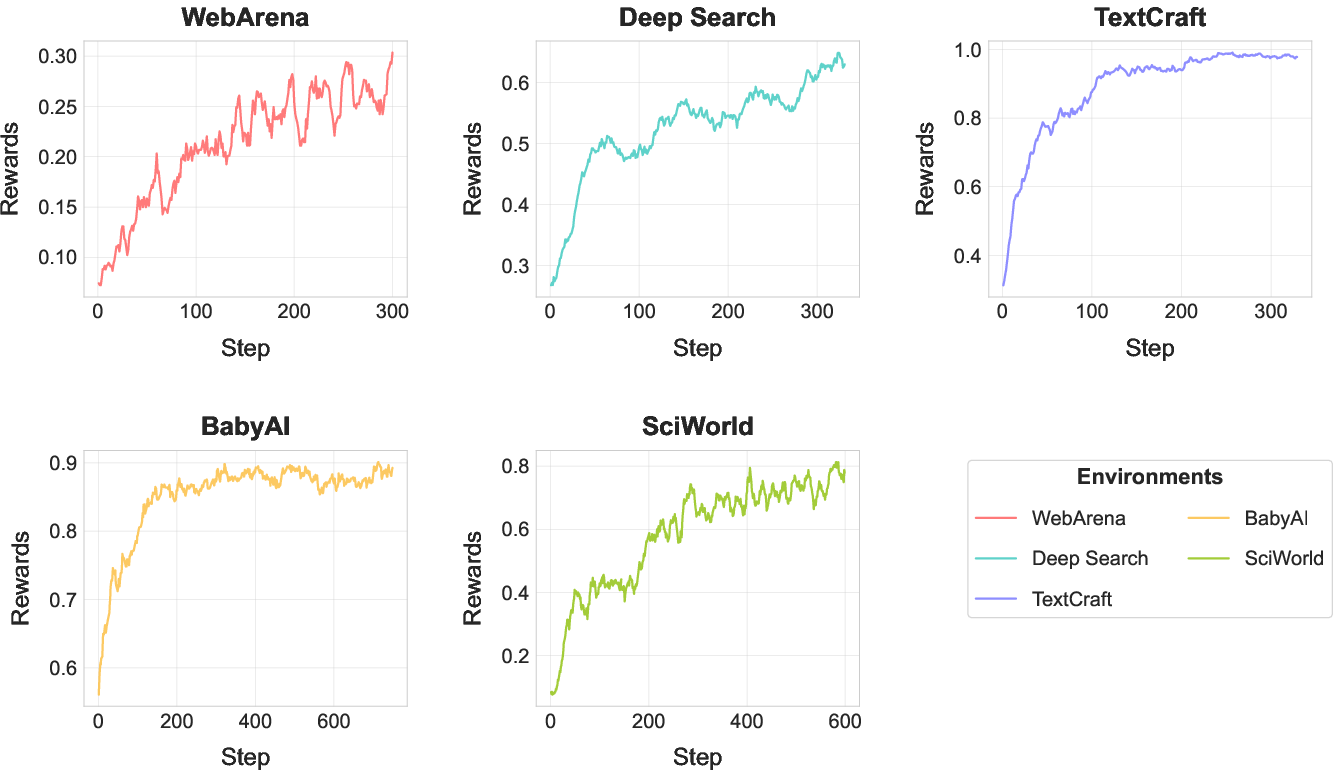

Extensive experiments are conducted across five scenarios: web navigation (WebArena), deep search (RAG-based QA), digital games (TextCraft), embodied tasks (BabyAI), and scientific reasoning (SciWorld). The evaluation benchmarks both open-source and proprietary LLMs, including Qwen-2.5, Llama-3.1, DeepSeek-R1, GPT-4o, and Gemini-2.5-Pro.

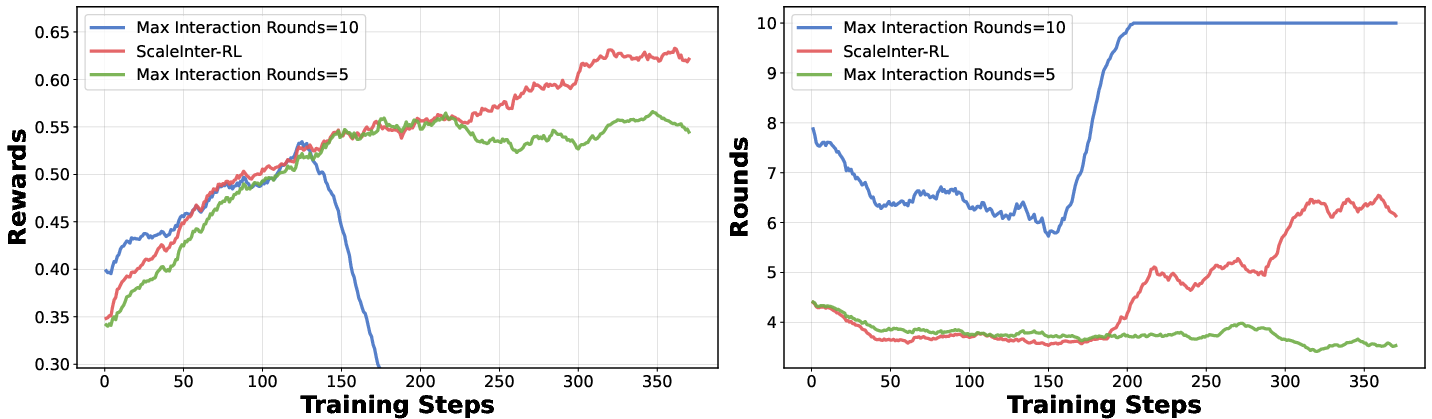

Figure 4: Training reward curves across environments, demonstrating stable and sustained improvements with AgentGym-RL and ScalingInter-RL.

RL Algorithmic Insights

Comparative analysis of RL algorithms (GRPO vs. REINFORCE++) reveals that GRPO consistently outperforms REINFORCE++ across all benchmarks, even at smaller model scales. The advantage is attributed to GRPO's robust handling of high-variance, sparse-reward settings via action advantage normalization and PPO-style clipping, which stabilizes credit assignment and exploration.
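The two ingredients named above can be illustrated with a small sketch. It assumes the commonly described GRPO formulation, in which the rewards of several rollouts for the same task are normalized against their group mean and standard deviation, combined with the standard PPO clipped surrogate. The function names and the default `clip_eps=0.2` are illustrative choices, and the paper's exact implementation may differ.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each rollout's reward against its sampling group.

    Rollouts that beat the group average get a positive advantage,
    the rest a negative one; eps guards against a zero standard deviation.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO-style clipped objective for one sample.

    ratio is pi_new(a|s) / pi_old(a|s). Taking the minimum of the clipped
    and unclipped terms removes the incentive to move the ratio far
    outside [1 - clip_eps, 1 + clip_eps].
    """
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)
```

For a group of four rollouts with rewards `[1.0, 0.0, 0.0, 1.0]`, the successful rollouts receive advantages close to +1 and the failed ones close to -1, even though the raw reward is sparse and binary. If every rollout in a group earns the same reward, all advantages are zero and that group contributes no gradient signal.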

Case Studies and Failure Modes

Qualitative trajectory analyses demonstrate that RL-trained agents exhibit:

- Superior navigation and recovery strategies in web and embodied environments.

- Systematic, compositional task execution in scientific and game-like settings.

- Reduced unproductive behavioral loops and improved error handling.

However, persistent failure modes are identified:

- Over-interaction: RL agents sometimes engage in redundant actions, indicating a gap between state-reaching and efficient action selection.

- Procedural reasoning failures: Intractable tasks (e.g., SciWorld Chem-Mix) expose limitations in deep procedural understanding and systematic exploration.

Implications and Future Directions

AgentGym-RL establishes a robust foundation for research on agentic LLMs, enabling reproducible, large-scale RL experiments across heterogeneous environments. The results demonstrate that RL, particularly when combined with progressive interaction scaling, can unlock agentic intelligence in open-source models and close the gap with proprietary systems.

Practical implications include:

- Open-source agentic RL research is now feasible at scale, lowering the barrier for community-driven advances.

- Curriculum-based interaction scaling is essential for stable, efficient RL optimization in long-horizon, multi-turn settings.

- Algorithmic choices (e.g., GRPO) are more impactful than model scaling in sparse-reward, high-variance environments.

Theoretical implications point to the need for:

- Generalization and transfer: Current agents excel in-domain; future work should address cross-environment and tool adaptation.

- Scaling to physically grounded, real-world tasks: Richer sensory inputs and larger action spaces present new RL and infrastructure challenges.

- Multi-agent RL: Extending the framework to multi-agent settings may yield further gains but introduces additional complexity.

Conclusion

AgentGym-RL provides a unified, extensible RL framework for training LLM agents in long-horizon, multi-turn decision-making tasks. The introduction of ScalingInter-RL addresses the exploration-exploitation trade-off and stabilizes RL optimization, enabling open-source models to achieve or exceed the performance of proprietary systems across diverse environments. The work highlights the importance of curriculum-based interaction scaling, robust RL algorithms, and environment structure in advancing agentic intelligence. Future research should focus on generalization, real-world grounding, and multi-agent extensions to further advance the capabilities of autonomous LLM agents.