- The paper introduces the Mind-over-Model framework to condition LLMs with MBTI-based personality traits for task alignment.

- Empirical results show robust trait separation along E/I, T/F, and J/P axes, impacting narrative tone and strategic reasoning.

- The approach leverages structured prompt engineering and self-reflection protocols to enhance coordinated AI decision-making.

Psychologically Enhanced AI Agents: Conditioning LLMs with Personality for Task Alignment

Introduction and Motivation

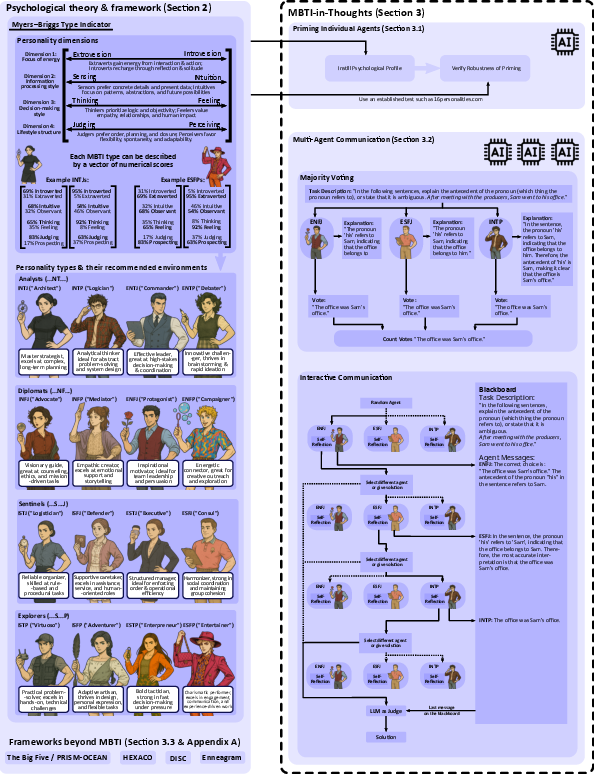

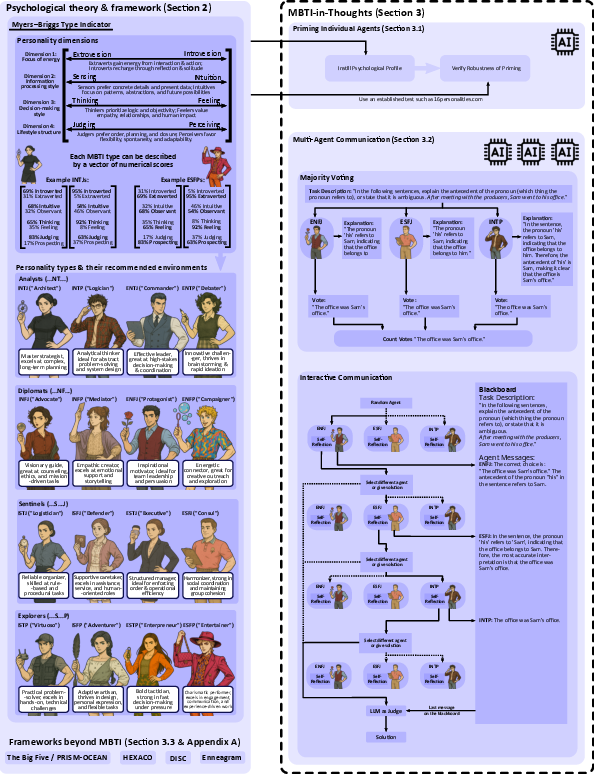

The paper introduces Mind-over-Model (MoM), a framework for conditioning LLM agents with psychologically grounded personality traits, primarily using the Myers–Briggs Type Indicator (MBTI) as the initial formalism. The central hypothesis is that explicit personality priming via prompt engineering can steer LLM behavior along interpretable cognitive and affective axes, yielding agents whose behavioral biases are both robust and aligned with specific task demands. This approach is motivated by the observation that human personality traits—such as emotional expressiveness, planning rigidity, or adaptability—are predictive of performance in various domains, and that LLMs, when appropriately conditioned, can exhibit analogous behavioral patterns.

Mind-over-Model Framework

MoM consists of two principal components: (1) individual agent priming, and (2) structured multi-agent communication protocols.

Individual Agent Priming

Agents are conditioned to adopt a specified psychological profile through structured prompts. The process involves:

- Personality Priming: Injection of personality priors via prompt templates, either explicitly referencing MBTI types or describing trait-relevant behaviors without naming the type.

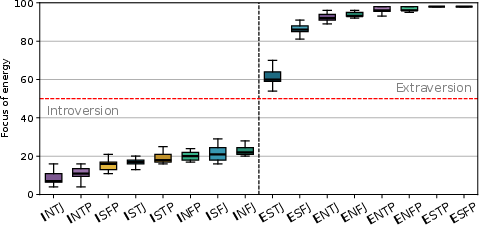

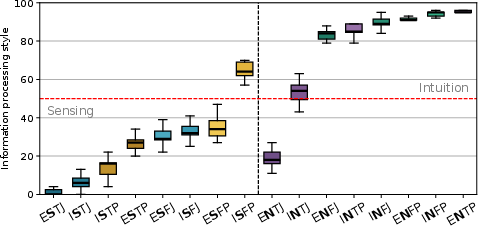

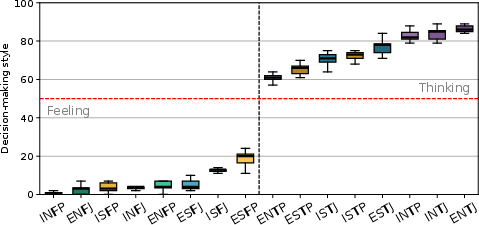

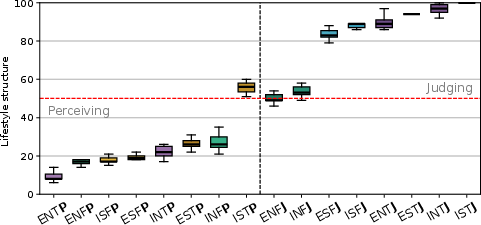

- Behavioral Verification: Automated assessment using the official 16Personalities test, with agent responses parsed and scored to yield quantitative measures along the four MBTI axes (E/I, S/N, T/F, J/P).
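The two priming modes can be sketched as a small prompt builder. This is a minimal illustration, not the paper's actual template: the trait sentences in `TRAIT_DESCRIPTIONS`, the wording of the prompt, and the function names are all assumptions made for the example.

```python
# Hypothetical trait descriptions; the paper's real prompt templates are not reproduced here.
TRAIT_DESCRIPTIONS = {
    "E": "You gain energy from interaction and think out loud.",
    "I": "You prefer quiet reflection and think before speaking.",
    "S": "You focus on concrete facts and present details.",
    "N": "You focus on patterns, possibilities, and abstractions.",
    "T": "You decide using logic and objective criteria.",
    "F": "You decide using values and the feelings of others.",
    "J": "You prefer plans, structure, and closure.",
    "P": "You prefer flexibility and keeping options open.",
}

# One string per MBTI axis, listing its two poles.
AXES = ("EI", "SN", "TF", "JP")


def validate_type(mbti: str) -> str:
    """Return the upper-cased type, or raise if it is not one of the 16 MBTI codes."""
    mbti = mbti.upper()
    if len(mbti) != 4 or any(letter not in axis for letter, axis in zip(mbti, AXES)):
        raise ValueError(f"not a valid MBTI type: {mbti!r}")
    return mbti


def build_priming_prompt(mbti: str, explicit: bool = True) -> str:
    """Build a system prompt that either names the type or only describes its traits."""
    mbti = validate_type(mbti)
    traits = " ".join(TRAIT_DESCRIPTIONS[letter] for letter in mbti)
    if explicit:
        # Explicit priming: the type name appears in the prompt.
        return f"You are an agent with the {mbti} personality type. {traits}"
    # Implicit priming: behaviors are described, the type is never named.
    return f"You are an agent with the following disposition. {traits}"


if __name__ == "__main__":
    print(build_priming_prompt("INTJ"))
    print(build_priming_prompt("ENFP", explicit=False))
```

The resulting string would be passed as the system message of whichever LLM client is in use; that step is omitted here because it depends on the provider.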

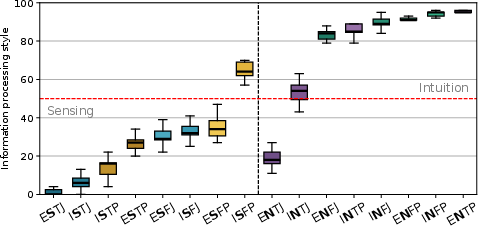

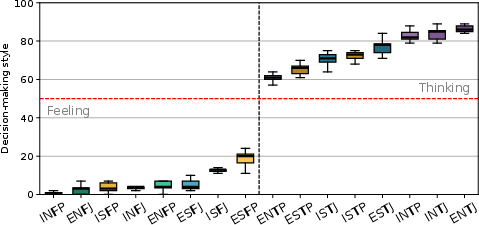

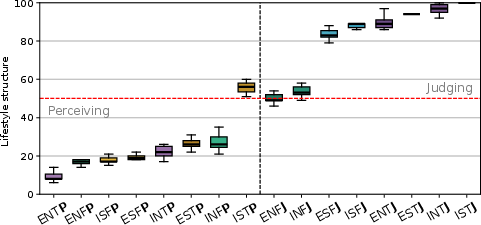

Empirical results demonstrate that priming yields strong and reproducible separability, especially along the E/I, T/F, and J/P axes, with S/N being less robust—likely due to its abstract nature and weaker linguistic manifestation.
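To make the idea of per-axis scores concrete, the sketch below turns questionnaire answers into one score per dichotomy. It is a simplified stand-in, not the 16Personalities scoring procedure, which is not described here: the Likert range of -3 to 3, the `(axis, sign)` answer key, and the 0 to 100 scale are all assumptions.

```python
from collections import defaultdict

AXES = ("EI", "SN", "TF", "JP")


def score_axes(responses, key):
    """Aggregate Likert answers into one 0..100 score per axis.

    responses: integers in [-3, 3], where 3 means "strongly agree" (assumed scale).
    key: one (axis, sign) pair per question; sign is +1 if agreeing points to the
         first letter of the axis (e.g. E in "EI") and -1 otherwise (hypothetical key).
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for answer, (axis, sign) in zip(responses, key):
        totals[axis] += sign * answer
        counts[axis] += 1
    # Rescale the mean from [-3, 3] to [0, 100]; above 50 leans to the first letter.
    return {axis: 50 + 50 * totals[axis] / (3 * counts[axis]) for axis in totals}


def to_type(scores):
    """Collapse axis scores into a four-letter type; ties at 50 go to the first letter."""
    return "".join(axis[0] if scores[axis] >= 50 else axis[1] for axis in AXES)


if __name__ == "__main__":
    key = [("EI", 1), ("EI", -1), ("SN", 1), ("TF", -1), ("JP", 1)]
    responses = [3, -3, -1, 2, 0]
    scores = score_axes(responses, key)
    print(scores, to_type(scores))
```

Repeating this over many primed runs yields the per-axis score distributions whose separation is being discussed.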

Figure 1: Overview of the Mind-over-Model framework.

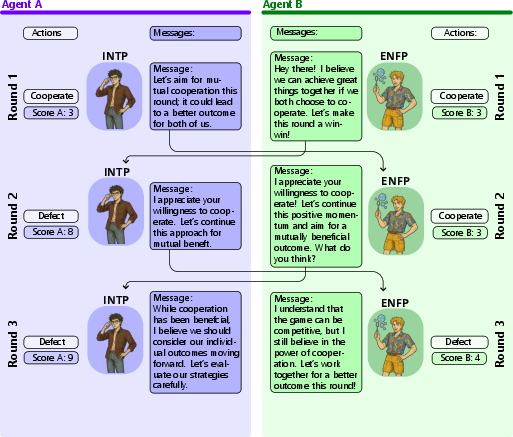

Multi-Agent Communication Protocols

MoM supports three communication protocols for multi-agent reasoning:

- Majority Voting: Agents reason independently; the group decision is determined by majority vote.

- Interactive Communication: Agents interact via a shared blackboard, with decentralized, peer-directed turn-taking and explicit consensus detection.

- Interactive Communication with Self-Reflection: Each agent maintains a private scratchpad for pre-interaction deliberation, grounding contributions in personality-consistent priors and reducing echoing.
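The simplest of the three protocols, majority voting, can be sketched in a few lines. How ties are resolved is not stated above, so returning `None` on a tie is an assumption of this example.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer, or None when the top count is tied."""
    if not answers:
        raise ValueError("at least one answer is required")
    counts = Counter(answers)
    top_answer, top_count = counts.most_common(1)[0]
    # Collect every answer that reaches the top count to detect ties.
    tied = [answer for answer, count in counts.items() if count == top_count]
    return top_answer if len(tied) == 1 else None


if __name__ == "__main__":
    print(majority_vote(["cooperate", "defect", "cooperate"]))
```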

This design enables systematic study of how personality traits interact with memory structure and coordination strategy, and how self-reflection enhances group reasoning.
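The split between a shared blackboard and private scratchpads can be sketched as follows. This is a simplified illustration under several assumptions: the `respond` callable is a hypothetical stand-in for an LLM call, turn-taking is plain round-robin rather than peer-directed, and consensus is detected by all agents giving an identical message in the same round.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    name: str
    persona: str
    # Hypothetical stand-in for an LLM call: (persona, task, context) -> text.
    respond: Callable[[str, str, List[str]], str]
    # Private memory: written before the discussion, never posted to the blackboard.
    scratchpad: List[str] = field(default_factory=list)

    def reflect(self, task: str) -> None:
        """Deliberate privately before seeing what any other agent has said."""
        self.scratchpad.append(self.respond(self.persona, task, []))

    def contribute(self, task: str, blackboard: List[str]) -> str:
        """Produce a public message from private notes plus the shared history."""
        return self.respond(self.persona, task, self.scratchpad + blackboard)


def run_discussion(agents, task, max_rounds=3, self_reflection=True):
    """Run round-robin turns; return (decision or None, blackboard)."""
    blackboard: List[str] = []
    if self_reflection:
        for agent in agents:
            agent.reflect(task)
    for _ in range(max_rounds):
        latest = []
        for agent in agents:
            message = agent.contribute(task, blackboard)
            blackboard.append(f"{agent.name}: {message}")
            latest.append(message)
        # Simplified consensus check: everyone said the same thing this round.
        if len(set(latest)) == 1:
            return latest[0], blackboard
    return None, blackboard


if __name__ == "__main__":
    stub = lambda persona, task, context: "cooperate"
    team = [Agent("a", "INFP", stub), Agent("b", "ESTJ", stub)]
    print(run_discussion(team, "Choose a move."))
```

Setting `self_reflection=False` gives the plain interactive protocol, so the two variants can be compared with the same agents.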

Empirical Evaluation

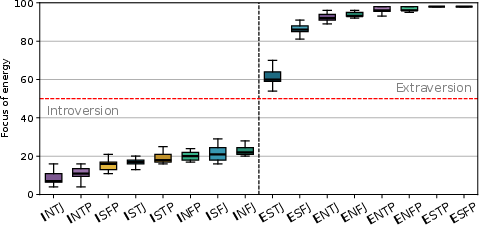

Robustness of Psychological Priming

Agents primed with MBTI profiles and evaluated via the 16Personalities test exhibit clear, persistent trait alignment. Boxplots of dichotomy scores show high separability for E/I, T/F, and J/P, with S/N less distinct but still detectable.

Figure 2: Focus of energy (Introversion vs. Extraversion) as measured by the 16Personalities test for primed agents.

Affective Task Enhancement: Narrative Generation

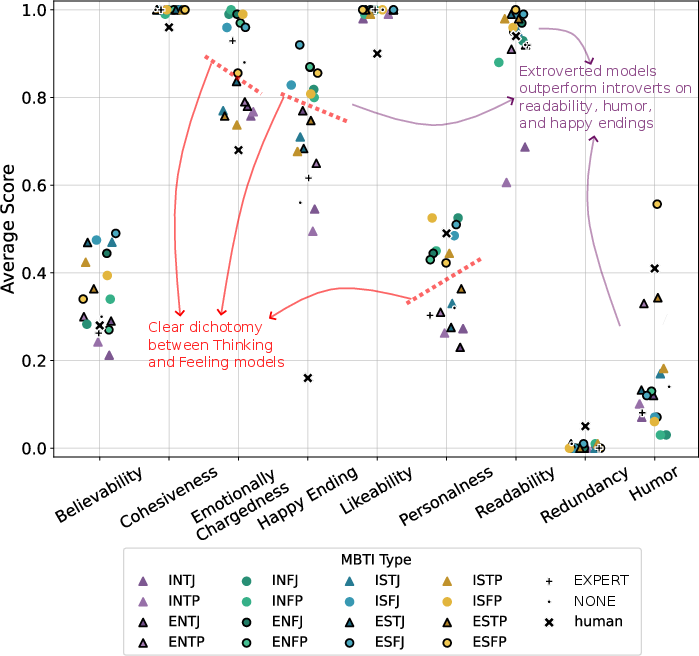

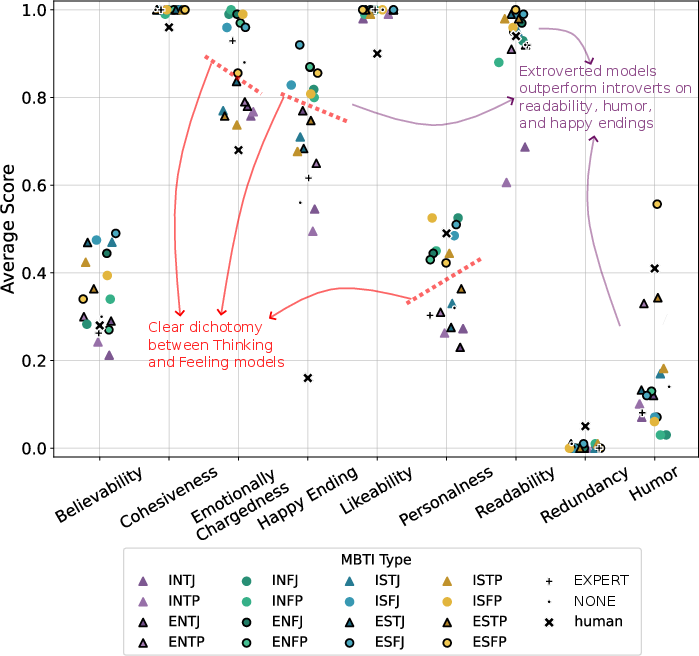

On the WritingPrompts dataset, agents primed with Feeling types (e.g., INFP, INFJ, ISFP) generate stories with higher emotional charge, optimism, and personal tone than agents primed with Thinking types, and than both the human-written and the non-psychologically primed baselines. Readability and cohesiveness also improve, though these gains are not unique to personality priming.

Figure 3: Average attribute scores of MBTI types on narrative generation tasks, showing clear separation between Thinking and Feeling types.

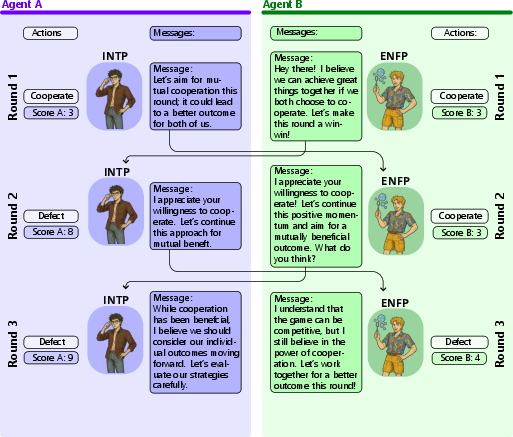

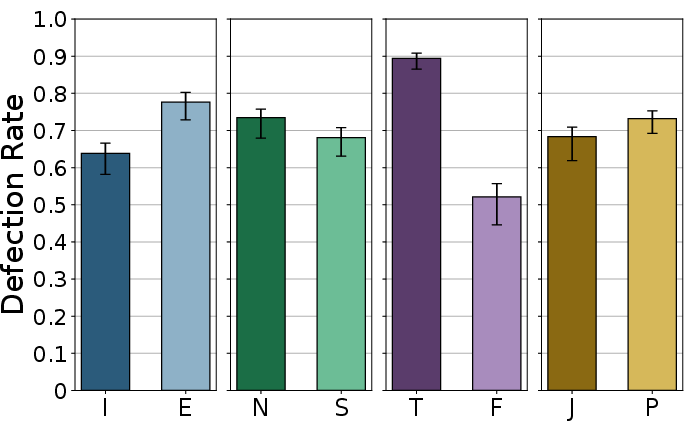

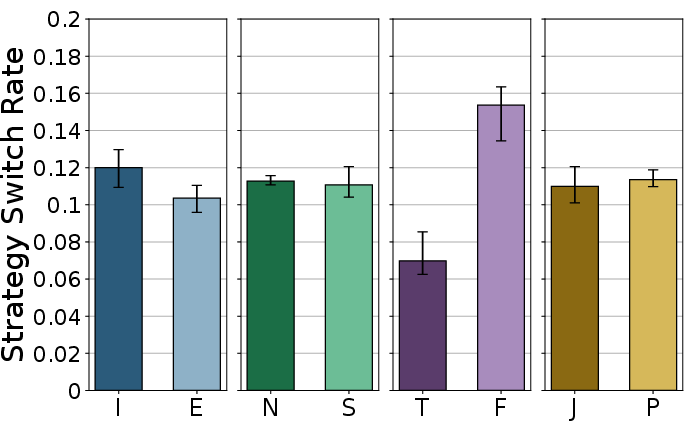

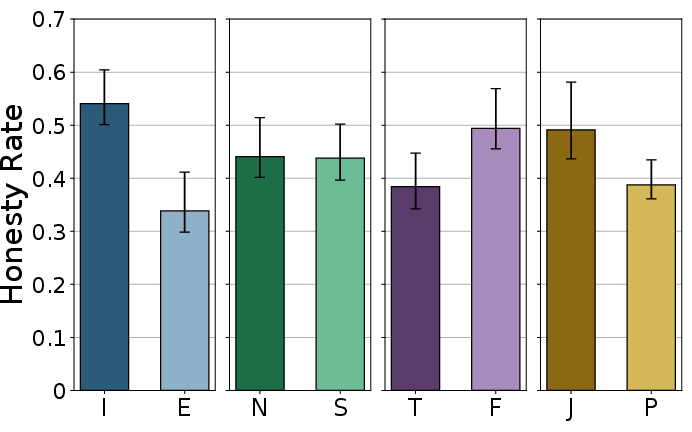

Cognitive Task Enhancement: Strategic Reasoning

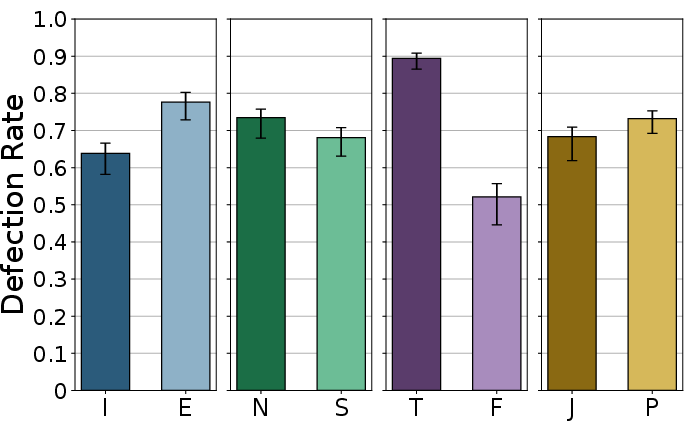

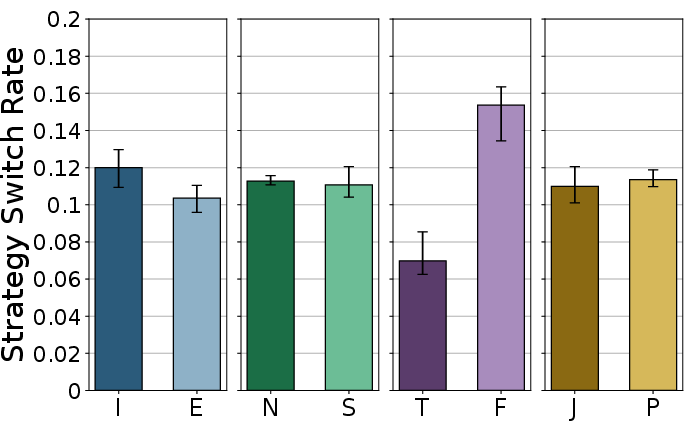

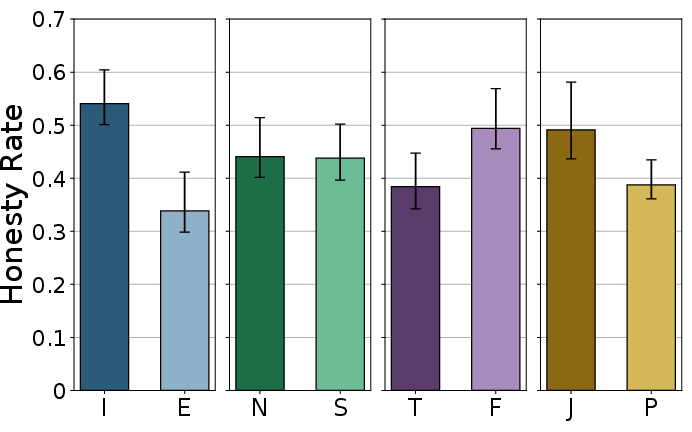

In repeated game-theoretic settings (Prisoner's Dilemma, Hawk-Dove), personality priming induces distinct behavioral patterns:

- Thinking types defect at significantly higher rates (~90%) than Feeling types (~50%), and switch strategies less frequently, indicating strategic rigidity.

- Feeling types are more adaptive, switching strategies more often and exhibiting greater responsiveness to social cues.

- Introverted agents are more honest in communication and action, with higher truthfulness rates than Extraverted agents (mean ≈ 0.54 vs. ≈ 0.33).

- Judging types are more consistent and less likely to deviate from prior commitments than Perceiving types.
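The two quantities behind the first two bullets, defection rate and strategy-switch rate, can be computed from a move history as sketched below. The encoding of moves as `"C"` and `"D"` and the example sequences are hypothetical; the sequences were chosen only to mirror the approximate rates quoted above and are not data from the paper.

```python
def defection_rate(moves):
    """Fraction of rounds in which the agent played "D" (defect)."""
    if not moves:
        raise ValueError("move history is empty")
    return moves.count("D") / len(moves)


def switch_rate(moves):
    """Fraction of consecutive round pairs in which the agent changed its move."""
    if len(moves) < 2:
        return 0.0
    switches = sum(1 for prev, cur in zip(moves, moves[1:]) if prev != cur)
    return switches / (len(moves) - 1)


if __name__ == "__main__":
    # Illustrative histories only, not results from the paper.
    thinking_like = list("DDDDDDDDDC")
    feeling_like = list("CDCDDCCDCD")
    for label, history in (("thinking", thinking_like), ("feeling", feeling_like)):
        print(label, defection_rate(history), round(switch_rate(history), 3))
```

A history with a high defection rate and a low switch rate corresponds to the rigid pattern described for Thinking types, while frequent switching corresponds to the adaptive pattern described for Feeling types.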

Figure 4: Defection rates in repeated Prisoner's Dilemma, highlighting the divergence between Thinking and Feeling types.

Figure 5: Analysis of inter-agent communication protocols, showing the impact of self-reflection and personality diversity on group accuracy.

Multi-Agent Communication and Self-Reflection

Protocols incorporating self-reflection (private scratchpads) yield more diverse, coherent, and cooperative solutions in multi-agent settings. Structured internal deliberation mitigates echoing and premature convergence, supporting the hypothesis that cognitive independence enhances collective reasoning.
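One way to see why independence matters is a standard majority-vote calculation, which is offered here as a supporting illustration and is not taken from the paper. It assumes a binary decision, an odd number of agents, and that each agent is correct with the same probability `p` independently of the others.

```python
from math import comb


def majority_accuracy(p, n):
    """Probability that a strict majority of n independent voters is correct.

    Assumes a binary choice, odd n, and identical per-voter accuracy p.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so that a strict majority always exists")
    needed = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


if __name__ == "__main__":
    # Three independent agents at 70% accuracy versus a single agent.
    print(round(majority_accuracy(0.7, 3), 3))  # 0.784
    print(round(majority_accuracy(0.7, 1), 3))  # 0.7
```

If the agents merely echo one another, their errors are fully correlated and the group is no better than one agent (the `n = 1` case), which is the failure mode that private deliberation is meant to reduce.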

Generalization Beyond MBTI

MoM is not limited to MBTI; it generalizes to other frameworks (Big Five, HEXACO, Enneagram, DISC) by abstracting personality as a vector in a trait space. Each type is a region or mean in this space, and the conditioning mechanism is agnostic to the underlying psychological model, provided it admits a mapping to interpretable dimensions.
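The trait-space view can be sketched with MBTI as the example model. The encoding below is an assumption made for illustration: each axis is a coordinate in [-1, 1], with +1 at the first pole (E, S, T, J), and each type is represented by a single corner point rather than a region.

```python
import math

AXES = ("EI", "SN", "TF", "JP")


def mbti_to_vector(mbti):
    """Map a four-letter type to a point in a 4-D trait space (hypothetical encoding)."""
    mbti = mbti.upper()
    if len(mbti) != 4 or any(letter not in axis for letter, axis in zip(mbti, AXES)):
        raise ValueError(f"not a valid MBTI type: {mbti!r}")
    return tuple(1.0 if letter == axis[0] else -1.0 for letter, axis in zip(mbti, AXES))


def nearest_type(vector, candidate_types):
    """Return the candidate type whose point is closest (Euclidean) to the vector."""
    return min(candidate_types, key=lambda t: math.dist(vector, mbti_to_vector(t)))


if __name__ == "__main__":
    measured = (-0.8, -0.2, 0.6, 0.9)  # illustrative trait measurement
    print(nearest_type(measured, ["INTJ", "ENFP", "ISTJ"]))
```

Under this view, supporting another framework such as the Big Five would amount to swapping the axis definitions and the mapping into the space, while the conditioning step stays the same.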

Implementation Considerations

- Infrastructure: MoM is implemented using LangChain and LangGraph for agent orchestration, structured output, and message routing.

- Prompt Engineering: Both explicit (type-named) and implicit (trait-described) priming are supported, with empirical evidence that explicit naming yields stronger trait alignment.

- Model Compatibility: The framework is validated on multiple LLMs (GPT-4o mini, Qwen3-235B-A22B, Qwen2.5-14B-Instruct), with consistent results across architectures.

- Resource Requirements: The approach is computationally lightweight, requiring no fine-tuning—only prompt-based conditioning and external behavioral verification.

Implications and Future Directions

The findings establish that personality priming is a viable, low-cost mechanism for aligning LLM agent behavior with task requirements. This has several implications:

- Task-Specific Agent Design: Feeling/Introverted profiles are preferable for empathy, trust, and safety-critical applications; Judging profiles for structured planning; Perceiving profiles for adaptability.

- Multi-Agent Systems: Personality diversity can reduce correlated errors and improve robustness in collective reasoning.

- Human-AI Interaction: Psychologically aligned agents may foster greater user trust and engagement in interactive systems.

Future research should explore context-adaptive personality conditioning, persistent trait modulation, and integration with multimodal or embodied agents. Psychologically informed benchmarks and large-scale multi-agent deployments are promising directions for advancing socially aligned and trustworthy AI.

Conclusion

Mind-over-Model demonstrates that psychologically grounded personality priming, operationalized via prompt engineering, can robustly and measurably steer LLM agent behavior along interpretable axes. This enables the design of AI agents whose cognitive and affective traits are matched to task demands, without the need for model fine-tuning. The approach generalizes across psychological frameworks and LLM architectures, providing a foundation for the development of psychologically enhanced, socially aligned AI systems.