RL's Razor: Why Online Reinforcement Learning Forgets Less (2509.04259v1)

Abstract: Comparison of fine-tuning models with reinforcement learning (RL) and supervised fine-tuning (SFT) reveals that, despite similar performance at a new task, RL preserves prior knowledge and capabilities significantly better. We find that the degree of forgetting is determined by the distributional shift, measured as the KL-divergence between the fine-tuned and base policy evaluated on the new task. Our analysis reveals that on-policy RL is implicitly biased towards KL-minimal solutions among the many that solve the new task, whereas SFT can converge to distributions arbitrarily far from the base model. We validate these findings through experiments with LLMs and robotic foundation models and further provide theoretical justification for why on-policy RL updates lead to a smaller KL change. We term this principle *RL's Razor*: among all ways to solve a new task, RL prefers those closest in KL to the original model.


Summary

- The paper demonstrates that on-policy reinforcement learning reduces catastrophic forgetting because it is implicitly biased toward solutions with small KL divergence from the base policy.

- Empirical results reveal that RL retains prior knowledge while achieving comparable new-task improvements to supervised fine-tuning.

- The study establishes a strong quadratic relationship between KL divergence and forgetting, offering insights for continual learning design.

RL's Razor: Mechanisms Underlying Reduced Forgetting in On-Policy Reinforcement Learning

Introduction

The paper "RL's Razor: Why Online Reinforcement Learning Forgets Less" (2509.04259) presents a systematic analysis of catastrophic forgetting in foundation models during post-training adaptation. The authors compare supervised fine-tuning (SFT) and on-policy reinforcement learning (RL), demonstrating that RL preserves prior knowledge more effectively, even when both methods achieve similar performance on new tasks. The central claim is that the degree of forgetting is governed by the KL divergence between the fine-tuned and base policy, measured on the new task distribution. RL's on-policy nature implicitly biases solutions toward minimal KL divergence, a principle termed "RL's Razor." This essay provides a technical summary of the paper's findings, empirical results, theoretical contributions, and implications for continual learning in AI.

Empirical Analysis: RL vs. SFT in Catastrophic Forgetting

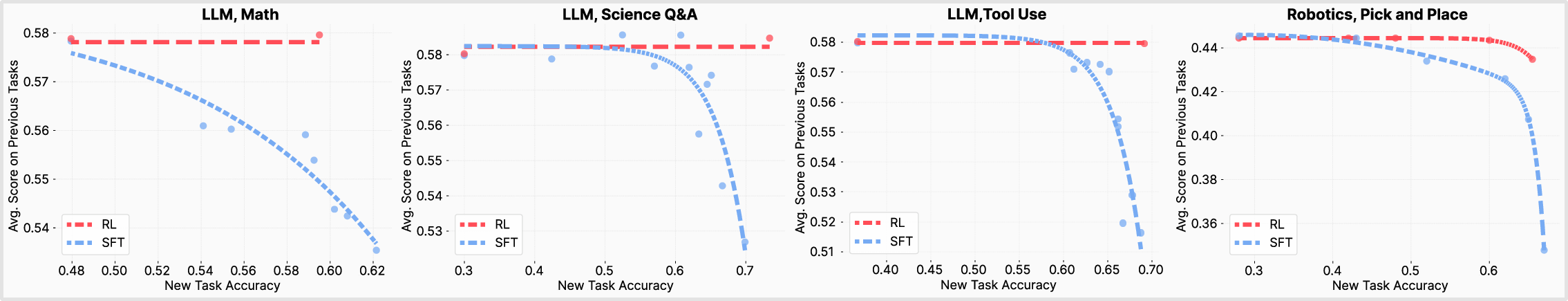

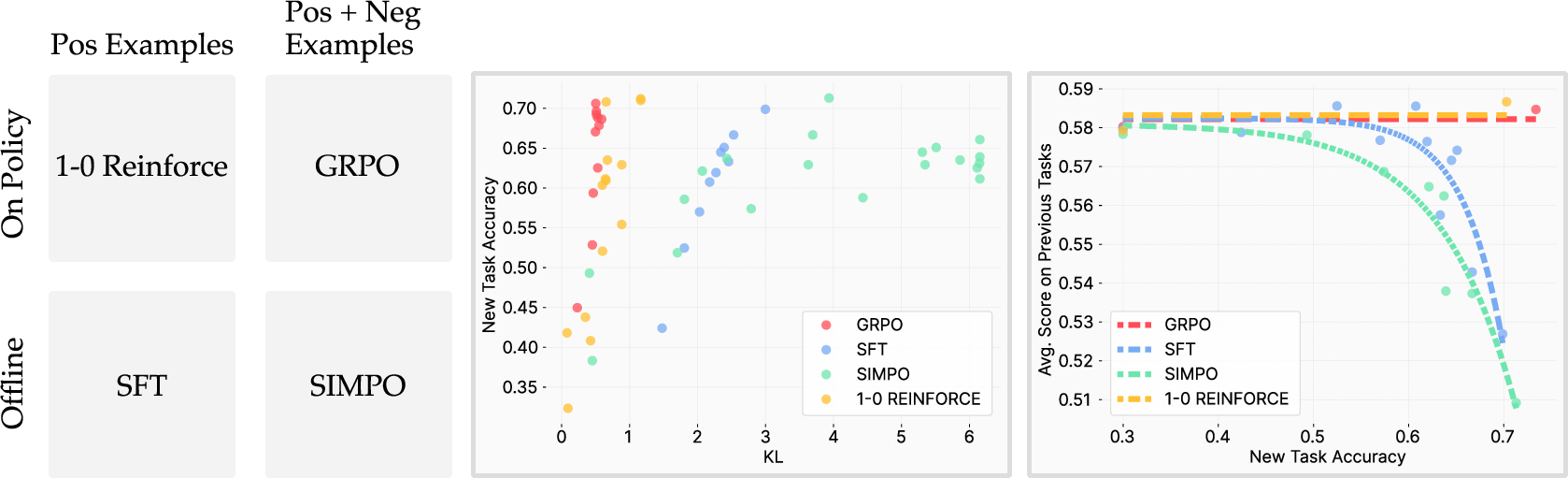

The authors conduct extensive experiments across LLMs and robotic foundation models, evaluating the trade-off between new-task performance and retention of prior capabilities. Models are fine-tuned on new tasks using both SFT and RL (specifically GRPO), and their performance is measured on a suite of unrelated benchmarks to quantify forgetting.

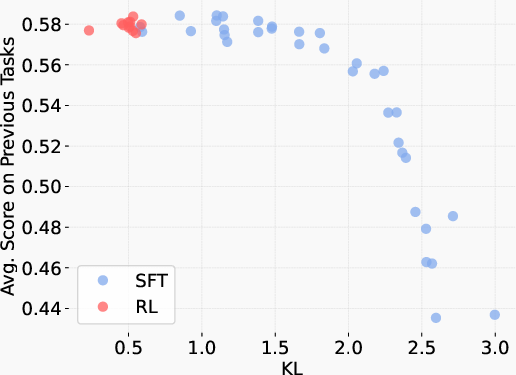

RL consistently achieves new-task improvements while maintaining prior knowledge, whereas SFT's gains on the new task are accompanied by substantial degradation of prior abilities. This is visualized via Pareto frontiers, where RL's curve dominates SFT's, indicating superior retention at matched new-task accuracy.

Figure 1: Pareto frontiers of RL and SFT, showing RL maintains prior knowledge while SFT sacrifices it for new-task gains.
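The Pareto comparison described above can be reproduced from any set of checkpoints scored on both axes. The following is a minimal sketch, not the authors' evaluation code: the function name `pareto_frontier` and the checkpoint scores in `runs` are hypothetical and chosen only for illustration.

```python
def pareto_frontier(points):
    """Return the points that no other point beats on both axes.

    Each point is (new_task_accuracy, prior_task_accuracy); higher is better.
    """
    frontier = []
    best_prior = float("-inf")
    # Sweep from highest to lowest new-task accuracy and keep a point only
    # if it improves on the best prior-task accuracy seen so far.
    for new_acc, prior_acc in sorted(points, key=lambda p: (-p[0], -p[1])):
        if prior_acc > best_prior:
            frontier.append((new_acc, prior_acc))
            best_prior = prior_acc
    return sorted(frontier)


# Hypothetical checkpoint scores, NOT results from the paper.
runs = [(0.60, 0.80), (0.70, 0.78), (0.70, 0.70), (0.85, 0.60), (0.80, 0.55)]
frontier = pareto_frontier(runs)
print(frontier)
```

One method's frontier dominates another's when, at every matched new-task accuracy, it sits at a higher prior-task accuracy.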

The empirical gap is most pronounced in tasks with multiple valid output distributions (e.g., generative tasks), where RL's on-policy updates constrain the model to solutions close to the base policy, while SFT can converge to arbitrarily distant distributions depending on the annotation source.

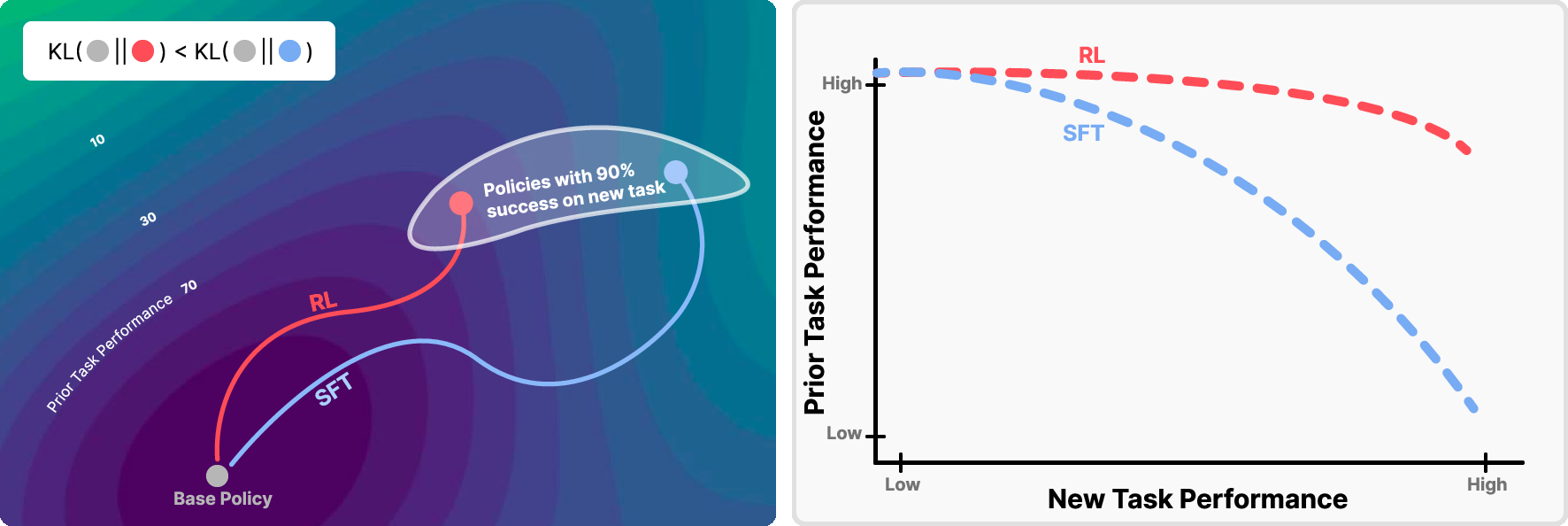

Figure 2: RL converges to KL-minimal solutions among policies that solve the new task, yielding higher prior-task retention compared to SFT.

KL Divergence as a Predictor of Forgetting

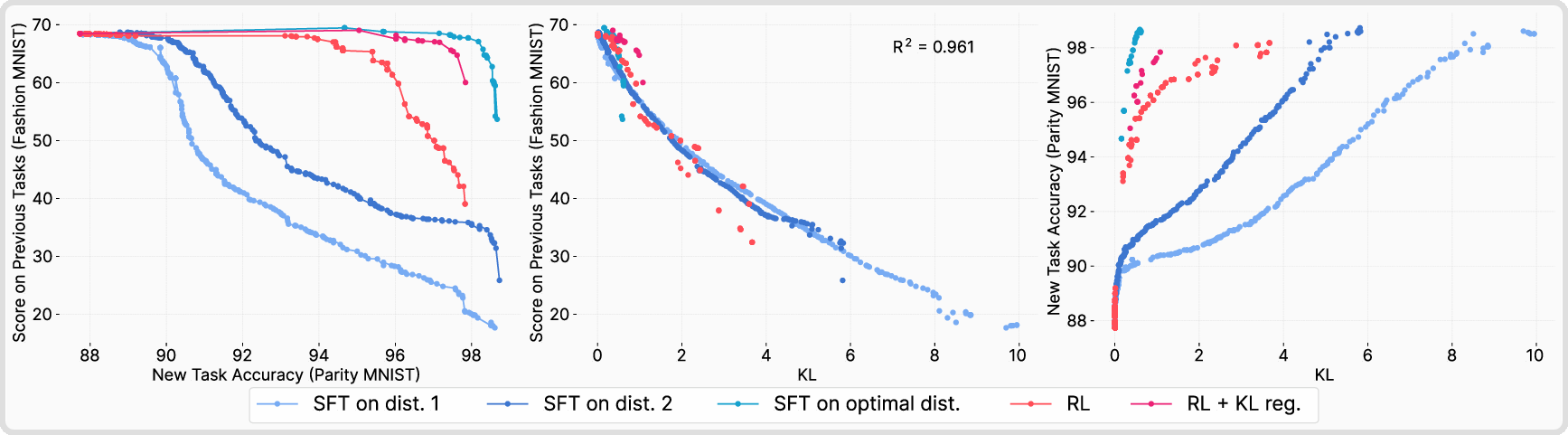

A key contribution is the identification of an empirical "forgetting law": the KL divergence between the fine-tuned and base policy, evaluated on the new task, reliably predicts the degree of catastrophic forgetting. This relationship holds across models, domains, and training algorithms, with a quadratic fit achieving $R^2 = 0.96$ in controlled settings and $R^2 = 0.71$ in LLM experiments.

Figure 3: Forgetting aligns to a single curve when plotted against KL divergence, showing KL as a strong predictor across methods.
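To make the quadratic fit and its $R^2$ concrete, here is a small sketch in plain Python. It assumes forgetting is modeled as a degree-2 polynomial in KL and solves the least-squares normal equations directly. The `kl_values` and `forgetting` arrays are synthetic placeholders, not measurements from the paper, and the helper names are illustrative.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit y ~ a*x^2 + b*x + c via the 3x3 normal equations."""
    S = [sum(x ** k for x in xs) for k in range(5)]                  # sums of x^k
    T = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]  # sums of y*x^k
    # Augmented matrix for the unknowns (a, b, c).
    A = [
        [S[4], S[3], S[2], T[2]],
        [S[3], S[2], S[1], T[1]],
        [S[2], S[1], S[0], T[0]],
    ]
    for col in range(3):
        # Gauss-Jordan elimination with partial pivoting.
        pivot = max(range(col, 3), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(3):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [v - factor * p for v, p in zip(A[r], A[col])]
    return tuple(A[i][3] / A[i][i] for i in range(3))


def r_squared(xs, ys, coeffs):
    """Coefficient of determination of the fitted quadratic."""
    a, b, c = coeffs
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (a * x * x + b * x + c)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Synthetic placeholder data: KL on the new task vs. drop in prior-task score.
kl_values = [0.0, 0.1, 0.2, 0.4, 0.8]
forgetting = [0.01, 0.05, 0.195, 0.51, 1.685]

coeffs = fit_quadratic(kl_values, forgetting)
r2 = r_squared(kl_values, forgetting, coeffs)
print(coeffs, r2)
```

A high $R^2$ on such a plot says only that one curve in KL describes the points well; it does not by itself explain why KL drives forgetting.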

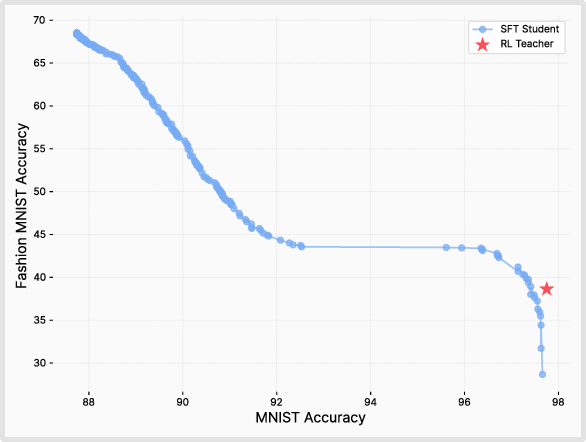

The authors validate this principle in a toy ParityMNIST setting, where RL and SFT are compared under full convergence. SFT trained on an oracle distribution that minimizes KL divergence achieves even less forgetting than RL, confirming that KL minimization, not the optimization path, governs retention.

Figure 4: SFT distillation from an RL teacher matches the teacher's accuracy-forgetting trade-off, indicating the final distribution is what matters.

On-Policy Training and KL-Minimal Solutions

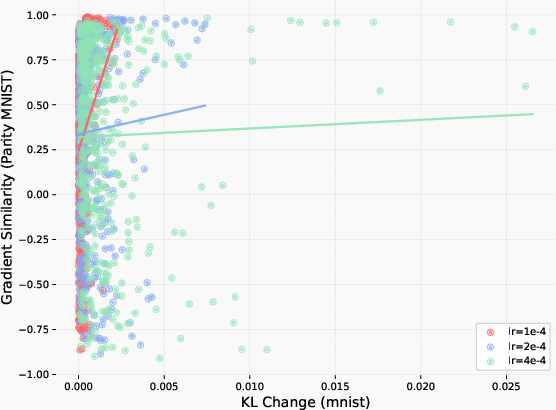

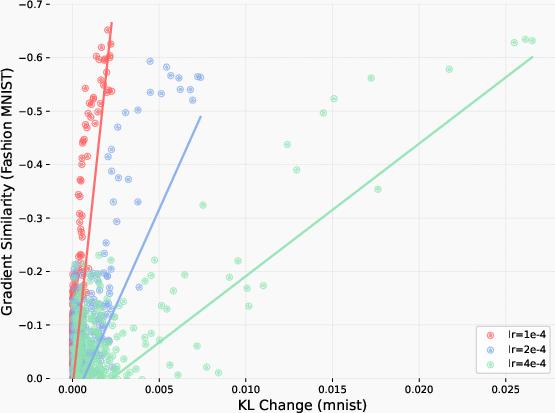

The paper provides both empirical and theoretical evidence that on-policy RL methods (e.g., policy gradient) are inherently biased toward KL-minimal solutions. This bias arises because RL samples from the model's own distribution, reweighting outputs according to reward, and thus updates the policy conservatively relative to its initialization.
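The contrast can be seen in a toy categorical policy. The sketch below is an illustration under stated assumptions, not the paper's experiment: four outputs, two of which earn a binary reward, a hypothetical base distribution `BASE`, and exact expected gradients in place of sampling so the run is deterministic. The on-policy update reweights the model's own probabilities by reward, while the SFT update pushes toward a fixed label that the base model considers unlikely. KL is computed as KL(fine-tuned || base), which is also an assumption about direction.

```python
import math

BASE = [0.5, 0.3, 0.15, 0.05]   # hypothetical base policy over 4 outputs
REWARD = [0, 1, 0, 1]           # outputs 1 and 3 both solve the task
TARGET = 0.95                   # stop once success probability reaches this


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def success(p):
    return sum(pi * r for pi, r in zip(p, REWARD))


def policy_gradient(p):
    # Exact gradient of expected reward w.r.t. the logits: pi_i * (r_i - J).
    j = success(p)
    return [pi * (r - j) for pi, r in zip(p, REWARD)]


def sft_on_label(label):
    # Gradient of log pi(label) w.r.t. the logits: one_hot(label) - pi.
    def grad(p):
        return [(1.0 if i == label else 0.0) - pi for i, pi in enumerate(p)]
    return grad


def train(update, lr, max_steps=100_000):
    logits = [math.log(p) for p in BASE]
    for _ in range(max_steps):
        p = softmax(logits)
        if success(p) >= TARGET:
            break
        logits = [z + lr * g for z, g in zip(logits, update(p))]
    return softmax(logits)


pi_rl = train(policy_gradient, lr=1.0)
pi_sft = train(sft_on_label(3), lr=0.5)   # annotator always picks output 3
kl_rl, kl_sft = kl(pi_rl, BASE), kl(pi_sft, BASE)
print(f"RL  success={success(pi_rl):.3f}  KL={kl_rl:.3f}")
print(f"SFT success={success(pi_sft):.3f}  KL={kl_sft:.3f}")
```

Both runs stop at the same success threshold, yet the SFT policy ends farther from `BASE` because its target label had low base probability. The toy only shows the direction of the effect. It makes no claim that this exact-gradient softmax update lands on the KL-minimal policy.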

A comparison of algorithm classes (on-policy/offline, with/without negative gradients) reveals that on-policy methods (GRPO, 1-0 Reinforce) consistently induce smaller KL shifts and retain prior knowledge more effectively than offline methods (SFT, SimPO), regardless of the use of negative examples.

Figure 5: On-policy methods retain prior knowledge more effectively and induce more conservative KL updates than offline methods.

Theoretical analysis formalizes this intuition: in the binary-reward case, policy gradient converges to the KL-minimal optimal policy within the representable family. The optimization can be viewed as alternating I- and M-projections in probability space, carrying the base policy into the set of optimal policies while preferring the closest solution in KL.
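For intuition about what "KL-minimal optimal policy" means, the unrestricted case has a closed form. The derivation below is a sketch under assumptions not taken from the paper: a finite output set, a set $S$ of correct outputs with $\pi_0(S) > 0$, no restriction on the policy family, and the divergence taken as $\mathrm{KL}(\pi \,\|\, \pi_0)$. A policy attains reward 1 with certainty exactly when its support lies in $S$, so for any such $\pi$:

```latex
% Notation (assumed): \pi_0(y \mid S) = \pi_0(y) / \pi_0(S) for y \in S.
\begin{align*}
\mathrm{KL}(\pi \,\|\, \pi_0)
  &= \sum_{y \in S} \pi(y) \log \frac{\pi(y)}{\pi_0(y)} \\
  &= \sum_{y \in S} \pi(y) \log \frac{\pi(y)}{\pi_0(y \mid S)} \;-\; \log \pi_0(S) \\
  &= \mathrm{KL}\bigl(\pi \,\|\, \pi_0(\cdot \mid S)\bigr) \;-\; \log \pi_0(S)
  \;\ge\; -\log \pi_0(S).
\end{align*}
% Equality holds if and only if \pi = \pi_0(\cdot \mid S).
```

Under these assumptions the closest optimal policy is the base policy conditioned on being correct, and its distance from the base is $-\log \pi_0(S)$. For example, with base probabilities $(0.5, 0.3, 0.15, 0.05)$ and the second and fourth outputs correct, $\pi_0(S) = 0.35$, the minimizer is approximately $(0, 0.857, 0, 0.143)$, and the minimal KL is $-\log 0.35 \approx 1.05$ nats.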

Alternative Hypotheses and Ablations

The authors systematically ablate alternative predictors of forgetting, including weight-level changes, representation drift, sparsity/rank of updates, and other distributional distances (reverse KL, total variation, L2). None approach the explanatory power of forward KL divergence measured on the new task. This finding is robust across architectures and domains.
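For reference, the distributional distances named above can be written out for two categorical distributions as follows. This is a generic sketch rather than the paper's measurement code. Which KL direction counts as "forward" depends on convention, so the functions take both arguments explicitly; the example distributions `p_base` and `p_tuned` are hypothetical.

```python
import math


def kl(p, q):
    """KL(p || q) in nats; terms with p_i == 0 contribute zero."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def total_variation(p, q):
    """Half the L1 distance between the two distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


def l2_distance(p, q):
    """Euclidean distance between the probability vectors."""
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


# Hypothetical next-token distributions on one new-task input.
p_base = [0.5, 0.5]
p_tuned = [0.9, 0.1]

kl_base_tuned = kl(p_base, p_tuned)   # KL(base || tuned)
kl_tuned_base = kl(p_tuned, p_base)   # KL(tuned || base)
tv = total_variation(p_base, p_tuned)
l2 = l2_distance(p_base, p_tuned)
print(kl_base_tuned, kl_tuned_base, tv, l2)
```

The two KL directions give different numbers for the same pair of distributions, which is why an ablation has to treat them as separate candidate predictors.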

Optimization Dynamics and Representation Preservation

Analysis of optimization trajectories shows that per-step KL change is strongly correlated with the direction of forgetting gradients. RL fine-tuning integrates new abilities while leaving the overall representation space largely intact, as measured by CKA similarity, whereas SFT induces substantial representational drift.

Figure 6: Gradient similarity and KL change per step are anti-correlated on the new task, indicating larger steps induce more forgetting.
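Representation similarity of the kind mentioned above is often computed with linear CKA. The text does not say which CKA variant the authors use, so treat the following as a sketch of the linear form only, applied to two activation matrices with one row per example. The toy activations are hypothetical.

```python
import math


def center(X):
    """Subtract the per-feature mean from every row."""
    n = len(X)
    means = [sum(col) / n for col in zip(*X)]
    return [[v - m for v, m in zip(row, means)] for row in X]


def cross_gram(X, Y):
    """Compute X^T Y for row-major matrices of shape (n, dx) and (n, dy)."""
    return [[sum(a * b for a, b in zip(cx, cy)) for cy in zip(*Y)]
            for cx in zip(*X)]


def frob_sq(M):
    """Squared Frobenius norm."""
    return sum(v * v for row in M for v in row)


def linear_cka(X, Y):
    """Linear CKA: ||X^T Y||_F^2 / (||X^T X||_F * ||Y^T Y||_F) on centered data."""
    X, Y = center(X), center(Y)
    numerator = frob_sq(cross_gram(X, Y))
    denominator = math.sqrt(frob_sq(cross_gram(X, X)) * frob_sq(cross_gram(Y, Y)))
    return numerator / denominator


# Hypothetical activations: 5 examples, 2 features each.
acts_base = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0], [2.0, 1.0]]
# The same activations rotated by 90 degrees: (x, y) -> (-y, x).
acts_rotated = [[-y, x] for x, y in acts_base]
# A representation that has collapsed onto a single direction.
acts_drifted = [[x + y, x + y] for x, y in acts_base]

print(linear_cka(acts_base, acts_rotated))
print(linear_cka(acts_base, acts_drifted))
```

Linear CKA is invariant to rotations and uniform rescaling of the feature space, so a score near 1 means the geometry of the examples is preserved even if individual neurons change.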

Scaling and Generalization

Experiments with larger model sizes (Qwen 2.5 3B, 7B, 14B) confirm that the fundamental trade-off between new-task performance and prior-task retention persists across scales. While larger models start with better general capabilities, SFT still incurs substantial forgetting to reach high accuracy on new tasks.

Implications and Future Directions

The findings motivate a new design axis for post-training algorithms: continual adaptation should explicitly minimize KL divergence from the base model to preserve prior knowledge. RL's on-policy bias provides a principled mechanism for conservative updates, but the principle is not limited to RL. Offline methods can achieve similar retention if guided toward KL-minimal solutions.

Practical implications include the potential for hybrid algorithms that combine RL's retention properties with SFT's efficiency, and the use of KL divergence as a diagnostic and regularization tool in continual learning. The mechanistic basis for the KL–forgetting link remains an open question, as does its behavior at frontier model scales and in more diverse generative domains.

Figure 7: KL divergence between base and fine-tuned model on the new task correlates with forgetting performance across methods.
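One way to read "KL as a regularization tool" is a supervised loss with an added penalty on divergence from the base policy. The sketch below is an assumption about what such an objective could look like for a single categorical prediction, not an algorithm from the paper. The weight `beta`, the function name, and the choice of KL(fine-tuned || base) are all hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_regularized_loss(logits, base_probs, label, beta):
    """Cross-entropy on the label plus beta * KL(current || base)."""
    probs = softmax(logits)
    cross_entropy = -math.log(probs[label])
    kl_to_base = sum(p * math.log(p / q) for p, q in zip(probs, base_probs))
    return cross_entropy + beta * kl_to_base


base_probs = [0.5, 0.3, 0.15, 0.05]           # hypothetical base policy
logits_at_base = [math.log(p) for p in base_probs]
logits_shifted = [0.0, 0.0, 0.0, 2.0]         # policy pushed toward label 3

# At the base policy the penalty vanishes, so only cross-entropy remains.
loss_base = kl_regularized_loss(logits_at_base, base_probs, label=3, beta=1.0)
# Away from the base policy, the penalty adds to the loss.
loss_plain = kl_regularized_loss(logits_shifted, base_probs, label=3, beta=0.0)
loss_penalized = kl_regularized_loss(logits_shifted, base_probs, label=3, beta=1.0)
print(loss_base, loss_plain, loss_penalized)
```

With `beta = 0` this reduces to ordinary SFT. Larger values trade new-task fit for staying close to the base model, and the same KL term can be logged without a penalty as a diagnostic.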

Conclusion

"RL's Razor: Why Online Reinforcement Learning Forgets Less" provides a rigorous empirical and theoretical account of catastrophic forgetting in foundation models. The central insight is that KL divergence to the base policy, measured on the new task, is a reliable predictor of forgetting, and that on-policy RL methods are naturally biased toward KL-minimal solutions. This principle reframes the design of post-training algorithms for continual learning, emphasizing conservative updates to enable long-lived, adaptive AI agents. Future work should explore mechanistic explanations for the KL–forgetting relationship, extend the analysis to off-policy RL and frontier-scale models, and develop practical algorithms that operationalize KL minimization for lifelong learning.



