- The paper demonstrates that intermediate AI assistance yields the highest post-test scores and the most favorable cognitive load (the highest germane load) of the three assistance levels tested.

- It employs mixed-effects linear regression and repeated-measures ANOVA to show that excessive automation reduces active engagement in note-taking.

- The findings imply that adaptive, scaffolded AI support can balance structure and user control to enhance learning outcomes.

More AI Assistance Reduces Cognitive Engagement: The AI Assistance Dilemma in Note-Taking

Introduction and Motivation

This paper investigates the "AI Assistance Dilemma" in the context of note-taking, specifically examining how varying levels of AI support impact cognitive engagement and comprehension during real-time lecture consumption. The study is motivated by the increasing integration of AI tools in cognitively demanding tasks and the open question of whether such assistance enhances or undermines meaningful engagement. Drawing on the encoding-storage paradigm and cognitive load theory, the authors hypothesize that excessive automation may reduce the cognitive effort required for active learning, potentially leading to suboptimal outcomes.

System Design and Experimental Setup

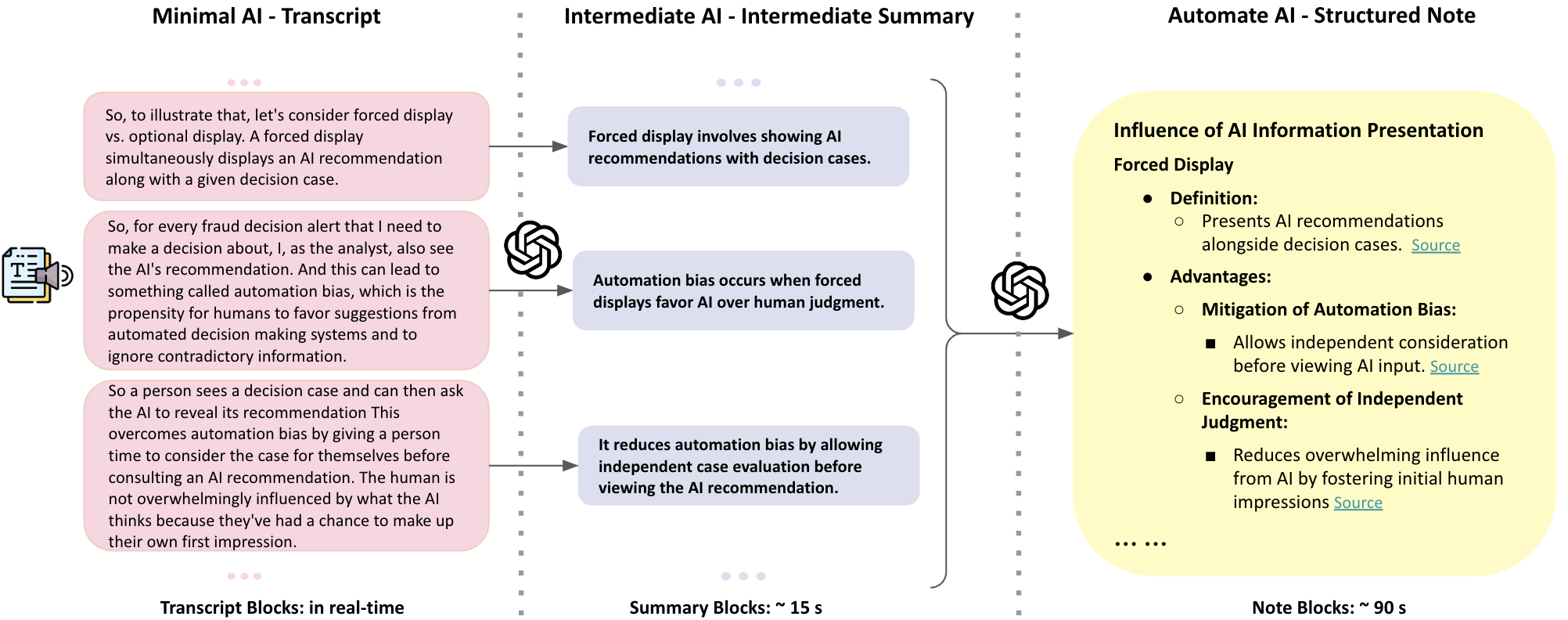

The authors developed NoteCopilot, a custom AI-assisted note-taking system with three distinct levels of AI support:

- Automated AI (high assistance): Provides structured notes every 1–2 minutes, resembling commercial auto-note services.

- Intermediate AI (moderate assistance): Delivers real-time summary blocks after each speaking turn.

- Transcript AI (low assistance): Offers real-time transcript blocks with minimal abstraction.
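The three conditions can be summarized as a small configuration table. The sketch below is illustrative only. The `Trigger` enum, the field names, and the ordinal `abstraction` scale are assumptions made for this example, not part of NoteCopilot. The streaming trigger for Transcript AI is also assumed, based only on the phrase "real-time transcript blocks".

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Trigger(Enum):
    """When a new AI block is pushed to the note panel (hypothetical labels)."""
    FIXED_INTERVAL = "fixed_interval"  # timer-based (Automated AI)
    SPEAKING_TURN = "speaking_turn"    # end of each speaking turn (Intermediate AI)
    STREAMING = "streaming"            # as transcript text arrives (Transcript AI, assumed)


@dataclass(frozen=True)
class AssistanceLevel:
    name: str
    trigger: Trigger
    block_type: str   # what appears in the AI note panel
    abstraction: int  # assumed ordinal scale: 0 = verbatim, 2 = most condensed
    interval_s: Optional[Tuple[int, int]] = None  # push interval in seconds, timer-based only


LEVELS = {
    "automated": AssistanceLevel("Automated AI", Trigger.FIXED_INTERVAL,
                                 "structured notes", abstraction=2, interval_s=(60, 120)),
    "intermediate": AssistanceLevel("Intermediate AI", Trigger.SPEAKING_TURN,
                                    "summary block", abstraction=1),
    "transcript": AssistanceLevel("Transcript AI", Trigger.STREAMING,
                                  "transcript block", abstraction=0),
}

# Order the conditions from least to most abstracted output.
by_abstraction = sorted(LEVELS.values(), key=lambda lvl: lvl.abstraction)
```

Keeping the trigger and the abstraction level as separate fields makes explicit that the conditions differ along two axes: how often content arrives and how condensed it is.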

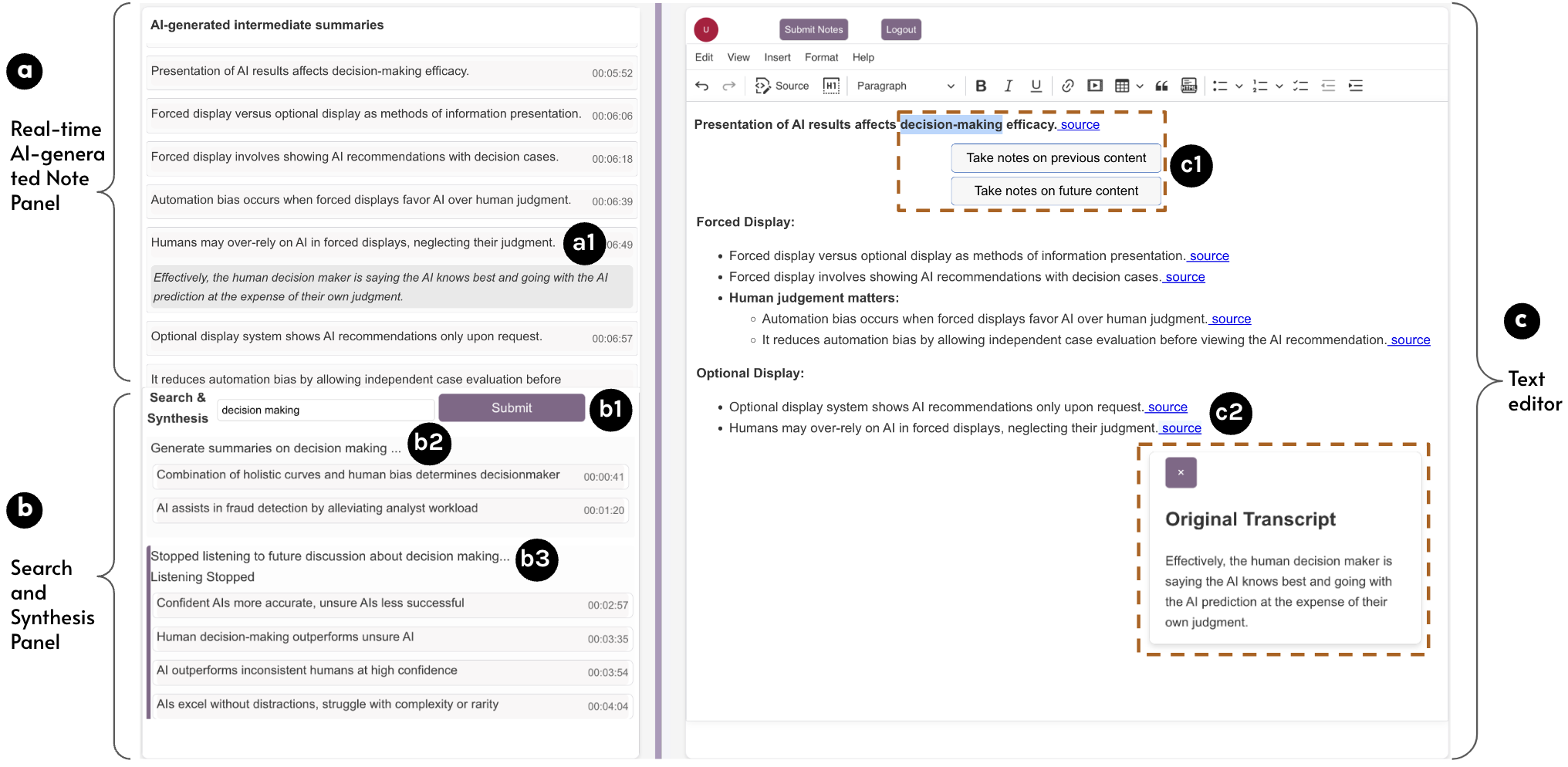

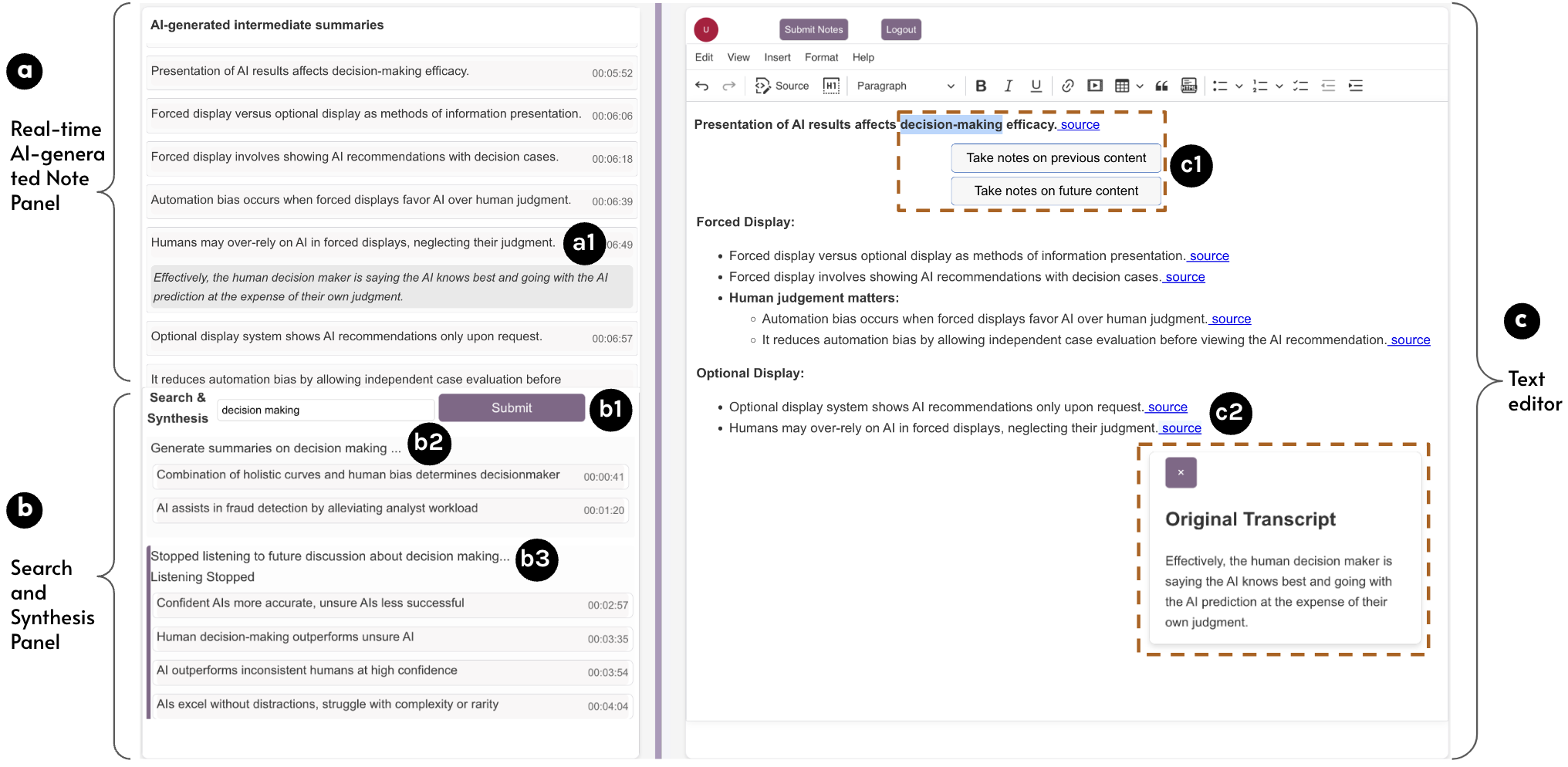

All variants feature a real-time AI-generated note panel, a search and synthesis panel, and a rich text editor for manual note-taking and integration of AI-generated content.

Figure 1: System overview showing the real-time AI-generated note panel, search and synthesis panel, and text editor for user interaction across all assistance levels.

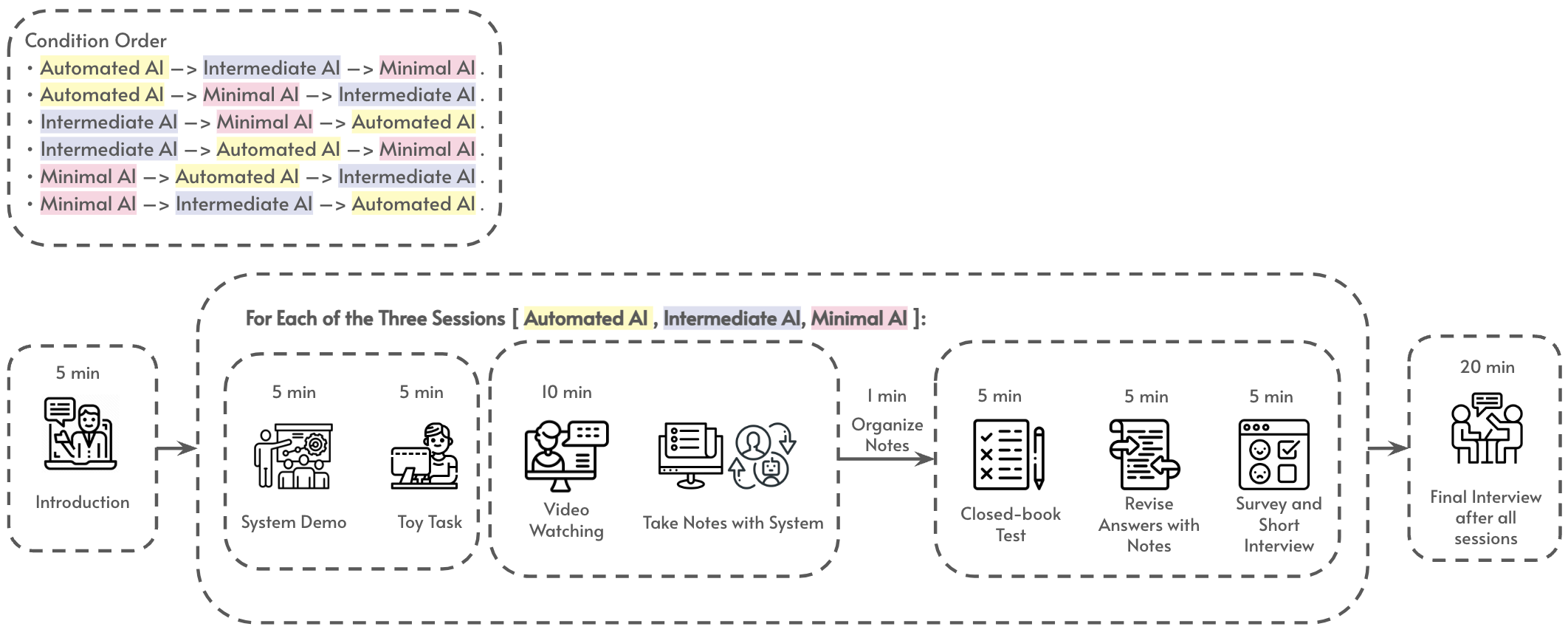

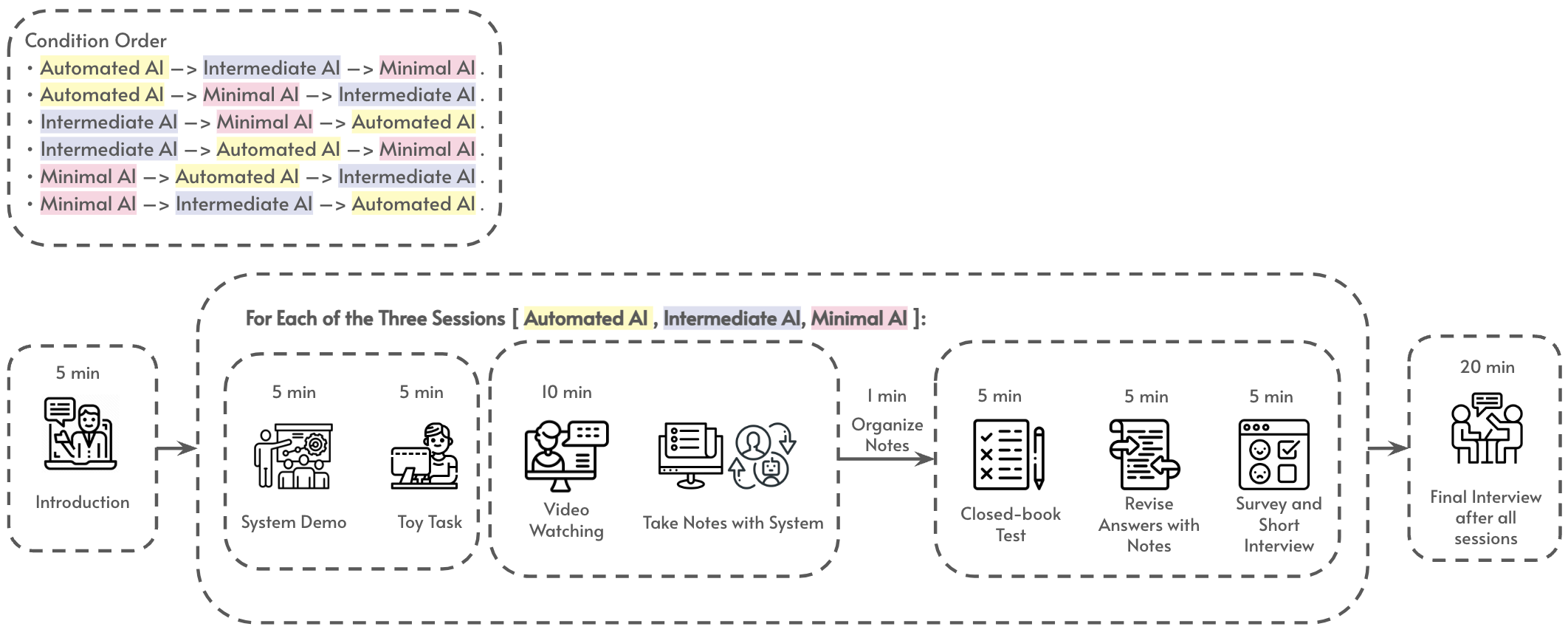

A within-subject experiment was conducted with 30 participants, each experiencing all three conditions in counterbalanced order. Participants watched lecture videos, took notes using the system, completed post-tests (both closed-book and open-note revision), and participated in surveys and interviews.

Figure 2: Study design flow, detailing the sequence of introduction, note-taking, testing, revision, and interviews for each AI assistance condition.
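The summary does not say how condition orders were counterbalanced. One common scheme, assumed here, is full counterbalancing. With three conditions there are 3! = 6 possible orders, so 30 participants can be split into groups of five per order. The helper name `assign_orders` is hypothetical.

```python
from __future__ import annotations

from itertools import permutations

CONDITIONS = ("Automated AI", "Intermediate AI", "Transcript AI")


def assign_orders(n_participants: int) -> list[tuple[str, ...]]:
    """Cycle through all 3! = 6 condition orders so each order is used equally often."""
    orders = list(permutations(CONDITIONS))
    return [orders[i % len(orders)] for i in range(n_participants)]


schedule = assign_orders(30)  # schedule[p] is the condition order for participant p
```

With 30 participants, each order is used exactly five times and each condition appears in each session position ten times. This spreads practice and fatigue effects evenly across conditions.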

Granularity and Abstraction of AI Notes

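The three assistance levels differ in the granularity and abstraction of AI-generated content:

- Automated AI: coarse granularity (structured notes pushed every 1–2 minutes) and the highest abstraction.

- Intermediate AI: finer granularity (one summary block per speaking turn) and moderate abstraction.

- Transcript AI: real-time transcript blocks with minimal abstraction, staying closest to the lecture wording.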

Quantitative Findings

Learning Outcomes

Mixed-effects linear regression with post-hoc Tukey HSD comparisons showed that Intermediate AI produced the highest post-test scores of the three conditions.
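In a within-subject design like this one, a mixed-effects model typically includes a random intercept per participant. This separates each person's baseline ability from the condition effect. The specification below is an assumption for illustration. The fixed effects and covariates the authors actually used (for example, lecture or presentation order) are not reported in this summary. Automated AI is taken as the reference level.

$$
y_{ij} = \beta_0 + \beta_{\mathrm{I}}\,\mathbb{1}[j = \mathrm{Intermediate}] + \beta_{\mathrm{T}}\,\mathbb{1}[j = \mathrm{Transcript}] + u_i + \varepsilon_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Here $y_{ij}$ is participant $i$'s post-test score in condition $j$. Tukey HSD then compares all three pairs of condition means while controlling the family-wise error rate.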

Cognitive Load

Repeated-measures ANOVA showed:

- Extraneous cognitive load was lowest in Automated AI, but germane cognitive load (beneficial for learning) was highest in Intermediate AI.

- Manual note-taking (Transcript AI) imposed the highest extraneous load.

- Reading AI-generated notes in Automated AI required more effort than in the other conditions.
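A one-way repeated-measures ANOVA partitions variance into three parts: a condition effect, a between-subject effect, and a residual (the condition × subject interaction). It then tests the condition effect against that residual. The pure-Python sketch below shows the computation for a single load measure. The function name and the toy scores are made up for illustration and are not the study's data.

```python
from __future__ import annotations


def rm_anova(data: list[list[float]]) -> tuple[float, int, int]:
    """One-way repeated-measures ANOVA.

    data[i][j] is subject i's rating under condition j; every subject
    must have a value for every condition. Returns (F, df_condition, df_error).
    """
    n = len(data)     # number of subjects
    k = len(data[0])  # number of conditions
    grand = sum(sum(row) for row in data) / (n * k)

    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # What remains is the condition x subject interaction, used as the error term.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


# Toy ratings (rows = subjects, columns = three conditions). Not study data.
toy_scores = [
    [3, 5, 4],
    [2, 6, 5],
    [4, 6, 4],
    [3, 7, 3],
]
f, df_cond, df_error = rm_anova(toy_scores)
```

The toy data give F(2, 6) ≈ 10.5. A p-value would come from the F distribution, for example `scipy.stats.f.sf(f, df_cond, df_error)`. A real analysis would also check sphericity or apply a Greenhouse-Geisser correction, which this sketch omits.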

Note-Taking Behaviors

- Automated AI led to the highest total note count, but with minimal user modification.

- Intermediate AI encouraged more active engagement, with users frequently modifying and reorganizing AI-generated summaries.

- Transcript AI users predominantly relied on manual note-taking.
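The behavioral measures above can be derived from an interaction log. These are note counts, how often AI content was modified, and reliance on manual writing. The event schema and labels below are hypothetical, because the summary does not describe how NoteCopilot records interactions.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class NoteEvent:
    condition: str  # "automated", "intermediate", or "transcript"
    kind: str       # hypothetical labels: "ai_inserted", "ai_modified", "manual_written"


def behavior_summary(events: list[NoteEvent]) -> dict[str, dict[str, float]]:
    """Per-condition note count, AI-modification rate, and share of manual notes."""
    counts = Counter((e.condition, e.kind) for e in events)
    summary = {}
    for cond in sorted({e.condition for e in events}):
        inserted = counts[(cond, "ai_inserted")]
        modified = counts[(cond, "ai_modified")]
        manual = counts[(cond, "manual_written")]
        total = inserted + manual
        summary[cond] = {
            "total_notes": total,
            # Modifications per inserted AI block; can exceed 1 if a block is edited repeatedly.
            "ai_modification_rate": modified / inserted if inserted else 0.0,
            "manual_share": manual / total if total else 0.0,
        }
    return summary
```

In this framing, the reported pattern would appear as a high `total_notes` with a low `ai_modification_rate` for Automated AI, a higher modification rate for Intermediate AI, and a `manual_share` near 1 for Transcript AI.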

Qualitative Insights

Interviews revealed nuanced perceptions:

- Intermediate AI was valued for fostering active engagement and providing digestible, synchronous information.

- Automated AI was appreciated for its structure and completeness but led to disengagement and over-reliance.

- Transcript AI, while cognitively demanding, was trusted for its fidelity to the original content.

- Participants preferred maintaining control over their notes, using AI as augmentation rather than replacement.

- Users desired more contextual and intent-aware AI assistance, adaptable to their evolving needs and learning goals.

Theoretical and Practical Implications

The Assistance Dilemma

The findings provide empirical support for the assistance dilemma: excessive automation reduces cognitive engagement and comprehension, while moderate, scaffolded AI support produces the best learning outcomes. This aligns with cognitive load theory and the encoding-storage paradigm, suggesting that AI should offload mechanical effort but preserve opportunities for active reasoning and reflection.

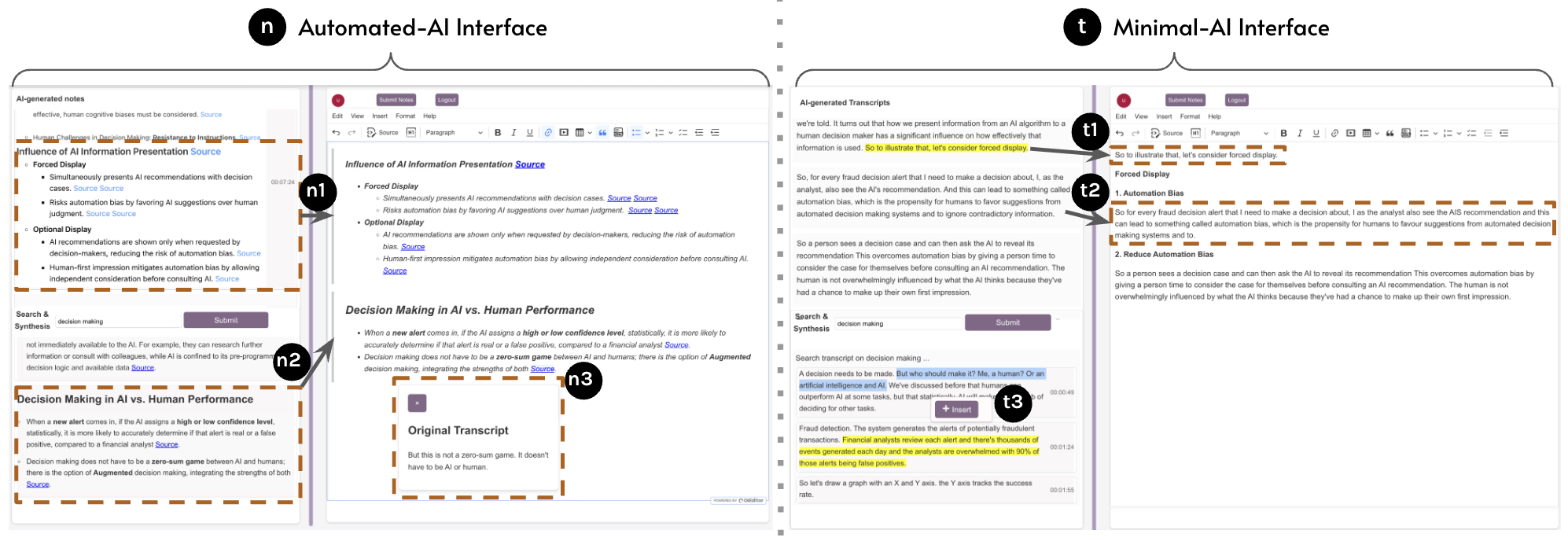

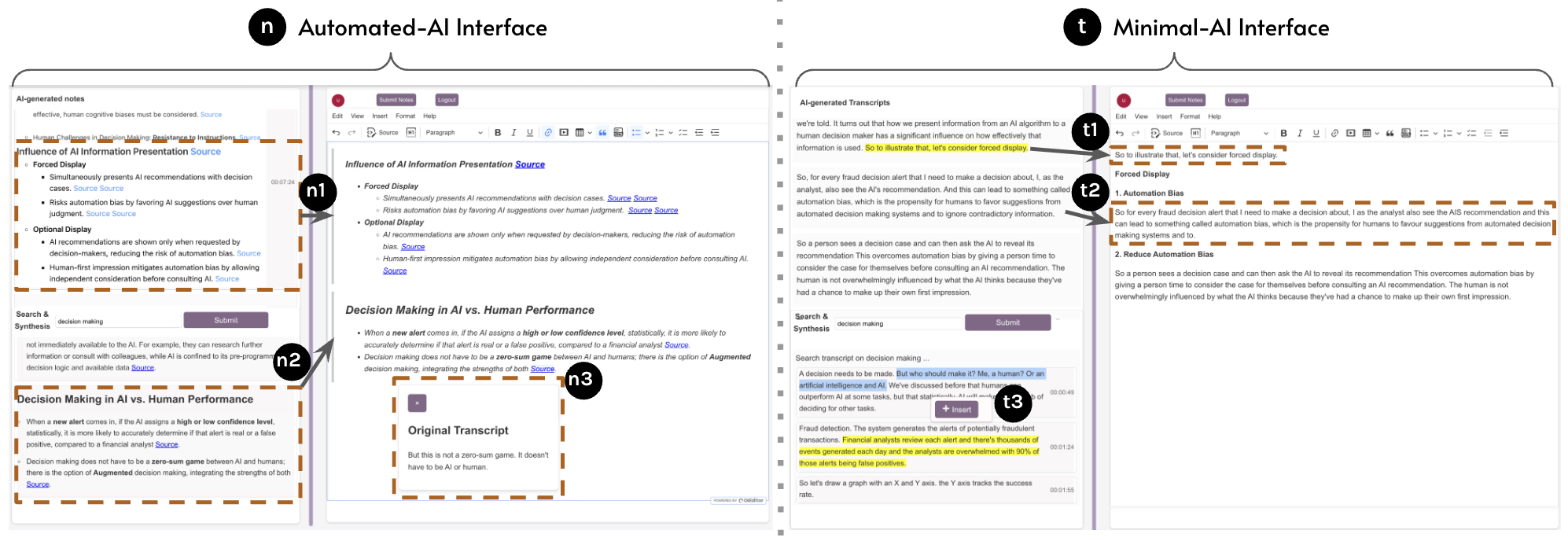

Intermediate AI, characterized by real-time, granular summaries and user agency in note composition, emerges as an effective paradigm for supporting cognitive tasks. The system's middleware layer enables users to evaluate, modify, and integrate AI-generated content, promoting desirable difficulty and scaffolding metacognitive processes.

Figure 5: Interfaces for Intermediate and Transcript AI, highlighting user interaction with AI-generated summaries and transcript blocks.
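In the middleware idea described above, AI output is a proposal that the user can accept, rewrite, or ignore before it enters their notes. This can be modeled with a small data structure that records where each note came from. All class and method names here are hypothetical and do not describe NoteCopilot's actual implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIBlock:
    block_id: int
    text: str


@dataclass
class NoteEntry:
    text: str
    source_block: int | None  # None when the user wrote the note from scratch
    edited: bool              # True if the user changed the AI text before keeping it


@dataclass
class NoteDocument:
    entries: list[NoteEntry] = field(default_factory=list)

    def accept(self, block: AIBlock) -> None:
        """Keep an AI block verbatim."""
        self.entries.append(NoteEntry(block.text, block.block_id, edited=False))

    def accept_edited(self, block: AIBlock, new_text: str) -> None:
        """Keep an AI block after the user rewrites it in their own words."""
        self.entries.append(NoteEntry(new_text, block.block_id, edited=new_text != block.text))

    def write(self, text: str) -> None:
        """Add a note the user composed without AI input."""
        self.entries.append(NoteEntry(text, None, edited=False))

    def edited_share(self) -> float:
        """Fraction of AI-derived entries the user modified."""
        ai_entries = [e for e in self.entries if e.source_block is not None]
        return sum(e.edited for e in ai_entries) / len(ai_entries) if ai_entries else 0.0


doc = NoteDocument()
doc.accept(AIBlock(1, "Speaker defines cognitive load."))
doc.accept_edited(AIBlock(2, "Three types of load are described."),
                  "Load types: intrinsic, extraneous, germane.")
doc.write("Question: which load does note-taking raise?")
# Discarding a block is simply never passing it to the document.
```

Here `doc.edited_share()` is 0.5, because one of the two AI-derived entries was rewritten. Recording provenance this way keeps the user in control of composition while still showing how much AI content entered the notes.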

Adaptivity and Personalization

Future AI systems should dynamically adjust content granularity, abstraction, and interaction modalities based on user intent, topic complexity, and behavioral signals. This requires moving beyond static scaffolding to engagement-sensitive, goal-oriented adaptation.
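As a rough illustration of engagement-sensitive adaptation, the rule-based policy below does two things. It steps assistance down when a user passively accepts AI blocks, and it steps assistance up when transcript-only support meets complex material. The signals, thresholds, and names are all assumptions for this sketch. The paper proposes the direction, not this rule.

```python
from dataclasses import dataclass

# Ordered from least to most assistance.
LEVELS = ("transcript", "intermediate", "automated")


@dataclass
class Signals:
    modification_rate: float  # share of recent AI blocks the user edited, 0..1 (assumed signal)
    topic_complexity: float   # 0 = easy, 1 = hard, e.g. from a hypothetical classifier
    wants_review: bool        # stated intent: skim later rather than learn now


def choose_level(current: str, s: Signals) -> str:
    """Return the next assistance level using hand-set, illustrative thresholds."""
    if s.wants_review:
        return "automated"  # completeness matters more than engagement for later review
    i = LEVELS.index(current)
    if s.modification_rate < 0.2 and i > 0:
        i -= 1  # user is passively accepting AI output: reduce assistance
    elif s.topic_complexity > 0.7 and i == 0:
        i += 1  # hard material under transcript-only support: step up to summaries
    return LEVELS[i]
```

During live learning, the policy can step up only as far as Intermediate AI, which mirrors the finding that moderate support produced the best outcomes. A learned policy driven by richer behavioral signals could replace the hand-set thresholds.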

Limitations

- The study's sample size and short video duration limit generalizability.

- Note quality was not assessed; only quantity and modification behaviors were measured.

- Dynamic editing processes during note-taking were not fully captured.

Conclusion

This work demonstrates that more AI assistance does not necessarily yield better cognitive outcomes in note-taking. Moderate, scaffolded AI support (Intermediate AI) maximizes comprehension and engagement, while excessive automation (Automated AI) risks disengagement and over-reliance. The results highlight the need for careful calibration of AI support in cognitive tasks, advocating for adaptive, intermediate AI paradigms that preserve human agency and foster meaningful learning.