- The paper introduces UI-TARS-2, a comprehensive framework that integrates a scalable data flywheel and a stabilized multi-turn reinforcement learning pipeline.

- It details a hybrid GUI-SDK environment that supports diverse tasks across GUI, game, and system-level domains with unified interaction protocols.

- Empirical results show strong benchmark performance across GUI, game, and system-level tasks, robust cross-domain generalization, and faster inference through W4A8 quantization.

UI-TARS-2: A Unified Framework for GUI-Centered Agents via Multi-Turn Reinforcement Learning

Introduction and Motivation

The UI-TARS-2 technical report presents a comprehensive framework for developing robust, generalist agents capable of operating across graphical user interfaces (GUIs), games, and system-level environments. The work addresses persistent challenges in GUI agent research: data scarcity for long-horizon tasks, instability in multi-turn RL, the limitations of GUI-only operation, and the engineering complexity of large-scale, reproducible environments. UI-TARS-2 advances the field by integrating a scalable data flywheel, a stabilized multi-turn RL pipeline, a hybrid GUI-SDK environment, and a unified sandbox infrastructure.

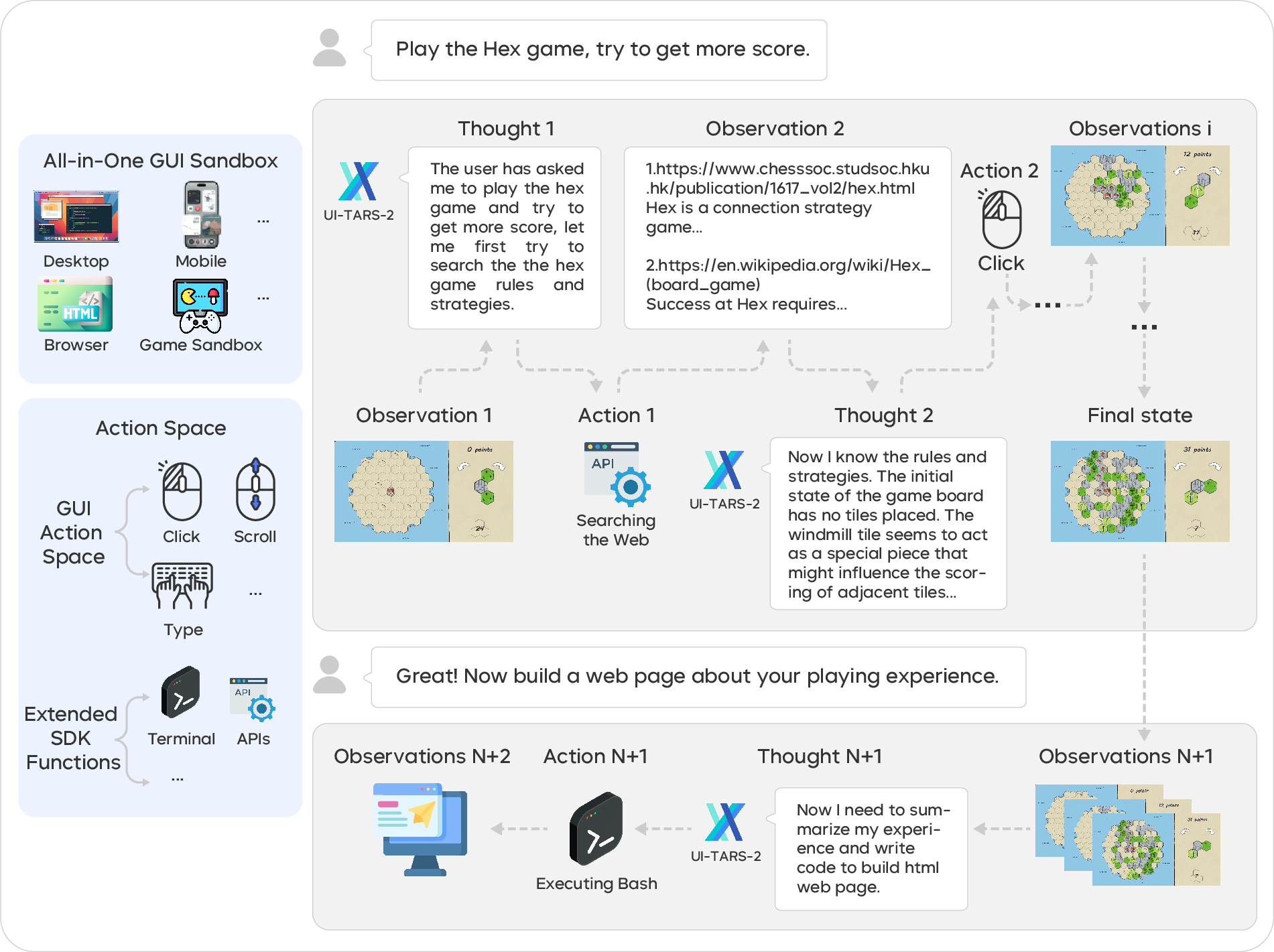

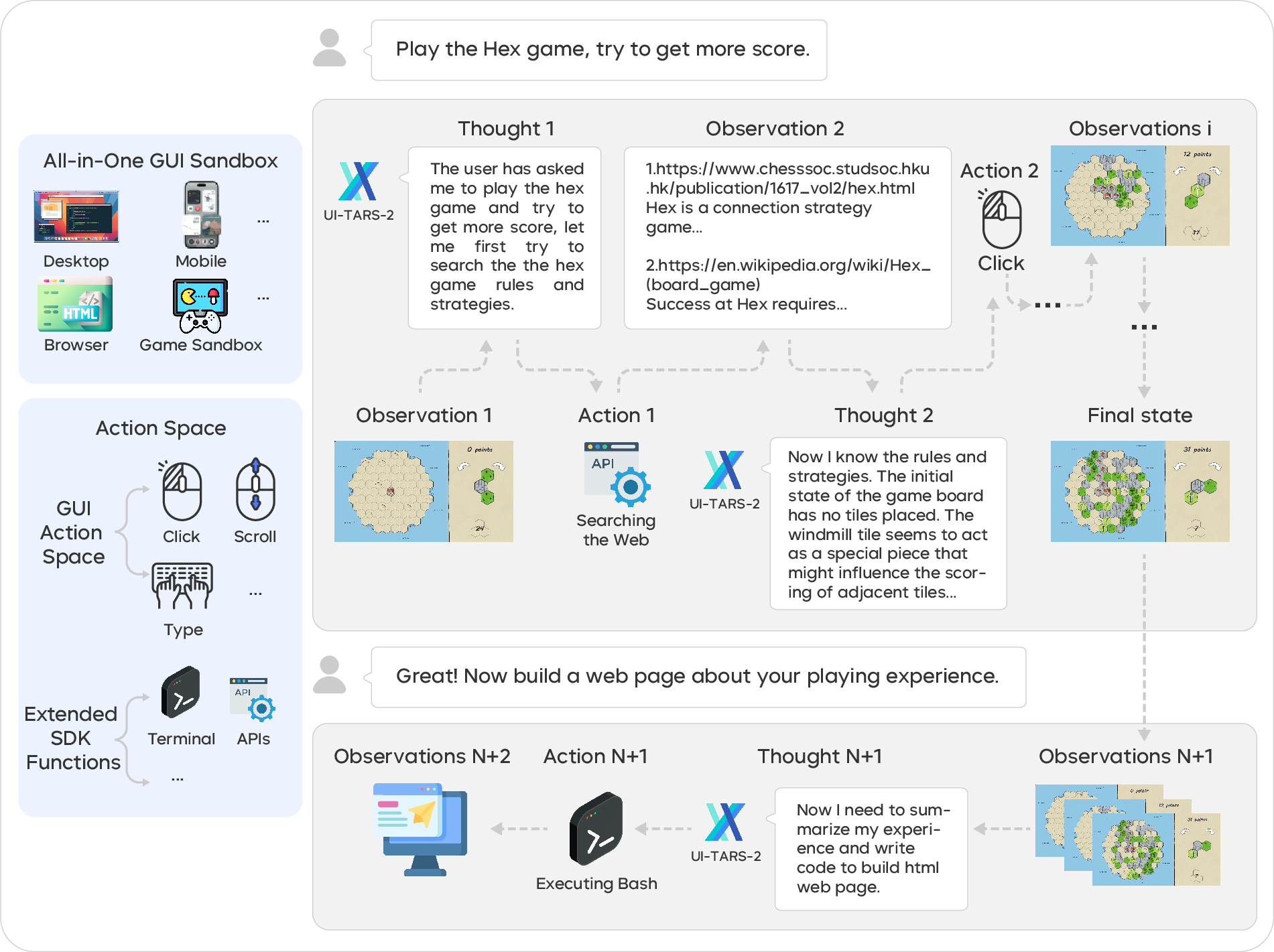

Figure 1: A demo trajectory of UI-TARS-2.

System Architecture and Environment Design

UI-TARS-2 adopts a native agent paradigm, modeling the agent as a parameterized policy that interleaves reasoning, action, and observation in a ReAct-style loop. The action space encompasses both GUI primitives (click, type, scroll) and SDK-augmented operations (terminal commands, tool invocations), with hierarchical memory for both short-term and episodic recall.
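The loop described above can be sketched as follows. This is a minimal illustration, not the UI-TARS-2 implementation: `Step`, `Memory`, `run_episode`, the `window` size, and the action strings are all hypothetical. The two memory levels are modeled as a short verbatim window of recent steps plus one-line summaries of older steps, which stand in for whatever learned summarization the real system uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class Step:
    thought: str
    action: str
    observation: str

@dataclass
class Memory:
    """Hypothetical two-level memory: recent steps verbatim, older steps summarized."""
    window: int = 3                                   # recent steps kept in full (assumed)
    working: List[Step] = field(default_factory=list)
    episodic: List[str] = field(default_factory=list)

    def add(self, step: Step) -> None:
        self.working.append(step)
        if len(self.working) > self.window:
            old = self.working.pop(0)
            # Stand-in for a learned summary: keep only "action -> observation".
            self.episodic.append(f"{old.action} -> {old.observation}")

def run_episode(policy: Callable, env_step: Callable, first_obs: str,
                max_steps: int = 10) -> Tuple[Memory, bool]:
    memory, obs = Memory(), first_obs
    for _ in range(max_steps):
        thought, action = policy(obs, memory)   # reason first, then choose a GUI or SDK action
        if action == "finish":
            return memory, True
        obs = env_step(action)                  # execute in the sandbox and observe the result
        memory.add(Step(thought, action, obs))
    return memory, False                        # step budget exhausted

# Toy usage: a scripted "policy" mixing GUI primitives and an SDK terminal call.
script = iter(["click(10, 20)", "type('hi')", "terminal('ls')",
               "scroll(0, -3)", "click(5, 5)", "finish"])
mem, done = run_episode(lambda obs, m: ("think", next(script)),
                        lambda a: f"screen after {a}", "initial screenshot")
```

Here the policy and environment are plain callables, so the same loop serves GUI primitives and SDK calls alike; only the action string differs.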

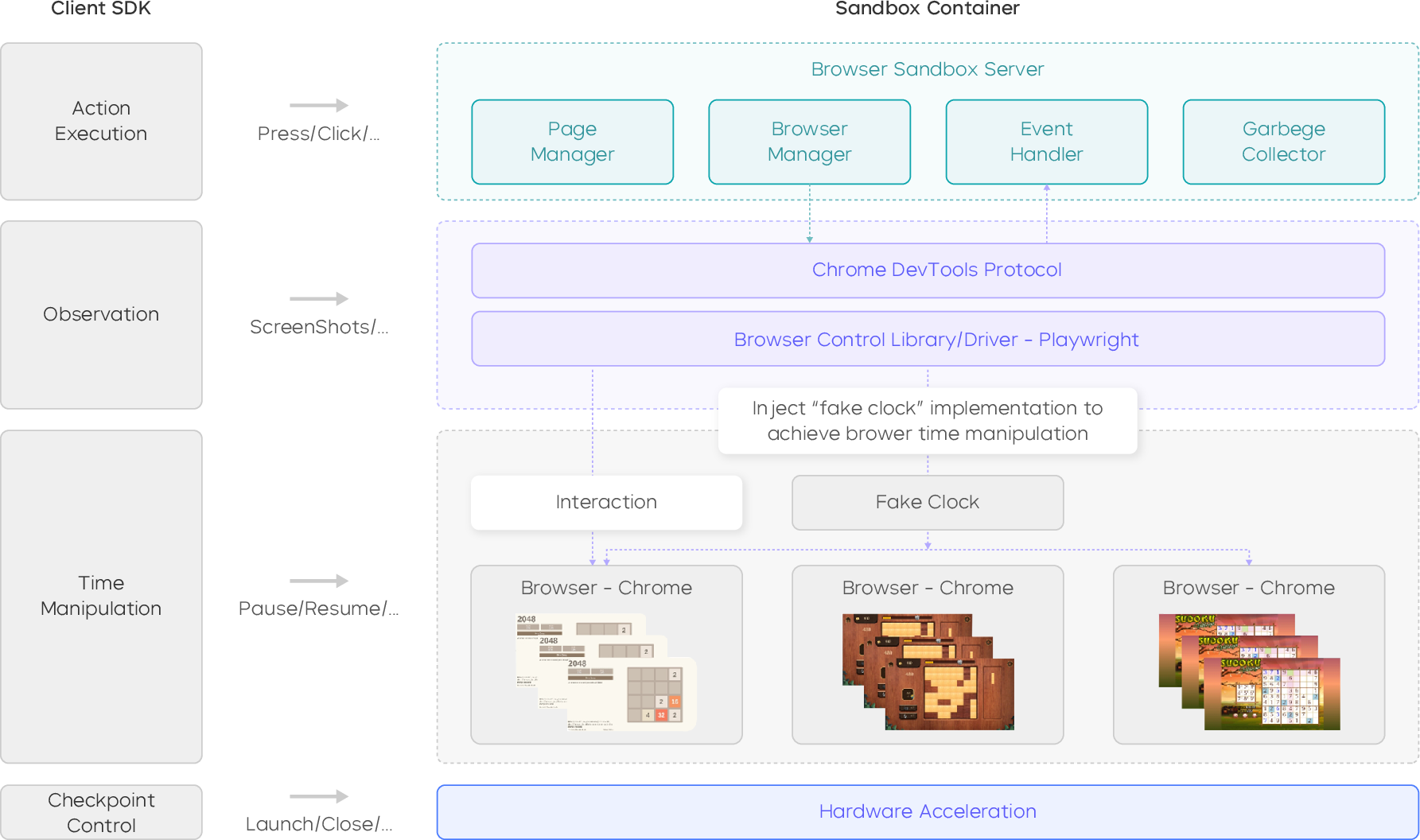

The environment is engineered as a universal sandbox, supporting both GUI and game domains. For GUI tasks, a distributed VM platform runs Windows, Ubuntu, and Android OSes, exposing a unified SDK for agent interaction, observation, and evaluation. The infrastructure supports thousands of concurrent sessions, with real-time monitoring and resource management.

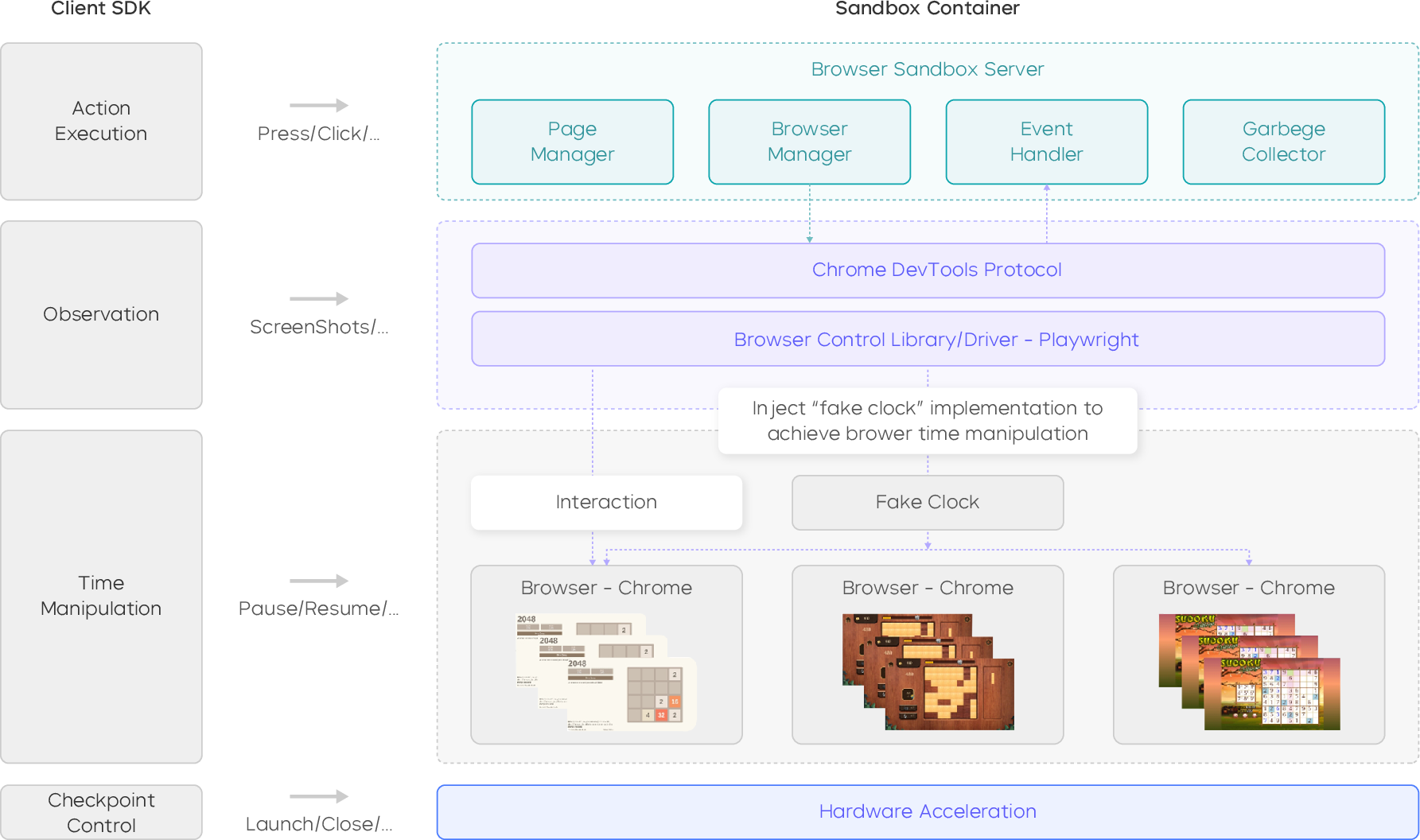

For games, a browser-based sandbox executes HTML5/WebGL mini-games, exposing a standardized API for action and observation, with GPU acceleration and elastic scheduling for high-throughput rollouts.

Figure 2: Browser sandbox (container) architecture.

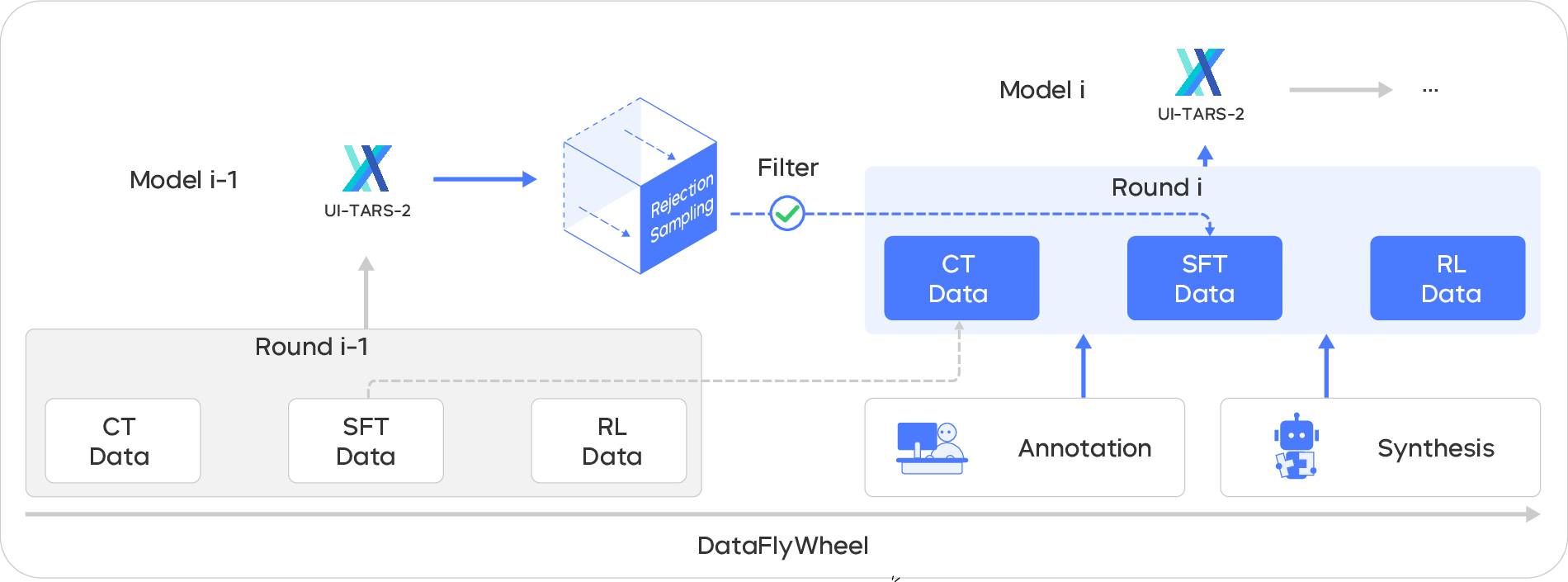

Data Flywheel: Scalable Data Generation and Curation

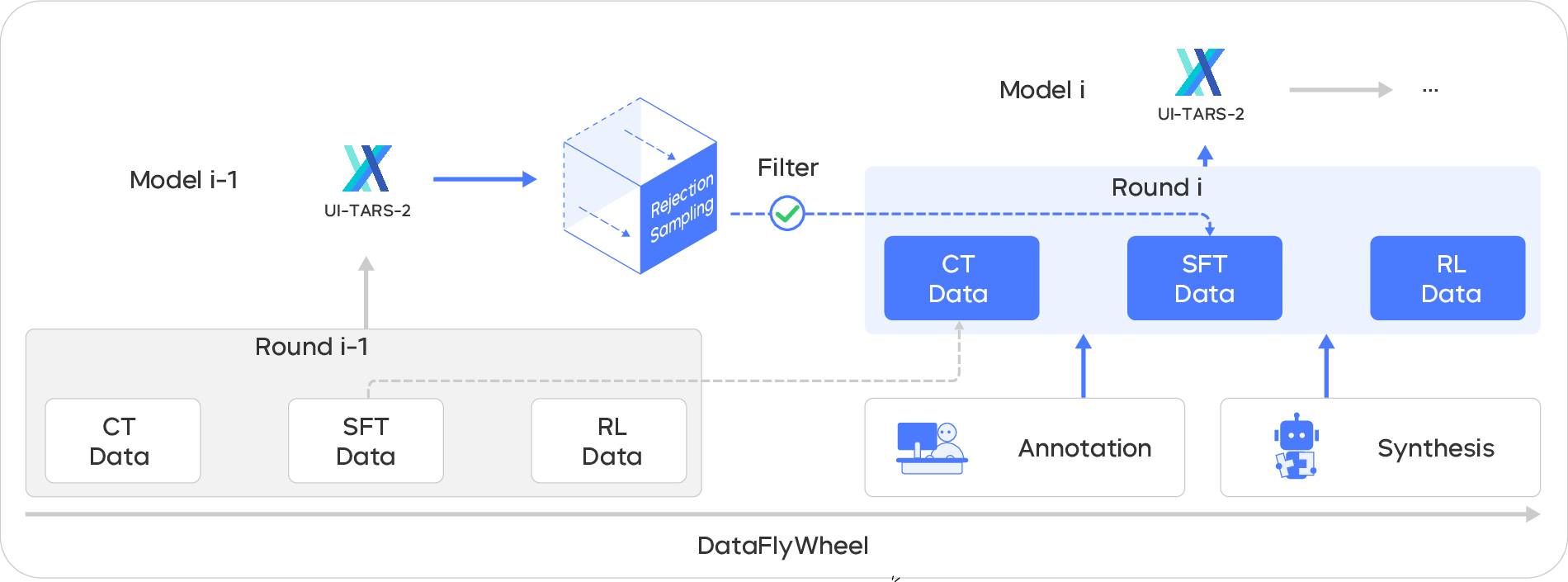

A central innovation is the data flywheel, which iteratively co-evolves model and data quality. The flywheel operates in three stages: continual pre-training (CT) on broad, diverse data; supervised fine-tuning (SFT) on high-quality, task-specific trajectories; and multi-turn RL on verifiable interactive tasks. Each cycle leverages the latest model to generate new trajectories, which are filtered and routed to the appropriate stage, ensuring that SFT receives only high-quality, on-policy data while CT absorbs broader, less polished samples.

Figure 3: We curate a Data Flywheel for UI-TARS-2, establishing a self-reinforcing loop that continuously improves both data quality and model capabilities.
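The routing step of the flywheel can be sketched as a threshold on a per-trajectory quality score. This is an assumption-laden illustration: `Trajectory`, `route`, `flywheel`, the `score` field, and the 0.8 threshold are hypothetical, and the training stages are passed in as opaque callables rather than modeled.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Trajectory:
    task_id: str
    steps: list
    score: float   # hypothetical quality score in [0, 1], e.g. from a verifier

def route(trajs: List[Trajectory], sft_threshold: float = 0.8) -> Tuple[list, list]:
    """Send high-quality rollouts to SFT and the rest to CT (threshold is assumed)."""
    sft, ct = [], []
    for t in trajs:
        if not t.steps:
            continue                      # drop empty or crashed rollouts entirely
        (sft if t.score >= sft_threshold else ct).append(t)
    return sft, ct

def flywheel(model, generate: Callable, train_ct: Callable, train_sft: Callable,
             train_rl: Callable, rounds: int = 3):
    for _ in range(rounds):
        sft_pool, ct_pool = route(generate(model))   # newest model makes fresh data
        model = train_ct(model, ct_pool)             # broad, less polished samples
        model = train_sft(model, sft_pool)           # high-quality, on-policy samples
        model = train_rl(model)                      # multi-turn RL on verifiable tasks
    return model

demo = [Trajectory("t1", ["click"], 0.95), Trajectory("t2", ["type"], 0.40),
        Trajectory("t3", [], 1.0)]
sft_pool, ct_pool = route(demo)
# Toy "model" is an int so the stage order is easy to trace.
final = flywheel(0, lambda m: demo, lambda m, d: m + len(d),
                 lambda m, d: m + 10 * len(d), lambda m: m + 100, rounds=2)
```

The key property is that each round's data comes from the latest model, so the SFT pool stays close to the current policy.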

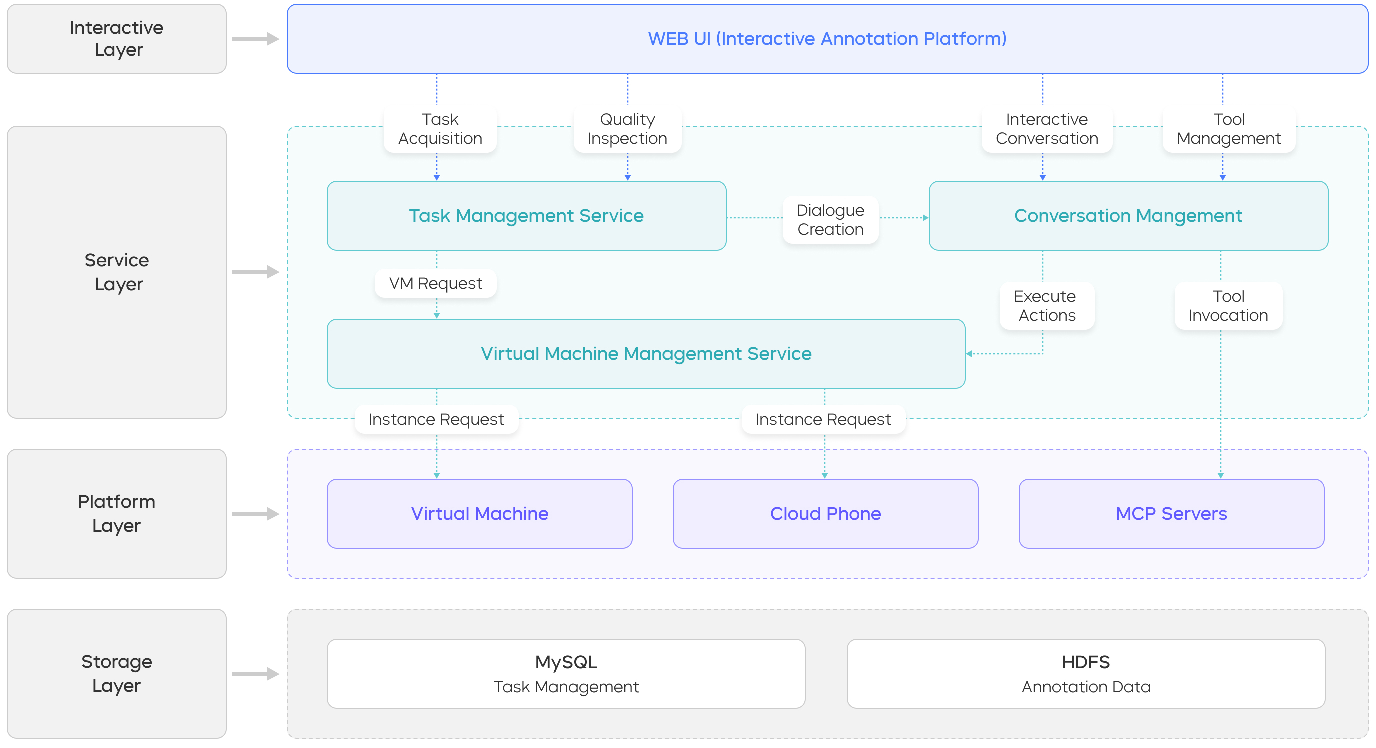

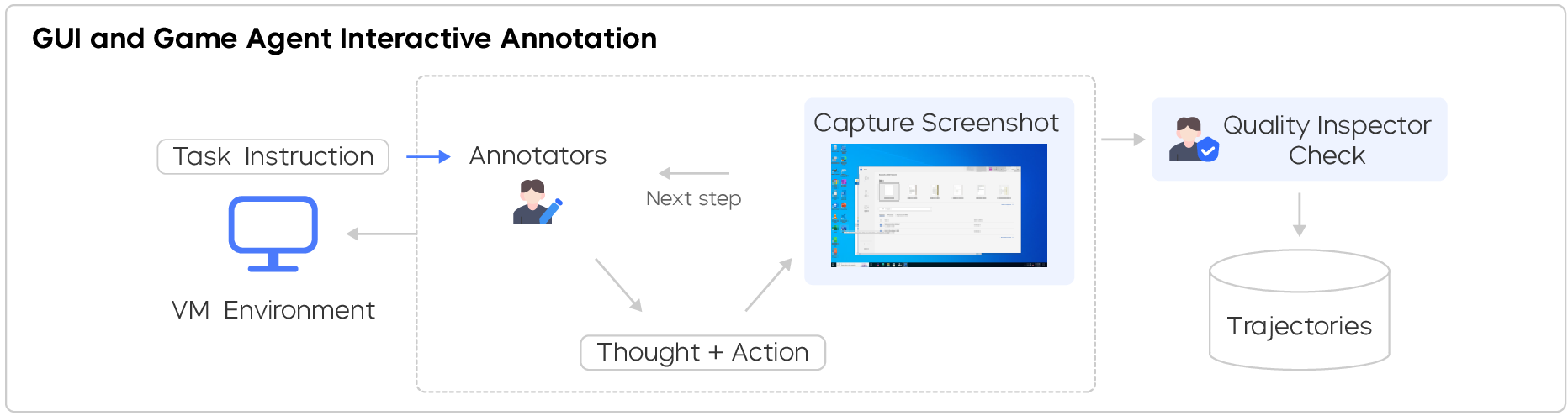

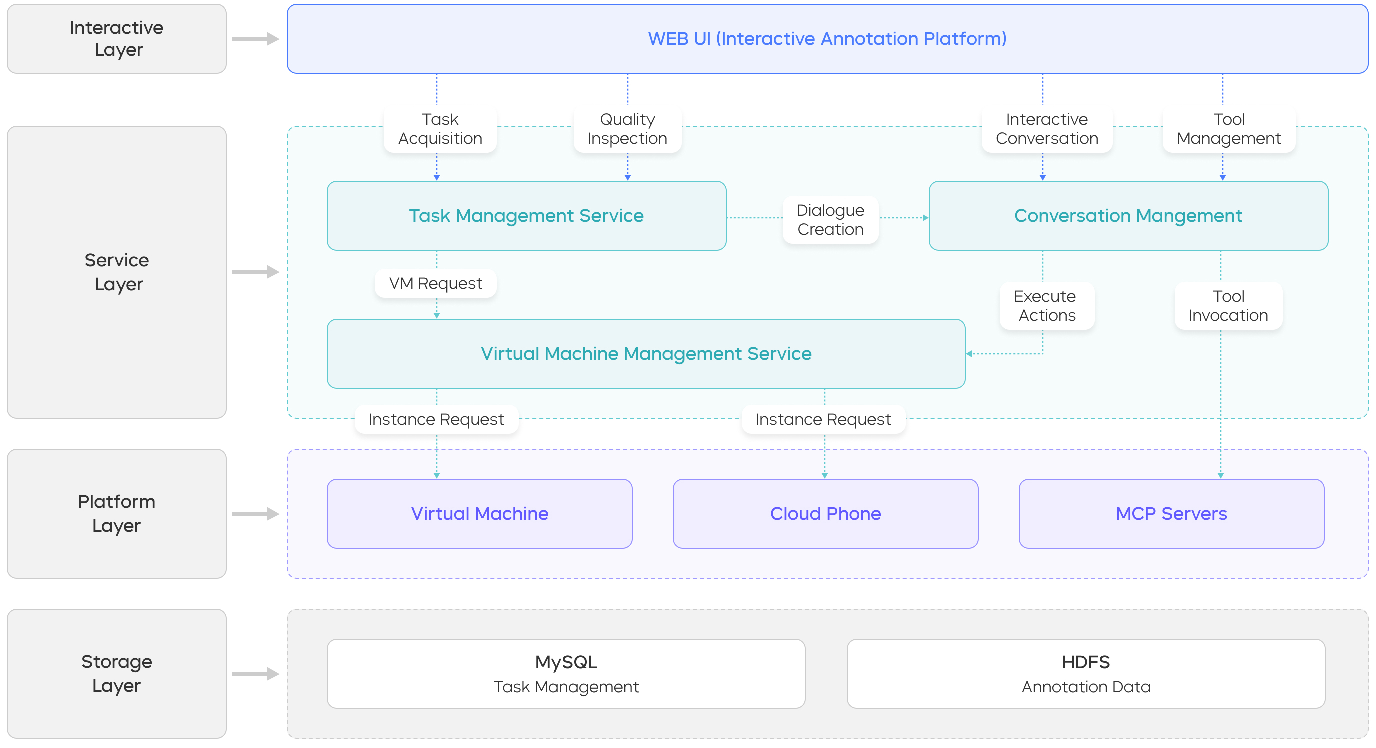

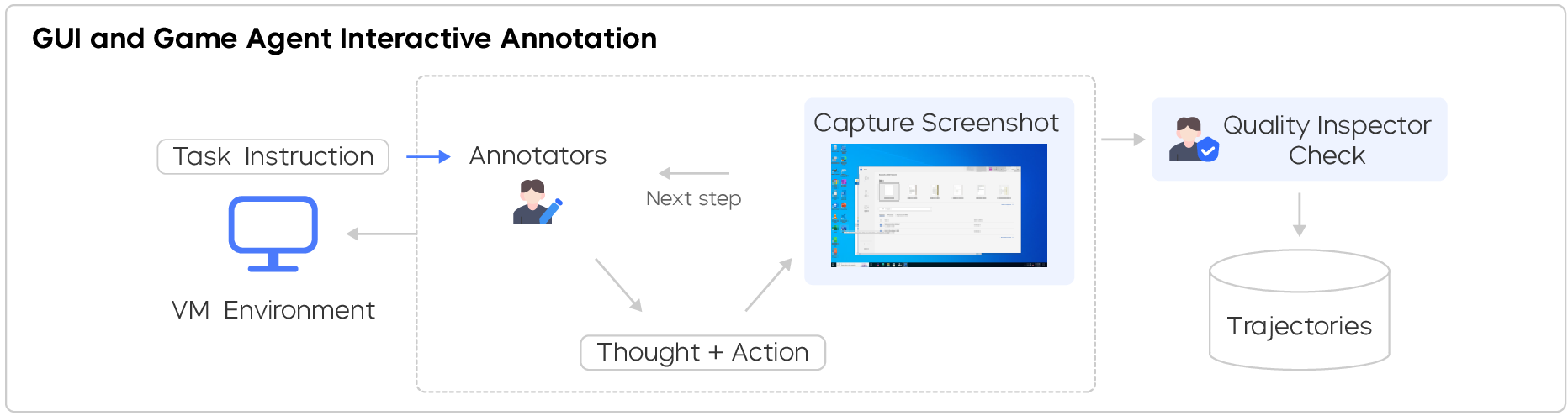

Data scarcity for agentic tasks is addressed via a large-scale, in-situ annotation platform. Annotators use a think-aloud protocol, verbalizing reasoning during real GUI interactions, with audio transcribed and aligned to actions. The annotation system is deployed directly on annotators' machines, capturing authentic, context-rich trajectories.

Figure 4: The four-layer architecture of the interactive annotation platform.

Figure 5: The interactive annotation workflow.
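Aligning transcribed think-aloud audio with recorded actions is essentially a timestamp join. The sketch below assumes a simple rule (each speech segment belongs to the first action at or after its start time, since reasoning is spoken before acting); the segment format, the rule, and the `align` helper are hypothetical, not the platform's documented behavior.

```python
from bisect import bisect_left

def align(segments, actions):
    """Pair think-aloud speech with the GUI actions it precedes.

    segments: (start_sec, end_sec, text) tuples from speech-to-text (assumed format)
    actions:  (time_sec, action) tuples sorted by time
    Rule (assumed): a segment belongs to the first action at or after its start time.
    """
    times = [t for t, _ in actions]
    thoughts = [[] for _ in actions]
    for start, _end, text in sorted(segments):
        i = bisect_left(times, start)        # index of first action with time >= start
        if i < len(actions):                 # speech after the last action is dropped
            thoughts[i].append(text)
    return [(" ".join(th), act) for th, (_, act) in zip(thoughts, actions)]

pairs = align(
    [(0.0, 1.5, "I need to open settings"), (2.1, 3.0, "now search for wifi"),
     (3.2, 3.8, "the box is at the top"), (5.0, 5.5, "done")],
    [(1.8, "click(settings)"), (4.0, "type('wifi')")],
)
```

Each resulting pair is a (thought, action) step of the kind that feeds the ReAct-style trajectories used for training.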

Multi-Turn Reinforcement Learning Framework

UI-TARS-2 employs a multi-turn RL framework based on reinforcement learning with verifiable rewards (RLVR), with domain-specific pipelines for GUI-Browsing, GUI-General, and games. Task synthesis uses LLMs to generate complex, multi-hop, and obfuscated queries, ensuring diversity and verifiability. Rewards are computed deterministically where possible (e.g., games), and otherwise via LLM-as-Judge and generative outcome reward models (ORMs) for open-ended tasks.
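The reward split described above can be sketched as a small router: deterministic checks where the task allows them, a judge model otherwise. The function name, the trajectory fields, exact-match scoring, and the `judge` signature are assumptions for illustration; real pipelines would normalize game scores and use richer answer matching.

```python
from typing import Callable, Optional

def compute_reward(domain: str, traj: dict,
                   judge: Optional[Callable[[str, str], float]] = None) -> float:
    """Hypothetical reward router: deterministic checks first, a judge model otherwise."""
    if domain == "game":
        # Game score read straight from the sandbox: deterministic, no model involved.
        return float(traj["game_score"])
    if "gold_answer" in traj:
        # Verifiable task: case- and whitespace-insensitive exact match.
        hit = traj["final_answer"].strip().lower() == traj["gold_answer"].strip().lower()
        return 1.0 if hit else 0.0
    if judge is None:
        raise ValueError("open-ended task requires an LLM-as-Judge or generative ORM")
    # Open-ended: delegate scoring of (task, final answer) to the judge model.
    return float(judge(traj["task"], traj["final_answer"]))

game_r = compute_reward("game", {"game_score": 42})
qa_r = compute_reward("browsing", {"final_answer": " Paris ", "gold_answer": "paris"})
open_r = compute_reward("general", {"task": "rename file", "final_answer": "done"},
                        judge=lambda task, answer: 0.7)   # stub judge for the example
```

Keeping deterministic checks first limits how often the noisier judge is consulted.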

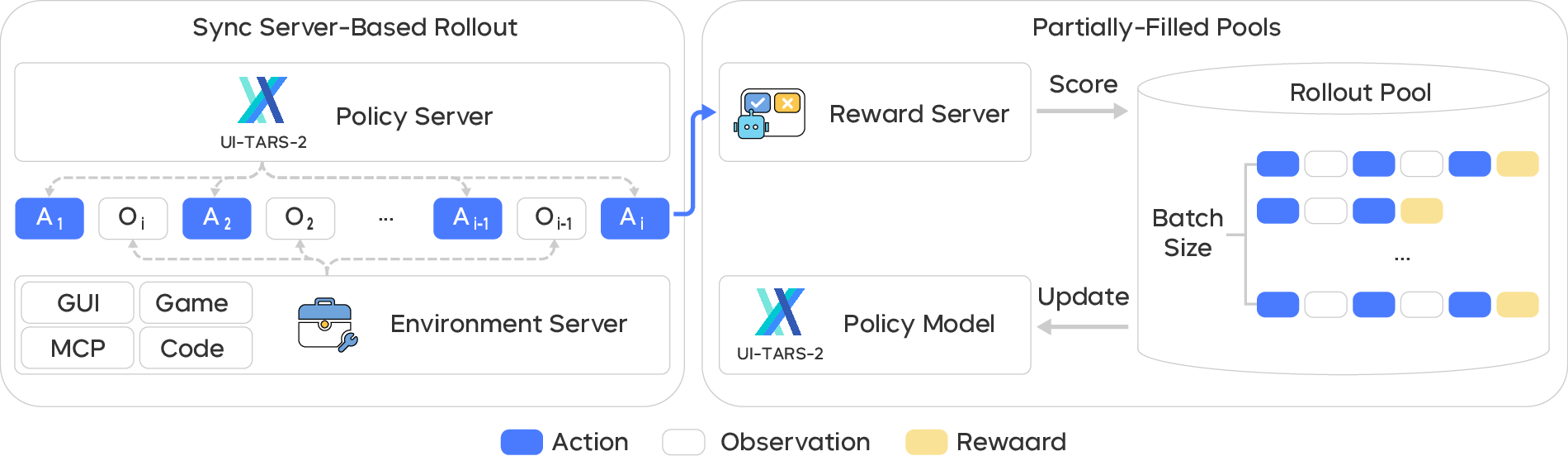

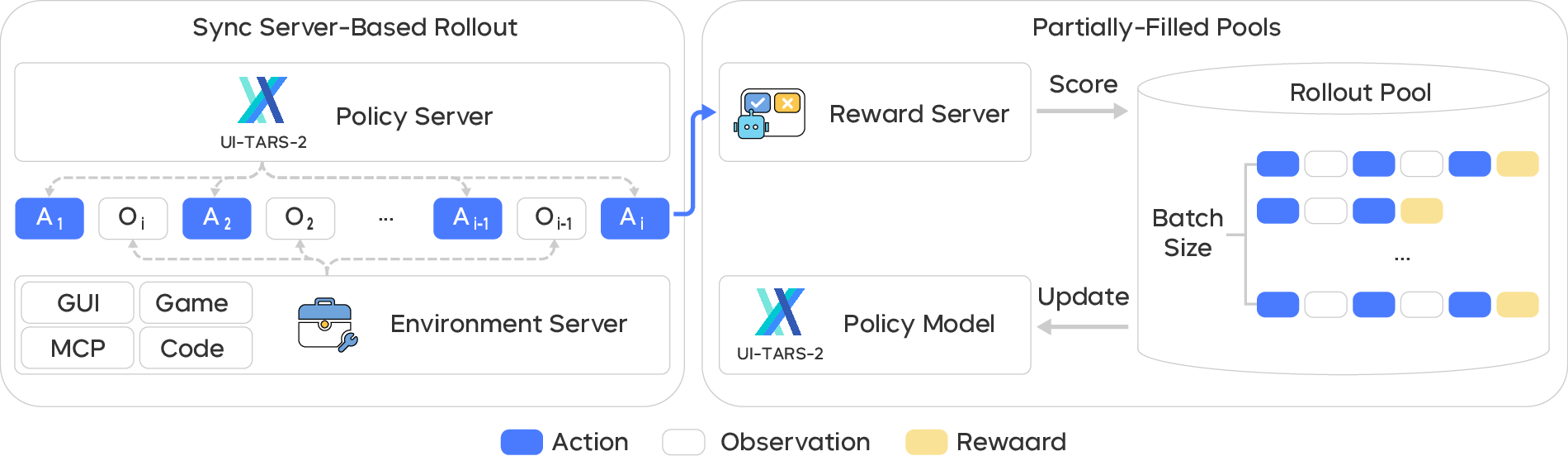

The RL infrastructure is fully asynchronous, with server-based rollout, streaming training from partially filled pools, and stateful environments that preserve context across long episodes. PPO is the primary optimization algorithm, enhanced with reward shaping, decoupled and length-adaptive generalized advantage estimation (GAE), value pretraining, and asymmetric clipping to promote exploration and stability.

Figure 6: The multi-turn RL training infrastructure of UI-TARS-2.
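Two of the PPO modifications can be sketched in a few lines. This is a hedged illustration, not the paper's code: it assumes per-step (not per-token) values, uses one common length-adaptive form, λ_policy = 1 − 1/(α·l) with l the episode length, and picks illustrative clip bounds with ε_high > ε_low. "Decoupled" means the critic's targets and the policy's advantages use different λ values.

```python
import numpy as np

def gae(rewards, values, gamma, lam):
    """GAE for one episode; `values` has len(rewards) + 1 entries (last is the bootstrap)."""
    rewards, values = np.asarray(rewards, float), np.asarray(values, float)
    adv, running = np.zeros(len(rewards)), 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]   # TD residual
        running = delta + gamma * lam * running
        adv[t] = running
    return adv

def decoupled_gae(rewards, values, gamma=1.0, lam_critic=1.0, alpha=0.05):
    """Critic targets use lam_critic; policy advantages use a length-adaptive lambda."""
    values = np.asarray(values, float)
    lam_policy = max(0.0, 1.0 - 1.0 / (alpha * len(rewards)))  # assumed form: longer -> closer to 1
    policy_adv = gae(rewards, values, gamma, lam_policy)
    value_targets = gae(rewards, values, gamma, lam_critic) + values[:-1]
    return policy_adv, value_targets

def asymmetric_clip_loss(logp_new, logp_old, adv, eps_low=0.2, eps_high=0.28):
    """PPO clipped surrogate with a wider upper bound than lower bound."""
    ratio = np.exp(np.asarray(logp_new) - np.asarray(logp_old))
    clipped = np.clip(ratio, 1.0 - eps_low, 1.0 + eps_high)
    return float(-np.mean(np.minimum(ratio * adv, clipped * adv)))

# Sparse outcome reward at the last step of a 3-step episode.
adv, targets = decoupled_gae([0.0, 0.0, 1.0], [0.5, 0.5, 0.5, 0.0])
```

With α = 0.05, this toy 3-step episode gets λ_policy = 0 (one-step TD), while a 200-step sequence would get 0.9, so longer trajectories propagate credit further. The wider upper clip lets the probability of an initially unlikely but rewarded action grow more per update, which is the exploration effect the text refers to.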

Model Merging and Hybridization

Rather than joint RL across all domains—which is unstable and computationally prohibitive—UI-TARS-2 trains domain-specialized agents and merges them via parameter interpolation, leveraging the linear mode connectivity of models fine-tuned from a shared checkpoint. This approach preserves domain-specific performance while enabling strong cross-domain generalization.
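Parameter interpolation over specialists that share a base checkpoint amounts to a weighted average of their weights: θ_merged = Σ_k w_k θ_k with Σ_k w_k = 1, which is equivalent to adding the weighted task vectors (θ_k − θ_base) back onto the base. The sketch below uses toy dicts of NumPy arrays in place of real state dicts; the helper name and the equal weights are assumptions.

```python
import numpy as np

def interpolate(specialists, weights):
    """theta_merged = sum_k w_k * theta_k over parameter dicts sharing one architecture."""
    if any(w < 0 for w in weights) or not np.isclose(sum(weights), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    names = specialists[0].keys()
    if any(s.keys() != names for s in specialists):
        raise ValueError("specialists must come from the same architecture/checkpoint")
    return {n: sum(w * s[n] for w, s in zip(weights, specialists)) for n in names}

base = {"w": np.array([1.0, 1.0])}
gui = {"w": np.array([1.0, 3.0])}     # toy "GUI specialist"
game = {"w": np.array([3.0, 1.0])}    # toy "game specialist"
merged = interpolate([gui, game], [0.5, 0.5])
# Same result via task vectors: base + sum_k w_k * (theta_k - base).
via_deltas = base["w"] + 0.5 * (gui["w"] - base["w"]) + 0.5 * (game["w"] - base["w"])
```

The shared checkpoint matters: linear mode connectivity is what makes the averaged weights land in a low-loss region rather than between two unrelated minima.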

Empirical Results

UI-TARS-2 demonstrates strong empirical performance across a wide spectrum of benchmarks:

- GUI Benchmarks: 88.2 on Online-Mind2Web, 47.5 on OSWorld, 50.6 on WindowsAgentArena, 73.3 on AndroidWorld. The model outperforms Claude and OpenAI agents on several metrics.

- Game Benchmarks: Mean normalized score of 59.8 across a 15-game suite (approaching 60% of human-level performance), with competitive results on LMGame-Bench.

- System-Level Tasks: 45.3 on Terminal Bench, 68.7 on SWE-Bench Verified, 29.6 on BrowseComp-en, 50.5 on BrowseComp-zh (with GUI-SDK enabled).

Notably, RL-trained models exhibit strong out-of-domain generalization, with RL on browser tasks transferring to OSWorld and AndroidWorld, yielding +10.5% and +8.7% improvements, respectively.

Training Dynamics and Analysis

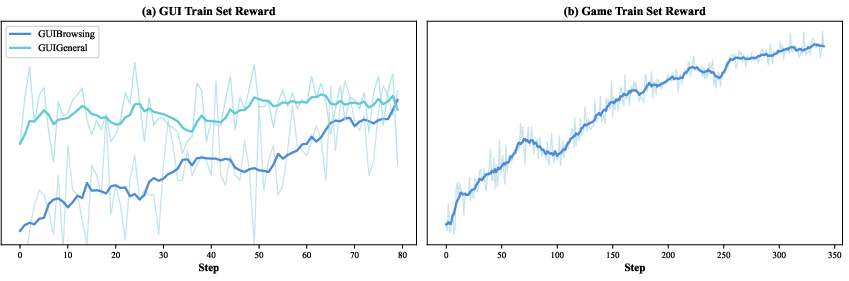

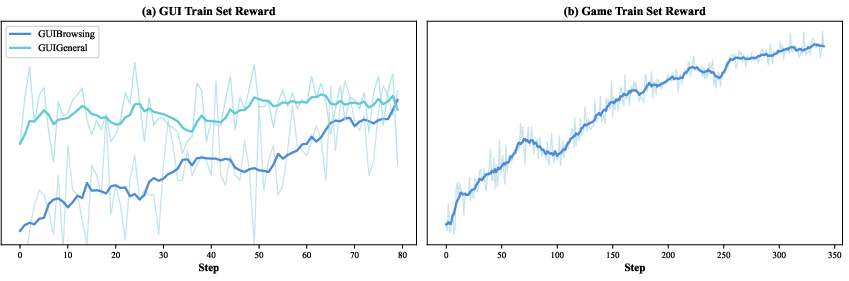

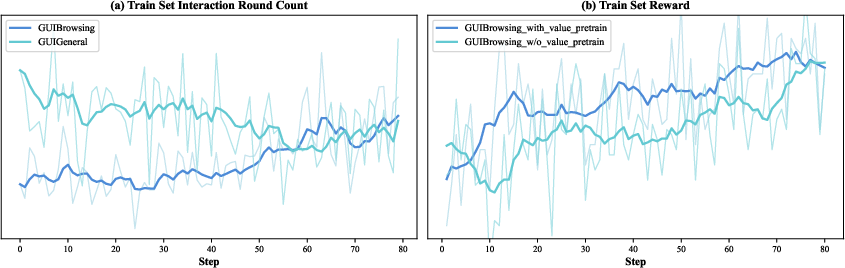

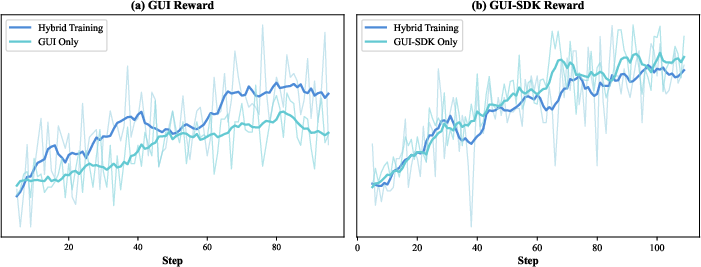

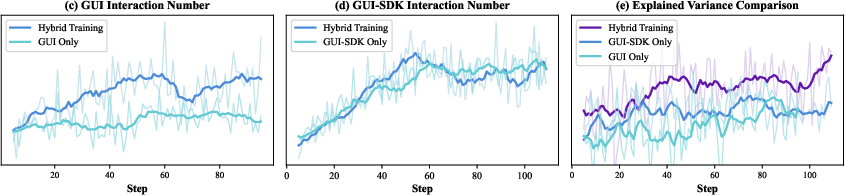

Training reward curves show consistent improvement across GUI and game domains.

Figure 7: Training reward dynamics for GUI-Browsing, GUI-General, and game scenarios in UI-TARS-2.

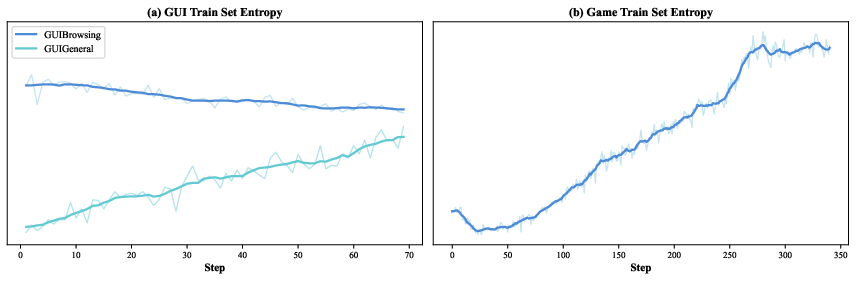

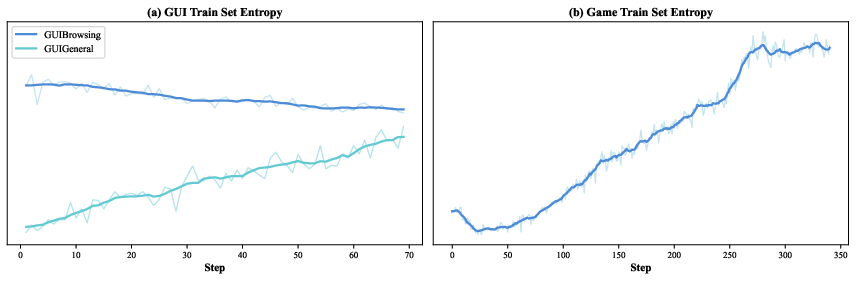

Entropy dynamics reveal that, unlike reasoning-focused RL (where entropy typically decreases), UI-TARS-2 often maintains or increases entropy, indicating sustained exploration in visually rich, interactive environments.

Figure 8: Training entropy dynamics for GUI-Browsing, GUI-General, and game scenarios in UI-TARS-2.
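The entropy tracked in such curves is usually the mean Shannon entropy of the policy's next-token distributions over generated tokens. The sketch below assumes that definition (the report's exact averaging may differ) and takes raw logits of shape (tokens, vocab).

```python
import numpy as np

def mean_token_entropy(logits):
    """Average entropy (nats) of the policy's next-token distributions; logits: (tokens, vocab)."""
    z = logits - logits.max(axis=-1, keepdims=True)            # stabilize the softmax
    logp = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))   # log-softmax
    return float(-(np.exp(logp) * logp).sum(axis=-1).mean())

uniform = mean_token_entropy(np.zeros((2, 4)))                 # maximally uncertain
peaked = mean_token_entropy(np.array([[100.0, 0.0, 0.0, 0.0]]))  # nearly deterministic
```

Rising values of this quantity during training mean the policy is spreading probability over more actions, which is the sustained-exploration signal described above.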

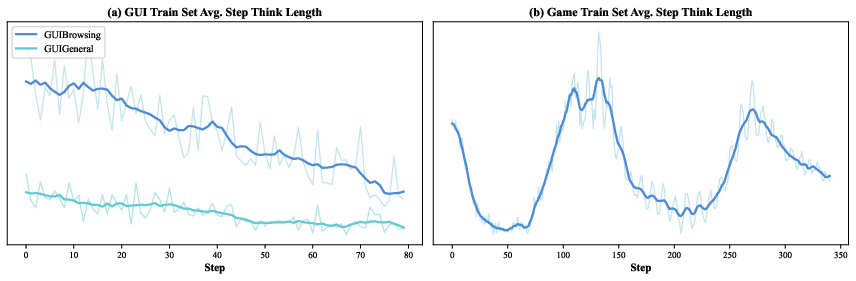

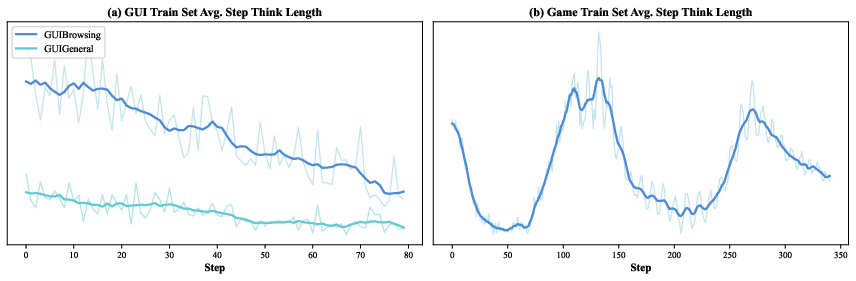

Average think length per step decreases over training, suggesting that agents learn to act more efficiently, relying less on extended internal reasoning once correct action patterns are acquired.

Figure 9: Training dynamics of average step think length for the GUI-Browsing, GUI-General, and game scenarios in UI-TARS-2 RL training.

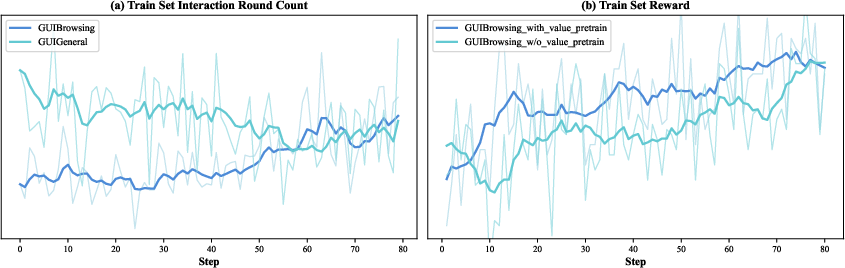

Value model pretraining is shown to be critical for PPO stability, with pretraining leading to higher rewards and more reliable value estimates.

Figure 10: (a) Training dynamics of average interaction round count for the GUI-Browsing and GUI-General scenarios in UI-TARS-2 RL training; (b) Impact of value model pretraining in GUI-Browsing scenarios.
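One common recipe for value pretraining, assumed here rather than taken from the report, is to freeze the policy, collect rollouts, and regress the critic onto Monte Carlo returns (GAE targets with λ = 1) before any PPO policy updates. The toy sketch below uses a linear critic on one-hot state features; `monte_carlo_returns`, `pretrain_value`, and the hyperparameters are hypothetical.

```python
import numpy as np

def monte_carlo_returns(rewards, gamma=1.0):
    """Discounted return-to-go, i.e. GAE targets with lambda = 1."""
    out, g = np.zeros(len(rewards)), 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        out[t] = g
    return out

def pretrain_value(features, returns, lr=0.1, epochs=500):
    """Fit a toy linear critic V(s) = features @ w by MSE, with the policy frozen."""
    w = np.zeros(features.shape[1])
    for _ in range(epochs):
        err = features @ w - returns                # prediction error per state
        w -= lr * features.T @ err / len(returns)   # gradient step on 0.5 * mean squared error
    return w

returns = monte_carlo_returns([0.0, 0.0, 1.0], gamma=0.5)
w = pretrain_value(np.eye(3), returns)              # one-hot state features (toy)
```

Starting PPO from a critic that already fits the current policy's returns avoids the early phase where advantages are dominated by an untrained value head.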

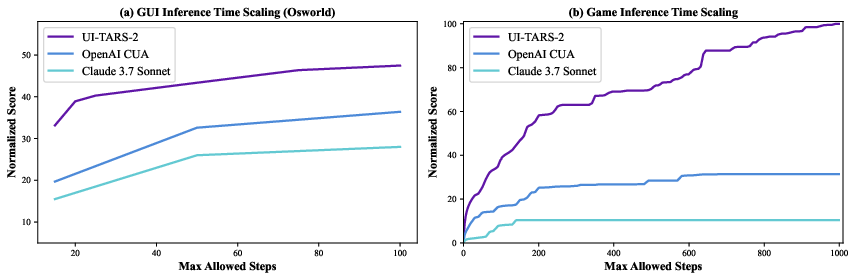

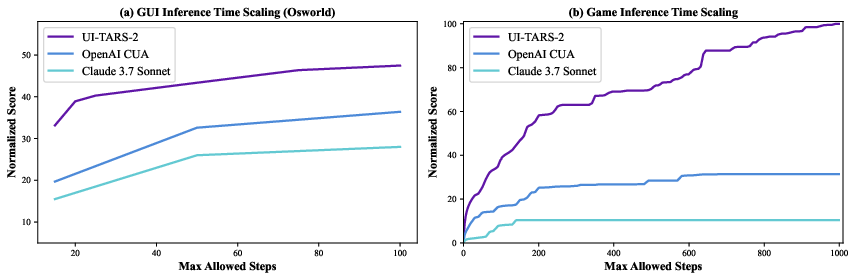

Inference-time scaling experiments demonstrate that UI-TARS-2 can effectively leverage increased step budgets, with performance improving monotonically as allowed steps increase, in contrast to baselines that plateau.

Figure 11: Inference-time scaling evaluation on OSWorld and game benchmarks.

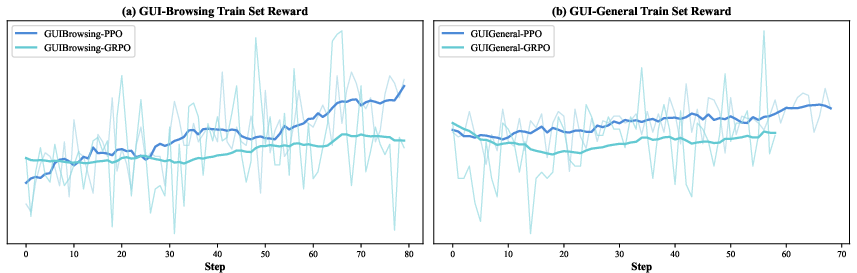

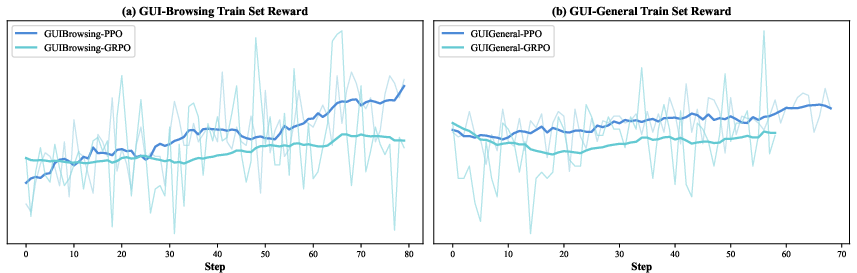

PPO outperforms GRPO in this setting, with higher rewards and lower volatility.

Figure 12: Training reward dynamics of GUI-Browsing and GUI-General under PPO and GRPO training.
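The structural difference between the two algorithms lies in how advantages are estimated. The sketch below shows the textbook forms (the report's exact variants may differ): GRPO z-scores each rollout's outcome reward within a group sampled for the same task and gives every step of that rollout the same value, while PPO derives per-step advantages from a learned critic, here simplified to one-step TD residuals. The helper names and numbers are illustrative.

```python
import numpy as np

def grpo_advantages(group_rewards, eps=1e-6):
    """GRPO: z-score each rollout's outcome reward within its group for the same task.
    Every step of a rollout then shares that single advantage value."""
    r = np.asarray(group_rewards, float)
    return (r - r.mean()) / (r.std() + eps)

def ppo_td_advantages(rewards, values, gamma=1.0):
    """PPO-style per-step advantages from a learned critic (GAE with lambda = 0);
    `values` has one extra bootstrap entry."""
    r, v = np.asarray(rewards, float), np.asarray(values, float)
    return r + gamma * v[1:] - v[:-1]

mixed = grpo_advantages([1.0, 0.0, 1.0, 0.0])       # some rollouts succeed
all_fail = grpo_advantages([0.0, 0.0, 0.0, 0.0])    # a hard task: no rollout succeeds
per_step = ppo_td_advantages([0.0, 0.0, 0.0], [0.2, 0.5, 0.1, 0.0])
```

When every rollout in a group gets the same outcome, GRPO's advantages are all zero, whereas a critic can still assign nonzero per-step credit. This is one plausible contributor to the gap on long-horizon tasks, offered here as an illustration rather than as the report's stated explanation.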

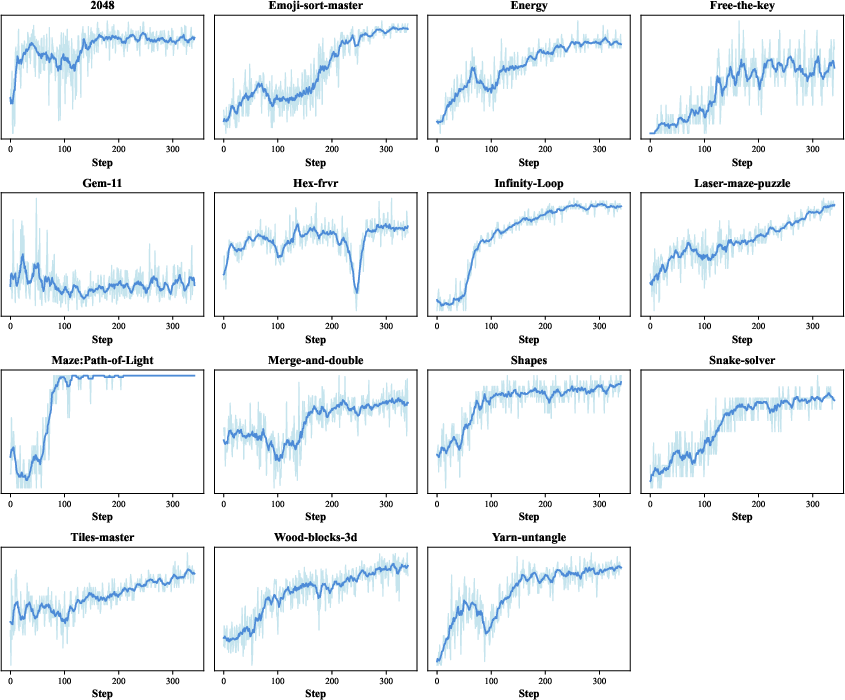

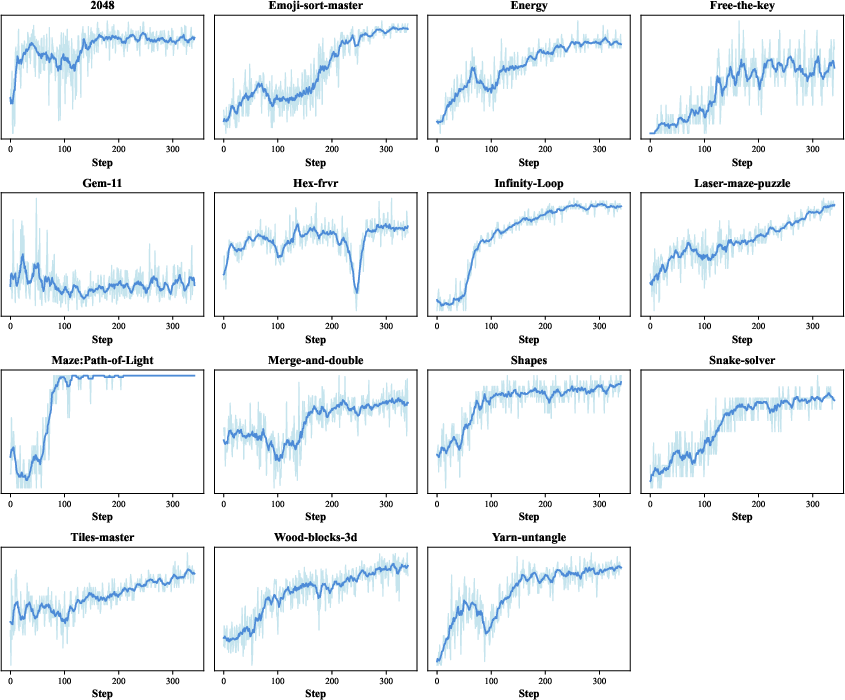

Per-game reward curves in the 15-game suite show both rapid learning and plateaus, with some games reaching or surpassing human-level reference.

Figure 13: Training reward dynamics for each game in the 15-game collection.

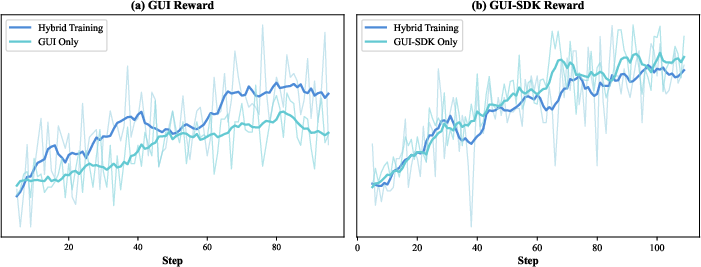

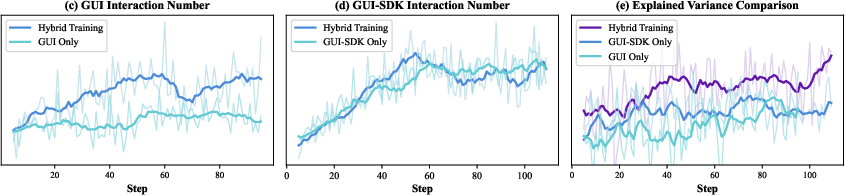

Hybrid RL (training on both GUI and GUI-SDK interfaces) yields stronger transfer and higher explained variance in the value model, compared to single-interface baselines.

Figure 14: Training reward of hybrid training versus training purely on one interface.
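Explained variance measures how much of the spread in observed returns the value model accounts for. The sketch uses the standard definition found in common RL libraries, 1 − Var(returns − values) / Var(returns); that the report uses this exact formula is an assumption.

```python
import numpy as np

def explained_variance(values, returns):
    """1 - Var(returns - values) / Var(returns); 1 is a perfect critic, 0 matches a constant guess."""
    values, returns = np.asarray(values, float), np.asarray(returns, float)
    var_r = returns.var()
    return float("nan") if var_r == 0 else float(1.0 - (returns - values).var() / var_r)

perfect = explained_variance([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
constant = explained_variance([2.0, 2.0, 2.0], [1.0, 2.0, 3.0])
```

Higher explained variance under hybrid training therefore means the critic's predictions track actual returns more closely, which in turn yields less noisy advantages for the policy.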

W4A8 quantization (4-bit weights, 8-bit activations) increases inference speed from 29.6 to 47 tokens/s (about 1.6x) and reduces latency from 4.0 to 2.5 seconds per round (a 37.5% reduction), with only a minor drop in accuracy, supporting practical deployment.
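To make "W4A8" concrete, here is a minimal simulated sketch: symmetric int4 weights with one scale per output channel, symmetric int8 activations with a single per-tensor scale, integer accumulation, then dequantization. The granularities and helper names are assumptions; production kernels typically use finer-grained (e.g. per-group) scales and fused integer GEMMs rather than NumPy.

```python
import numpy as np

def quantize_sym(x, bits, axis=None):
    """Symmetric linear quantization to signed integers with `bits` bits."""
    qmax = 2 ** (bits - 1) - 1                    # 7 for int4, 127 for int8
    amax = np.max(np.abs(x), axis=axis, keepdims=(axis is not None))
    scale = np.where(amax == 0, 1.0, amax / qmax)
    q = np.clip(np.round(x / scale), -qmax - 1, qmax).astype(np.int32)
    return q, scale

def w4a8_matmul(x, w):
    """y = x @ w.T with int4 per-output-channel weights and int8 per-tensor activations."""
    qw, sw = quantize_sym(w, bits=4, axis=1)      # one scale per output row of w
    qx, sx = quantize_sym(x, bits=8)              # one scale for the whole activation tensor
    acc = qx @ qw.T                               # integer accumulate
    return acc * sx * sw.T                        # dequantize back to float

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 16))     # activations: 4 tokens, hidden size 16 (toy sizes)
w = rng.standard_normal((8, 16))     # weights: 8 output channels
y = w4a8_matmul(x, w)
rel_err = np.linalg.norm(y - x @ w.T) / np.linalg.norm(x @ w.T)
```

The speedup comes from moving 4-bit weights through memory and computing in integers; the small relative error in this toy mirrors the "minor drop in accuracy" noted above.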

Implications and Future Directions

UI-TARS-2 establishes a robust methodology for scaling GUI agents via multi-turn RL, data flywheel curation, and hybrid environment design. The empirical results highlight the feasibility of unifying GUI, game, and system-level tasks within a single agentic framework, with strong generalization and efficiency.

The work suggests several directions for future research:

- Long-Horizon Planning: While UI-TARS-2 achieves strong results, certain games and complex tasks reveal a ceiling imposed by the backbone's reasoning and planning capacity. Advances in credit assignment, curriculum learning, and memory architectures are needed to further improve long-horizon performance.

- Reward Modeling: The use of generative ORMs and LLM-as-Judge is effective, but further work is required to reduce false positives and improve reward fidelity, especially in open-ended domains.

- Unified Optimization: Parameter interpolation is effective for merging domain-specialized agents, but more principled approaches to joint optimization and knowledge transfer could yield further gains.

- Scalable Data Curation: The data flywheel paradigm demonstrates the importance of continual, on-policy data generation and curation. Automated, scalable annotation and synthesis pipelines will be critical for future agent scaling.

Conclusion

UI-TARS-2 represents a significant advance in the development of generalist GUI agents, integrating scalable data generation, stabilized multi-turn RL, hybrid environment design, and efficient model merging. The system achieves strong, balanced performance across GUI, game, and system-level tasks, with detailed analyses providing actionable insights for large-scale agent RL. The methodological contributions and empirical findings provide a solid foundation for future research on unified, robust, and versatile digital agents.