- The paper introduces DBStereo, which decouples spatial and disparity aggregation in 4D cost volumes using efficient 2D convolutions.

- It replaces traditional 3D convolutions with a novel Channel2Disp operator and Bidirectional Geometry Aggregation (BGA) blocks, reducing both computational cost and overfitting.

- The method achieves superior accuracy on benchmarks like Scene Flow and KITTI while enabling real-time inference on resource-constrained devices.

Decoupling Bidirectional Geometric Representations of 4D Cost Volume with 2D Convolution

Introduction

This paper introduces DBStereo, a stereo matching framework that fundamentally rethinks the cost aggregation paradigm for 4D cost volumes. The authors challenge the prevailing empirical design that mandates dimension-matched 3D convolutions for regularizing 4D cost volumes, arguing that this approach is suboptimal for both computational efficiency and representational fidelity. DBStereo leverages pure 2D convolutions to decouple spatial and disparity aggregation, yielding a model that achieves state-of-the-art accuracy and real-time inference, even on resource-constrained devices.

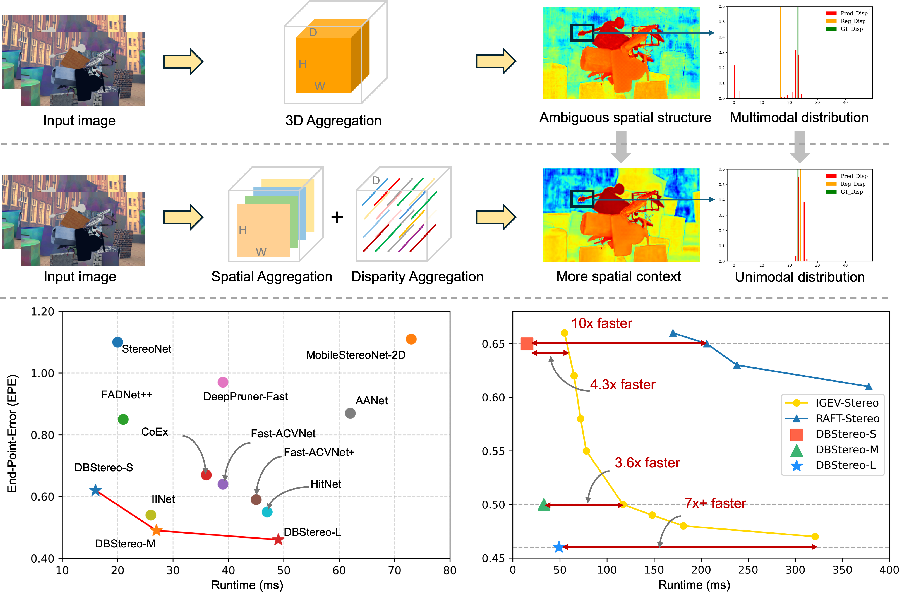

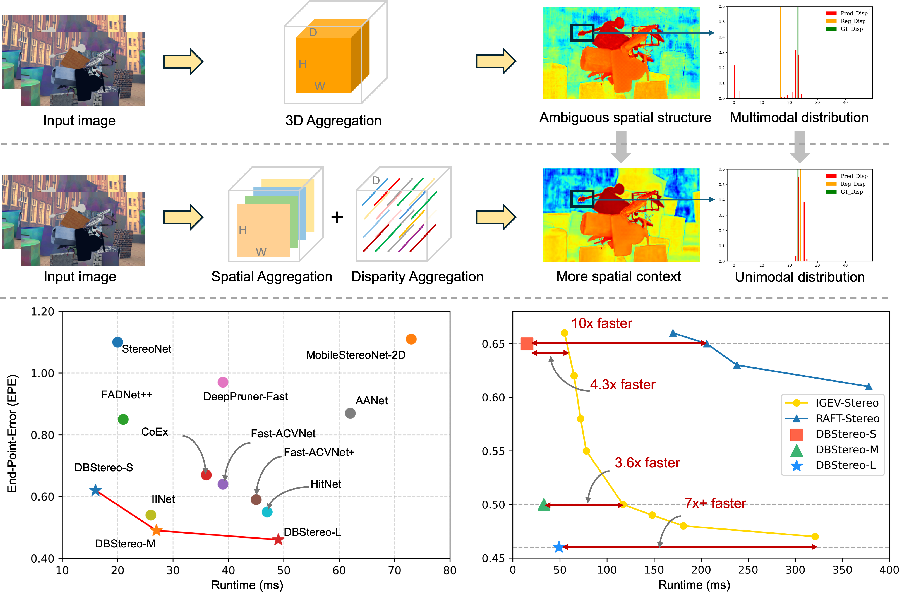

Figure 1: DBStereo decouples traditional 3D aggregation into spatial and disparity aggregation using 2D convolutions, outperforming all existing aggregation-based methods in both accuracy and inference time.

Limitations of 3D Convolutional Aggregation

Traditional stereo matching frameworks construct a 4D cost volume (D×C×H×W) and regularize it with 3D CNNs. This coupled aggregation pattern assumes that spatial and disparity contexts can be jointly modeled with local 3D kernels. However, this design introduces two critical limitations:

- Coupled Aggregation: 3D convolutions force spatial and disparity features to share receptive fields, which is misaligned with the task-specific priors of stereo matching. Spatial context benefits from local smoothness, while disparity context requires global unimodality.

- Slow Receptive Field Expansion: The disparity dimension's receptive field grows slowly with stacked 3D convolutions, necessitating deep networks and incurring high computational and memory costs.
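The receptive-field argument can be made concrete with a little arithmetic. The sketch below assumes stride-1, undilated 3D convolutions with kernel size 3 and a hypothetical setting of 48 disparity candidates (a maximum disparity of 192 px evaluated at 1/4 resolution). Real 3D aggregation networks usually add strided hourglass stages, which grow the receptive field faster than this idealized count, so treat the numbers as illustrative only.

```python
import math


def receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field along one axis after stacking stride-1, undilated convs.

    Each layer with kernel size k widens the field by (k - 1).
    """
    return 1 + num_layers * (kernel - 1)


def layers_to_cover(num_disparities: int, kernel: int = 3) -> int:
    """Smallest number of stacked convs whose disparity receptive field spans all candidates."""
    return math.ceil((num_disparities - 1) / (kernel - 1))


# Hypothetical setting: maximum disparity 192 px at 1/4 resolution.
D = 192 // 4  # 48 disparity candidates
n = layers_to_cover(D)
print(f"3D convs (k=3) needed to span D={D}: {n}")  # 24
print(f"receptive field after {n} layers: {receptive_field(n)}")  # 49
# Once disparity is folded into channels, one 1x1 2D conv mixes all D candidates.
print("1x1 2D convs needed after folding disparity into channels: 1")
```

The contrast is the point of the decoupled design: depth along the disparity axis is no longer needed to reach a global disparity view.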

According to the authors, these limitations lead to overfitting, poor generalization in ill-posed regions (e.g., textureless, occluded, or reflective areas), and inference too slow for real-time applications.

Spatial-Disparity Decoupled Aggregation Paradigm

The authors propose a paradigm shift: decouple the aggregation of spatial and disparity information using pure 2D convolutions. The key insights are:

- Spatial Local Smoothness Prior: Neighboring pixels that lie on the same surface should have similar disparities.

- Disparity Unimodality Prior: Each pixel's disparity distribution should be sharply unimodal.

DBStereo operationalizes these priors by reshaping the 4D cost volume into a 3D tensor of shape (D⋅C)×H×W via the Channel2Disp operator. Aggregation proceeds in two stages:

- Spatial Aggregation: 2D convolutions with 3×3 kernels are applied to each disparity plane, smoothing spatial noise and resolving ambiguities.

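- Disparity Aggregation: 2D convolutions with 1×1 kernels act globally across the disparity dimension, enforcing unimodality and suppressing incorrect responses. Because Channel2Disp has folded the disparity axis into the channel axis, a single 1×1 kernel sees every disparity candidate at a given pixel.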

This decoupling imposes precise inductive biases, reducing the model's search space and mitigating overfitting.

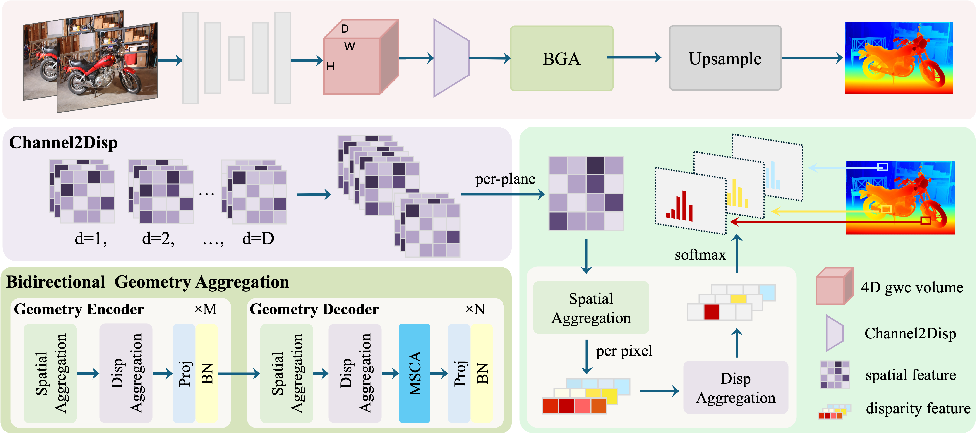

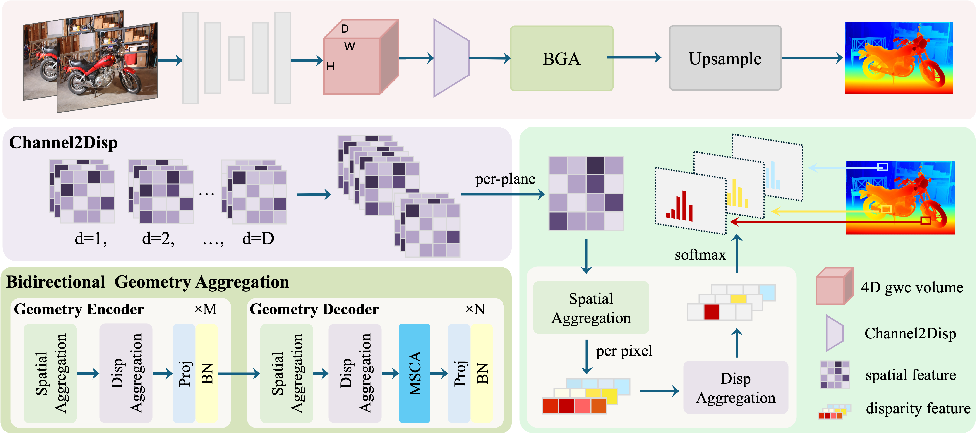

Figure 2: DBStereo framework with stacked Bidirectional Geometry Aggregation (BGA) blocks, alternating spatial and disparity aggregation modules for efficient cost volume regularization.

DBStereo Architecture

DBStereo utilizes MobileNetV2 pretrained on ImageNet for multi-scale feature extraction. Features from both left and right images are upsampled to $1/4$ resolution and used to construct the cost volume and spatial attention maps.

Cost Volume Construction

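A 4D group-wise correlation volume is constructed by splitting the feature channels into groups and computing a correlation map for each group and each disparity candidate. This approach balances information richness and computational efficiency: it retains more matching information than a single-channel correlation volume while using far less memory than a full feature-concatenation volume.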
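A minimal NumPy sketch of a group-wise correlation volume follows. It uses the GwcNet-style formulation (mean of channel-wise products within each group, right features shifted by the disparity); the exact normalization, padding, and function name used by DBStereo are assumptions here, and `groupwise_correlation_volume` is a hypothetical helper.

```python
import numpy as np


def groupwise_correlation_volume(feat_l, feat_r, num_groups, max_disp):
    """Build a (G, D, H, W) group-wise correlation volume (GwcNet-style sketch).

    feat_l, feat_r: float arrays of shape (C, H, W) for the left/right images.
    Entry [g, d, y, x] is the mean, over the channels of group g, of
    feat_l[:, y, x] * feat_r[:, y, x - d]. Positions with x - d < 0 have no
    valid match and stay zero.
    """
    C, H, W = feat_l.shape
    assert C % num_groups == 0, "channels must split evenly into groups"
    cpg = C // num_groups  # channels per group
    fl = feat_l.reshape(num_groups, cpg, H, W)
    fr = feat_r.reshape(num_groups, cpg, H, W)
    volume = np.zeros((num_groups, max_disp, H, W), dtype=feat_l.dtype)
    for d in range(max_disp):
        if d == 0:
            volume[:, 0] = (fl * fr).mean(axis=1)
        else:
            # Compare left pixel x with right pixel x - d.
            volume[:, d, :, d:] = (fl[..., d:] * fr[..., :-d]).mean(axis=1)
    return volume


rng = np.random.default_rng(0)
left = rng.standard_normal((8, 4, 6))
right = rng.standard_normal((8, 4, 6))
vol = groupwise_correlation_volume(left, right, num_groups=4, max_disp=3)
print(vol.shape)  # (4, 3, 4, 6)
```

Splitting into G groups gives G correlation values per disparity instead of one (as in a plain correlation volume) or C concatenated features (as in a concatenation volume), which is the trade-off described above.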

Channel2Disp Operator

The Channel2Disp operator fuses the feature and disparity dimensions, reshaping the cost volume to (G⋅D)×H×W. This transformation enables the use of standard 2D convolutions for subsequent aggregation.
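Since Channel2Disp only reorganizes axes, it involves no arithmetic. The sketch below assumes a disparity-major channel layout (channel index d·G + g), so that the G group channels of each disparity candidate stay contiguous; the summary does not specify the ordering, and both function names are hypothetical.

```python
import numpy as np


def channel2disp(volume):
    """Fold a (G, D, H, W) cost volume into a (G*D, H, W) 2D feature map.

    Assumed layout: channel index = d * G + g (disparity-major).
    """
    G, D, H, W = volume.shape
    return volume.transpose(1, 0, 2, 3).reshape(D * G, H, W)


def disp2channel(fused, num_groups):
    """Inverse of channel2disp: (G*D, H, W) -> (G, D, H, W)."""
    GD, H, W = fused.shape
    D = GD // num_groups
    return fused.reshape(D, num_groups, H, W).transpose(1, 0, 2, 3)


print(channel2disp(np.zeros((8, 48, 16, 32))).shape)  # (384, 16, 32)
```

After this reshape, any standard 2D convolution treats the G·D fused axis as its input channels, which is what allows the aggregation stages to avoid 3D kernels.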

Bidirectional Geometry Aggregation (BGA) Block

The BGA block alternates between spatial aggregation (2D 3×3 convolutions) and disparity aggregation (2D 1×1 convolutions). The resulting encoder-decoder structure efficiently extracts geometric representations while enforcing the spatial local smoothness and disparity unimodality priors.
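A minimal NumPy sketch of one spatial-then-disparity step is given below. It rests on several assumptions that the summary does not state: the disparity-major channel layout (channel d·G + g), 3×3 spatial weights shared across all disparity planes (equivalent to a 3D convolution with a 1×3×3 kernel), ReLU activations, and no residual connections, normalization, or encoder-decoder downsampling. All function names are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def spatial_aggregation(x, w, num_groups):
    """3x3 conv applied to each disparity plane independently.

    x: (D*G, H, W), disparity-major. w: (G, G, 3, 3), shared across planes.
    Uses zero padding and cross-correlation, as deep-learning convs do.
    """
    DG, H, W = x.shape
    D = DG // num_groups
    planes = x.reshape(D, num_groups, H, W)
    padded = np.pad(planes, ((0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(planes)
    for i in range(3):
        for j in range(3):
            patch = padded[:, :, i:i + H, j:j + W]  # (D, G, H, W)
            out += np.einsum("oc,dchw->dohw", w[:, :, i, j], patch)
    return out.reshape(DG, H, W)


def disparity_aggregation(x, w):
    """1x1 conv over the fused (D*G) channels: mixes all disparities per pixel."""
    return np.einsum("oc,chw->ohw", w, x)


def bga_step(x, w_spatial, w_disp, num_groups):
    """One spatial step followed by one disparity step (hypothetical layout)."""
    x = relu(spatial_aggregation(x, w_spatial, num_groups))
    return relu(disparity_aggregation(x, w_disp))


rng = np.random.default_rng(0)
G, D, H, W = 4, 6, 5, 7
x = rng.standard_normal((D * G, H, W))
w_s = rng.standard_normal((G, G, 3, 3)) * 0.1
w_d = rng.standard_normal((D * G, D * G)) * 0.1
print(bga_step(x, w_s, w_d, num_groups=G).shape)  # (24, 5, 7)
```

The split shows the intended inductive biases: the spatial step never mixes disparity planes, and the disparity step never looks at neighboring pixels.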

Disparity Prediction

A softmax over the disparity dimension is used to regress the disparity map at $1/4$ resolution, which is then upsampled to full resolution for supervision. The loss function is a weighted smooth L1 loss applied to both the initial and the final disparity predictions.
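The regression and loss can be sketched as follows. This assumes the common soft-argmax form (softmax probabilities times disparity candidates, summed), nearest-neighbour upsampling, and hypothetical loss weights; DBStereo's actual upsampling method and weights may differ. One detail holds regardless: when a disparity map is upsampled by a factor s along the image width, its values must also be multiplied by s.

```python
import numpy as np


def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def regress_disparity(cost, scale=4):
    """cost: (D, H, W) aggregated scores at 1/scale resolution.

    Returns a (H*scale, W*scale) disparity map in full-resolution pixels.
    """
    D = cost.shape[0]
    prob = softmax(cost, axis=0)
    candidates = np.arange(D, dtype=np.float64).reshape(D, 1, 1)
    disp_low = (prob * candidates).sum(axis=0)  # expected disparity per pixel
    # Nearest-neighbour upsampling (an assumption); rescale the values too.
    disp_full = np.repeat(np.repeat(disp_low, scale, axis=0), scale, axis=1)
    return disp_full * scale


def smooth_l1(pred, target, beta=1.0):
    diff = np.abs(pred - target)
    loss = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return loss.mean()


def total_loss(preds, target, weights):
    """Weighted sum of smooth L1 losses over (initial, final) predictions."""
    return sum(w * smooth_l1(p, target) for w, p in zip(weights, preds))


rng = np.random.default_rng(0)
disp = regress_disparity(rng.standard_normal((48, 16, 32)))
print(disp.shape)  # (64, 128)
# Hypothetical weights: 0.5 for the initial prediction, 1.0 for the final one.
print(total_loss([disp, disp], np.zeros_like(disp), weights=[0.5, 1.0]))
```

Because the output is an expectation over candidates, it is differentiable and can take sub-pixel values; a sharply unimodal distribution makes it close to the arg-max.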

Experimental Results

DBStereo is evaluated on the Scene Flow and KITTI benchmarks. The large variant, DBStereo-L, achieves an EPE of 0.45 px and a D1 of 1.57% on Scene Flow, outperforming all published real-time methods and even surpassing high-performance iterative methods such as RAFT-Stereo and IGEV-Stereo. Its inference time of 49 ms is over 85% lower than that of these iterative baselines. The model also generalizes well and remains robust on both synthetic and real-world datasets.

Implementation and Deployment Considerations

- Computational Efficiency: Pure 2D convolutions drastically reduce memory and FLOPs compared to 3D CNNs, enabling deployment on edge devices.

- Scalability: The decoupled aggregation paradigm allows flexible scaling of model capacity by adjusting the number of BGA blocks and convolutional channels.

- Generalization: Explicit inductive biases improve performance in textureless, occluded, and reflective regions, addressing common failure modes of prior methods.

- Resource Requirements: DBStereo can be trained on commodity GPUs and achieves real-time inference on RTX 3090-class hardware.

Theoretical and Practical Implications

The decoupled aggregation paradigm challenges the necessity of dimension-matched convolutions for high-dimensional cost volumes, suggesting that task-specific priors can be more effectively encoded via architectural design. This approach opens new avenues for efficient geometric reasoning in stereo matching and potentially other dense correspondence tasks.

Future Directions

- Extension to Other Tasks: The decoupling strategy may generalize to multi-view stereo, optical flow, and depth estimation.

- Hardware Optimization: Further reduction in inference time is possible via quantization and hardware-specific optimizations for 2D convolutions.

- Inductive Bias Exploration: Investigating additional priors (e.g., semantic or temporal) could further enhance performance and robustness.

Conclusion

DBStereo demonstrates that pure 2D convolutional architectures, when guided by explicit spatial and disparity priors, can outperform traditional 3D CNN-based and iterative stereo matching methods in both accuracy and efficiency. The decoupled aggregation paradigm provides a strong baseline and a promising direction for future research in real-time, high-accuracy stereo matching.