- The paper presents Disentangled Multi-Context Meta-Learning (DMCM), which disentangles task-specific factors into separate context vectors, enabling selective and robust adaptation.

- During inner-loop adaptation it updates only the context vector of the factor that changed, and an optional recombination loop enables zero-shot generalization in tasks such as sine regression.

- DMCM demonstrates superior sim-to-real transfer in quadrupedal robot locomotion, reaching an 80% real-world stair-climbing success rate under out-of-distribution conditions in which the baseline policies fail.

Disentangled Multi-Context Meta-Learning: Robust and Generalized Task Adaptation

Introduction and Motivation

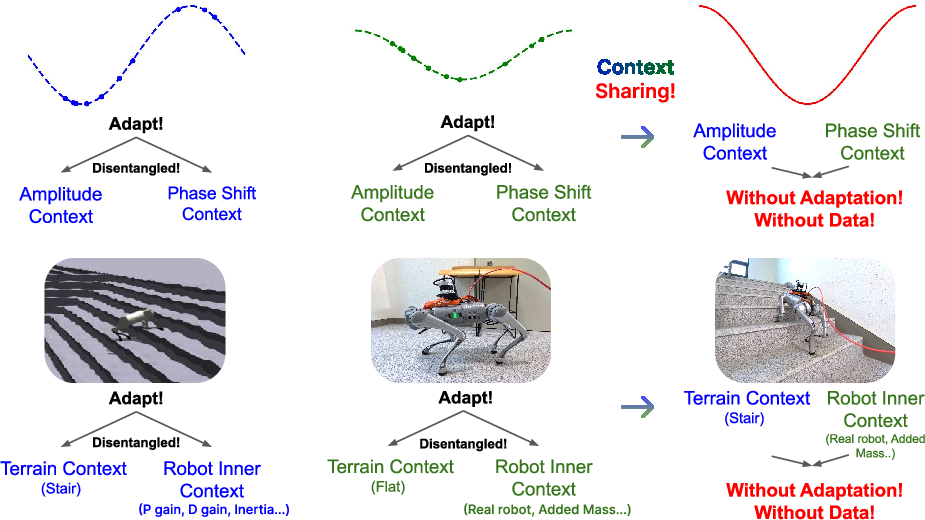

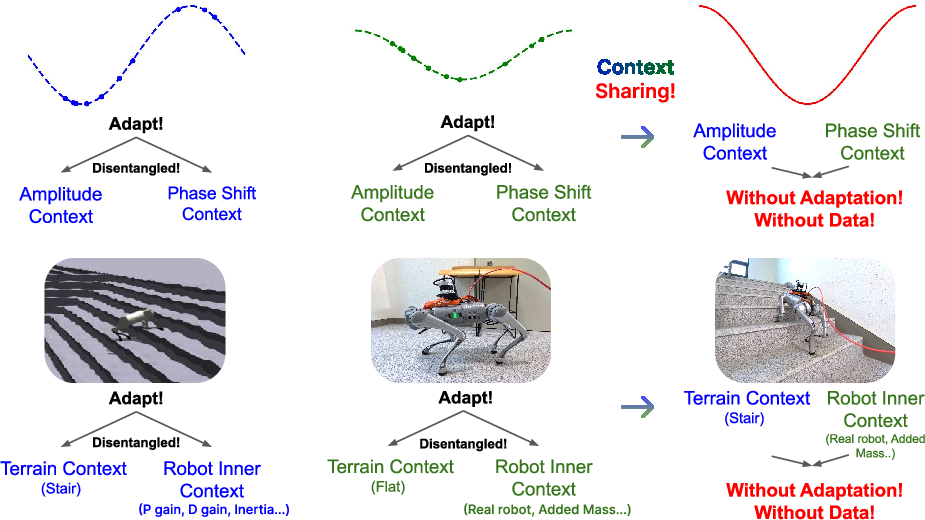

The paper introduces Disentangled Multi-Context Meta-Learning (DMCM), a meta-learning framework designed to address a limitation of conventional gradient-based meta-learning (GBML) methods such as MAML and CAVIA: they adapt a single set of task-specific parameters (all network weights in MAML, one context vector in CAVIA), which entangles multiple task factors in one representation. This entanglement impedes interpretability, generalization, and robustness, especially in domains with compositional task variations such as robotics and regression. DMCM instead assigns each factor of variation to a distinct context vector, enabling selective adaptation and context sharing across tasks with overlapping factors.

Figure 1: DMCM adapts by disentangling task-specific factors into separate context vectors, which can be reused across tasks with overlapping factors, enabling generalization.

DMCM Framework and Algorithmic Details

DMCM extends the CAVIA meta-learning paradigm by introducing K context vectors {ϕ1,…,ϕK}, each corresponding to a distinct task factor (e.g., amplitude, phase, terrain, robot-specific property). During inner-loop adaptation, only the context vector associated with the changed factor is updated, while others remain fixed. This selective update is orchestrated via regulated data sequencing and explicit task labeling, ensuring that each context vector specializes in its assigned factor.
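A minimal NumPy sketch of the selective inner-loop update follows. It assumes a hypothetical toy model that is linear in the concatenated context vectors (not the paper's architecture), equal-sized contexts, and plain gradient descent; `predict` and `selective_inner_step` are illustrative names. Only the context indexed by `s` moves, which is the property the paragraph above describes.

```python
import numpy as np


def predict(x, phis, theta):
    # Hypothetical toy model, linear in the concatenated contexts: w * x + concat(phis) @ v + b
    context = np.concatenate(phis)  # shape (K * d,)
    return theta["w"] * x + context @ theta["v"] + theta["b"]


def selective_inner_step(x, y, phis, theta, s, alpha=0.1):
    # One gradient step on the MSE w.r.t. context s only; all other contexts are copied unchanged.
    # Assumes every context vector has the same dimension d.
    d = phis[s].shape[0]
    residual = predict(x, phis, theta) - y  # shape (n,)
    v_s = theta["v"][s * d:(s + 1) * d]  # the weights that read phi_s
    grad_s = 2.0 * residual.mean() * v_s  # d/dphi_s of mean((y_hat - y) ** 2)
    new_phis = [p.copy() for p in phis]
    new_phis[s] = phis[s] - alpha * grad_s
    return new_phis


# Toy task: only the factor tracked by context 0 (an output offset here) has changed.
K, d = 2, 3
theta = {"w": 1.0, "v": np.full(K * d, 0.5), "b": 0.0}
phis = [np.zeros(d) for _ in range(K)]
x = np.linspace(-1.0, 1.0, 10)
y = x + 2.0

loss_before = np.mean((predict(x, phis, theta) - y) ** 2)
for _ in range(50):
    phis = selective_inner_step(x, y, phis, theta, s=0)
loss_after = np.mean((predict(x, phis, theta) - y) ** 2)
```

After the loop the loss on the toy task has shrunk by several orders of magnitude while `phis[1]` is still all zeros, so the second context keeps whatever it encoded before.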

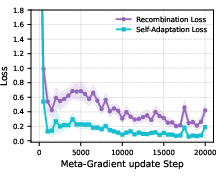

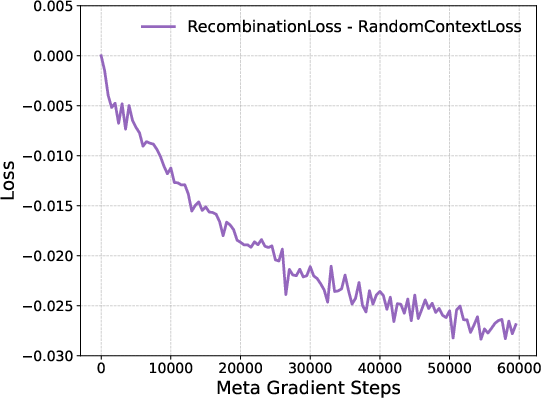

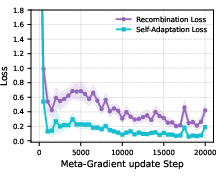

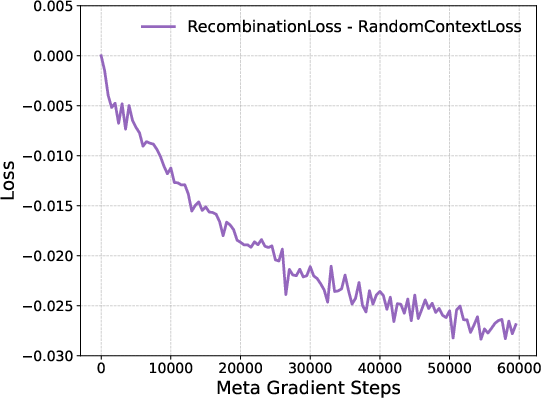

The outer loop meta-gradient step updates shared model parameters θ after a warm-up phase, allowing context vectors to accumulate meaningful information. An optional recombination loop enables zero-shot generalization by updating θ using context vectors that were not jointly adapted, thus encouraging the model to operate effectively with independently learned contexts.
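The bookkeeping behind the recombination loop can be sketched as a small store of adapted contexts keyed by factor and factor value. This is a hypothetical data structure (`ContextBank` and its methods are illustrative, not from the paper), and the actual meta-gradient step on θ is omitted; it only shows how contexts that were never adapted together get assembled for one task.

```python
import numpy as np


class ContextBank:
    """Hypothetical store of adapted context vectors, keyed by (factor index, factor label)."""

    def __init__(self):
        self._bank = {}

    def store(self, labels, phis):
        # labels[s] names the value of factor s (e.g. an amplitude bin) for the task that produced phis[s]
        for s, (label, phi) in enumerate(zip(labels, phis)):
            self._bank[(s, label)] = phi.copy()  # the most recent adaptation wins

    def can_recombine(self, labels):
        return all((s, label) in self._bank for s, label in enumerate(labels))

    def recombine(self, labels):
        # Assemble one context per factor, each possibly taken from a different source task
        return [self._bank[(s, label)] for s, label in enumerate(labels)]


bank = ContextBank()
# Contexts adapted on two training tasks: (amplitude A1, phase P1) and (amplitude A2, phase P2)
bank.store(("A1", "P1"), [np.array([1.0, 0.0]), np.array([0.0, 1.0])])
bank.store(("A2", "P2"), [np.array([2.0, 0.0]), np.array([0.0, 2.0])])

# (A1, P2) was never adapted jointly, but each of its factors has been seen separately
target = ("A1", "P2")
phis = bank.recombine(target) if bank.can_recombine(target) else None
```

In a recombination step, θ would then be meta-updated on data from the (A1, P2) task using these `phis`, pushing the shared model to work with independently learned contexts.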

Pseudocode Overview

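```python
# Pseudocode for one meta-training iteration (not runnable as-is)
meta_loss = 0
for task in batch:
    s = task.changed_factor  # hypothetical: index of the factor that changed, from the task label
    # Inner loop: update only context vector phi_i[s]; the other K - 1 contexts stay fixed
    phi_i[s] -= alpha * grad_wrt(phi_i[s], Loss(f(phi_i[1], ..., phi_i[K], theta), D_train))
    meta_loss += Loss(f(phi_i[1], ..., phi_i[K], theta), D_test)
# Outer loop: meta-gradient step on the shared parameters theta
theta -= beta * grad_wrt(theta, meta_loss)
```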

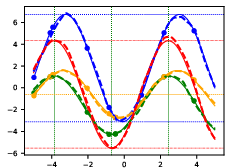

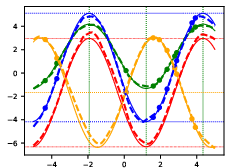

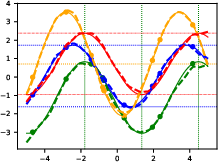

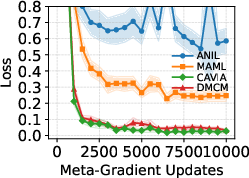

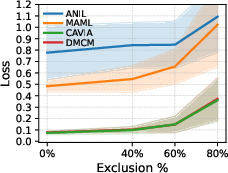

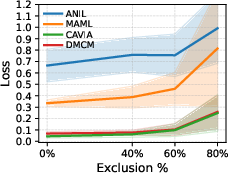

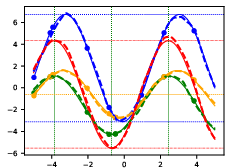

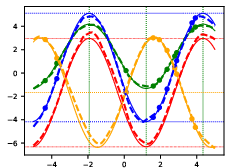

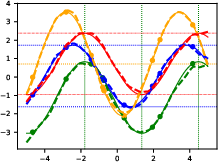

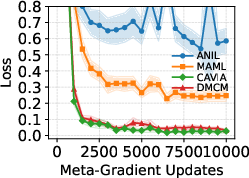

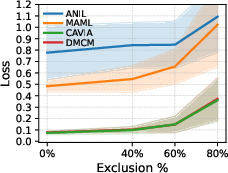

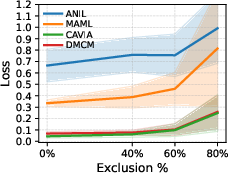

Sine Regression: Robustness and Zero-Shot Generalization

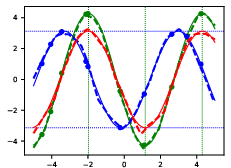

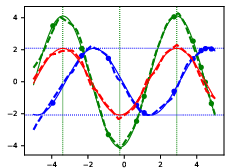

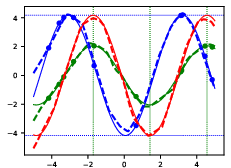

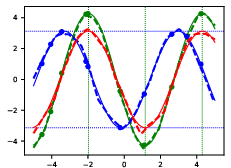

DMCM is evaluated on the sine regression benchmark, where tasks are parameterized by amplitude and phase shift. The model disentangles these factors into separate context vectors, enabling robust adaptation and generalization under out-of-distribution (OOD) conditions.
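To make "regulated data sequencing and explicit task labeling" concrete for this benchmark, here is one possible reading: a task stream in which consecutive sine tasks differ in exactly one factor, each tagged with the factor that changed. The sequencing scheme, the amplitude and phase grids, and the function names are assumptions for illustration; the task form y = A·sin(x + phase) on x ∈ [-5, 5] follows the standard sine-regression setup.

```python
import numpy as np


def sample_sine_task(amplitude, phase, n, rng):
    # Standard sine-regression task: y = A * sin(x + phase), x drawn uniformly from [-5, 5]
    x = rng.uniform(-5.0, 5.0, size=n)
    return x, amplitude * np.sin(x + phase)


def regulated_sequence(amplitudes, phases, length, rng):
    # Hypothetical sequencing: each new task changes exactly one factor, labeled 0 (amplitude) or 1 (phase)
    amp, ph = rng.choice(amplitudes), rng.choice(phases)
    seq = [(amp, ph, None)]  # first task: no previous task, so no changed-factor label
    for _ in range(length - 1):
        s = int(rng.integers(2))
        if s == 0:
            amp = rng.choice([a for a in amplitudes if a != amp])
        else:
            ph = rng.choice([p for p in phases if p != ph])
        seq.append((amp, ph, s))
    return seq


rng = np.random.default_rng(0)
amplitudes = np.linspace(0.1, 5.0, 5)  # hypothetical grids
phases = np.linspace(0.0, np.pi, 5)
seq = regulated_sequence(amplitudes, phases, 20, rng)
x, y = sample_sine_task(*seq[-1][:2], n=10, rng=rng)
```

The label `s` on each entry is what tells the inner loop which context vector to update for that task.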

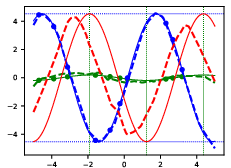

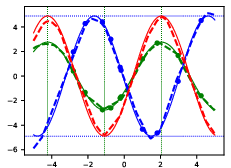

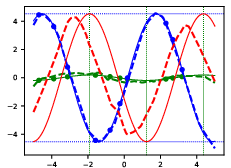

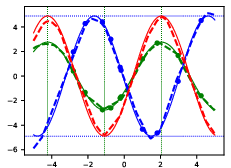

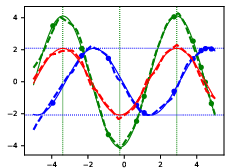

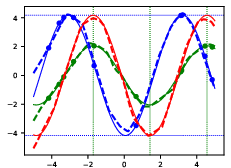

Empirical results demonstrate that DMCM maintains low loss and variance even when 40–80% of amplitude-phase combinations are excluded during training, outperforming MAML, CAVIA, and ANIL in OOD settings. Notably, DMCM supports zero-shot prediction by recombining context vectors learned from different tasks, achieving accurate predictions for unseen sine functions without further adaptation.
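An OOD split of the kind described above can be sketched as follows: hold out a fraction of the (amplitude, phase) grid while guaranteeing that every amplitude value and every phase value still occurs in training, so each held-out combination is reachable by recombining separately learned contexts. The grid size, the diagonal "cover" used for that guarantee, and `holdout_split` itself are assumptions; the paper's exact split protocol is not given here.

```python
from itertools import product

import numpy as np


def holdout_split(n_amp, n_phase, frac, rng):
    # Hypothetical OOD split over an n_amp x n_phase grid of (amplitude, phase) indices.
    combos = list(product(range(n_amp), range(n_phase)))
    # Always keep a minimal cover so every amplitude and every phase value appears in training;
    # held-out combinations are then reachable by recombining separately learned contexts.
    cover = {(i, i % n_phase) for i in range(n_amp)} | {(j % n_amp, j) for j in range(n_phase)}
    candidates = [c for c in combos if c not in cover]
    n_out = min(int(round(frac * len(combos))), len(candidates))
    held = {candidates[i] for i in rng.permutation(len(candidates))[:n_out]}
    train = [c for c in combos if c not in held]
    return train, sorted(held)


rng = np.random.default_rng(0)
splits = {frac: holdout_split(5, 5, frac, rng) for frac in (0.4, 0.6, 0.8)}
```

On a 5 × 5 grid, excluding 80% of the combinations leaves only the five diagonal pairs for training, which is the regime where recombination, rather than joint adaptation, has to cover the unseen pairs.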

Figure 2: DMCM learning curve for sine regression with recombination loop, showing robust adaptation and zero-shot generalization.

Figure 3: Zero-shot predictions from DMCM with two contexts; red dots indicate predictions using shared context vectors.

Figure 4: Zero-shot predictions from DMCM with three contexts (amplitude, phase-shift, y-shift), demonstrating compositional generalization.

The number of context vectors is critical: aligning the context count with the true number of task factors yields optimal robustness, while excessive contexts slow adaptation and reduce stability.

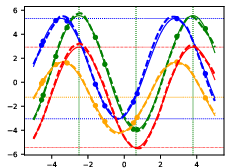

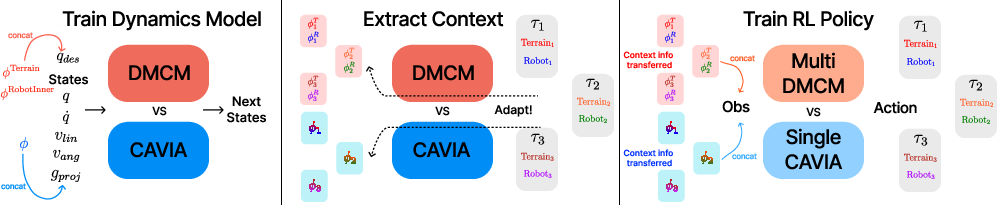

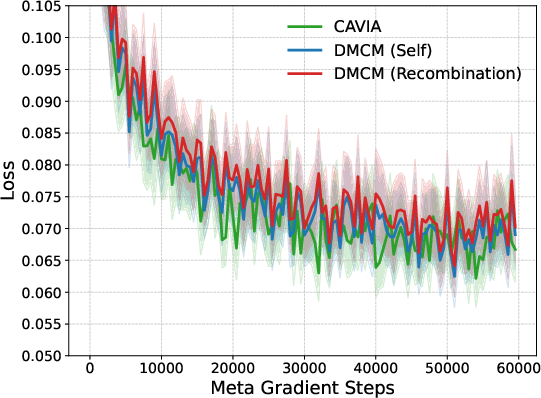

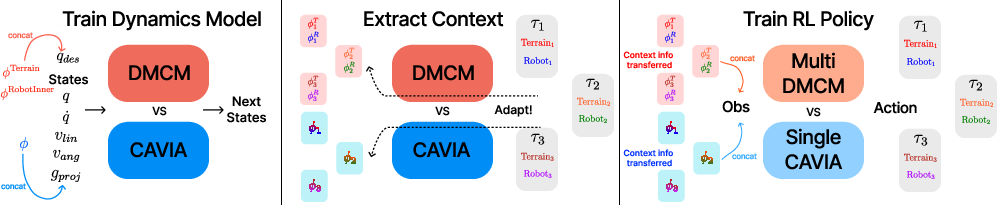

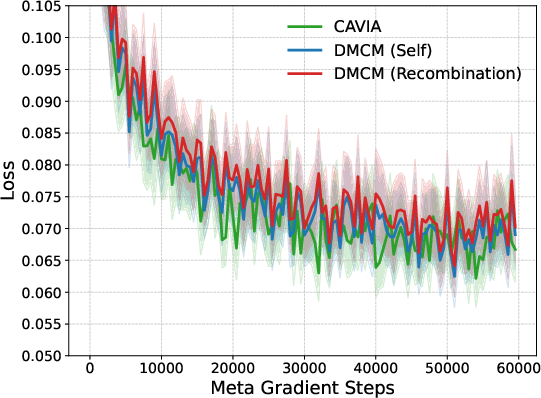

Quadrupedal Robot Locomotion: Sim-to-Real Transfer and Robustness

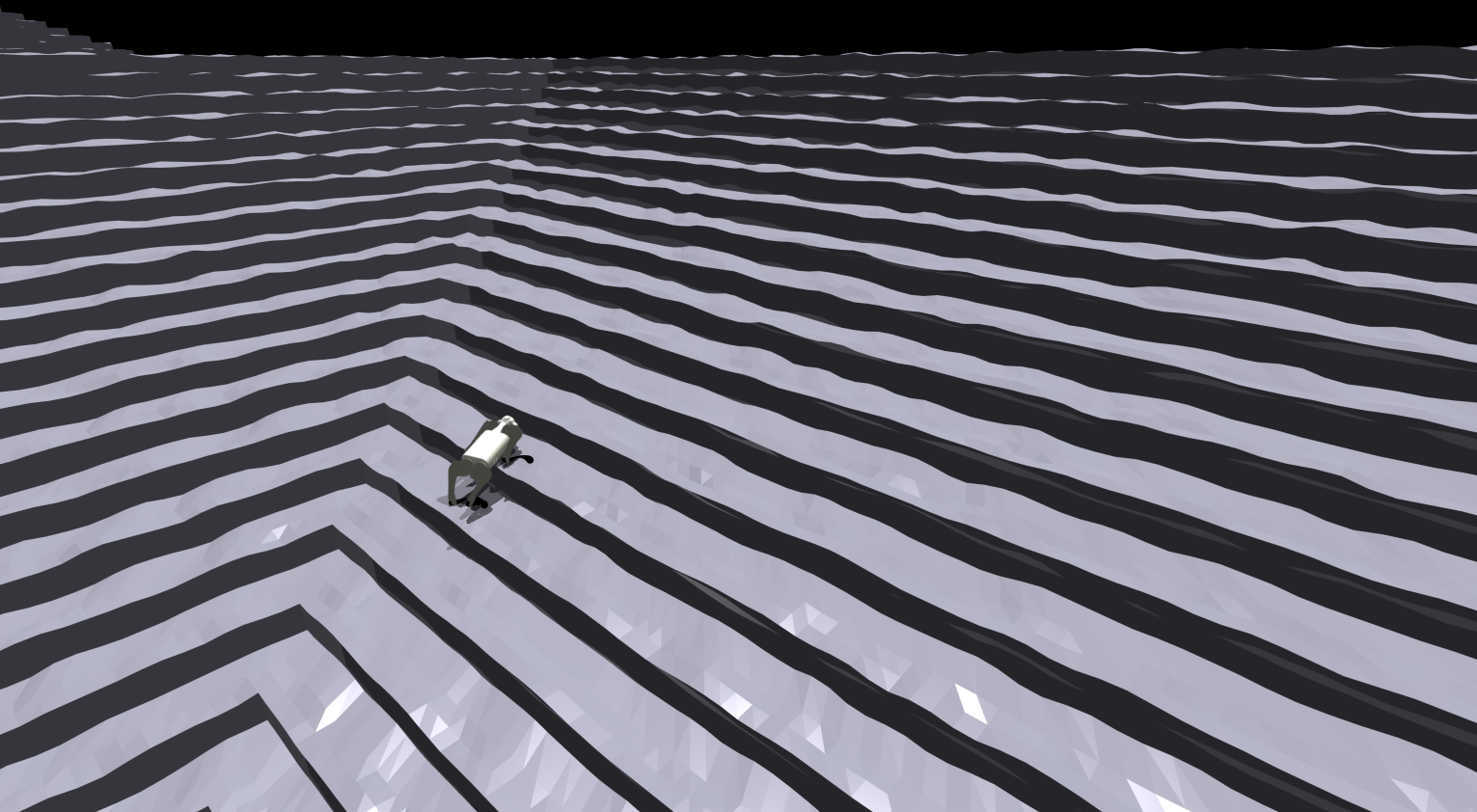

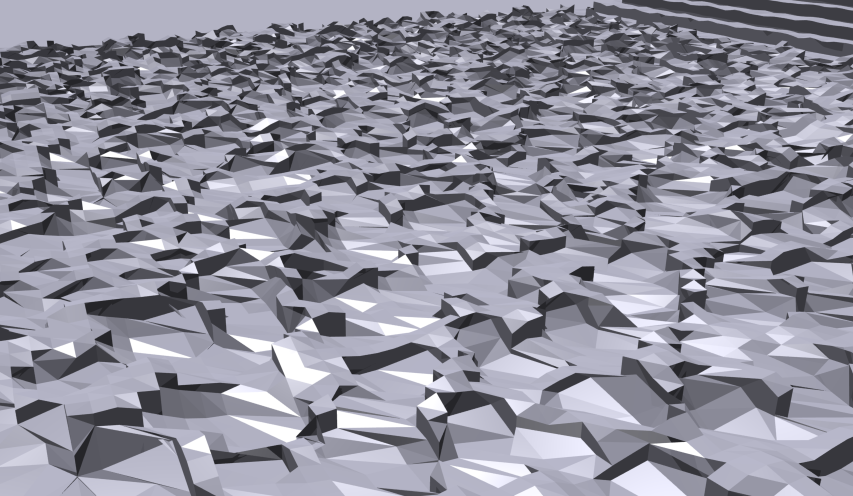

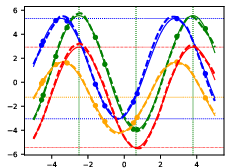

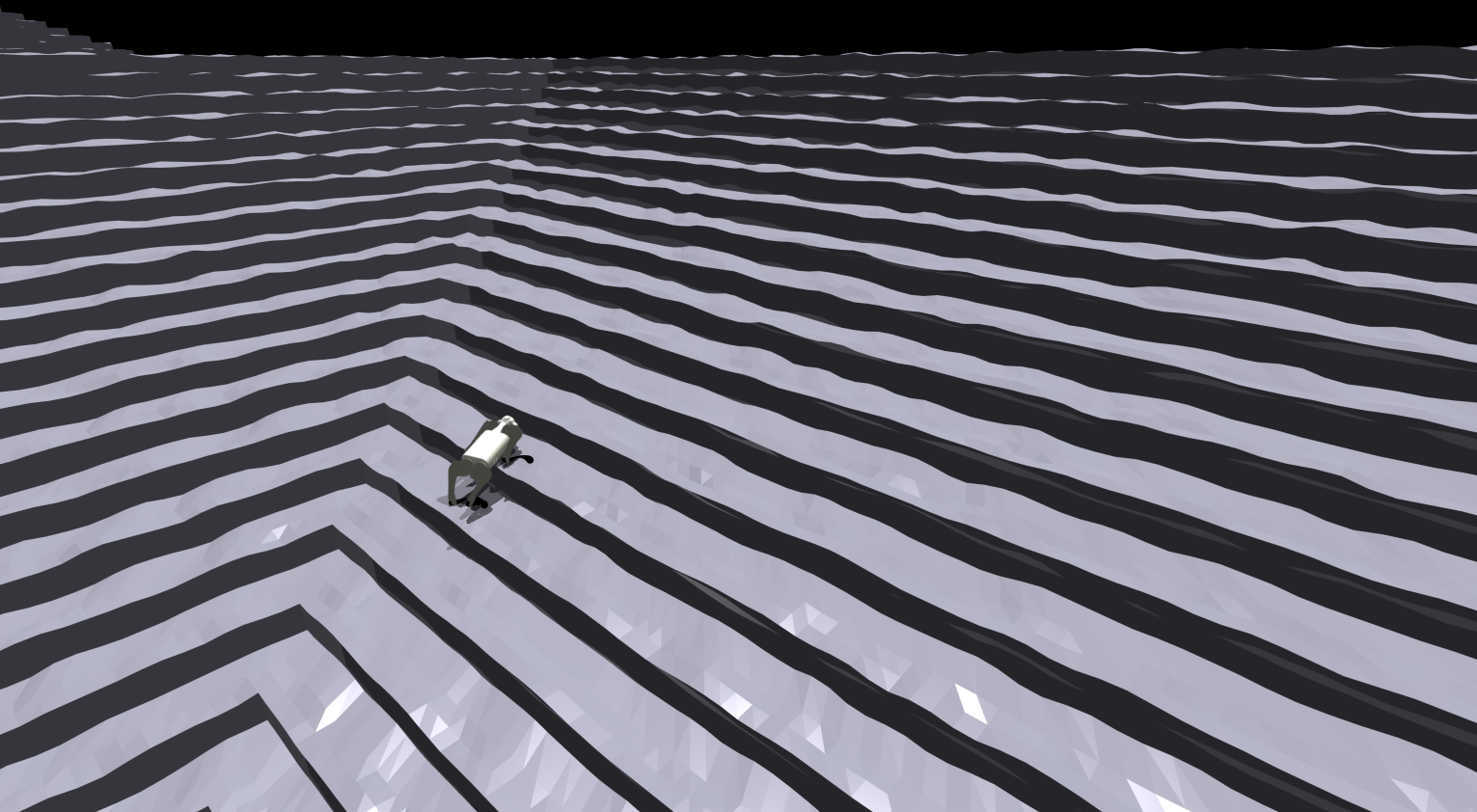

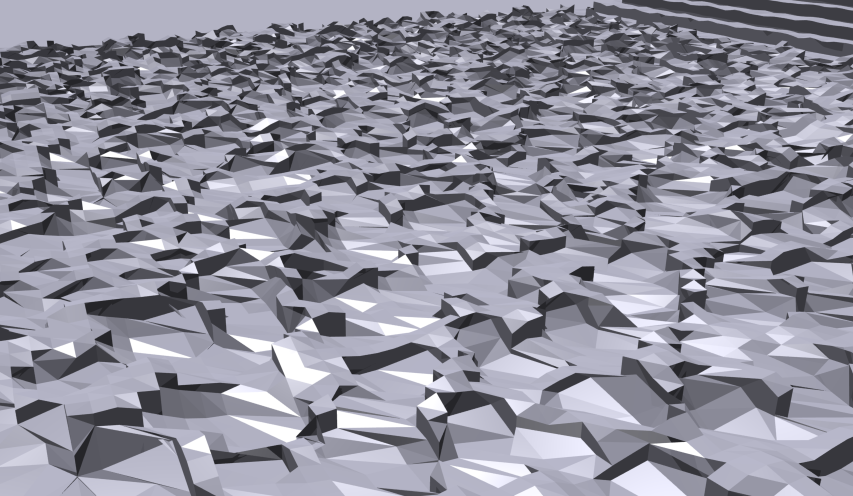

DMCM is applied to quadrupedal robot locomotion, where tasks vary along terrain and robot-specific properties (e.g., payload, control gains). The dynamics model is trained to predict robot states under diverse conditions, with context vectors disentangling terrain and robot-specific factors.
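A sketch of how such a dynamics model might consume the two contexts is shown below: the terrain and robot context vectors are broadcast over a batch and concatenated with the state and action before a small MLP predicts the next state. The one-hidden-layer MLP, the residual (state-delta) output, and all dimensions are assumptions, not the paper's network; only θ is shared, while the two contexts are per-condition.

```python
import numpy as np


def init_dynamics(state_dim, action_dim, ctx_dims, hidden, rng):
    # Shared parameters theta of a one-hidden-layer MLP (hypothetical architecture)
    in_dim = state_dim + action_dim + sum(ctx_dims)
    return {
        "W1": rng.normal(scale=0.1, size=(in_dim, hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(scale=0.1, size=(hidden, state_dim)),
        "b2": np.zeros(state_dim),
    }


def predict_next_state(theta, state, action, phi_terrain, phi_robot):
    # Broadcast each context over the batch, then predict the state change (residual form)
    batch_shape = state.shape[:-1]
    ctx = [np.broadcast_to(p, batch_shape + p.shape) for p in (phi_terrain, phi_robot)]
    z = np.concatenate([state, action, *ctx], axis=-1)
    h = np.tanh(z @ theta["W1"] + theta["b1"])
    return state + h @ theta["W2"] + theta["b2"]


rng = np.random.default_rng(0)
theta = init_dynamics(state_dim=6, action_dim=3, ctx_dims=(4, 4), hidden=32, rng=rng)
state, action = rng.normal(size=(8, 6)), rng.normal(size=(8, 3))
phi_terrain, phi_robot = np.zeros(4), np.zeros(4)
next_state = predict_next_state(theta, state, action, phi_terrain, phi_robot)
# Under selective adaptation, a payload change would be absorbed by phi_robot alone
next_state_payload = predict_next_state(theta, state, action, phi_terrain, phi_robot + 1.0)
```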

Figure 5: DMCM learning procedure for quadrupedal robot locomotion, illustrating context extraction and transfer to RL policy.

Figure 6: Evaluation of DMCM context separation on real-world datasets; context-aligned vectors yield lower loss than unrelated contexts.

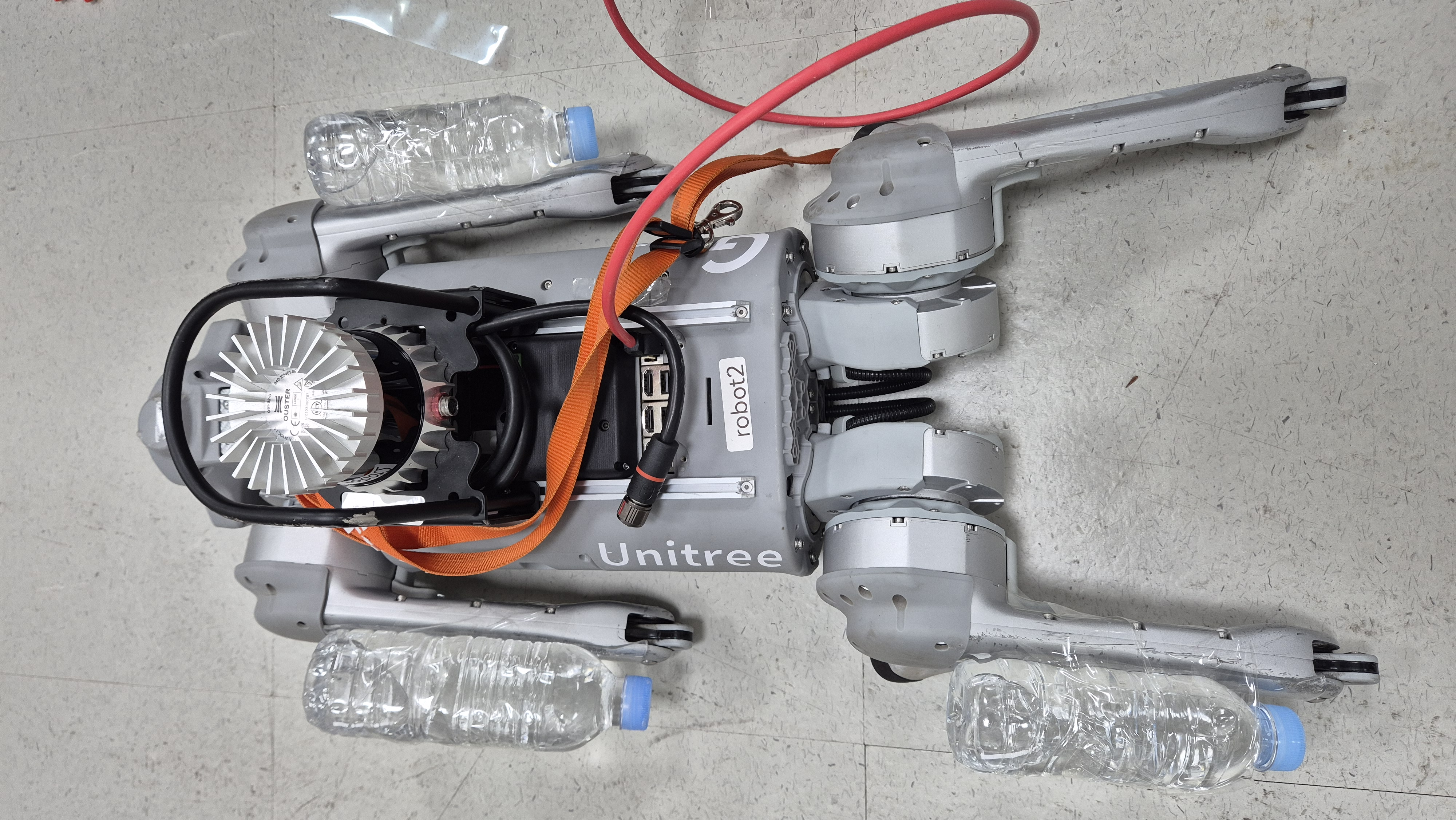

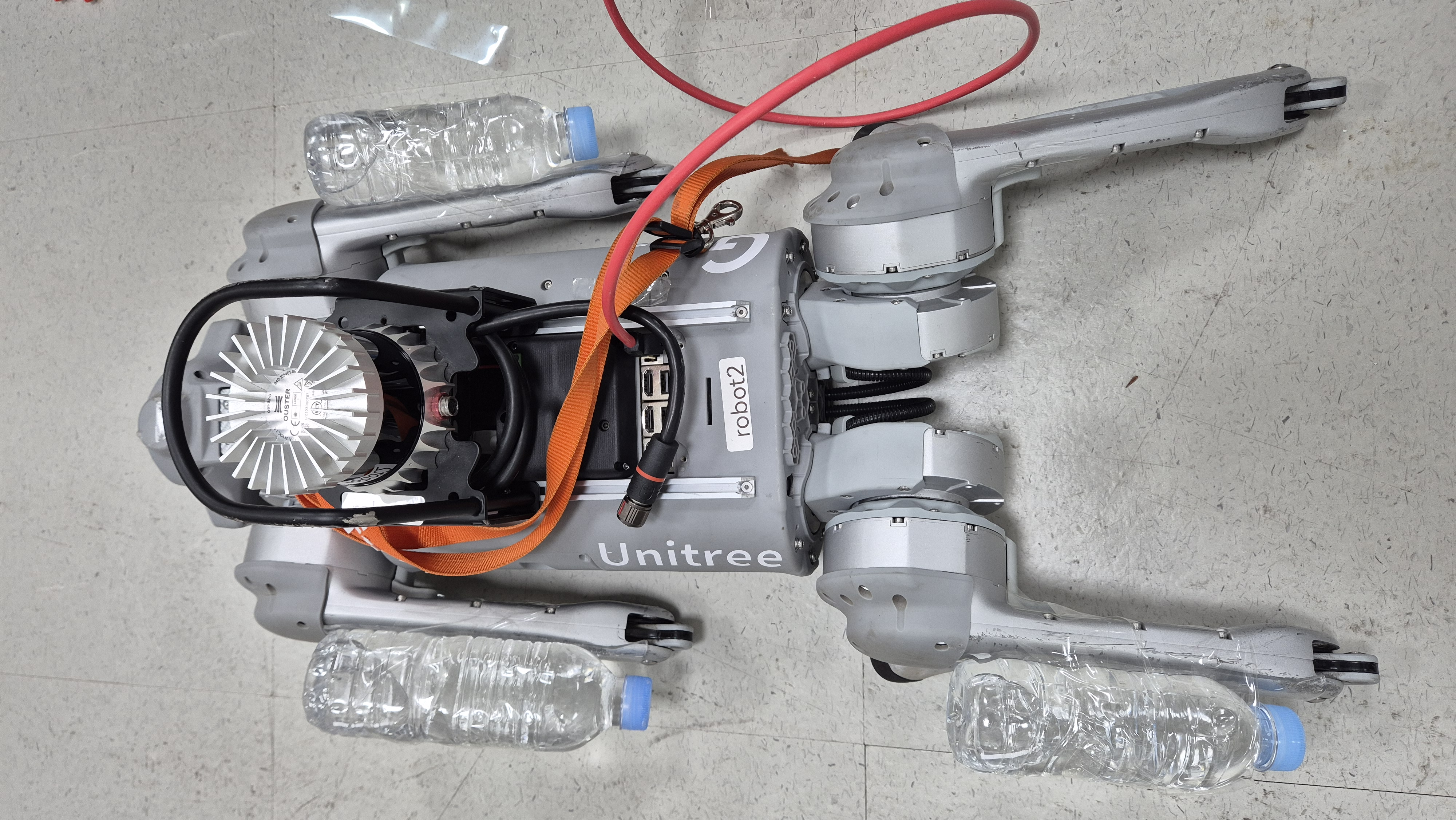

Context vectors extracted from the dynamics model are transferred to reinforcement learning (RL) policies. Three policies are compared: vanilla (no context), single-CAVIA (unified context), and multi-DMCM (disentangled contexts). DMCM consistently achieves superior robustness and success rates under OOD terrain and robot-specific property conditions, including payloads and control gains outside the training distribution.
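The difference between the three policies comes down to what is appended to the policy's observation. The sketch below assumes frozen context vectors are simply concatenated to a proprioceptive vector; the observation sizes, `policy_observation`, and the stair-climbing pairing in the usage lines (a stairs terrain context combined with a robot context estimated from flat-terrain data) are hypothetical, one plausible reading of the experiment rather than its confirmed setup.

```python
import numpy as np


def policy_observation(proprio, variant, contexts=()):
    # Hypothetical observation builder: contexts are frozen vectors extracted from the dynamics model
    if variant == "vanilla":
        return proprio  # no context
    if variant == "single-CAVIA" and len(contexts) == 1:
        return np.concatenate([proprio, contexts[0]])  # one entangled context
    if variant == "multi-DMCM" and len(contexts) > 1:
        return np.concatenate([proprio, *contexts])  # one context per factor
    raise ValueError(f"bad variant/contexts combination: {variant}, {len(contexts)} contexts")


proprio = np.zeros(30)  # hypothetical proprioceptive observation size
phi_single = np.ones(8)
phi_stairs, phi_flat_robot = np.ones(4), np.full(4, 2.0)
obs_vanilla = policy_observation(proprio, "vanilla")
obs_cavia = policy_observation(proprio, "single-CAVIA", (phi_single,))
# DMCM-style pairing: terrain context for stairs, robot context estimated from flat-terrain data
obs_dmcm = policy_observation(proprio, "multi-DMCM", (phi_stairs, phi_flat_robot))
```

Keeping the contexts as separate slots is what lets a DMCM policy swap the robot context after a payload or gain change without disturbing the terrain context.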

Key numerical results include:

- 80% success rate for DMCM in real-world stair climbing under OOD conditions (reduced Kp gain, added payload), using only 20 seconds of flat-terrain data for adaptation.

- Vanilla and single-CAVIA policies fail completely under the same OOD conditions.

- DMCM maintains high reward and lifespan under increasing payloads, while other policies degrade sharply.

Figure 7: Dynamics model loss for CAVIA, DMCM with self-adaptation, and DMCM with recombination (zero-shot with shared context vectors).

Figure 8: Highest-level stair terrain used for OOD evaluation.

Figure 9: Go1 robot with water bottles attached to three legs, demonstrating DMCM robustness under asymmetric payloads.

Implementation Considerations and Trade-offs

Computational Requirements

- DMCM introduces additional memory overhead for storing K context vectors and recombination loop buffers.

- Training time per meta-gradient step decreases with more context vectors (due to smaller update sizes), but adaptation time increases because each context must be updated separately.

- For K = 3, the recombination loop requires six extra context vectors in memory.

Hyperparameter Selection

- The number of context vectors should match the true number of task factors for optimal robustness.

- Context vector dimensionality must be sufficient to encode the assigned factor; underparameterization impedes expressiveness, while overparameterization reduces stability.

Deployment Strategies

- DMCM supports context sharing for zero-shot adaptation, enabling rapid deployment to novel tasks with overlapping factors.

- Real-world deployment requires pre-collected data for context extraction; integrating fast, online adaptation remains an open challenge.

Limitations and Future Directions

- Manual context labeling is required; automating context discovery is a key area for future research.

- DMCM has been validated on regression and RL tasks; extension to classification and other domains is needed.

- Real-time adaptation with selective context updates is not yet fully realized.

Theoretical and Practical Implications

DMCM advances the interpretability and compositionality of meta-learning by disentangling task factors, enabling robust adaptation and generalization in complex, multi-factor environments. The framework is particularly suited for domains with compositional structure, such as robotics, where sim-to-real transfer and OOD robustness are critical. The explicit separation of context vectors facilitates analysis of task variation and supports modular policy design.

Conclusion

Disentangled Multi-Context Meta-Learning (DMCM) provides a principled approach to robust and generalized task adaptation by learning multiple context vectors, each corresponding to a distinct factor of variation. Empirical results in sine regression and quadrupedal robot locomotion demonstrate superior OOD robustness, zero-shot generalization, and sim-to-real transfer capabilities compared to entangled context baselines. DMCM's compositional structure and context sharing mechanisms offer significant advantages for interpretable, scalable, and modular meta-learning, with promising implications for future research in automatic context discovery, broader domain applicability, and real-time adaptation.