- The paper introduces a novel SPMLL framework that reframes verb classification as a multi-label problem to handle the inherent semantic overlap between verb classes.

- It employs a Graph Enhanced Verb MLP with GCN and adversarial training, achieving over 3% improvement in mean average precision compared to single-label baselines.

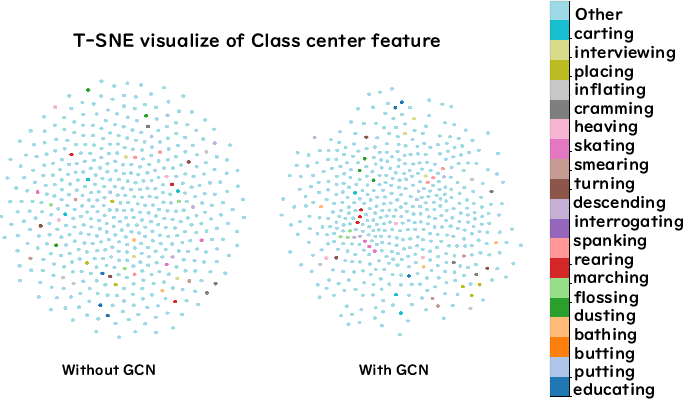

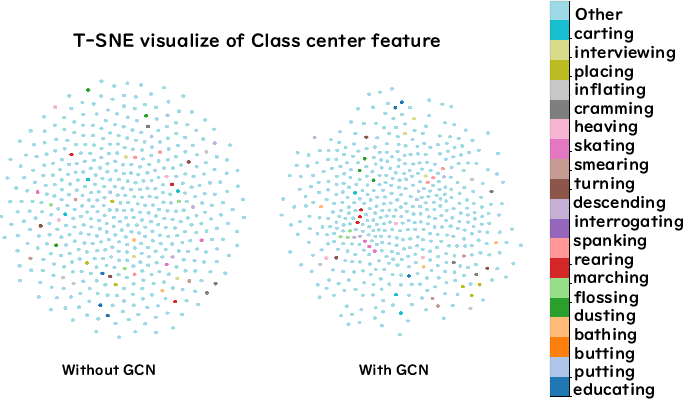

- Empirical evaluations using visual embeddings and t-SNE plots validate that the approach clusters semantically similar classes more effectively.

The Demon is in Ambiguity: Revisiting Situation Recognition with Single Positive Multi-Label Learning

Introduction

This paper addresses a significant challenge in situation recognition (SR) within computer vision: the prevalent ambiguity in verb classification. Traditional SR frameworks classify events in images using single-label approaches that fail to capture the multi-faceted nature of visual events. This paper posits that verb classification in SR is inherently a multi-label task and introduces a novel approach to address this need by framing it as a Single Positive Multi-Label Learning (SPMLL) problem.

The Ambiguity Challenge in Verb Classification

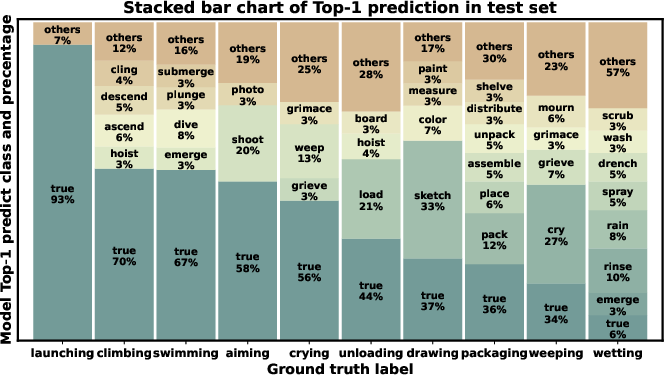

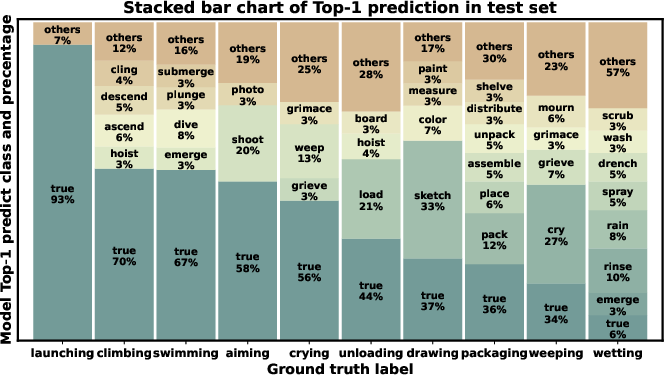

The SR task is typically divided into three components: verb classification, semantic role labeling, and semantic role grounding. Verb classification acts as the keystone, since its output conditions both subsequent tasks. Current methods, however, reduce verb classification to a single-label task, ignoring the inherent overlap among verb categories (e.g., "crying" vs. "weeping") (Figure 1).

Figure 1: Illustration of the baseline model's top-1 predictions on the test set for 10 randomly sampled classes. Each bar shows a predicted label and its proportion, with the ground-truth label on the x-axis. Most incorrect predictions are semantically similar to, or even overlap with, the ground-truth class.

The paper provides empirical evidence through visual embeddings and t-SNE plots that demonstrate significant overlaps in class distributions. Given this ambiguity, a multi-label framework better suits verb classification tasks.

Given the prohibitive cost of fully annotating existing datasets with multiple labels, the authors advocate for an SPMLL approach. This perspective allows training robust multi-label models using only single positive labels per instance, thereby aligning training requirements with practical constraints.
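To make the single-positive setting concrete, the following is a minimal sketch of one common SPMLL baseline, the "assume negative" binary cross-entropy loss, in which the one observed label is treated as positive and every unobserved label as negative. This summary does not state which loss the authors use, so the function name `assume_negative_bce` and the example logits are illustrative assumptions rather than the paper's method.

```python
import math


def sigmoid(z):
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def assume_negative_bce(logits, positive_index, eps=1e-12):
    """Mean binary cross-entropy over all classes for one image.

    Only one positive label is observed (positive_index). All other
    classes are *assumed* negative, which is the simplest SPMLL baseline
    and is known to introduce false negatives for overlapping classes.
    """
    total = 0.0
    for k, z in enumerate(logits):
        p = sigmoid(z)
        target = 1.0 if k == positive_index else 0.0
        total -= target * math.log(p + eps) + (1.0 - target) * math.log(1.0 - p + eps)
    return total / len(logits)


# Hypothetical logits for three verb classes: crying, weeping, running.
logits = [2.0, -2.0, -2.0]
loss_match = assume_negative_bce(logits, positive_index=0)     # observed label agrees
loss_mismatch = assume_negative_bce(logits, positive_index=1)  # observed label disagrees
```

Note that if an image labeled "crying" is also a valid instance of "weeping", this baseline penalizes the model for scoring "weeping" highly, which is the kind of false-negative pressure that SPMLL methods try to reduce.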

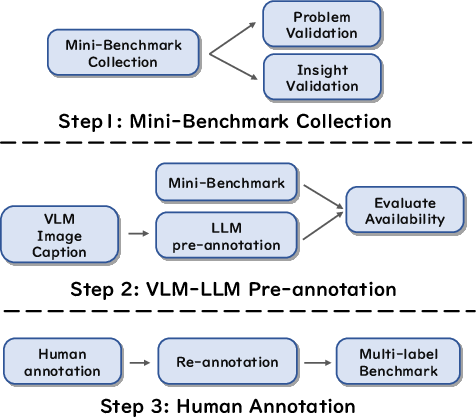

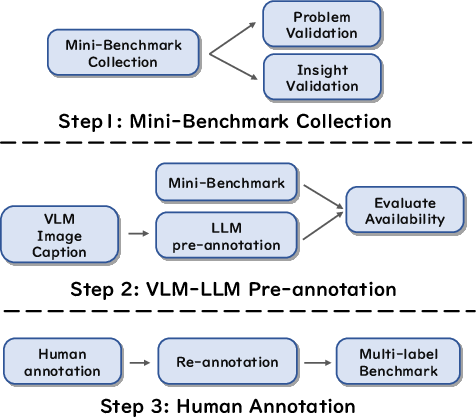

A comprehensive multi-label evaluation benchmark was created using automated pre-annotation steps that leveraged LLMs, followed by manual verification to ensure high-quality annotations (Figure 2).

Figure 2: Three main steps for data collection: a mini-benchmark dataset to validate the problem's existence, pre-annotation using LLMs, and refinement through human verification.

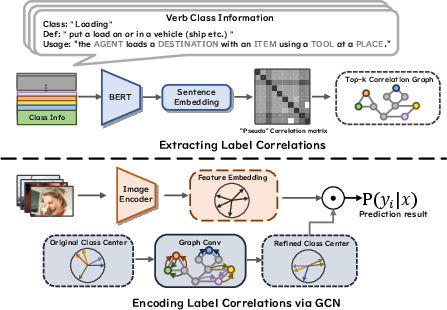

Proposed Method: Graph Enhanced Verb MLP

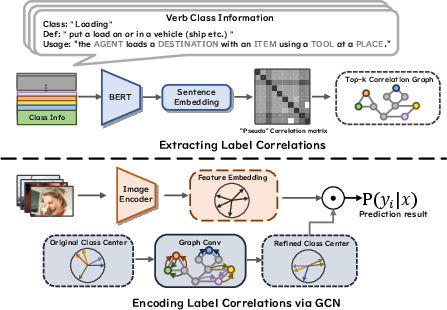

The proposed solution is the Graph Enhanced Verb Multilayer Perceptron (GE-VerbMLP), which combines a graph convolutional network (GCN) that encodes label correlations with adversarial training that refines decision boundaries. A sparse semantic similarity matrix serves as a proxy for label correlation: it defines the label graph over which a multi-layer GCN propagates information between related verb classes.

Figure 3: Usage of semantic relations to regularize relations between categories. The semantic similarity matrix is employed to construct a label correlation graph used in a multi-layer GCN.
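The label-graph idea can be sketched as a single graph convolution over label embeddings. The example below assumes the standard GCN propagation rule, ReLU(D^-1/2 (A + I) D^-1/2 H W), and a simple threshold for sparsifying the similarity matrix. The threshold value, the matrices, and all function names are hypothetical; the paper's actual architecture may differ.

```python
import math


def sparsify(sim, threshold):
    """Keep an edge between two distinct labels only if similarity >= threshold."""
    n = len(sim)
    return [[1.0 if i != j and sim[i][j] >= threshold else 0.0 for j in range(n)]
            for i in range(n)]


def normalize_adjacency(adj):
    """Add self-loops, then apply symmetric normalization D^-1/2 (A + I) D^-1/2."""
    n = len(adj)
    a = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Plain dense matrix product of two list-of-lists matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(a_hat, h, w):
    """One GCN layer: ReLU(A_hat @ H @ W)."""
    z = matmul(matmul(a_hat, h), w)
    return [[max(0.0, v) for v in row] for row in z]


# Hypothetical similarities for three labels: crying, weeping, running.
sim = [[1.0, 0.9, 0.1],
       [0.9, 1.0, 0.2],
       [0.1, 0.2, 1.0]]
a_hat = normalize_adjacency(sparsify(sim, threshold=0.5))

h = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]  # initial label embeddings (made up)
w = [[1.0, 0.0], [0.0, 1.0]]              # identity weights for readability
out = gcn_layer(a_hat, h, w)
```

With these toy values, the embeddings of the two connected labels ("crying" and "weeping") are averaged into the same vector, while the isolated label keeps its own embedding. This mirrors the intuition that graph propagation pulls semantically related classes together.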

Adversarial training with FGSM and PGD further mitigates ambiguity by smoothing decision boundaries, balancing robustness against classification accuracy. Experimental results show an improvement of more than 3% in mean average precision (MAP) over single-label baselines.
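As an illustration of the FGSM step, the sketch below perturbs an input in the direction of the sign of the loss gradient, x_adv = x + epsilon * sign(grad_x L), for a single-output logistic classifier whose input gradient has the closed form (p - y) * w. The model, weights, and epsilon are hypothetical stand-ins and not the paper's network. PGD can be viewed as applying such steps repeatedly, with a projection back into the allowed perturbation range.

```python
import math


def sigmoid(z):
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(w, b, x, y, eps=1e-12):
    """Binary cross-entropy of a logistic model on one example."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def input_gradient(w, b, x, y):
    """Gradient of the BCE loss with respect to the input x: (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Single FGSM step: move each input coordinate by epsilon along the gradient sign."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and input features.
w, b = [1.5, -2.0, 0.5], 0.1
x, y = [0.4, -0.3, 0.8], 1
x_adv = fgsm(w, b, x, y, epsilon=0.1)
```

In adversarial training, examples such as `x_adv` are added to (or substituted into) each training batch, so the model learns to keep its prediction stable within a small neighborhood of every input.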

Experimental Evaluation

The paper benchmarks the proposed GE-VerbMLP against several existing models, showing considerable improvements in the multi-label setting without sacrificing single-label performance. Qualitative analyses using embedding visualizations reveal improved clustering of semantically similar classes after incorporating GCN layers (Figure 4).

Figure 4: t-SNE visualization of different class center vectors. Semantically similar classes are closer after GCN layers, illustrating effective clustering.
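For readers unfamiliar with the multi-label metric reported in this evaluation, the following is a minimal sketch of mean average precision. It assumes class-wise averaging, where AP is computed per class over all images and then averaged across classes that have at least one positive. The paper's exact protocol is not given in this summary and may differ, and the scores and labels below are made up.

```python
def average_precision(scores, relevant):
    """AP for one class: mean of precision@rank taken at each positive item."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precision_sum = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if relevant[i]:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(score_matrix, label_matrix):
    """Average the per-class AP over classes with at least one positive label.

    score_matrix[n][c] is the score of class c for image n;
    label_matrix[n][c] is 1 if class c is a valid label for image n.
    """
    num_classes = len(score_matrix[0])
    aps = []
    for c in range(num_classes):
        col_scores = [row[c] for row in score_matrix]
        col_labels = [row[c] for row in label_matrix]
        if any(col_labels):
            aps.append(average_precision(col_scores, col_labels))
    return sum(aps) / len(aps)


# Three images, two classes (hypothetical scores and multi-label ground truth).
scores = [[0.9, 0.1], [0.8, 0.7], [0.2, 0.6]]
labels = [[1, 0], [0, 1], [1, 1]]
map_value = mean_average_precision(scores, labels)
```

Unlike top-1 accuracy, this metric gives credit for ranking every valid verb highly, which is why it suits an evaluation benchmark with multiple correct labels per image.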

Conclusion

The paper rethinks verb classification in SR, addressing its fundamental ambiguity through an SPMLL framing. By building a multi-label evaluation benchmark and combining graph-based label modeling with adversarial training, it offers both insights and practical methods for improving SR. The implications extend beyond SR to other classification tasks with inherent label ambiguity. Future research can build on this foundation to further refine verb classification and to explore related tasks in multimedia and beyond.