- The paper presents MENTAT, a two-phase framework that evolves prompts and aggregates rollouts to overcome LLM limitations in reasoning-intensive regression.

- The methodology integrates batch-reflective prompt optimization with neural ensemble learning, addressing quantization issues and enhancing numerical prediction precision.

- Experimental results demonstrate up to a 46.2% improvement in mathematical error detection and a superior Concordance Correlation Coefficient over traditional baselines.

Reasoning-Intensive Regression: An Analytical Overview

Introduction

The paper "Reasoning-Intensive Regression" examines how LLMs handle a subset of natural language regression tasks that it terms Reasoning-Intensive Regression (RiR). Unlike standard tasks such as sentiment analysis or similarity scoring, RiR tasks demand step-by-step deduction before a score can be assigned, which makes them particularly challenging for current LLM strategies. The research finds that both prompting and gradient-based finetuning fall short on RiR problems, setting the stage for the proposed MENTAT framework.

Conceptual Framework of RiR

RiR tasks require a model not only to predict a numerical score but also to reason through a sequence of discrete steps before producing it. This combination of deep reasoning and precise score prediction places demands on LLMs that traditional regression tasks do not. The paper organizes regression problems into three levels by complexity: feature-based, semantic analysis, and reasoning-intensive, with RiR representing the most complex category due to its requirement for logical decomposition and high-precision inferences.

The authors present three tasks as representatives of RiR: mathematical error detection, pairwise Retrieval-Augmented Generation (RAG) comparison, and essay grading. Each task involves a different mix of reasoning and scoring, which illustrates the range of application domains and the need for methods that can handle these tasks within resource constraints.

Methodological Innovation: MENTAT

MENTAT stands for Mistake-Aware prompt Evolver with Neural Training And Testing. This method integrates batch-reflective prompt optimization with neural ensemble learning, functioning as a two-phase process. In Phase 1, the LLM evolves its prompt through error-driven analysis, adapting iteratively to its own prediction patterns. This batch processing approach helps uncover systematic errors and optimize prompts beyond individual examples.
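The Phase 1 loop described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: `evolve_prompt`, `toy_predict`, and `toy_reflect` are hypothetical names, and the two toy functions stand in for LLM calls (one that scores an input under a prompt, one that reads a whole batch of errors and rewrites the prompt).

```python
from typing import Callable, List, Tuple

Example = Tuple[str, float]        # (input text, gold score)
Record = Tuple[str, float, float]  # (input text, gold score, predicted score)


def evolve_prompt(
    prompt: str,
    train: List[Example],
    predict: Callable[[str, str], float],
    reflect: Callable[[str, List[Record]], str],
    rounds: int = 3,
    batch_size: int = 2,
) -> str:
    """Batch-reflective prompt evolution (illustrative sketch)."""
    for r in range(rounds):
        # Walk through the training set one batch per round, wrapping around.
        start = (r * batch_size) % len(train)
        batch = train[start:start + batch_size]
        # Score the whole batch with the current prompt.
        records = [(x, y, predict(prompt, x)) for x, y in batch]
        # The reflector sees all errors at once, so it can spot patterns
        # that no single example would reveal.
        prompt = reflect(prompt, records)
    return prompt


def toy_predict(prompt: str, text: str) -> float:
    # Stand-in for an LLM call (assumption): overestimates by 2.0
    # until the prompt tells it to calibrate.
    base = float(len(text) % 10)
    return base if "calibrate" in prompt else base + 2.0


def toy_reflect(prompt: str, records: List[Record]) -> str:
    # Stand-in for an LLM reflection step (assumption): detects a
    # systematic positive bias across the batch and amends the prompt.
    mean_error = sum(pred - gold for _, gold, pred in records) / len(records)
    if mean_error > 0.5 and "calibrate" not in prompt:
        return prompt + " Past scores ran high on average; calibrate downward."
    return prompt


train_set = [("aaa", 3.0), ("bbbbb", 5.0), ("cc", 2.0), ("dddddddd", 8.0)]
final_prompt = evolve_prompt(
    "Score the solution from 0 to 9.", train_set, toy_predict, toy_reflect
)
```

The design point is that reflection operates on a batch of (gold, predicted) pairs rather than on one example, which is what lets a shared bias show up as a pattern.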

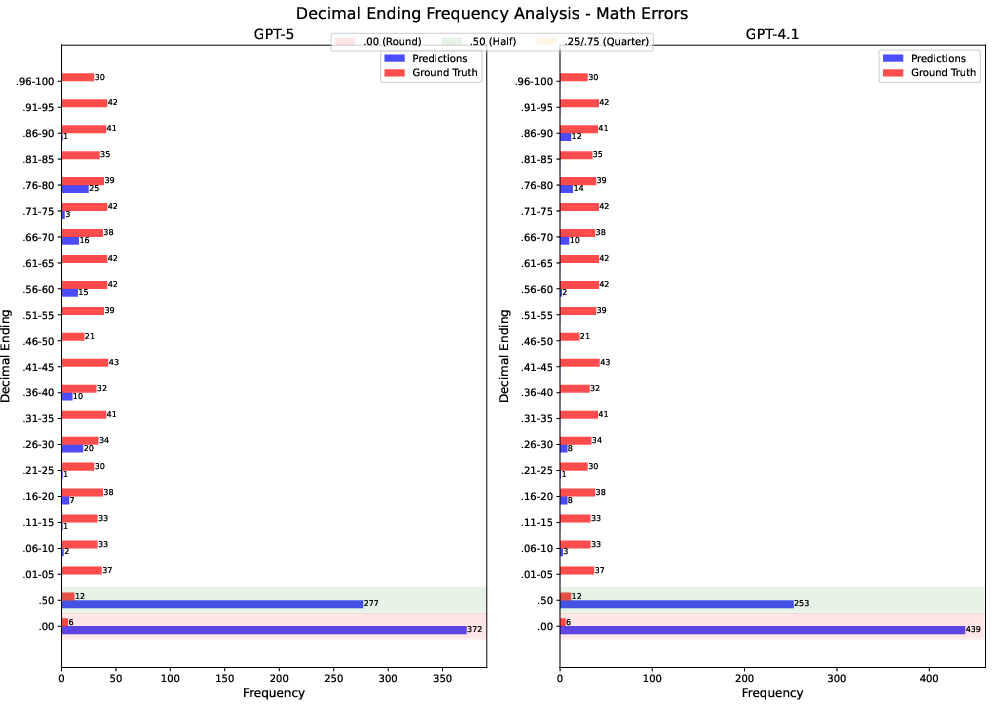

Phase 2 uses the refined prompt from Phase 1 to generate multiple rollouts for each example. An MLP then aggregates these stochastic predictions into a single score. This smooths out rollout-to-rollout variance and keeps the benefit of the LLM's reasoning, while avoiding the quantization that appears when the LLM emits numbers directly. Because only the prompt and a small aggregator are adapted, the two-phase procedure improves RiR performance in a data-efficient way.
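A rough sketch of the aggregation idea follows, under stated assumptions. The feature set (mean, standard deviation, min, max of the rollouts), the network size, the plain MSE loss, and the simulated rollouts are all illustrative choices, not details confirmed by the paper. `TinyMLP`, `features`, and `simulate_rollouts` are hypothetical names.

```python
import math
import random
import statistics


def features(rollouts):
    """Summarize one example's rollouts as [mean, std, min, max] (assumed features)."""
    return [statistics.fmean(rollouts), statistics.pstdev(rollouts),
            min(rollouts), max(rollouts)]


class TinyMLP:
    """One tanh hidden layer, linear output, full-batch gradient descent on MSE."""

    def __init__(self, n_in, n_hidden=4, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x):
        return sum(w * h for w, h in zip(self.w2, self._hidden(x))) + self.b2

    def loss(self, X, y):
        return statistics.fmean((self.predict(x) - t) ** 2 for x, t in zip(X, y))

    def fit(self, X, y, epochs=500, lr=0.02):
        n = len(X)
        for _ in range(epochs):
            gw1 = [[0.0] * len(X[0]) for _ in self.w1]
            gb1 = [0.0] * len(self.b1)
            gw2 = [0.0] * len(self.w2)
            gb2 = 0.0
            for x, t in zip(X, y):
                h = self._hidden(x)
                out = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
                err = 2.0 * (out - t) / n          # d(MSE)/d(out) for this example
                gb2 += err
                for j, hj in enumerate(h):
                    gw2[j] += err * hj
                    dpre = err * self.w2[j] * (1.0 - hj * hj)  # back through tanh
                    gb1[j] += dpre
                    for i, xi in enumerate(x):
                        gw1[j][i] += dpre * xi
            # Apply the accumulated full-batch gradients.
            self.b2 -= lr * gb2
            for j in range(len(self.w2)):
                self.w2[j] -= lr * gw2[j]
                self.b1[j] -= lr * gb1[j]
                for i in range(len(self.w1[j])):
                    self.w1[j][i] -= lr * gw1[j][i]


rng = random.Random(1)
truth = [rng.uniform(0.0, 1.0) for _ in range(40)]


def simulate_rollouts(t, k=5):
    # Simulated LLM rollouts (assumption): noisy, then snapped to a 0.25 grid
    # to mimic quantized numeric outputs.
    return [min(1.0, max(0.0, round((t + rng.gauss(0, 0.15)) * 4) / 4)) for _ in range(k)]


X = [features(simulate_rollouts(t)) for t in truth]
model = TinyMLP(n_in=4)
loss_before = model.loss(X, truth)
model.fit(X, truth)
loss_after = model.loss(X, truth)
```

Each rollout sits on a coarse grid, but the aggregator's output is continuous, which is the sense in which aggregation sidesteps quantization.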

Evaluation and Results

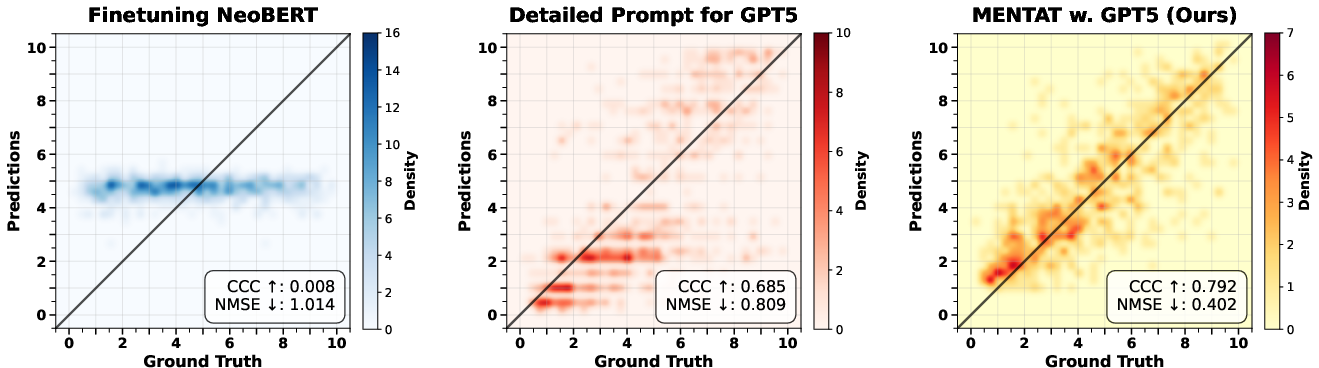

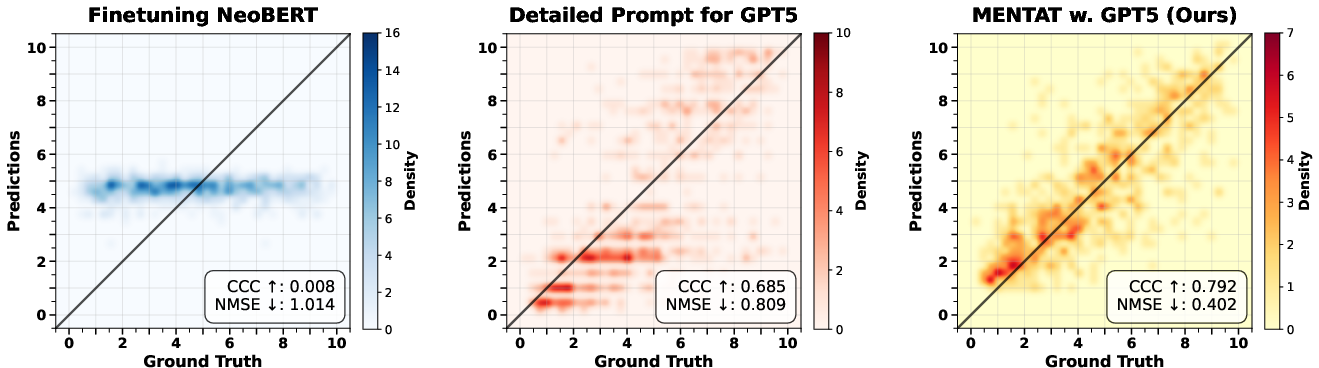

The paper provides a thorough experimental evaluation across several configurations, with baselines that include NeoBERT and LLM prompting with GPT-4.1 and GPT-5. The results indicate a significant performance boost from MENTAT, most visibly on reasoning-heavy tasks such as mathematical error detection, where it recorded up to a 46.2% improvement over the detailed prompting baseline.

Among the regression metrics, the Concordance Correlation Coefficient (CCC) proved more informative than the traditional NMSE because it captures both correlation and systematic bias, which matters when judging how well an RiR method actually works.
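To make the metric comparison concrete, the sketch below computes CCC using the standard definition of Lin's concordance correlation coefficient, 2·cov(y, ŷ) / (var(y) + var(ŷ) + (mean(y) − mean(ŷ))²). NMSE is taken here as MSE divided by the variance of the targets, which is an assumption about the normalization the paper uses.

```python
import statistics


def ccc(y_true, y_pred):
    """Lin's concordance correlation coefficient (population statistics)."""
    mt, mp = statistics.fmean(y_true), statistics.fmean(y_pred)
    vt = statistics.pvariance(y_true, mt)
    vp = statistics.pvariance(y_pred, mp)
    cov = statistics.fmean((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    # The (mt - mp)^2 term penalizes systematic bias, which plain
    # Pearson correlation ignores.
    return 2.0 * cov / (vt + vp + (mt - mp) ** 2)


def nmse(y_true, y_pred):
    """MSE normalized by target variance (assumed normalization)."""
    mse = statistics.fmean((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return mse / statistics.pvariance(y_true)


y = [1.0, 2.0, 3.0, 4.0, 5.0]
collapsed = [3.0] * 5               # a model that always predicts the mean
shifted = [v + 2.0 for v in y]      # perfectly correlated but biased by +2

ccc_perfect = ccc(y, y)             # 1.0
ccc_collapsed = ccc(y, collapsed)   # 0.0
ccc_shifted = ccc(y, shifted)       # 0.5
nmse_collapsed = nmse(y, collapsed) # 1.0
```

A mean-collapsed predictor scores a CCC of 0, and a perfectly correlated but shifted predictor scores only 0.5, so CCC penalizes both failure modes. Both functions divide by zero on degenerate input (for example, constant targets), which a production version would need to guard against.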

Figure 1: On regression for detecting the first math error, a finetuned NeoBERT model collapses to mean predictions, while MENTAT achieves a better CCC.

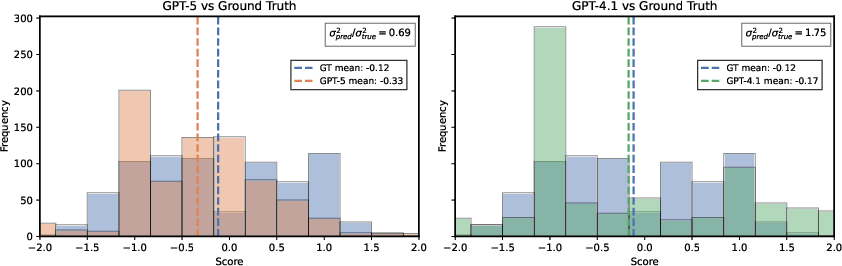

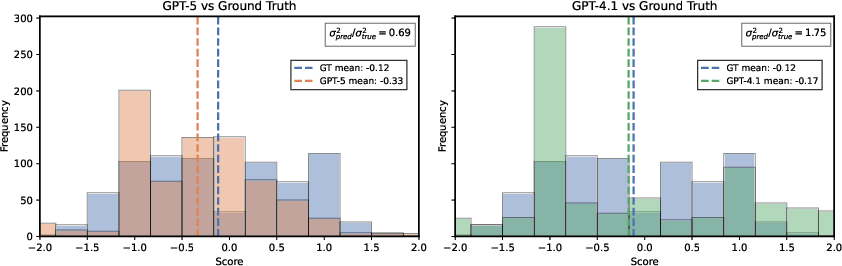

In the pairwise RAG comparison task, the paper reports an unexpected result: GPT-4.1 outperformed GPT-5. This points to challenges such as over-deliberation by more powerful reasoning models and shows how difficult it is to balance reasoning depth with regression precision.

Figure 2: Pairwise RAG distributions of mean rollouts versus ground truth, illustrating GPT-5's center-seeking behavior versus GPT-4.1's better distributional adherence.

Challenges and Future Directions

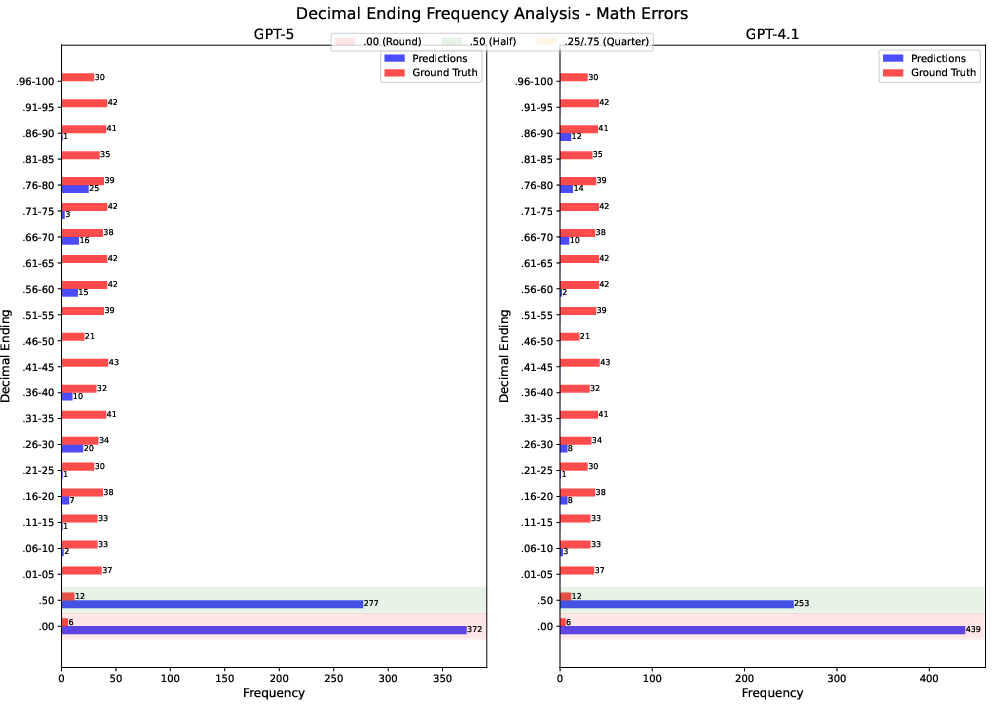

The research identifies notable challenges, especially LLMs' inherent tendency to quantize numerical outputs, which calls for hybrid solutions like MENTAT that combine reasoning capability with precise numerical prediction. The exploration of RiR tasks has broader implications across AI domains, pointing toward models that integrate reasoning and regression in a more coherent framework.

Additionally, MENTAT's success suggests that future work could extend its principles to more extensive RiR benchmarks and explore multi-stage LLMs or more sophisticated neural aggregation techniques to further close the gap in performance.

Figure 3: Distribution of decimal endings in LLM predictions shows clustering bias requiring nuanced aggregation techniques.
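The kind of tally behind a decimal-ending distribution can be sketched as below. The helper name `decimal_ending_counts` and the sample predictions are hypothetical and chosen only to illustrate clustering on round endings; they are not data from the paper.

```python
from collections import Counter


def decimal_ending_counts(preds, places=2):
    """Count the digits after the decimal point, at a fixed number of places."""
    endings = [f"{p:.{places}f}".split(".")[1] for p in preds]
    return Counter(endings)


# Made-up predictions that cluster on ".00" and ".50".
sample_preds = [7.5, 8.0, 6.5, 7.0, 3.5, 9.0, 4.25, 5.0]
counts = decimal_ending_counts(sample_preds)
# counts == Counter({"00": 4, "50": 3, "25": 1})
```

If predictions were spread evenly over the scale, the endings would be roughly uniform. A heavy concentration on a few endings is the quantization signature that motivates aggregating multiple rollouts.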

Conclusion

The paper advances the understanding of how LLMs can be adapted to tackle reasoning-intensive regression tasks efficiently. By introducing MENTAT, it opens new avenues for leveraging LLM architectures in domains that necessitate simultaneous reasoning and regression prowess. Nevertheless, substantial opportunities for refining and further enhancing RiR methodologies remain, particularly as AI systems continue to evolve toward greater reasoning sophistication and numerical precision.