- The paper introduces MCP-Bench, a benchmark that evaluates LLM agents performing real-world multi-server tasks using a diverse ecosystem of 250 tools.

- The methodology leverages an LLM-driven synthesis pipeline to generate complex tasks with dependency chains and fuzzy instructions, then filters them for quality.

- Empirical results show that strong models manage multi-server workflows efficiently, while the benchmark still exposes challenges in long-horizon planning and cross-domain orchestration.

Motivation and Benchmark Design

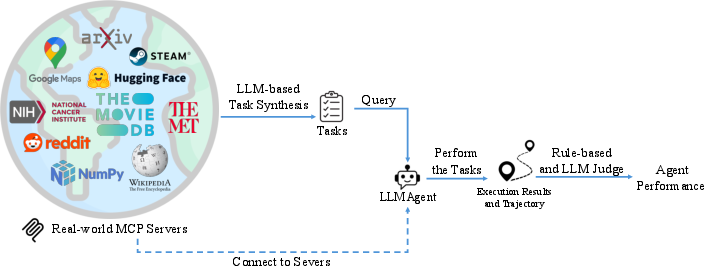

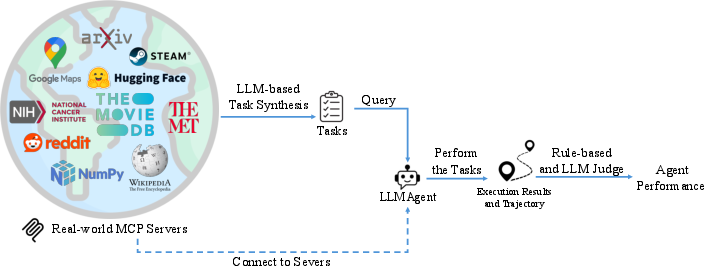

MCP-Bench addresses critical deficiencies in prior tool-use benchmarks for LLM agents by leveraging the Model Context Protocol (MCP) to expose agents to a diverse, production-grade ecosystem of 28 live servers and 250 structured tools spanning domains such as finance, science, healthcare, travel, and research. Unlike API aggregation benchmarks (e.g., ToolBench, BFCL v3), which suffer from shallow, artificially stitched workflows and limited cross-tool compatibility, MCP-Bench enables authentic multi-step, multi-domain tasks with rich input-output coupling and realistic dependency chains. The benchmark is constructed via an LLM-driven synthesis pipeline that analyzes tool I/O signatures to discover dependency chains, generates natural language instructions, and applies rigorous quality filtering for solvability and utility. Each task is further rewritten into a fuzzy, instruction-minimal variant, omitting explicit tool names and steps to stress-test agents' retrieval and planning capabilities under ambiguity.
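To make "analyzing tool I/O signatures to discover dependency chains" concrete, here is a minimal Python sketch. It assumes each tool is described by sets of input and output field names, and that tool B can follow tool A when some output field of A matches an input field of B. The source describes an LLM-driven analysis; this sketch substitutes plain field-name matching, and the `Tool` type and the example tools are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """Hypothetical tool signature: server, tool name, input and output field names."""
    server: str
    name: str
    inputs: frozenset[str]
    outputs: frozenset[str]


def dependency_edges(tools: list[Tool]) -> dict[str, list[str]]:
    """Map each tool to the tools that can consume at least one of its outputs."""
    edges: dict[str, list[str]] = {t.name: [] for t in tools}
    for producer in tools:
        for consumer in tools:
            if producer is not consumer and producer.outputs & consumer.inputs:
                edges[producer.name].append(consumer.name)
    return edges


def chains(edges: dict[str, list[str]], start: str, max_len: int = 4) -> list[list[str]]:
    """Enumerate simple paths (no repeated tools) from `start`, up to max_len tools long."""
    found: list[list[str]] = []

    def walk(path: list[str]) -> None:
        if len(path) > 1:
            found.append(list(path))
        if len(path) == max_len:
            return
        for nxt in edges[path[-1]]:
            if nxt not in path:
                path.append(nxt)
                walk(path)
                path.pop()

    walk([start])
    return found


# Hypothetical tools on three different servers; chains through them are cross-server.
tools = [
    Tool("maps", "geocode", frozenset({"city"}), frozenset({"lat", "lon"})),
    Tool("weather", "weather", frozenset({"lat", "lon"}), frozenset({"forecast"})),
    Tool("travel", "hotels", frozenset({"lat", "lon"}), frozenset({"hotel_list"})),
]
print(chains(dependency_edges(tools), "geocode"))
# prints [['geocode', 'weather'], ['geocode', 'hotels']]
```

Exact name matching misses semantically equivalent fields (for example `latitude` vs `lat`), which is one reason an LLM-based analysis is attractive for this step.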

Figure 1: MCP-Bench connects LLM agents to real-world MCP servers exposing 250 structured tools across domains such as finance, science, and research. Tasks are generated via LLM-based synthesis, then executed by the agent through multi-turn tool invocations. Each execution trajectory is evaluated using a combination of rule-based checks and LLM-as-a-Judge scoring, assessing agent performance in tool schema understanding, multi-hop planning, and real-world adaptability.

MCP Server Ecosystem and Task Synthesis

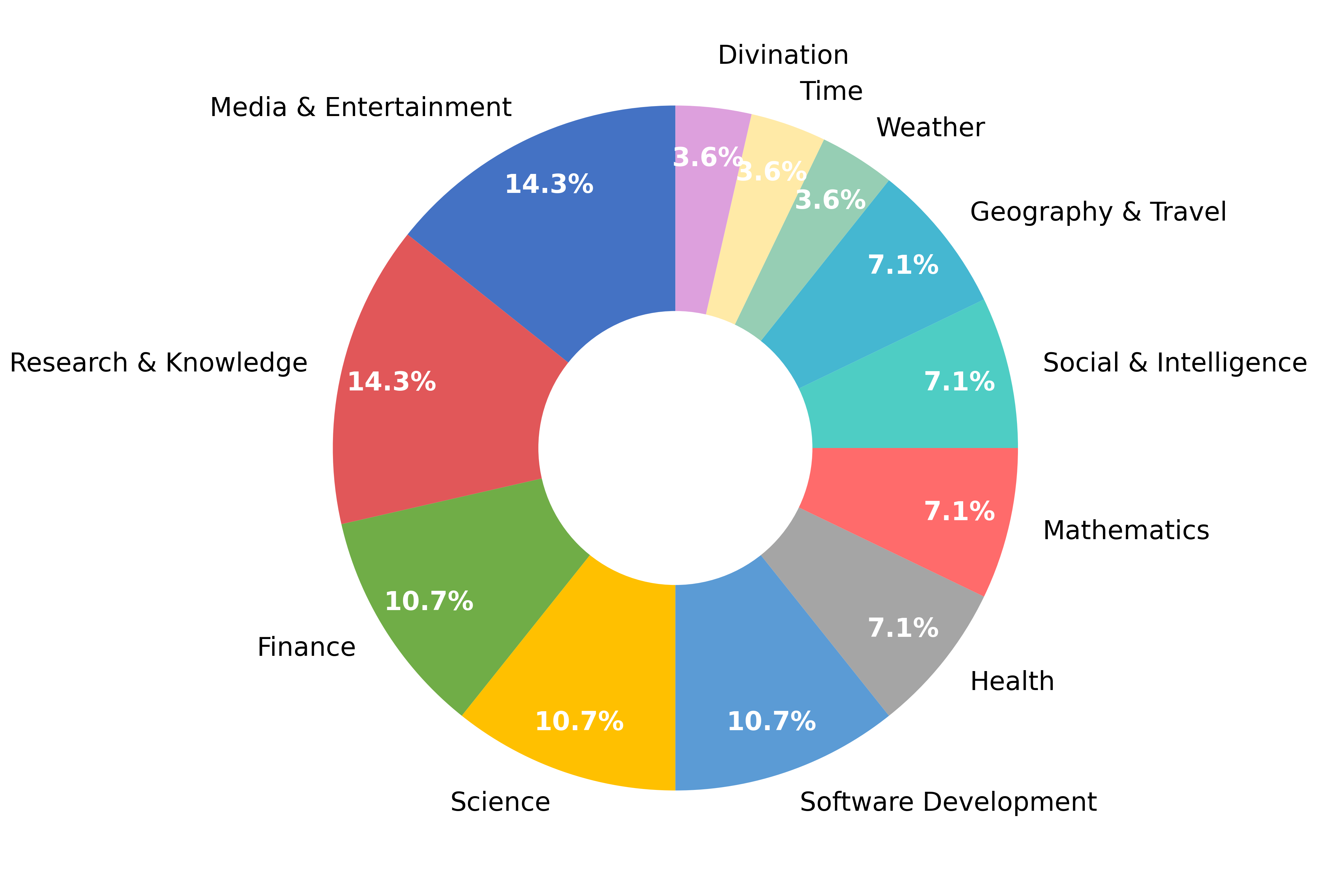

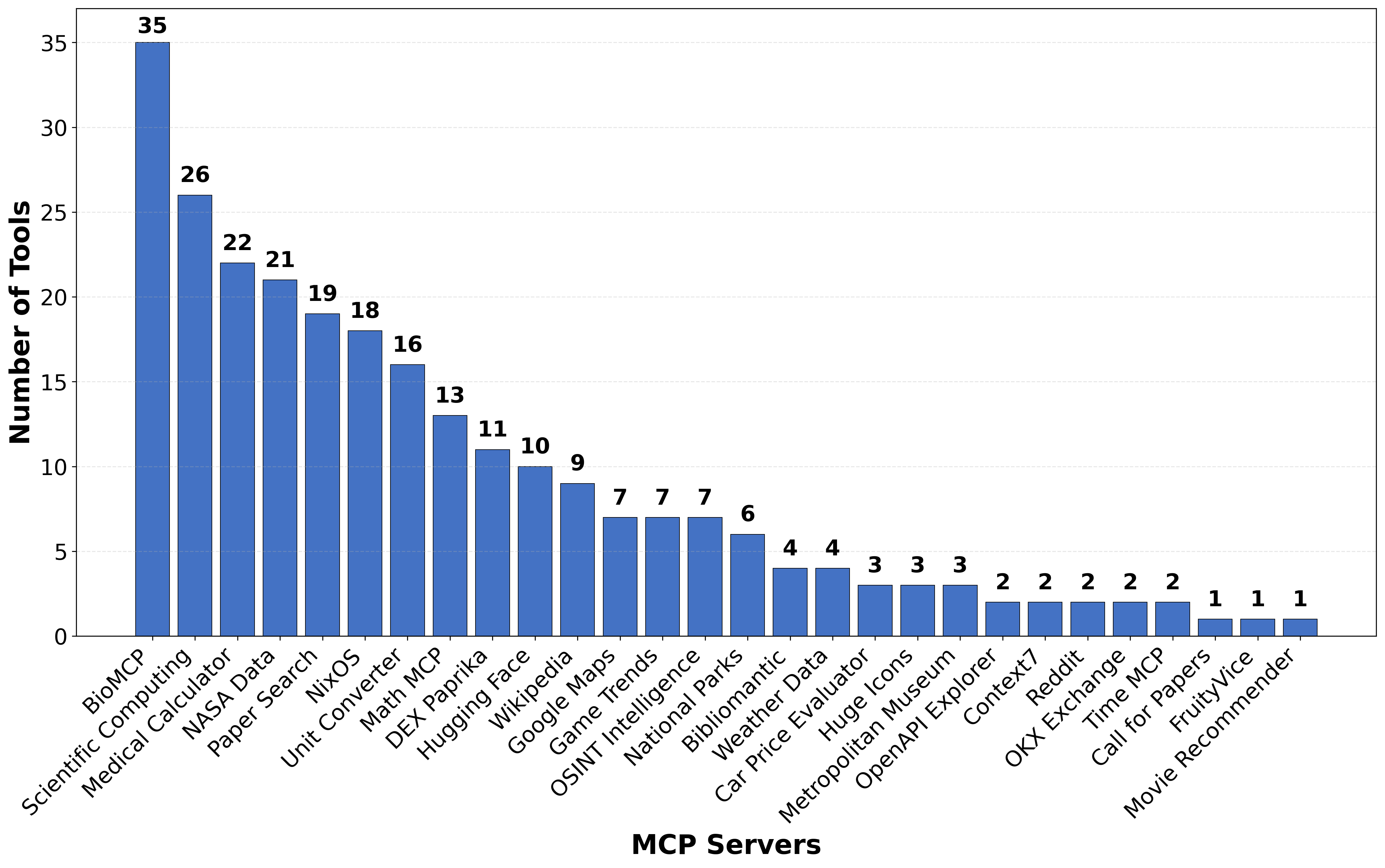

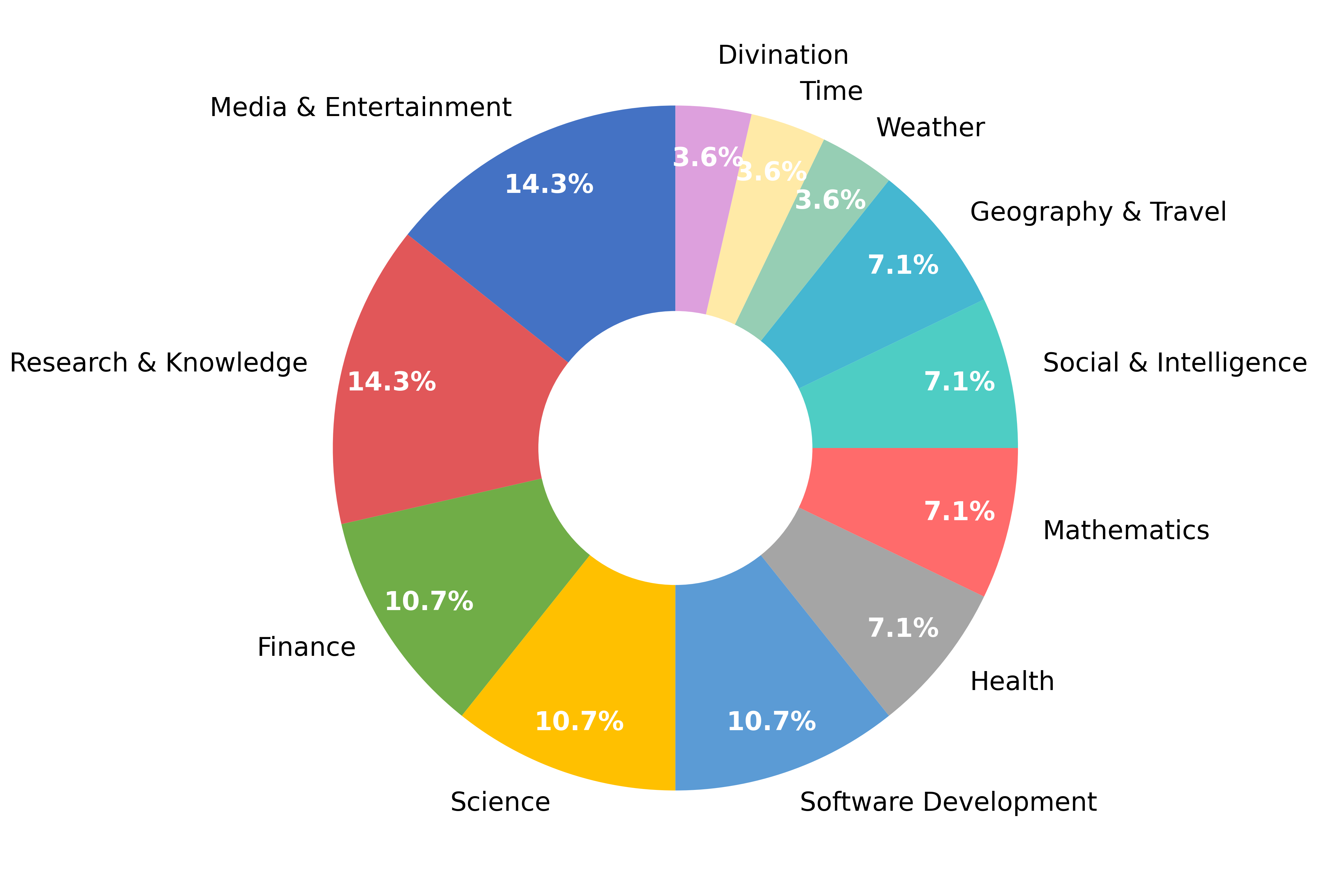

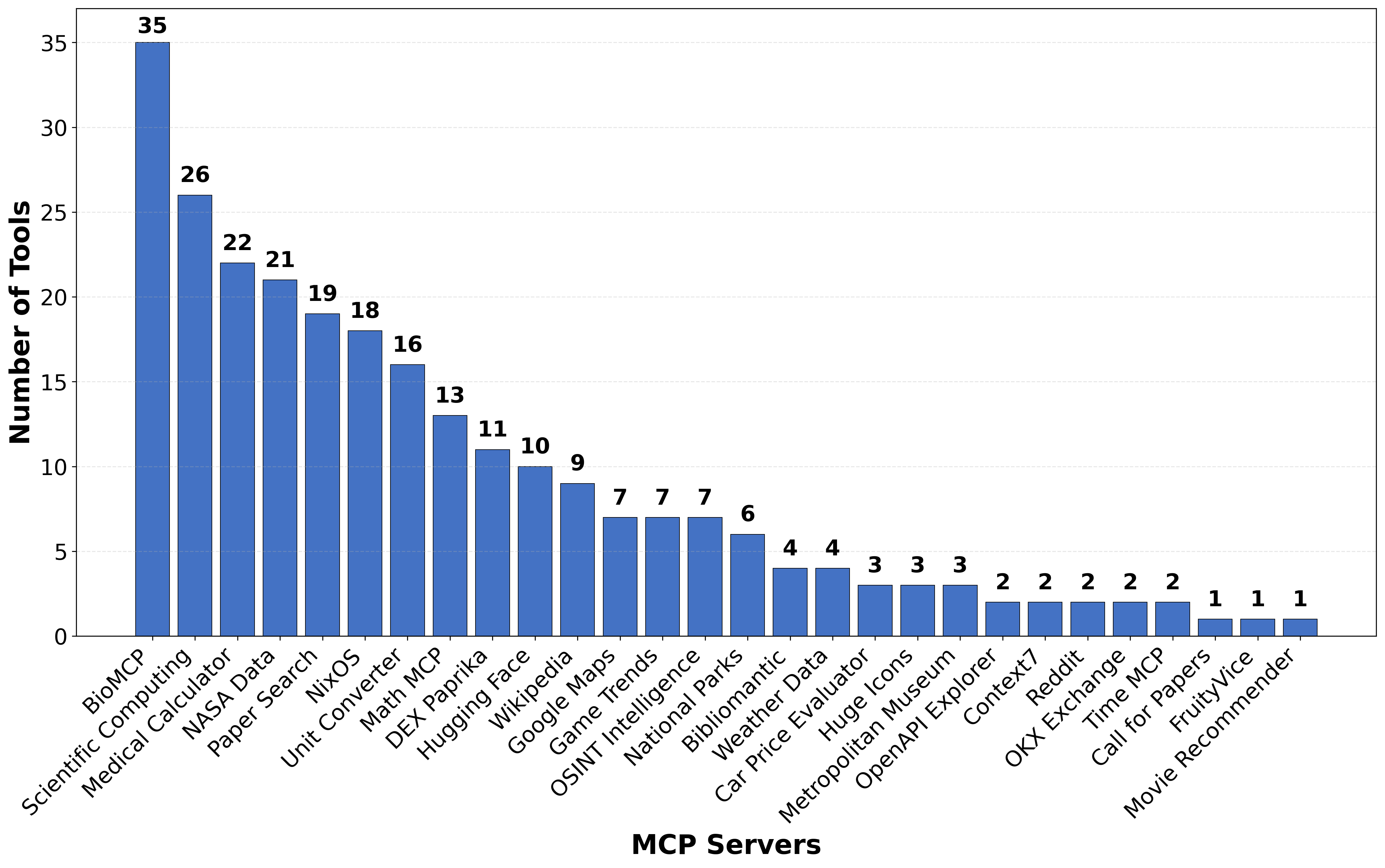

The MCP server ecosystem in MCP-Bench is highly heterogeneous, with servers ranging from single-tool endpoints (e.g., FruityVice, Movie Recommender) to complex platforms such as BioMCP (35 tools), Scientific Computing (26 tools), and Medical Calculator (22 tools). Domains include media, finance, research, software development, travel, health, and more, ensuring broad coverage of real-world tool-use scenarios.

Figure 2: Overview of MCP server ecosystem used in the benchmark, illustrating the diversity of domains and tool distributions.

Task synthesis proceeds in three stages: (1) dependency chain discovery, (2) automatic quality filtering, and (3) task description fuzzing. Dependency chains are identified by analyzing tool input/output relationships, enabling the construction of tasks with deep sequential and parallel dependencies, cross-server orchestration, and multi-goal objectives. Quality filtering ensures that only tasks with high solvability (≥9/10) and practical utility (≥5/10) are retained. Fuzzy task variants are generated to require agents to infer tool selection and execution strategies from context, rather than explicit instructions, thereby testing semantic retrieval and planning under ambiguity.
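The retention rule in stage (2) reduces to two thresholds. The sketch below assumes solvability and utility scores on a 0 to 10 scale have already been assigned (for instance by an LLM judge); the `CandidateTask` type and the sample tasks are hypothetical, and only the thresholds come from the text.

```python
from dataclasses import dataclass

# Thresholds quoted in the text: solvability >= 9/10 and practical utility >= 5/10.
MIN_SOLVABILITY = 9.0
MIN_UTILITY = 5.0


@dataclass
class CandidateTask:
    """Hypothetical synthesized task carrying its 0-10 quality scores."""
    task_id: str
    description: str
    solvability: float
    utility: float


def passes_quality_filter(task: CandidateTask) -> bool:
    # Both conditions must hold; scores exactly at a threshold are kept.
    return task.solvability >= MIN_SOLVABILITY and task.utility >= MIN_UTILITY


def filter_tasks(tasks: list[CandidateTask]) -> list[CandidateTask]:
    return [t for t in tasks if passes_quality_filter(t)]


tasks = [
    CandidateTask("t1", "compare two stock tickers", 9.5, 6.0),
    CandidateTask("t2", "ambiguous multi-goal trip", 8.9, 9.0),   # not solvable enough
    CandidateTask("t3", "convert units then plot", 9.0, 5.0),     # exactly at both thresholds
    CandidateTask("t4", "echo a constant", 10.0, 4.5),            # solvable but not useful
]
print([t.task_id for t in filter_tasks(tasks)])  # prints ['t1', 't3']
```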

MCP-Bench formalizes tool-using agent evaluation as a structured extension of the POMDP framework, with each task represented as $(S, A, O, T, R, U, \Sigma)$, where $\Sigma$ is the set of available MCP servers and $T = \bigcup_i T_i$ is the global tool set, the union of the per-server tool sets $T_i$. Agents operate in a multi-round decision process, generating plans, executing parallel tool calls, compressing observations, and updating internal state at each round. The process continues for up to $T_{\max}$ rounds (20 in MCP-Bench) or until the agent signals termination. This paradigm supports both intra-server and cross-server workflows, with explicit support for parallel execution and dependency management.
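The round structure can be sketched as a loop. This minimal illustration assumes a planner that returns a batch of independent tool calls per round (or a termination flag), async tool callables, and simple truncation as a stand-in for observation compression. `Plan`, `ToolCall`, `AgentState`, `run_agent`, and the toy tools are hypothetical names, not MCP-Bench's API; only the cap of 20 rounds comes from the text.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Awaitable, Callable

T_MAX = 20  # maximum number of rounds used in MCP-Bench

ToolFn = Callable[[dict], Awaitable[str]]


@dataclass
class ToolCall:
    tool: str
    args: dict


@dataclass
class Plan:
    calls: list[ToolCall]  # calls within one round are assumed independent, so they run in parallel
    done: bool = False     # the planner signals termination


@dataclass
class AgentState:
    task: str
    history: list[str] = field(default_factory=list)  # compressed observations so far


def compress(observation: str, limit: int = 200) -> str:
    """Placeholder compression: truncate long outputs (an LLM summarizer could replace this)."""
    return observation if len(observation) <= limit else observation[:limit] + "...[truncated]"


async def run_agent(state: AgentState, planner: Callable[[AgentState, int], Plan],
                    tools: dict[str, ToolFn], t_max: int = T_MAX) -> int:
    """Run at most t_max rounds; return how many rounds executed tool calls."""
    for round_idx in range(1, t_max + 1):
        plan = planner(state, round_idx)
        if plan.done:
            return round_idx - 1
        # Execute every call planned for this round concurrently.
        results = await asyncio.gather(*(tools[c.tool](c.args) for c in plan.calls))
        # Fold compressed observations into the state used by the next round's plan.
        for call, result in zip(plan.calls, results):
            state.history.append(f"{call.tool}: {compress(result)}")
    return t_max


# Hypothetical toy tools and planner for illustration.
async def weather(args: dict) -> str:
    return f"forecast for {args['city']}: sunny"


async def hotels(args: dict) -> str:
    return f"3 hotels in {args['city']}"


def toy_planner(state: AgentState, round_idx: int) -> Plan:
    if round_idx == 1:
        return Plan([ToolCall("weather", {"city": "Paris"}), ToolCall("hotels", {"city": "Paris"})])
    return Plan([], done=True)


state = AgentState("Plan a weekend in Paris")
rounds = asyncio.run(run_agent(state, toy_planner, {"weather": weather, "hotels": hotels}))
print(rounds, state.history)
```

Here the two lookups in round 1 do not depend on each other, so they are issued together; a call that needs a previous result would be planned in a later round.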

Evaluation Framework

MCP-Bench employs a dual evaluation framework:

- Rule-based metrics: Tool name validity, schema compliance, and execution success are computed from execution traces, penalizing hallucinations, malformed requests, and runtime failures.

- LLM-as-a-Judge scoring: Strategic quality is assessed across three axes (task completion, tool usage, and planning effectiveness) using structured rubrics. Each axis is decomposed into sub-dimensions (e.g., fulfillment, grounding, appropriateness, parameter accuracy, dependency awareness, parallelism/efficiency), scored on a strict percentage-based system. Prompt shuffling and score averaging are applied to mitigate scoring bias and improve robustness.
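The rule-based metrics can be computed directly from an execution trace. The sketch below assumes a trace is a list of calls, each with a tool name, arguments, and a success flag, and that every declared parameter is required with a fixed Python type. The `TraceStep` record, the schemas, and the choice to measure schema compliance only over calls with valid tool names are illustrative assumptions, not MCP-Bench's exact definitions.

```python
from dataclasses import dataclass


@dataclass
class TraceStep:
    """Hypothetical record of one tool invocation in an execution trace."""
    tool: str
    args: dict
    succeeded: bool  # whether the server returned without a runtime error


# Hypothetical schemas: tool name -> {parameter name: expected Python type}.
SCHEMAS: dict[str, dict[str, type]] = {
    "get_stock_price": {"ticker": str},
    "convert_currency": {"amount": float, "from_ccy": str, "to_ccy": str},
}


def schema_ok(step: TraceStep) -> bool:
    schema = SCHEMAS.get(step.tool)
    if schema is None:
        return False
    # Require exactly the declared parameters, each with the declared type.
    return set(step.args) == set(schema) and all(
        isinstance(step.args[k], t) for k, t in schema.items()
    )


def rule_based_metrics(trace: list[TraceStep]) -> dict[str, float]:
    n = len(trace)
    if n == 0:
        return {"name_validity": 0.0, "schema_compliance": 0.0, "execution_success": 0.0}
    valid = [s for s in trace if s.tool in SCHEMAS]  # hallucinated tool names are excluded
    return {
        "name_validity": len(valid) / n,
        "schema_compliance": sum(schema_ok(s) for s in valid) / max(len(valid), 1),
        "execution_success": sum(s.succeeded for s in trace) / n,
    }


trace = [
    TraceStep("get_stock_price", {"ticker": "ACME"}, True),
    TraceStep("get_stock_quote", {"ticker": "ACME"}, False),  # hallucinated tool name
    TraceStep("convert_currency", {"amount": "100", "from_ccy": "USD", "to_ccy": "EUR"}, False),
    TraceStep("convert_currency", {"amount": 100.0, "from_ccy": "USD", "to_ccy": "EUR"}, True),
]
print(rule_based_metrics(trace))
```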

Ablation studies demonstrate that prompt shuffling and score averaging reduce the coefficient of variation among LLM judges (from 16.8% to 15.1%) and improve human agreement scores (from 1.24 to 1.43 out of 2), substantiating the reliability of the evaluation pipeline.
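Prompt shuffling with score averaging, and the coefficient of variation used to quantify disagreement between judges, can be sketched as follows. The sketch assumes that what gets shuffled is the order of rubric dimensions in the judge prompt, and abstracts the judge as a function from that ordering to per-dimension scores. The toy `position_biased_judge`, the number of runs, and the use of the population standard deviation are assumptions, not details from the paper.

```python
import random
import statistics
from typing import Callable

# A judge maps an ordering of rubric dimensions to a score per dimension.
Judge = Callable[[list[str]], dict[str, float]]


def shuffled_average(judge: Judge, dimensions: list[str], runs: int = 5, seed: int = 0) -> dict[str, float]:
    """Query the judge `runs` times with the dimensions in random order; average per dimension."""
    rng = random.Random(seed)
    totals = {d: 0.0 for d in dimensions}
    for _ in range(runs):
        order = dimensions[:]
        rng.shuffle(order)
        scores = judge(order)
        for d in dimensions:
            totals[d] += scores[d]
    return {d: totals[d] / runs for d in dimensions}


def coefficient_of_variation(scores: list[float]) -> float:
    """Population standard deviation divided by the mean, e.g. across several judge models."""
    return statistics.pstdev(scores) / statistics.mean(scores)


def position_biased_judge(order: list[str]) -> dict[str, float]:
    """Toy judge that inflates whichever dimension is listed first."""
    return {d: 0.6 + (0.2 if i == 0 else 0.0) for i, d in enumerate(order)}


dims = ["task_completion", "tool_usage", "planning"]
print(shuffled_average(position_biased_judge, dims, runs=300))
print(coefficient_of_variation([1.0, 3.0]))  # prints 0.5
```

With a fixed prompt order, the first dimension would always receive the bonus; shuffling spreads that positional bias evenly across dimensions before averaging.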

Empirical Results and Analysis

Twenty advanced LLMs were evaluated on 104 MCP-Bench tasks, spanning both single-server and multi-server settings. Schema understanding and valid tool naming have largely converged across models, with top-tier systems (o3, gpt-5, gpt-oss-120b, qwen3-235b-a22b-2507, gpt-4o) exceeding 98% compliance. However, substantial gaps persist in higher-order reasoning and planning:

- Overall scores: gpt-5 (0.749), o3 (0.715), and gpt-oss-120b (0.692) lead, reflecting robust planning and execution. Smaller models (e.g., llama-3-1-8b-instruct, 0.428) exhibit weak dependency awareness and parallelism.

- Scalability: Strong models maintain stable performance across increasing server counts, while weaker models degrade, especially in multi-server scenarios. The main sources of decline are dependency management and parallel orchestration.

- Resource efficiency: Smaller models require more rounds and tool calls (e.g., llama-3-1-8b-instruct: 17.3 rounds, 155.6 calls/task), whereas strong models (e.g., o3, gpt-4o) achieve comparable success with leaner execution (6–8 rounds, <40 calls/task).
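The efficiency figures above are per-task averages of rounds and tool calls. A minimal way to compute them from run logs is sketched below; `TaskRun` and the sample runs are hypothetical. Dividing the reported llama-3-1-8b-instruct averages gives roughly 155.6 / 17.3 ≈ 9.0 calls per round.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRun:
    """Hypothetical per-task summary extracted from an execution log."""
    rounds: int
    tool_calls: int


def efficiency(runs: list[TaskRun]) -> dict[str, float]:
    avg_rounds = mean(r.rounds for r in runs)
    avg_calls = mean(r.tool_calls for r in runs)
    return {
        "avg_rounds": avg_rounds,
        "avg_calls": avg_calls,
        # Ratio of the two averages: roughly how many calls are issued per round.
        "calls_per_round": avg_calls / avg_rounds,
    }


print(efficiency([TaskRun(6, 30), TaskRun(8, 38)]))
print(round(155.6 / 17.3, 1))  # prints 9.0
```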

These results indicate that basic execution fidelity is no longer the bottleneck; the key differentiators are long-horizon planning, cross-server orchestration, and robust adaptation to complex, ambiguous workflows.

Implementation Considerations

MCP-Bench is designed for extensibility and reproducibility. The benchmark is open-source (https://github.com/Accenture/mcp-bench), with all MCP server endpoints, tool schemas, and task generation pipelines documented. The evaluation framework is modular, supporting integration with new LLMs and custom judge models. For practical deployment, agents must implement robust schema validation, error handling, and context compression to manage long tool outputs and avoid context window overflow. The multi-round planning paradigm requires agents to maintain persistent state and reason over intermediate results, with explicit support for parallel execution and dependency tracking.
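The defensive measures listed above can be combined in a small wrapper around each tool call. MCP tools advertise their arguments as a JSON Schema (`inputSchema`); this sketch checks only a small subset of that schema language (required fields and primitive types) and truncates long outputs as a stand-in for real context compression. `validate_args`, `safe_call`, and the `price_history` tool are hypothetical; a production agent would more likely use a complete JSON Schema validator.

```python
import json
from typing import Callable

# Subset of JSON Schema primitive types mapped to Python types.
JSON_TYPES = {"string": str, "number": (int, float), "integer": int,
              "boolean": bool, "object": dict, "array": list}


def validate_args(args: dict, input_schema: dict) -> list[str]:
    """Check arguments against required fields and primitive types; return a list of problems."""
    errors = []
    props = input_schema.get("properties", {})
    for name in input_schema.get("required", []):
        if name not in args:
            errors.append(f"missing required argument '{name}'")
    for name, value in args.items():
        if name not in props:
            # Stricter than JSON Schema's default, which allows additional properties.
            errors.append(f"unknown argument '{name}'")
            continue
        type_name = props[name].get("type")
        expected = JSON_TYPES.get(type_name)
        if expected is None:
            continue  # unsupported or missing type: skip the check
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        is_bool_mismatch = isinstance(value, bool) and type_name != "boolean"
        if not isinstance(value, expected) or is_bool_mismatch:
            errors.append(f"argument '{name}' should be {type_name}")
    return errors


def safe_call(tool: Callable[..., object], args: dict, input_schema: dict, max_chars: int = 2000) -> str:
    """Validate, invoke, and truncate; errors come back as text so the agent can react to them."""
    problems = validate_args(args, input_schema)
    if problems:
        return "validation error: " + "; ".join(problems)
    try:
        output = tool(**args)
    except Exception as exc:  # surface runtime failures instead of crashing the agent loop
        return f"tool error: {type(exc).__name__}: {exc}"
    text = output if isinstance(output, str) else json.dumps(output)
    return text if len(text) <= max_chars else text[:max_chars] + " ...[truncated]"


schema = {
    "type": "object",
    "properties": {"ticker": {"type": "string"}, "days": {"type": "integer"}},
    "required": ["ticker"],
}


def price_history(ticker: str, days: int = 5) -> list:
    if ticker != "ACME":
        raise KeyError(ticker)
    return [100.0 + i for i in range(days)]


print(safe_call(price_history, {"ticker": "ACME", "days": 3}, schema))
print(safe_call(price_history, {"days": "3"}, schema))
```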

Implications and Future Directions

MCP-Bench exposes persistent weaknesses in current LLM agents, particularly in multi-hop planning, cross-domain orchestration, and evidence-based reasoning under fuzzy instructions. The benchmark provides a rigorous platform for advancing agentic capabilities, with implications for real-world deployment in domains such as healthcare, finance, scientific research, and travel planning. Future developments may include:

- Expansion to additional MCP servers and domains, increasing task diversity and complexity.

- Integration of multimodal tools (e.g., vision, audio) and real-time data sources.

- Development of advanced agent architectures with explicit memory, hierarchical planning, and meta-reasoning capabilities.

- Refinement of evaluation metrics to capture nuanced aspects of strategic reasoning, error recovery, and user-centric adaptability.

Conclusion

MCP-Bench establishes a new standard for evaluating tool-using LLM agents in realistic, ecosystem-based scenarios. By bridging the gap between isolated API benchmarks and production-grade multi-server environments, MCP-Bench enables comprehensive assessment of agentic reasoning, planning, and tool-use capabilities. The empirical results highlight that while schema understanding and execution fidelity have converged, robust planning and cross-domain orchestration remain open challenges. MCP-Bench provides the necessary infrastructure and methodology for driving progress in agentic LLM research and deployment.