- The paper presents SuperSimpleNet, a unified model that reaches 98.0% AUROC on SensumSODF and 97.8% AP on KSDD2 in the fully supervised setting and remains competitive across the other supervision regimes.

- Methodologically, the model builds on a WideResNet50 backbone. Feature upscaling improves the localization of small defects, and latent-space synthetic anomaly generation allows training without real defect annotations.

- The study combines segmentation and classification heads with dynamic loss weighting to reduce annotation needs while delivering high computational efficiency for industrial quality control.

A Unified Model for Surface Defect Detection Across All Supervision Regimes

Introduction and Motivation

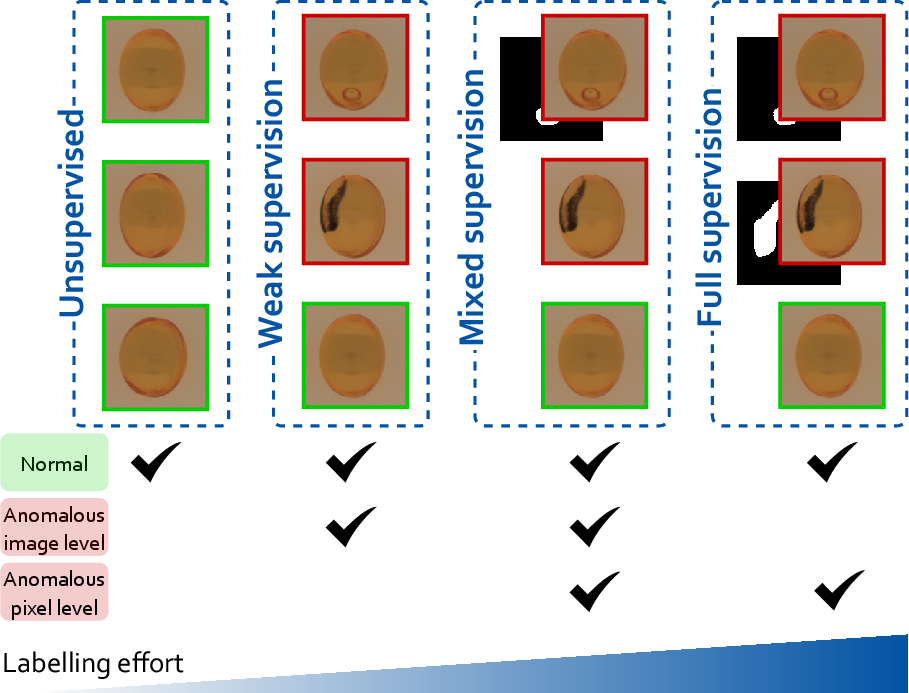

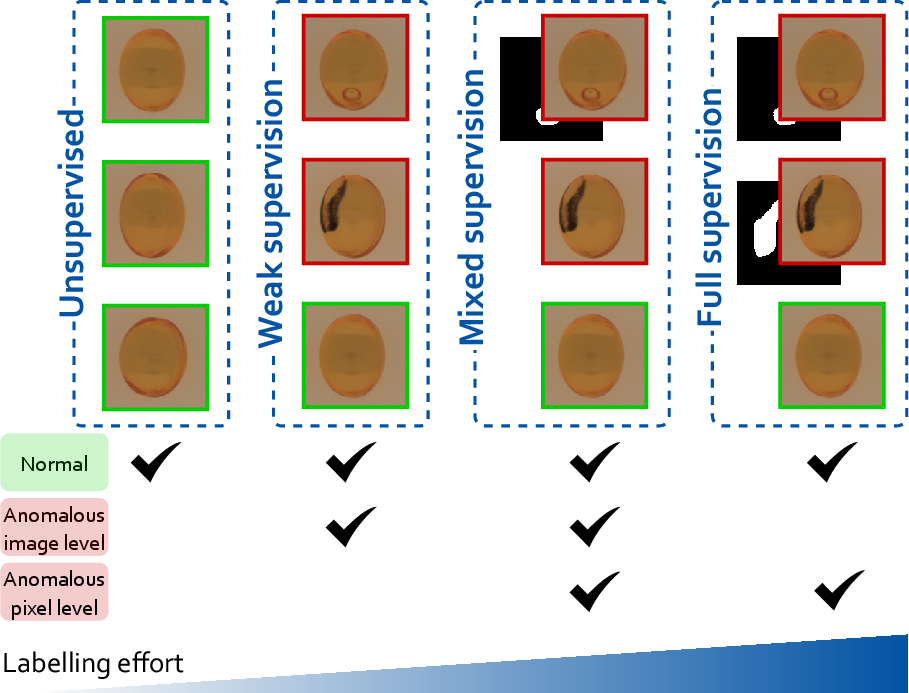

Surface defect detection is a critical task in industrial quality control, where the ability to identify and localize anomalies on manufactured components directly impacts product reliability and production efficiency. Traditional approaches in this domain are typically tailored to a single supervision regime (fully supervised, weakly supervised, or unsupervised), each with inherent limitations regarding data annotation requirements and adaptability to real-world manufacturing scenarios. The paper "No Label Left Behind: A Unified Surface Defect Detection Model for all Supervision Regimes" (2508.19060) introduces SuperSimpleNet, a discriminative model designed to operate efficiently and effectively across all four major supervision paradigms: unsupervised, weakly supervised, mixed supervision, and fully supervised learning.

Figure 1: Different supervision scenarios within manufacturing processes, highlighting the increasing annotation effort from left to right. SuperSimpleNet uniquely supports all four regimes.

SuperSimpleNet: Architecture and Key Innovations

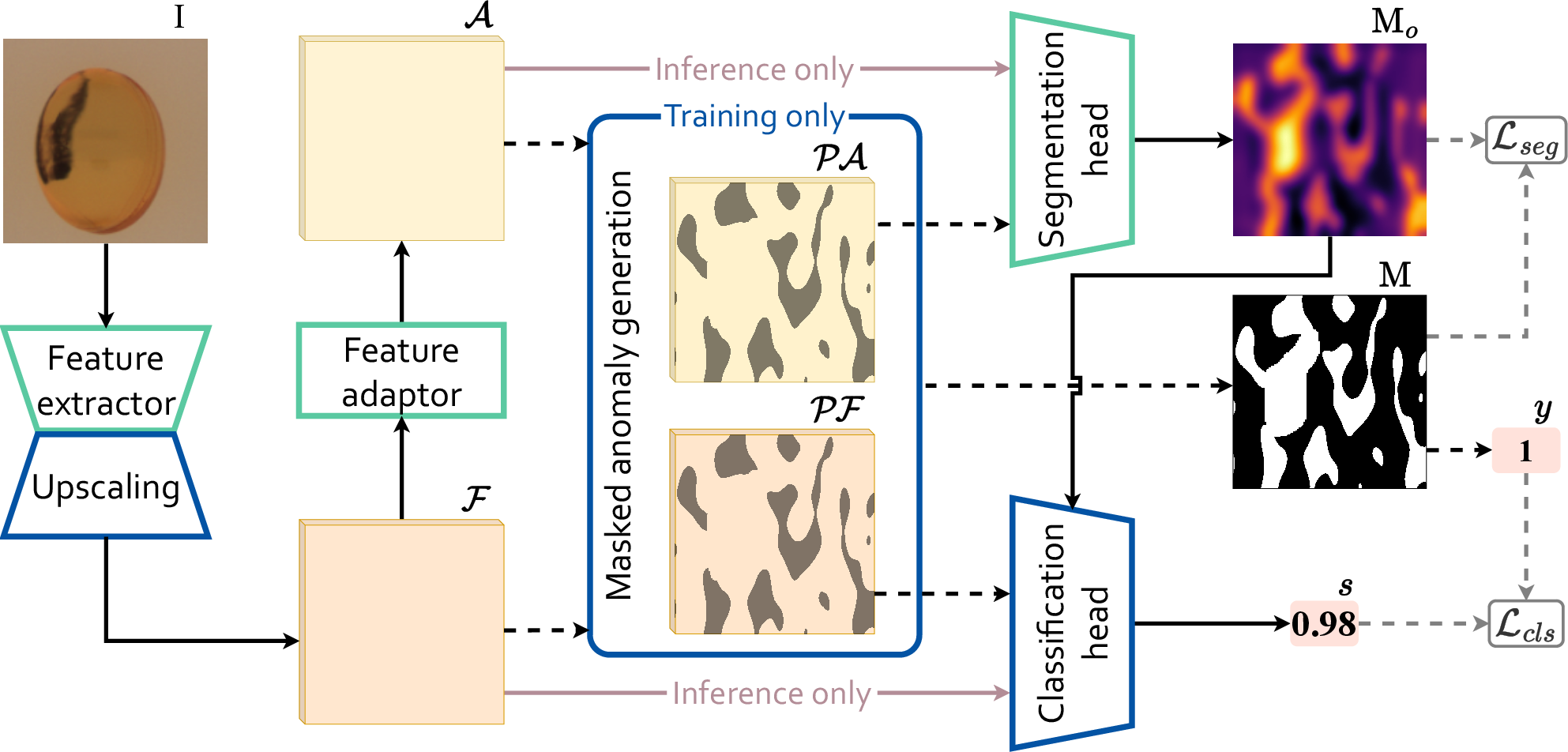

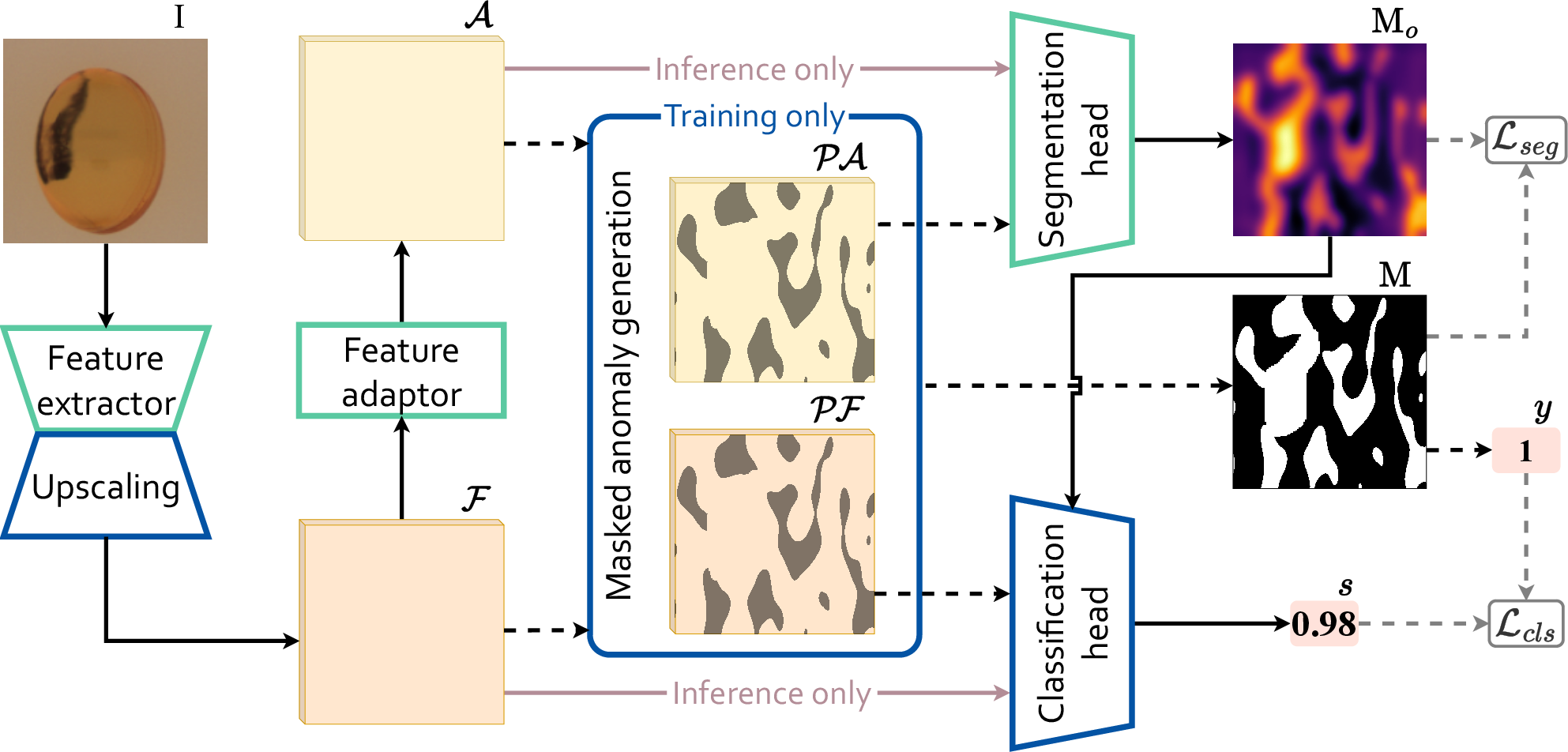

SuperSimpleNet builds upon SimpleNet, introducing several architectural and methodological enhancements to enable unified operation across diverse annotation regimes:

- Feature Extraction and Upscaling: Utilizes a WideResNet50 pretrained on ImageNet, extracting features from intermediate layers and upscaling them to improve spatial resolution, which is critical for precise localization of small defects.

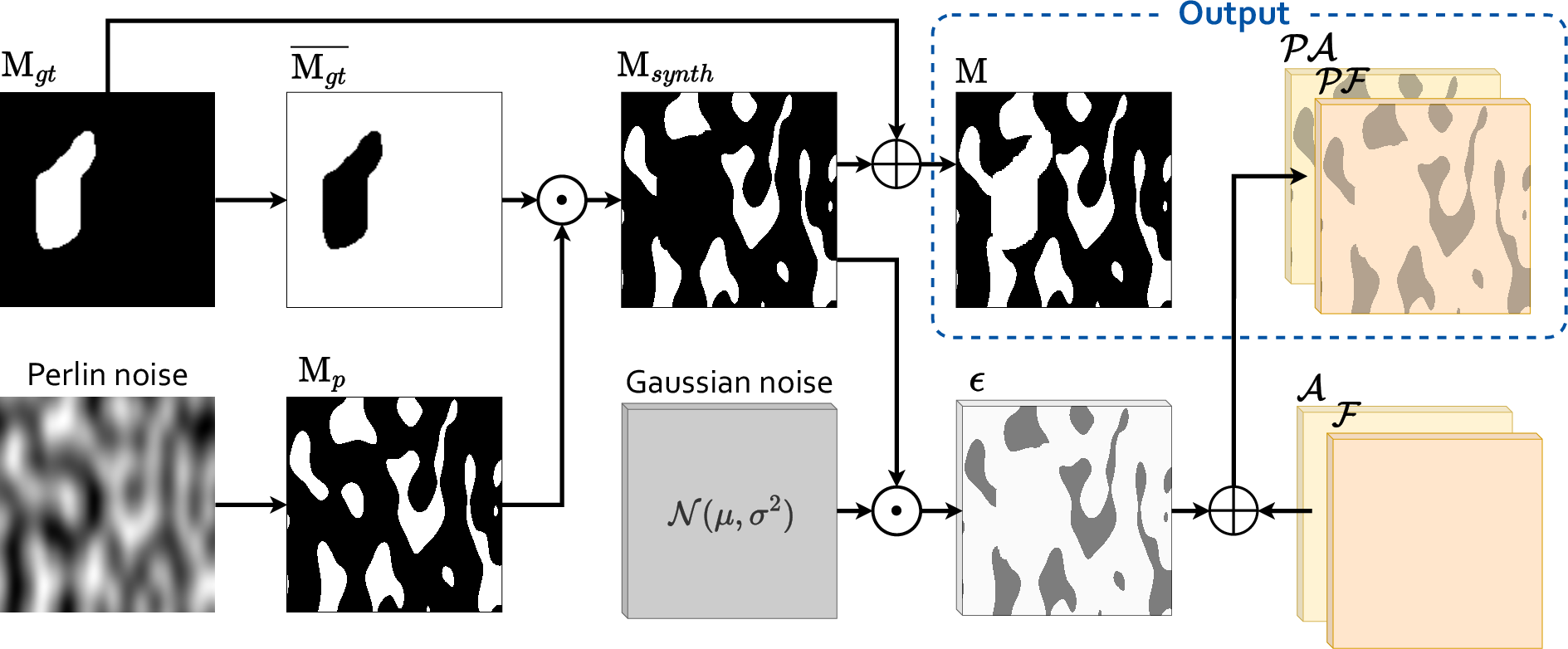

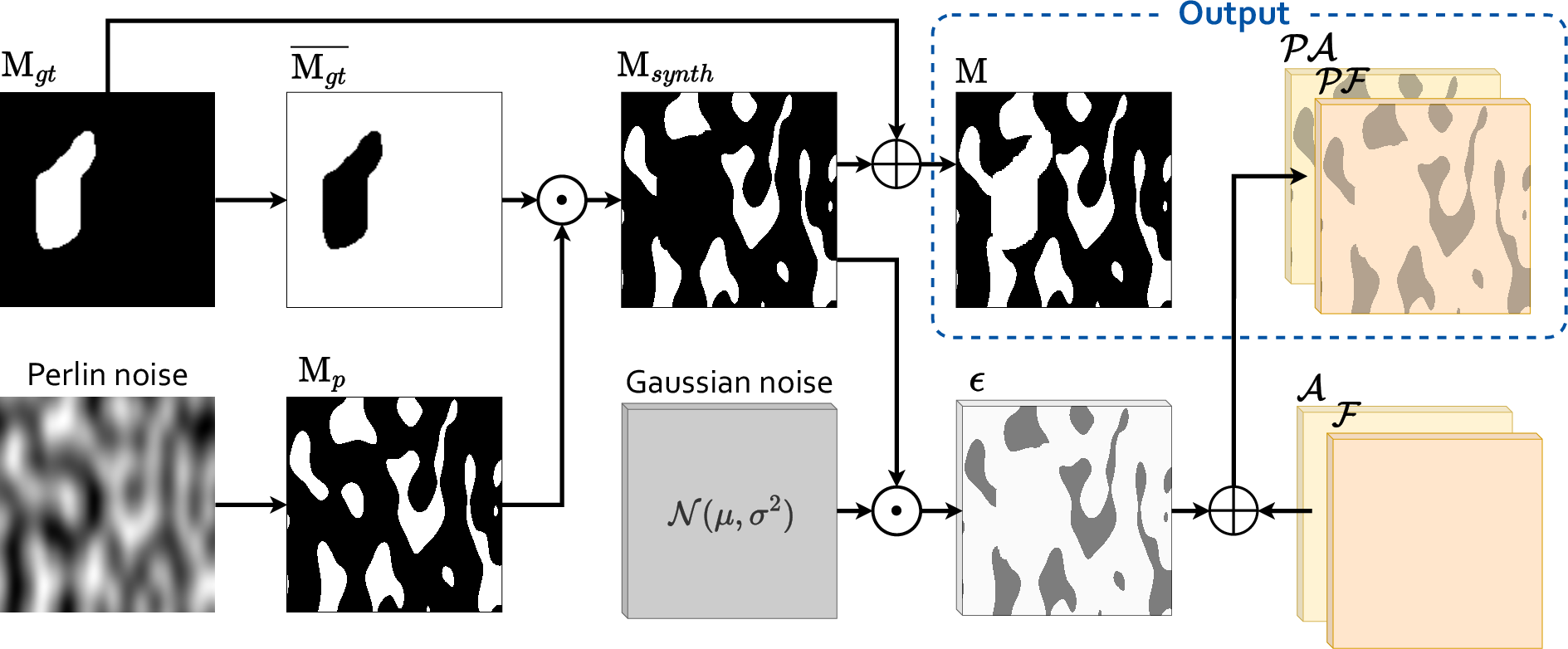

- Latent-Space Synthetic Anomaly Generation: Introduces a novel mechanism for generating synthetic anomalies in the latent feature space, guided by binarized Perlin noise masks. This process injects spatially coherent, randomized anomalies, enabling effective training even in the absence of real defect annotations.

Figure 2: SuperSimpleNet's architecture, showing feature extraction, upscaling, synthetic anomaly injection, and dual-branch segmentation-detection heads.

Figure 3: Synthetic anomaly generation using Perlin noise masks, with Gaussian noise applied only to selected regions in the latent space.
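The Perlin-guided injection described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: `smooth_noise` is a value-noise stand-in for true Perlin noise, and the function names, `threshold`, `noise_std`, and `cells` are hypothetical choices made for the example.

```python
import numpy as np

def smooth_noise(height, width, cells, rng):
    """Smooth 2-D noise in [0, 1] via bilinear interpolation of a coarse
    random grid. ASSUMPTION: value noise used as a simple stand-in for
    Perlin noise; it gives similarly blob-shaped, spatially coherent regions."""
    coarse = rng.random((cells + 1, cells + 1))
    ys = np.linspace(0, cells, height, endpoint=False)
    xs = np.linspace(0, cells, width, endpoint=False)
    y0, x0 = ys.astype(int), xs.astype(int)
    ty = (ys - y0)[:, None]          # vertical interpolation weights
    tx = (xs - x0)[None, :]          # horizontal interpolation weights
    top = coarse[y0][:, x0] * (1 - tx) + coarse[y0][:, x0 + 1] * tx
    bottom = coarse[y0 + 1][:, x0] * (1 - tx) + coarse[y0 + 1][:, x0 + 1] * tx
    return top * (1 - ty) + bottom * ty

def inject_latent_anomalies(features, rng, threshold=0.6, noise_std=0.015, cells=4):
    """Add Gaussian noise to a (C, H, W) feature map inside a binarized
    smooth-noise mask. Parameter values are illustrative, not from the paper.
    Returns the perturbed features and the (H, W) mask, which can serve as a
    synthetic pixel-level label."""
    _, h, w = features.shape
    mask = smooth_noise(h, w, cells, rng) > threshold      # binarize the noise
    noise = rng.normal(0.0, noise_std, size=features.shape)
    perturbed = features + noise * mask[None, :, :]        # noise only inside mask
    return perturbed, mask.astype(np.uint8)

rng = np.random.default_rng(0)
features = np.zeros((8, 16, 16))
perturbed, mask = inject_latent_anomalies(features, rng)
```

Because the mask is returned alongside the perturbed features, the same call yields both a training input and its segmentation target, which is what makes training possible without real defect annotations.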

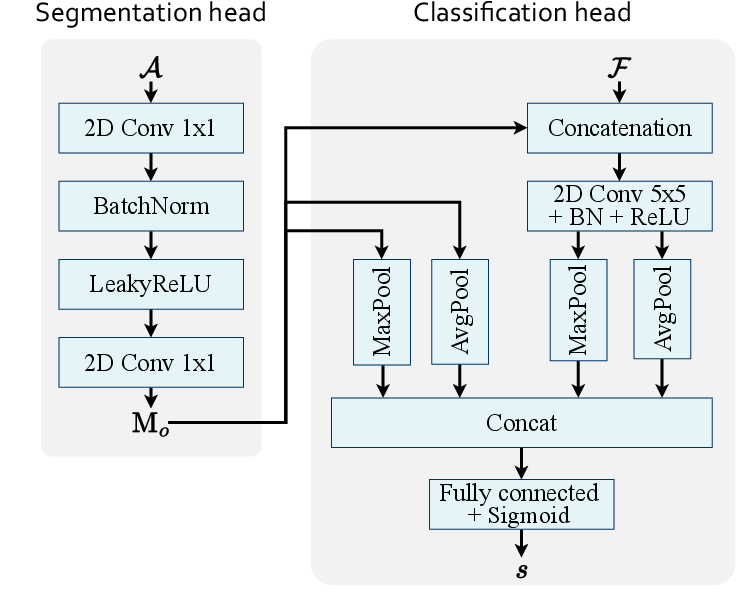

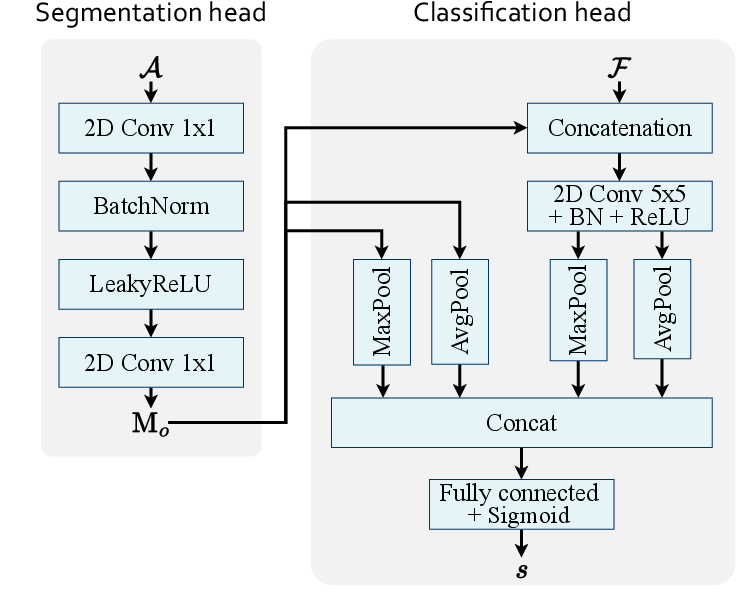

- Dual-Branch Segmentation-Detection Module: Retains the segmentation head from SimpleNet and introduces a simple yet effective classification head with a wide convolutional kernel, enabling robust global context modeling and efficient utilization of image-level labels.

Figure 4: Detailed architecture of the segmentation-detection module, illustrating the dual-branch design and improved contextual understanding.

- Unified Loss and Training Strategy: Employs a combination of truncated L1 loss and focal loss, with dynamic weighting to accommodate mixed supervision. The segmentation head is trained on every image that has pixel-level labels, while the classification head is trained on every image, so that all available annotations are used.
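The loss strategy in the last item can be illustrated with a small NumPy sketch. Several details are assumptions made for the example rather than taken from the paper: the truncation threshold `th=0.5`, the sign convention (normal pixels pushed below `-th`, anomalous pixels above `+th`), the focal-loss settings `gamma` and `alpha`, the unit weighting between terms, and the hypothetical `has_pixel_labels` flag that switches the segmentation term on or off.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def truncated_l1(scores, target, th=0.5):
    """Hinge-style L1 loss: a pixel stops contributing once its score is
    past the margin (below -th for normal, above +th for anomalous)."""
    normal = np.maximum(0.0, th + scores) * (1 - target)
    anomalous = np.maximum(0.0, th - scores) * target
    return float(np.mean(normal + anomalous))

def focal(scores, target, gamma=2.0, alpha=0.25, eps=1e-7):
    """Standard binary focal loss on raw scores (logits)."""
    p = np.clip(sigmoid(scores), eps, 1 - eps)
    p_t = np.where(target == 1, p, 1 - p)
    alpha_t = np.where(target == 1, alpha, 1 - alpha)
    return float(np.mean(-alpha_t * (1 - p_t) ** gamma * np.log(p_t)))

def total_loss(seg_scores, seg_target, cls_score, cls_target, has_pixel_labels):
    """Classification loss is always applied; the segmentation loss is
    applied only when a pixel-level mask exists (ASSUMED simplification
    of the paper's dynamic weighting)."""
    cls_loss = focal(np.asarray([cls_score]), np.asarray([cls_target]))
    if not has_pixel_labels:
        return cls_loss
    seg_loss = truncated_l1(seg_scores, seg_target) + focal(seg_scores, seg_target)
    return seg_loss + cls_loss

seg_scores = np.array([[2.0, -2.0], [2.0, 2.0]])   # deliberately wrong predictions
seg_target = np.array([[0, 1], [0, 0]])
weak = total_loss(None, None, 3.0, 1, has_pixel_labels=False)
full = total_loss(seg_scores, seg_target, 3.0, 1, has_pixel_labels=True)
```

In this sketch an image with only an image-level label still produces a gradient signal through the classification term, while an image with a mask additionally supervises the segmentation head.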

Experimental Evaluation

Fully Supervised, Weakly Supervised, and Mixed Supervision

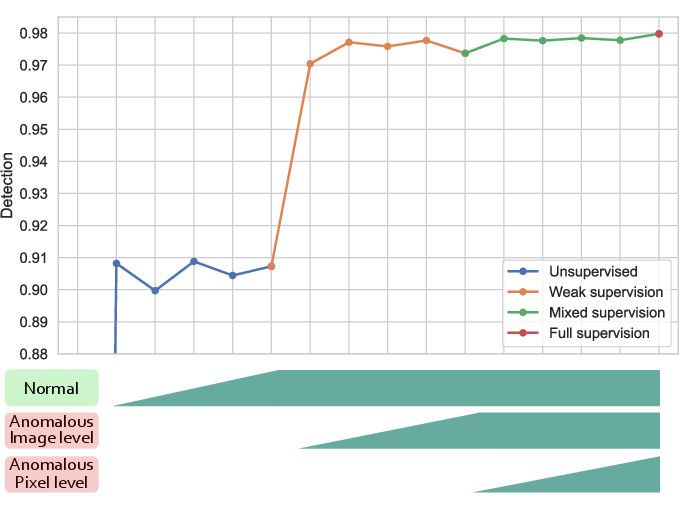

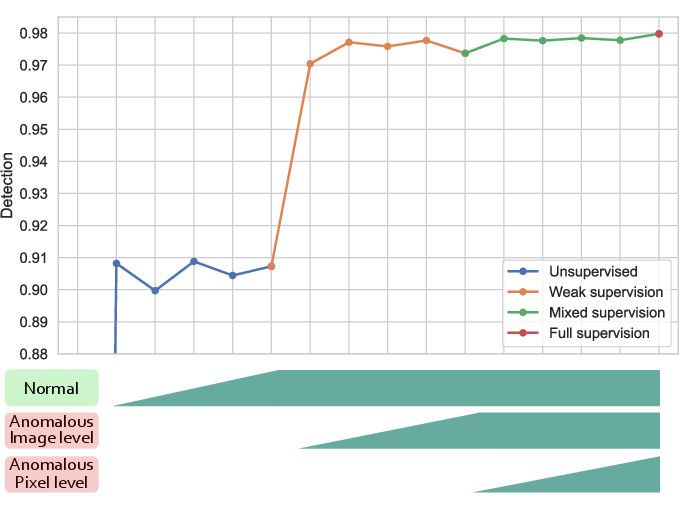

SuperSimpleNet is evaluated on SensumSODF and KSDD2 datasets under various supervision regimes. In the fully supervised setting, it achieves 98.0% AUROC on SensumSODF and 97.8% AP on KSDD2, surpassing previous state-of-the-art by 1.1 and 1.6 percentage points, respectively. Notably, in the weakly supervised regime (image-level labels only), SuperSimpleNet attains 97.4% AUROC on SensumSODF, outperforming prior fully supervised SOTA and demonstrating only a marginal drop compared to its own fully supervised performance.
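For readers less familiar with the two detection metrics quoted here, the following sketch computes AUROC and AP from image-level labels and anomaly scores. It is a plain NumPy illustration with hypothetical function names, not the evaluation code used in the paper, and the AP function assumes scores without ties.

```python
import numpy as np

def auroc(labels, scores):
    """Probability that a randomly chosen defective sample scores higher
    than a randomly chosen good one (ties count as half)."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (wins + 0.5 * ties) / (len(pos) * len(neg))

def average_precision(labels, scores):
    """Mean of the precision values measured at the rank of each
    defective sample, with samples sorted by descending score."""
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = labels[order]
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return precision_at_k[hits].mean()

labels = [0, 0, 1, 1]                 # 1 = defective
scores = [0.1, 0.4, 0.35, 0.8]        # higher = more anomalous
print(auroc(labels, scores))              # 0.75
print(average_precision(labels, scores))  # 0.8333...
```

AUROC treats both classes symmetrically, whereas AP focuses on how well the defective samples are ranked, which makes it the more informative of the two when defects are rare.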

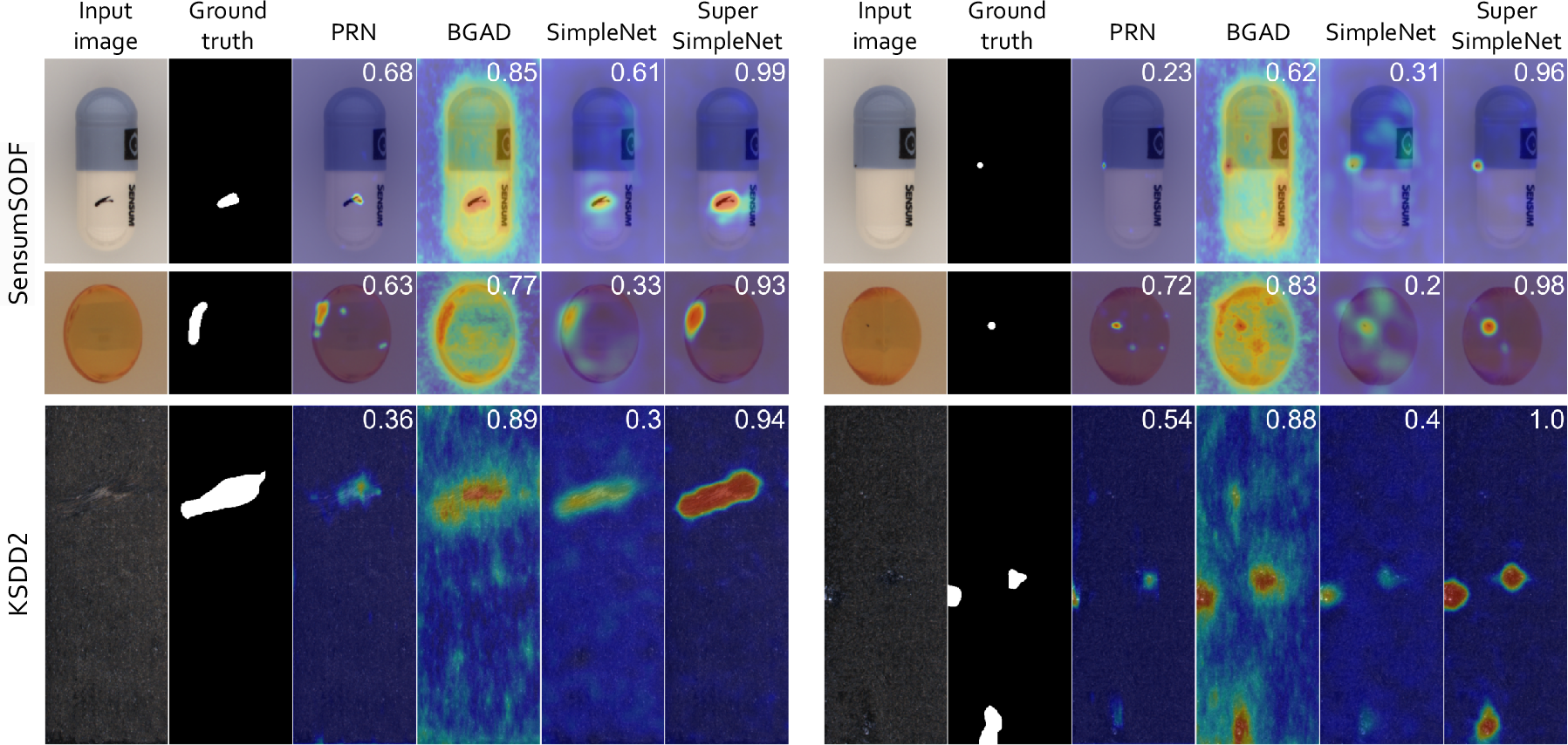

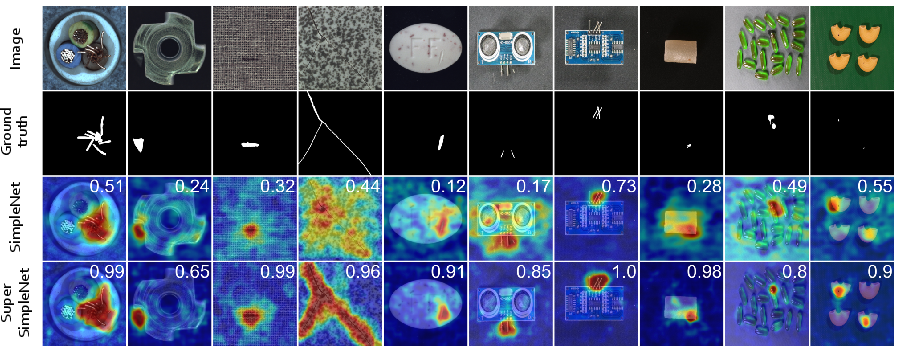

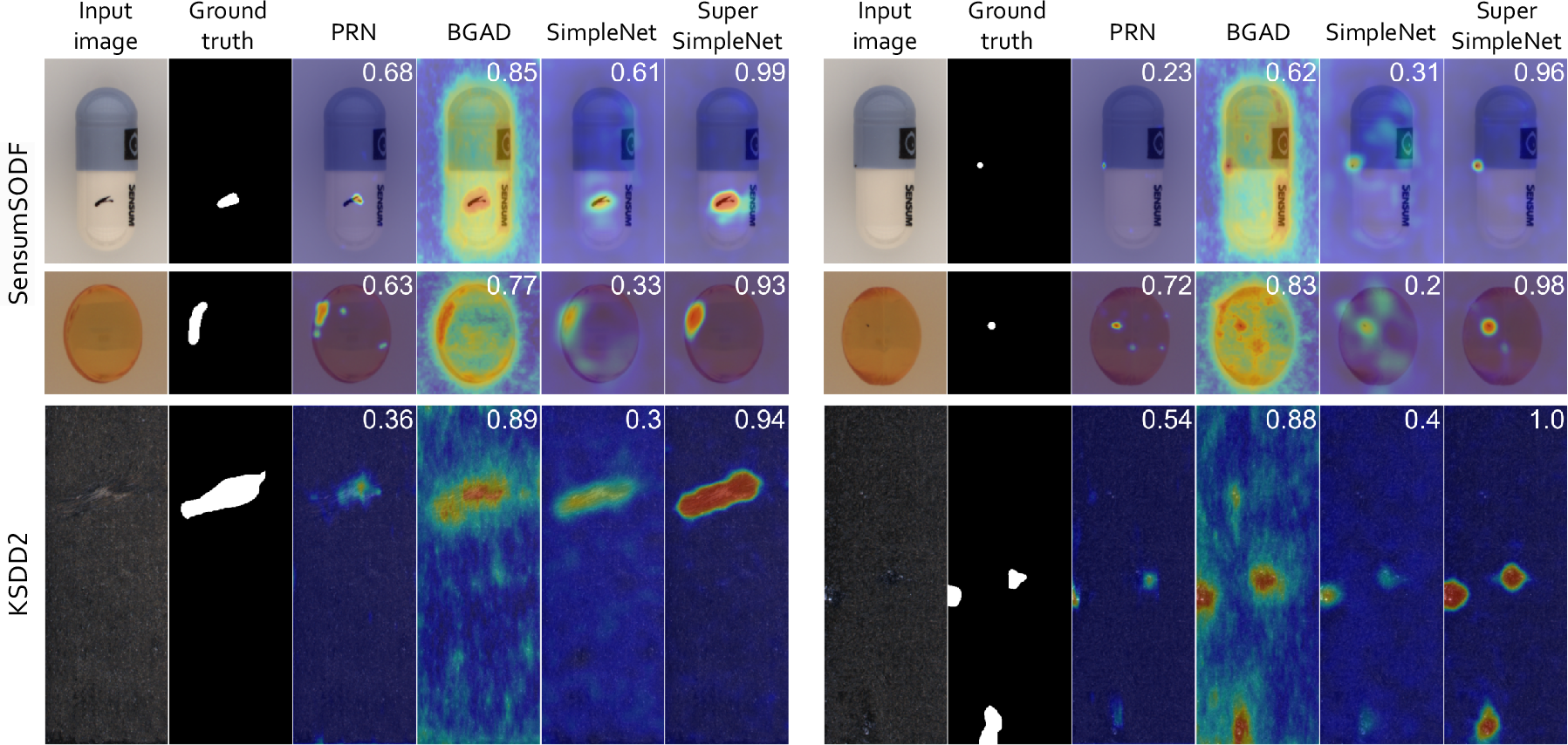

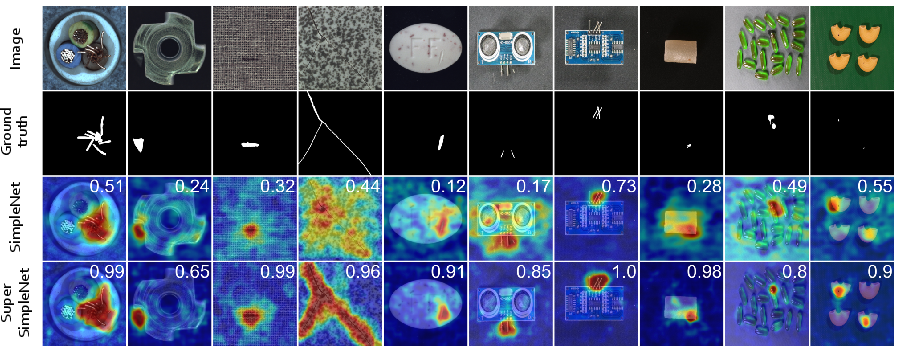

Figure 5: Qualitative comparison of anomaly maps in the fully supervised setting, showing input, ground truth, and model outputs.

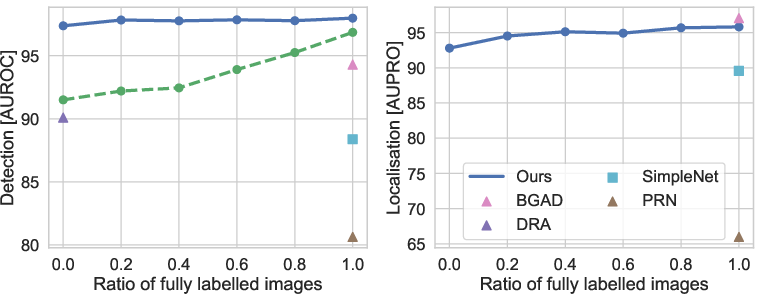

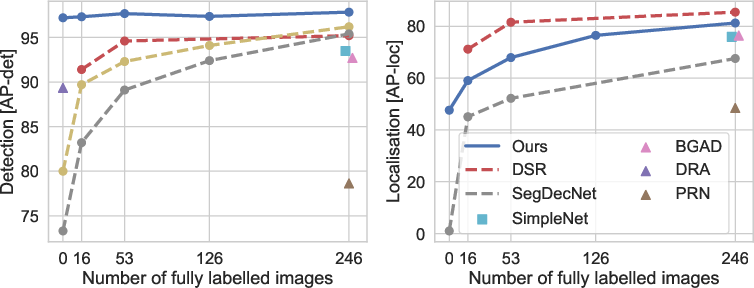

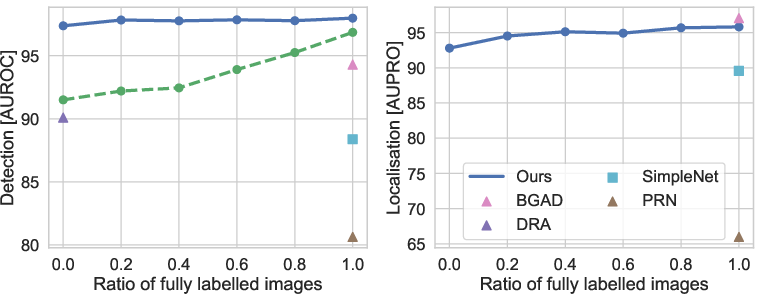

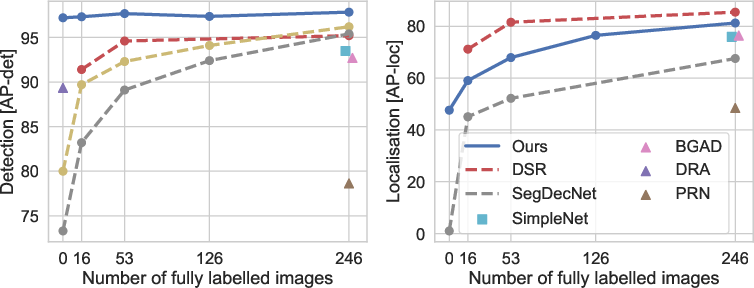

In mixed supervision, where only a subset of images have pixel-level labels, SuperSimpleNet maintains superior detection and localization performance even with limited annotation, highlighting its efficiency in reducing labeling effort.

Figure 6: Anomaly detection (AUROC) and localization (AUPRO) on SensumSODF under mixed supervision, as a function of pixel-level label ratio.

Figure 7: Detection and localization results on KSDD2 with varying numbers of pixel-level labels.

Figure 8: Qualitative comparison of anomaly maps under mixed supervision, illustrating the effect of increasing pixel-level annotation.

Unsupervised Anomaly Detection

On the MVTec AD and VisA benchmarks, SuperSimpleNet achieves 98.3% and 93.6% AUROC, respectively, matching or exceeding the performance of specialized unsupervised methods. The model produces stable and interpretable anomaly maps, with improved separation of true defects from background noise.

Figure 9: Qualitative comparison of anomaly maps by unsupervised SuperSimpleNet and SimpleNet, highlighting improved interpretability and accuracy.

Computational Efficiency

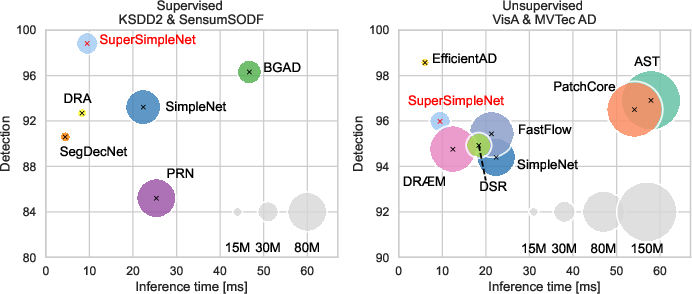

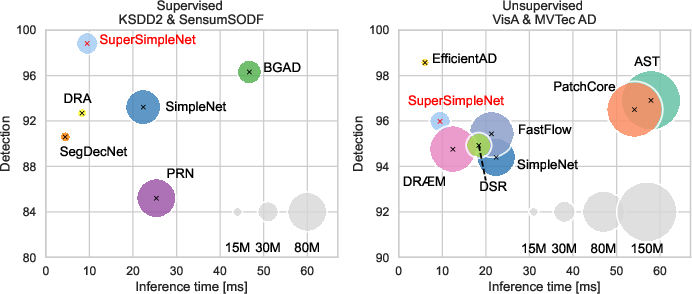

SuperSimpleNet is highly efficient, achieving an inference time of 9.5 ms and throughput of 262 images per second on an NVIDIA Tesla V100S, outperforming most contemporary models in both speed and parameter efficiency.
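The two figures above are not reciprocals of each other, as a quick calculation shows. One plausible reading, which is an assumption here because this summary does not state the measurement protocol, is that latency was measured on single images while throughput was measured with batched inference.

```python
def images_per_second(latency_ms):
    """Throughput implied by processing one image at a time."""
    return 1000.0 / latency_ms

def amortized_ms_per_image(throughput):
    """Per-image time implied by a given throughput."""
    return 1000.0 / throughput

single_image_rate = images_per_second(9.5)       # about 105.3 images/s
amortized_time = amortized_ms_per_image(262)     # about 3.82 ms per image

print(f"{single_image_rate:.1f} images/s at 9.5 ms per image")
print(f"{amortized_time:.2f} ms per image at 262 images/s")
```

Under that reading, the 9.5 ms figure is the relevant number for a line that inspects parts one by one, and the 262 images per second figure applies when images can be grouped before inference.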

Figure 10: Inference time and anomaly detection performance for different models, with circle size indicating parameter count.

Ablation Studies and Analysis

Comprehensive ablation studies confirm the importance of each architectural component:

- Feature Upscaling: Removing upscaling reduces detection and localization by up to 1.4 percentage points.

- Classification Head Simplicity: A simple, wide-kernel classification head prevents overfitting and is critical for robust performance, especially in unsupervised settings.

- Synthetic Anomaly Generation: The new Perlin-guided strategy is essential for bridging the gap between weak and full supervision, and for regularizing the model in the presence of limited real anomalies.

- Loss Weighting and Training Optimizations: Segmentation loss weighting and improved training protocols contribute to both stability and accuracy.

Figure 11: Label ablation study showing anomaly detection performance as a function of available labeled data, across supervision regimes.

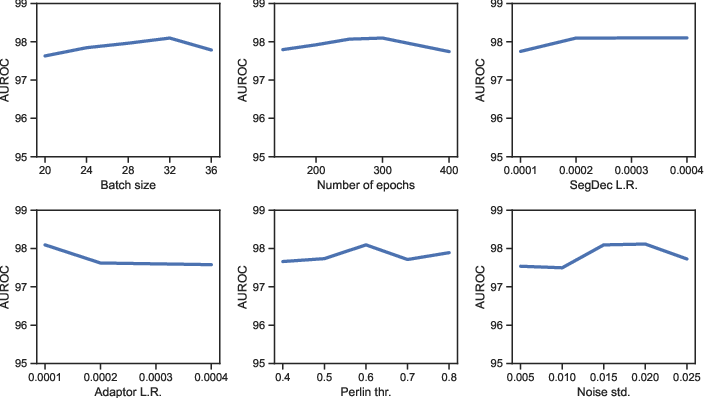

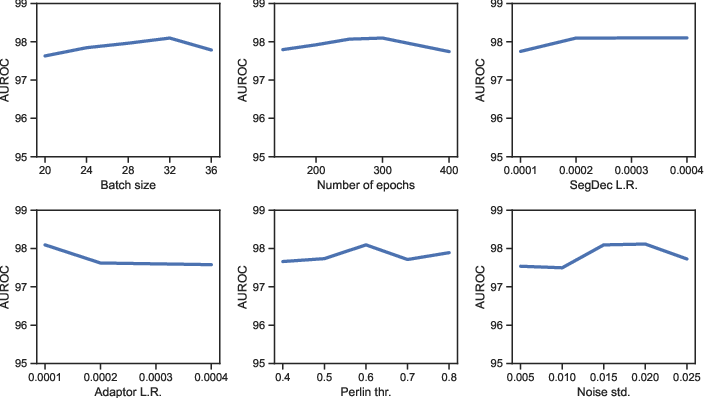

Figure 12: Hyperparameter sensitivity analysis, demonstrating robustness to parameter selection.

Limitations and Practical Considerations

While SuperSimpleNet demonstrates strong generalization and efficiency, its performance is contingent on the quality of the pretrained feature extractor. Detection of extremely small anomalies may require higher input resolutions, which increases computational cost. The model is robust to hyperparameter choices, but some tuning may still be needed for specific industrial scenarios.

Implications and Future Directions

SuperSimpleNet establishes a new paradigm for industrial anomaly detection by unifying all supervision regimes within a single, efficient architecture. This approach enables practitioners to fully exploit all available data, regardless of annotation granularity, and adapt seamlessly as more labels become available during the product lifecycle. The demonstrated reduction in annotation effort, combined with high throughput and accuracy, makes SuperSimpleNet particularly attractive for real-world deployment in manufacturing environments.

Theoretically, the work underscores the value of synthetic anomaly generation in the latent space and the importance of architectural simplicity for generalization across supervision regimes. Future research may explore further improvements in feature extractor design, domain adaptation for non-industrial settings, and integration with active learning or continual learning frameworks to further reduce annotation costs and improve adaptability.

Conclusion

SuperSimpleNet provides a unified, efficient, and high-performing solution for surface defect detection across all supervision regimes. Its ability to leverage all available annotations, combined with strong empirical results and computational efficiency, positions it as a robust candidate for industrial deployment and a foundation for future research in unified anomaly detection architectures.