- The paper shows that model size, training duration, and image domain individually and jointly drive representational convergence between DINOv3 and human brain responses, using high-resolution fMRI and MEG data.

- It employs linear encoding analysis with ridge regression to quantify brain-model similarity through encoding, spatial, and temporal metrics across multiple DINOv3 variants.

- The findings reveal a staged emergence of brain-like representations that mirrors cortical developmental trajectories, and they underline the importance of ecologically valid training data.

Disentangling the Factors of Convergence between Brains and Computer Vision Models

Introduction

This paper provides a systematic investigation into the factors that drive representational convergence between artificial neural networks and the human brain in visual processing. By leveraging a family of self-supervised vision transformers (DINOv3) and manipulating model size, training duration, and image domain, the authors dissect the independent and interactive contributions of architecture, experience, and data type to brain-model similarity. The paper employs high-resolution fMRI and MEG datasets to quantify representational alignment using encoding, spatial, and temporal metrics, offering a multi-faceted view of how and when artificial models come to resemble biological vision.

Methodological Framework

The core methodology centers on linear encoding analysis, quantifying the correspondence between DINOv3 activations and brain responses to identical images. Ridge regression is used to map model activations to brain activity, with cross-validation ensuring robust estimation. Three metrics are defined:

- Encoding Score: Pearson correlation between predicted and actual brain responses, summarizing overall representational similarity.

- Spatial Score: Correlation between model layer hierarchy and anatomical hierarchy (Euclidean distance from V1), probing topographical alignment.

- Temporal Score: Correlation between model layer depth and the timing of peak predictability in MEG signals, assessing dynamic correspondence.
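The encoding score can be illustrated with a minimal sketch, assuming a closed-form ridge solver, a fixed penalty `alpha`, contiguous 5-fold cross-validation, and synthetic data standing in for model activations and brain responses. None of these choices come from the paper; the function names (`ridge_fit`, `pearson_columns`, `encoding_score`) are hypothetical and chosen for illustration only.

```python
import numpy as np

def ridge_fit(X, Y, alpha):
    """Closed-form ridge weights: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def pearson_columns(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    denom = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    return (A * B).sum(axis=0) / denom

def encoding_score(X, Y, alpha=1.0, n_folds=5):
    """Cross-validated Pearson R per brain channel (voxel or sensor).

    X: (n_images, n_features) model activations for one layer
    Y: (n_images, n_channels) brain responses to the same images
    alpha and n_folds are illustrative defaults, not values from the paper.
    """
    n = X.shape[0]
    folds = np.array_split(np.arange(n), n_folds)
    scores = []
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        # Standardize features using training statistics only
        mu = X[train_idx].mean(axis=0)
        sd = X[train_idx].std(axis=0) + 1e-8
        X_train = (X[train_idx] - mu) / sd
        X_test = (X[test_idx] - mu) / sd
        # Center targets so no explicit intercept column is needed
        y_mu = Y[train_idx].mean(axis=0)
        W = ridge_fit(X_train, Y[train_idx] - y_mu, alpha)
        scores.append(pearson_columns(X_test @ W + y_mu, Y[test_idx]))
    return np.mean(scores, axis=0)

# Synthetic demo: one response matrix driven by X, one pure noise
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 16))
W_true = rng.standard_normal((16, 3))
Y_signal = X @ W_true + 0.5 * rng.standard_normal((200, 3))
Y_noise = rng.standard_normal((200, 3))

scores_signal = encoding_score(X, Y_signal)
scores_noise = encoding_score(X, Y_noise)
```

In practice the penalty would typically be selected by nested cross-validation rather than fixed, and the score would be computed separately for each model layer and brain channel.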

Multiple DINOv3 variants are trained from scratch, varying in parameter count (Small, Base, Large, Giant, 7B), training steps, and image domain (human-centric, satellite, cellular). Combining fMRI and MEG gives the analysis both spatial and temporal resolution.

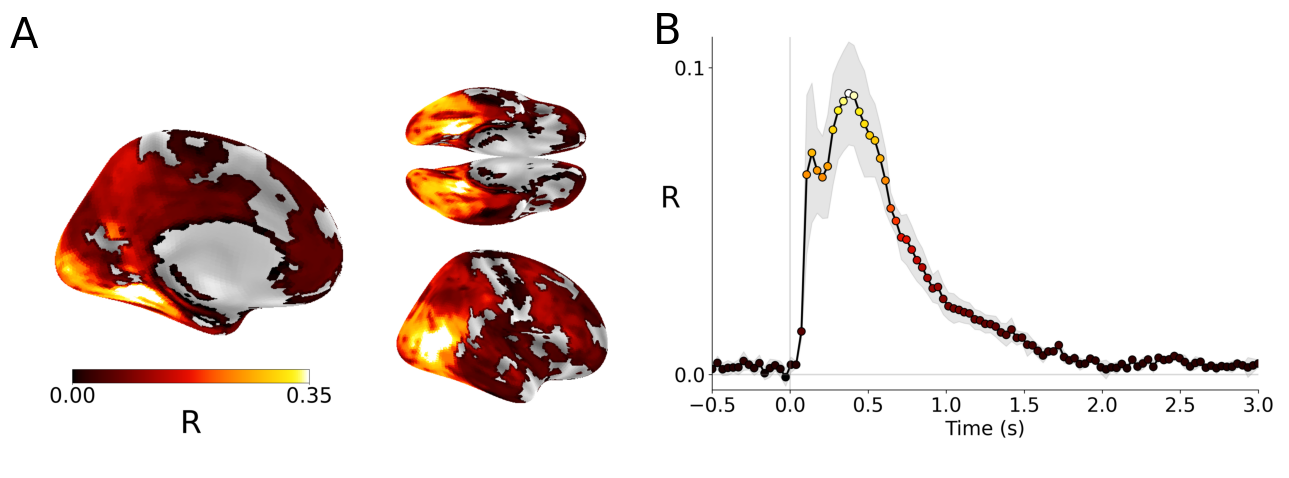

Brain-Model Representational Similarity

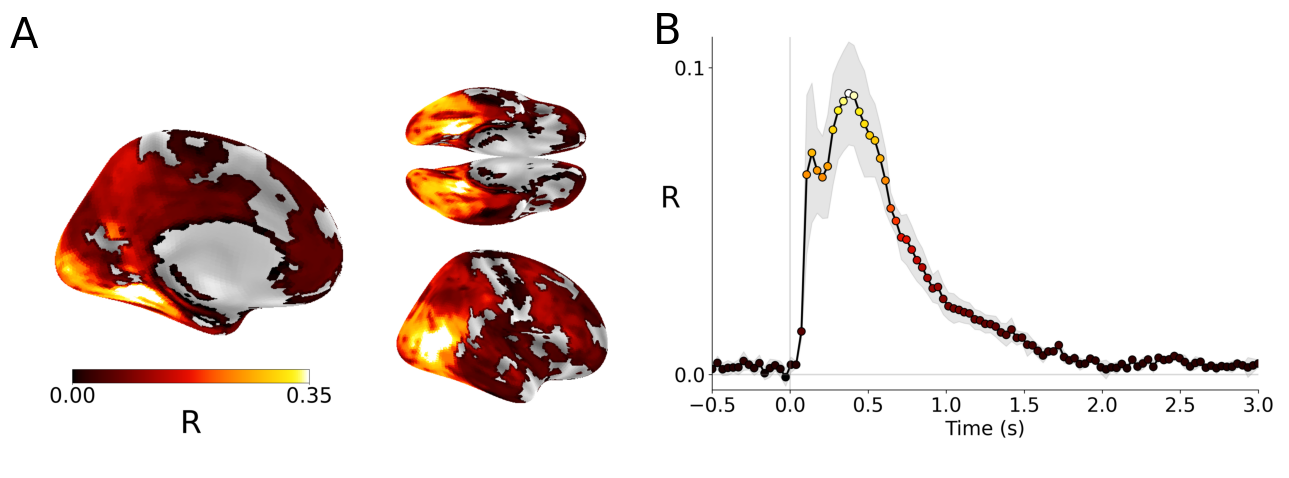

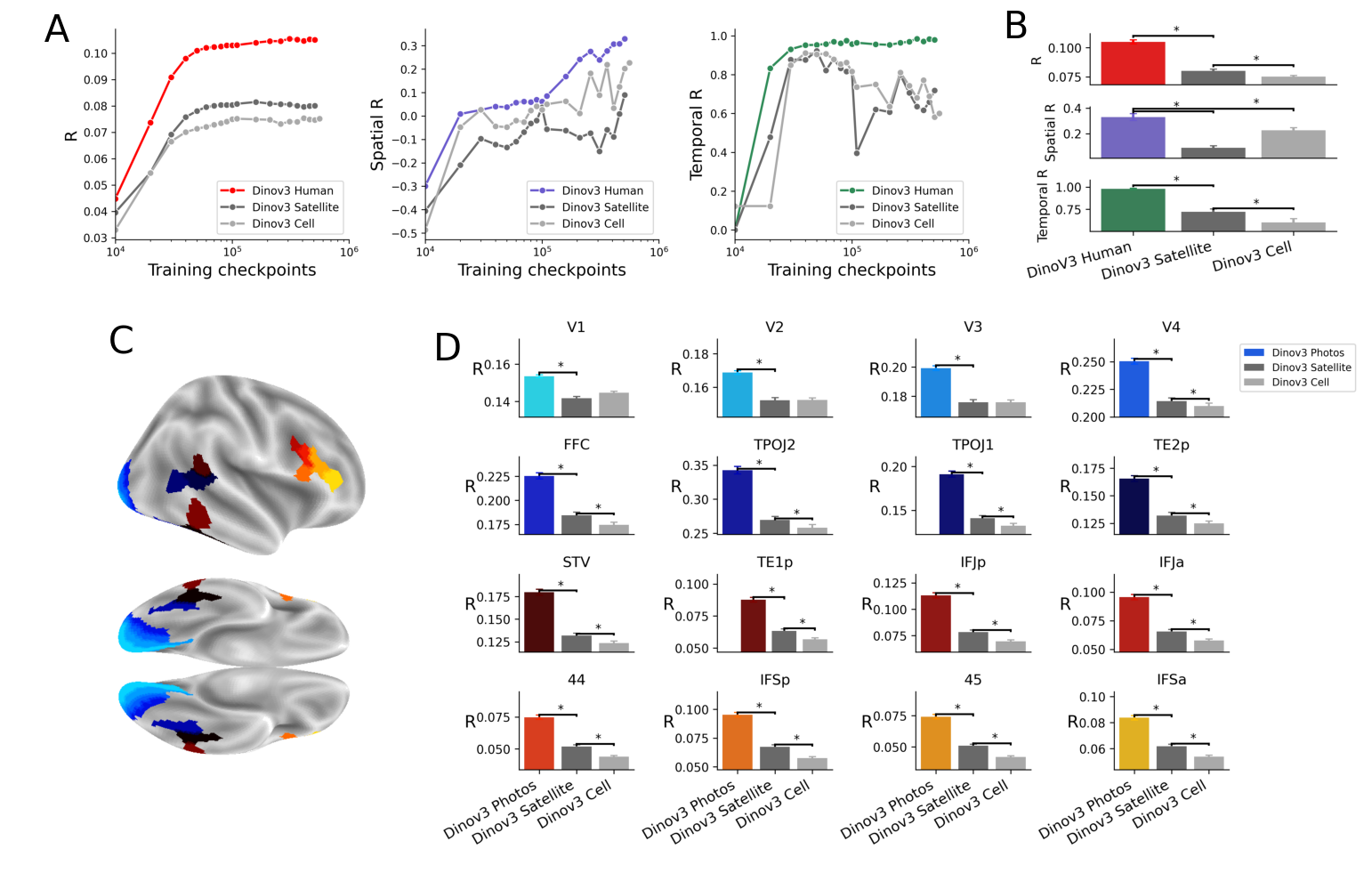

The results confirm robust representational similarity between DINOv3 and the human brain, with encoding scores peaking in the visual pathway (R=0.45±0.039 in fMRI, R=0.09±0.017 in MEG). Notably, predictability extends beyond classical visual regions into prefrontal cortices, challenging the notion that model-brain alignment is confined to sensory areas.

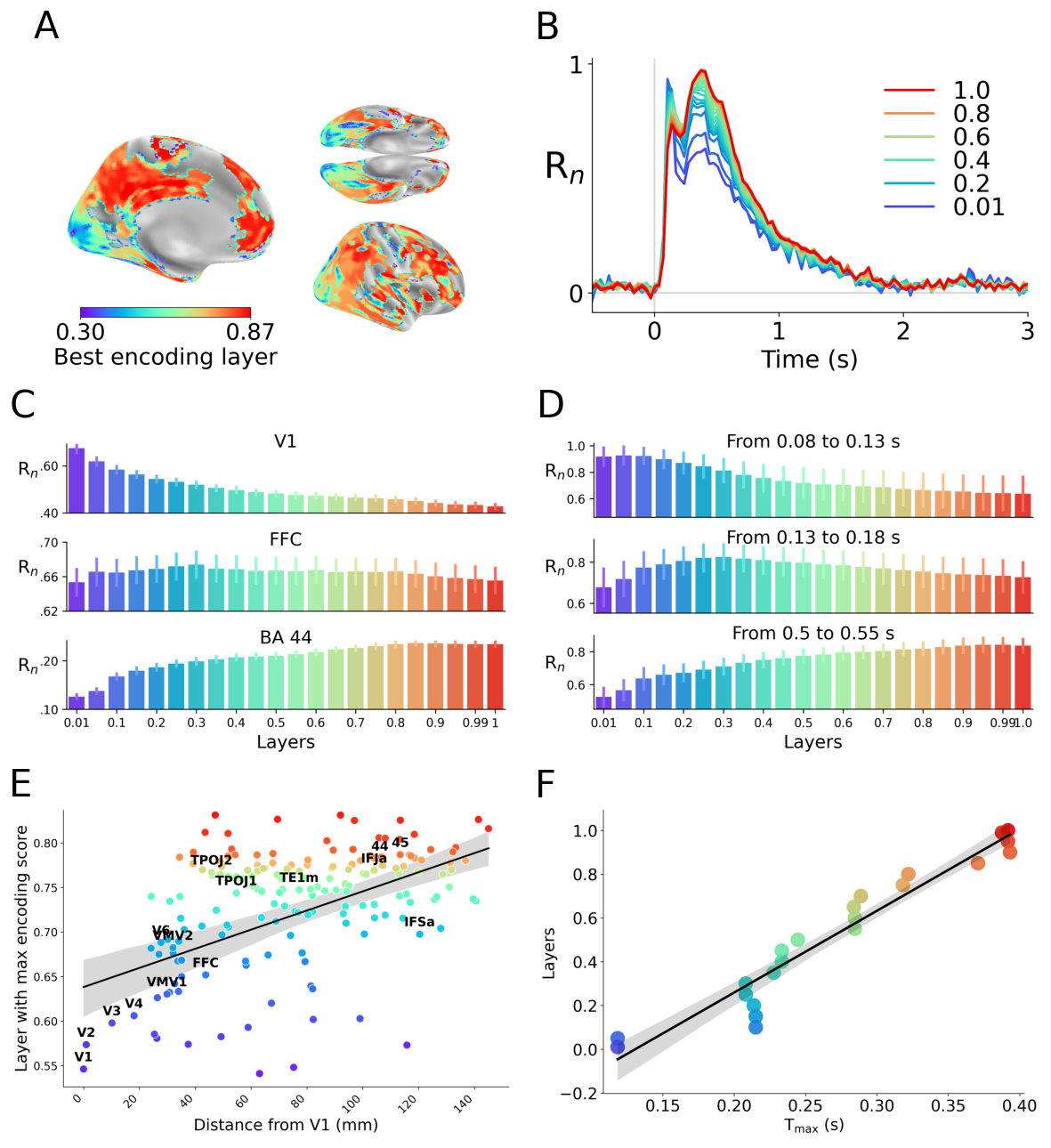

Figure 1: DINOv3 embeddings exhibit significant similarity to fMRI and MEG responses, with peak encoding scores in visual cortices and sustained predictability in prefrontal regions.

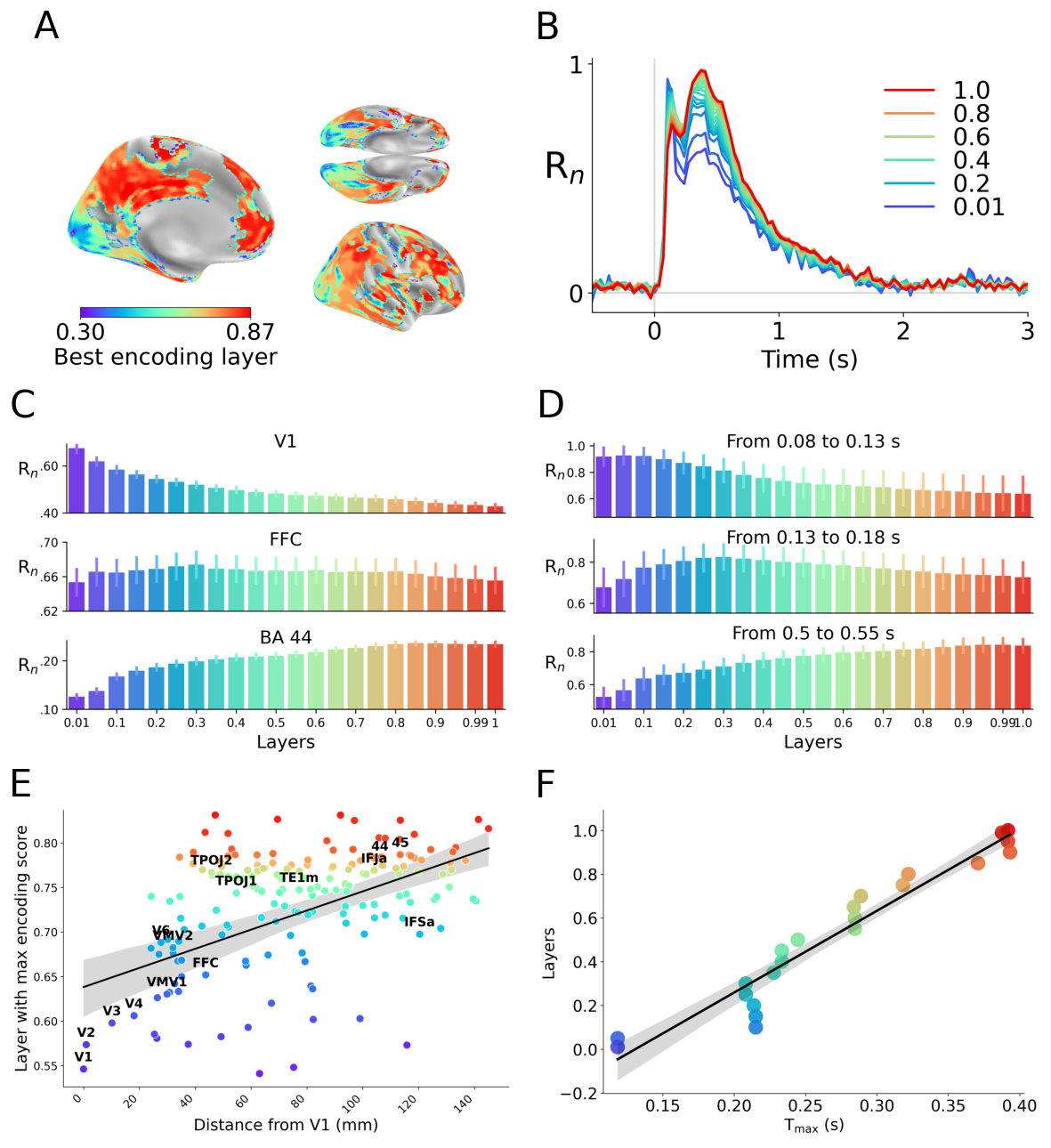

Spatial analysis reveals a hierarchical correspondence: early model layers best predict low-level sensory regions (V1), while deeper layers align with higher-order cortices (e.g., BA44, IFSp). The spatial score (R=0.38, p<1e−6) quantifies this alignment.

Temporal analysis demonstrates that model layer depth tracks the timing of brain responses, with the first and last layers aligning with the earliest and latest MEG signals, respectively (temporal score R=0.96, p<1e−12).
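One plausible reading of the spatial and temporal scores is sketched below. It assumes that each region is assigned its best-predicting layer, that each layer is assigned the time of its peak encoding score, and that both are summarized with a Pearson correlation. The paper's exact procedure may differ; the function names and the synthetic score matrices are hypothetical.

```python
import numpy as np

def spatial_score(scores, dist_from_v1):
    """Correlate each region's best-predicting layer with its distance from V1.

    scores: (n_layers, n_regions) encoding scores
    dist_from_v1: (n_regions,) anatomical distance of each region from V1
    """
    best_layer = scores.argmax(axis=0)
    return np.corrcoef(best_layer, dist_from_v1)[0, 1]

def temporal_score(scores, times):
    """Correlate layer depth with the time of each layer's peak encoding score.

    scores: (n_layers, n_times) encoding scores per MEG time sample
    times: (n_times,) time of each sample
    """
    peak_time = times[scores.argmax(axis=1)]
    layer_depth = np.arange(scores.shape[0])
    return np.corrcoef(layer_depth, peak_time)[0, 1]

# Synthetic demo: deeper layers prefer more distant regions and later times
n_layers = 12
layers = np.arange(n_layers)[:, None]

dist = np.linspace(0.0, 70.0, 8)                 # made-up distances
preferred_layer = dist / dist.max() * (n_layers - 1)
region_scores = np.exp(-(layers - preferred_layer[None, :]) ** 2 / (2 * 2.0 ** 2))

times = np.linspace(0.0, 0.5, 51)                # made-up time axis
peak = 0.1 + 0.02 * np.arange(n_layers)          # made-up peak latencies
time_scores = np.exp(-(times[None, :] - peak[:, None]) ** 2 / (2 * 0.03 ** 2))

s_score = spatial_score(region_scores, dist)
t_score = temporal_score(time_scores, times)
```

Taking the argmax is the simplest way to assign a preferred layer or peak time; a score-weighted average would be a smoother alternative when the curves are noisy.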

Figure 2: The representational hierarchy of DINOv3 mirrors the anatomical and temporal hierarchy of the cortex, with significant correlations in both spatial and temporal scores.

Developmental Trajectories and Training Dynamics

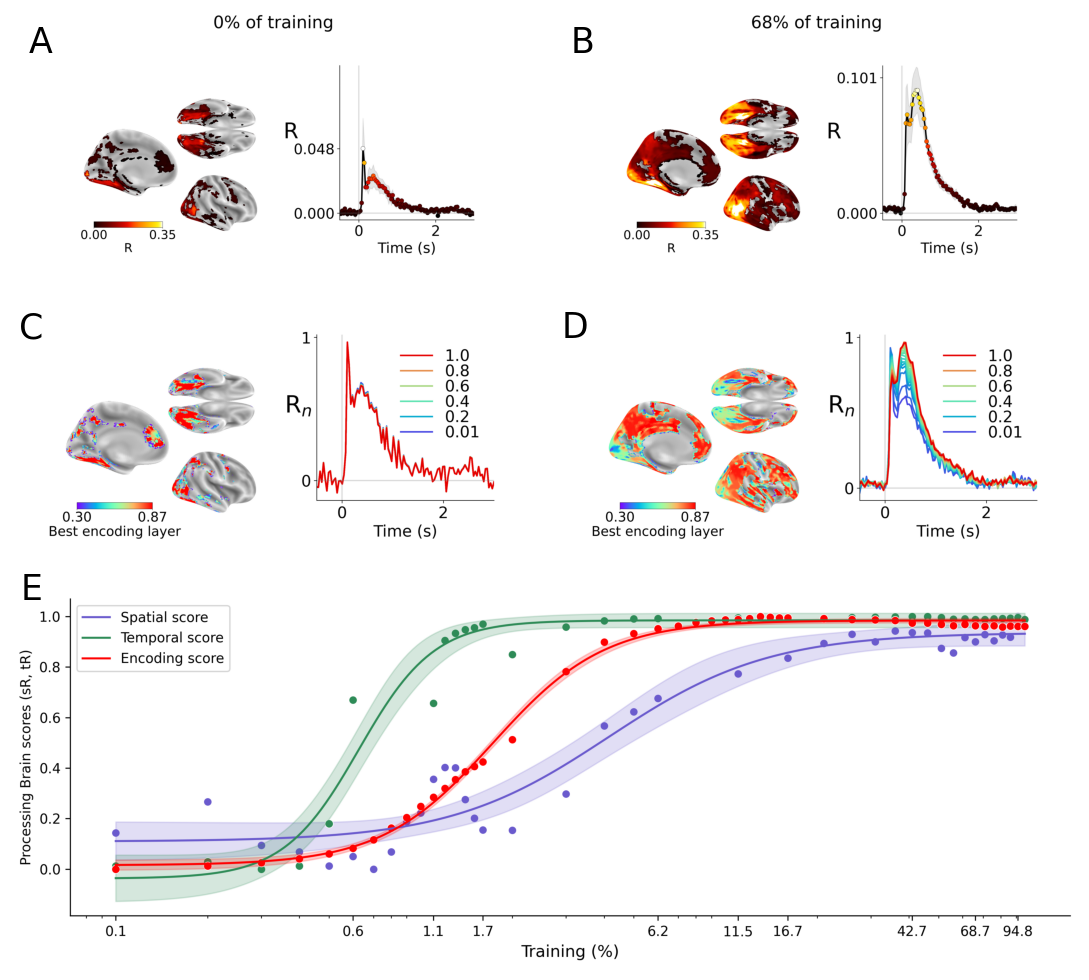

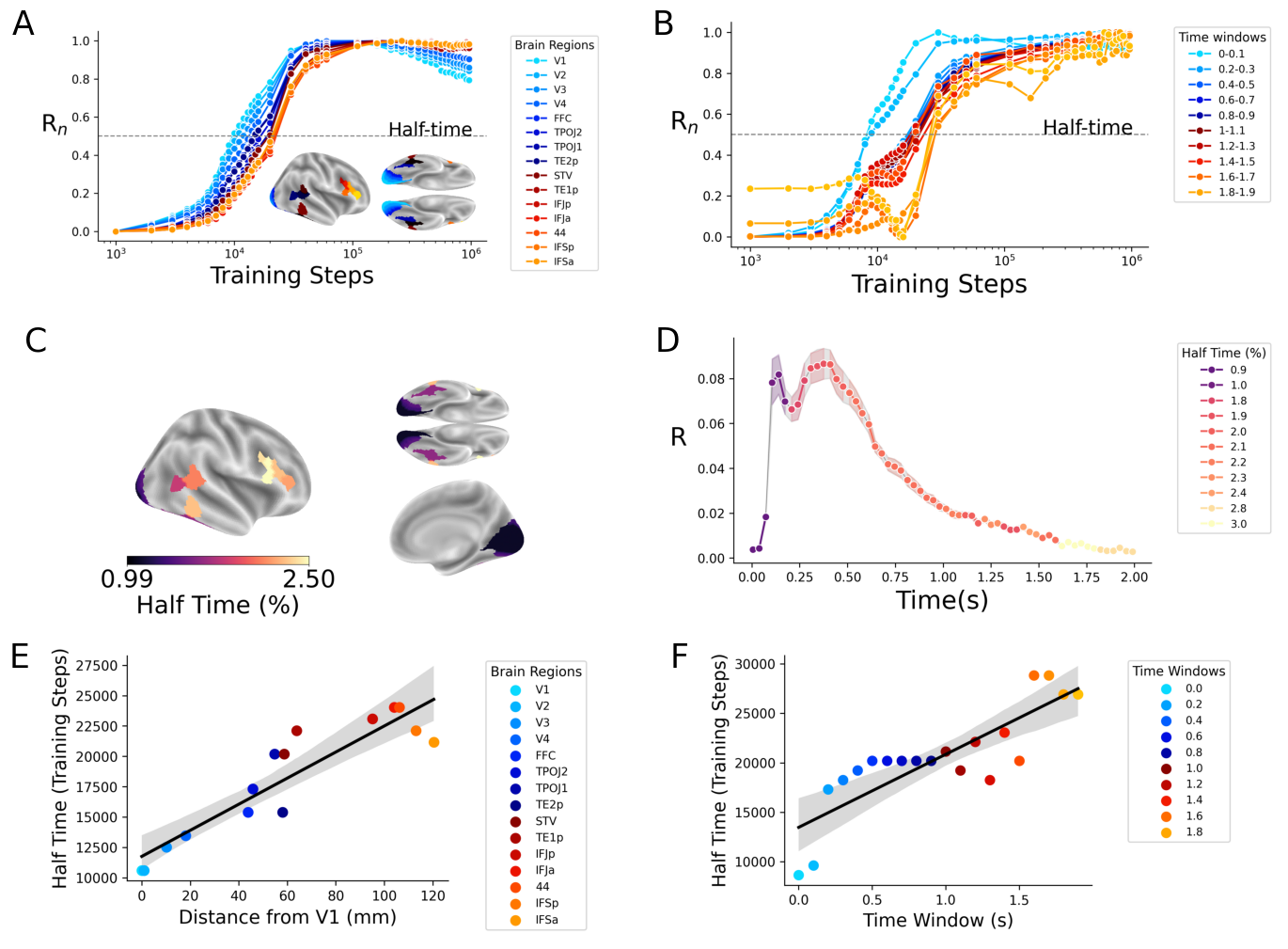

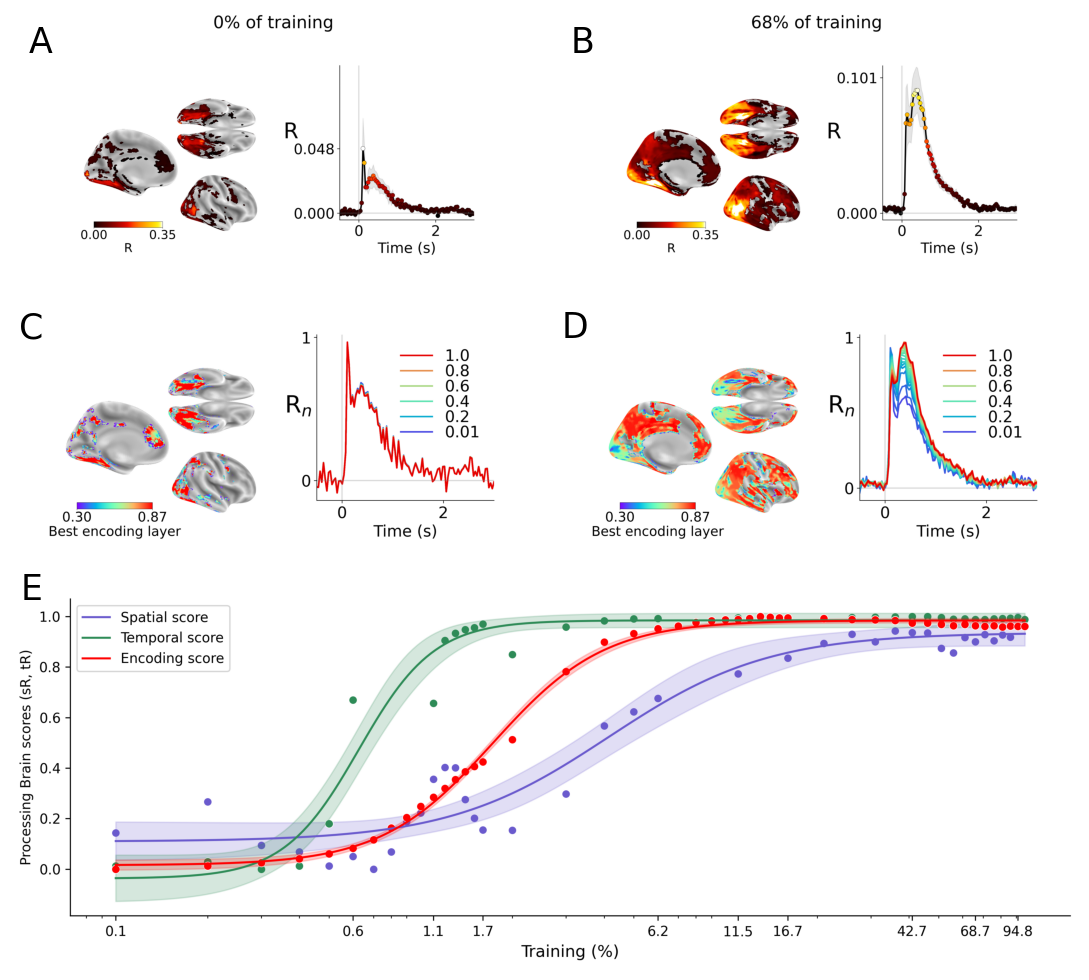

A key contribution is the characterization of the developmental trajectory of brain-like representations in DINOv3. The encoding, spatial, and temporal scores do not emerge simultaneously:

- Encoding scores reach half-maximal values early in training (~2% of total steps).

- Temporal scores emerge even faster (~0.7%).

- Spatial scores require more extensive training (~4%).

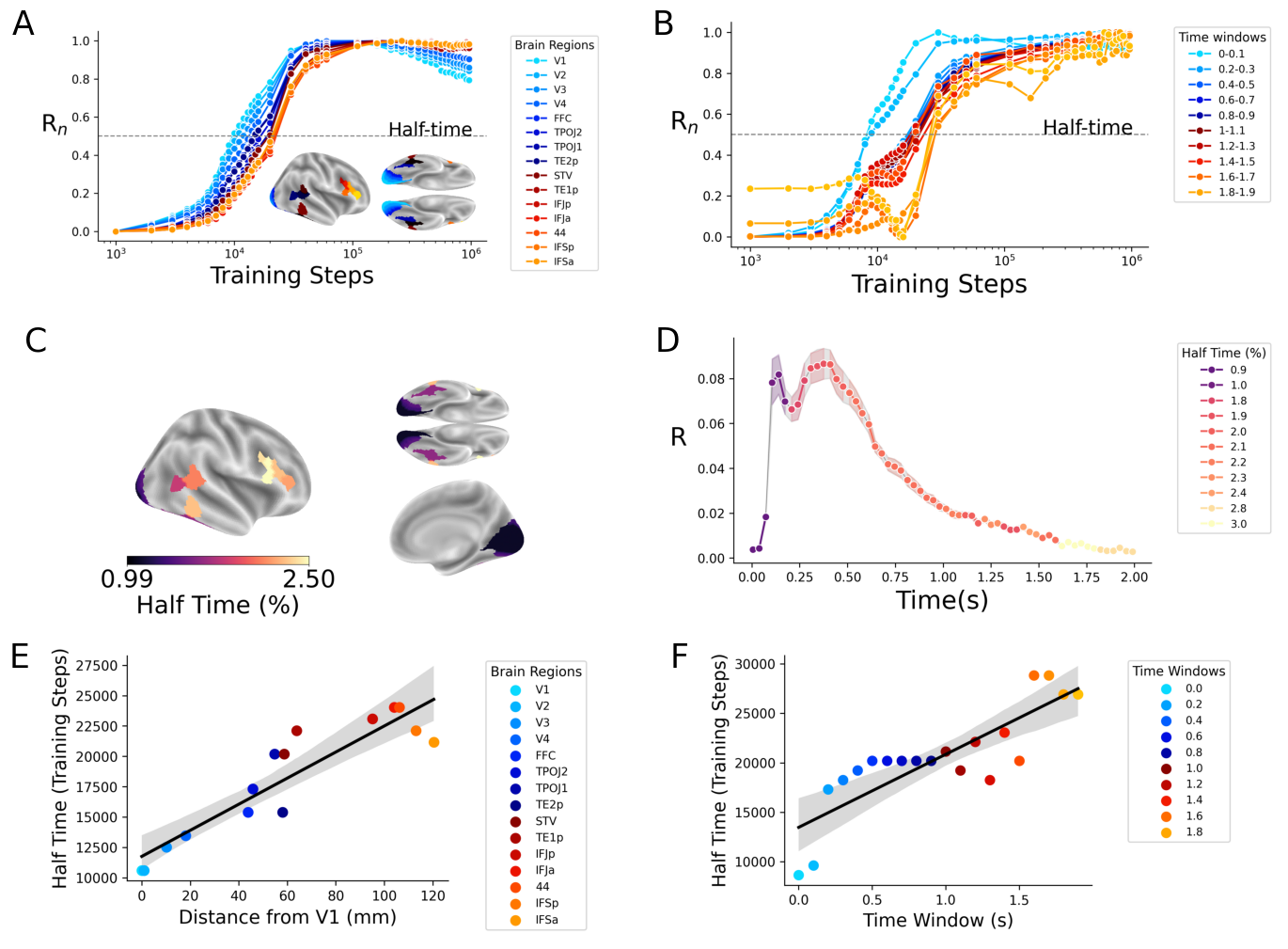

Low-level visual regions and early MEG windows converge rapidly, while high-level cortices and late MEG windows require substantially more training. The half time, meaning the fraction of training needed to reach a half-maximal score, correlates with anatomical location (R=0.91, p<1e−5) and with temporal peak (R=0.84, p<1e−5), which underscores the staged acquisition of representations.
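The half time can be estimated from a score curve measured at successive checkpoints. The sketch below assumes that "half-maximal" means half of the final-checkpoint score and that linear interpolation between checkpoints is acceptable; both are illustrative choices, and `half_time` and the two example curves are hypothetical.

```python
import numpy as np

def half_time(train_fraction, scores):
    """Fraction of training at which `scores` first reaches half its final value.

    train_fraction: increasing checkpoint positions in [0, 1]
    scores: score measured at each checkpoint
    Interpolates linearly between the two checkpoints around the crossing.
    """
    train_fraction = np.asarray(train_fraction, dtype=float)
    scores = np.asarray(scores, dtype=float)
    if scores[-1] <= 0:
        raise ValueError("final score must be positive to define a half time")
    target = 0.5 * scores[-1]
    i = np.nonzero(scores >= target)[0][0]   # first checkpoint at or above target
    if i == 0:
        return train_fraction[0]
    x0, x1 = train_fraction[i - 1], train_fraction[i]
    y0, y1 = scores[i - 1], scores[i]
    return x0 + (target - y0) * (x1 - x0) / (y1 - y0)

# Made-up curves: one fast-converging region, one slow-converging region
fractions = [0.0, 0.01, 0.02, 0.05, 0.1, 0.5, 1.0]
early_region = [0.0, 0.25, 0.30, 0.38, 0.40, 0.40, 0.40]
late_region = [0.0, 0.02, 0.05, 0.10, 0.15, 0.35, 0.40]

half_early = half_time(fractions, early_region)
half_late = half_time(fractions, late_region)
```

Computing one half time per region or per MEG window yields a vector that can then be correlated with anatomical position or peak latency, for example with `np.corrcoef`.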

Figure 3: Training progression reveals rapid emergence of low-level alignment and delayed convergence for high-level regions and late temporal windows.

Figure 4: The half time of representational alignment varies systematically across brain regions and temporal windows, reflecting staged developmental trajectories.

Impact of Model Size and Image Domain

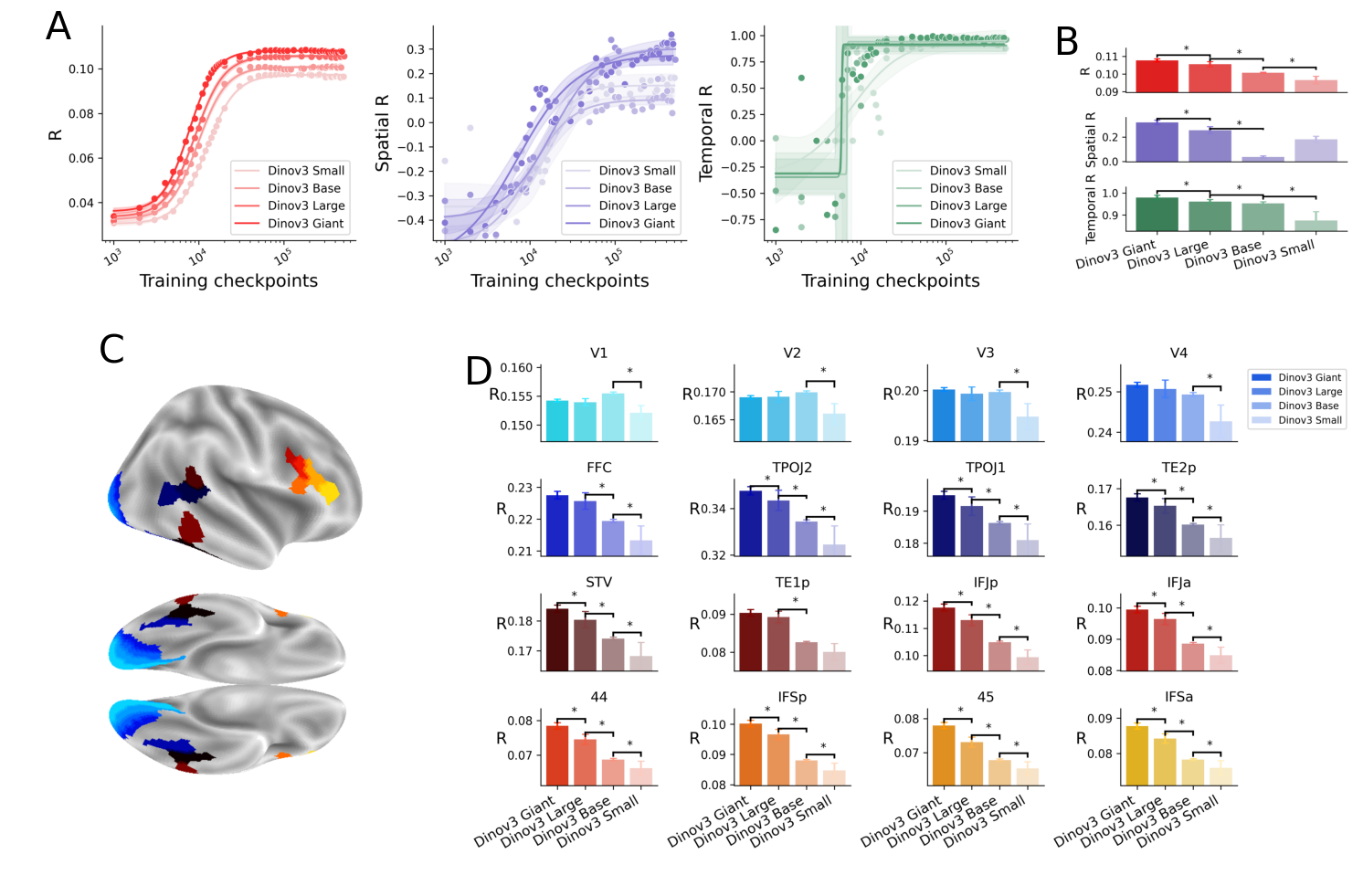

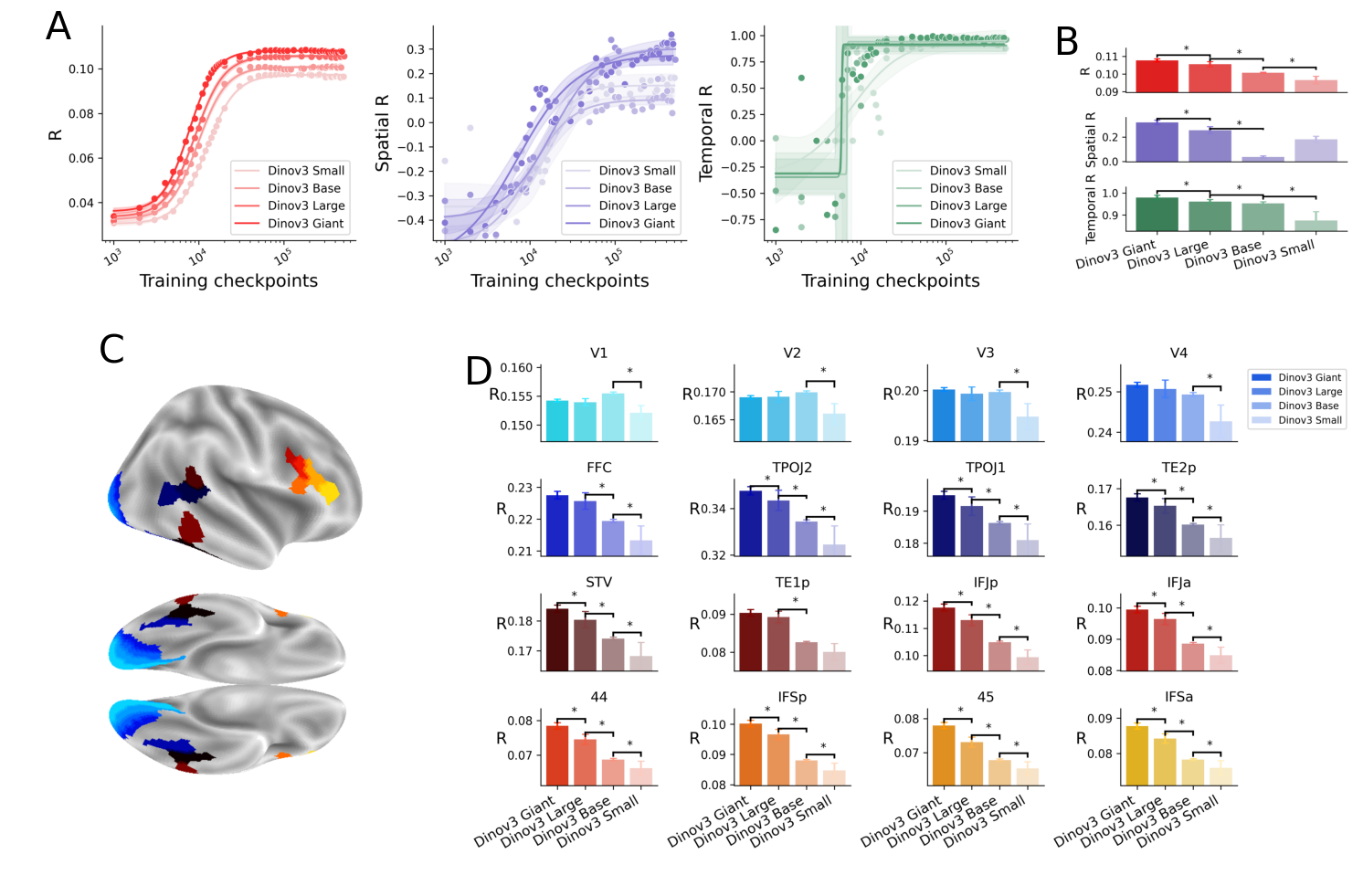

Model size has a systematic effect on convergence: encoding scores increase monotonically with parameter count (Giant: R=0.107 > Large: R=0.105 > Base: R=0.101 > Small: R=0.096, p<1e−3), and larger models better encode higher-order cortices. The effect is less pronounced in early visual areas, indicating that architectural capacity is most critical for complex, high-level representations.

Figure 5: Larger DINOv3 models yield higher encoding, spatial, and temporal scores, especially in higher-order cortical regions.

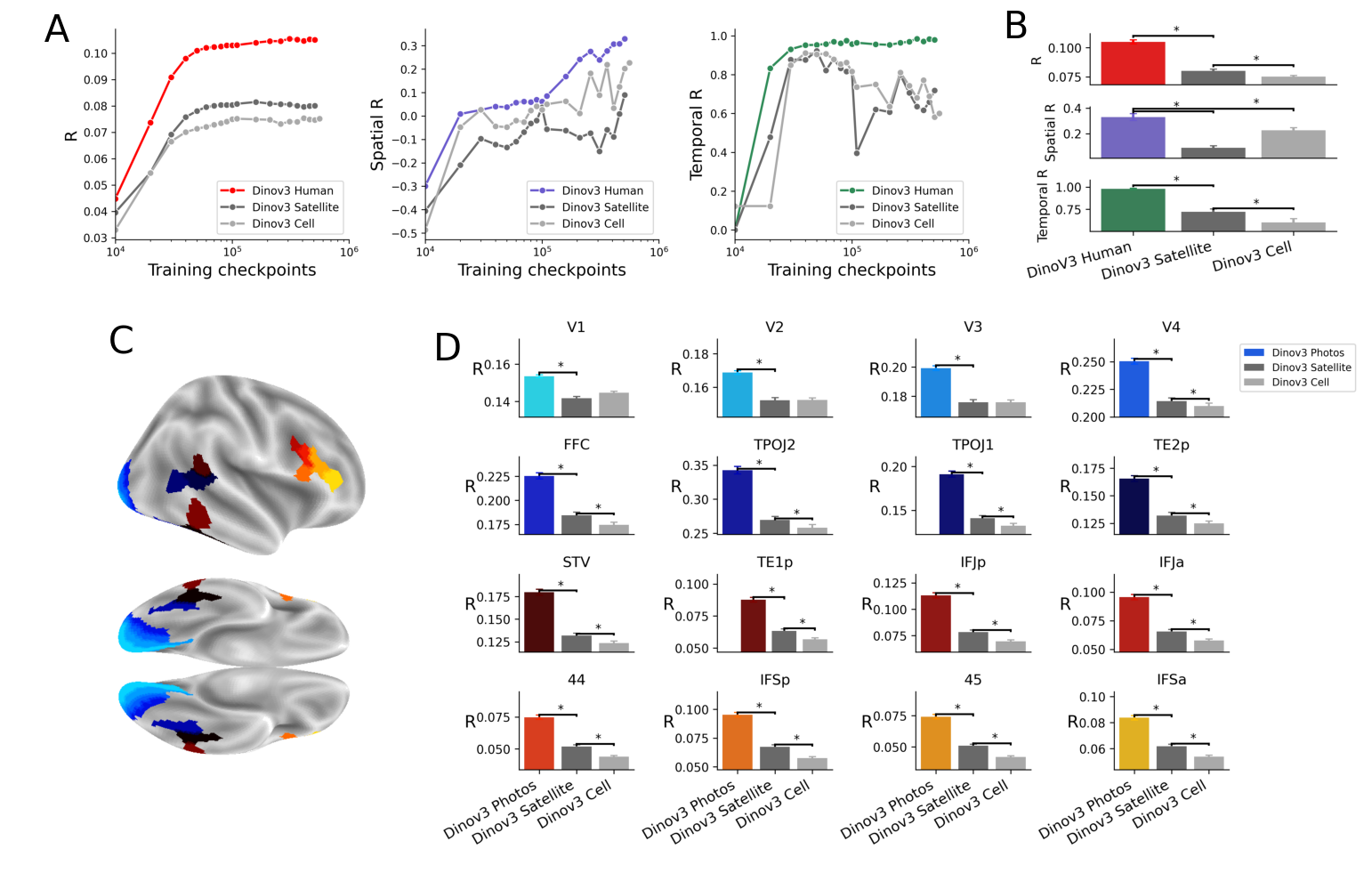

Image domain also modulates convergence. Models trained on human-centric images outperform those trained on satellite or cellular images across all metrics (p<1e−3), with the effect observed in both low-level and high-level regions. This suggests that the ecological validity of training data is essential for full representational alignment.

Figure 6: Human-centric image training leads to superior brain-model similarity compared to satellite or cellular domains.

Relationship to Cortical Properties

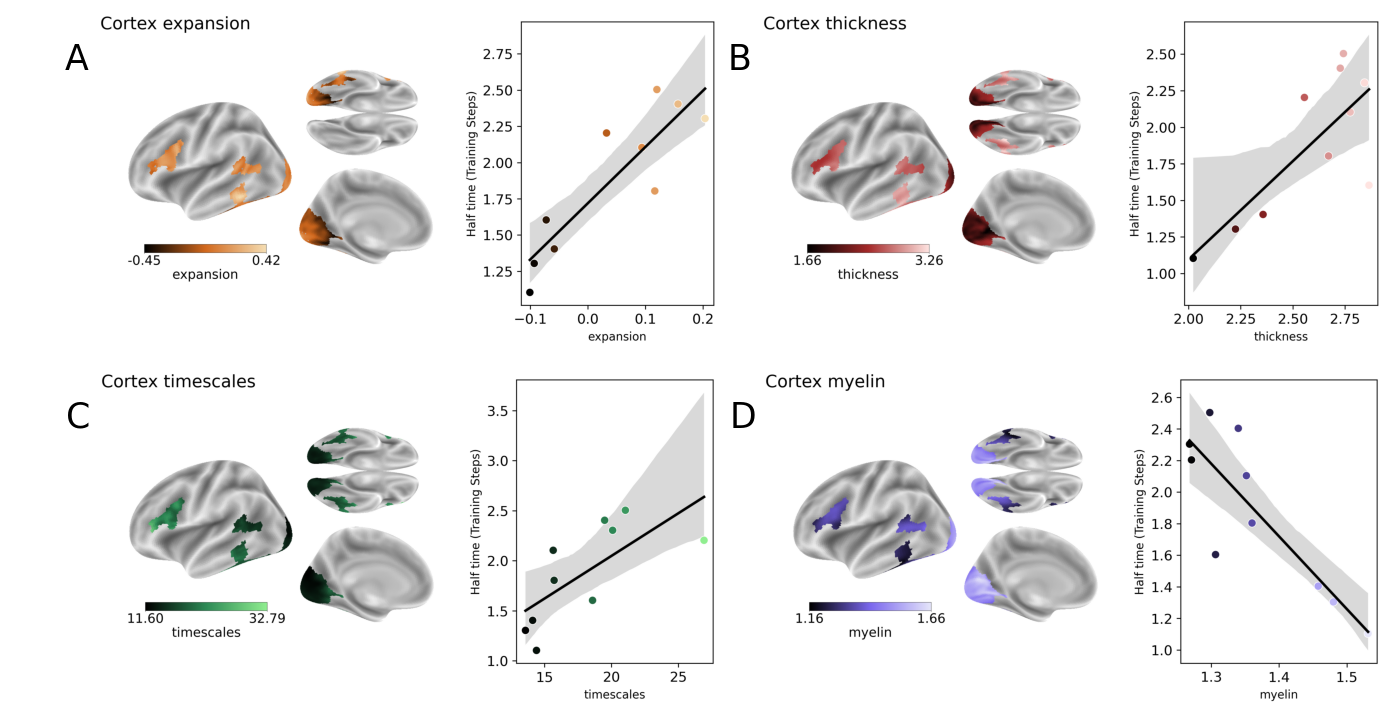

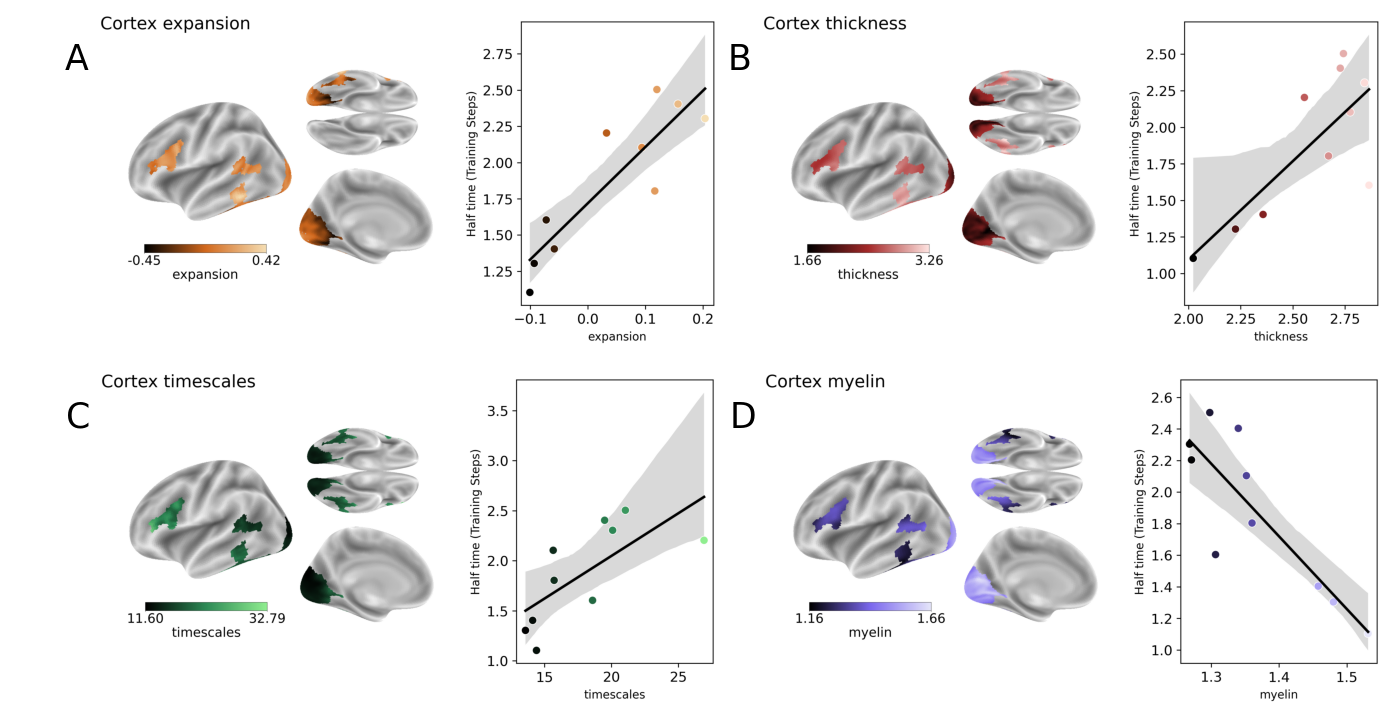

The developmental trajectory of representational alignment in DINOv3 is strongly indexed by structural and functional cortical properties:

- Cortical Expansion: Regions with greater developmental growth exhibit longer half times (R=0.88, p<1e−3).

- Cortical Thickness: Thicker regions align later (R=0.77, p<1e−2).

- Intrinsic Timescales: Regions with slower dynamics require more training (R=0.71, p=0.022).

- Myelin Concentration: Higher myelination correlates with shorter half times (R=−0.85, p=1e−3).

Figure 7: The speed of representational convergence in DINOv3 is predicted by cortical expansion, thickness, timescale, and myelination.

Theoretical and Practical Implications

The findings provide empirical support for the interaction between architectural potential and experiential data in shaping representational convergence. The staged acquisition of brain-like representations in DINOv3 parallels the ontogeny of the human cortex, with early sensory areas maturing rapidly and associative cortices developing slowly. This suggests that artificial models can serve as computational analogs for studying cortical development and the principles underlying hierarchical information processing.

The extension of model-brain alignment into prefrontal regions and the dependence on ecologically valid data challenge simplistic views of convergence and highlight the necessity of both scale and domain relevance in model training. The results also inform debates on nativism versus empiricism, demonstrating that both innate architectural capacity and experiential input are necessary for full alignment.

Limitations and Future Directions

The paper is limited to a single family of hierarchical, self-supervised vision transformers. Generalization to other architectures and modalities remains an open question. The reliance on adult brain data precludes developmental analyses, and the coarse resolution of fMRI and MEG may obscure fine-grained mechanisms. Future work should extend these analyses to other model families, developmental cohorts, and higher-resolution neural recordings.

Conclusion

This paper provides a rigorous, multi-factorial analysis of the convergence between artificial vision models and the human brain, demonstrating that model size, training duration, and image domain independently and interactively shape representational alignment. The staged emergence of brain-like representations in DINOv3 mirrors cortical development and is indexed by structural and functional properties of the cortex. These findings establish a framework for using artificial models to probe the organizing principles of biological vision and inform the design of future AI systems that more closely emulate human cognition.