- The paper introduces GANGRL-LLM, a semi-supervised framework merging GANs and LLMs to generate high-quality malcode in few-shot learning scenarios.

- The framework employs a collaborative training loop with dynamic reward shaping and feature matching to stabilize generation and improve detection performance.

- Experimental results show improved IDS detection accuracy and higher generation scores than baseline training, with strong transferability across models and attack types.

High-Quality Malcode Generation from Few Samples: The GANGRL-LLM Framework

Introduction and Motivation

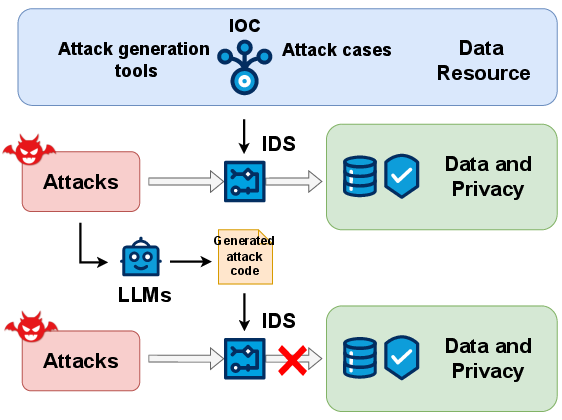

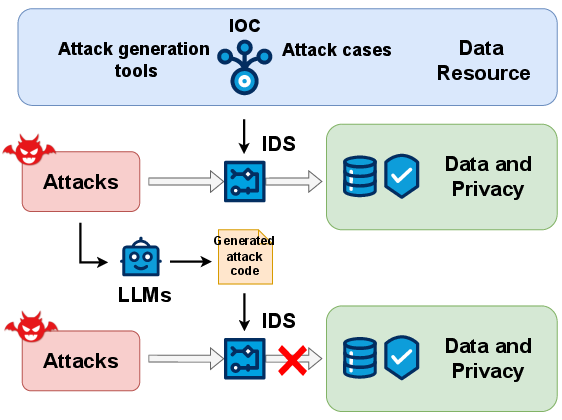

The paper addresses the acute challenge of training robust Intrusion Detection Systems (IDS) when labeled malicious samples are scarce. Traditional sources of attack data (real-world incidents, open-source generators, and threat intelligence) are limited by privacy, legal, and timeliness constraints, so they rarely provide enough diversity or volume for effective model training. The authors propose GANGRL-LLM, a semi-supervised framework that combines Generative Adversarial Networks (GANs) with large language models (LLMs) to generate high-quality malicious code (malcode) and improve detection capabilities in few-shot learning scenarios.

Figure 1: Motivation for high-quality malcode generation to address the scarcity and diversity limitations of real-world attack samples.

Framework Architecture

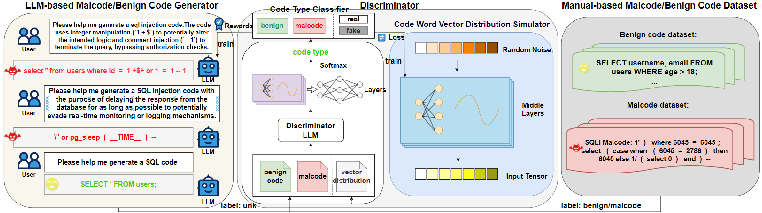

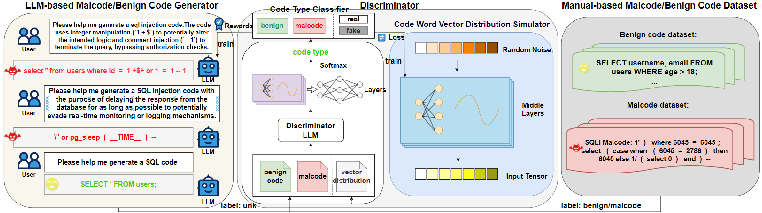

GANGRL-LLM consists of a collaborative training loop between a GAN-based discriminator and an LLM-based generator. The generator, initialized from Qwen2.5Coder, produces SQL injection (SQLi) code snippets from prompts. The discriminator, built on GAN-BERT, distinguishes between benign and malicious samples and provides dynamic reward signals to guide the generator. The framework incorporates contrastive constraints to preserve semantic consistency and prevent mode collapse, enabling stable and effective bidirectional learning even under extreme data scarcity.

Figure 2: Overview of the GANGRL-LLM framework, illustrating the adversarial and collaborative training between the LLM generator and GAN-based discriminator.

Methodological Details

Discriminator Design

The discriminator integrates a code word vector distribution simulator and a multi-layer perceptron (MLP) classifier. The simulator generates fake samples from random noise to enhance diversity, while the classifier performs (k+1)-class classification: the k real classes (here benign and SQLi) plus an extra "fake" class for simulated samples. The loss combines a supervised component, which penalizes misclassification of labeled samples, and an unsupervised component, which improves the detection of fake samples. Feature matching regularization keeps the simulated distribution aligned with real data.
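Because the discriminator is built on GAN-BERT, its objective can be sketched in the GAN-BERT style. This is a minimal pure-Python sketch, assuming the standard GAN-BERT formulation (cross-entropy over the k real classes, an unsupervised real-versus-fake term on the extra class, and mean-squared feature matching); the paper's exact terms and weighting may differ. The function names and the label indices (0 = benign, 1 = SQLi, 2 = fake) are hypothetical.

```python
import math
from typing import List, Sequence

# Assumed GAN-BERT-style formulation; not taken from the paper itself.
EPS = 1e-8  # numerical guard inside log()
BENIGN, SQLI, FAKE = 0, 1, 2  # hypothetical indices: k = 2 real classes + "fake"


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def supervised_loss(labeled_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Cross-entropy over the k real classes; the fake-class logit is dropped."""
    total = 0.0
    for logits, y in zip(labeled_logits, labels):
        probs = softmax(list(logits)[:FAKE])
        total -= math.log(probs[y] + EPS)
    return total / len(labels)


def unsupervised_loss(real_logits: Sequence[Sequence[float]],
                      fake_logits: Sequence[Sequence[float]]) -> float:
    """Push real samples away from the fake class and simulated samples toward it."""
    real_term = sum(-math.log(1.0 - softmax(l)[FAKE] + EPS) for l in real_logits)
    fake_term = sum(-math.log(softmax(l)[FAKE] + EPS) for l in fake_logits)
    return real_term / len(real_logits) + fake_term / len(fake_logits)


def feature_matching_loss(real_feats: Sequence[Sequence[float]],
                          fake_feats: Sequence[Sequence[float]]) -> float:
    """Mean squared gap between the average real and average simulated feature vectors."""
    dim = len(real_feats[0])
    mean_real = [sum(f[d] for f in real_feats) / len(real_feats) for d in range(dim)]
    mean_fake = [sum(f[d] for f in fake_feats) / len(fake_feats) for d in range(dim)]
    return sum((a - b) ** 2 for a, b in zip(mean_real, mean_fake)) / dim


def discriminator_loss(labeled_logits, labels, real_logits, fake_logits) -> float:
    """Supervised + unsupervised components, as described for the discriminator."""
    return supervised_loss(labeled_logits, labels) + unsupervised_loss(real_logits, fake_logits)
```

Under these assumptions, the simulator would add `feature_matching_loss` to its own objective as a regularizer, so its samples match the average statistics of real code features rather than only fooling the classifier.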

Generator Training

The generator is optimized using a composite loss: cross-entropy anchors the model to the ground truth, while a policy gradient term leverages the discriminator's log-probability output as a reward. Adaptive reward weighting decays the influence of the discriminator over training epochs, stabilizing learning and preventing overfitting to adversarial feedback. This protocol enables the generator to produce increasingly sophisticated and attack-like code structures.
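The composite objective can be illustrated numerically. This is a minimal sketch, assuming an exponential decay schedule for the reward weight and a batch-mean reward baseline (a common variance-reduction choice); neither detail, nor the names `reward_weight` and `generator_loss` or their default values, comes from the paper. Values are plain floats here, whereas a real implementation would compute them as tensors so that gradients flow through the sequence log-probabilities.

```python
from typing import Sequence


def reward_weight(epoch: int, initial: float = 1.0, decay: float = 0.9) -> float:
    """Hypothetical schedule: the discriminator's influence shrinks geometrically per epoch."""
    return initial * decay ** epoch


def generator_loss(
    ce_loss: float,
    seq_log_probs: Sequence[float],   # log-probability of each sampled sequence under the generator
    disc_log_probs: Sequence[float],  # discriminator log-probability output, used as the reward
    epoch: int,
    initial: float = 1.0,
    decay: float = 0.9,
) -> float:
    """Cross-entropy anchor plus a decayed REINFORCE-style policy-gradient term."""
    # Assumed baseline: the batch-mean reward, so only above/below-average samples move.
    baseline = sum(disc_log_probs) / len(disc_log_probs)
    pg = -sum(
        (r - baseline) * lp for r, lp in zip(disc_log_probs, seq_log_probs)
    ) / len(disc_log_probs)
    return ce_loss + reward_weight(epoch, initial, decay) * pg
```

When all rewards are equal, the advantage is zero and only the cross-entropy term remains; as `epoch` grows, the policy-gradient contribution fades, so the ground-truth anchor increasingly dominates.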

Training Protocol

The training loop alternates between generator and discriminator updates. Generated samples are labeled "unk" (unknown), so the discriminator treats them as unlabeled data during its update, while the generator receives reward signals derived from the discriminator's output. Gradient clipping and dynamic reward decay keep training stable and help it converge.
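The alternation can be outlined as a skeleton loop. This is a sketch under stated assumptions: the callables `generate`, `update_discriminator`, `score`, and `update_generator` are hypothetical placeholders for the model-specific steps, the reward decay is assumed to be exponential, and clipping is shown as global L2-norm clipping on a flat list of gradient values.

```python
import math
from typing import Callable, List, Sequence, Tuple


def clip_by_global_norm(grads: Sequence[float], max_norm: float) -> Tuple[List[float], float]:
    """Rescale gradients so their L2 norm is at most max_norm; return (clipped, original_norm)."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads), norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm


def train(
    prompts: Sequence[str],
    epochs: int,
    generate: Callable[[str], str],                                 # hypothetical LLM generator step
    update_discriminator: Callable[[List[Tuple[str, str]]], None],  # hypothetical discriminator step
    score: Callable[[str], float],                                  # hypothetical: discriminator log-prob
    update_generator: Callable[[List[str], List[float], float], None],  # hypothetical generator step
    initial_weight: float = 1.0,
    decay: float = 0.9,
) -> List[float]:
    """Alternate discriminator and generator updates; return the reward weight used per epoch."""
    weights: List[float] = []
    for epoch in range(epochs):
        samples = [generate(p) for p in prompts]
        # Generated samples enter the discriminator update with the "unk" label.
        update_discriminator([(s, "unk") for s in samples])
        rewards = [score(s) for s in samples]
        weight = initial_weight * decay ** epoch  # assumed exponential reward decay
        update_generator(samples, rewards, weight)
        weights.append(weight)
    return weights
```

In a full implementation, both update steps would pass their gradients through a clipping routine such as `clip_by_global_norm` before each optimizer step.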

Experimental Results

Malcode Generation Quality

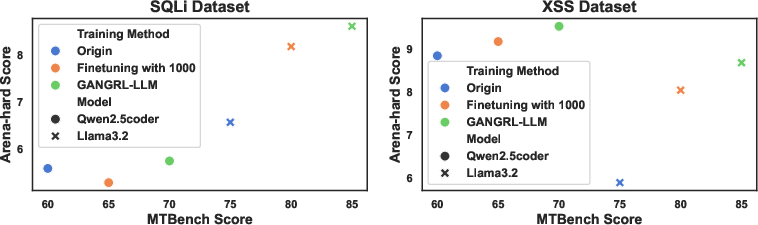

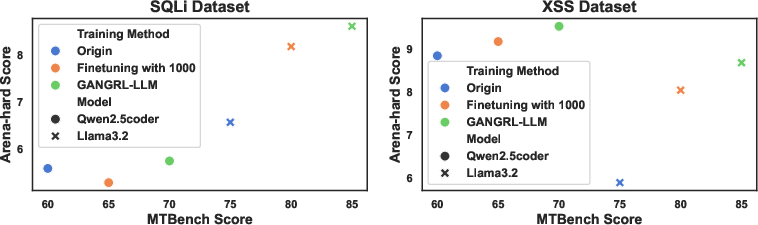

A comparison of three Qwen2.5Coder variants (no fine-tuning, standard fine-tuning, and GANGRL-LLM training) on 100 SQLi prompts shows that GANGRL-LLM consistently improves generation scores, with the largest gains in low-data regimes. The framework maintains performance with limited labeled samples, and its relative advantage shrinks as the volume of training data grows.

Figure 3: Scores for different models trained on SQLi and XSS datasets using various training methods, highlighting the superior performance of GANGRL-LLM in few-shot settings.

Models trained on datasets augmented with GANGRL-LLM-generated samples show improved accuracy, precision, recall, and F1 scores across CNN, Naive Bayes, SVM, KNN, and Decision Tree classifiers. The discriminator itself achieves a recall of 99.9%, outperforming established detection methods (Gamma-TF-IDF, EP-CNN, ASTNN, Trident) despite using fewer training samples.
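For reference, the four detection metrics follow the standard confusion-matrix definitions, with the malicious class treated as positive. The helper name `detection_metrics` and the counts used to exercise it are illustrative only, not figures from the paper.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with malicious samples as the positive class."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # 2*TP / (2*TP + FP + FN) equals the harmonic mean of precision and recall.
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return {"accuracy": (tp + tn) / total, "precision": precision, "recall": recall, "f1": f1}
```

Read this way, a recall of 99.9% means roughly 1 in 1,000 malicious test samples is missed (a false negative); recall alone says nothing about false positives, which is why precision and F1 are reported alongside it.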

Ablation Studies

Ablation experiments reveal that removing the discriminator or simulator components leads to significant performance degradation, underscoring the importance of adversarial learning and feature matching. The full model achieves the highest generation score, with each component contributing to stability and effectiveness under data scarcity.

Transferability

GANGRL-LLM demonstrates strong transferability across models (Llama3.2, Qwen2.5Coder) and attack types (SQLi, XSS), maintaining high generation and detection performance with limited samples. This adaptability is critical for practical deployment in diverse security domains.

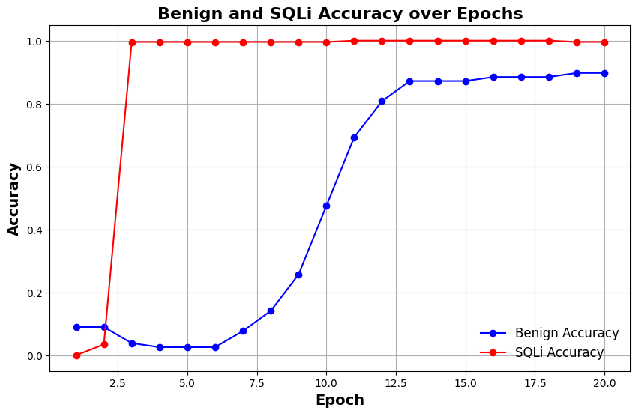

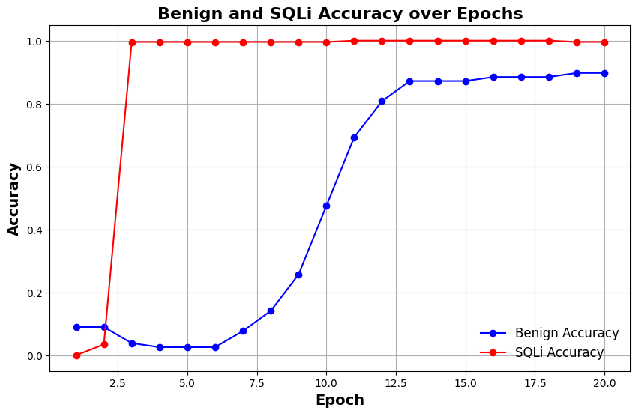

Training Dynamics

Accuracy improves steadily with training epochs, even with few labeled samples, indicating effective learning and generalization. The framework's reward mechanism ensures that generated code adheres to prompt requirements and exhibits complex, realistic attack patterns.

Figure 4: Training accuracy progression, demonstrating stable improvement in detection capability with limited labeled samples.

Comparative Analysis

GANGRL-LLM outperforms RL-based, Codex+RLHF, and MixMatch semi-supervised baselines in both malcode generation and detection tasks. The GAN-based reward mechanism provides denser and more effective feedback than sparse RL signals, resulting in higher-quality code and better model generalization.

Practical and Theoretical Implications

The framework enables the synthesis of high-quality, diverse malicious samples from few labeled instances, directly addressing the bottleneck in IDS training. Its modular design allows integration with various LLMs and discriminators, facilitating rapid adaptation to emerging threats. The approach is resource-efficient, requiring fewer labeled samples and computational resources than traditional methods.

Theoretically, the work advances semi-supervised adversarial learning by demonstrating effective co-training of generator and discriminator in discrete code generation tasks. The use of dynamic reward shaping and feature matching regularization contributes to stable training and improved sample diversity.

Limitations and Future Directions

While GANGRL-LLM enhances detection and generation capabilities, further refinement of the reward mechanism is needed to optimize generator learning. Extending the framework to multi-domain malcode generation and integrating samples from diverse security domains could yield a more versatile and comprehensive defense tool. Future research should explore automated domain adaptation, continual learning, and integration with real-time threat intelligence.

Conclusion

GANGRL-LLM presents a robust solution for high-quality malcode generation and IDS training under data-scarce conditions. By leveraging adversarial and collaborative learning between LLMs and GAN-based discriminators, the framework achieves superior generation and detection performance, strong transferability, and resource efficiency. Its methodological innovations and empirical results have significant implications for adaptive cybersecurity defense and semi-supervised learning in AI.