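- The paper introduces YOLOR, a single-stage reranking method that eliminates the General Search Unit (GSU) and evaluates candidate lists with the Exact Search Unit (ESU) alone, avoiding the inconsistency between the two stages.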

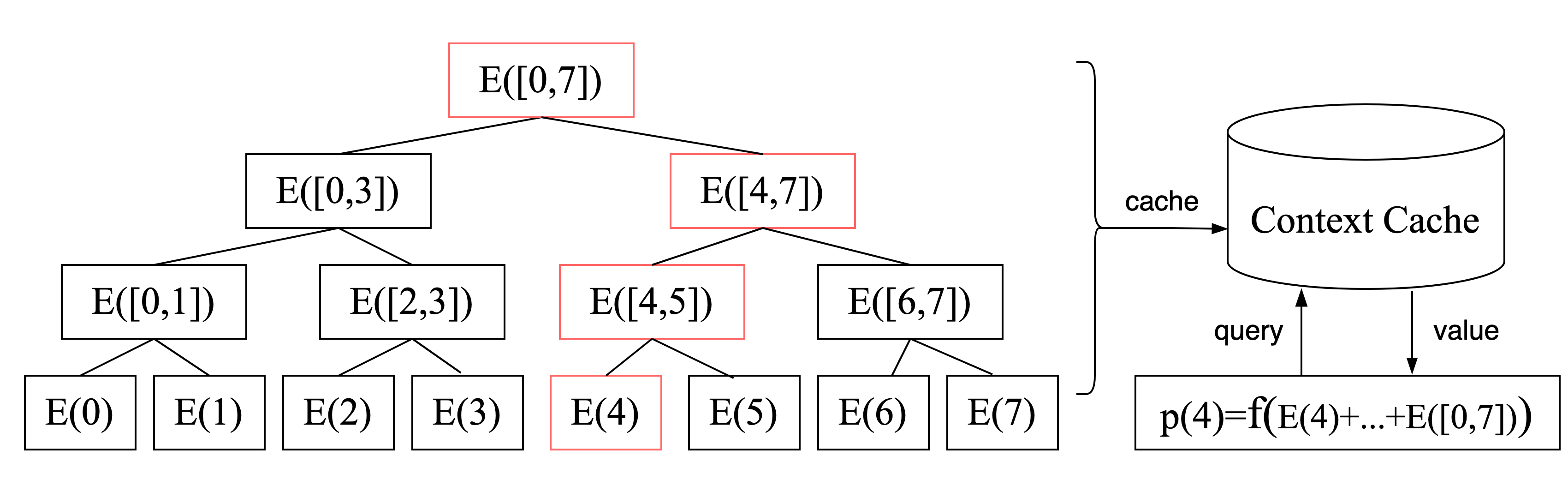

- It employs a Tree-based Context Extraction Module (TCEM) to aggregate multi-scale contextual features and a Context Cache Module (CCM) to avoid redundant computation.

- Offline experiments on the Taobao Ad and Meituan datasets show gains in AUC and GAUC, and online A/B tests on Meituan report +5.13% CTR and +7.64% GMV, confirming its practical effectiveness.

You Only Evaluate Once: A Tree-based Rerank Method at Meituan

Introduction

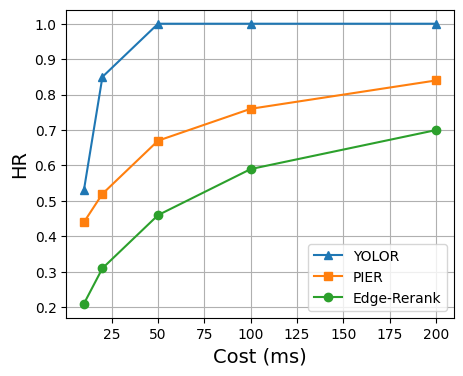

The paper presents YOLOR, a novel reranking framework for recommendation systems, deployed on platforms such as Meituan. YOLOR addresses a shortcoming of traditional two-stage reranking pipelines: inconsistency between the General Search Unit (GSU), which shortlists candidate lists, and the Exact Search Unit (ESU), which scores them, leads to suboptimal list selection. YOLOR instead takes a single-stage approach that retains only the ESU, and it integrates a Tree-based Context Extraction Module (TCEM) and a Context Cache Module (CCM) to make that stage both effective and efficient.

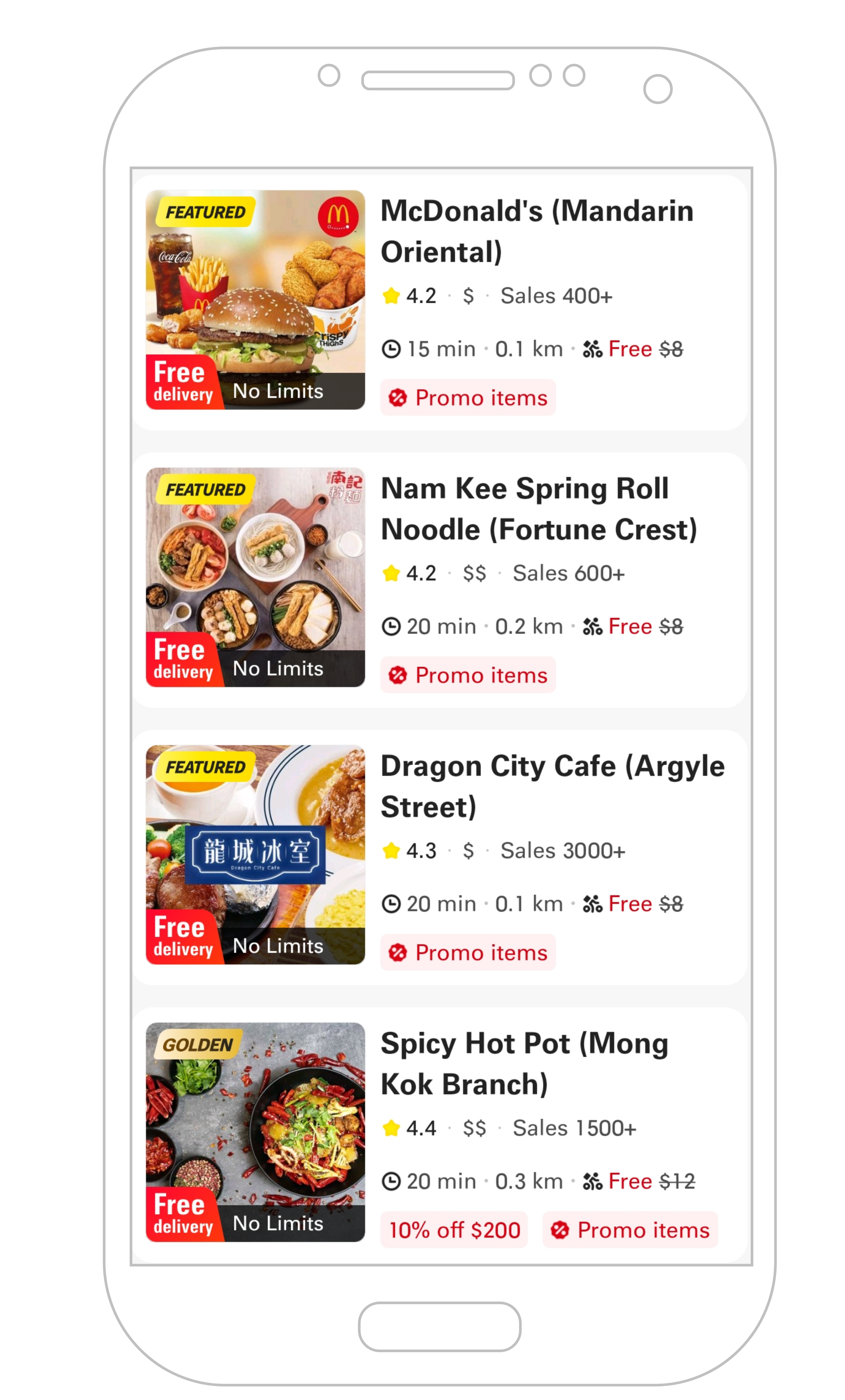

Figure 1: List recommendations on the Meituan food delivery platform.

Reranking Challenges and YOLOR's Approach

The reranking task in recommendation systems is inherently challenging because the number of possible list permutations grows combinatorially with the number of candidates. Traditional methods therefore use a two-stage process, and the misalignment between its GSU and ESU introduces inefficiencies. The paper identifies two main problems: (1) under tight time constraints, the GSU struggles to identify high-value lists, and (2) the ESU lacks multi-scale contextual information, which limits its ability to judge lists accurately.
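To make the combinatorial growth concrete: if the reranker must choose an ordered list of k items from n candidates, the number of possible lists is the number of k-permutations, n!/(n-k)!. The short sketch below evaluates this with Python's `math.perm`; the (n, k) pairs are illustrative assumptions, not sizes reported in the paper.

```python
from math import perm


def num_candidate_lists(n: int, k: int) -> int:
    """Number of distinct ordered lists of length k drawn from n candidates."""
    return perm(n, k)  # equals n! / (n - k)!


# Hypothetical sizes: the summary does not state the paper's n or k.
for n, k in [(6, 4), (10, 5), (20, 10)]:
    print(f"n={n:>2}, k={k:>2}: {num_candidate_lists(n, k):,} lists")
```

Even the modest n=20, k=10 case yields about 6.7 × 10^11 lists, which is why a cheap first-stage filter like the GSU is traditionally used.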

YOLOR resolves both problems by eliminating the GSU and using TCEM to aggregate contextual features hierarchically at multiple scales, which improves list-level effectiveness. CCM, in turn, improves permutation-level efficiency by caching features, enabling fast and consistent evaluation across list permutations.
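A toy example helps show why GSU/ESU inconsistency matters. In the hypothetical sketch below, the "GSU" is a cheap, context-blind proxy (a position-discounted sum of point-wise scores) and the "ESU" additionally penalizes adjacent items from the same category. All scores, categories, the penalty, and the shortlist size are made up for illustration and are not the paper's models; the point is only that a shortlist chosen by the proxy can exclude the list the exact evaluator would prefer.

```python
from itertools import permutations

# Hypothetical toy data; none of these values come from the paper.
POINT_SCORE = {"a": 0.9, "b": 0.8, "c": 0.5, "d": 0.4}  # point-wise scores
CATEGORY = {"a": "x", "b": "x", "c": "y", "d": "z"}
LIST_LEN = 3


def gsu_score(lst):
    """Cheap proxy: position-discounted sum of point-wise scores, blind to context."""
    return sum(POINT_SCORE[item] / (pos + 1) for pos, item in enumerate(lst))


def esu_score(lst):
    """Stand-in 'exact' evaluator: also penalizes adjacent same-category items."""
    same_cat_pairs = sum(CATEGORY[u] == CATEGORY[v] for u, v in zip(lst, lst[1:]))
    return gsu_score(lst) - 0.5 * same_cat_pairs


def two_stage(candidates, top_m):
    """The GSU shortlists top_m lists; the ESU then picks the best of the shortlist."""
    all_lists = list(permutations(candidates, LIST_LEN))
    shortlist = sorted(all_lists, key=gsu_score, reverse=True)[:top_m]
    return max(shortlist, key=esu_score)


def single_stage(candidates):
    """Score every list with the ESU alone."""
    return max(permutations(candidates, LIST_LEN), key=esu_score)


cands = list(POINT_SCORE)
print("two-stage   :", two_stage(cands, top_m=2))
print("single-stage:", single_stage(cands))
```

Here the proxy's top two lists both place the same-category items "a" and "b" next to each other, so the two-stage pipeline returns ('a', 'b', 'c'), while exhaustive ESU scoring finds ('a', 'c', 'b'). Exhaustive scoring is only affordable in this tiny example; making it tractable at scale is what TCEM and CCM are for.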

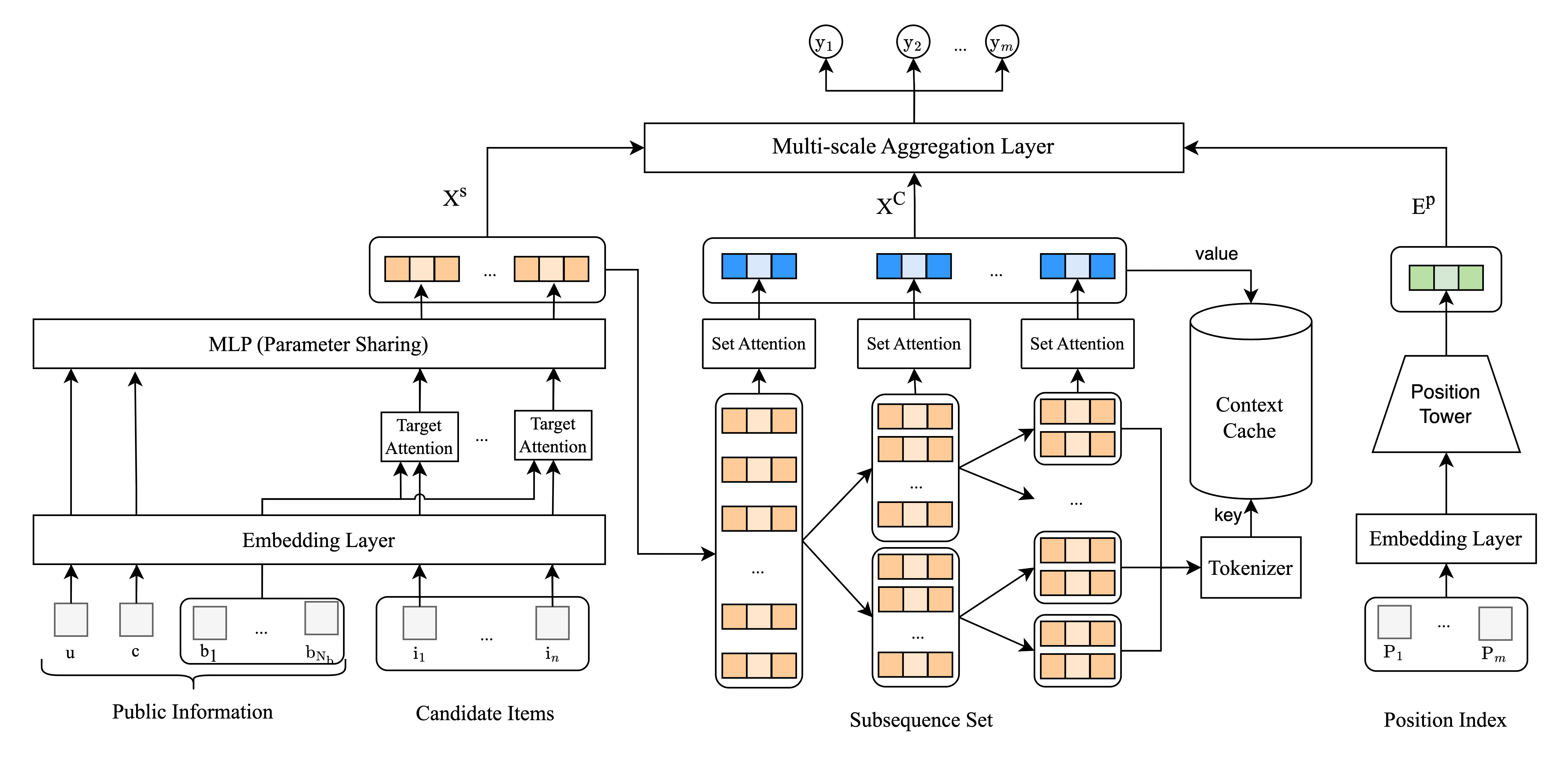

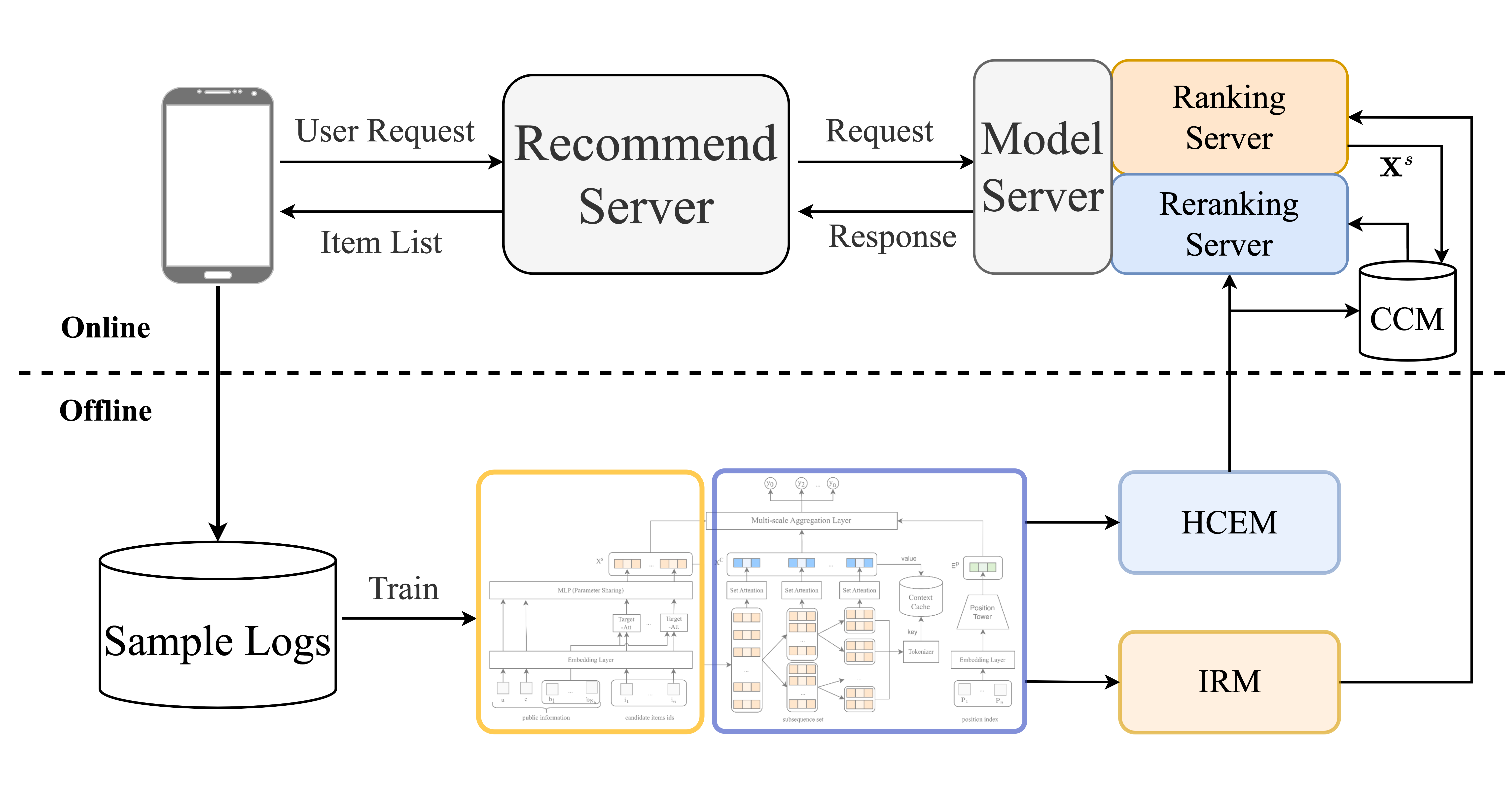

Figure 2: The overall architecture of YOLOR.

Methodology

The paper describes YOLOR's architecture in terms of three modules:

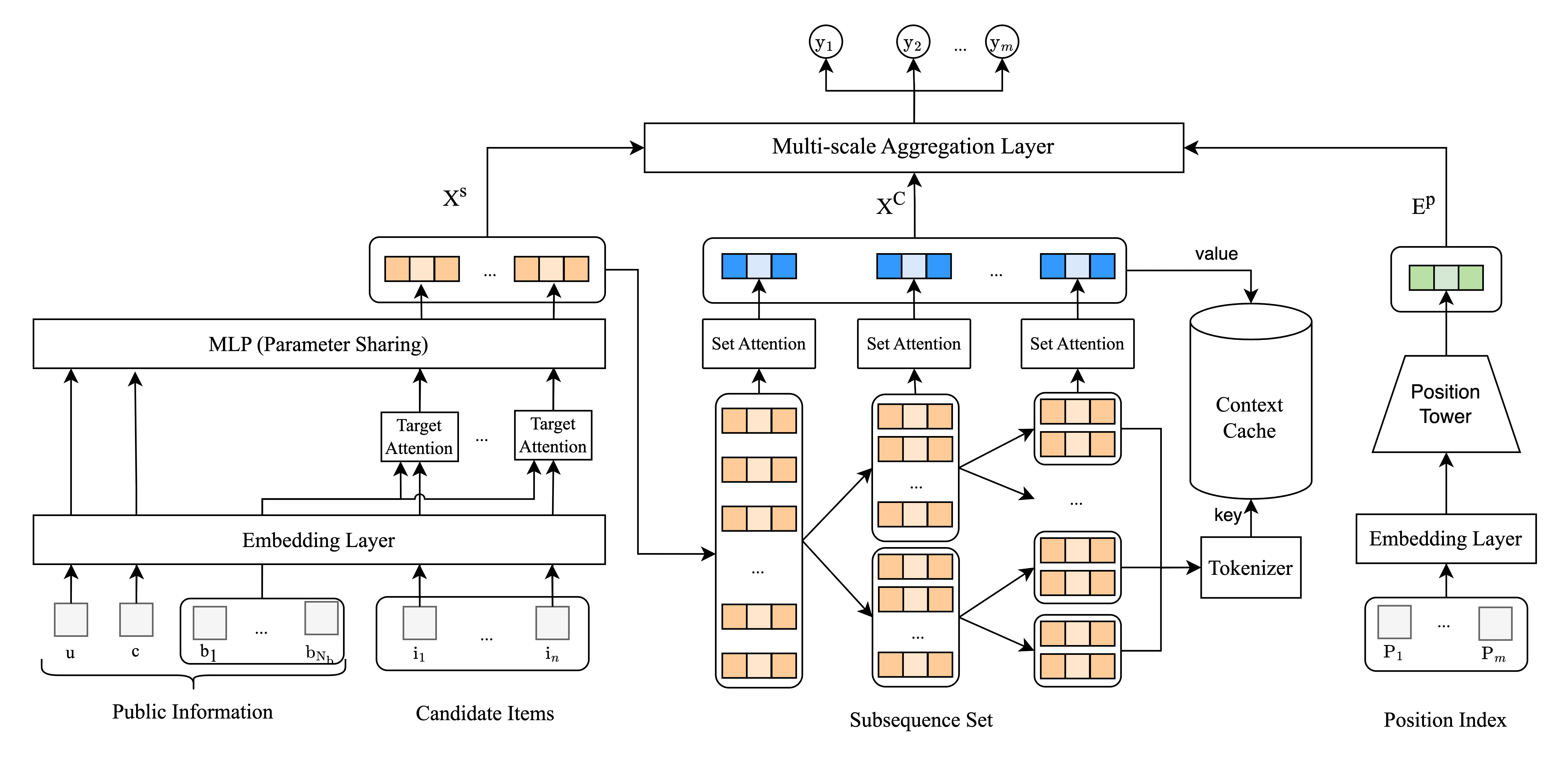

- Item-level Representation Module (IRM): This module derives semantic embeddings of candidate items to capture user-item interactions. It is crucial for ensuring that point-wise predictions used by the online system are consistent with list-wise evaluations.

- Tree-based Context Extraction Module (TCEM): TCEM constructs multi-scale subsequences of items to capture diverse contextual information. It uses self-attention without position encoding, so the representation of a subsequence does not depend on where that subsequence sits in a list and can be reused across permutations.

- Context Cache Module (CCM): CCM caches contextual information shared across candidate permutations so that it can be retrieved instead of recomputed. This removes redundant computation and keeps evaluation tractable even when the number of permutations is large.
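Why does dropping position encoding help reuse? Plain self-attention is permutation-equivariant: reordering the input items reorders the outputs in the same way, so any order-free pooling of the outputs (here, a mean) is identical for every ordering of the same items. The NumPy sketch below checks this numerically for a single attention head; the random weights, the dimension, and the mean pooling are illustrative assumptions, not TCEM's actual configuration.

```python
import numpy as np


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention with no position encoding."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability for softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v


rng = np.random.default_rng(0)
d = 8
x = rng.normal(size=(5, d))  # 5 hypothetical item embeddings in one subsequence
wq, wk, wv = (rng.normal(size=(d, d)) for _ in range(3))

perm = rng.permutation(5)  # an arbitrary reordering of the same items
out = self_attention(x, wq, wk, wv)
out_perm = self_attention(x[perm], wq, wk, wv)

# Equivariance: permuting the inputs permutes the outputs identically.
print("equivariant:", np.allclose(out[perm], out_perm))
# Invariance after pooling: the mean-pooled context vector does not change.
print("pooled invariant:", np.allclose(out.mean(axis=0), out_perm.mean(axis=0)))
```

If a learned position encoding were added to `x`, the second check would generally fail, and a context vector computed for one ordering could not be reused for another.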

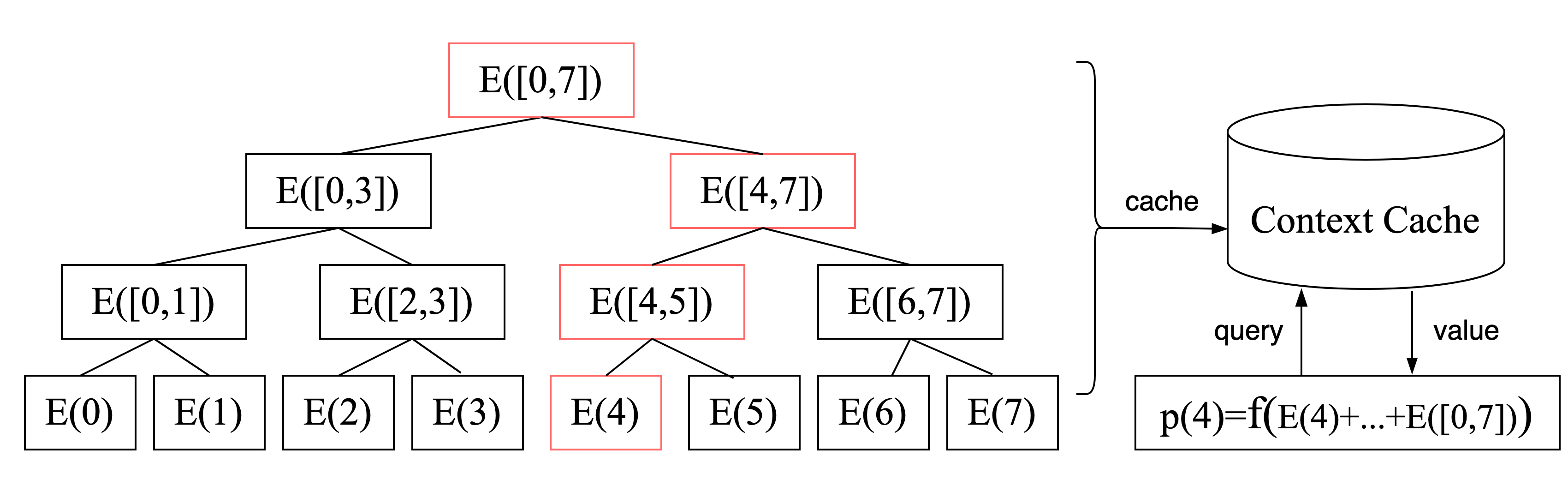

Figure 3: Demo of YOLOR. When evaluating the 4th item, precise scoring is achieved by leveraging its multi-scale contextual information and caching.
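The caption's idea, scoring an item from multi-scale context plus caching, can be sketched as follows. This is one hypothetical realization, not the paper's tree construction: for each position it takes windows of the preceding 1, 2 and 4 items (a dyadic choice assumed here), encodes each window with an order-free mean so the feature depends only on which items are present, and memoizes the encoder with `functools.lru_cache` keyed by a `frozenset`. The toy embeddings, scales, and mean encoder are all assumptions.

```python
from functools import lru_cache
from itertools import permutations

# Hypothetical setup; the paper's exact tree construction and encoder may differ.
N_ITEMS, LIST_LEN = 6, 4
SCALES = (1, 2, 4)  # assumed window sizes over the preceding items
EMB = {i: (float(i), float(i * i)) for i in range(N_ITEMS)}  # toy 2-d embeddings

encoder_calls = 0


@lru_cache(maxsize=None)
def context_feature(items: frozenset) -> tuple:
    """Order-free context encoder (mean of embeddings), cached by item set."""
    global encoder_calls
    encoder_calls += 1  # only incremented on a cache miss
    dims = zip(*(EMB[i] for i in sorted(items)))
    return tuple(sum(d) / len(items) for d in dims)


def multi_scale_contexts(lst, pos):
    """Context features of the last s items before `pos`, for each scale s."""
    return [context_feature(frozenset(lst[max(0, pos - s):pos])) for s in SCALES]


requests = 0
for lst in permutations(range(N_ITEMS), LIST_LEN):
    for pos in range(1, LIST_LEN):  # the first item has no preceding context
        multi_scale_contexts(lst, pos)
        requests += len(SCALES)

print(f"context requests: {requests}, encoder calls: {encoder_calls}")
```

In this toy run, 360 permutations generate 3,240 context requests, but only 41 distinct item sets (every subset of size 1 to 3 of the 6 items) ever reach the encoder; all other requests are served from the cache.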

Experimental Evaluation

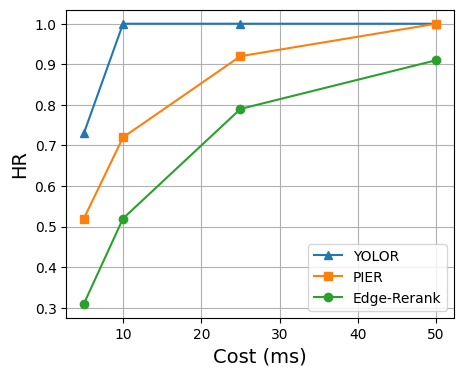

Experiments on a public dataset (Taobao Ad) and a proprietary dataset from the Meituan platform show significant performance gains. YOLOR improves both AUC and GAUC, with the gains most pronounced on large datasets, because it can evaluate the full permutation space within practical time constraints. In online A/B tests, YOLOR further increased CTR by 5.13% and GMV by 7.64% over the baseline methods.
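GAUC is commonly defined in CTR prediction work as a weighted average of per-user AUC, GAUC = Σ_u w_u · AUC_u / Σ_u w_u, with w_u often set to the user's number of impressions. The summary does not give the paper's exact grouping key or weights, so the sketch below assumes per-user grouping, impression weights, and skipping users whose labels are all the same (their AUC is undefined). The data are made up.

```python
from collections import defaultdict


def auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def gauc(user_ids, labels, scores):
    """Impression-weighted mean of per-user AUC; single-class users are skipped."""
    groups = defaultdict(lambda: ([], []))
    for u, y, s in zip(user_ids, labels, scores):
        groups[u][0].append(y)
        groups[u][1].append(s)
    total, weight = 0.0, 0
    for ys, ss in groups.values():
        if 0 < sum(ys) < len(ys):  # need both positives and negatives
            total += len(ys) * auc(ys, ss)
            weight += len(ys)
    return total / weight if weight else float("nan")


# Toy data, made up for illustration.
users = ["u1", "u1", "u1", "u2", "u2", "u3"]
labels = [1, 0, 0, 0, 1, 1]
scores = [0.9, 0.3, 0.6, 0.7, 0.4, 0.5]
print(gauc(users, labels, scores))
```

User u1 is ranked perfectly (AUC 1.0, weight 3), u2 is ranked backwards (AUC 0.0, weight 2), and u3 has only a positive label and is skipped, giving GAUC = 3/5 = 0.6. Unlike a single global AUC, GAUC only compares items shown to the same user, which matches how a reranker orders one user's list.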

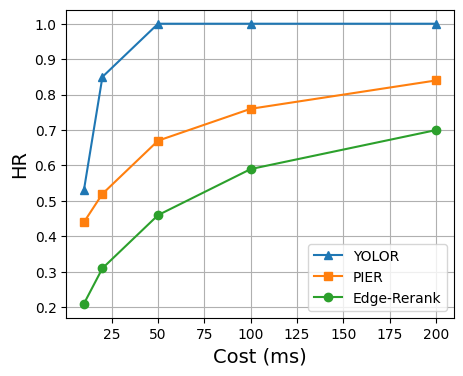

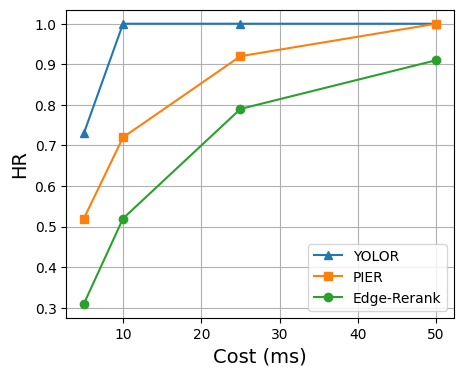

Figure 4: HR results of YOLOR and baseline methods under different cost conditions on Taobao Ad and Meituan datasets.

Conclusion

YOLOR offers a compelling solution to the inefficiencies of conventional reranking pipelines by evaluating all candidate lists in a single stage. Its multi-scale context extraction and efficient caching are key to its performance. Its deployment on Meituan's food delivery platform, where it substantially improved key performance metrics, validates its industrial applicability. Future work may further optimize its components and extend it to other recommendation scenarios.

Figure 5: Architecture of the online deployment with YOLOR.