An Evaluation of "LlamaRec: Two-Stage Recommendation using LLMs for Ranking"

The paper "LlamaRec: Two-Stage Recommendation using LLMs for Ranking" proposes LlamaRec, a framework for making large language models (LLMs) both efficient and effective as recommenders. The authors target two limitations of LLM-based recommendation, slow inference and limited recommendation quality, by splitting the task into a retrieval stage and a ranking stage. This design lets LlamaRec exploit the strengths of LLMs while improving both recommendation performance and efficiency.

Methodological Overview

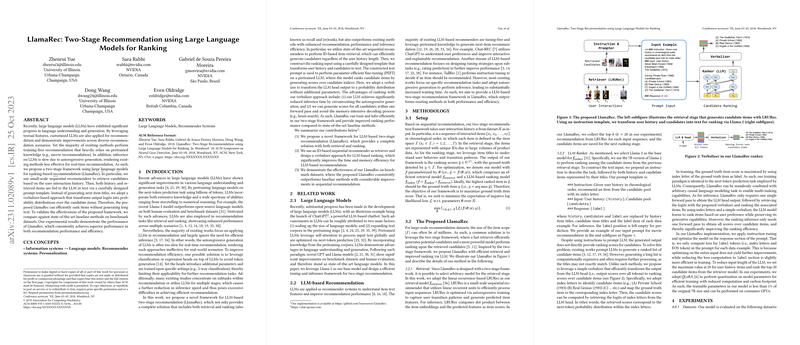

The framework is delineated into two principal stages:

- Retrieval Stage: A small sequential recommender, LRURec, first shortlists candidate items. LRURec is an ID-based model built on linear recurrent units, which keeps this stage cheap enough to score the full item catalog efficiently.

- Ranking Stage: The central contribution of LlamaRec lies in this stage, where an LLM-based ranker built on Llama 2 orders the retrieved candidates. The ranker receives the user's history and the candidate items as text in a prompt, which lets it draw on item semantics when inferring user preferences. Instead of autoregressive generation, LlamaRec uses a verbalizer: the LLM's output logits are mapped directly to ranking scores for the candidate items, so a single forward pass scores the whole candidate list and the model never has to generate item text token by token.
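The verbalizer idea can be illustrated in a few lines. The snippet below is a minimal sketch, not the authors' code. It assumes each candidate is listed in the prompt under an index label whose vocabulary ids are given in `label_token_ids`, and that `last_token_logits` holds the LLM's vocabulary logits at the final prompt position. The helper names `verbalizer_scores` and `rank_candidates` and the toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

def verbalizer_scores(last_token_logits: Sequence[float],
                      label_token_ids: Sequence[int]) -> List[float]:
    """Pick out the logits of the index-label tokens and softmax them.

    Assumption: candidate i appears in the prompt under the label whose
    vocabulary id is label_token_ids[i].
    """
    label_logits = [last_token_logits[tid] for tid in label_token_ids]
    peak = max(label_logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in label_logits]
    total = sum(exps)
    return [e / total for e in exps]

def rank_candidates(candidates: Sequence[str],
                    last_token_logits: Sequence[float],
                    label_token_ids: Sequence[int]) -> Tuple[List[str], List[float]]:
    """Order candidates by verbalizer score, highest first."""
    scores = verbalizer_scores(last_token_logits, label_token_ids)
    order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [candidates[i] for i in order], scores

# Toy usage: a 10-token vocabulary where ids 3..6 are the (hypothetical) labels A..D.
vocab_logits = [0.0] * 10
vocab_logits[3], vocab_logits[4], vocab_logits[5], vocab_logits[6] = 1.0, 3.0, 0.5, 2.0
candidates = ["Item 101", "Item 205", "Item 330", "Item 412"]
ranked, probs = rank_candidates(candidates, vocab_logits, [3, 4, 5, 6])
print(ranked)  # ['Item 205', 'Item 412', 'Item 101', 'Item 330']
```

Because the softmax is monotonic, the resulting order matches the order of the raw label logits; normalization only turns them into a probability distribution over the candidate list. All scores come from the logits of one forward pass, with no token-by-token decoding.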

According to the authors, this two-stage design improves recommendation accuracy over state-of-the-art baselines and substantially improves inference efficiency, a crucial factor for real-time applications.
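To make the division of labor concrete, here is a toy end-to-end sketch of a two-stage flow. It rests on simplifying assumptions that are not from the paper: the user encoder is a real-valued diagonal recurrence h_t = decay * h_{t-1} + x_t standing in for LRURec's linear recurrent units (the actual LRU parameterization is more elaborate), items are scored by dot product, previously seen items are excluded, and `toy_ranker` is a hypothetical placeholder for the Llama 2 ranker. All function names are illustrative.

```python
from typing import Callable, Dict, List, Sequence

Embedding = List[float]

def encode_history(history_embs: Sequence[Embedding], decay: float) -> Embedding:
    """Toy diagonal linear recurrence h_t = decay * h_{t-1} + x_t (a stand-in for LRU).

    Assumes a non-empty history.
    """
    h = [0.0] * len(history_embs[0])
    for x in history_embs:
        h = [decay * hi + xi for hi, xi in zip(h, x)]
    return h

def retrieve(history: Sequence[str], item_table: Dict[str, Embedding],
             k: int, decay: float = 0.5) -> List[str]:
    """Stage 1: score every unseen item by dot product and keep the top k."""
    user = encode_history([item_table[i] for i in history], decay)
    scores = {
        item: sum(u * e for u, e in zip(user, emb))
        for item, emb in item_table.items()
        if item not in history  # assumption: previously seen items are excluded
    }
    return sorted(scores, key=lambda item: scores[item], reverse=True)[:k]

Ranker = Callable[[Sequence[str], List[str]], List[float]]

def two_stage_recommend(history: Sequence[str], item_table: Dict[str, Embedding],
                        k: int, ranker: Ranker) -> List[str]:
    """Stage 2: re-order only the k retrieved candidates with the (expensive) ranker."""
    candidates = retrieve(history, item_table, k)
    scores = ranker(history, candidates)
    order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [candidates[i] for i in order]

# Toy data: 2-d item embeddings and a hypothetical ranker that prefers dimension 0.
item_table = {
    "a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1],
    "d": [0.1, 0.9], "e": [0.5, 0.5],
}

def toy_ranker(history: Sequence[str], candidates: List[str]) -> List[float]:
    return [item_table[c][0] for c in candidates]

print(retrieve(["a", "b"], item_table, k=2))                      # ['d', 'e']
print(two_stage_recommend(["a", "b"], item_table, 2, toy_ranker))  # ['e', 'd']
```

The payoff of the split is cost: the cheap retriever touches every item, while the expensive ranker only ever sees the k shortlisted candidates and is free to reorder them.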

Experimental Results

The experiments span several well-established recommendation benchmarks, including ML-100k, Beauty, and Games. Performance is measured with MRR, NDCG, and Recall at several cutoffs. Across these datasets, LlamaRec consistently outperforms conventional sequential recommenders such as NARM, SASRec, and BERT4Rec.
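For readers less familiar with these metrics, the sketch below computes them for the common setting where each user has a single held-out target item (an assumption here; this review does not state the evaluation protocol). With one relevant item at 1-based rank r, Recall@k is 1 if r is at most k, MRR@k is 1/r, and NDCG@k reduces to 1/log2(r + 1); all three are 0 when r exceeds k. The function names are illustrative.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

def target_rank(ranked_items: Sequence[str], target: str) -> Optional[int]:
    """1-based position of the target in the ranked list, or None if it is missing."""
    for pos, item in enumerate(ranked_items, start=1):
        if item == target:
            return pos
    return None

def metrics_at_k(ranked_items: Sequence[str], target: str, k: int) -> Dict[str, float]:
    """MRR@k, NDCG@k and Recall@k for a single relevant (held-out) item."""
    rank = target_rank(ranked_items, target)
    if rank is None or rank > k:
        return {"MRR": 0.0, "NDCG": 0.0, "Recall": 0.0}
    return {
        "MRR": 1.0 / rank,
        "NDCG": 1.0 / math.log2(rank + 1),  # ideal DCG is 1 with one relevant item
        "Recall": 1.0,
    }

def average_metrics(samples: List[Tuple[Sequence[str], str]], k: int) -> Dict[str, float]:
    """Mean of the per-user metrics over (ranked_items, target) pairs."""
    totals = {"MRR": 0.0, "NDCG": 0.0, "Recall": 0.0}
    for ranked_items, target in samples:
        for name, value in metrics_at_k(ranked_items, target, k).items():
            totals[name] += value
    return {name: value / len(samples) for name, value in totals.items()}

print(metrics_at_k(["x", "y", "z", "w"], "z", k=5))  # MRR 1/3, NDCG 0.5, Recall 1.0
```

With a single target, MRR@k and NDCG@k differ only in how steeply they discount lower ranks, while Recall@k ignores position within the cutoff entirely.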

On ML-100k in particular, LlamaRec shows considerable improvements in MRR@5, NDCG@5, and Recall@5, suggesting that it exploits user-item interactions effectively. The paper also compares LlamaRec with other LLM-based recommenders such as PALR and GPT4Rec and reports significant gains, particularly in recall and NDCG on the Beauty dataset.

Implications and Future Directions

The development of LlamaRec has considerable implications for both research and practice. The verbalizer-based ranking design enables efficient computation and may also lay a foundation for multi-task learning within LLMs. Because it avoids resource-intensive autoregressive generation, LlamaRec is well suited to large-scale practical deployment.

Looking forward, this framework paves the way for deeper integration of LLMs into recommendation systems, potentially leveraging advances in model quantization to reduce training overhead. It may also inspire work on richer textual item representations that improve contextual understanding and let recommendation systems infer user preferences at a finer granularity.

Conclusion

The LlamaRec framework represents a significant step forward in the use of LLMs for recommender systems, achieved by splitting the recommendation pipeline into retrieval and ranking stages. Through its efficient inference scheme, LlamaRec enhances not only recommendation quality but also operational efficiency, making it a valuable advance in AI-driven recommendation. As the field continues to evolve, the principles established in this work may serve as a foundation for further refinement of LLM-based recommendation methods.