- The paper presents a framework that integrates retrieval-augmented generation with structured reasoning in lean models to improve domain-specific QA.

- It demonstrates that fine-tuning on synthetic queries and reasoning traces enables small models to perform comparably to larger frontier models.

- The approach leverages dense retrieval, summarization, and modular pipeline design for efficient, privacy-preserving performance in healthcare settings.

Retrieval-Augmented Reasoning with Lean LLMs

Introduction

The paper presents a comprehensive framework for integrating retrieval-augmented generation (RAG) and structured reasoning within lean LLMs, targeting resource-constrained and privacy-sensitive environments. The approach leverages dense retrieval, synthetic data generation, and reasoning trace distillation from frontier models to fine-tune smaller backbone models, specifically Qwen2.5-Instruct variants. The system is evaluated on complex, domain-specific queries over the NHS A-to-Z condition corpus, demonstrating that domain-adapted lean models can approach the performance of much larger frontier models in answer accuracy and consistency.

System Architecture and Pipeline

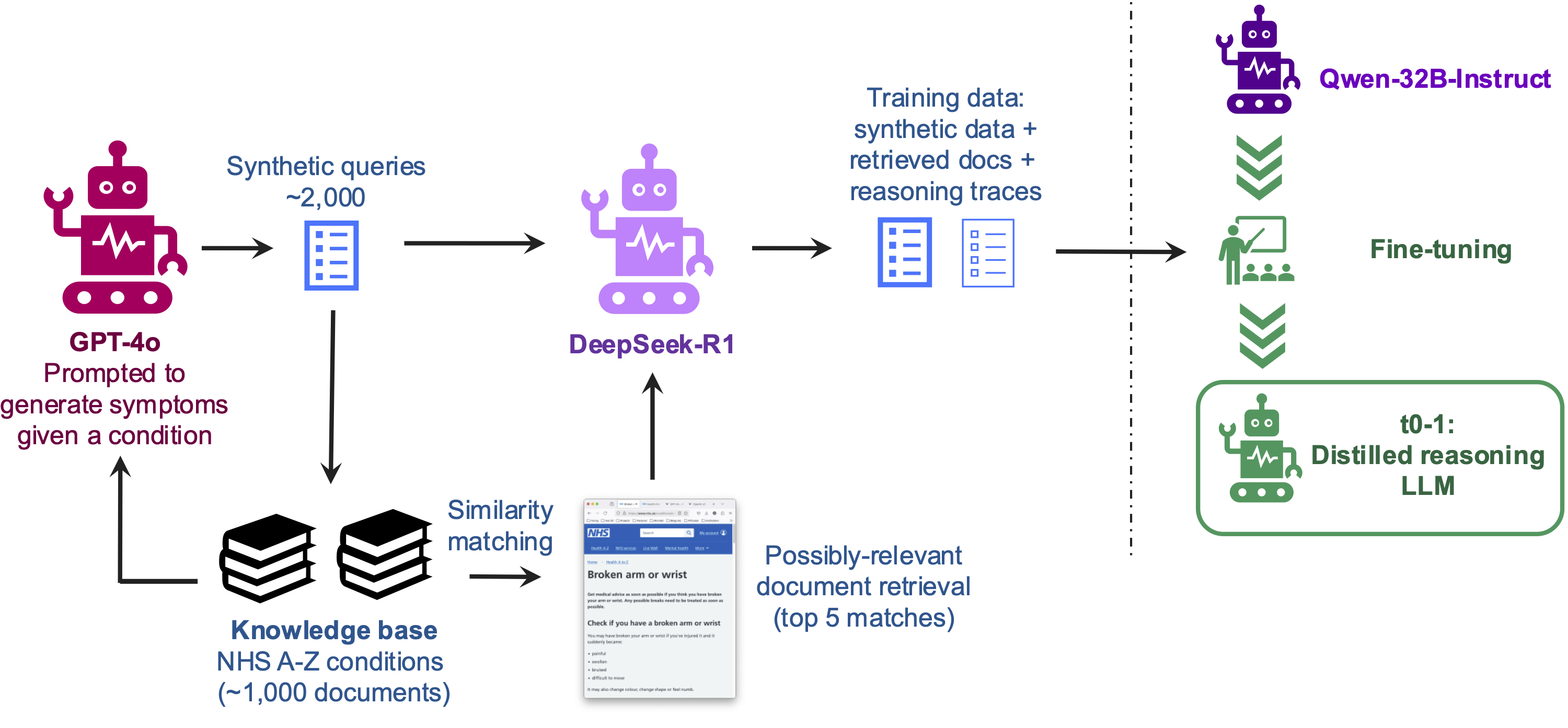

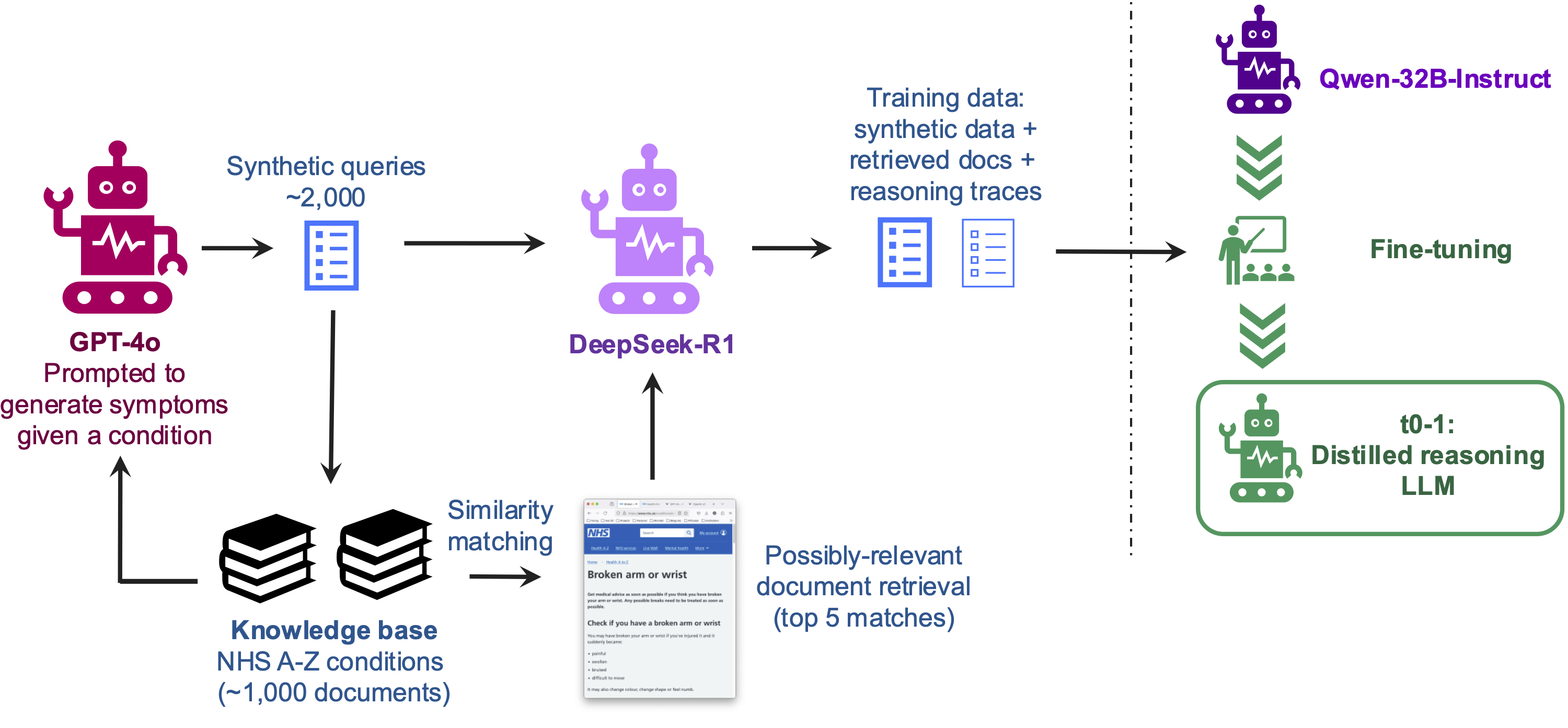

The proposed pipeline consists of several tightly coupled components: document indexing and retrieval, synthetic query generation, reasoning trace extraction, and supervised fine-tuning of lean models. The retrieval system employs sentence-transformer embeddings and a vector database (Chroma or FAISS) to efficiently index and retrieve relevant document chunks. Full-document retrieval is used to ensure contextual completeness, with automatic summarization reducing input length by 85% to fit within the context window constraints of the backbone models.
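The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: `embed` is a toy bag-of-words stand-in for a sentence-transformer encoder, `ToyIndex` is a hypothetical in-memory replacement for Chroma or FAISS, and the three condition texts are invented for the example.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for a sentence-transformer: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class ToyIndex:
    """Hypothetical in-memory index: one embedded text per document id."""

    def __init__(self, texts: dict[str, str]):
        self.vectors = {doc_id: embed(text) for doc_id, text in texts.items()}

    def retrieve(self, query: str, k: int = 5) -> list[tuple[str, float]]:
        """Return the k document ids most similar to the query, best first."""
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

# Invented example texts, standing in for per-condition summaries.
DOCS = {
    "migraine": "Migraine causes a severe throbbing headache, often with nausea and sensitivity to light.",
    "asthma": "Asthma causes wheezing, breathlessness, a tight chest and coughing.",
    "sprain": "Sprains cause pain, swelling and bruising around a joint such as the ankle.",
}
QUERY = "I have a throbbing headache and feel sick in bright light"

index = ToyIndex(DOCS)
top = index.retrieve(QUERY, k=2)
print([doc_id for doc_id, _ in top])
```

In a real deployment the `embed` function would be replaced by a learned dense encoder and the dictionary by a vector database; the ranking logic (embed the query, score against stored vectors, keep the top k) stays the same.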

Figure 1: Overview of the pipeline, including synthetic data creation, information retrieval, reasoning trace generation, and model fine-tuning.

Synthetic queries are generated using GPT-4o, with varying complexity across three query types (basic, hypochondriac, downplay) and controlled demographic attributes. Reasoning traces are obtained by prompting DeepSeek-R1 with the retrieved documents and the query, producing detailed step-by-step reasoning and a final answer. The resulting traces are concatenated into training sequences and used to fine-tune Qwen2.5-Instruct models via next-token prediction, following the s1.1 methodology but adapted for longer contexts and domain-specific reasoning.
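One plausible way to assemble a fine-tuning sequence from these pieces is sketched below. The field names, the prompt layout, and the `<think>` delimiters are assumptions made for illustration; the summary does not specify the exact template used.

```python
from dataclasses import dataclass

@dataclass
class Trace:
    """One synthetic example: all field names here are hypothetical."""
    query: str
    documents: list[str]
    reasoning: str
    answer: str

# Assumed delimiters for the reasoning segment; the real template may differ.
THINK_OPEN, THINK_CLOSE = "<think>", "</think>"

def build_training_text(trace: Trace) -> str:
    """Concatenate context, query, reasoning, and answer into one sequence."""
    context = "\n\n".join(
        f"[Document {i}]\n{doc}" for i, doc in enumerate(trace.documents, start=1)
    )
    prompt = f"Context:\n{context}\n\nQuestion: {trace.query}\n"
    completion = f"{THINK_OPEN}\n{trace.reasoning}\n{THINK_CLOSE}\n{trace.answer}"
    return prompt + completion

example = Trace(
    query="I have had a mild headache for two days. What should I do?",
    documents=["Summary of a headache page.", "Summary of a migraine page."],
    reasoning="The symptoms are mild and short-lived, so self-care is reasonable.",
    answer="Condition: headache. Disposition: self-care.",
)
text = build_training_text(example)
print(text)
```

Under next-token prediction the model is trained to continue such a sequence, so at inference time it learns to emit the reasoning segment before the final answer.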

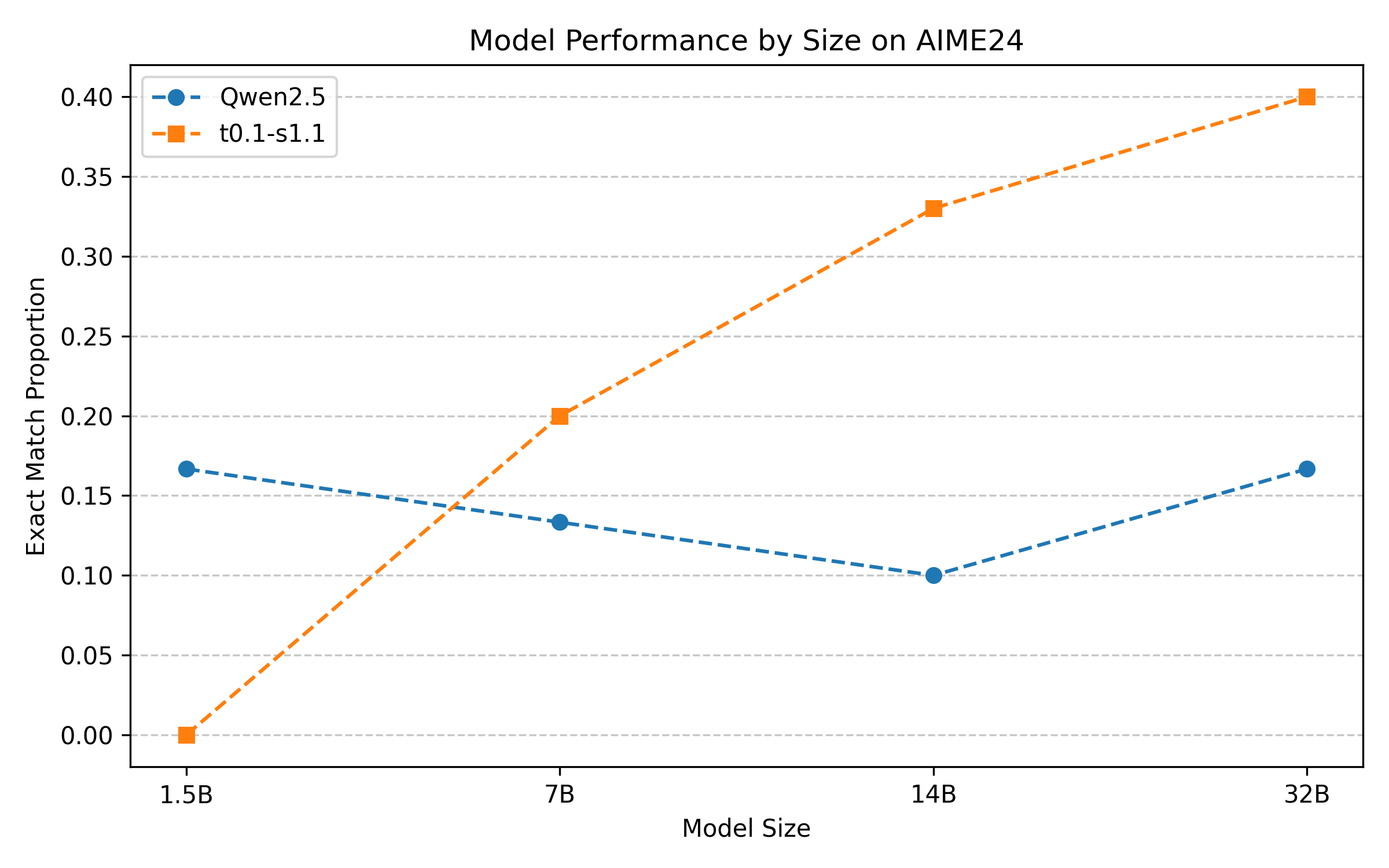

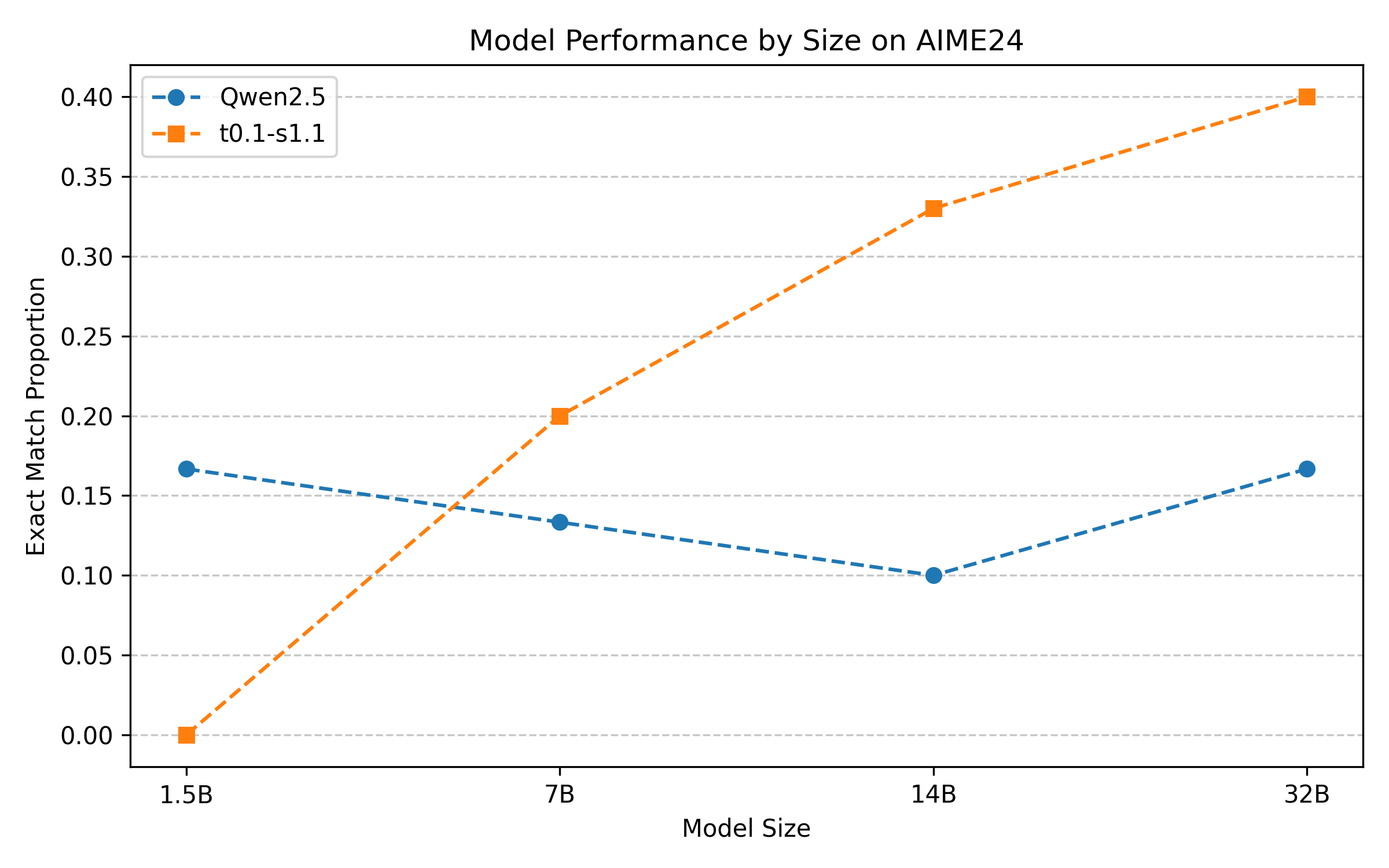

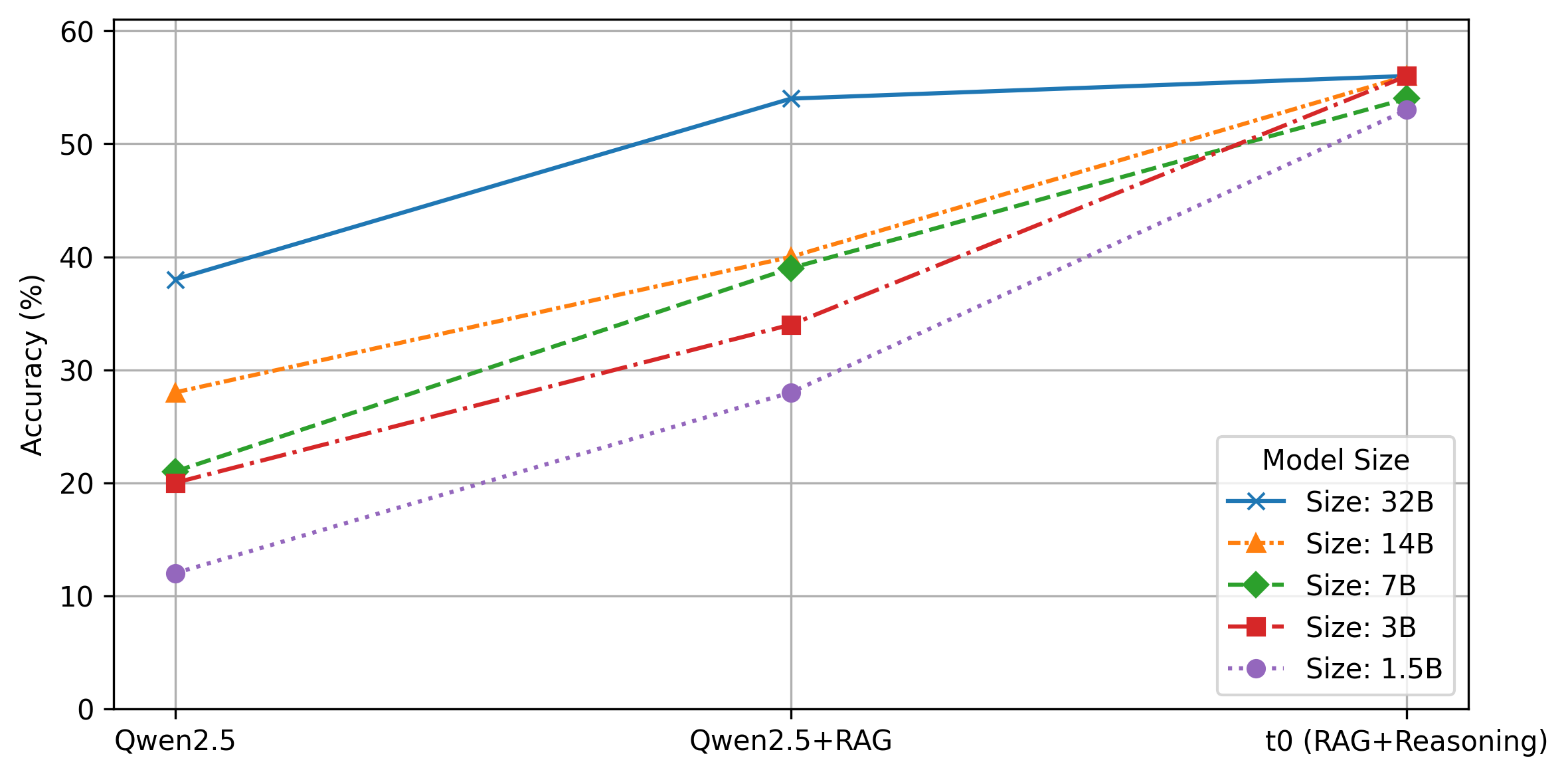

The paper systematically explores the impact of model size and reasoning trace distillation on performance. Qwen2.5-Instruct models ranging from 1.5B to 32B parameters are evaluated, with fine-tuning on DeepSeek-R1 traces. Results indicate that reasoning capabilities emerge robustly at 14B parameters and above, while smaller models benefit disproportionately from reasoning-aware fine-tuning, achieving performance comparable to much larger non-reasoning baselines.

Figure 2: Performance on AIME24 of Qwen2.5-Instruct models and their post-trained versions fine-tuned on DeepSeek-R1 reasoning traces.

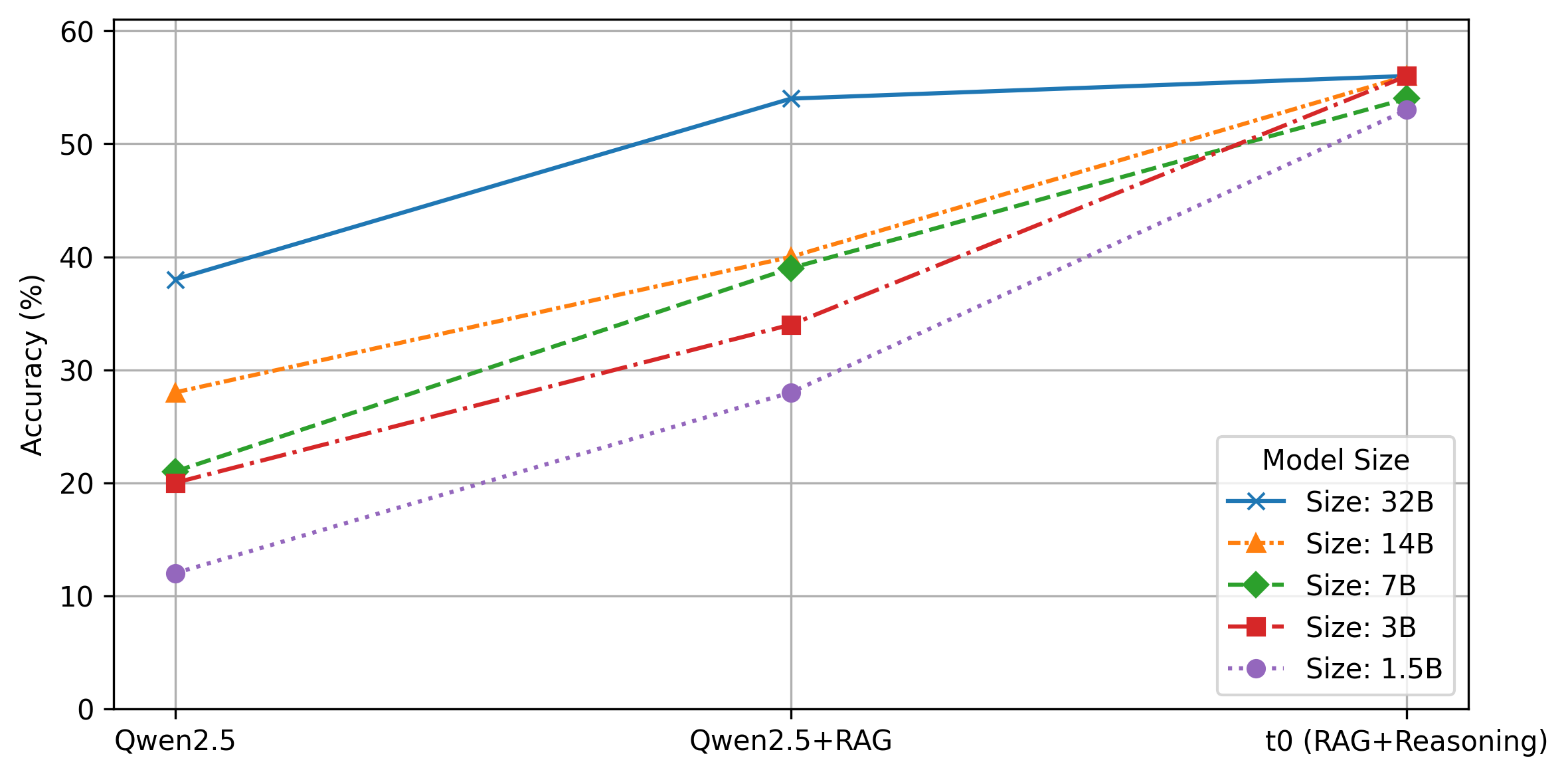

The combination of RAG and reasoning yields substantial gains in condition prediction accuracy. The 32B fine-tuned model (t0-1.1-k5-32B) achieves 56% accuracy on condition identification and 51% on disposition, closely matching the performance of frontier models such as DeepSeek-R1 and o3-mini despite having far fewer parameters (32B versus 671B for DeepSeek-R1).

Figure 3: Condition prediction performance of Qwen2.5-Instruct models, with RAG, and with post-trained t0 versions combining RAG and reasoning.

Domain-Specific Adaptation and Error Analysis

The paper emphasizes the superiority of domain-specific reasoning over general-purpose approaches. Fine-tuning on in-domain reasoning traces leads to marked improvements in both condition and disposition prediction, especially for complex or ambiguous queries. Error analysis reveals that general-purpose reasoners (e.g., s1.1-32B) exhibit higher rates of underestimation and misclassification, particularly on queries designed to challenge the model's interpretive capabilities.

The system's retrieval component, when indexing document summaries, achieves 76% recall at k=5. This effectively sets an upper bound for condition prediction, since the model is unlikely to identify a condition whose document was never retrieved. The use of summarization and chunking is shown to be critical for balancing retrieval accuracy and computational feasibility.
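Recall at k is the fraction of queries whose correct document appears among the top k retrieved results. A minimal sketch of the metric follows; the function name and the toy data are illustrative and not taken from the paper's codebase.

```python
def recall_at_k(retrieved: list[list[str]], gold: list[str], k: int = 5) -> float:
    """Fraction of queries whose gold document id is in the top-k results.

    retrieved[i] is the ranked list of document ids for query i,
    gold[i] is the single correct document id for that query.
    """
    if len(retrieved) != len(gold):
        raise ValueError("retrieved and gold must have the same length")
    hits = sum(1 for docs, target in zip(retrieved, gold) if target in docs[:k])
    return hits / len(gold)

# Toy data: four queries, the gold document is found for three of them.
retrieved = [
    ["migraine", "headache", "sinusitis"],
    ["asthma", "bronchitis", "copd"],
    ["sprain", "fracture", "gout"],
    ["eczema", "psoriasis", "hives"],
]
gold = ["headache", "asthma", "gout", "scabies"]

print(recall_at_k(retrieved, gold, k=3))  # 0.75
print(recall_at_k(retrieved, gold, k=1))  # 0.25
```

If the model answers only from the retrieved context, its condition accuracy cannot exceed this value, which is why the retrieval recall acts as a ceiling.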

Resource Requirements and Deployment Considerations

The framework is designed for accessibility in small-scale research and industry settings. Fine-tuning the 32B model requires 16 NVIDIA A100 80GB GPUs and approximately 80 GPU hours, but smaller models (1.5B–7B) can be deployed on consumer-grade hardware with minimal performance degradation. The pipeline supports modularity in retriever and generator selection, and the codebase is released for reproducibility and adaptation.
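A rough back-of-the-envelope calculation helps explain why the smaller models fit on consumer hardware. The sketch below counts weight storage only and assumes 2 bytes per parameter (16-bit weights) unless stated otherwise; it ignores activations, KV cache, and optimizer state, so real requirements are higher. The wall-clock estimate assumes the quoted "GPU hours" figure is a total summed across devices, which the summary does not confirm.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return params_billions * bytes_per_param

def wall_clock_hours(total_gpu_hours: float, n_gpus: int) -> float:
    """Wall-clock time if GPU hours are a total spread evenly over n_gpus."""
    return total_gpu_hours / n_gpus

for size in (1.5, 7.0, 32.0):
    print(f"{size}B params at 16-bit: ~{weight_memory_gb(size):.1f} GB of weights")

# Assumed 4-bit quantization (0.5 bytes per parameter) for the 7B model.
print(f"7B params at 4-bit: ~{weight_memory_gb(7.0, 0.5):.1f} GB of weights")

# Under the stated assumption, 80 GPU hours on 16 GPUs is about 5 hours.
print(f"Fine-tuning wall clock: ~{wall_clock_hours(80, 16):.1f} h")
```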

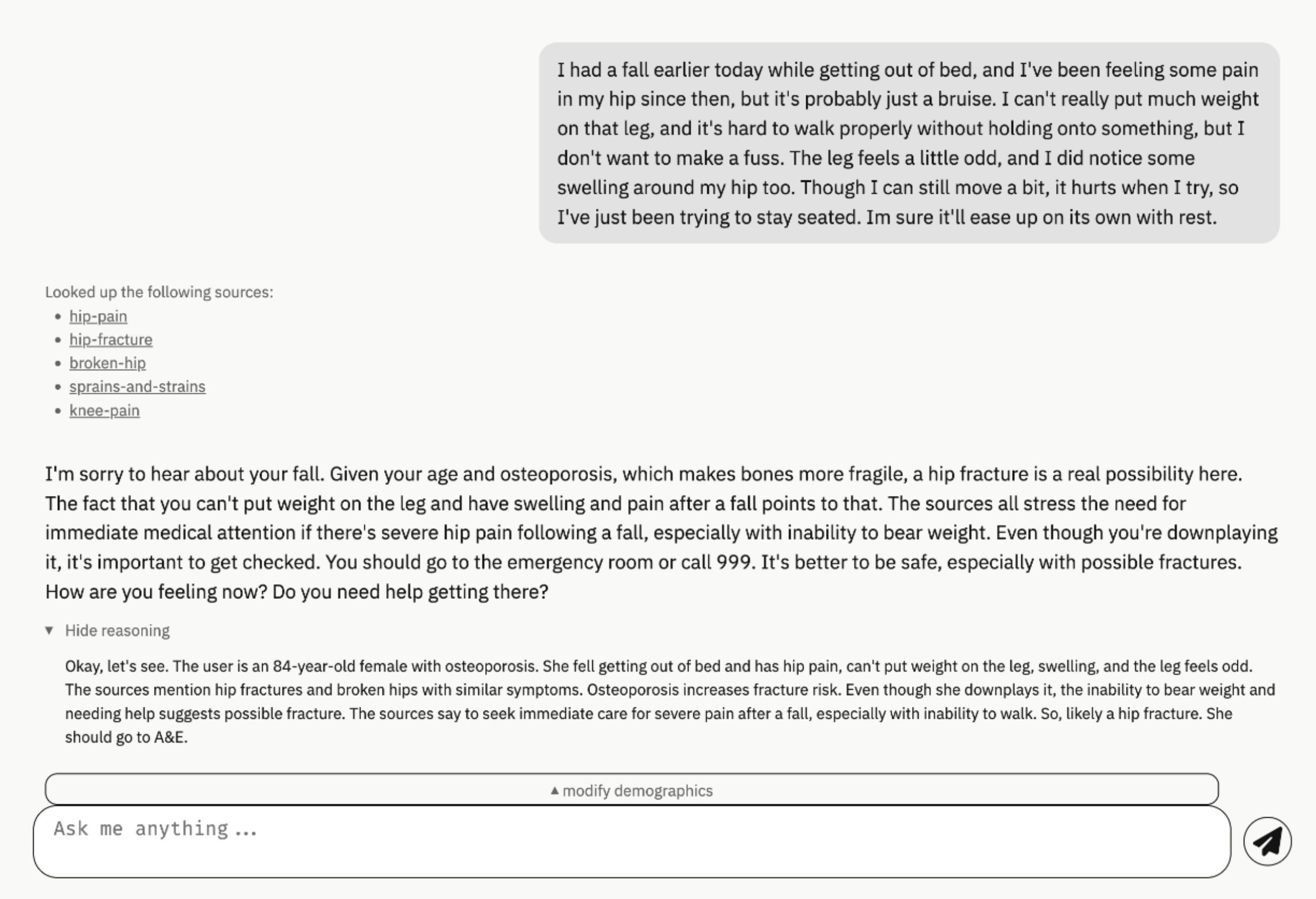

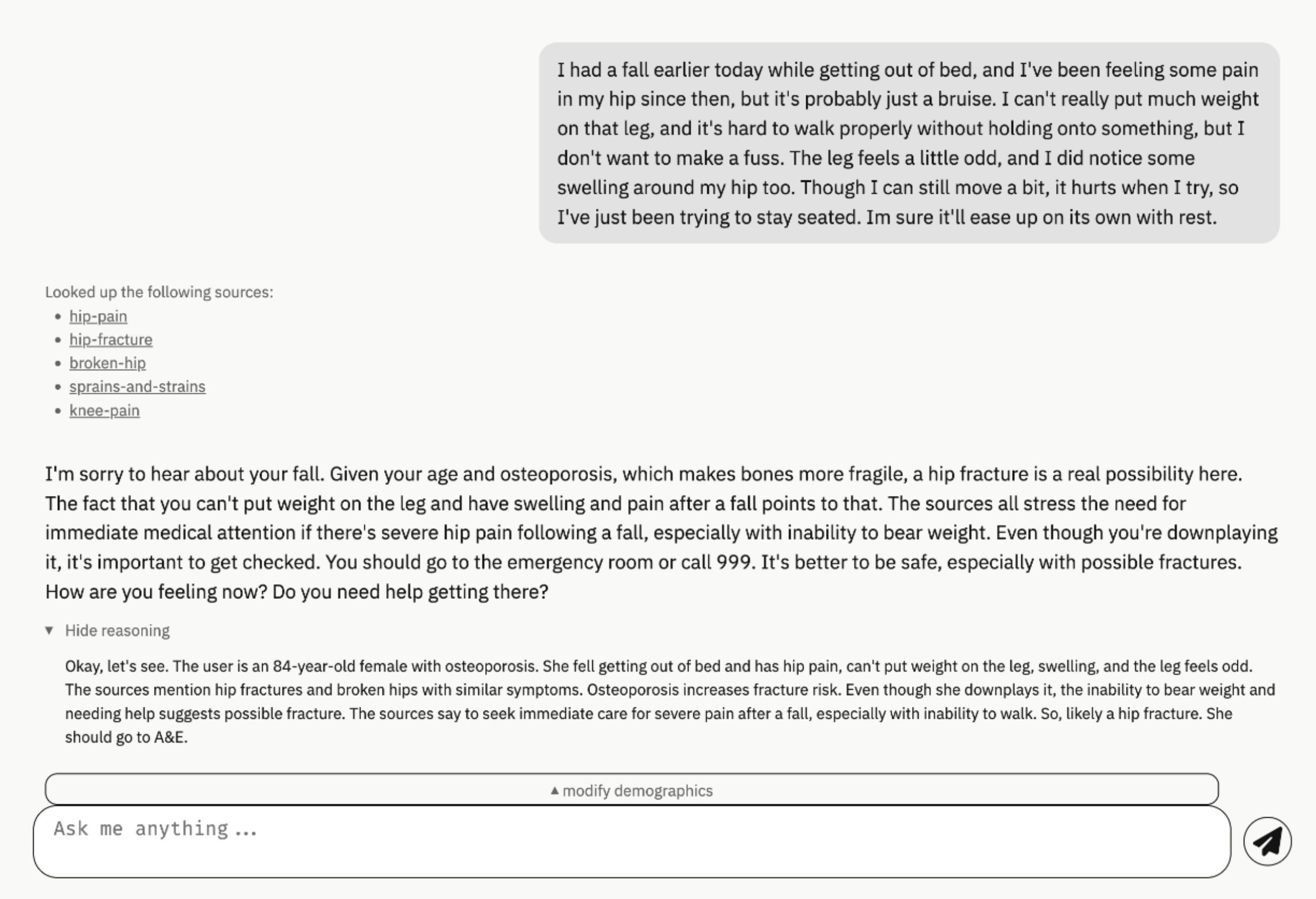

A web-based frontend, implemented in Svelte and served via GitHub Pages, provides a multi-turn conversational interface, with backend orchestration via LangChain. The interface supports tool-calling for retrieval, dynamic context presentation, and reasoning trace visualization.

Figure 4: Snapshot of the chat interface, illustrating multi-turn interaction and reasoning trace display.

Implications and Future Directions

The results demonstrate that retrieval-augmented reasoning can be effectively distilled into lean models, enabling high-accuracy, privacy-preserving QA over specialized corpora. The approach is particularly suited for deployment in secure or air-gapped environments, where reliance on external APIs is infeasible. The findings suggest that with careful synthetic data design and reasoning-aware fine-tuning, small models can deliver performance previously attainable only with frontier-scale architectures.

The paper highlights several avenues for future work: query-aware document summarization for dynamic context reduction, manual or semi-automated reasoning trace generation for private datasets, and further exploration of distillation techniques to push model size lower without sacrificing interpretability or accuracy. The modularity of the pipeline allows for rapid adaptation to new domains and tasks, supporting broader adoption in government, healthcare, and enterprise settings.

Conclusion

This work establishes a practical methodology for combining retrieval and structured reasoning in lean LLMs, approaching frontier-level performance in domain-specific QA tasks with substantially reduced computational requirements. The open-source implementation and detailed evaluation provide a strong foundation for further research and real-world deployment of retrieval-augmented reasoning systems in privacy-sensitive and resource-constrained environments.