Overview of "Auto-RAG: Autonomous Retrieval-Augmented Generation for LLMs"

The paper presents Auto-RAG, a Retrieval-Augmented Generation (RAG) model that draws on the decision-making capabilities of LLMs to carry out iterative retrieval autonomously. It targets a shortcoming of existing RAG approaches, which often rely on manually constructed rules or few-shot prompting, and aims to improve both efficiency and robustness on complex queries.

Problem Statement

RAG addresses knowledge-intensive tasks by augmenting generation with externally retrieved information. Traditional RAG approaches, however, struggle with noise in the retrieved content and with gathering comprehensive information for complex queries. These challenges increase inference overhead and keep the model from using the full reasoning capabilities of LLMs.

Methodology

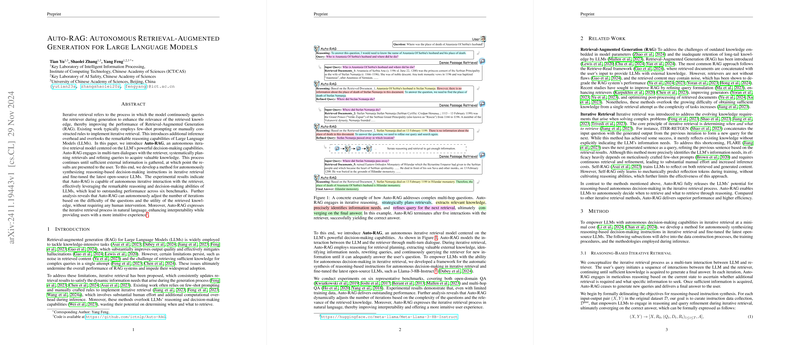

Auto-RAG makes iterative retrieval systematic and fully autonomous by relying on the LLM's own reasoning and decision-making abilities.

- Iterative Retrieval Framework: Auto-RAG holds a multi-turn dialogue with the retriever, planning retrievals and refining queries from one turn to the next. The loop ends once sufficient information has been gathered, at which point the LLM produces the final response.

- Reasoning and Decision-Making: Reasoning-based decision-making instructions are synthesized autonomously and used to guide the iterative retrieval process. The resulting reasoning paradigm judges the relevance and utility of what has been retrieved to decide whether more information is needed, which improves efficiency and avoids processing unnecessary content.

- Fine-Tuning Process: Open-source LLMs are fine-tuned on the synthesized reasoning data, which teaches the model to manage the retrieval process and to interact more effectively with the retriever.
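
The control flow described in the bullets above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `Step`, `iterative_rag`, `toy_policy`, and `toy_retriever` are hypothetical, the "policy" is a hand-written stand-in for a fine-tuned LLM, and the retriever is a keyword matcher over a three-sentence toy corpus.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    """One decision by the model: ask for more evidence, or answer."""
    reasoning: str                 # natural-language rationale for the decision
    query: Optional[str] = None    # set when more retrieval is needed
    answer: Optional[str] = None   # set when the evidence is sufficient


# Hypothetical interfaces (assumptions made for this sketch only).
Policy = Callable[[str, List[str]], Step]
Retriever = Callable[[str], List[str]]


def iterative_rag(question: str, policy: Policy, retriever: Retriever,
                  max_turns: int = 5) -> Tuple[Optional[str], List[Step]]:
    """Alternate between model decisions and retrieval until an answer appears."""
    evidence: List[str] = []
    trace: List[Step] = []
    for _ in range(max_turns):
        step = policy(question, evidence)
        trace.append(step)
        if step.answer is not None:          # the model judged the evidence sufficient
            return step.answer, trace
        evidence.extend(retriever(step.query or question))
    return None, trace                       # turn budget exhausted without an answer


# Toy stand-ins so the sketch runs end to end.
CORPUS = [
    "Book X was written by Jane Roe.",
    "Jane Roe was born in Lisbon.",
    "Lisbon is the capital of Portugal.",
]


def toy_retriever(query: str) -> List[str]:
    """Return the corpus sentence that shares the most words with the query."""
    words = set(query.lower().split())
    best = max(CORPUS,
               key=lambda doc: len(words & set(doc.lower().rstrip(".").split())))
    return [best]


def toy_policy(question: str, evidence: List[str]) -> Step:
    """Rule-based placeholder for the LLM's reasoning on a two-hop question."""
    joined = " ".join(evidence)
    if "written by" not in joined:
        return Step("The author is still unknown.", query="who wrote book x")
    if "born in" not in joined:
        return Step("Author found; birthplace is still missing.",
                    query="where was jane roe born")
    place = joined.split("born in ")[1].rstrip(".")
    return Step("The evidence covers both hops.", answer=place)


answer, trace = iterative_rag("Where was the author of Book X born?",
                              toy_policy, toy_retriever)
for step in trace:
    print(step.reasoning, "|", step.query or step.answer)
```

In this toy run the loop retrieves twice and answers on the third turn. Keeping the `reasoning` string on every step mirrors the idea of expressing the retrieval process in natural language; in a real system the policy would be the fine-tuned LLM and `max_turns` would cap the inference cost.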

Experimental Evaluation

The authors evaluate Auto-RAG on six benchmarks spanning open-domain and multi-hop QA, including Natural Questions and TriviaQA. Notable results include:

- Stronger performance than standard RAG and other iterative retrieval methods across the benchmarks.

- The ability to adapt its retrieval behavior autonomously to question difficulty and retriever performance.

- Improved interpretability and user experience by expressing the retrieval process in natural language.

Implications and Future Directions

Auto-RAG integrates the decision-making capabilities of LLMs with iterative retrieval, improving both accuracy and efficiency. The results suggest potential gains in applications ranging from open-domain question answering to more specialized tasks that require complex reasoning chains. Future research may explore other iterative methods and fine-tuning approaches, as well as applications beyond question answering. Faster query refinement and better noise reduction in retrieval could yield further gains, especially as retrieval corpora grow and become more heterogeneous.

In conclusion, Auto-RAG represents an innovative step towards more autonomous and effective RAG systems, with broader implications for AI's ability to process and integrate vast amounts of information with minimal human oversight.