- The paper introduces ComoRAG, a framework that emulates human cognition to dynamically integrate new evidence with past knowledge for enhanced long narrative reasoning.

- It leverages a hierarchical knowledge source and a metacognitive regulation loop that iteratively refines memory, achieving relative gains of up to 11% over strong RAG baselines.

- Ablation studies confirm the critical roles of the veridical layer and the metacognitive processes, and the framework adapts well across diverse narrative comprehension tasks.

ComoRAG: A Cognitive-Inspired Memory-Organized RAG for Stateful Long Narrative Reasoning

Introduction

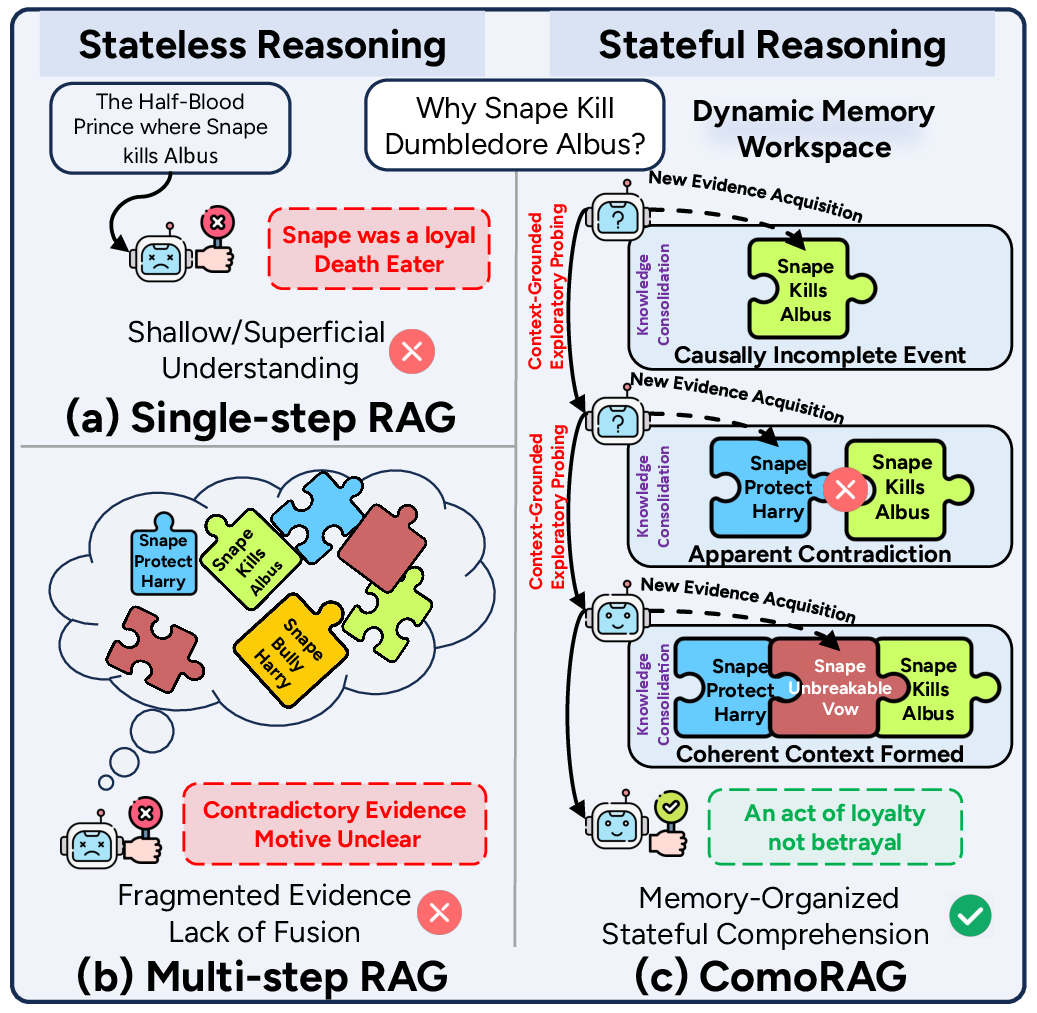

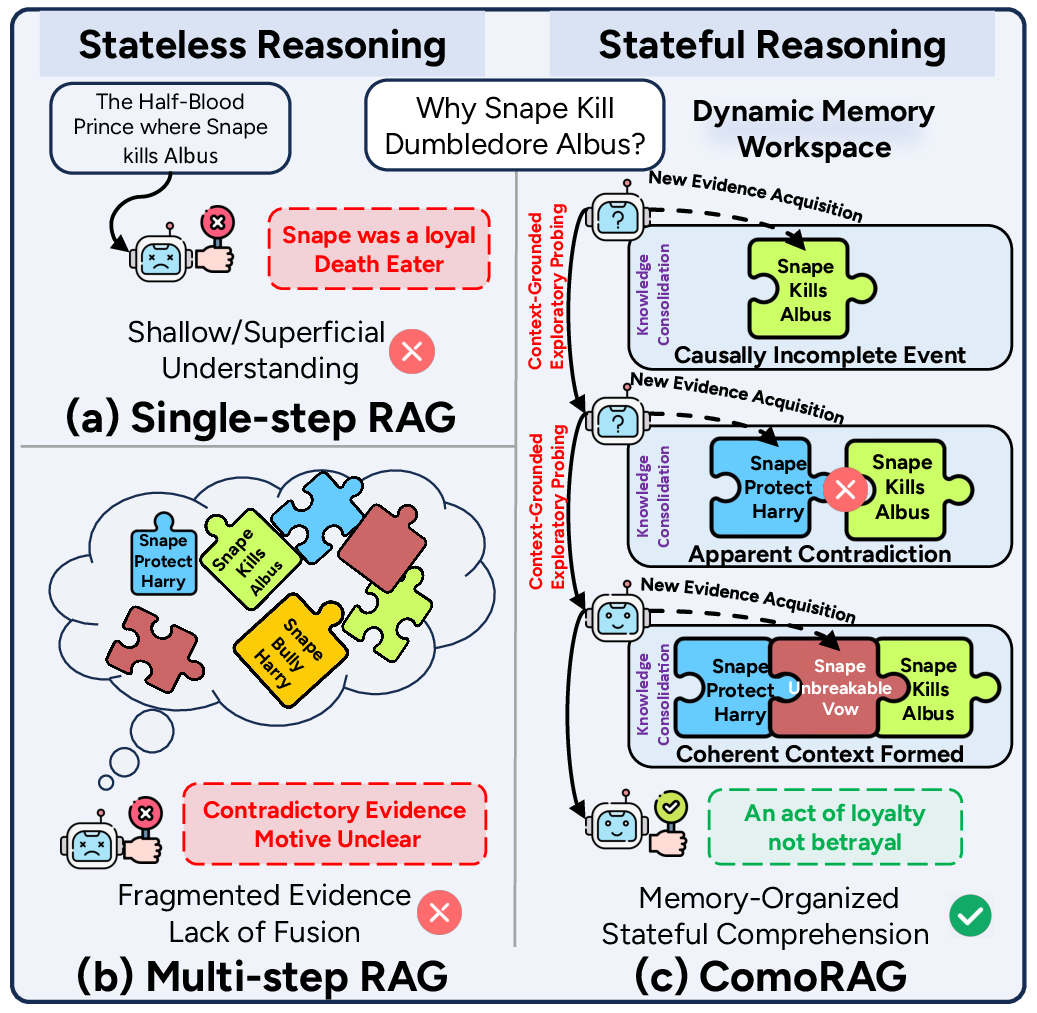

The paper "ComoRAG: A Cognitive-Inspired Memory-Organized RAG for Stateful Long Narrative Reasoning" introduces a framework for narrative comprehension over long stories and novels. LLMs struggle to reason over such extended contexts: their effective capacity diminishes as the input grows, and processing a full novel is computationally expensive. Retrieval-based approaches offer a practical alternative, yet they fall short because they are stateless and retrieve in a single step. ComoRAG aims to overcome these limitations by emulating human cognitive processes, enabling a dynamic interplay between acquiring new evidence and consolidating past knowledge.

Framework and Methodology

Hierarchical Knowledge Source

ComoRAG constructs a knowledge source with three layers, each loosely modeled on a different cognitive dimension of human memory:

- Veridical Layer: Stores factual evidence as raw text chunks and knowledge triples, which improves retrieval effectiveness.

- Semantic Layer: Abstracts thematic structure through semantic clustering, following the approach developed in the RAPTOR framework.

- Episodic Layer: Captures narrative flow and plotline through episodic representations, facilitating temporal and causal comprehension.
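The three layers above can be pictured as one container queried layer by layer. The following is a minimal sketch, not the authors' implementation: the `KnowledgeSource` class, its field names, and the `retrieve` helper are hypothetical, and plain token overlap stands in for whatever retriever the paper actually uses.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class KnowledgeSource:
    """Hypothetical container for the three layers described above."""
    # Veridical layer: raw text chunks plus (subject, relation, object) triples.
    chunks: List[str] = field(default_factory=list)
    triples: List[Tuple[str, str, str]] = field(default_factory=list)
    # Semantic layer: summaries of clusters of thematically related chunks.
    semantic_summaries: List[str] = field(default_factory=list)
    # Episodic layer: summaries that follow the order of the plot.
    episode_summaries: List[str] = field(default_factory=list)

    def layers(self) -> Dict[str, List[str]]:
        """Expose every layer as a list of searchable strings."""
        return {
            "veridical": self.chunks + [" ".join(t) for t in self.triples],
            "semantic": self.semantic_summaries,
            "episodic": self.episode_summaries,
        }


def _tokens(text: str) -> Set[str]:
    """Lowercased word set; a stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(source: KnowledgeSource, query: str, k: int = 2) -> Dict[str, List[str]]:
    """Return up to k items per layer, ranked by token overlap with the query."""
    q = _tokens(query)
    hits: Dict[str, List[str]] = {}
    for name, items in source.layers().items():
        ranked = sorted(items, key=lambda t: len(q & _tokens(t)), reverse=True)
        # Keep only items that share at least one token with the query.
        hits[name] = [t for t in ranked[:k] if q & _tokens(t)]
    return hits
```

Querying each layer separately keeps factual, thematic, and plot-level evidence distinguishable downstream, which is the point of having a layered source rather than one flat index.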

Metacognitive Regulation Loop

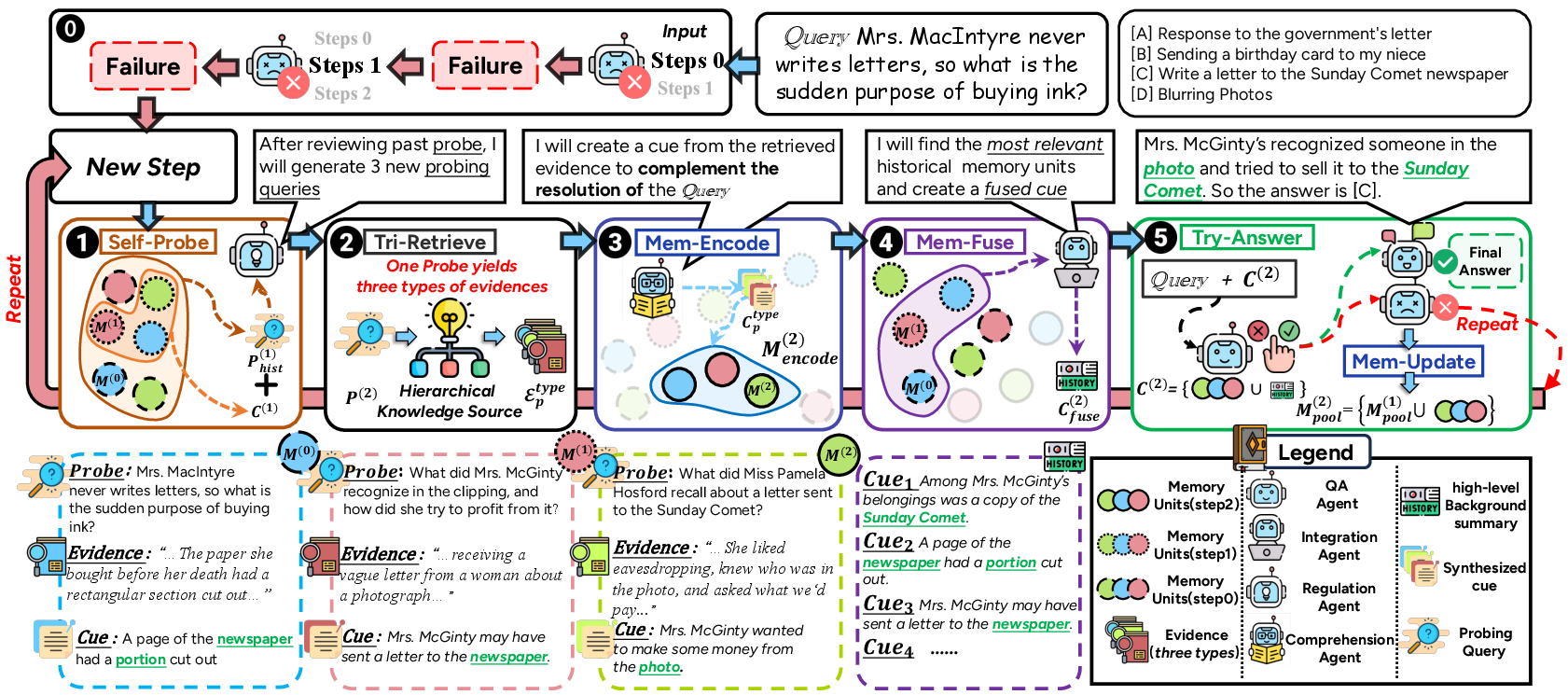

Central to ComoRAG is its Metacognitive Regulation Loop, comprising:

- Dynamic Memory Workspace: Memory units created after each retrieval operation serve as evolving knowledge states aiding deeper reasoning.

- Regulatory and Metacognitive Processes: The loop includes planning new probes strategically, retrieving evidence, and synthesizing memory cues, enabling iterative, stateful reasoning.

By iteratively probing and updating memory, ComoRAG aims to address inherent challenges in understanding complex narrative queries that require global plot comprehension.
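The loop described above can be sketched as a small control structure: probe, retrieve, record a memory unit, try to answer, and plan new probes on failure. This is an illustrative sketch under stated assumptions, not the paper's algorithm. `MemoryUnit`, `reasoning_loop`, and the four callables (`plan`, `retrieve`, `synthesize`, `try_answer`) are hypothetical names, and the toy functions below stand in for LLM and retriever calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class MemoryUnit:
    probe: str           # probing query that triggered the retrieval
    evidence: List[str]  # passages returned for that probe
    cue: str             # synthesized note on how the evidence bears on the question


def reasoning_loop(
    question: str,
    plan: Callable[[str, List[MemoryUnit]], List[str]],
    retrieve: Callable[[str], List[str]],
    synthesize: Callable[[str, str, List[str]], str],
    try_answer: Callable[[str, List[MemoryUnit]], Optional[str]],
    max_cycles: int = 5,
) -> Tuple[Optional[str], List[MemoryUnit]]:
    """Iterate until an answer is produced or the cycle budget is spent."""
    memory: List[MemoryUnit] = []
    probes = [question]  # the first cycle probes with the question itself
    for _ in range(max_cycles):
        for probe in probes:
            evidence = retrieve(probe)
            cue = synthesize(question, probe, evidence)
            memory.append(MemoryUnit(probe, evidence, cue))  # state persists across cycles
        answer = try_answer(question, memory)
        if answer is not None:
            return answer, memory
        probes = plan(question, memory)  # regulation: decide what to look for next
        if not probes:
            break
    return None, memory


# Toy stand-ins (assumptions for illustration only).
CORPUS = {
    "Who sent the letter Anna hid?": ["Anna hid a letter in the attic."],
    "Who wrote the letter in the attic?": ["The attic letter was signed by Viktor."],
}

def toy_retrieve(probe: str) -> List[str]:
    return CORPUS.get(probe, [])

def toy_synthesize(question: str, probe: str, evidence: List[str]) -> str:
    return " ".join(evidence)

def toy_try_answer(question: str, memory: List[MemoryUnit]) -> Optional[str]:
    for unit in memory:
        if "signed by " in unit.cue:
            return unit.cue.split("signed by ")[1].rstrip(".")
    return None

def toy_plan(question: str, memory: List[MemoryUnit]) -> List[str]:
    follow_up = "Who wrote the letter in the attic?"
    return [] if follow_up in {u.probe for u in memory} else [follow_up]


answer, memory = reasoning_loop(
    "Who sent the letter Anna hid?", toy_plan, toy_retrieve, toy_synthesize, toy_try_answer
)
print(answer, len(memory))  # Viktor 2
```

In the toy run, the first retrieval does not contain the answer, so the planner issues a follow-up probe and the second cycle resolves the question. A stateless, single-step retriever would stop after the first cycle.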

Figure 1: Comparison of RAG reasoning paradigms.

Experimental Evaluation

ComoRAG was evaluated on four narrative comprehension datasets, where it showed consistent relative gains of up to 11% over strong RAG baselines.

Performance Metrics and Analysis

(Table 1)

Table 1: Evaluation results on four long narrative comprehension datasets. ComoRAG consistently outperforms baselines.

Ablation Studies

A series of ablation studies confirmed the contribution of each component within ComoRAG:

- Hierarchical Knowledge Source: Removing the Veridical Layer led to a nearly 30% accuracy drop, underscoring its significance.

- Metacognition: The absence of this module resulted in significant performance degradation, revealing its essential role in managing dynamic memory.

- Regulation: Disabling this module affected retrieval efficiency, highlighting its importance in directing meaningful probing queries.

Future Directions and Conclusion

ComoRAG has several implications for long narrative reasoning in AI:

- Scalability and Generalization: The framework's cognitive-inspired loop efficiently adapts to different LLM backbones, improving baseline performance substantially.

- Application in Complex Narrative Tasks: Its dynamic and modular design facilitates a generalized approach to complex narrative comprehension, offering opportunities for integration in existing systems.

ComoRAG represents a promising step forward for retrieval-based systems, offering a new paradigm aligned with cognitive processes for stateful long-context reasoning. Future work may explore integrating stronger LLMs to further improve reasoning, and extending the framework to more diverse narrative structures.

In conclusion, ComoRAG offers a cognitively inspired, principled approach to retrieving and synthesizing information across long narratives, which makes it a notable advance in stateful reasoning with LLMs.