- The paper presents a novel multimodal agent architecture that integrates real-time visual and auditory streams into an entity-centric long-term memory for iterative reasoning.

- It runs two processes, memorization and control, trained with imitation learning and reinforcement learning respectively, and improves accuracy over the strongest baseline by up to 7.7%.

- The study introduces M3-Bench, a rigorous evaluation benchmark that demonstrates the agent's scalability and robust performance in complex, real-world video tasks.

M3-Agent: A Multimodal Agent with Long-Term Memory for Real-World Reasoning

Overview and Motivation

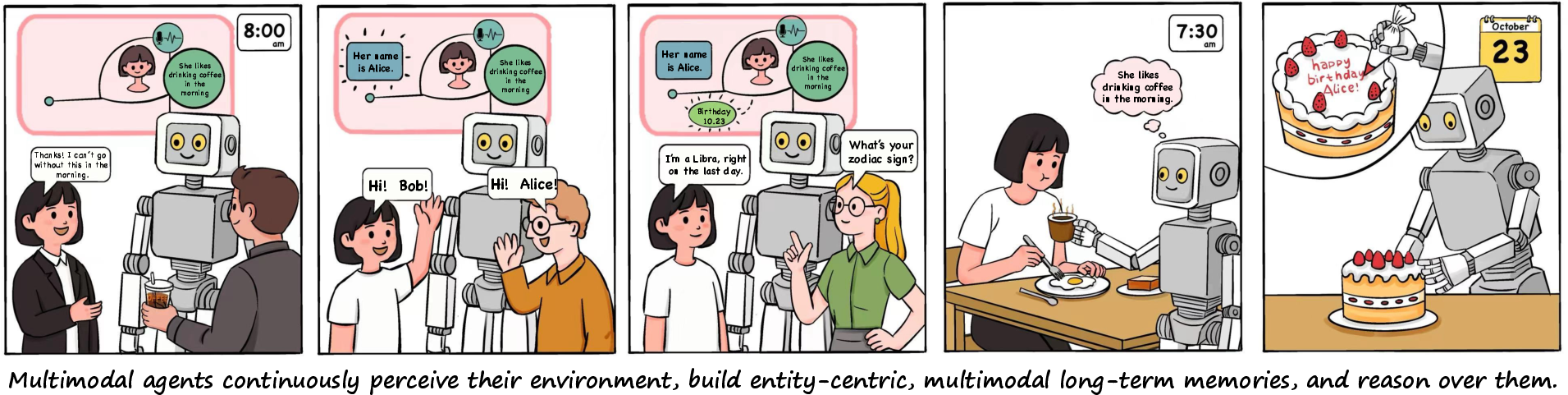

The paper presents M3-Agent, a multimodal agent architecture designed to process real-time visual and auditory streams, construct long-term memory, and perform iterative reasoning for complex tasks. The agent is evaluated on M3-Bench, a new long-video question answering (LVQA) benchmark comprising both robot-perspective and diverse web-sourced videos. The work addresses critical limitations in existing multimodal agents: scalability to arbitrarily long input streams, consistency in entity tracking, and the ability to accumulate and reason over world knowledge.

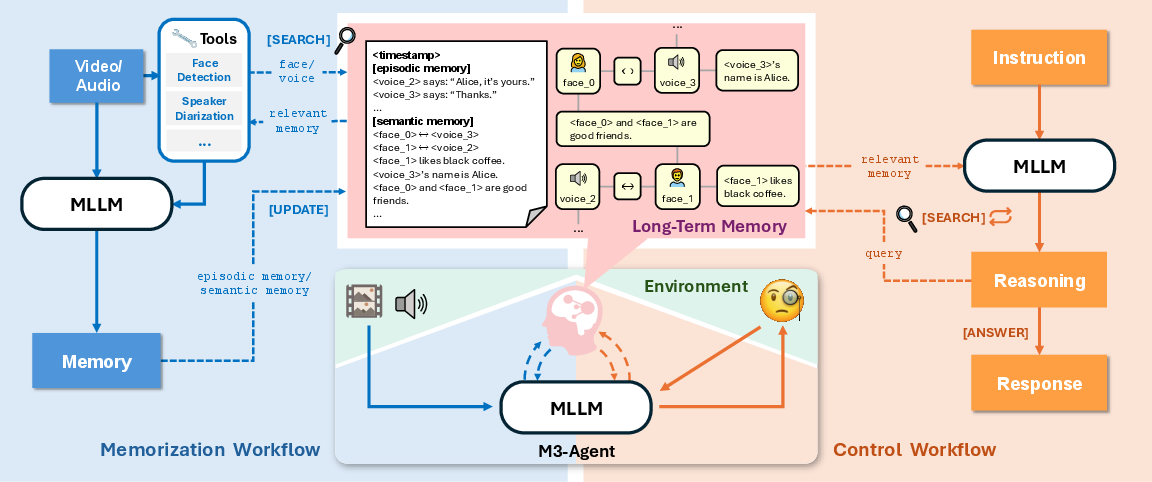

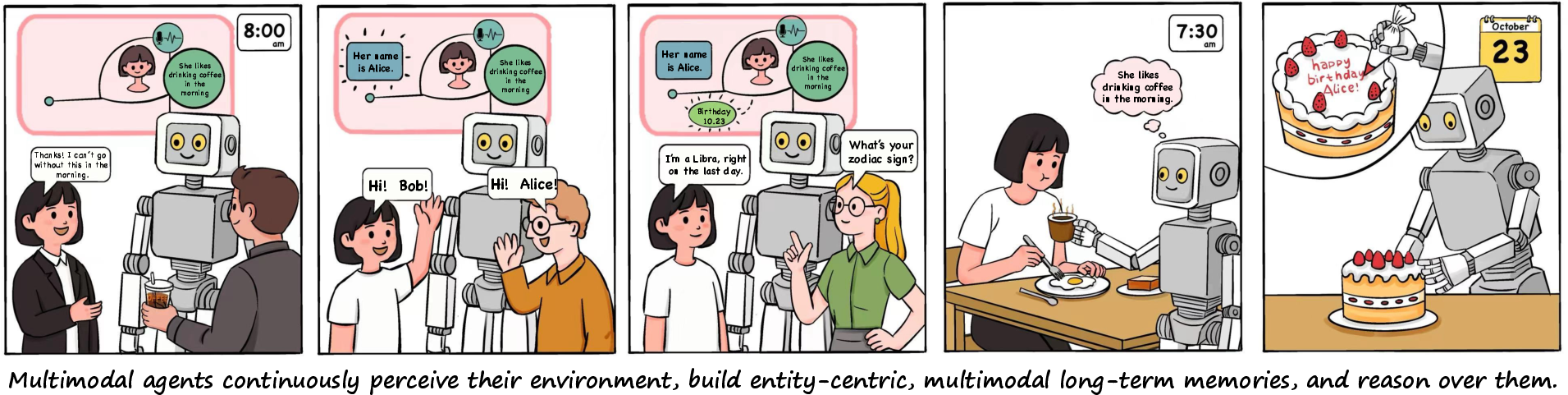

Figure 1: Illustration of M3-Agent’s dual processes: memorization (continuous multimodal perception and memory construction) and control (iterative reasoning and retrieval from long-term memory).

Architecture and Memory Design

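M3-Agent consists of a multimodal LLM (MLLM) and a multimodal long-term memory module. The system runs two parallel processes: memorization, which continuously perceives the visual and auditory input and builds long-term memory, and control, which reasons iteratively and retrieves from that memory.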

Entity-Centric Multimodal Memory

A key innovation is the entity-centric memory graph, which maintains persistent multimodal representations (faces, voices, textual knowledge) for each entity. This design enables robust identity tracking and cross-modal reasoning, overcoming the ambiguity and inconsistency inherent in language-only memory systems. The agent leverages external tools for facial recognition and speaker identification, associating extracted features with global entity IDs and updating the graph incrementally.
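The incremental update described above can be pictured with a minimal sketch. Everything in it is an illustrative assumption rather than the paper's implementation: the `Entity` and `EntityMemory` names, the cosine-similarity matching rule, and the `match_threshold` value are hypothetical, and the face and voice embeddings are taken to come from the external recognition tools.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Entity:
    """One node of the (hypothetical) memory graph: all evidence for one entity."""
    entity_id: int
    faces: list = field(default_factory=list)   # face embeddings
    voices: list = field(default_factory=list)  # voice embeddings
    facts: list = field(default_factory=list)   # textual knowledge


class EntityMemory:
    def __init__(self, match_threshold=0.8):
        self.entities = {}
        self.match_threshold = match_threshold  # assumed value, not from the paper
        self._next_id = 0

    def resolve(self, embedding, modality):
        """Map an embedding to a global entity ID, creating a new entity if nothing matches.

        `modality` must be "faces" or "voices".
        """
        best_id, best_sim = None, self.match_threshold
        for entity in self.entities.values():
            for stored in getattr(entity, modality):
                sim = cosine(embedding, stored)
                if sim >= best_sim:
                    best_id, best_sim = entity.entity_id, sim
        if best_id is None:
            best_id = self._next_id
            self._next_id += 1
            self.entities[best_id] = Entity(best_id)
        getattr(self.entities[best_id], modality).append(embedding)
        return best_id

    def link(self, keep_id, merge_id):
        """Merge two entities once a face and a voice are known to belong together."""
        if keep_id == merge_id:
            return keep_id
        merged = self.entities.pop(merge_id)
        kept = self.entities[keep_id]
        kept.faces += merged.faces
        kept.voices += merged.voices
        kept.facts += merged.facts
        return keep_id

    def add_fact(self, entity_id, text):
        """Attach a piece of textual knowledge to an entity."""
        self.entities[entity_id].facts.append(text)
```

Keying every observation to a global entity ID is what keeps a statement such as "likes coffee" attached to the same person across clips, whichever modality later re-identifies them.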

Memory Retrieval and Reasoning

Memory is accessed through modality-specific search functions (e.g., search_node, search_clip), implemented as maximum inner product search (MIPS) over embeddings. Rather than relying on single-turn retrieval-augmented generation (RAG), the agent uses reinforcement learning to optimize multi-turn reasoning and retrieval strategies. This approach yields higher task success rates and more reliable long-horizon reasoning.
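To make the retrieval step concrete, here is a minimal sketch of exact MIPS wrapped in a multi-turn loop. It is an illustration under assumptions, not the paper's code: `mips_search`, `answer_with_retrieval`, the `policy` and `embed` callables, and the `max_turns` limit are all hypothetical names, and a production system would likely use an approximate nearest-neighbor index instead of scoring every item.

```python
def inner_product(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def mips_search(query_vec, index, top_k=2):
    """Exact MIPS: score every stored embedding and return the top_k (key, score) pairs."""
    scored = [(key, inner_product(query_vec, vec)) for key, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def answer_with_retrieval(question, policy, embed, index, max_turns=5):
    """Multi-turn control loop (hypothetical).

    Each turn, `policy` sees the question plus everything retrieved so far and
    returns either ("search", query_text) or ("answer", answer_text).
    """
    context = []
    for _ in range(max_turns):
        action, payload = policy(question, context)
        if action == "answer":
            return payload, context
        for key, _score in mips_search(embed(payload), index):
            if key not in context:
                context.append(key)
    return None, context  # turn budget exhausted without an answer
```

The difference from single-turn RAG is the loop: the policy can inspect what a search returned and issue a refined query before committing to an answer, and that sequence of decisions is what the reinforcement learning stage optimizes.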

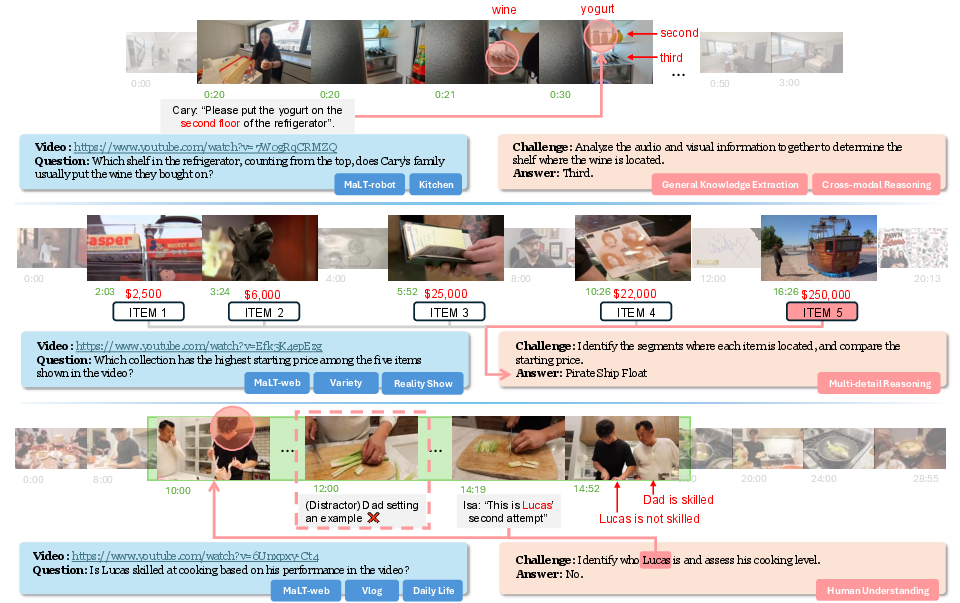

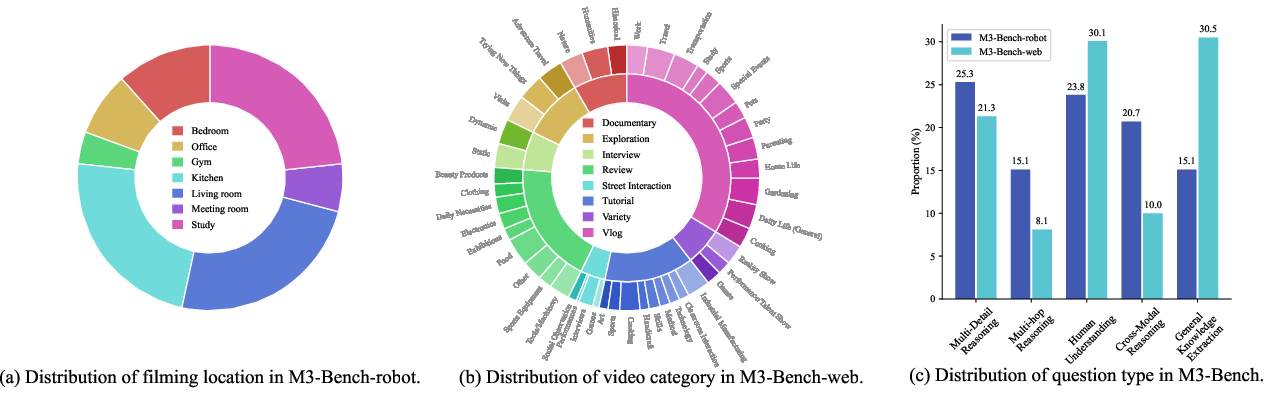

Benchmark: M3-Bench

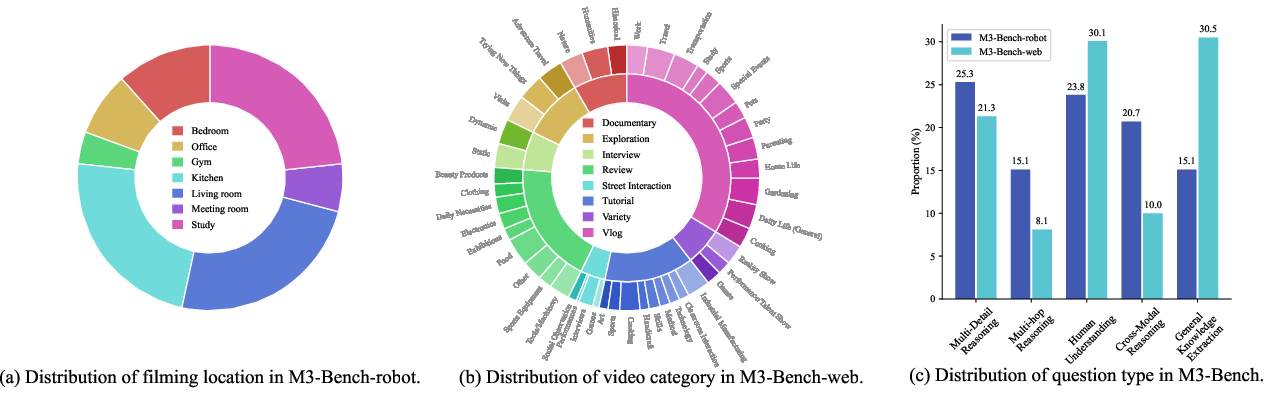

M3-Bench is introduced to rigorously evaluate memory-based reasoning in multimodal agents. It comprises:

- M3-Bench-robot: 100 real-world videos recorded from a robot’s perspective, simulating agent-centric perception in practical environments.

- M3-Bench-web: 920 web-sourced videos spanning diverse scenarios.

Each video is paired with open-ended QA tasks targeting five reasoning types: multi-detail, multi-hop, cross-modal, human understanding, and general knowledge extraction.

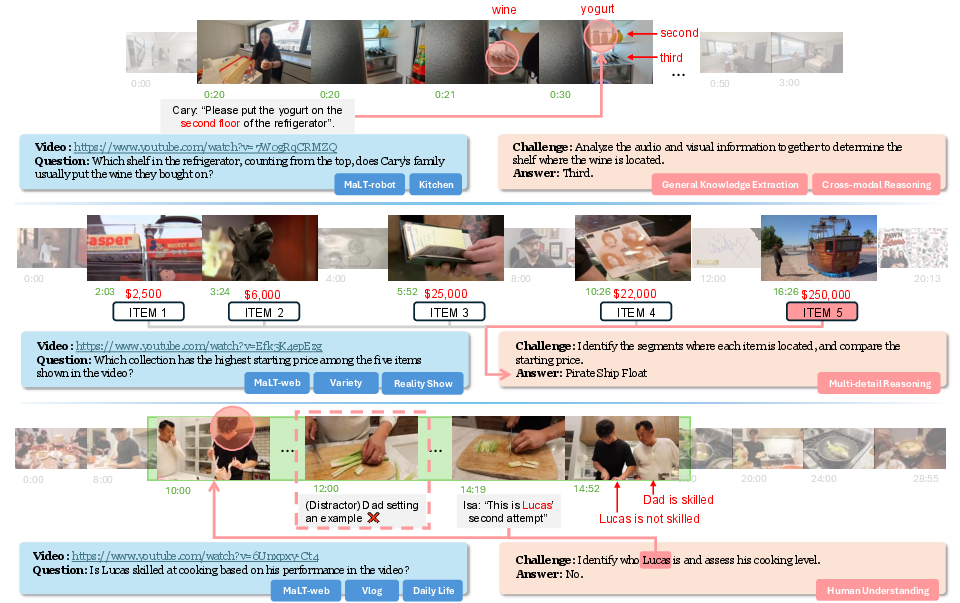

Figure 3: Examples from M3-Bench, illustrating the diversity and complexity of scenarios and QA tasks.

Figure 4: Statistical overview of M3-Bench, showing distribution of question types and video sources.

Training and Optimization

Memorization and control are handled by separate models, initialized from Qwen2.5-Omni (multimodal) and Qwen3 (reasoning), respectively. Memorization is trained via imitation learning on synthetic demonstration data, with episodic and semantic memory generated by hybrid prompting of Gemini-1.5-Pro and GPT-4o. Control is optimized using DAPO, a scalable RL algorithm, with rewards provided by GPT-4o-based automatic evaluation.
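As a rough illustration of how evaluator rewards could feed the RL update, the sketch below shows two ingredients of DAPO as publicly described: group-normalized advantages and a dynamic-sampling filter. It draws on the general DAPO recipe, not on details given here. The function names, the 0/1 reward encoding of the GPT-4o verdict, and the use of the population standard deviation are assumptions, and the clipped policy-gradient loss itself is omitted.

```python
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """Normalize the rewards of several sampled trajectories for one question.

    Each trajectory's advantage is its reward minus the group mean, divided by
    the group standard deviation (eps avoids division by zero).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def keep_group(rewards):
    """Dynamic-sampling-style filter: skip groups whose rewards are all equal,
    since every advantage would be zero and carry no learning signal."""
    return len(set(rewards)) > 1


# Assumed encoding: 1.0 if the evaluator judged the answer correct, else 0.0.
sampled_rewards = [1.0, 0.0, 1.0, 0.0]
if keep_group(sampled_rewards):
    advantages = group_advantages(sampled_rewards)
```

With this normalization, trajectories that answered correctly receive positive advantages and the others negative ones, so the update favors retrieval strategies that led to correct answers within the same question.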

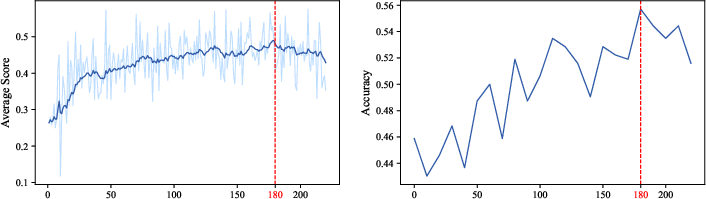

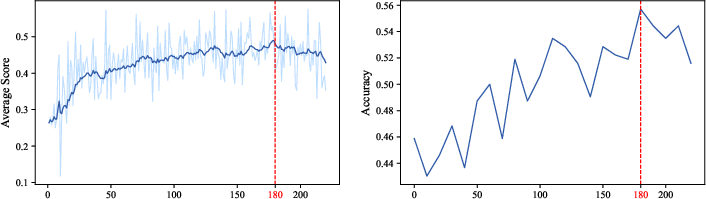

Figure 5: DAPO training curves, showing progressive improvement in average scores and accuracy.

Experimental Results

M3-Agent is evaluated against Socratic Models, online video understanding methods (MovieChat, MA-LMM, Flash-VStream), and agent baselines using prompted commercial models (Gemini-1.5-Pro, GPT-4o). Key findings:

- Accuracy: M3-Agent outperforms the strongest baseline (Gemini-GPT4o-Hybrid) by 6.7%, 7.7%, and 5.3% on M3-Bench-robot, M3-Bench-web, and VideoMME-long, respectively.

- Ablation: Removing semantic memory reduces accuracy by 17.1–19.2%; RL training and inter-turn instructions yield 8–10% improvements; disabling reasoning mode leads to 8.8–11.7% drops.

- Reasoning Types: M3-Agent excels in human understanding and cross-modal reasoning, demonstrating superior entity consistency and multimodal integration.

Implementation Considerations

- Scalability: The memory graph and retrieval mechanisms are designed for continuous, online operation, supporting arbitrarily long input streams.

- Resource Requirements: Training requires large-scale multimodal data and significant GPU resources (16–32 GPUs, 80 GB each).

- Deployment: The modular design allows integration with external perception tools and adaptation to various agent platforms (e.g., household robots, wearable devices).

- Limitations: Fine-grained detail retention and spatial reasoning remain challenging; future work should explore attention mechanisms for selective memorization and richer visual memory representations.

Implications and Future Directions

M3-Agent advances the state of the art in multimodal agent architectures by enabling persistent, consistent, and semantically rich long-term memory. The entity-centric multimodal graph and RL-optimized control provide a foundation for robust real-world reasoning. M3-Bench sets a new standard for evaluating memory-based reasoning in agent-centric scenarios.

Potential future developments include:

- Hierarchical and task-adaptive memory formation

- Integration of richer spatial and temporal representations

- Generalization to embodied agents and interactive environments

- Scalable deployment in real-world robotics and assistive systems

Conclusion

M3-Agent demonstrates that multimodal agents equipped with structured long-term memory and iterative reasoning can achieve superior performance in complex, real-world tasks. The architecture and benchmark provide a rigorous framework for advancing memory-based reasoning in AI agents, with strong empirical results and clear directions for future research.