Enhancing Long-term Video Understanding with Memory-Augmented Multimodal Models

Introduction to the Memory-Augmented Large Multimodal Model (MA-LMM)

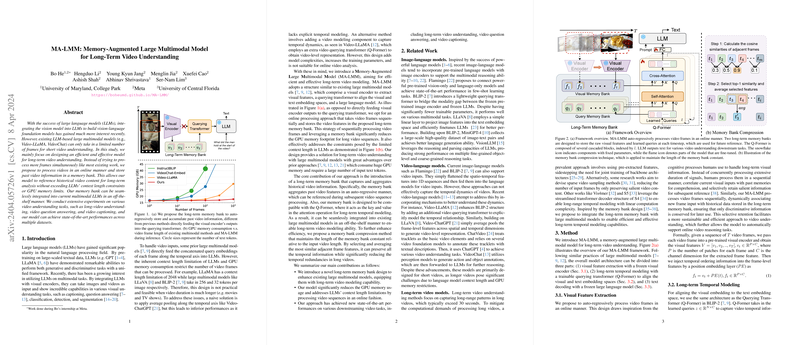

Integrating vision models into large language models (LLMs) has attracted significant interest, especially for long-term video understanding. Long videos are challenging because both the LLM's context length and the available GPU memory limit how much visual input can be processed at once. Existing multimodal models such as Video-LLaMA and VideoChat work well on short video segments but struggle with longer content. The recently proposed Memory-Augmented Large Multimodal Model (MA-LMM) addresses these issues with a memory bank mechanism that supports long-term video understanding without exceeding the LLM's context length or GPU memory limits.

Key Contributions

- MA-LMM processes videos online (sequentially), storing past video information in a memory bank so that it can reference historical content for long-term analysis.

- The long-term memory bank stores past video information auto-regressively and can be integrated into existing multimodal LLMs.

- Online processing significantly reduces GPU memory usage, and MA-LMM achieves state-of-the-art performance across multiple video understanding tasks.
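The online processing idea in the list above can be illustrated with a minimal Python sketch. It is not MA-LMM's implementation: `encode_frame` is a hypothetical stand-in for a visual encoder, `bank_size` is an illustrative parameter, and dropping the oldest entry when the bank is full is an assumption made only to keep the example short. The point is that the number of features held at once stays bounded regardless of video length.

```python
from collections import deque

def encode_frame(frame):
    """Hypothetical stand-in for a frozen visual encoder (returns a toy feature)."""
    return [float(frame), float(frame) ** 2]

def process_online(frames, bank_size=4):
    """Consume frames one at a time, keeping at most `bank_size` past features."""
    # Assumption: the oldest entry is dropped when the bank is full.
    memory_bank = deque(maxlen=bank_size)
    peak_resident = 0
    for frame in frames:
        feature = encode_frame(frame)
        # A real model would attend over the bank plus the current feature here.
        memory_bank.append(feature)
        peak_resident = max(peak_resident, len(memory_bank))
    return list(memory_bank), peak_resident

bank, peak = process_online(range(100), bank_size=4)
print(len(bank), peak)  # prints: 4 4
```

An offline approach would instead hold the features of all 100 frames at once, which is what drives context length and GPU memory up for long videos.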

Memory Bank Architecture

The memory bank plugs into the querying transformer (Q-Former) found in multimodal LLMs, where it serves as the keys and values of the attention operation to improve temporal modeling. The design stores and references past video information through two components: a visual memory bank for raw visual features and a query memory bank for input queries. Together they capture video information at increasing levels of abstraction.

- Visual Memory Bank: stores raw visual features extracted by a pre-trained visual encoder, so the model can explicitly attend to past visual information through cached memory.

- Query Memory Bank: accumulates the input queries from each timestep. This dynamic memory retains the model's understanding of the video up to the current moment and evolves through the cascaded Q-Former blocks during training.
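To make the "memory as key and value" idea concrete, here is a minimal sketch of one timestep in plain Python, with lists standing in for tensors. Several things are assumptions for illustration only: the `MemoryBanks` class and its `step` method are hypothetical names, the learned query/key/value projections of a real Q-Former are omitted, and the bank is allowed to grow without bound. The current queries first attend over the query bank, then over the visual bank.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, memory):
    """Scaled dot-product attention; `memory` supplies both keys and values."""
    dim = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(dim)
                  for key in memory]
        weights = softmax(scores)
        outputs.append([sum(w * value[j] for w, value in zip(weights, memory))
                        for j in range(dim)])
    return outputs

class MemoryBanks:
    """Hypothetical container for both banks (learned projections omitted)."""

    def __init__(self):
        self.visual = []  # raw visual features from all timesteps so far
        self.query = []   # input queries from all timesteps so far

    def step(self, frame_tokens, queries):
        # Cache the current inputs so later timesteps can refer back to them.
        self.query.extend(queries)
        self.visual.extend(frame_tokens)
        # Attend over past and current queries, then over cached visual features.
        refined = attend(queries, self.query)
        return attend(refined, self.visual)

banks = MemoryBanks()
first = banks.step([[1.0, 0.0]], [[0.5, 0.5]])
second = banks.step([[0.0, 1.0]], [[0.0, 4.0]])
print(len(banks.visual), len(banks.query))  # prints: 2 2
```

Because only the current queries are passed on, the output size per timestep is fixed by the number of queries, while the banks carry the history.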

Experimental Validation

MA-LMM was evaluated on several video understanding tasks and improved on prior state-of-the-art models. Specifically, it achieved substantial gains on the Long-term Video Understanding (LVU) benchmark, on the Breakfast and COIN datasets for long-video understanding, and on video question answering with the MSRVTT and MSVD datasets.

Theoretical and Practical Implications

Adding a memory bank to large multimodal models invites a rethinking of how these systems can efficiently process and reason about long-term video content. By emulating human cognitive processes (sequential processing of visual inputs, correlation with past memories, and selective retention of salient information), MA-LMM represents a shift towards more sustainable and efficient long-term video understanding. The model addresses current limitations in processing long video sequences and also opens avenues for applications that require real-time, long-duration video analysis.
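Selective retention can be sketched as a way to keep the memory bank at a fixed length. The text above does not specify a mechanism, so the scheme below is an assumption for illustration: when the bank exceeds `max_len`, the two most similar adjacent entries (by cosine similarity) are averaged into one. The function names `cosine` and `compress` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0

def compress(bank, max_len):
    """Merge the most similar adjacent entries until len(bank) <= max_len."""
    bank = [list(v) for v in bank]  # copy so the caller's bank is untouched
    while len(bank) > max(max_len, 1):
        sims = [cosine(bank[i], bank[i + 1]) for i in range(len(bank) - 1)]
        i = sims.index(max(sims))  # most redundant adjacent pair
        merged = [(x + y) / 2 for x, y in zip(bank[i], bank[i + 1])]
        bank[i:i + 2] = [merged]   # replace the pair with its average
    return bank

features = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]
compact = compress(features, max_len=2)
print(len(compact))  # prints: 2
```

In this toy input the first two entries are nearly identical, so they are merged and the distinct third entry is kept, preserving temporal order while bounding memory.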

Future Directions

Extending MA-LMM, for example by integrating video- or clip-based visual encoders or by pre-training on large-scale video-text datasets, could yield further improvements. More advanced LLM architectures could also boost performance on complex video understanding tasks.

Conclusion

MA-LMM is a significant step toward effective long-term video understanding, offering a scalable and efficient solution. Its architecture, built around the long-term memory bank, could benefit a range of applications that rely on deep video understanding.