- The paper demonstrates that augmenting KG-RAG systems with a metacognitive Perceive-Evaluate-Adjust cycle significantly improves retrieval quality and addresses cognitive blindness.

- The methodology employs iterative, path-aware refinements and targeted restarts, yielding superior accuracy and explanation quality across multiple datasets.

- Experimental results validate MetaKGRAG’s effectiveness compared to leading LLM baselines, showing robust performance in diverse domains.

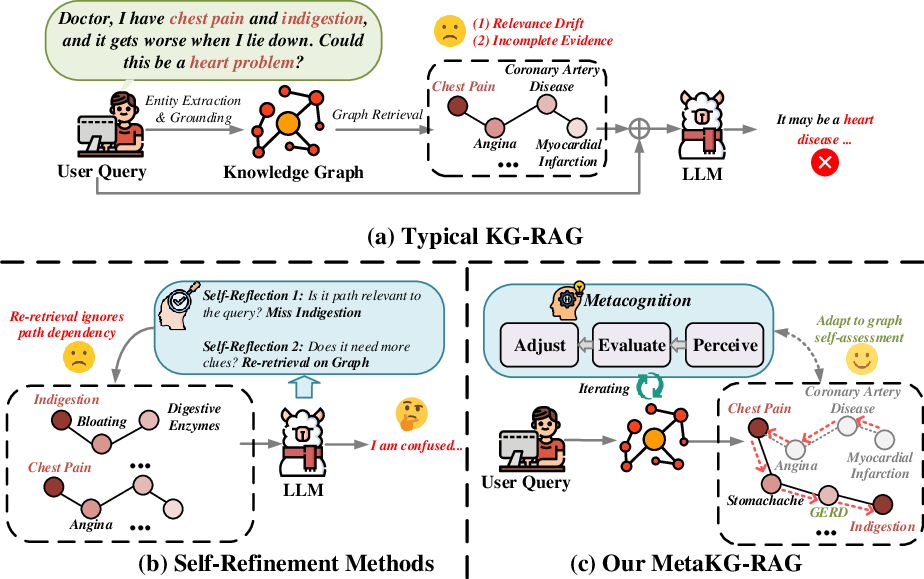

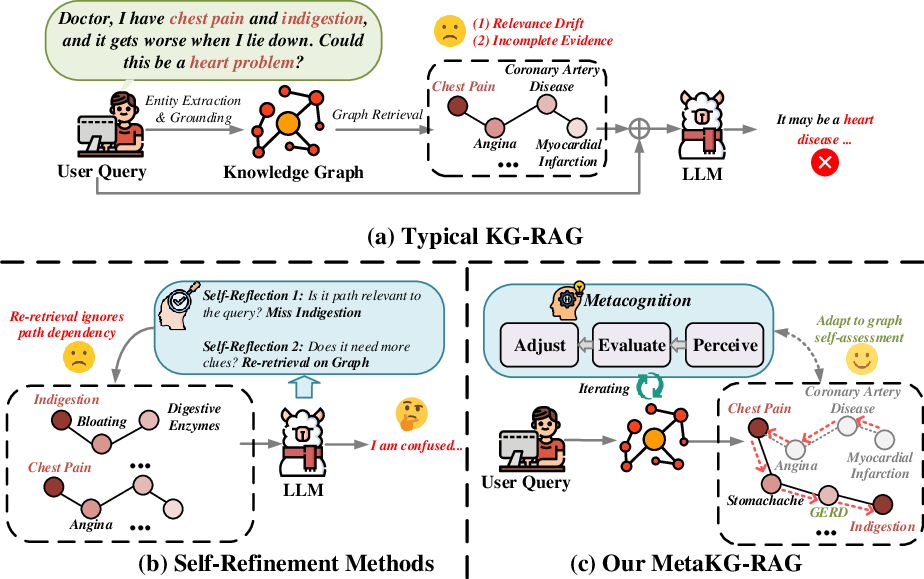

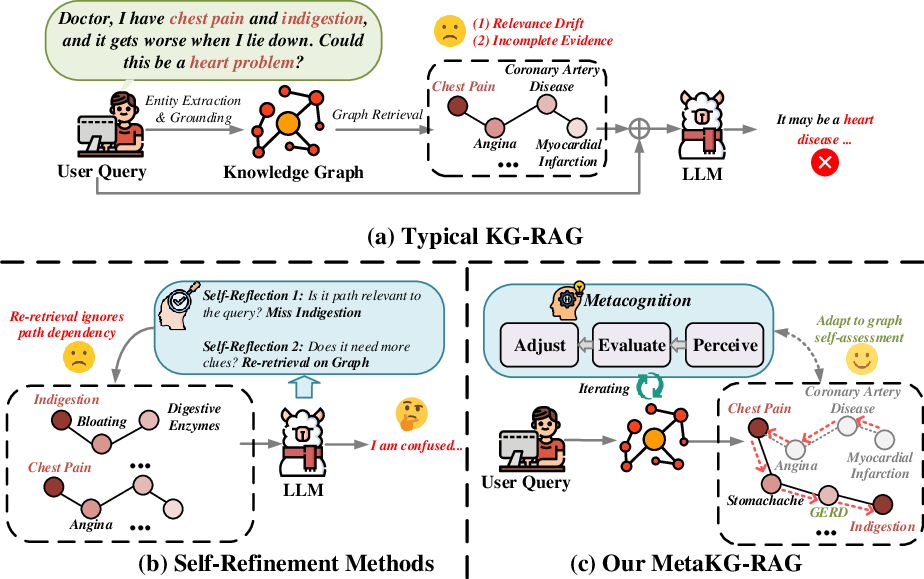

The paper presents a novel approach for enhancing the performance of Retrieval-Augmented Generation (RAG) using metacognitive processes. This approach, termed Metacognitive Knowledge Graph Retrieval-Augmented Generation (MetaKGRAG), addresses the inherent limitations of traditional open-loop Knowledge Graph-based Retrieval-Augmented Generation (KG-RAG) systems that suffer from cognitive blindness. MetaKGRAG introduces a Perceive-Evaluate-Adjust cycle inspired by human metacognition, enabling tailored path-aware refinement during graph exploration (Figure 1).

Figure 1: Comparison of the KG-RAG pipeline, self-refinement methods, and our MetaKGRAG. (a) Typical KG-RAG suffers from cognitive blindness, leading to relevance drift and incomplete evidence. (b) Self-refinement struggles to adapt to KG-RAG because it overlooks the path dependency of graph exploration. (c) Our MetaKGRAG achieves graph-based self-cognition through a metacognitive cycle.

Introduction

Knowledge Graph-based Retrieval-Augmented Generation (KG-RAG) frameworks use structured knowledge to strengthen the reasoning of LLMs. However, typical KG-RAG methods operate in an open-loop manner: they explore the graph in a single pass, with no feedback loop that would let them revisit and correct a mistaken path. This cognitive blindness often results in relevance drift and incomplete evidence. Existing text-based self-refinement techniques work well for document-level retrieval but do not account for the path-dependent nature of graph exploration. MetaKGRAG is proposed as a framework, inspired by human metacognition, that addresses these issues with a Perceive-Evaluate-Adjust cycle.
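To make the open-loop failure mode concrete, here is a minimal Python sketch of single-pass greedy graph exploration. The toy graph, the local relevance scores, and the function name `open_loop_explore` are hypothetical illustrations, not taken from the paper. Each hop commits to the locally best neighbour, and because nothing inspects the finished path, an early wrong turn (here toward "headache") is never revisited, so the evidence about stomach bleeding is never reached.

```python
from typing import Callable, Dict, List, Set

# Hypothetical toy knowledge graph: entity -> neighbouring entities.
GRAPH: Dict[str, List[str]] = {
    "aspirin": ["headache", "cox_inhibition"],
    "headache": ["migraine", "caffeine"],
    "migraine": ["triptan"],
    "cox_inhibition": ["stomach_bleeding"],
}

# Hypothetical local relevance of each entity to the query
# "What adverse effects does aspirin cause?" (surface similarity only).
LOCAL_SCORE: Dict[str, float] = {
    "headache": 0.9, "cox_inhibition": 0.6, "migraine": 0.5,
    "caffeine": 0.4, "triptan": 0.3, "stomach_bleeding": 0.95,
}


def open_loop_explore(graph: Dict[str, List[str]], start: str,
                      score: Callable[[str], float], max_hops: int = 3) -> List[str]:
    """Single-pass greedy walk: each hop takes the best-scoring unvisited
    neighbour. Nothing checks the finished path, so a wrong turn is final."""
    path = [start]
    visited: Set[str] = {start}
    current = start
    for _ in range(max_hops):
        candidates = [n for n in graph.get(current, []) if n not in visited]
        if not candidates:
            break
        current = max(candidates, key=score)
        path.append(current)
        visited.add(current)
    return path


path = open_loop_explore(GRAPH, "aspirin", lambda n: LOCAL_SCORE.get(n, 0.0))
print(path)  # ['aspirin', 'headache', 'migraine', 'triptan']
```

The highest-scoring entity, `stomach_bleeding`, is only reachable through `cox_inhibition`, which the greedy walk passes over at the first hop.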


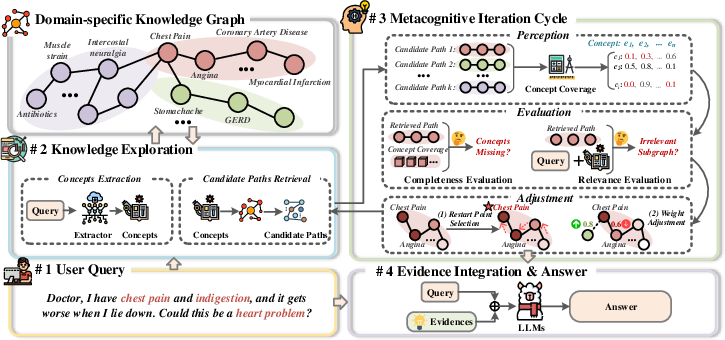

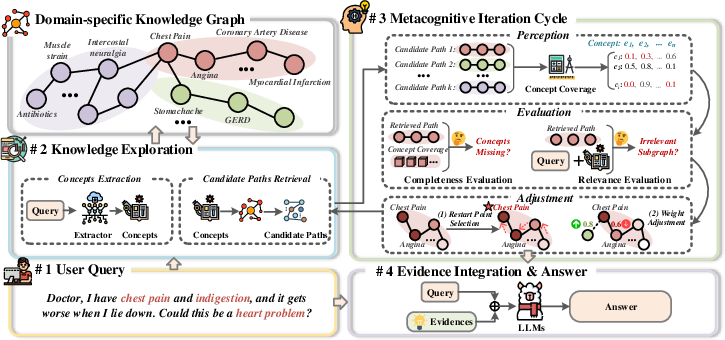

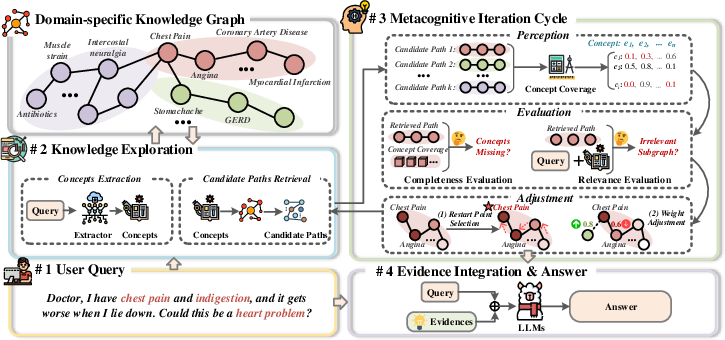

MetaKGRAG is built around a metacognitive cycle tailored to graph exploration: the Perceive-Evaluate-Adjust cycle (Figure 2). The cycle makes the KG-RAG pipeline aware of its own retrieval, so that it can reflect on and adjust the evidence path while generating it, turning graph exploration into a path-aware process.

Figure 2: An overview of our MetaKGRAG framework. It iteratively refines graph exploration via a path-aware Perceive-Evaluate-Adjust cycle to address cognitive blindness, which manifests as relevance drift and incomplete evidence.
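The closed-loop control flow of the cycle can be sketched as follows. This is a hypothetical Python skeleton, not the authors' implementation: `metacognitive_loop`, the `Diagnosis` record, and the four pluggable callables are names introduced here for illustration, and `max_iters` stands in for the maximum iteration number.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Diagnosis:
    ok: bool                                          # path covers the key aspects
    missing: List[str] = field(default_factory=list)  # aspects still uncovered


def metacognitive_loop(
    explore: Callable[[], List[str]],
    perceive: Callable[[List[str]], Set[str]],
    evaluate: Callable[[Set[str]], Diagnosis],
    adjust: Callable[[List[str], Diagnosis], List[str]],
    max_iters: int = 3,
) -> List[str]:
    """Hypothetical closed-loop wrapper around graph exploration."""
    path = explore()                     # initial (open-loop) evidence path
    for _ in range(max_iters):
        perceived = perceive(path)       # Perceive: what does the path cover?
        diagnosis = evaluate(perceived)  # Evaluate: what is still missing?
        if diagnosis.ok:
            return path                  # stop once the diagnosis is clean
        path = adjust(path, diagnosis)   # Adjust: path-aware re-search
    return path                          # budget exhausted; last path is unchecked


# Toy usage: node "x" covers aspect "a", node "y" covers aspect "b".
COVERS = {"x": {"a"}, "y": {"b"}}
REQUIRED = {"a", "b"}

result = metacognitive_loop(
    explore=lambda: ["x"],
    perceive=lambda p: set().union(*(COVERS[n] for n in p)),
    evaluate=lambda seen: Diagnosis(ok=REQUIRED <= seen, missing=sorted(REQUIRED - seen)),
    adjust=lambda p, d: p + ["y"],
)
print(result)  # ['x', 'y']
```

Keeping the stages as separate callables mirrors the cycle's structure: the loop itself only decides whether to stop or to hand the diagnosis to the adjustment step.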

In the Perceive stage, the system generates an initial evidence path starting from a high-confidence entity and assesses how well the path covers the query's key concepts. The Evaluate stage then diagnoses specific deficiencies in the path's completeness or relevance. In the Adjust stage, problematic nodes are identified with a path-dependent global support score, which guides re-search: restart points are selected on the flawed path so that exploration is redirected toward high-support entities, keeping each correction connected to the existing trajectory. Because the cycle is closed-loop, refinement repeats until the diagnosis indicates that the path sufficiently covers the query's key aspects (Figure 2).
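The three stages can be illustrated end to end on a small toy knowledge graph. The following Python sketch rests on several assumptions: the per-entity concept relevance scores, the threshold `TAU` (a stand-in for the concept relevance threshold), and the `support` formula (the best gain toward still-missing concepts reachable in one hop from a path node) are hypothetical choices, not the paper's definition of path-dependent global support. Restarting from a node already on the path is what keeps each correction connected to the existing trajectory.

```python
from typing import Dict, List, Set

# Hypothetical toy KG and per-entity concept relevance scores in [0, 1].
GRAPH: Dict[str, List[str]] = {
    "aspirin": ["headache", "cox_inhibition"],
    "headache": ["migraine", "caffeine"],
    "migraine": ["triptan"],
    "cox_inhibition": ["stomach_bleeding"],
}
REL: Dict[str, Dict[str, float]] = {
    "aspirin": {"drug": 0.9}, "headache": {"drug": 0.2},
    "caffeine": {"drug": 0.4}, "triptan": {"drug": 0.5},
    "cox_inhibition": {"mechanism": 0.8},
    "stomach_bleeding": {"adverse_effect": 0.9},
}
QUERY_CONCEPTS: Set[str] = {"drug", "mechanism", "adverse_effect"}
TAU = 0.5  # assumed concept relevance threshold


def missing_concepts(path: List[str], tau: float = TAU) -> Set[str]:
    """Perceive + Evaluate: query concepts that no path node covers at >= tau."""
    covered = {c for n in path for c, r in REL.get(n, {}).items() if r >= tau}
    return QUERY_CONCEPTS - covered


def off_path_neighbours(node: str, path: List[str]) -> List[str]:
    on_path = set(path)
    return [n for n in GRAPH.get(node, []) if n not in on_path]


def gain(entity: str, missing: Set[str]) -> float:
    """Relevance of an entity to the concepts the path still lacks."""
    return sum(REL.get(entity, {}).get(c, 0.0) for c in missing)


def support(node: str, path: List[str], missing: Set[str]) -> float:
    """Hypothetical path-dependent support of a path node."""
    return max((gain(n, missing) for n in off_path_neighbours(node, path)), default=0.0)


def adjust(path: List[str], missing: Set[str]) -> List[str]:
    """Adjust: restart at the highest-support path node, drop the nodes after
    it, and extend toward its most helpful off-path neighbour."""
    scores = [support(n, path, missing) for n in path]
    i = max(range(len(path)), key=scores.__getitem__)
    if scores[i] <= 0.0:
        return path  # no neighbour helps with the missing concepts; keep the path
    best = max(off_path_neighbours(path[i], path), key=lambda n: gain(n, missing))
    return path[: i + 1] + [best]


path = ["aspirin", "headache", "migraine", "triptan"]  # drifted open-loop path
for _ in range(3):  # assumed maximum iteration number
    missing = missing_concepts(path)
    if not missing:
        break
    path = adjust(path, missing)
print(path)  # ['aspirin', 'cox_inhibition', 'stomach_bleeding']
```

Starting from the drifted path, the first adjustment restarts at `aspirin` and moves to `cox_inhibition`; the second restarts at `cox_inhibition` and reaches `stomach_bleeding`, after which no query concept is missing.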


Experimental Evaluation

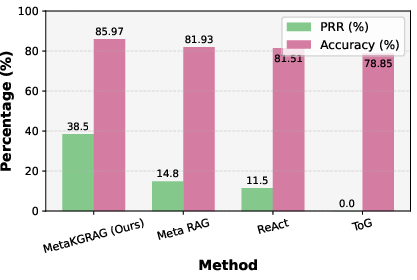

To validate MetaKGRAG's effectiveness, the framework was benchmarked on five datasets covering the medical, legal, and commonsense reasoning domains. It was compared against strong LLM baselines such as GPT-4o and Claude 3.5 Sonnet, against KG-RAG frameworks, and against self-refinement methods such as Chain-of-Thought and MetaRAG.

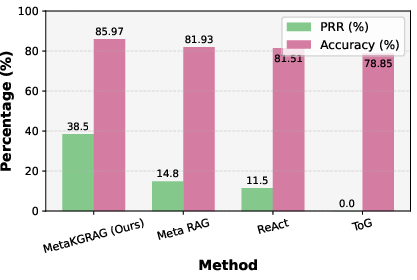

As shown in Figure 1, experimental results on the ExplainCPE and JEC-QA datasets demonstrated substantial accuracy improvements over the LLM baselines as well as over existing retrieval and self-refinement methods. Across the five datasets, which span diverse domains and two languages, MetaKGRAG consistently achieved state-of-the-art performance on both multiple-choice and generative QA tasks.

Figure 3: Path refinement rate (PPR) and accuracy of different methods on ExplainCPE.

Moreover, Figure 4 shows that MetaKGRAG remained effective across varying hyperparameter settings on the ExplainCPE and JEC-QA datasets, indicating low sensitivity to core parameters such as the concept relevance threshold and the maximum iteration number. This robustness suggests that the metacognitive cycle reliably helps the system self-correct deficient paths over iterations, and supports the claim that the perception-driven diagnosis and the targeted fix are critical to the performance gains.

Conclusion

MetaKGRAG advances KG-RAG by addressing cognitive blindness while explicitly accounting for the path dependency of knowledge graph exploration, achieving superior retrieval performance across multiple domains. The framework yields significant gains in accuracy and explanation quality, underscoring the importance of path-aware metacognitive cycles for refining evidence over structured knowledge graphs. Future work will explore learnable components for the adjustment strategies to further improve metacognitive adaptability, and will extend the framework to low-resource settings and alternative graph structures.