Mindful-RAG: A Study of Points of Failure in Retrieval Augmented Generation

Overview of the Paper

The paper "Mindful-RAG: A Study of Points of Failure in Retrieval Augmented Generation" examines where current Retrieval-Augmented Generation (RAG) systems fail when LLMs are used for Knowledge Graph (KG)-based question-answering (QA) tasks. Authored by Garima Agrawal, Tharindu Kumarage, Zeyad Alghamdi, and Huan Liu from Arizona State University, the paper identifies critical failure points in these systems. It also proposes a method, termed Mindful-RAG, that addresses them through intent-driven and contextually coherent knowledge retrieval.

Identified Failure Points

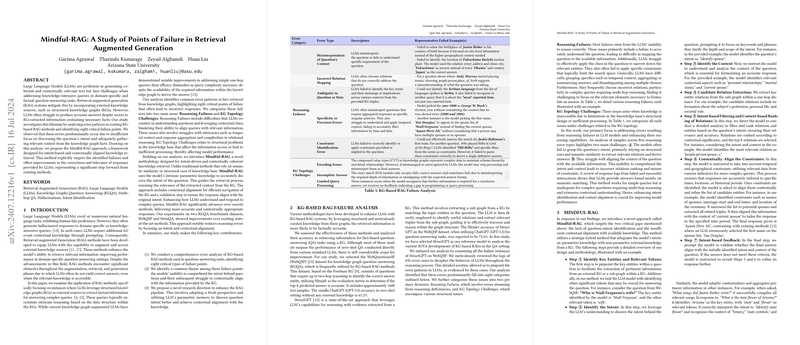

The paper identifies eight critical failure points in existing KG-based RAG systems, which are categorized under two primary headings: Reasoning Failures and KG Topology Challenges.

- Reasoning Failures:

  - Misinterpretation of Question's Context: Errors in which the LLM misreads the question's intent, often because it focuses on the wrong granularity of information.

  - Incorrect Relation Mapping: Errors arising from selecting relations that do not actually answer the question.

  - Ambiguity in Question or Data: Failure to adequately interpret key terms or contextual nuances.

  - Specificity or Precision Errors: Misinterpretation of questions that require aggregated responses or involve a temporal context.

  - Constraint Identification Errors: Inability to narrow down the search space using the constraints stated or implied in the question.

- KG Topology Challenges:

  - Encoding Issues: Misinterpretation of compound data types in the KG, leading to misaligned answers.

  - Incomplete Answer: Errors that arise because exact answers are required, while the system often returns partial or imprecise responses.

  - Limited Query Processing: Instances where the model recognizes the need for additional information but fails to correctly request or process it.
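For error analysis, the taxonomy above can be encoded as a small data structure, and annotated failures can then be tallied by category. The following is a hypothetical sketch. The enum member names are illustrative labels for the eight failure points listed above. The annotations are invented for the example and are not results from the paper.

```python
from collections import Counter
from enum import Enum


class Category(Enum):
    """The two top-level headings used to group failure points."""
    REASONING = "Reasoning Failures"
    KG_TOPOLOGY = "KG Topology Challenges"


class FailurePoint(Enum):
    """The eight failure points, each tagged with its category (illustrative names)."""
    MISINTERPRETED_CONTEXT = ("Misinterpretation of Question's Context", Category.REASONING)
    INCORRECT_RELATION = ("Incorrect Relation Mapping", Category.REASONING)
    AMBIGUITY = ("Ambiguity in Question or Data", Category.REASONING)
    SPECIFICITY = ("Specificity or Precision Errors", Category.REASONING)
    CONSTRAINT = ("Constraint Identification Errors", Category.REASONING)
    ENCODING = ("Encoding Issues", Category.KG_TOPOLOGY)
    INCOMPLETE_ANSWER = ("Incomplete Answer", Category.KG_TOPOLOGY)
    LIMITED_QUERY = ("Limited Query Processing", Category.KG_TOPOLOGY)

    def __init__(self, label, category):
        # Enum unpacks each tuple value into these two attributes.
        self.label = label
        self.category = category


def tally_by_category(annotations):
    """Count annotated failures per top-level category."""
    return Counter(point.category for point in annotations)


# Hypothetical annotations for four failed questions (not data from the paper).
annotations = [
    FailurePoint.INCORRECT_RELATION,
    FailurePoint.CONSTRAINT,
    FailurePoint.ENCODING,
    FailurePoint.MISINTERPRETED_CONTEXT,
]
counts = tally_by_category(annotations)
print(counts[Category.REASONING], counts[Category.KG_TOPOLOGY])  # 3 1
```

Each failure point stores its category directly, so per-category statistics need only a single pass over the annotations.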

Proposed Method: Mindful-RAG

To address these failures, the authors propose "Mindful-RAG," which harnesses the intrinsic parametric knowledge of LLMs to accurately discern question intent and ensure contextual alignment with the KG.

Core steps of Mindful-RAG include:

- Identify Key Entities and Relevant Tokens: Pinpointing significant elements within a question to extract relevant information from the KG.

- Identify the Intent: Utilizing the LLM to discern the underlying intent of the question.

- Identify the Context: Analyzing the query's scope and the relevant contextual clues.

- Candidate Relation Extraction: Extracting and ranking key entity relations from the KG.

- Intent-based Filtering and Context-based Ranking of Relations: Filtering and ranking relations to ensure their relevance and precision.

- Contextually Align the Constraints: Incorporating temporal and geographical constraints to tailor the response appropriately.

- Intent-based Feedback: Validating the final answer against the identified intent and context to ensure accuracy.
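The seven steps above can be chained into a single pipeline. The following is a minimal offline sketch, not the authors' implementation. Every function name, the toy KG, and the keyword table are hypothetical. Simple keyword and regex heuristics stand in for the LLM calls that Mindful-RAG relies on for intent detection, context analysis, ranking, and feedback.

```python
import re

# Hypothetical toy KG: entity -> list of (relation, object, qualifiers).
KG = {
    "Barack Obama": [
        ("people.person.spouse_s", "Michelle Obama", {"from": 1992}),
        ("people.person.place_of_birth", "Honolulu", {}),
        ("government.politician.government_positions_held",
         "President of the United States", {"from": 2009, "to": 2017}),
        ("government.politician.government_positions_held",
         "U.S. Senator from Illinois", {"from": 2005, "to": 2008}),
    ],
}

# Stand-in for LLM intent detection: trigger word -> relation fragment (hypothetical).
INTENT_KEYWORDS = {"position": "positions_held", "born": "place_of_birth", "married": "spouse"}


def identify_entities(question, kg):
    # Step 1: key entities are KG nodes whose name appears in the question.
    return [name for name in kg if name.lower() in question.lower()]


def identify_intent(question):
    # Step 2: map a trigger word to the relation fragment the question asks about.
    for keyword, fragment in INTENT_KEYWORDS.items():
        if keyword in question.lower():
            return fragment
    return None


def identify_context(question):
    # Step 3: extract a temporal constraint (a four-digit year), if present.
    match = re.search(r"\b(1[89]\d{2}|20\d{2})\b", question)
    return {"year": int(match.group(1))} if match else {}


def candidate_relations(entities, kg):
    # Step 4: gather every one-hop fact attached to the key entities.
    return [fact for entity in entities for fact in kg[entity]]


def filter_and_rank(facts, intent, question):
    # Step 5: keep facts whose relation matches the intent, then rank by word
    # overlap between the relation name and the question (stable sort).
    words = set(re.findall(r"\w+", question.lower()))
    kept = [f for f in facts if intent and intent in f[0]]
    return sorted(kept, key=lambda f: -len(words & set(f[0].replace(".", "_").split("_"))))


def align_constraints(facts, context):
    # Step 6: drop facts whose validity interval excludes the requested year.
    year = context.get("year")
    if year is None:
        return facts
    return [f for f in facts if f[2].get("from", year) <= year <= f[2].get("to", year)]


def intent_feedback(answers, intent):
    # Step 7: accept only a non-empty answer produced under a recognized intent;
    # None signals that the question should be re-examined.
    return answers if intent is not None and answers else None


def mindful_rag(question, kg):
    entities = identify_entities(question, kg)
    intent = identify_intent(question)
    context = identify_context(question)
    facts = candidate_relations(entities, kg)
    ranked = filter_and_rank(facts, intent, question)
    aligned = align_constraints(ranked, context)
    return intent_feedback([obj for _, obj, _ in aligned], intent)


print(mindful_rag("What position did Barack Obama hold in 2006?", KG))
# ['U.S. Senator from Illinois']
```

The example shows why the constraint step matters. Intent filtering alone returns both positions. Only aligning the year 2006 with each fact's validity interval leaves the one position Obama held at that time.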

Experimental Results

The experiments were conducted on two benchmark datasets: WebQSP and MetaQA. The authors compared Mindful-RAG against several baseline methods, including StructGPT, KAPING, Retrieve-Rewrite-Answer (RRA), and Reasoning on Graphs (RoG). Mindful-RAG demonstrated superior performance, achieving Hits@1 accuracy of 84% on WebQSP and 82% on MetaQA (3-hop).
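Hits@1 is commonly defined as the fraction of questions for which the system's top-ranked answer appears in the set of gold answers. The sketch below computes it under that standard definition. The function name and toy data are hypothetical, and the paper's evaluation script may differ in details such as answer normalization.

```python
def hits_at_1(predictions, gold_answers):
    """Fraction of questions whose top-1 prediction is among the gold answers.

    predictions: one ranked list of answers per question.
    gold_answers: one set of acceptable answers per question.
    """
    if len(predictions) != len(gold_answers):
        raise ValueError("predictions and gold_answers must have the same length")
    if not predictions:
        return 0.0
    # An empty prediction list counts as a miss.
    hits = sum(1 for ranked, gold in zip(predictions, gold_answers)
               if ranked and ranked[0] in gold)
    return hits / len(predictions)


# Hypothetical toy example (not the paper's data): 3 of 4 top answers are correct.
preds = [["Honolulu"], ["Michelle Obama", "Malia"], [], ["Chicago"]]
gold = [{"Honolulu"}, {"Michelle Obama"}, {"Illinois"}, {"Chicago", "Springfield"}]
print(hits_at_1(preds, gold))  # 0.75
```

Under this definition, a Hits@1 of 84% means the top answer was correct for 84 of every 100 test questions.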

Implications and Future Directions

The findings matter for both practical applications and research. By focusing on intent and contextual alignment, Mindful-RAG mitigates the prevalent reasoning failures, improving the accuracy and reliability of LLMs on complex, multi-hop queries. Future research could improve KG structures and optimize query processing to reduce the remaining failures. The integration of user feedback mechanisms and the hybridization of vector-based and KG-based retrieval are further promising avenues for improving KG-based RAG systems.

In summary, the paper offers a rigorous analysis of the limitations of existing KG-based RAG methods and presents Mindful-RAG as a methodological innovation that significantly improves the alignment of LLM responses with the intended questions and context. This advancement underscores the potential of LLMs in complex QA tasks and provides a foundation for future research aimed at further refining these systems.