- The paper demonstrates vulnerabilities in GraphRAG systems by introducing two effective knowledge poisoning attacks through subtle text edits.

- It details the Targeted Knowledge Poisoning Attack (TKPA) and Universal Knowledge Poisoning Attack (UKPA) that exploit network intervention and linguistic perturbations to mislead LLM outputs.

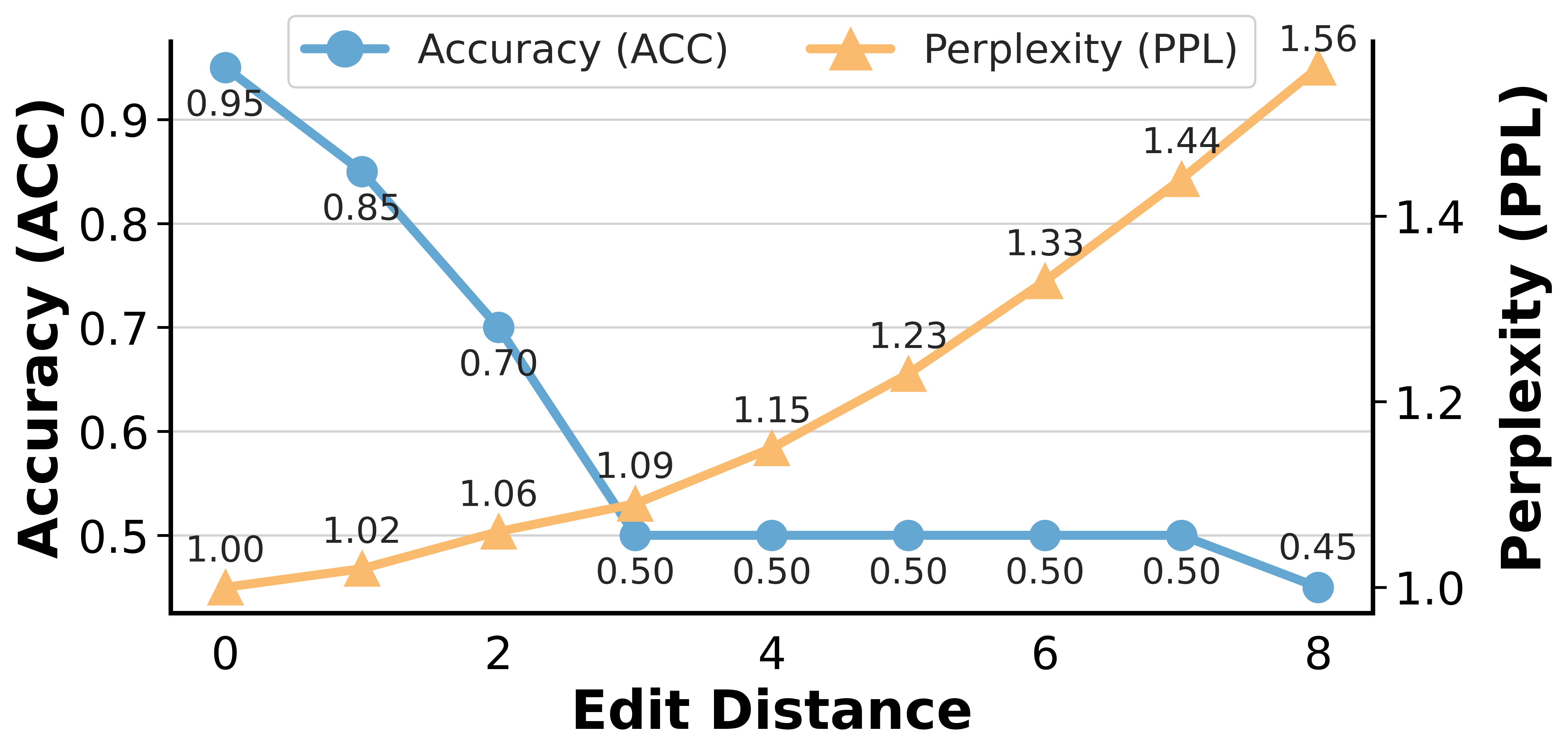

- Experimental evaluations reveal a collapse in QA accuracy from 95% to 50% and expose the limitations of current defense mechanisms against such manipulations.

A Comprehensive Analysis of "A Few Words Can Distort Graphs: Knowledge Poisoning Attacks on Graph-based Retrieval-Augmented Generation of LLMs" (2508.04276)

The paper "A Few Words Can Distort Graphs: Knowledge Poisoning Attacks on Graph-based Retrieval-Augmented Generation of LLMs" examines the vulnerabilities inherent in Graph-based Retrieval-Augmented Generation (GraphRAG) systems. This essay reviews the methodology and implications of its knowledge poisoning attacks, which can disrupt the integrity and reliability of GraphRAG pipelines with minimal textual modifications.

Introduction to Graph-based Retrieval-Augmented Generation

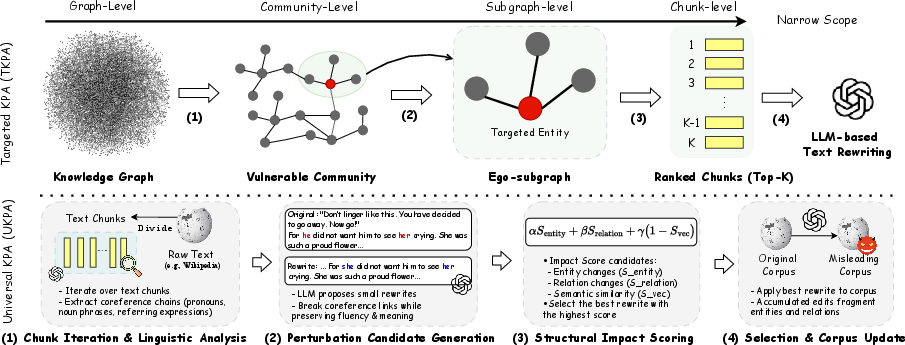

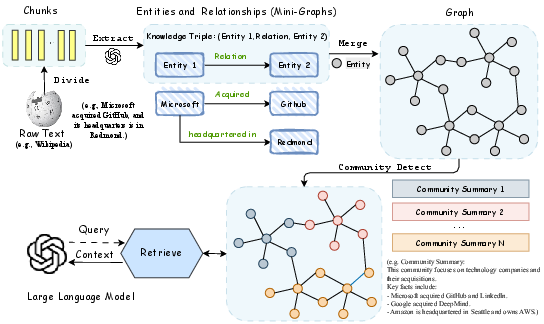

Graph-based Retrieval-Augmented Generation (GraphRAG) enriches LLMs with external knowledge organized as a graph. Unlike traditional RAG systems, which retrieve isolated text chunks, GraphRAG organizes text into knowledge graphs, which strengthens the model's ability to reason over complex queries. However, its reliance on external, potentially tampered corpora opens an avenue for attack, threatening the LLM's subsequent reasoning and decision-making.

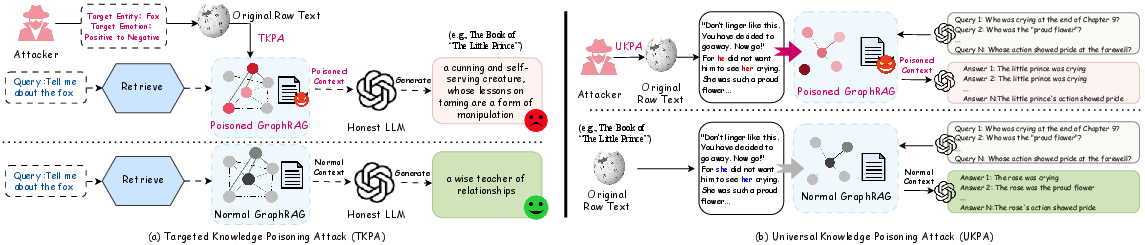

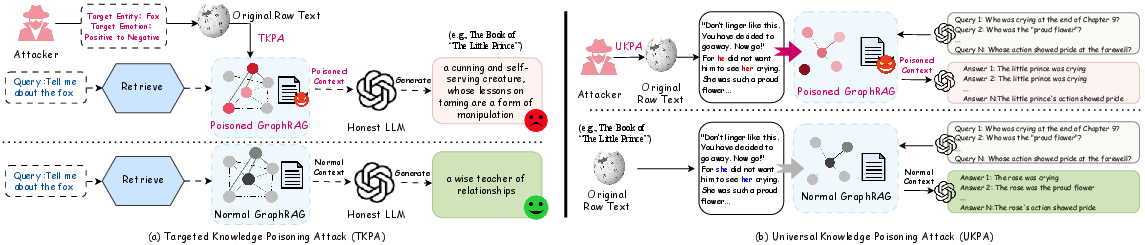

Figure 1: The two proposed knowledge poisoning attacks. (a) Targeted knowledge poisoning: manipulated facts cause GraphRAG to select poisoned context for a query, leading to incorrect LLM output. (b) Universal knowledge poisoning: altered linguistic cues globally distort graph structure, causing GraphRAG to build a biased knowledge graph and mislead LLM reasoning across diverse queries.

Knowledge Poisoning Attacks: TKPA and UKPA

The paper introduces two Knowledge Poisoning Attacks (KPAs) that exploit vulnerabilities within the GraphRAG architecture: the Targeted Knowledge Poisoning Attack (TKPA) and the Universal Knowledge Poisoning Attack (UKPA). Both rely on minimal edits to source texts, exposing a manipulation-only vulnerability.

Targeted Knowledge Poisoning Attack (TKPA)

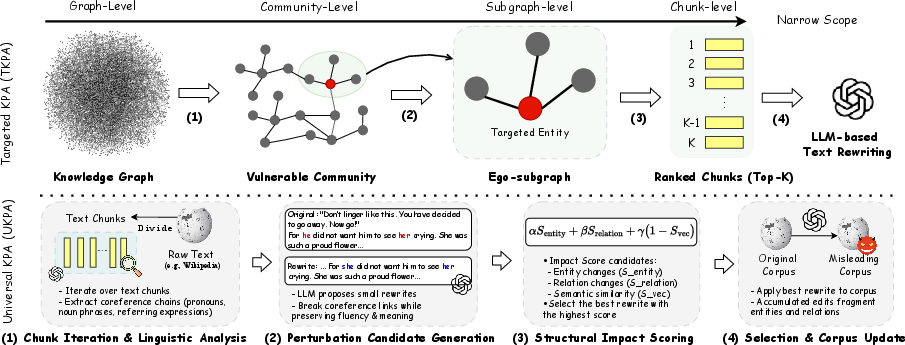

The Targeted Knowledge Poisoning Attack recasts knowledge poisoning as a network intervention problem.

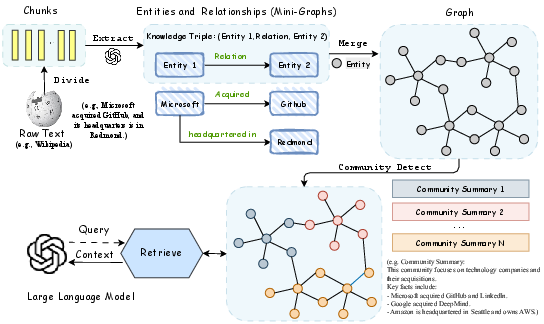

Figure 2: The pipeline of TKPA and UKPA.

- Vulnerable Community Localization (VCL): TKPA begins by identifying the community within the knowledge graph where an extensive impact can be achieved with minimal textual edits. This involves determining a vulnerability score using a combination of the target entity's centrality and community size.

- Ego-subgraph Extraction: By extracting an ego-subgraph around the targeted node, the adversary keeps content modifications highly localized while maximizing the downstream deviation for specific queries.

- Chunk Scoring and Selection: The attack selects the most influential chunks by scoring them on signals such as centrality, semantic similarity, and sentiment polarity.

- LLM-driven Manipulation: An LLM rewrites the selected chunks so that the edited text remains fluent and natural and is hard to detect. The attack achieves an average success rate of 93.1% in manipulating specific QA outcomes.
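
The ego-subgraph extraction and chunk scoring steps above can be sketched on a toy graph. This is a minimal illustration under stated assumptions, not the paper's implementation: the graph, the chunks, the function names (`ego_subgraph`, `rank_chunks`), the `weight` parameter, the use of degree centrality, and token overlap as a stand-in for semantic similarity are all hypothetical. Community localization, sentiment polarity, and the LLM rewriting step are left out.

```python
from collections import deque

# Toy undirected knowledge graph as an adjacency map (hypothetical data).
GRAPH = {
    "Marie Curie": {"Radium", "Polonium", "Pierre Curie", "Nobel Prize"},
    "Radium": {"Marie Curie"},
    "Polonium": {"Marie Curie"},
    "Pierre Curie": {"Marie Curie", "Nobel Prize"},
    "Nobel Prize": {"Marie Curie", "Pierre Curie", "Stockholm"},
    "Stockholm": {"Nobel Prize", "Sweden"},
    "Sweden": {"Stockholm"},
}

# Each chunk maps to (text, entities extracted from it); also hypothetical.
CHUNKS = {
    "c1": ("Marie Curie discovered radium and polonium",
           ["Marie Curie", "Radium", "Polonium"]),
    "c2": ("The Nobel Prize ceremony is held in Stockholm",
           ["Nobel Prize", "Stockholm"]),
    "c3": ("Stockholm is the capital of Sweden", ["Stockholm", "Sweden"]),
}


def ego_subgraph(graph, center, radius=1):
    """Return the set of nodes within `radius` hops of `center` (BFS)."""
    seen = {center}
    frontier = deque([(center, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == radius:
            continue  # do not expand beyond the radius
        for nbr in graph[node]:
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, dist + 1))
    return seen


def degree_centrality(graph, node):
    """Degree of `node` divided by the maximum possible degree."""
    return len(graph[node]) / (len(graph) - 1)


def token_overlap(a, b):
    """Jaccard overlap of lowercase tokens; a crude stand-in for semantic similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def rank_chunks(graph, chunks, query, ego, top_k=1, weight=0.5):
    """Rank chunks that mention ego-subgraph entities.

    Score = weight * (highest centrality among the chunk's ego entities)
          + (1 - weight) * (token overlap with the query).
    The combination rule is an assumption made for this sketch.
    """
    scored = []
    for chunk_id, (text, entities) in chunks.items():
        in_ego = [e for e in entities if e in ego]
        if not in_ego:
            continue  # chunk does not touch the targeted neighborhood
        structural = max(degree_centrality(graph, e) for e in in_ego)
        semantic = token_overlap(text, query)
        scored.append((weight * structural + (1 - weight) * semantic, chunk_id))
    scored.sort(reverse=True)
    return [chunk_id for _, chunk_id in scored[:top_k]]


ego = ego_subgraph(GRAPH, "Marie Curie", radius=1)
selected = rank_chunks(GRAPH, CHUNKS, "Who discovered radium", ego, top_k=1)
print(sorted(ego), selected)
```

Restricting candidates to the ego-subgraph is what keeps the edit budget small in this sketch: only chunks that touch the target's neighborhood are ever considered, and `top_k` caps how many of them would be handed to a rewriting step.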

Universal Knowledge Poisoning Attack (UKPA)

UKPA exploits the linguistic cues, such as pronouns and coreference, that LLMs rely on to construct coherent knowledge graphs from document corpora.

- Linguistic Signal Perturbation: Small edits disrupt the coreference and entity linking processes, compromising the structural integrity of the resulting graphs.

- Fragmentation Induction: These small disturbances spread throughout the graph structure, fragmenting it broadly and thus destabilizing the multi-hop reasoning pathways.
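
The fragmentation effect described above can be illustrated by counting connected components before and after a single mention stops resolving to its entity. This is a toy sketch, not the paper's method: no LLM extractor is involved, the entity names are invented, and the premise that a broken coreference yields a new, unlinked node (`"the firm"` instead of `"Acme"`) is an assumption made for illustration.

```python
def connected_components(edges):
    """Count connected components of an undirected graph given as edge pairs."""
    parent = {}

    def find(x):
        # Union-find lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in edges:
        parent[find(a)] = find(b)
    return len({find(node) for node in list(parent)})


# Relations extracted from the clean text: every mention resolves correctly.
clean_edges = [("Alice", "Acme"), ("Acme", "Berlin"), ("Alice", "Bob")]

# After a small edit, the second mention of Acme no longer resolves to it
# and is extracted as a separate node (assumed behavior for this sketch).
perturbed_edges = [("Alice", "Acme"), ("the firm", "Berlin"), ("Alice", "Bob")]

print(connected_components(clean_edges), connected_components(perturbed_edges))
```

In the perturbed graph there is no longer a path from `Alice` to `Berlin`, which is the kind of broken multi-hop route the bullet above refers to.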

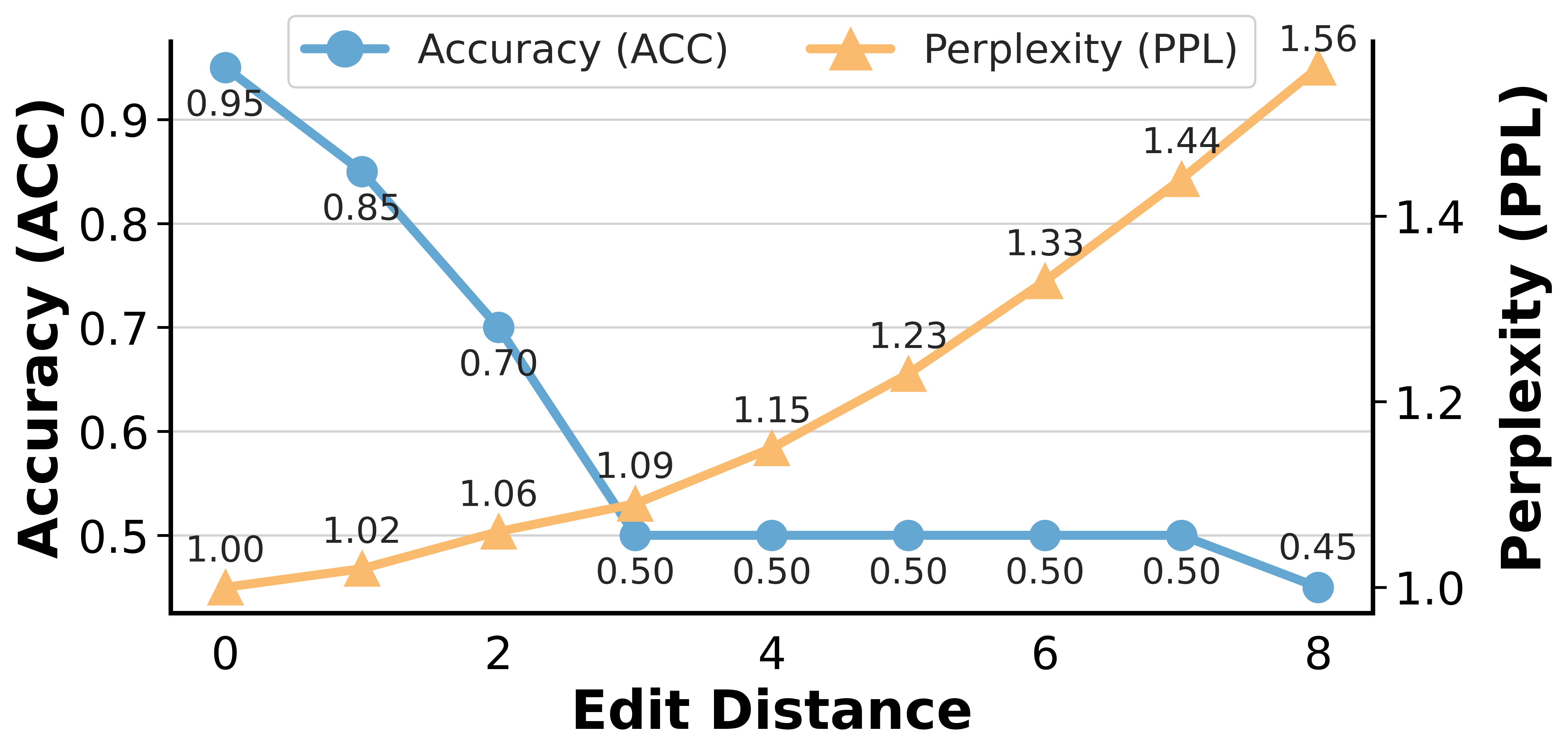

UKPA reduces QA accuracy from 95% to 50% while editing less than 0.05% of the corpus on average. The structural degradation is evident in reduced node and edge retention rates and reduced Jaccard similarity: the linguistic manipulation isolates nodes, severs relations, and collapses long-range reasoning.
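
The structural metrics named above can be computed from the node and edge sets of the clean and poisoned graphs. The sketch below uses the standard set-based definitions; the paper's exact definitions are not given in this summary, so treating retention as the fraction of clean items that survive, and applying Jaccard similarity to both node and edge sets, are assumptions. The function names and toy graphs are hypothetical.

```python
def retention(clean, poisoned):
    """Fraction of items in the clean set that are still present after poisoning."""
    return len(clean & poisoned) / len(clean) if clean else 1.0


def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two sets."""
    return len(a & b) / len(a | b) if a | b else 1.0


def edge(u, v):
    """Undirected edge, so that (u, v) and (v, u) compare equal."""
    return frozenset((u, v))


clean_nodes = {"A", "B", "C", "D"}
clean_edges = {edge("A", "B"), edge("B", "C"), edge("C", "D")}

# Poisoned graph: node D is lost, a spurious node X appears, one relation is cut.
poisoned_nodes = {"A", "B", "C", "X"}
poisoned_edges = {edge("A", "B"), edge("C", "X")}

node_retention = retention(clean_nodes, poisoned_nodes)
edge_retention = retention(clean_edges, poisoned_edges)
node_jaccard = jaccard(clean_nodes, poisoned_nodes)
edge_jaccard = jaccard(clean_edges, poisoned_edges)
print(node_retention, edge_retention, node_jaccard, edge_jaccard)
```

Retention only counts what was lost from the clean graph, whereas Jaccard similarity also penalizes spurious additions such as `X`, so the two can diverge when an attack mainly adds noise rather than removing structure.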

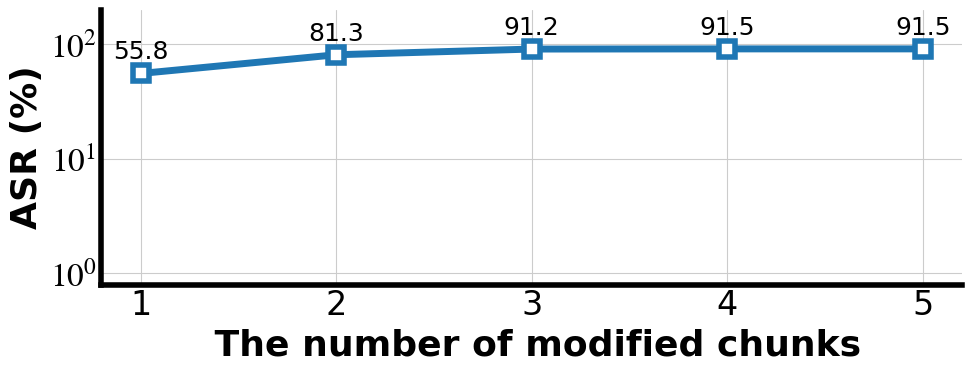

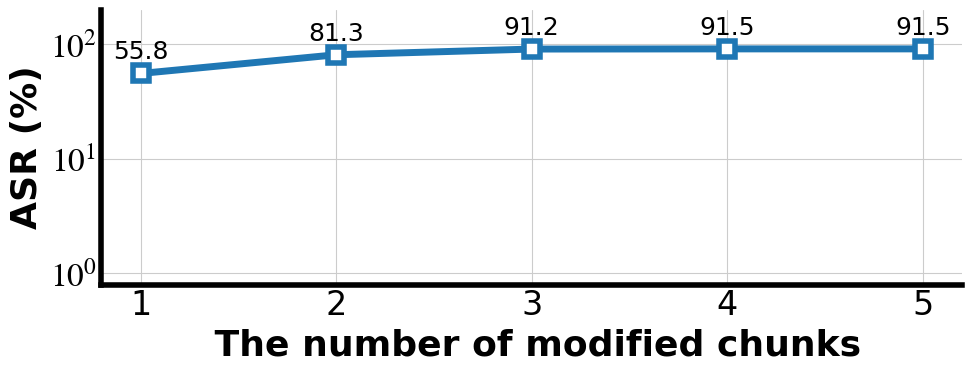

Figure 3: Impact of the number of modified chunks (Top-K) on the ASR of TKPA.

Figure 4: Impact of edit distance on UKPA.

Evaluation and Efficacy

The experimental evaluation confirms the effectiveness and stealth of both attacks. TKPA achieves an average attack success rate (ASR) above 91%, with substantial semantic deviation from the correct QA responses, while UKPA collapses QA accuracy from 95% to 50% by editing less than 0.05% of the corpus on average.
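
For readers unfamiliar with the two headline metrics, the sketch below shows one common way to compute them. The definitions are assumptions, since this summary does not state how the paper scores answers: ASR is taken as the share of targeted queries whose post-attack answer matches the attacker's intended answer, and accuracy as the share of answers matching the ground truth, both by case-insensitive exact match. All names and sample strings are hypothetical.

```python
def _normalize(text):
    """Lowercase and trim so that trivial formatting differences do not count."""
    return text.strip().lower()


def match_rate(answers, references):
    """Share of answers that exactly match their reference after normalization."""
    if len(answers) != len(references):
        raise ValueError("answers and references must have the same length")
    hits = sum(_normalize(a) == _normalize(r) for a, r in zip(answers, references))
    return hits / len(references)


def attack_success_rate(poisoned_answers, attacker_targets):
    """ASR under the assumed definition: answers that equal the attacker's target."""
    return match_rate(poisoned_answers, attacker_targets)


def qa_accuracy(answers, ground_truth):
    """Accuracy under the assumed definition: answers that equal the ground truth."""
    return match_rate(answers, ground_truth)


asr = attack_success_rate(["Paris", "Rome ", "berlin"], ["paris", "Milan", "Berlin"])
acc = qa_accuracy(["Paris", "Rome"], ["Paris", "Madrid"])
print(asr, acc)
```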

Defense Mechanism Evaluation

Evaluations against state-of-the-art defenses, including Perplexity-based Filter (PF), LLM-based Contamination Detector (LLMDet), and Semantic Closeness Checking (SCC), reveal their inadequacies in detecting both types of attacks. The sophisticated nature of TKPA and UKPA, with their minimal text modifications and deeply structural implications, renders these defenses ineffective.
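
One way to see why a perplexity-based filter struggles here is to look at how such a filter typically decides. The sketch below is a generic illustration, not the PF configuration evaluated in the paper: perplexity is computed as the exponential of the negative mean token log-probability, and a chunk is flagged when it exceeds a threshold. The log-probability values and the threshold are made-up numbers chosen for the example; in practice they would come from a scoring language model.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-mean natural-log probability per token)."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def is_flagged(token_logprobs, threshold):
    """Flag a chunk as suspicious when its perplexity exceeds the threshold."""
    return perplexity(token_logprobs) > threshold


# Hypothetical per-token log-probabilities for three versions of a chunk.
original = [-1.0, -2.0, -1.5, -1.5]
fluent_edit = [-1.0, -2.0, -1.6, -1.5]   # one word swapped, still natural
garbled_edit = [-1.0, -6.0, -7.0, -1.5]  # clumsy, unnatural insertion

THRESHOLD = 10.0  # assumed cutoff for this example
for name, logprobs in [("original", original),
                       ("fluent_edit", fluent_edit),
                       ("garbled_edit", garbled_edit)]:
    print(name, round(perplexity(logprobs), 2), is_flagged(logprobs, THRESHOLD))
```

Under these assumed numbers only the clumsy edit crosses the threshold. A fluent, LLM-written edit barely moves the score, which is consistent with the summary's point that minimal, natural-sounding modifications slip past this kind of defense.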

Figure 5: Proposed GraphRAG pipeline illustrating chunk to knowledge graph conversion used in TKPA and UKPA.

Conclusion

This paper identifies a critical vulnerability within GraphRAG systems, revealing the susceptibility of automatically constructed knowledge graphs to minute text modifications. Through the Targeted Knowledge Poisoning Attack (TKPA) and the Universal Knowledge Poisoning Attack (UKPA), the research demonstrates that manipulative interventions can have both precise and widespread effects while evading current defenses. The findings urge a reevaluation of graph construction's role in the security framework of GraphRAG systems and call for further research into robust defense strategies against manipulation-only threats, particularly with the inclusion of multimodal data sources.