- The paper introduces the AntLLM framework, which combines ant colony optimization (ACO) with LLM-based refinement to optimize AI agent placement and migration.

- It models edge servers, AI agents, and tasks, and reports a 10.3% reduction in latency and a 38.6% reduction in resource consumption compared to baseline methods.

- This approach is practical for dynamic edge environments, effectively adapting to user mobility and heterogeneous resource constraints.

Adaptive AI Agent Placement and Migration in Edge Intelligence Systems

This paper addresses the challenge of deploying and optimizing AI agents in dynamic edge environments by presenting a novel framework for adaptive agent placement and migration.

Introduction

The growing popularity of large language models (LLMs), such as ChatGPT and Claude, calls for deployment strategies that keep latency low in data-intensive scenarios. Cloud-based deployments add latency because large volumes of data must be transmitted to remote data centers. Edge computing reduces this latency by processing tasks closer to the data source. However, edge servers have heterogeneous and limited resources, so AI agents must be placed and migrated efficiently.

System Model and Cost Analysis

System Model

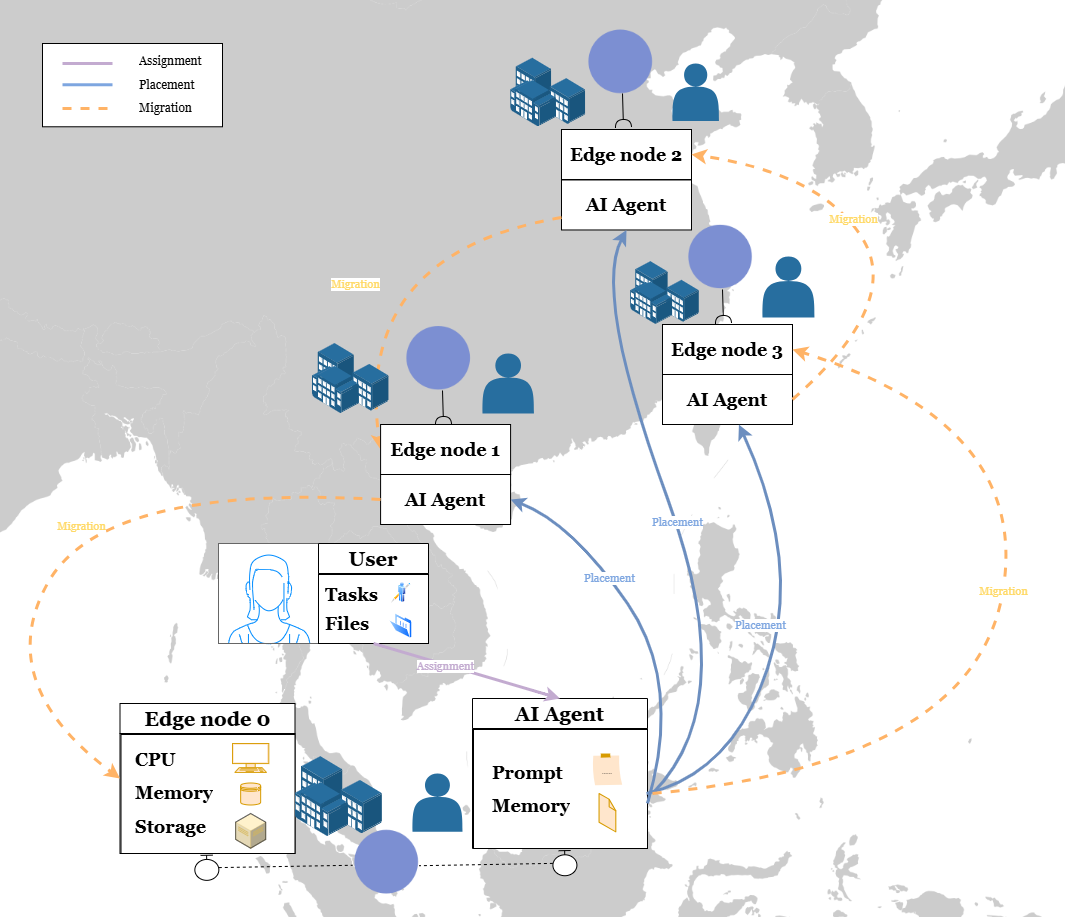

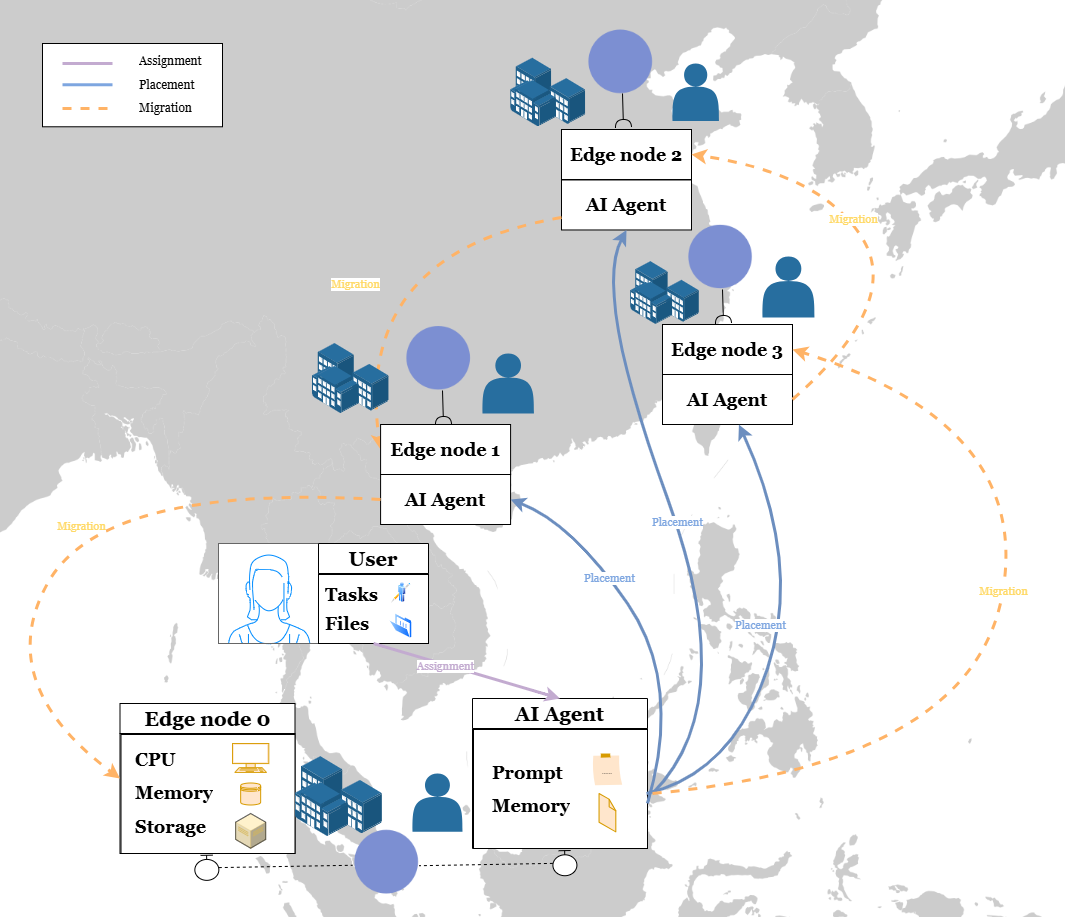

An edge AI system is modeled as a network of edge servers hosting AI agents. Each AI agent is characterized by its memory, computing, storage, and communication resource requirements. Each edge server is characterized by its capacity along the same four resource dimensions. A task is defined as a set of required AI agents together with the prompts and data files they need.
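The entities described above can be sketched as plain data structures. This is a minimal illustration, not the paper's implementation: the class names, field names, and the `can_host` capacity check are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Resources:
    """Amounts along the four resource dimensions named in the text."""
    memory: float
    compute: float
    storage: float
    bandwidth: float  # hypothetical stand-in for "communication" resources

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(
            self.memory + other.memory,
            self.compute + other.compute,
            self.storage + other.storage,
            self.bandwidth + other.bandwidth,
        )

    def fits_within(self, capacity: "Resources") -> bool:
        # A demand fits only if it fits in every dimension.
        return (
            self.memory <= capacity.memory
            and self.compute <= capacity.compute
            and self.storage <= capacity.storage
            and self.bandwidth <= capacity.bandwidth
        )


@dataclass(frozen=True)
class Agent:
    name: str
    demand: Resources


@dataclass
class EdgeServer:
    name: str
    capacity: Resources
    hosted: list = field(default_factory=list)  # list of Agent

    def used(self) -> Resources:
        total = Resources(0.0, 0.0, 0.0, 0.0)
        for agent in self.hosted:
            total = total + agent.demand
        return total

    def can_host(self, agent: Agent) -> bool:
        return (self.used() + agent.demand).fits_within(self.capacity)


@dataclass(frozen=True)
class Task:
    required_agents: tuple  # names of the agents the task needs
    prompt: str
    data_files: tuple = ()
```

A placement is then feasible only if every server passes `can_host` for each agent assigned to it.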

Latency and Cost

Latency sources include data transfer, agent initiation, migration, and task processing. The cost combines computing, storage, and communication resource consumption. The overall problem is formulated as jointly optimizing Edge Agent Deployment (EAD) and Edge Agent Migration (EAM) to minimize cost while maintaining quality of service (QoS) under resource constraints.
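One way to write this down is sketched here. The notation is assumed for illustration and is not taken from the paper: $x_{a,s} \in \{0,1\}$ indicates that agent $a$ runs on server $s$, $r_{a,k}$ is the demand of agent $a$ for resource $k$, $R_{s,k}$ is the capacity of server $s$ for resource $k$, $T_{\max}$ is a QoS latency bound, and the additive form and the weights $w_c, w_s, w_m$ are assumptions about how the listed terms combine.

```latex
% Illustrative formulation under assumed notation
\begin{align}
T_{\text{total}}(x) &= T_{\text{trans}}(x) + T_{\text{init}}(x) + T_{\text{mig}}(x) + T_{\text{proc}}(x) \\
C(x) &= w_c\, C_{\text{comp}}(x) + w_s\, C_{\text{stor}}(x) + w_m\, C_{\text{comm}}(x) \\
\min_{x}\; & C(x) \\
\text{s.t.}\; & T_{\text{total}}(x) \le T_{\max}, \\
& \sum_{a} r_{a,k}\, x_{a,s} \le R_{s,k} \quad \forall s, k, \\
& \sum_{s} x_{a,s} = 1 \quad \forall a .
\end{align}
```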

Algorithmic Solutions

AntLLM Algorithm

The AntLLM algorithm consists of two parts: AntLLM Placement (ALP) and AntLLM Migration (ALM). Both use ant colony optimization (ACO) to generate initial solutions and then refine them with LLM-based improvement steps.
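The two-stage structure can be sketched as a refinement loop around an initial solution. This is a hedged illustration: `propose` is a hypothetical callable standing in for the LLM step (no real LLM API is used), and the rule of accepting a suggestion only when it is feasible and strictly cheaper is an assumption, not a detail confirmed by the source.

```python
from typing import Callable, Dict, Tuple

Placement = Dict[str, str]  # agent name -> server name


def refine_with_llm(
    initial: Placement,
    cost_fn: Callable[[Placement], float],
    is_feasible: Callable[[Placement], bool],
    propose: Callable[[Placement, float], Placement],
    rounds: int = 3,
) -> Tuple[Placement, float]:
    """Improve an initial (e.g. ACO-produced) placement with proposed edits."""
    best = dict(initial)
    best_cost = cost_fn(best)
    for _ in range(rounds):
        candidate = propose(dict(best), best_cost)  # hypothetical LLM call
        if not is_feasible(candidate):
            continue  # discard suggestions that violate constraints
        candidate_cost = cost_fn(candidate)
        if candidate_cost < best_cost:
            best, best_cost = dict(candidate), candidate_cost
    return best, best_cost
```

Guarding the loop with `is_feasible` and `cost_fn` means a poor suggestion can never make the result worse than the initial solution.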

AntLLM Placement (ALP)

The ALP algorithm models the placement task as a path selection problem. Ants choose paths based on a combination of pheromone trails and heuristic information related to resource availability and task requirements. The best deployment found by the ants is then refined by an LLM-based post-optimization step.
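The path selection step can be illustrated with the standard ACO rule, in which the probability of choosing server $s$ is proportional to $\tau_s^{\alpha}\,\eta_s^{\beta}$. The sketch below assumes that rule; the function names, the parameters `alpha`, `beta`, `rho`, and `q`, and the choice of heuristic values are illustrative and not taken from the paper.

```python
import random


def selection_probabilities(pheromone, heuristic, alpha=1.0, beta=2.0):
    """Return {server: probability} for one agent (standard ACO rule)."""
    weights = {
        s: (pheromone[s] ** alpha) * (heuristic[s] ** beta) for s in pheromone
    }
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no feasible server for this agent")
    return {s: w / total for s, w in weights.items()}


def choose_server(pheromone, heuristic, rng=random, alpha=1.0, beta=2.0):
    """Sample one server according to the selection probabilities."""
    probs = selection_probabilities(pheromone, heuristic, alpha, beta)
    servers = list(probs)
    return rng.choices(servers, weights=[probs[s] for s in servers], k=1)[0]


def update_pheromone(pheromone, chosen, solution_cost, rho=0.1, q=1.0):
    """Evaporate every trail, then reinforce the chosen one inversely to cost."""
    for s in pheromone:
        pheromone[s] *= 1.0 - rho
    pheromone[chosen] += q / solution_cost
```

Setting a server's heuristic value to 0 (for example, when it lacks capacity for the agent) gives it zero selection probability, which is one simple way to encode resource constraints.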

AntLLM Migration (ALM)

ALM addresses the dynamic reallocation of AI agents in response to changes such as user mobility or resource bottlenecks. Transition probabilities for agent migration are determined by ACO, and the resulting solutions are further optimized using LLM feedback.
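A migration decision can be sketched with the same style of transition rule, where the heuristic penalizes the one-time delay of moving an agent. Everything here is an assumption for illustration: the heuristic $\eta_s = 1 / (\text{latency}_s + \text{migration delay}_s)$, the function name, and the parameter names are not taken from the paper.

```python
def migration_probabilities(
    current, latency_after, migration_delay, pheromone, alpha=1.0, beta=1.0
):
    """Return {server: probability} of hosting the agent next.

    latency_after[s]   : expected task latency if the agent runs on s (> 0)
    migration_delay[s] : one-time delay of moving the agent to s
    Staying on `current` incurs no migration delay.
    """
    weights = {}
    for server, latency in latency_after.items():
        move_delay = 0.0 if server == current else migration_delay[server]
        eta = 1.0 / (latency + move_delay)  # assumed heuristic: lower delay is better
        weights[server] = (pheromone[server] ** alpha) * (eta ** beta)
    total = sum(weights.values())
    return {server: w / total for server, w in weights.items()}
```

With this heuristic, an agent tends to stay put unless the latency saved on the target server outweighs the delay of moving there.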

Implementation

The system architecture and algorithms are implemented using AgentScope, and the implementation automates deployment and migration tasks in a distributed edge setting. The system monitors resource states in real time and uses SSH connections for remote management and task coordination.

Figure 1: An overview of our edge intelligence system.

Experimental Results

Extensive experiments were conducted to evaluate the proposed system's latency reduction and resource efficiency. The metrics were measured under varying numbers of edge servers and tasks. Compared to baseline approaches such as Greedy and Random, the AntLLM algorithm demonstrated a 10.3% reduction in latency and a 38.6% decrease in resource consumption.

Figure 2: Delay performance with different numbers of nodes.

Figure 3: Cost performance with different numbers of nodes.

Conclusion

This research introduces a practical approach to optimizing AI agent deployment and migration in edge computing environments. The AntLLM algorithm integrates ACO with LLM-based refinement to adapt to dynamic resource availability and user mobility, reducing latency by 10.3% and resource consumption by 38.6% relative to baseline methods. Future work will explore real-time policy updates that improve adaptability to ongoing environmental changes.