- The paper introduces a latent-space prompt engineering framework that automates prompt generation and optimization for improved LLM performance.

- It explores a continuous latent space by interpolating between prompt embeddings, raising sentiment classification accuracy by nearly 3 percentage points (from 75.36% to 78.14%).

- The method is model-agnostic and leverages a feedback loop to iteratively refine prompts without relying on gradient-based techniques.

LatentPrompt: Optimizing Prompts in Latent Space

Introduction

The paper "LatentPrompt: Optimizing Prompts in Latent Space" introduces a new approach to prompt engineering that automatically optimizes prompts for large language models (LLMs) by searching a latent semantic space. Traditional manual prompt design relies heavily on trial and error and heuristics. LatentPrompt instead automates prompt generation, evaluation, and refinement by exploring semantic embeddings. Its central premise is that representing prompts as vectors in a latent space makes it possible to find semantically related prompts that yield better task performance.

Methodology

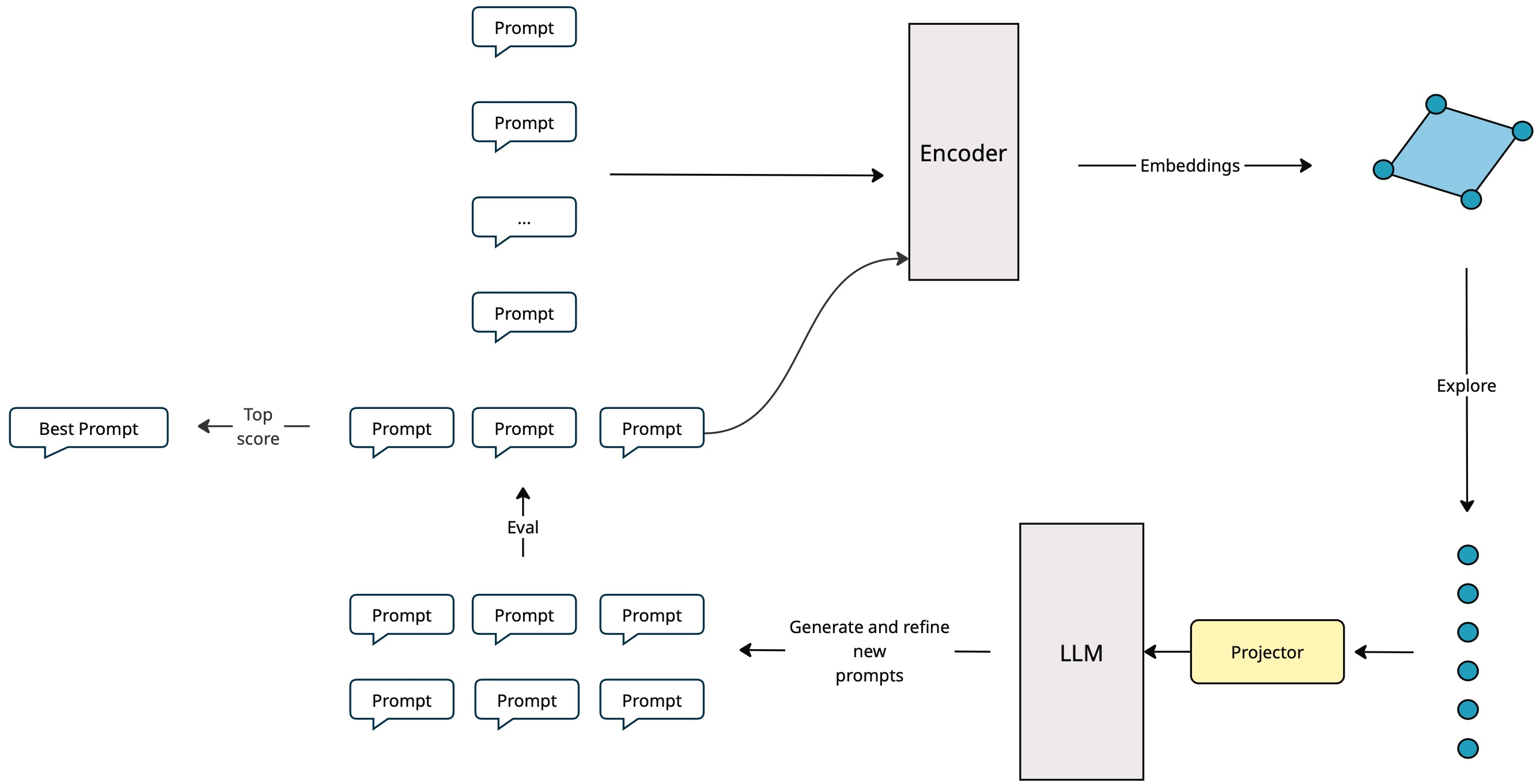

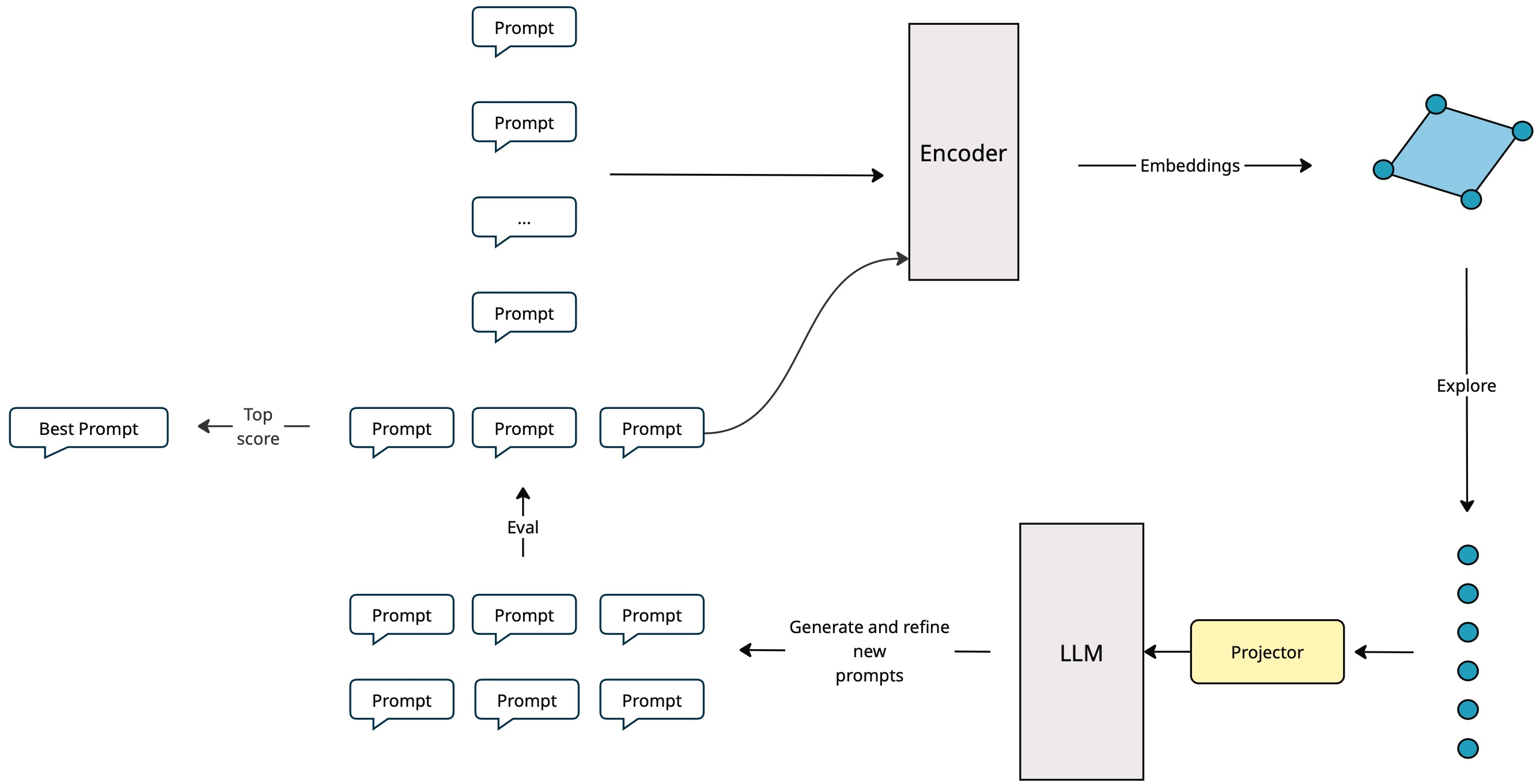

The core innovation of LatentPrompt lies in its method of embedding prompts into a high-dimensional semantic space, where exploration can uncover effective prompt variations. The framework is composed of several key components:

- Prompt Encoder: Converts prompts into fixed-dimensional embeddings.

- Latent Space Explorer: Generates new candidate embeddings by interpolating and extrapolating between the embeddings of seed prompts in the semantic space.

- Cross-Modal Projection: Projects embeddings into the token space of an LLM.

- Prompt Decoder: Uses the projected embeddings to produce new text prompts.

- Evaluator: Assesses the effectiveness of prompts based on task-specific performance metrics.
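The Latent Space Explorer's blending step can be pictured as linear mixing of two seed embeddings. The sketch below is a minimal illustration, assuming embeddings are plain lists of floats and that "blending" means linear interpolation between pairs of seeds. The names `interpolate` and `explore` and the mixing weights are hypothetical, and the paper's exact exploration strategy may differ.

```python
from typing import List, Sequence

Vector = List[float]


def interpolate(a: Sequence[float], b: Sequence[float], alpha: float) -> Vector:
    """Return (1 - alpha) * a + alpha * b, element by element.

    0 <= alpha <= 1 blends the two embeddings (interpolation);
    alpha < 0 or alpha > 1 moves past one of them (extrapolation).
    """
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return [(1.0 - alpha) * x + alpha * y for x, y in zip(a, b)]


def explore(seeds: Sequence[Sequence[float]], alphas: Sequence[float]) -> List[Vector]:
    """Blend every unordered pair of seed embeddings at each mixing weight."""
    candidates: List[Vector] = []
    for i in range(len(seeds)):
        for j in range(i + 1, len(seeds)):
            for alpha in alphas:
                candidates.append(interpolate(seeds[i], seeds[j], alpha))
    return candidates


# Toy 2-D "embeddings" for illustration only; real encoders use far more dimensions.
seeds = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
candidates = explore(seeds, alphas=[-0.25, 0.5, 1.25])
print(len(candidates))  # 9: three pairs times three mixing weights
print(candidates[0])    # [1.25, -0.25]: extrapolated beyond the first seed
```

Negative weights and weights above 1 are what turn plain interpolation into extrapolation, letting candidates leave the region spanned by the seeds.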

The components are connected in a feedback loop: evaluation results guide the next round of exploration, so prompts are refined iteratively.

Figure 1: Overview of our framework. Seed prompts are encoded into embeddings, explored in latent space, and projected into an LLM token space for prompt generation.
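The feedback loop described above can be sketched as an encode, explore, decode, evaluate cycle. This is a minimal illustration, not the paper's implementation. `optimize` and the `toy_*` stand-ins are hypothetical, `decode` is assumed to cover both the cross-modal projection and the LLM-based decoder, and keeping the top-scoring prompts as the next round's seeds is an assumed selection rule.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]


def optimize(
    seeds: Sequence[str],
    encode: Callable[[str], Vector],
    explore: Callable[[List[Vector]], List[Vector]],
    decode: Callable[[Vector], str],
    evaluate: Callable[[str], float],
    rounds: int = 1,
    keep: int = 3,
) -> Tuple[str, float]:
    """Hypothetical encode -> explore -> decode -> evaluate feedback loop.

    `decode` is assumed to wrap both the cross-modal projection and the
    LLM-based prompt decoder; `evaluate` returns a task score (higher is better).
    """
    # Score the seeds first, so the result can never be worse than the best seed.
    scores: Dict[str, float] = {p: evaluate(p) for p in seeds}
    for _ in range(rounds):
        # The current top-`keep` prompts act as seeds for this round.
        parents = sorted(scores, key=scores.__getitem__, reverse=True)[:keep]
        embeddings = [encode(p) for p in parents]
        for vec in explore(embeddings):
            prompt = decode(vec)
            if prompt not in scores:  # avoid re-scoring known prompts
                scores[prompt] = evaluate(prompt)
    best = max(scores, key=scores.__getitem__)
    return best, scores[best]


# Toy stand-ins (hypothetical), only to make the loop runnable:
# the 1-D "embedding" is the word count, and the score peaks at four words.
def toy_encode(prompt: str) -> Vector:
    return [float(len(prompt.split()))]


def toy_explore(embeddings: List[Vector]) -> List[Vector]:
    return [[e[0] + 1.0] for e in embeddings]  # step each embedding outward


def toy_decode(vec: Vector) -> str:
    return " ".join(["classify"] * max(1, round(vec[0])))


def toy_evaluate(prompt: str) -> float:
    return float(-abs(len(prompt.split()) - 4))


best, score = optimize(
    ["classify", "classify classify"],
    toy_encode, toy_explore, toy_decode, toy_evaluate, rounds=3,
)
print(best, score)  # classify classify classify classify 0.0
```

Passing the components in as callables keeps the loop independent of any particular encoder or LLM, which mirrors the model-agnostic, black-box framing of the method.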

Experimental Evaluation

LatentPrompt was evaluated on the Financial PhraseBank sentiment classification benchmark. The initial seed prompts were derived using state-of-the-art practices, such as those enabled by GPT-4o, so the baseline was already strong. A single cycle of latent space exploration improved classification accuracy by nearly 3 percentage points, suggesting that the framework can outperform manually crafted prompts even from a competitive starting point.
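On this benchmark, the Evaluator's task-specific metric is classification accuracy. The sketch below is minimal and rests on two assumptions: the metric is plain accuracy over labeled (sentence, label) pairs, with Financial PhraseBank labels being positive, negative, or neutral, and the model is reached through a `classify` callable that returns the LLM's answer as text. Both `accuracy` and `classify` are hypothetical names.

```python
from typing import Callable, Sequence, Tuple


def accuracy(
    prompt: str,
    examples: Sequence[Tuple[str, str]],
    classify: Callable[[str, str], str],
) -> float:
    """Fraction of (sentence, gold_label) pairs that `classify` labels correctly.

    `classify(prompt, sentence)` is a hypothetical black-box call that sends the
    prompt plus the sentence to an LLM and returns its answer as free text.
    """
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = 0
    for sentence, gold in examples:
        # Normalize the model's answer; anything outside the label set counts as wrong.
        predicted = classify(prompt, sentence).strip().lower()
        if predicted == gold:
            correct += 1
    return correct / len(examples)
```

Because `classify` is just a callable, the evaluator needs only model outputs, not gradients or weights.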

The experimental results highlight LatentPrompt's ability to generate prompts that improve performance:

- Baseline Accuracy (Best Seed Prompt): 75.36%

- Optimized Prompt Accuracy: 78.14%

This improvement suggests that interpolation in the semantic space is effective: it reaches prompt variations that direct token-level mutations would not easily find.
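The headline gain can be checked directly from the two accuracies listed above. The short computation below separates the absolute gain in percentage points from the relative gain over the baseline, which is why "nearly 3 percentage points" and a gain of roughly 3.7% relative are both accurate descriptions.

```python
baseline = 75.36   # best seed prompt accuracy (%)
optimized = 78.14  # optimized prompt accuracy (%)

absolute_gain = optimized - baseline            # percentage points
relative_gain = 100 * absolute_gain / baseline  # percent of the baseline

print(f"{absolute_gain:.2f} pp, {relative_gain:.2f}% relative")  # 2.78 pp, 3.69% relative
```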

Discussion

LatentPrompt bridges continuous and discrete optimization approaches, offering a practical framework for prompt engineering. It does not rely on gradient-based methods, which require white-box access to model internals. The method is model-agnostic and needs only black-box access to LLMs, which makes it applicable to closed-source models.

Because its outputs are human-readable prompts, the approach keeps results interpretable and easier to trust, addressing a significant limitation of continuous optimization techniques that yield unintelligible prompts. Combining the framework with evolutionary algorithms or discrete genetic search strategies could further improve efficiency and exploration depth.

Conclusion

The LatentPrompt framework is a significant step in automated prompt optimization, improving LLM performance with minimal human intervention. By recasting prompt crafting as a latent space exploration problem, it allows prompts to be adapted and tuned to specific task requirements. As LLMs are integrated into increasingly complex applications, such optimization frameworks are likely to become important for achieving strong performance across domains and tasks. Future research directions include more sophisticated exploration strategies, multi-objective optimization, and application to NLP tasks beyond sentiment classification.