Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens (2508.01191v2)

Abstract: Chain-of-Thought (CoT) prompting has been shown to improve LLM performance on various tasks. With this approach, LLMs appear to produce human-like reasoning steps before providing answers (a.k.a., CoT reasoning), which often leads to the perception that they engage in deliberate inferential processes. However, some initial findings suggest that CoT reasoning may be more superficial than it appears, motivating us to explore further. In this paper, we study CoT reasoning via a data distribution lens and investigate if CoT reasoning reflects a structured inductive bias learned from in-distribution data, allowing the model to conditionally generate reasoning paths that approximate those seen during training. Thus, its effectiveness is fundamentally bounded by the degree of distribution discrepancy between the training data and the test queries. With this lens, we dissect CoT reasoning via three dimensions: task, length, and format. To investigate each dimension, we design DataAlchemy, an isolated and controlled environment to train LLMs from scratch and systematically probe them under various distribution conditions. Our results reveal that CoT reasoning is a brittle mirage that vanishes when it is pushed beyond training distributions. This work offers a deeper understanding of why and when CoT reasoning fails, emphasizing the ongoing challenge of achieving genuine and generalizable reasoning.

Summary

- The paper demonstrates that CoT reasoning in LLMs is fundamentally structured pattern matching limited by training data distribution rather than true logical inference.

- It introduces the DataAlchemy framework, using controlled synthetic environments to precisely probe task, length, and format generalization.

- Experiments reveal that even moderate distribution shifts cause drastic performance drops, and that fine-tuning patches only the specific shifted distribution rather than removing the underlying brittleness.

Data Distribution Limits of Chain-of-Thought Reasoning in LLMs

Introduction

This paper presents a systematic investigation into the true nature of Chain-of-Thought (CoT) reasoning in LLMs, challenging the prevailing assumption that CoT reflects genuine, generalizable logical inference. The authors propose a data distribution-centric perspective, hypothesizing that CoT reasoning is fundamentally a form of structured pattern matching, with its efficacy strictly bounded by the statistical properties of the training data. To empirically validate this hypothesis, the authors introduce DataAlchemy, a controlled synthetic environment for training and probing LLMs from scratch, enabling precise manipulation of distributional shifts along task, length, and format axes.

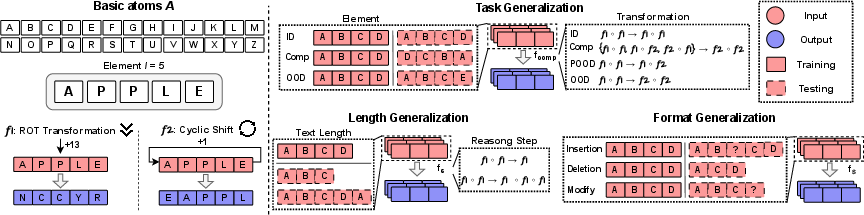

Figure 1: Framework of DataAlchemy. It creates an isolated and controlled environment to train LLMs from scratch and probe the task, length, and format generalization.

The Data Distribution Lens on CoT Reasoning

The central thesis is that CoT reasoning does not emerge from an intrinsic capacity for logical inference, but rather from the model's ability to interpolate within, and extrapolate only slightly beyond, the manifold of its training distribution. The authors formalize this with a generalization bound: the expected test risk is upper-bounded by the sum of three terms, namely the training risk, a term proportional to the distributional discrepancy (e.g., KL divergence or Wasserstein distance) between the train and test distributions, and a statistical error term. This theoretical framing predicts that CoT performance will degrade as the test distribution diverges from the training distribution, regardless of the apparent logical structure of the task.
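The bound described above can be written schematically as follows. This is a sketch of its shape only: the symbols are assumptions chosen for illustration ($R_{\text{test}}$ and $\hat{R}_{\text{train}}$ for the test and empirical training risk of a model $f_\theta$, $\Delta$ for the discrepancy measure, $\Lambda$ for the proportionality constant, and $\varepsilon_{\text{stat}}$ for the statistical error term depending on the sample size $n$ and a confidence level $\delta$), and the paper's exact statement and constants may differ.

$$
R_{\text{test}}(f_\theta) \;\le\; \hat{R}_{\text{train}}(f_\theta) \;+\; \Lambda \,\Delta\!\left(\mathcal{D}_{\text{train}}, \mathcal{D}_{\text{test}}\right) \;+\; \varepsilon_{\text{stat}}(n, \delta)
$$

Read this way, the only term that changes when the test queries move away from the training data is the middle one, which is why the framing predicts degradation that tracks $\Delta$ rather than task difficulty.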

DataAlchemy: Controlled Probing of Generalization

DataAlchemy is a synthetic dataset generator and training environment that enables precise control over the data distribution. The core constructs are:

- Atoms and Elements: Sequences of alphabetic tokens, parameterized by length.

- Transformations: Bijective operations (e.g., ROT-n, cyclic position shift) applied to elements, supporting compositional chains to simulate multi-step reasoning.

- Generalization Axes: Systematic manipulation of (1) task (novel transformations or element compositions), (2) length (sequence or reasoning chain length), and (3) format (prompt surface form).

This design allows for rigorous, isolated evaluation of LLM generalization, eliminating confounds from large-scale pretraining.
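The constructs above can be made concrete with a minimal Python sketch. It assumes an atom vocabulary of the 26 uppercase letters and a rightward direction for the cyclic position shift; the function names (`rot`, `cyclic_shift`, `chain_with_steps`) are hypothetical and are not taken from the DataAlchemy code.

```python
import string

ALPHABET = string.ascii_uppercase  # assumed atom vocabulary: A-Z


def rot(element: str, n: int) -> str:
    """ROT-n: replace every atom by the atom n places later in the alphabet."""
    size = len(ALPHABET)
    return "".join(ALPHABET[(ALPHABET.index(a) + n) % size] for a in element)


def cyclic_shift(element: str, k: int) -> str:
    """Cyclic position shift: rotate atom positions k places to the right (assumed direction)."""
    if not element:
        return element
    k %= len(element)
    return element[-k:] + element[:-k]


def chain_with_steps(element: str, transforms) -> list:
    """Apply transformations in order and keep every intermediate result,
    mimicking a multi-step reasoning trace."""
    steps = [element]
    for transform in transforms:
        steps.append(transform(steps[-1]))
    return steps


if __name__ == "__main__":
    trace = chain_with_steps(
        "APPLE",
        [lambda e: rot(e, 13), lambda e: cyclic_shift(e, 1)],
    )
    print(" -> ".join(trace))
```

Because both operations are bijective, every chain has a unique correct trace, which is what makes exact-match scoring of intermediate steps and final answers meaningful in this setting.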

Task Generalization: Transformation and Element Novelty

Transformation Generalization

The authors evaluate LLMs on their ability to generalize to unseen transformation compositions. Four regimes are considered: in-distribution (ID), novel compositions (CMP), partial OOD (POOD), and fully OOD transformations.
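One way to read the four regimes is as a rule over transformation chains, sketched below. The definitions encoded here are an interpretation of the regime names (ID: the exact chain was seen in training; CMP: every transformation was seen but not in this composition; POOD: some but not all transformations are unseen; OOD: every transformation is unseen), and `classify_regime` is a hypothetical helper, not part of the paper's code.

```python
def classify_regime(test_chain, train_chains) -> str:
    """Label a test transformation chain relative to the training chains.

    Chains are sequences of transformation names, e.g. ("f1", "f2").
    """
    train_set = {tuple(chain) for chain in train_chains}
    seen_transforms = {t for chain in train_set for t in chain}
    test_chain = tuple(test_chain)

    if test_chain in train_set:
        return "ID"      # exact composition seen during training
    unseen = [t for t in test_chain if t not in seen_transforms]
    if not unseen:
        return "CMP"     # known transformations, novel composition
    if len(unseen) < len(test_chain):
        return "POOD"    # mix of seen and unseen transformations
    return "OOD"         # nothing in the chain was seen


if __name__ == "__main__":
    train = [("f1", "f1"), ("f1", "f2"), ("f2", "f1")]
    for chain in [("f1", "f2"), ("f2", "f2"), ("f1", "f3"), ("f3", "f3")]:
        print(chain, classify_regime(chain, train))
```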

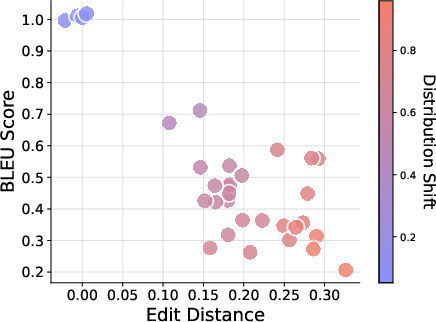

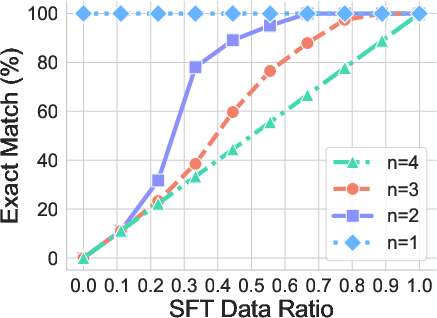

Figure 2: Performance of CoT reasoning on transformation generalization. Efficacy of CoT reasoning declines as the degree of distributional discrepancy increases.

Results show that CoT reasoning is highly brittle: exact match accuracy drops from 100% (ID) to near-zero (CMP, POOD, OOD) as soon as the test transformations deviate from those seen in training. Notably, LLMs sometimes produce correct intermediate reasoning steps but incorrect final answers, or vice versa, indicating a lack of true compositional understanding.
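The mismatch between correct steps and incorrect answers only becomes visible when the two are scored separately. The sketch below assumes a hypothetical output layout in which a model response has already been split into a list of intermediate steps and a final answer; the paper's actual parsing and metrics may differ.

```python
def score_response(pred_steps, pred_answer, gold_steps, gold_answer) -> dict:
    """Score intermediate reasoning and final answer independently."""
    steps_ok = list(pred_steps) == list(gold_steps)
    answer_ok = pred_answer == gold_answer
    return {
        "steps_correct": steps_ok,
        "answer_correct": answer_ok,
        # Strict exact match requires both parts to be right.
        "exact_match": steps_ok and answer_ok,
    }


if __name__ == "__main__":
    # Correct reasoning trace, wrong final answer.
    print(score_response(["NCCYR"], "NCCYR", ["NCCYR"], "RNCCY"))
```

Reporting the three flags separately distinguishes a response that is wrong throughout from one whose trace and answer are inconsistent with each other.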

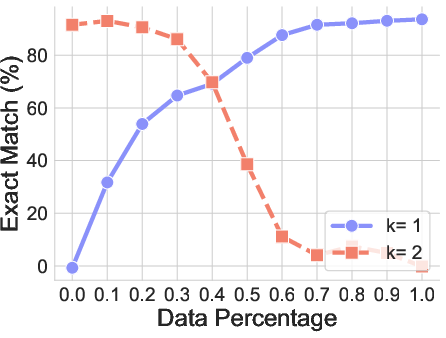

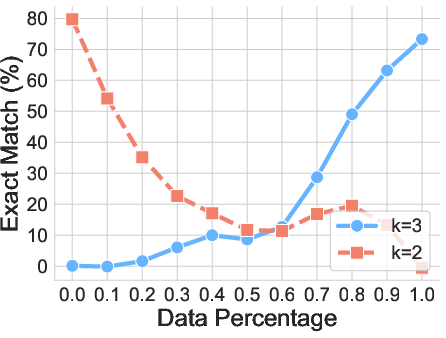

Fine-Tuning and Distributional Proximity

Introducing a small fraction of unseen transformation data via supervised fine-tuning (SFT) rapidly restores performance, but only for the specific new distribution, not for genuinely novel tasks.

Figure 3: Performance on unseen transformation using SFT in various levels of distribution shift. Introducing a small amount of unseen data helps CoT reasoning to generalize across different scenarios.

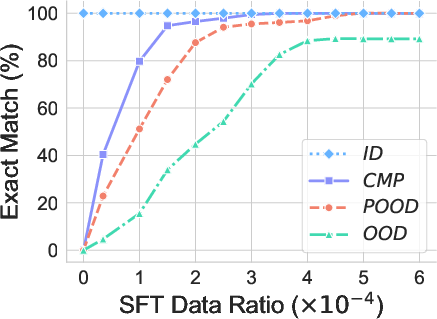

Element Generalization

When tested on elements (token sequences) containing novel atoms or unseen combinations, LLMs again fail to generalize, with performance collapsing to chance. SFT on a small number of new element examples enables rapid recovery, but only for those specific cases.

Figure 4: Element generalization results on various scenarios and relations.

Figure 5: SFT performances for element generalization. SFT helps to generalize to novel elements.

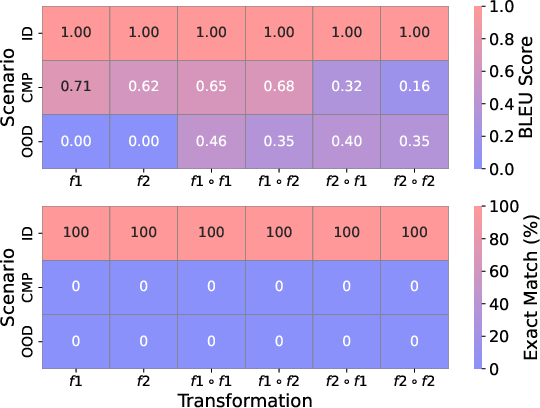

Length Generalization: Sequence and Reasoning Step Extrapolation

Text Length Generalization

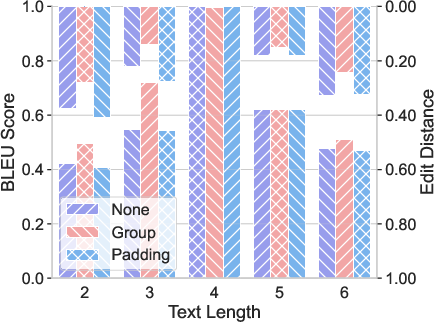

Models trained on fixed-length sequences fail to generalize to shorter or longer sequences, with performance degrading as a Gaussian function of the length discrepancy. Padding strategies do not mitigate this; only grouping strategies that expose the model to a range of lengths during training improve generalization.
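To illustrate what a Gaussian dependence on length discrepancy looks like, the sketch below evaluates a bell-shaped curve centred on the training length. The width `sigma` and the `peak` value are hypothetical parameters chosen for illustration; they are not fitted to the paper's results.

```python
import math


def gaussian_accuracy(test_len: int, train_len: int,
                      sigma: float = 1.0, peak: float = 1.0) -> float:
    """Illustrative accuracy curve: highest at the training length and
    decaying symmetrically as the test length moves away from it."""
    discrepancy = test_len - train_len
    return peak * math.exp(-(discrepancy ** 2) / (2 * sigma ** 2))


if __name__ == "__main__":
    for length in range(1, 8):
        print(length, round(gaussian_accuracy(length, train_len=4), 3))
```

Under this shape, both shorter and longer inputs are penalized, which matches the observation that models fail in both directions rather than only on longer sequences.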

Figure 6: Performance of text length generalization across various padding strategies. Group strategies contribute to length generalization.

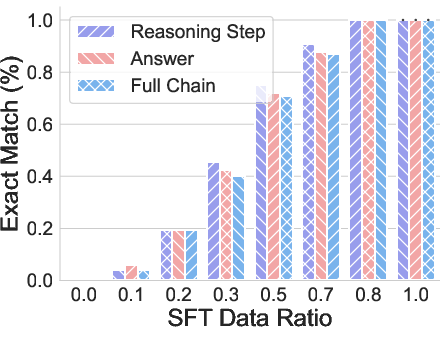

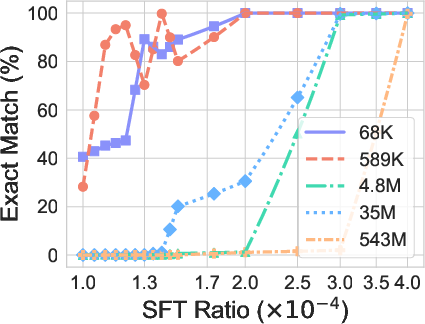

Reasoning Step Generalization

Similarly, models trained on a fixed number of reasoning steps cannot extrapolate to problems requiring more or fewer steps. SFT on new step counts enables adaptation, but only for those specific cases.

Figure 7: SFT performances for reasoning step generalization.

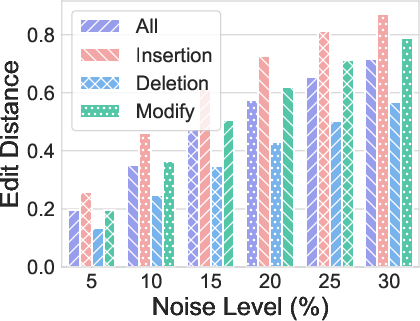

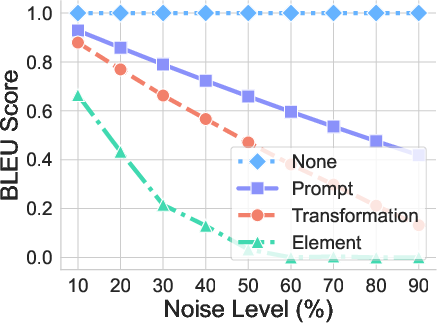

Format Generalization: Prompt Robustness

The authors probe the sensitivity of CoT reasoning to surface-level prompt perturbations (insertion, deletion, modification, hybrid). All forms of perturbation, except minor deletions, cause significant performance drops, especially when applied to tokens encoding elements or transformations. This demonstrates that CoT reasoning is not robust to superficial format changes, further supporting the pattern-matching hypothesis.

Figure 8: Performance of format generalization.
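The four perturbation types named above can be sketched as token-level operations. This is a minimal illustration under stated assumptions: perturbations are applied independently per token with probability `p`, the noise token and replacement vocabulary are hypothetical, and "hybrid" is taken to mean applying the other three in sequence. The paper's exact procedure may differ.

```python
import random


def insert_noise(tokens, p, rng, noise="<noise>"):
    """Insertion: with probability p, place a noise token before a token."""
    out = []
    for tok in tokens:
        if rng.random() < p:
            out.append(noise)
        out.append(tok)
    return out


def delete_tokens(tokens, p, rng):
    """Deletion: drop each token with probability p."""
    return [tok for tok in tokens if rng.random() >= p]


def modify_tokens(tokens, p, rng, vocab):
    """Modification: replace each token with a random vocabulary item with probability p."""
    return [rng.choice(vocab) if rng.random() < p else tok for tok in tokens]


def hybrid(tokens, p, rng, vocab):
    """Hybrid (assumed): modification, then deletion, then insertion."""
    return insert_noise(delete_tokens(modify_tokens(tokens, p, rng, vocab), p, rng), p, rng)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so perturbed prompts are reproducible
    prompt = ["A", "P", "P", "L", "E", "[F1]", "[F2]", "<answer>"]
    print(hybrid(prompt, p=0.3, rng=rng, vocab=["X", "Y", "Z"]))
```

Restricting these functions to the positions that hold element or transformation tokens would reproduce the targeted variant of the experiment, where the drops are reported to be largest.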

Model Size and Temperature Robustness

The observed brittleness of CoT reasoning holds across a range of model sizes and sampling temperatures, indicating that the findings are not artifacts of underparameterization or decoding stochasticity.

Figure 9: Temperature and model size. The findings hold under different temperatures and model sizes.

Implications and Future Directions

The results have several critical implications:

- CoT as Pattern Matching: The empirical and theoretical evidence converges on the conclusion that CoT reasoning in LLMs is a form of structured pattern matching, not abstract logical inference. The apparent reasoning ability is a mirage, vanishing under even mild distributional shift.

- Fine-Tuning is Local, Not Global: SFT can rapidly adapt models to new distributions, but this is a local patch, not a solution to the lack of generalization. Each new OOD scenario requires explicit data exposure.

- Evaluation Practices: Standard in-distribution validation is insufficient. Robustness must be assessed via systematic OOD and adversarial testing along task, length, and format axes.

- Research Directions: Achieving genuine, generalizable reasoning in LLMs will require architectural or training innovations that go beyond scaling and data augmentation. Approaches that explicitly encode algorithmic or symbolic reasoning, or that disentangle reasoning from surface pattern recognition, are promising avenues.

Conclusion

This work provides a rigorous, data-centric dissection of CoT reasoning in LLMs, demonstrating that its effectiveness is strictly bounded by the training data distribution. The DataAlchemy framework enables precise, controlled evaluation of generalization, revealing the brittleness and superficiality of current CoT capabilities. These findings underscore the need for new methods to achieve authentic, robust reasoning in future AI systems.


