CoT-Self-Instruct: Building high-quality synthetic prompts for reasoning and non-reasoning tasks (2507.23751v1)

Abstract: We propose CoT-Self-Instruct, a synthetic data generation method that instructs LLMs to first reason and plan via Chain-of-Thought (CoT) based on the given seed tasks, and then to generate a new synthetic prompt of similar quality and complexity for use in LLM training, followed by filtering for high-quality data with automatic metrics. In verifiable reasoning, our synthetic data significantly outperforms existing training datasets, such as s1k and OpenMathReasoning, across MATH500, AMC23, AIME24 and GPQA-Diamond. For non-verifiable instruction-following tasks, our method surpasses the performance of human or standard self-instruct prompts on both AlpacaEval 2.0 and Arena-Hard.

Summary

- The paper introduces a novel pipeline that leverages chain-of-thought reasoning to generate and curate synthetic prompts.

- It employs task-specific filtering—Answer-Consistency for reasoning tasks and RIP for non-reasoning tasks—to ensure high data quality.

- The approach significantly outperforms human-written and standard Self-Instruct baselines, reducing reliance on costly human annotation while improving downstream model performance.

CoT-Self-Instruct: High-Quality Synthetic Prompt Generation for Reasoning and Non-Reasoning Tasks

Introduction

The paper "CoT-Self-Instruct: Building high-quality synthetic prompts for reasoning and non-reasoning tasks" (2507.23751) introduces a synthetic data generation and curation pipeline that leverages Chain-of-Thought (CoT) reasoning to produce high-quality prompts for both verifiable reasoning and general instruction-following tasks. The method addresses the limitations of human-generated data—such as cost, scarcity, and inherent bias—by enabling LLMs to autonomously generate and filter synthetic instructions, thereby improving downstream model performance in both reasoning and non-reasoning domains.

Methodology: CoT-Self-Instruct Pipeline

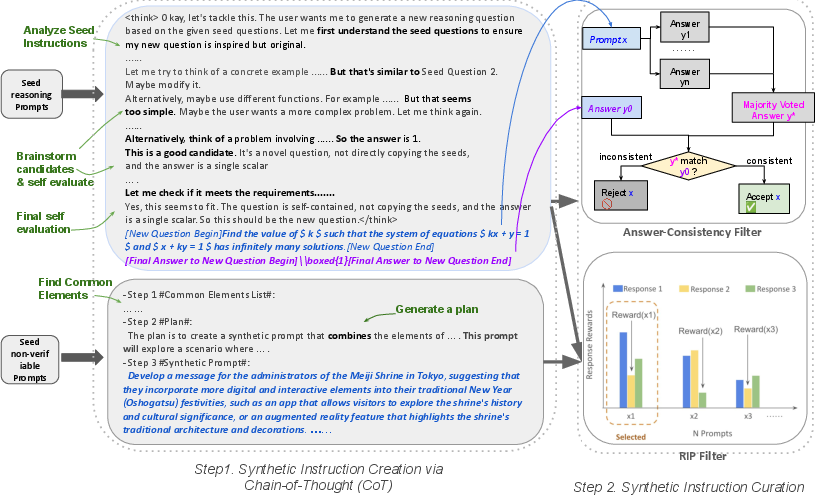

The CoT-Self-Instruct pipeline consists of two primary stages: (1) synthetic instruction creation via CoT reasoning, and (2) synthetic instruction curation using task-appropriate filtering mechanisms.

Figure 1: The CoT-Self-Instruct pipeline: LLMs are prompted to reason and generate new instructions from seed prompts, followed by automatic curation using Answer-Consistency for verifiable tasks or RIP for non-verifiable tasks.

Synthetic Instruction Creation via CoT

Given a small pool of high-quality, human-annotated seed instructions, the LLM is prompted in a few-shot manner to analyze the domain, complexity, and purpose of the seeds. The model is then instructed to reason step-by-step (CoT) to devise a plan for generating a new instruction of comparable quality and complexity. For verifiable reasoning tasks, the model also generates a reference answer via explicit reasoning. For non-verifiable tasks, only the instruction is generated, with responses to be evaluated later by a reward model.
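The creation step described above can be sketched as a prompt builder plus an output parser. This is a minimal illustration, not the paper's actual template: the template wording, the `[... Begin]`/`[... End]` tag names, and the helper names `build_generation_prompt` and `parse_generation` are all assumptions made for this example.

```python
import random
import re

# Hypothetical template wording and tag names, for illustration only.
TEMPLATE = (
    "Here are {k} seed instructions:\n{seeds}\n\n"
    "1. Analyze the domain, complexity, and purpose of the seeds.\n"
    "2. Reason step by step to plan a new instruction of comparable "
    "quality and complexity.\n"
    "3. Write it as [New Instruction Begin]...[New Instruction End].\n"
)
# Extra step used only for verifiable reasoning tasks.
ANSWER_STEP = (
    "4. Solve the new instruction step by step, then give the result as "
    "[Final Answer Begin]...[Final Answer End].\n"
)


def build_generation_prompt(seed_pool, k=2, verifiable=True, rng=None):
    """Sample k seeds and assemble a few-shot CoT generation prompt."""
    rng = rng or random.Random()
    seeds = rng.sample(seed_pool, k)
    seed_block = "\n".join(f"Seed {i + 1}: {s}" for i, s in enumerate(seeds))
    prompt = TEMPLATE.format(k=k, seeds=seed_block)
    return prompt + (ANSWER_STEP if verifiable else "")


def _between(tag, text):
    """Return the stripped text between '[tag Begin]' and '[tag End]', or None."""
    m = re.search(rf"\[{tag} Begin\](.*?)\[{tag} End\]", text, re.DOTALL)
    return m.group(1).strip() if m else None


def parse_generation(output, verifiable=True):
    """Extract the new instruction (and reference answer, if verifiable)."""
    instruction = _between("New Instruction", output)
    if instruction is None:
        return None
    if not verifiable:
        return {"instruction": instruction, "answer": None}
    answer = _between("Final Answer", output)
    if answer is None:
        return None  # a verifiable example without a reference answer is unusable
    return {"instruction": instruction, "answer": answer}
```

Outputs that fail to parse are dropped here, which is a design choice of this sketch rather than something the summary specifies.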

Synthetic Instruction Curation

To ensure the quality of the generated synthetic data, the pipeline employs task-specific filtering:

- Verifiable Reasoning Tasks: The Answer-Consistency filter discards examples where the LLM's majority-vote solution does not match the CoT-generated answer, thus removing incorrectly labeled or overly difficult prompts.

- Non-Verifiable Tasks: The Rejecting Instruction Preferences (RIP) filter uses a reward model to score multiple LLM-generated responses per prompt, retaining only those prompts whose lowest response score exceeds a threshold, thereby filtering out ambiguous or low-quality instructions.
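The two filters described above can be sketched as simple predicates over per-prompt samples. This is a minimal sketch under stated assumptions: answers are compared by exact string match after stripping whitespace, reward scores are plain floats, and the function names and the `threshold` parameter are hypothetical. The real pipeline would obtain `sampled_answers` from an LLM and `response_scores` from a reward model.

```python
from collections import Counter


def answer_consistency_keep(reference_answer, sampled_answers):
    """Keep a prompt only if the majority-vote answer matches the
    reference answer produced during CoT generation."""
    if not sampled_answers:
        return False
    counts = Counter(a.strip() for a in sampled_answers)
    # most_common breaks ties by first occurrence (an arbitrary choice here).
    majority, _ = counts.most_common(1)[0]
    return majority == reference_answer.strip()


def rip_keep(response_scores, threshold):
    """Keep a prompt only if its lowest-scoring response still
    exceeds the threshold."""
    return bool(response_scores) and min(response_scores) > threshold
```

For example, `answer_consistency_keep("42", ["42", "42", "41"])` keeps the prompt, while `rip_keep([0.8, 0.2, 0.7], threshold=0.5)` rejects it because one response scores below the threshold.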

Experimental Setup

The efficacy of CoT-Self-Instruct is evaluated on both reasoning and non-reasoning tasks, with comprehensive ablations and comparisons to existing baselines.

Reasoning Tasks

- Seed Data: s1k dataset (893 verifiable prompts).

- Prompt Generation: Qwen3-4B-Base and Qwen3-4B models, with both CoT-Self-Instruct and Self-Instruct templates.

- Training: GRPO (RL with verifiable rewards), 40 epochs, batch size 128, 16 rollouts per prompt.

- Evaluation: MATH500, AMC23, AIME24, GPQA-Diamond; pass@1 over 16 seeds.
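To make the training and evaluation settings above more concrete, the following sketch shows a binary verifiable reward, the group-relative advantage normalization commonly associated with GRPO, and pass@1 averaged over several seeds. It is an illustration only: exact-match reward, the population standard deviation, the `eps` constant, and all function names are assumptions, and implementations differ in these details.

```python
import statistics


def verifiable_reward(predicted, reference):
    """Binary reward: 1.0 if the predicted answer matches the reference."""
    return 1.0 if predicted.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize the rewards of one prompt's rollouts by the group
    mean and standard deviation."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when all rollouts get the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


def mean_pass_at_1(correct_by_seed):
    """Average single-sample accuracy over seeds.

    correct_by_seed: one list of booleans per seed, one boolean per problem.
    """
    per_seed = [sum(run) / len(run) for run in correct_by_seed]
    return statistics.fmean(per_seed)
```

With 16 rollouts per prompt, `rewards` would hold 16 values. A prompt whose rollouts are all correct or all wrong yields zero advantages and therefore contributes no learning signal under this formulation.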

Non-Reasoning Tasks

- Seed Data: Wildchat-RIP-Filtered-by-8b-Llama (4k prompts, 8 categories).

- Prompt Generation: Llama 3.1-8B-Instruct, with CoT-Self-Instruct and Self-Instruct templates.

- Training: DPO (offline and online), 5k DPO pairs, length-normalized reward selection.

- Evaluation: AlpacaEval 2.0 and Arena-Hard, using GPT-4-turbo and GPT-4o as judges.
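For readers unfamiliar with the DPO objective used in this setup, the per-pair loss can be sketched as follows. This is the standard DPO formulation, not code from the paper; the function name, the argument names, and the default `beta=0.1` are assumptions for illustration. Each argument is the summed log-probability of a full response under the policy or the frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen log-ratio
    minus rejected log-ratio)))."""
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(m)) == softplus(-m), computed in a numerically stable way.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

When the policy equals the reference model the margin is zero and the loss is log 2; the loss falls as the policy assigns relatively more probability to the chosen response than to the rejected one.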

Results

Reasoning Tasks

- CoT-Self-Instruct outperforms Self-Instruct: Without filtering, CoT-Self-Instruct achieves 53.0% average accuracy, compared to 49.5% for Self-Instruct.

- Filtering amplifies gains: With Self-Consistency filtering, CoT-Self-Instruct improves to 55.1%, and with RIP filtering to 56.2%. The Answer-Consistency filter yields the highest performance at 57.2% (2926 prompts), and further scaling to 10k prompts increases accuracy to 58.7%.

- Superiority over human and public synthetic data: CoT-Self-Instruct with Answer-Consistency filtering significantly outperforms s1k (44.6%) and OpenMathReasoning (47.5%) baselines, even when matched for training set size.

Non-Reasoning Tasks

- CoT-Self-Instruct surpasses Self-Instruct and human prompts: Averaged across the evaluation benchmarks, CoT-Self-Instruct achieves a score of 53.9 (DPO, unfiltered), versus 47.4 for Self-Instruct and 46.8 for WildChat human prompts.

- RIP filtering further improves results: CoT-Self-Instruct with RIP filtering reaches 54.7, outperforming both filtered Self-Instruct (49.1) and filtered WildChat (50.7).

- Online DPO yields additional improvements: CoT-Self-Instruct+RIP achieves 67.1, compared to 63.1 for WildChat, under online DPO training.

Ablation and Template Analysis

- Longer CoT chains yield higher-quality prompts: Experiments with varying CoT lengths demonstrate that more extensive reasoning during prompt generation consistently improves downstream model performance.

- Filtering is critical: Both Answer-Consistency and RIP filtering are essential for maximizing the utility of synthetic data, with Answer-Consistency being optimal for verifiable tasks and RIP for non-verifiable tasks.

Implications and Future Directions

The results demonstrate that LLMs, when guided to reason and plan via CoT, can autonomously generate synthetic data that is not only competitive with but often superior to human-annotated and standard synthetic datasets. The strong numerical gains—up to 14 percentage points over human data in some settings—underscore the importance of explicit reasoning and rigorous filtering in synthetic data pipelines.
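The size of these gains can be checked directly from the figures reported in the Results section. The short sketch below only re-derives differences from those reported numbers; the variable and function names are illustrative.

```python
# Average scores as reported in the Results section.
REASONING_BASELINES = {"s1k": 44.6, "OpenMathReasoning": 47.5}
COT_ANSWER_CONSISTENCY = 57.2      # 2926 prompts
COT_ANSWER_CONSISTENCY_10K = 58.7  # scaled to 10k prompts

COT_RIP_DPO, WILDCHAT_DPO = 54.7, 46.8        # offline DPO, vs. unfiltered WildChat
COT_RIP_ONLINE, WILDCHAT_ONLINE = 67.1, 63.1  # online DPO


def gain(ours, baseline):
    """Difference in points, rounded to one decimal place."""
    return round(ours - baseline, 1)


for name, score in REASONING_BASELINES.items():
    print(name, gain(COT_ANSWER_CONSISTENCY, score),
          gain(COT_ANSWER_CONSISTENCY_10K, score))
print("WildChat (DPO)", gain(COT_RIP_DPO, WILDCHAT_DPO))
print("WildChat (online DPO)", gain(COT_RIP_ONLINE, WILDCHAT_ONLINE))
```

The largest difference, 14.1 points, is between the 10k-prompt CoT-Self-Instruct set and the s1k baseline on the reasoning benchmarks.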

From a practical perspective, this approach reduces reliance on costly human annotation and enables scalable, domain-adaptive data generation. Theoretically, the findings suggest that LLMs possess sufficient meta-cognitive capacity to self-improve through structured prompt engineering and self-critique.

Potential future developments include:

- Extending CoT-Self-Instruct to multi-modal and multi-agent settings, where reasoning over diverse data types or collaborative tasks may further benefit from structured synthetic data.

- Investigating the limits of recursive self-improvement, particularly in light of concerns about model collapse when training on recursively generated data.

- Integrating more sophisticated reward models and filtering criteria, potentially leveraging human-in-the-loop or adversarial evaluation to further enhance data quality.

Conclusion

CoT-Self-Instruct establishes a robust framework for high-quality synthetic prompt generation by combining explicit CoT reasoning with task-appropriate filtering. The method consistently outperforms both human and standard synthetic data baselines across a range of reasoning and instruction-following benchmarks. These results highlight the viability of autonomous, LLM-driven data generation pipelines for advancing the capabilities of future LLMs.
