- The paper introduces EdgeSNNs by integrating spiking neural networks with edge computing to overcome resource constraints and reduce data transmission latency.

- It details innovative methods including ANN2SNN conversion, on-device learning, and hardware-aware optimizations to balance performance with energy efficiency.

- The paper addresses security and privacy challenges through encrypted weights and privacy-preserving strategies, ensuring robust decentralized data processing.

"Edge Intelligence with Spiking Neural Networks" (2507.14069)

Introduction to EdgeSNNs

The research on "Edge Intelligence with Spiking Neural Networks" tackles the integration of Spiking Neural Networks (SNNs) with edge computing. This convergence offers a promising solution to address the constraints posed by limited computational resources and data privacy concerns in edge computing environments. Traditional cloud-centric AI solutions suffer from latency issues and high bandwidth demands. By leveraging the energy-efficient, event-driven dynamics of SNNs, edge devices can perform intelligent tasks locally, minimizing the need for data transmission to centralized data centers.

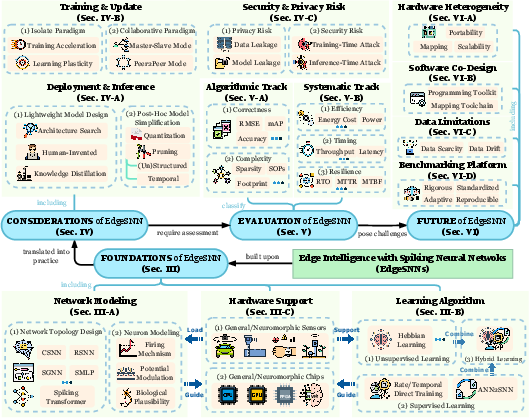

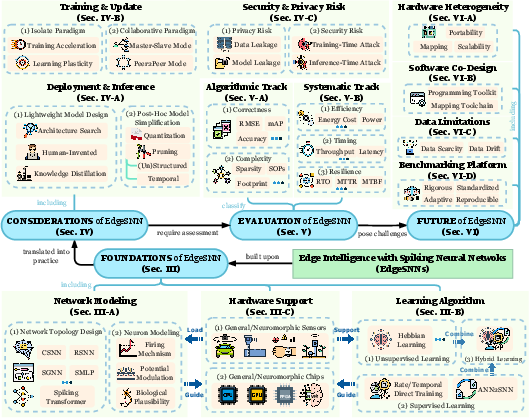

Figure 1: The organizational structure of EdgeSNN systems is built on four core aspects: foundational principles, practical building considerations, evaluation methodologies, and future challenges.

Foundational Components of EdgeSNNs

Neuronal and Network Modeling

SNNs offer a biologically plausible model that processes information with spikes, or discrete events, which aligns them naturally with the low-power requirements of edge devices. Central to this approach is the Leaky Integrate-and-Fire (LIF) neuron, widely used because it balances biological fidelity with computational efficiency.
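To make the LIF dynamics concrete, here is a minimal discrete-time sketch of a single neuron. It is an illustration only, not the paper's formulation: the function name `simulate_lif`, the parameter values (`tau`, `v_threshold`, `v_reset`, `dt`), and the hard-reset rule are all assumptions chosen for readability.

```python
import numpy as np

def simulate_lif(input_current, tau=10.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one LIF neuron over a 1-D input; return (spikes, membrane trace).

    All parameter values are illustrative assumptions, not values from the paper.
    """
    v = v_reset
    spikes = np.zeros(len(input_current), dtype=np.int8)
    trace = np.zeros(len(input_current))
    for t, i_t in enumerate(input_current):
        # Leak toward the reset potential, then integrate the input current.
        v = v + (dt / tau) * (-(v - v_reset) + i_t)
        if v >= v_threshold:
            spikes[t] = 1   # emit a discrete event
            v = v_reset     # hard reset after the spike (one common choice)
        trace[t] = v
    return spikes, trace

# A constant supra-threshold input produces a regular spike train;
# a sub-threshold input produces no spikes at all.
strong_spikes, _ = simulate_lif(np.full(100, 1.5))
weak_spikes, _ = simulate_lif(np.full(100, 0.5))
```

Because the neuron only produces output when its membrane potential crosses the threshold, downstream computation is needed only at spike times, which is the source of the event-driven efficiency described above.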

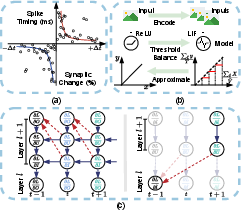

The challenge is to design spiking counterparts of architectures such as CNNs and RNNs that retain SNN efficiency while maintaining high performance on tasks traditionally dominated by artificial neural networks (ANNs). Recent solutions incorporate temporal and spatial feature extraction capabilities (Figure 2).

Figure 2: Diverse architectures, such as CNNs, GNNs, RNNs, and Transformers, are being adapted to SNN topologies to exploit spatial, sequential, and global data dependencies.

Learning Algorithms

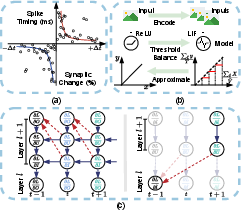

Learning in SNNs traditionally mimics the synaptic plasticity observed in biological brains, for example through Spike-Timing-Dependent Plasticity (STDP). Supervised gradient-descent training, familiar from ANNs, can also be applied to SNNs: surrogate gradients replace the non-differentiable spike function during the backward pass, which makes backpropagation possible.
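The two ideas in this paragraph can be sketched in a few lines. The first function is a textbook pair-based STDP rule; the second pair shows a hard-threshold spike function together with a fast-sigmoid surrogate derivative. The function names, amplitudes, time constants, and the `slope` parameter are illustrative assumptions, and the paper may use different rule variants.

```python
import numpy as np

def stdp_delta_w(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (in ms).

    Amplitudes and time constants are illustrative assumptions.
    """
    delta_t = np.asarray(delta_t, dtype=float)
    return np.where(
        delta_t >= 0,
        a_plus * np.exp(-delta_t / tau_plus),     # pre fires before post: potentiate
        -a_minus * np.exp(delta_t / tau_minus),   # post fires before pre: depress
    )

def spike_forward(v, v_threshold=1.0):
    """Forward pass: non-differentiable Heaviside step on the membrane potential."""
    return (np.asarray(v) >= v_threshold).astype(float)

def spike_surrogate_grad(v, v_threshold=1.0, slope=5.0):
    """Backward pass: fast-sigmoid derivative used in place of the step's gradient."""
    return 1.0 / (1.0 + slope * np.abs(np.asarray(v) - v_threshold)) ** 2

v = np.array([0.5, 1.0, 1.2])
spikes = spike_forward(v)         # step output used in the forward pass
grads = spike_surrogate_grad(v)   # smooth stand-in used in the backward pass
```

The surrogate peaks at the threshold and decays away from it, so neurons whose potential is close to firing receive the largest gradient signal.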

The paper details methods such as direct training, which simulates the SNN fully during training, and ANN2SNN conversion, in which a trained ANN is translated into an SNN, offering a balance between accuracy and resource efficiency. Training efficiency is crucial on resource-constrained edge devices, which motivates hybrid learning paradigms that combine multiple strategies (Figure 3).
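One common intuition behind rate-based ANN2SNN conversion is that the firing rate of an integrate-and-fire neuron driven by a constant input approximates a ReLU activation. The sketch below illustrates that idea only; it is not the specific conversion pipeline described in the paper, and the function name `if_firing_rate`, the reset-by-subtraction rule, and the choice of 100 timesteps are assumptions.

```python
import numpy as np

def if_firing_rate(activation, timesteps=100, v_threshold=1.0):
    """Drive non-leaky IF neurons with a constant input and return their firing rates.

    Uses reset-by-subtraction, so residual charge carries over between spikes.
    """
    activation = np.asarray(activation, dtype=float)
    v = np.zeros_like(activation)
    spike_count = np.zeros_like(activation)
    for _ in range(timesteps):
        v += activation               # integrate the constant input
        fired = v >= v_threshold
        spike_count += fired          # count spikes per neuron
        v[fired] -= v_threshold       # soft reset keeps the leftover charge
    return spike_count / timesteps * v_threshold

pre_activation = np.array([-0.5, 0.0, 0.25, 0.5, 0.9])
relu_output = np.maximum(pre_activation, 0.0)
snn_rate = if_firing_rate(pre_activation)
max_error = float(np.max(np.abs(snn_rate - relu_output)))
```

In this sketch the approximation error shrinks as the number of timesteps grows, which reflects the latency versus accuracy trade-off of rate-coded conversion. Inputs above the threshold saturate at one spike per step, which is why conversion schemes typically rescale weights or thresholds first.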

Figure 3: Visualization of the STDP and ANN2SNN methods illustrates the range of learning techniques applicable to SNNs.

Practical Deployment of EdgeSNNs

The deployment of SNNs on edge devices involves optimizing models to fit the memory and processing constraints inherent to such platforms. Techniques include:

- Model Pruning and Quantization: Removing redundant weights, neurons, or layers and lowering numerical precision while preserving accuracy, so that models fit on devices with limited memory and compute.

- On-Device Learning: Allowing devices to adapt to new data in dynamic environments, for example through Federated Learning, in which a cloud server and multiple edge devices collaboratively update a shared model.
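As a rough illustration of the first technique, the sketch below applies unstructured magnitude pruning followed by symmetric per-tensor int8 quantization to a weight matrix. These are generic compression steps, not the specific methods surveyed in the paper; the function names, the 50% sparsity target, and the int8 format are assumptions.

```python
import numpy as np

def magnitude_prune(weights, sparsity=0.5):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    flat = np.abs(weights).ravel()
    k = int(sparsity * flat.size)
    if k == 0:
        return weights.copy()
    # k-th smallest magnitude serves as the pruning threshold.
    threshold = np.partition(flat, k - 1)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)

def quantize_int8(weights):
    """Symmetric per-tensor quantization; returns (int8 values, float scale)."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))           # stand-in for a trained layer's weights
w_pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(w_pruned)
w_restored = q.astype(np.float64) * scale  # dequantize to inspect the error
```

Storing `q` and `scale` instead of 32-bit floats cuts weight memory roughly fourfold, and the zeros introduced by pruning can be skipped entirely on hardware that supports sparse computation.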

The paper emphasizes the importance of creating hardware-aware optimization strategies to ensure models capitalize on the unique capabilities of neuromorphic hardware while aligning with edge constraints.

Security and Privacy

Robust security and privacy measures are as important as intelligence itself. Privacy challenges in EdgeSNNs stem from decentralized data, which calls for on-device processing to protect sensitive information. Proposed solutions include encrypting SNN weights and using privacy-preserving learning techniques, such as differential privacy, to reduce the risk of data leakage.
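A minimal sketch of how differential privacy is often applied to a model update before it leaves a device: clip the update's L2 norm, then add Gaussian noise scaled to the clipping bound. This follows the general Gaussian-mechanism recipe rather than any scheme specified in the paper; `privatize_update`, `clip_norm`, and `noise_multiplier` are hypothetical names, and the resulting privacy guarantee depends on accounting that is not shown here.

```python
import numpy as np

def privatize_update(update, clip_norm=1.0, noise_multiplier=1.1, rng=None):
    """Clip an update to `clip_norm` in L2 norm, then add Gaussian noise.

    Parameter values are illustrative; the (epsilon, delta) guarantee is
    determined by a separate privacy accountant, which is omitted here.
    """
    rng = np.random.default_rng() if rng is None else rng
    norm = np.linalg.norm(update)
    # Scale down only when the norm exceeds the bound.
    clipped = update * min(1.0, clip_norm / (norm + 1e-12))
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise

raw_update = np.array([3.0, 4.0])   # L2 norm of 5, well above the bound
noisy_update = privatize_update(raw_update, rng=np.random.default_rng(0))
clipped_only = privatize_update(raw_update, noise_multiplier=0.0)
```

Clipping bounds how much any single device's data can influence the shared model, and the noise masks what remains of that influence.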

The paper also discusses defenses against adversarial attacks, which can target both the training and inference phases, with the aim of preserving model integrity and reliable performance across edge applications.

Evaluation and Future Directions

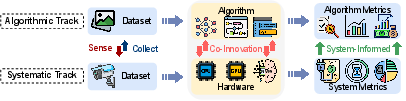

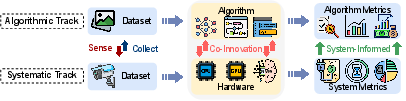

The evaluation of EdgeSNN systems is divided into algorithm and system levels, focusing on correctness and computational efficiency under resource constraints (Figure 4). This dual-track evaluation provides a balanced framework for benchmarking SNN solutions across deployment scenarios.

Figure 4: Evaluation of EdgeSNNs considers both algorithmic intricacies and system-level metrics, offering comprehensive insights into performance under constraints.
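To illustrate how the two tracks might be reported side by side, the sketch below pairs an algorithm-level metric (accuracy) with a simple system-level proxy (energy estimated from synaptic operations). The metric choice, the function names, and the default of 0.9 pJ per operation are assumptions for illustration and should be replaced with figures measured on the target hardware; the paper's own evaluation protocol may differ.

```python
def accuracy(predictions, labels):
    """Algorithm-level metric: fraction of predictions that match the labels."""
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)

def estimate_energy_pj(spike_counts, fan_outs, energy_per_sop_pj=0.9):
    """System-level proxy: energy from synaptic operations (SOPs).

    Each spike from a layer triggers one accumulate per outgoing connection,
    so SOPs = spikes emitted * fan-out, summed over layers. The per-operation
    energy is a placeholder assumption, not a measured value.
    """
    sops = sum(s * f for s, f in zip(spike_counts, fan_outs))
    return sops * energy_per_sop_pj

# Hypothetical numbers for a two-layer network on one input sample.
acc = accuracy([1, 0, 2, 2], [1, 1, 2, 0])
energy = estimate_energy_pj(spike_counts=[100, 50], fan_outs=[10, 4])
```

Reporting both numbers together makes the trade-off visible: a model that gains accuracy by firing many more spikes may no longer fit an edge device's energy budget.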

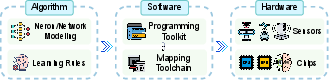

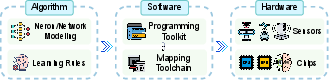

The paper closes by acknowledging open challenges such as hardware heterogeneity and the need for co-design methodologies spanning algorithms, software, and hardware (Figure 5).

Figure 5: Development workflow in EdgeSNN systems emphasizes a co-design approach linking software to hardware implementations.

Conclusion

The integration of SNNs with edge computing stands to revolutionize intelligent processing for IoT applications, providing scalable, low-power solutions tailored for real-time environments. As neuromorphic AI evolves, further exploration into robust, flexible, and efficient models will be essential to meet future demands in edge computing scenarios.