- The paper introduces an innovative neural network model combining VAEs and MHNs to mimic complementary learning systems.

- The proposed method reaches accuracy within 6% of the upper baseline on the Split-MNIST task while reducing catastrophic forgetting.

- The study demonstrates that MHNs excel in pattern separation and VAEs in pattern completion, bridging biological plausibility with practical performance.

Summary of "A Neural Network Model of Complementary Learning Systems: Pattern Separation and Completion for Continual Learning"

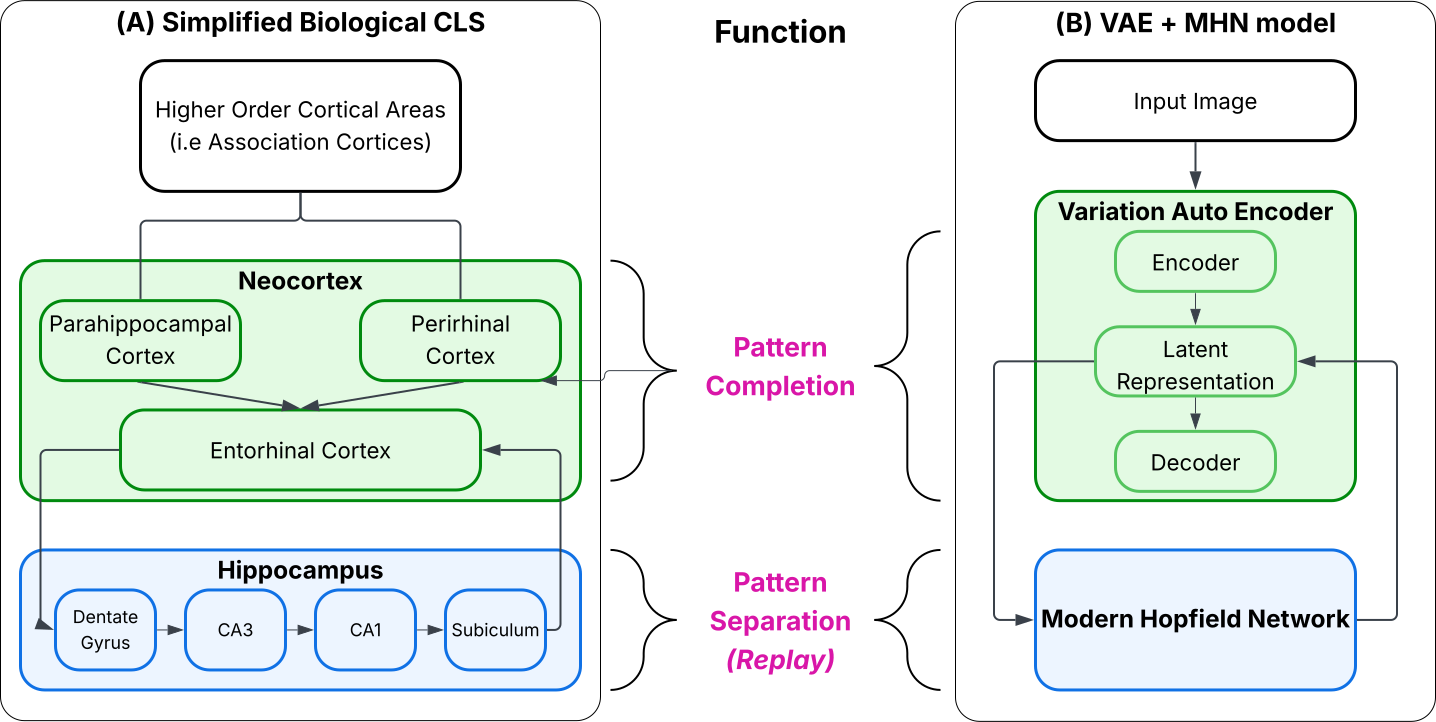

This paper presents a neural network model based on Complementary Learning Systems (CLS) theory that addresses catastrophic forgetting in artificial neural networks. The model integrates Variational Autoencoders (VAEs) and Modern Hopfield Networks (MHNs), using the MHN to emulate pattern separation and the VAE to emulate pattern completion. This biologically inspired approach is evaluated on the Split-MNIST task.

Introduction and Background

Catastrophic forgetting is a major obstacle when neural networks learn incrementally. In the human brain, the hippocampus and neocortex support continual learning through CLS [Mcclelland1995, Kumaran2016]; artificial models lack such a mechanism by default. Generative Replay (GR) techniques, inspired by CLS, have mitigated forgetting but sometimes sacrificed biological plausibility for performance.

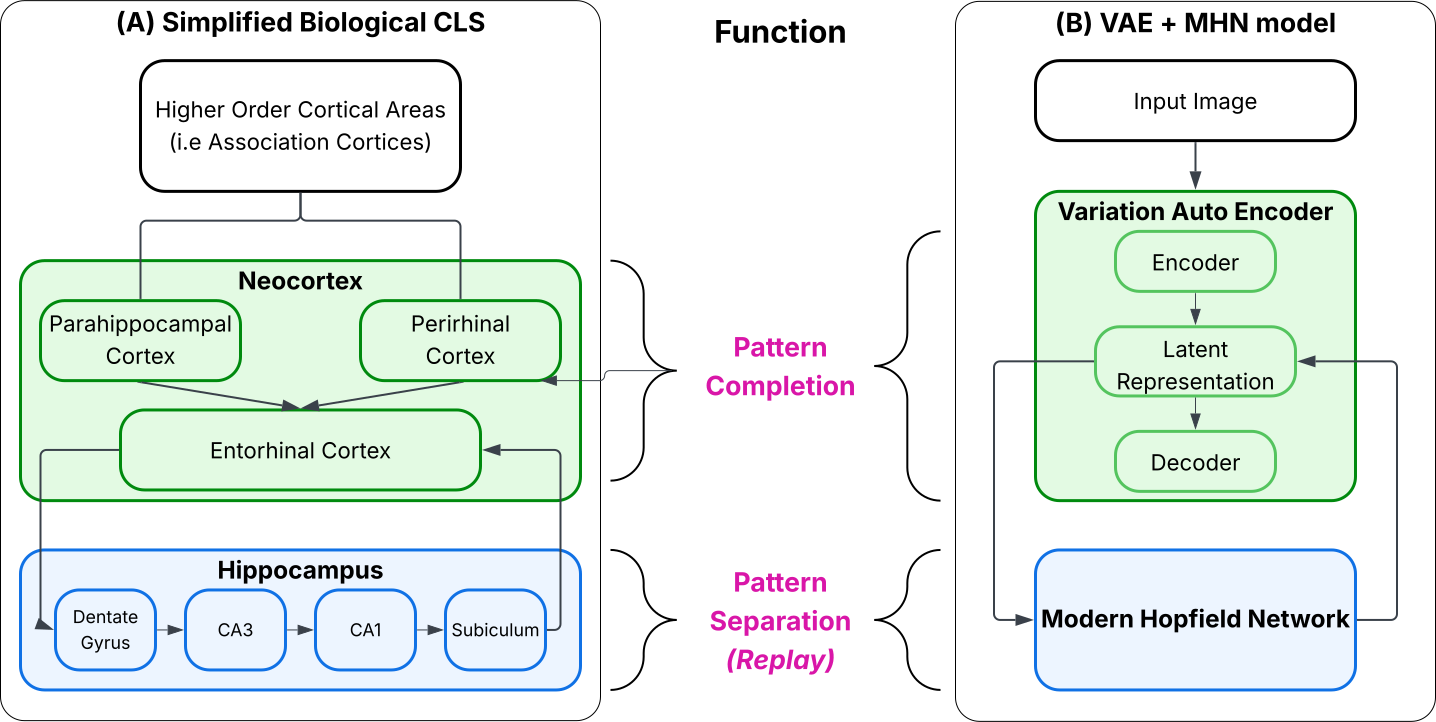

The paper proposes a model that combines a VAE, representing the neocortical function of pattern completion, with an MHN, reflecting the hippocampal function of pattern separation. The approach is grounded in CLS principles and aims to simulate the interaction between the two memory systems for efficient continual learning.

Figure 1: A schematic representation of the CLS framework, illustrating memory function divisions between the hippocampus and neocortex.

Methodology

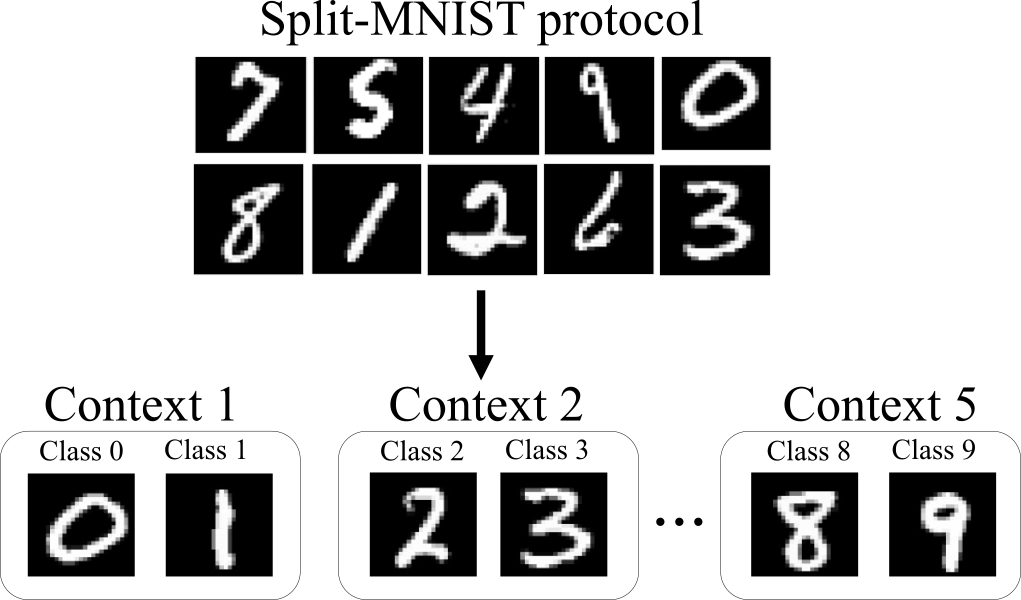

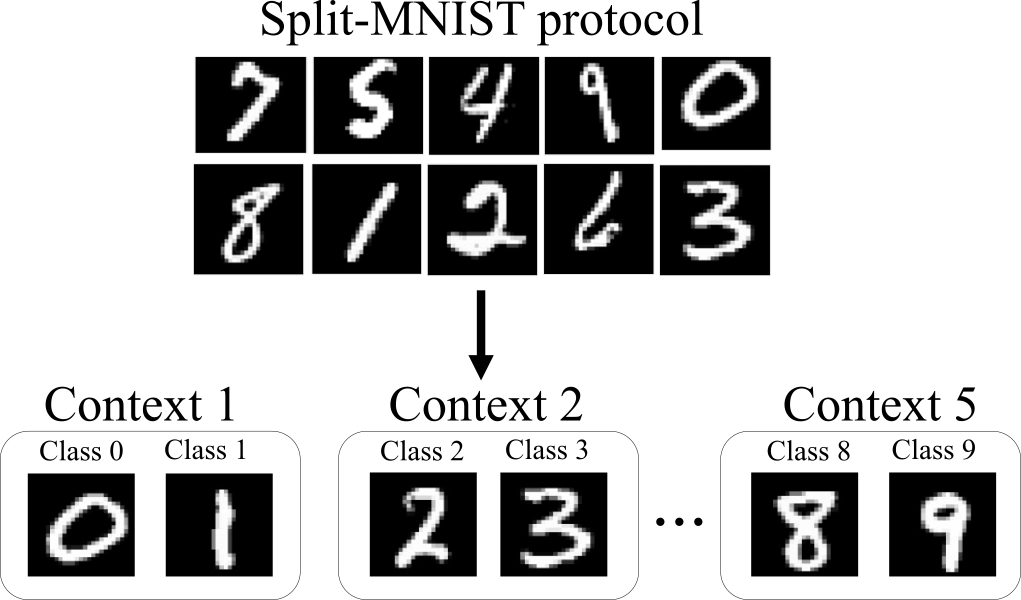

The VAE+MHN model is tested on the Split-MNIST task, in which binary classification tasks are learned one after another. The MHN supports pattern separation by storing distinct episodic memories, and the VAE supports pattern completion by generalizing from replayed samples.
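As an illustration of how an MHN can hold distinct memories and recover one from a degraded cue, the sketch below applies the standard Modern Hopfield retrieval rule, in which the query is replaced by a softmax-weighted average of the stored patterns. It is a minimal sketch and not the paper's implementation: the function names, the toy patterns, and the inverse temperature `beta` are assumptions chosen for the example.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mhn_retrieve(patterns, query, beta=8.0, steps=1):
    """Standard Modern Hopfield update: q <- sum_i softmax(beta * <x_i, q>)_i * x_i.

    `beta` (assumed value) controls how sharply retrieval focuses on the
    best-matching stored pattern.
    """
    state = list(query)
    for _ in range(steps):
        scores = [beta * sum(p_j * s_j for p_j, s_j in zip(p, state))
                  for p in patterns]
        weights = softmax(scores)
        state = [sum(w * p[j] for w, p in zip(weights, patterns))
                 for j in range(len(state))]
    return state

# Toy episodic memories (hypothetical data).
stored = [
    [1.0, 1.0, -1.0, -1.0],
    [-1.0, 1.0, 1.0, -1.0],
    [1.0, -1.0, 1.0, 1.0],
]
noisy_cue = [1.0, 0.6, -0.8, -1.0]  # degraded version of the first pattern
recalled = mhn_retrieve(stored, noisy_cue)
```

With a large `beta`, the softmax weights concentrate on a single stored pattern, so each memory stays distinct rather than blending with its neighbors.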

Training uses CLS-inspired generative replay over latent space representations, with the MHN and VAE arranged in a teacher-student relationship. Key parameters such as latent dimensionality and replay ratio significantly influence model performance.
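One way to picture the role of a replay ratio is as the fraction of each training batch that is drawn from replayed samples rather than from the current task. The sketch below assumes that interpretation, which the summary does not confirm; `mix_batch` and the toy data are hypothetical.

```python
import random

def mix_batch(current_samples, replay_samples, batch_size, replay_ratio, rng):
    """Build one batch from current-task data and replayed samples.

    Assumption: `replay_ratio` is the share of the batch taken from replay.
    """
    n_replay = min(round(batch_size * replay_ratio), len(replay_samples))
    n_current = batch_size - n_replay
    batch = rng.sample(replay_samples, n_replay) + rng.sample(current_samples, n_current)
    rng.shuffle(batch)
    return batch

rng = random.Random(0)
current = [("current", i) for i in range(20)]  # samples from the task being learned
replayed = [("replay", i) for i in range(20)]  # samples regenerated from earlier tasks
batch = mix_batch(current, replayed, batch_size=8, replay_ratio=0.25, rng=rng)
n_from_replay = sum(1 for source, _ in batch if source == "replay")
```

Under this reading, raising the ratio gives earlier tasks more weight in each update at the cost of fewer new-task samples per batch.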

Figure 2: Class-incremental continual learning setup on the MNIST dataset (Split-MNIST Protocol).
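The Split-MNIST protocol can be sketched as a partition of the ten digit classes into five consecutive two-class tasks that are presented in sequence. The pairing (0,1), (2,3), and so on is the common convention and is assumed here; `make_split_tasks` and the label list are hypothetical.

```python
def make_split_tasks(labels, classes_per_task=2, num_classes=10):
    """Group sample indices into sequential tasks of `classes_per_task` classes each."""
    tasks = []
    for start in range(0, num_classes, classes_per_task):
        task_classes = set(range(start, start + classes_per_task))
        indices = [i for i, y in enumerate(labels) if y in task_classes]
        tasks.append((sorted(task_classes), indices))
    return tasks

# Toy label list standing in for MNIST labels.
labels = [3, 0, 1, 9, 8, 2, 5, 4, 7, 6, 0, 3]
tasks = make_split_tasks(labels)
for task_classes, indices in tasks:
    print(task_classes, indices)
```

In the class-incremental setting, the model sees these tasks one at a time but is evaluated on all classes encountered so far.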

Results

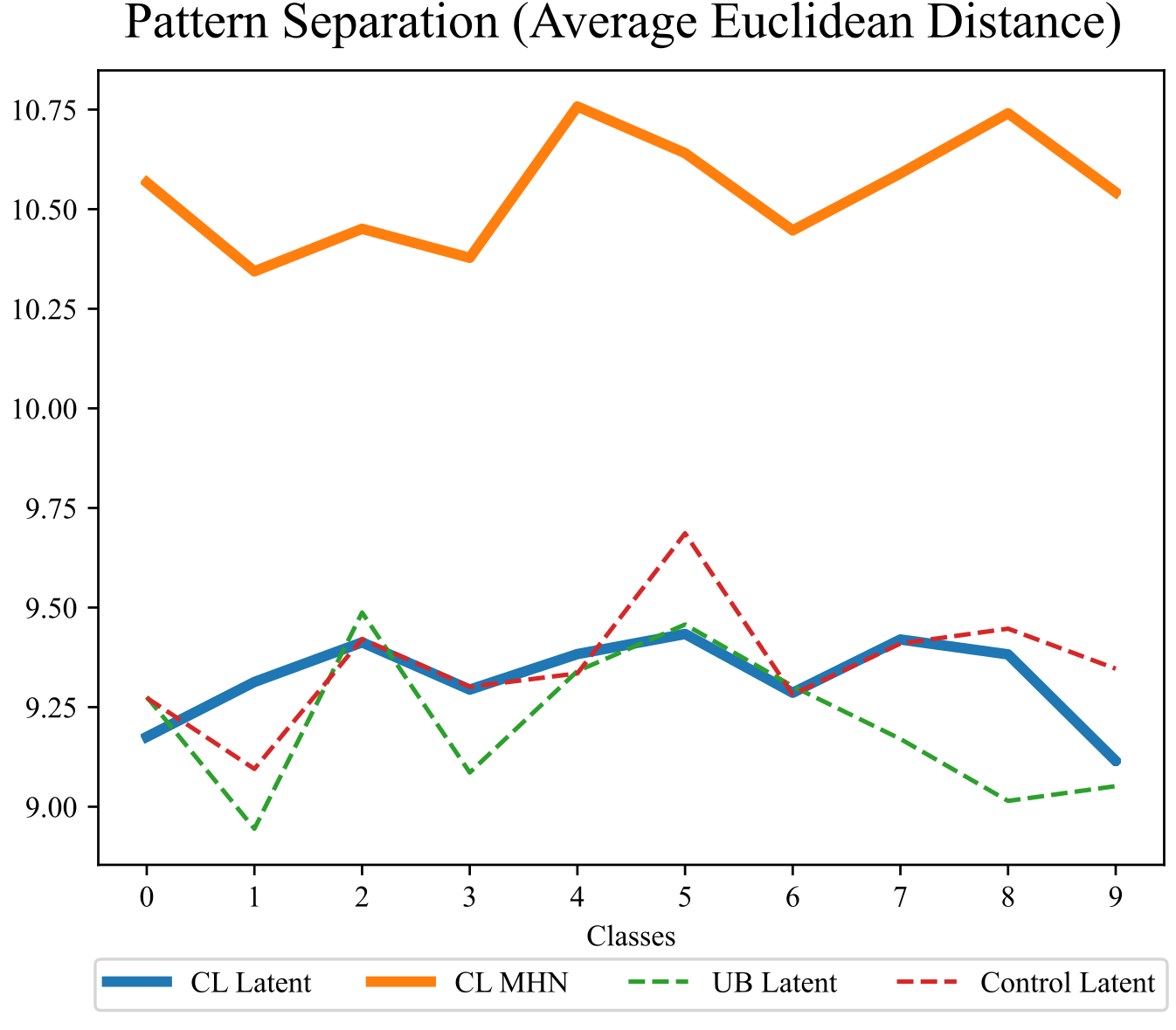

The VAE+MHN model achieves test accuracy near state-of-the-art levels, and measures such as Euclidean distance and SSIM support its functional alignment with CLS [Spens2024]. Its accuracy comes within 6% of the upper baseline, which is trained without generative replay, while catastrophic interference is kept low.

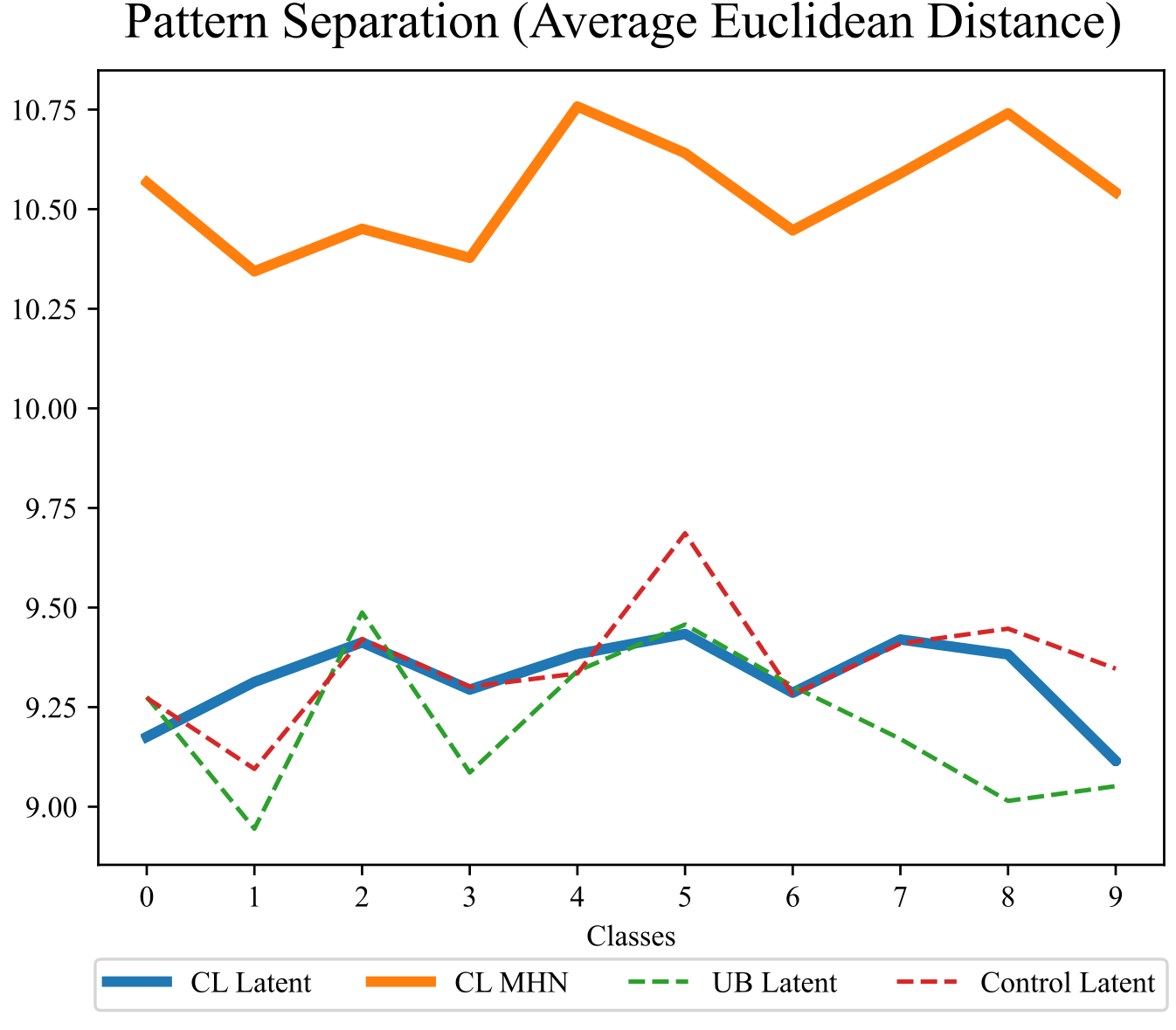

Pattern separation analyses show that the MHN distinguishes samples within the same class better than the VAE latent layer does, consistent with its role in providing differentiated representations.

Figure 3: Averaged intra-class Euclidean distances highlight enhanced pattern separation in MHN representations.
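The intra-class distance measure can be sketched as the mean pairwise Euclidean distance between representations that share a label, where a larger value indicates that same-class samples are kept further apart. This is a minimal sketch; the paper's exact averaging procedure is not given in this summary, and the function name and toy vectors are assumptions.

```python
import math
from itertools import combinations

def mean_intra_class_distance(vectors, labels):
    """Return {label: mean pairwise Euclidean distance among that label's vectors}."""
    by_class = {}
    for v, y in zip(vectors, labels):
        by_class.setdefault(y, []).append(v)
    result = {}
    for y, members in by_class.items():
        dists = [math.dist(a, b) for a, b in combinations(members, 2)]
        if dists:  # classes with a single sample have no pairs
            result[y] = sum(dists) / len(dists)
    return result

# Toy representations (hypothetical): class 0 is spread out, class 1 is collapsed.
vectors = [(0.0, 0.0), (3.0, 4.0), (1.0, 1.0), (1.0, 1.0)]
labels = [0, 0, 1, 1]
distances = mean_intra_class_distance(vectors, labels)
```

Applying the same function to MHN and VAE representations of identical inputs would give the kind of comparison the figure describes.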

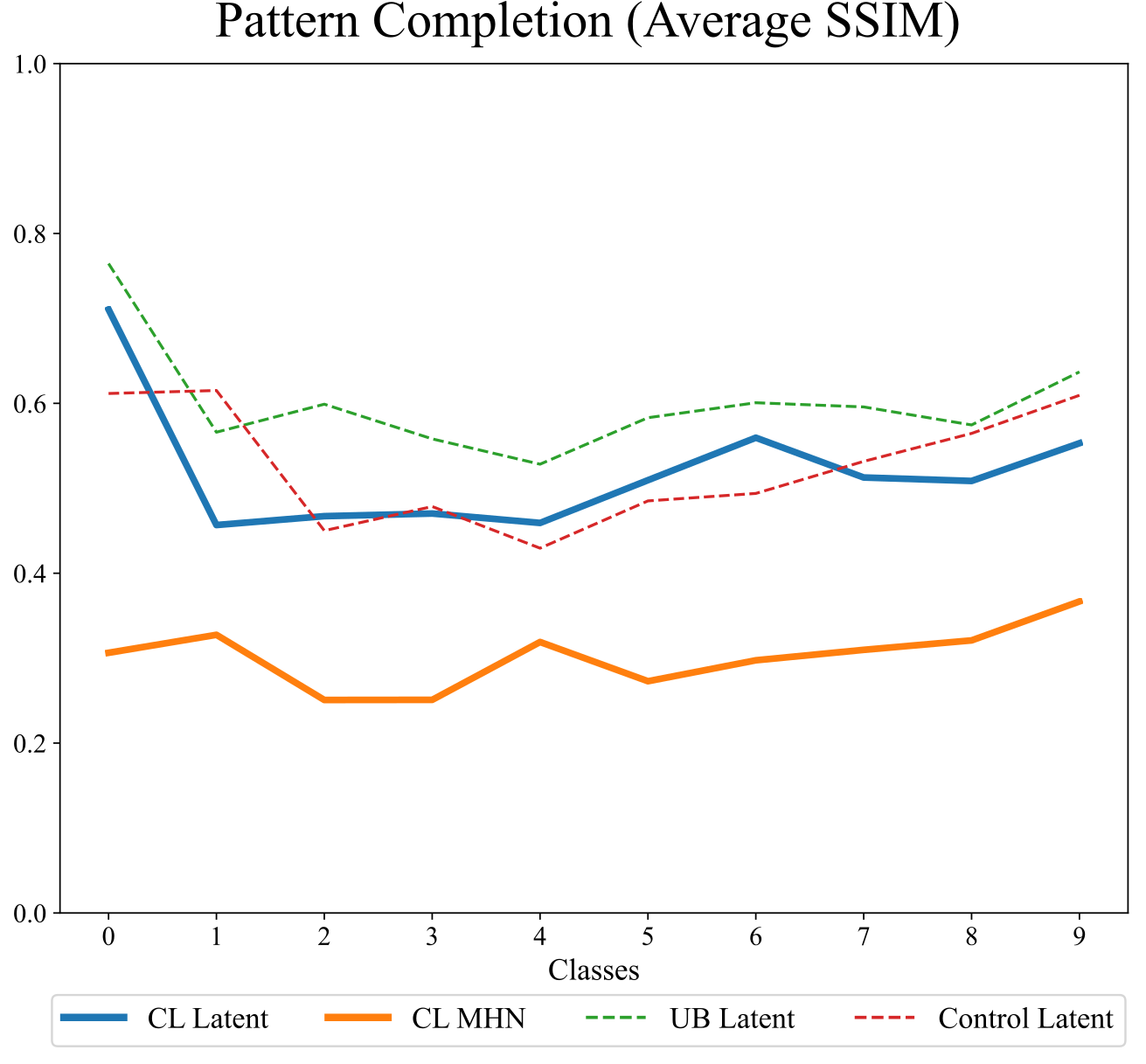

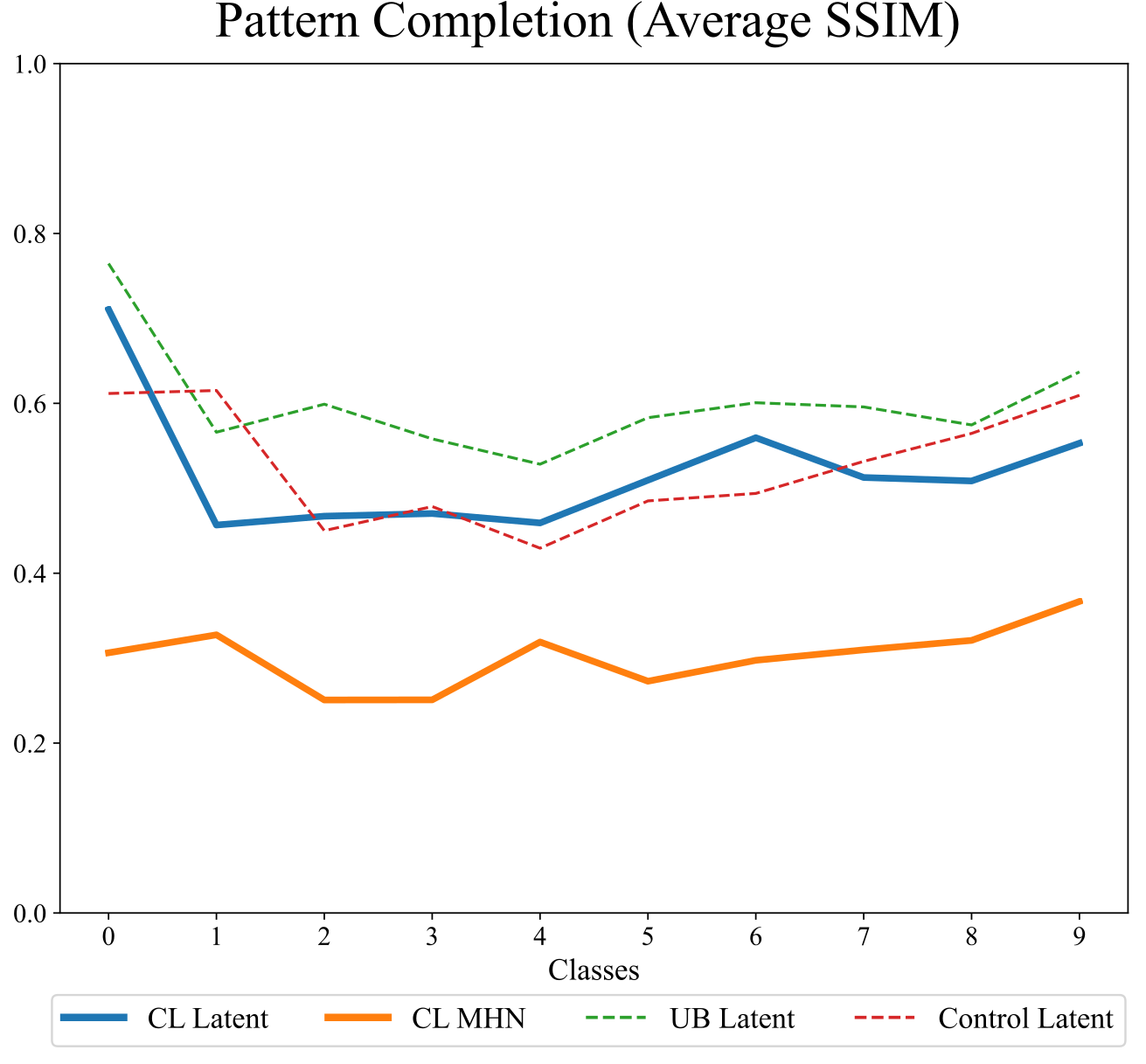

Pattern completion assessments through SSIM scores indicate effective reconstruction capabilities of the VAE layer, supporting its completion function.

Figure 4: SSIM scores show the superior reconstruction quality of the VAE latent layer, consistent with its role in pattern completion.
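For readers unfamiliar with SSIM, the sketch below computes a simplified, single-window version of the index over whole flattened images, using the usual stabilizing constants. Standard SSIM implementations average the index over local sliding windows, so this global variant is an assumption made for brevity and will not reproduce the paper's scores.

```python
def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM between two equally sized, flattened images."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Conventional stabilizing constants from the SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

# Toy 2x2 images flattened to lists, pixel values in [0, 1] (hypothetical data).
original = [0.0, 0.5, 1.0, 0.5]
inverted = [1.0, 0.5, 0.0, 0.5]
same_score = global_ssim(original, original)
diff_score = global_ssim(original, inverted)
```

A score near 1 means the reconstruction matches the original in luminance, contrast, and structure, which is why higher SSIM is read as better pattern completion.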

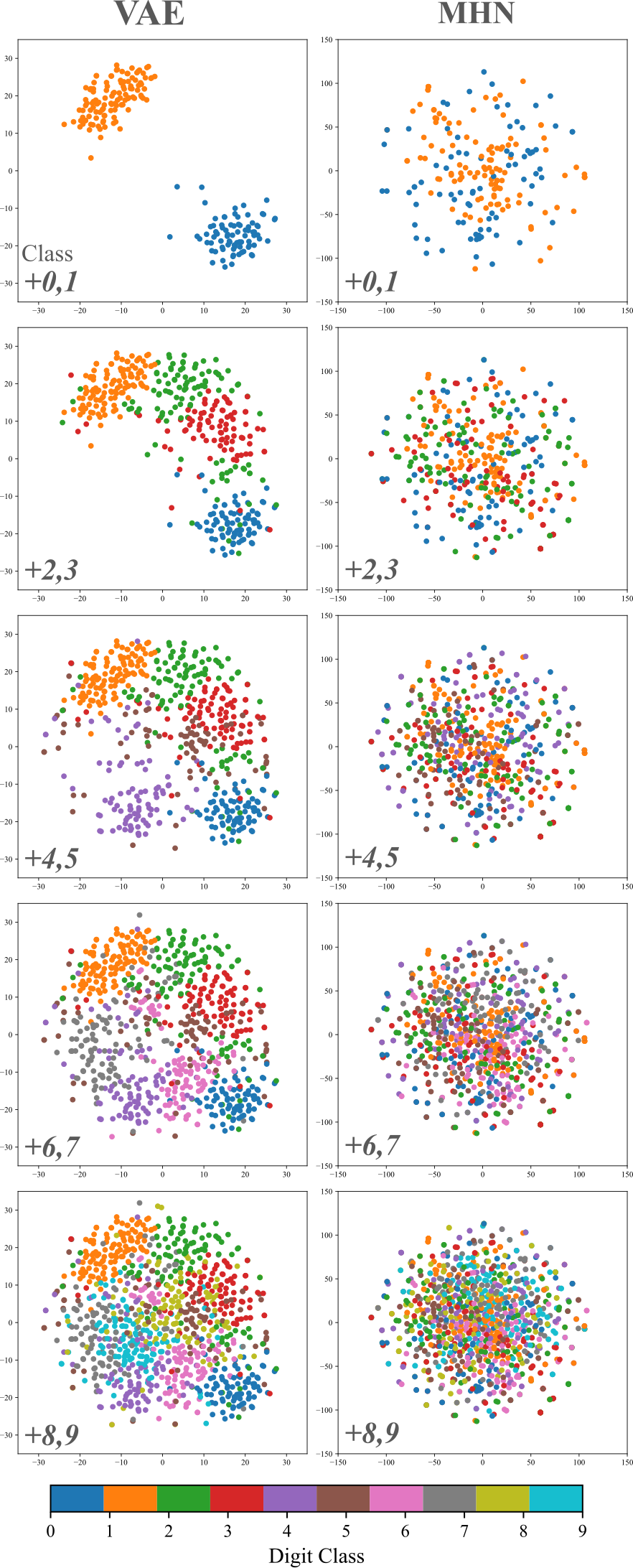

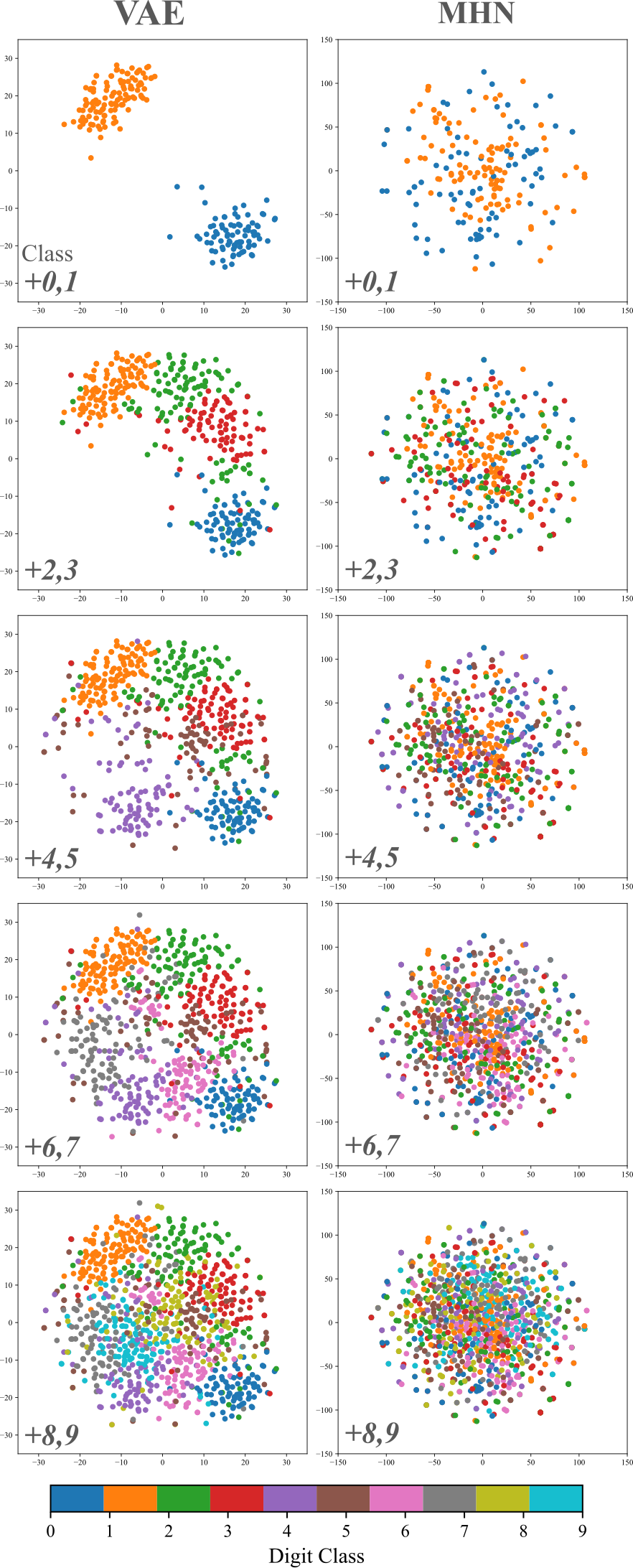

t-SNE projections further illustrate the complementary roles within the model: the VAE consolidates representations at the class level, while the MHN keeps individual samples separated.

Discussion

The VAE+MHN model underscores the importance of preserving neural plausibility while achieving competitive CL performance. Although it simplifies intricate biological processes, the model paves the way for integrating cognitive neuroscience insights into scalable ML architectures. Future research may explore its applicability to higher-order cognitive functions and reasoning, extending beyond simple memory tasks.

Figure 5: t-SNE visualization showing distinct patterns facilitating separation in MHN and completion in VAE.

Conclusion

This paper advances the effort to bridge CLS theory and artificial continual learning, presenting a neurally plausible template for addressing catastrophic forgetting. By demonstrating pattern separation and completion within a unified architecture, the VAE+MHN model contributes to the development of robust models in both theoretical and practical domains of artificial intelligence.