- The paper presents WORKBank, a survey-based auditing framework that evaluates AI agent automation and augmentation across 844 detailed occupational tasks.

- It reveals significant mismatches between worker desires and expert capability assessments, emphasizing the need for targeted R&D in AI development.

- The study suggests a possible shift in core skills from information processing to interpersonal competence, underscoring the importance of workforce retraining.

Auditing Automation and Augmentation Potential of AI Agents

This paper (2506.06576) introduces a survey-based auditing framework, WORKBank, to evaluate the potential of AI agents to automate or augment tasks across the U.S. workforce. The framework captures workers' desires for automation and AI experts' assessments of current capability, and it incorporates the Human Agency Scale (HAS) to quantify the degree of human involvement in each task. The paper's core contribution is its analysis of the desire-capability landscape, which reveals mismatches and opportunities for AI agent development, along with evidence of a potential shift in core human skills as AI is integrated into work.

Framework and Methodology

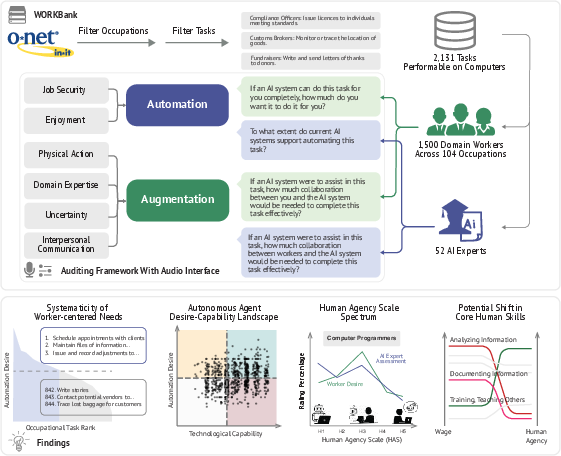

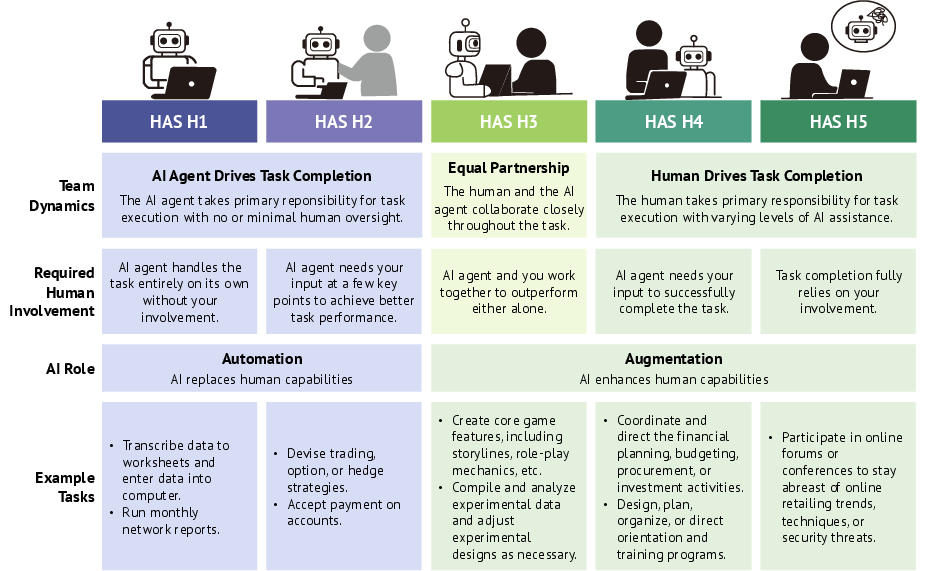

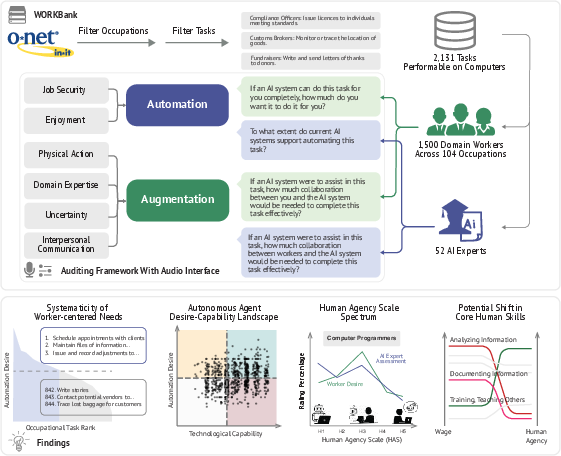

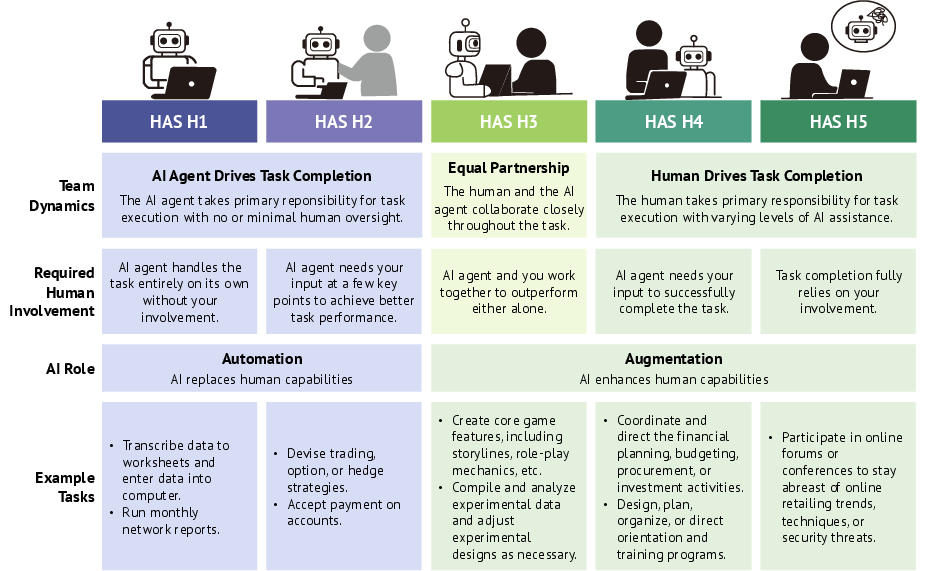

The auditing framework (Figure 1) centers on complex, multi-step tasks sourced from the O*NET database and treats automation and augmentation as a spectrum rather than a binary choice. The HAS (Figure 2) is a five-level scale ranging from full automation (H1) to essential human involvement (H5); it provides a human-centered lens for assessing task properties and guiding agent development. Using an audio-enhanced survey system, the framework collects workers' ratings of their automation desire and their desired HAS level for each task. AI experts then assess automation capability and the feasible HAS level for the same tasks. The WORKBank database, built on this framework, contains responses from 1,500 workers and 52 AI experts covering 844 occupational tasks.

Figure 1: Overview of the auditing framework and key insights.

Figure 2: Levels of Human Agency Scale (HAS).
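To make the HAS comparison concrete, here is a minimal Python sketch of how a single task's audit record might be modeled. The `HAS` enum mirrors the five levels described above; `TaskAudit`, its field names, the `has_gap` helper, and the example task are hypothetical illustrations, not WORKBank's actual schema. Averaging ordinal HAS levels as if they were numbers is a simplifying assumption.

```python
from dataclasses import dataclass
from enum import IntEnum
from statistics import mean


class HAS(IntEnum):
    """Human Agency Scale levels, from full automation (H1) to essential human involvement (H5)."""
    H1 = 1
    H2 = 2
    H3 = 3
    H4 = 4
    H5 = 5


@dataclass
class TaskAudit:
    """Hypothetical per-task record; field names are illustrative, not WORKBank's schema."""
    task: str
    worker_has: list[HAS]  # desired HAS levels reported by workers
    expert_has: list[HAS]  # feasible HAS levels assessed by AI experts

    def has_gap(self) -> float:
        """Mean worker HAS minus mean expert HAS (treats ordinal levels as numbers).

        A positive value means workers want more human agency than experts judge necessary.
        """
        worker_mean = mean(int(level) for level in self.worker_has)
        expert_mean = mean(int(level) for level in self.expert_has)
        return worker_mean - expert_mean


# Hypothetical task and ratings, invented for illustration only.
audit = TaskAudit("Schedule client meetings", [HAS.H3, HAS.H4], [HAS.H2, HAS.H2])
print(audit.has_gap())  # 1.5
```

A positive gap flags a task where workers prefer more agency than experts consider necessary; a real audit would also need to handle the ordinal nature of the scale more carefully than a simple mean does.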

Key Findings and Analysis

The paper reveals several key findings:

- Workers desire automation for low-value and repetitive tasks. For 46.1% of tasks, workers express positive attitudes toward AI agent automation, motivated primarily by freeing up time for high-value work (Figure 3).

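- The desire-capability landscape reveals critical mismatches. Crossing worker desire with expert-assessed capability divides tasks into four zones: the Automation "Green Light" Zone (high desire, high capability), the Automation "Red Light" Zone (high capability, low desire), the R&D Opportunity Zone (high desire, low capability), and the Low Priority Zone (low desire, low capability). Current investments are disproportionately concentrated in the Low Priority and Automation "Red Light" Zones (Figure 4).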

- The HAS provides a shared language to audit AI use at work. Workers generally prefer higher levels of human agency than experts deem necessary (Figure 5).

- Key human skills may be shifting from information processing to interpersonal competence (Figure 6).
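The four desire-capability zones can be sketched as a simple quadrant classification. This minimal Python example assumes each task has a mean worker-desire rating and a mean expert-capability rating on a 1-5 scale, split at a hypothetical cutoff at the scale midpoint; the paper's exact split may differ, and the `classify` function, task names, and ratings are invented for illustration.

```python
from enum import Enum


class Zone(Enum):
    GREEN_LIGHT = "Automation 'Green Light' Zone"  # high desire, high capability
    RED_LIGHT = "Automation 'Red Light' Zone"      # low desire, high capability
    RD_OPPORTUNITY = "R&D Opportunity Zone"        # high desire, low capability
    LOW_PRIORITY = "Low Priority Zone"             # low desire, low capability


def classify(desire: float, capability: float, threshold: float = 3.0) -> Zone:
    """Place a task in a desire-capability quadrant.

    Assumes mean ratings on a 1-5 scale and a hypothetical cutoff
    (the scale midpoint by default) separating "high" from "low".
    """
    high_desire = desire >= threshold
    high_capability = capability >= threshold
    if high_desire and high_capability:
        return Zone.GREEN_LIGHT
    if high_capability:
        return Zone.RED_LIGHT
    if high_desire:
        return Zone.RD_OPPORTUNITY
    return Zone.LOW_PRIORITY


# Hypothetical ratings: (task, mean worker desire, mean expert capability).
tasks = [
    ("Enter invoice data", 4.2, 4.5),
    ("Negotiate contracts", 1.8, 3.6),
    ("Reconcile complex budgets", 3.9, 2.4),
    ("Mentor new hires", 1.5, 2.0),
]
for name, desire, capability in tasks:
    print(f"{name}: {classify(desire, capability).value}")
```

Keeping the threshold as a parameter makes it easy to check how sensitive the zone assignments are to where "high" and "low" are drawn.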

Implications and Future Directions

These findings have significant implications for AI agent research, development, and deployment strategies. The identified mismatches between worker desires and technological capabilities highlight the need for more targeted R&D efforts. The HAS framework offers a valuable tool for understanding and promoting human-agent collaboration, while the observed shift in core human skills underscores the importance of workforce development and retraining initiatives. Future research should focus on longitudinal tracking of task-level changes and further exploration of worker reskilling strategies.

Critical Assessment

The paper presents a comprehensive and well-executed study of the potential impact of AI agents on the U.S. workforce. The auditing framework and WORKBank database represent valuable resources for researchers and practitioners in the field. The analysis of the desire-capability landscape and the HAS framework provide novel insights into the complex interplay between automation and augmentation.

One potential limitation is the reliance on existing occupational tasks defined by the O*NET database, which may not fully capture new tasks that emerge as AI agents become more integrated into the workplace. Additionally, although the paper includes a diverse sample of workers, participants' responses may be influenced by limited awareness of AI capabilities and constraints, as well as by concerns about job security.

Conclusion

This paper makes a significant contribution to the understanding of the future of work with AI agents. By integrating worker desires and expert assessments, the paper provides actionable insights for aligning AI development with human needs and preparing workers for evolving workplace dynamics. The WORKBank database and auditing framework offer a valuable foundation for future research and policy initiatives in this rapidly evolving field.