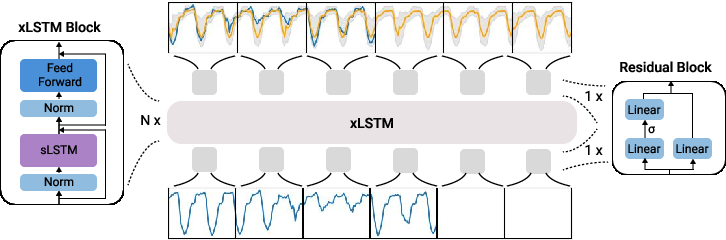

- The paper introduces TiRex, a novel zero-shot forecasting model that leverages xLSTM blocks for effective state-tracking over long horizons.

- It employs a unique Contiguous Patch Masking strategy to mitigate error propagation in multi-step predictions.

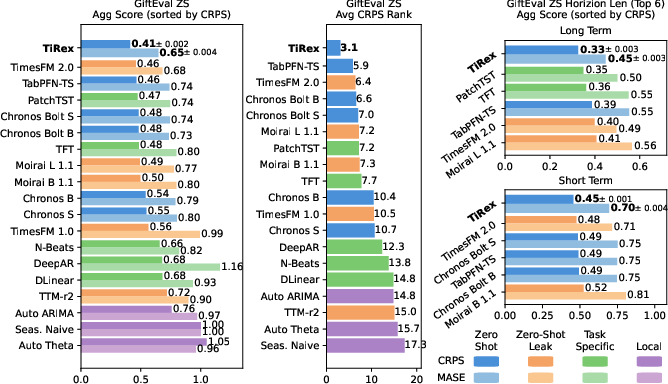

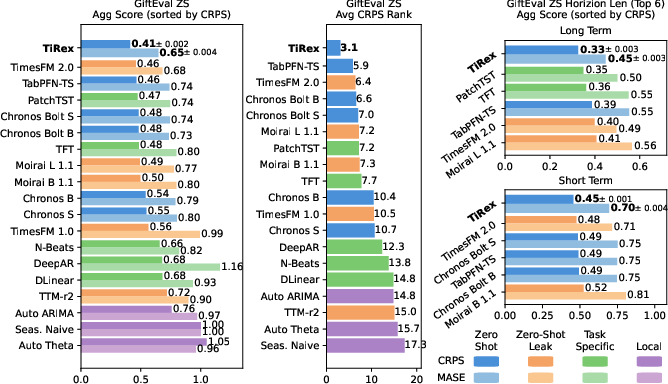

- TiRex demonstrates superior performance on benchmarks like GiftEval, offering robust predictions across diverse real-world scenarios.

TiRex: Zero-Shot Forecasting Across Long and Short Horizons with Enhanced In-Context Learning

This essay discusses the implementation and practical application of TiRex, a novel time series forecasting model designed for zero-shot prediction using enhanced in-context learning capabilities.

Introduction to TiRex

TiRex is a forecasting model built on the xLSTM architecture that makes zero-shot predictions on time series data. Transformers dominate language processing, but they lack the state-tracking ability that long-horizon forecasting benefits from; LSTMs track state well but have traditionally shown weaker in-context learning. TiRex aims to bridge this gap by combining LSTM-style state tracking with strong in-context learning.

Key Components of TiRex

Implementation Details

Implementing TiRex requires understanding both its architectural design and its training strategy. xLSTM blocks form the backbone and provide efficient state tracking. Contiguous Patch Masking (CPM) masks contiguous runs of patches during training, which keeps predictions coherent over many steps and limits error propagation over long horizons.
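The masking idea can be sketched in a few lines. This is a minimal illustration, not the TiRex implementation: the function names (`patchify`, `contiguous_patch_mask`, `apply_mask`), the single-run sampling rule, and the NaN fill value are all assumptions made for the example.

```python
import random


def patchify(series, patch_len):
    """Split a series into non-overlapping patches, dropping any trailing remainder."""
    n = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n)]


def contiguous_patch_mask(num_patches, max_run, rng):
    """Return a boolean mask containing one contiguous run of masked patches.

    True marks a patch that is hidden from the model. Sampling a single run
    of random length is an assumption made for this sketch.
    """
    run = rng.randint(1, min(max_run, num_patches))  # run length, inclusive bounds
    start = rng.randint(0, num_patches - run)        # first masked patch index
    return [start <= i < start + run for i in range(num_patches)]


def apply_mask(patches, mask, fill=float("nan")):
    """Replace every value in a masked patch with a placeholder (hypothetical choice: NaN)."""
    return [[fill] * len(p) if m else list(p) for p, m in zip(patches, mask)]


if __name__ == "__main__":
    rng = random.Random(0)
    patches = patchify(list(range(32)), patch_len=4)
    mask = contiguous_patch_mask(len(patches), max_run=3, rng=rng)
    print(mask)
    print(apply_mask(patches, mask))
```

Because whole runs of adjacent patches are hidden at once, a model trained this way has to predict several consecutive patches from context alone, which is the multi-step setting it faces when forecasting.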

Training and Evaluation

TiRex is trained on a diverse corpus of synthetic and real data from various domains, with augmentations that increase data variability. Training uses the AdamW optimizer together with a learning rate scheduler.
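The text does not say which learning rate schedule is used. As an illustration only, the sketch below shows one common choice, linear warmup followed by cosine decay; the function name `lr_at_step` and every numeric value are assumptions, not settings from TiRex.

```python
import math


def lr_at_step(step, total_steps, peak_lr, warmup_steps, min_lr=0.0):
    """Learning rate for a given step: linear warmup, then cosine decay.

    This schedule is an assumed example, not the one used to train TiRex.
    """
    if step < warmup_steps:
        # Ramp linearly up to peak_lr over the warmup phase.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for step in (0, 99, 100, 550, 1000):
        print(step, lr_at_step(step, total_steps=1000, peak_lr=1e-3, warmup_steps=100))
```

In a training loop, the value returned for each step would be written into the optimizer's learning rate before the update, for example into an AdamW instance.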

TiRex has demonstrated state-of-the-art performance on benchmarks like GiftEval and Chronos-ZS. Its zero-shot capabilities are especially notable in datasets where data overlap between training and benchmarking is minimized.

Figure 2: Results of the GiftEval-ZS benchmark illustrate TiRex's superior aggregated scores compared to other zero-shot models.

Practical Considerations

Scalability and Efficiency

TiRex's architecture is designed to scale, keeping memory and compute use efficient without sacrificing prediction accuracy. It can be deployed in a range of environments, from local machines to cloud infrastructure.

Application Domains

TiRex is applicable across multiple domains, including energy, healthcare, and retail, where accurate long-term predictions are essential. Its ability to provide coherent uncertainty estimates makes it valuable for decision-making processes that rely on forecasting under uncertainty.
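Uncertainty estimates of this kind are often expressed as quantile forecasts and scored with the pinball (quantile) loss. The sketch below assumes that representation for illustration; the function names and the example numbers are hypothetical and are not taken from TiRex's outputs.

```python
def pinball_loss(y_true, y_pred, q):
    """Pinball loss for a single quantile forecast at level q (0 < q < 1)."""
    diff = y_true - y_pred
    # Under-prediction is weighted by q, over-prediction by (1 - q).
    return max(q * diff, (q - 1.0) * diff)


def mean_pinball_loss(y_true, quantile_preds):
    """Average pinball loss over a dict mapping quantile level -> forecast value."""
    losses = [pinball_loss(y_true, pred, q) for q, pred in quantile_preds.items()]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Hypothetical quantile forecasts for one future time step.
    forecast = {0.1: 80.0, 0.5: 100.0, 0.9: 125.0}
    print(mean_pinball_loss(110.0, forecast))
```

A decision-maker can read the 0.1 and 0.9 quantiles as a rough 80% interval, for example when sizing energy reserves or retail stock against the upper quantile rather than the median.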

Trade-offs and Limitations

While TiRex excels at generalization and zero-shot learning, its finite pre-training corpus may limit performance on highly domain-specific tasks. Future improvements could focus on expanding the diversity of the training data and adding support for multivariate series.

Conclusion

TiRex represents a significant advance in zero-shot time series forecasting by addressing the deficiencies of transformer models in this domain. By combining the xLSTM architecture with training strategies such as CPM, TiRex achieves state-of-the-art results on benchmarks such as GiftEval and Chronos-ZS.

Future iterations of TiRex could handle multivariate time series and adopt more sophisticated augmentation strategies to further improve generalization across a broader range of application areas.