- The paper introduces TRA-PAN, a novel two-stage framework that effectively fuses LRMS and PAN images for high-resolution multispectral output.

- It leverages degradation-aware modeling with a warm-up and random alternation procedure to balance full and reduced resolution training.

- The framework outperforms state-of-the-art methods on datasets like WV3 and GF2, improving both spectral fidelity and spatial alignment.

Two-Stage Random Alternation Framework for One-Shot Pansharpening

Introduction

This paper introduces the Two-Stage Random Alternation Framework (TRA-PAN), designed to tackle the challenges of pansharpening, especially in scenarios where real high-resolution images are difficult to acquire. Pansharpening combines a low-resolution multispectral (LRMS) image and a high-resolution panchromatic (PAN) image to produce a high-resolution multispectral (HRMS) image. Traditional methods have been successful, but they often suffer from spectral distortion and spatial misalignment. Recent deep learning (DL) methods mitigate these problems but require extensive training datasets, which are not always available. TRA-PAN addresses both issues by combining reduced- and full-resolution training strategies.

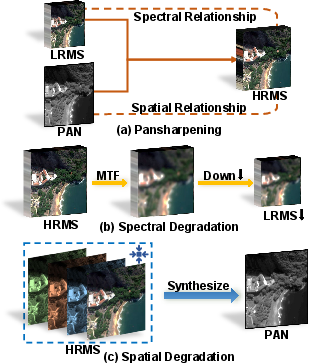

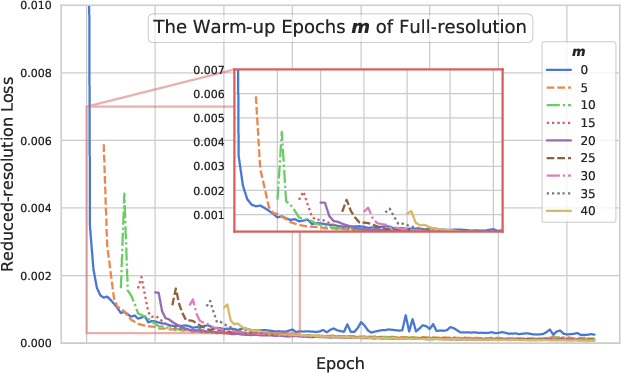

Figure 1: Overview of full-resolution training for pansharpening. (a) The pansharpening process highlights spectral and spatial relationships, linking LRMS and PAN images with the HRMS image. (b) The spectral degradation from HRMS to LRMS, including MTF and downsampling. (c) By synthesizing the channels in HRMS, such as the RGB channels shown in the figure, the spatial relationship between HRMS and PAN is learned.
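The two degradations in panels (b) and (c) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: a Gaussian kernel stands in for the sensor's MTF-matched filter, the resolution ratio of 4 is assumed, and the function names (`degrade_to_lrms`, `synthesize_pan`) and the fixed channel weights are hypothetical.

```python
import numpy as np

def gaussian_kernel(size: int = 9, sigma: float = 2.0) -> np.ndarray:
    """1-D Gaussian kernel normalised to sum to 1 (assumed stand-in for an MTF filter)."""
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(band: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Separable blur of one 2-D band; reflect padding keeps the output shape."""
    pad = len(kernel) // 2
    padded = np.pad(band, pad, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def degrade_to_lrms(hrms: np.ndarray, ratio: int = 4) -> np.ndarray:
    """HRMS (C, H, W) -> LRMS (C, H/ratio, W/ratio): blur each band, then decimate."""
    kernel = gaussian_kernel()
    blurred = np.stack([blur(band, kernel) for band in hrms])
    return blurred[:, ::ratio, ::ratio]

def synthesize_pan(hrms: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """HRMS (C, H, W) -> PAN (H, W) as a weighted sum over the channel axis."""
    return np.tensordot(weights, hrms, axes=1)

# Example with a random 4-band image (hypothetical sizes and weights).
hrms = np.random.default_rng(0).random((4, 16, 16))
lrms = degrade_to_lrms(hrms)                  # shape (4, 4, 4)
pan = synthesize_pan(hrms, np.full(4, 0.25))  # shape (16, 16)
```

In the framework described here the HRMS-to-PAN relationship is learned rather than fixed; the weighted sum only shows the shape of that mapping.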

Methodology

Two-Stage Framework

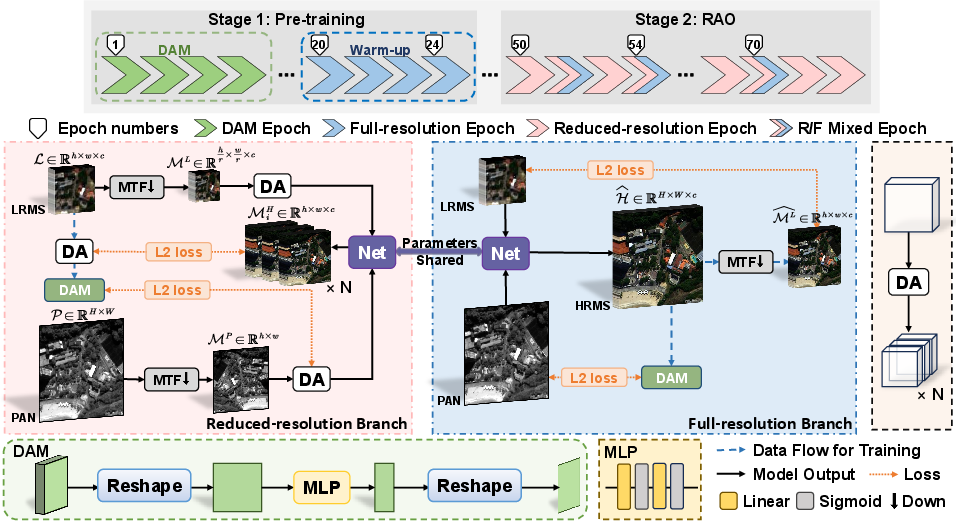

The TRA-PAN framework consists of two distinct stages:

- Pre-training Stage: This stage introduces two key procedures:

  - Degradation-Aware Modeling (DAM): Captures complex spatial relationships by modeling the degradation from HRMS to PAN and LRMS, preparing the fusion network.

  - Warm-up Procedure: Trains the fusion network using only full-resolution data to establish a good initial state, avoiding the early limitations of reduced-resolution training.

- Random Alternation Optimization (RAO) Stage: Each training step uses either the reduced- or the full-resolution branch, chosen at random with a set probability. This strategy acts as a regularizer, balancing the strong supervision from reduced-resolution data against the weaker supervision from full-resolution images, which supports robust learning and helps avoid overfitting.
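The warm-up and random alternation logic can be sketched as a simple branch schedule. This is an illustrative sketch only: the function name `branch_schedule`, the parameter names, and the example values (20 warm-up steps, probability 0.5) are assumptions, since the text does not state the actual settings, and the DAM procedure that precedes warm-up is omitted.

```python
import random

def branch_schedule(total_steps: int, warmup_steps: int,
                    p_full: float, seed: int = 0) -> list[str]:
    """Return the training branch ("full" or "reduced") used at each step.

    Steps before `warmup_steps` always use the full-resolution branch.
    After that, each step picks the full-resolution branch with
    probability `p_full` and the reduced-resolution branch otherwise.
    """
    rng = random.Random(seed)
    schedule = []
    for step in range(total_steps):
        if step < warmup_steps:
            schedule.append("full")      # warm-up: full resolution only
        elif rng.random() < p_full:
            schedule.append("full")      # RAO: weakly supervised branch
        else:
            schedule.append("reduced")   # RAO: strongly supervised branch
    return schedule

# Hypothetical settings: 100 steps, 20 of them warm-up, 50/50 alternation.
schedule = branch_schedule(total_steps=100, warmup_steps=20, p_full=0.5)
```

A training loop would read one entry per step and compute the corresponding loss, so the choice of branch stays decoupled from the fusion network itself.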

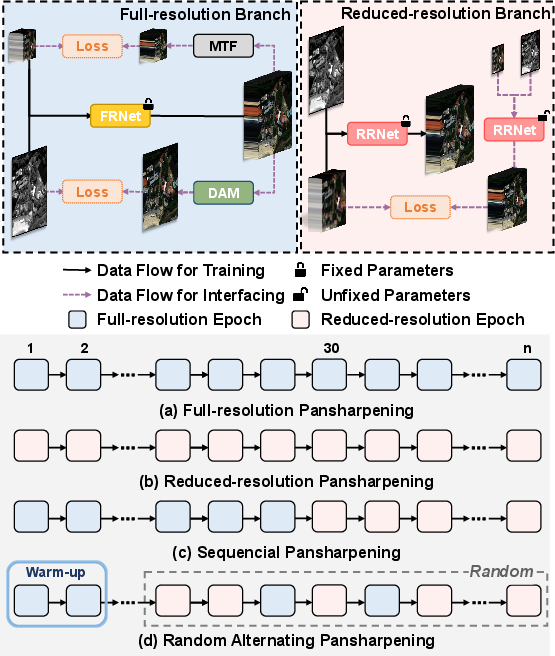

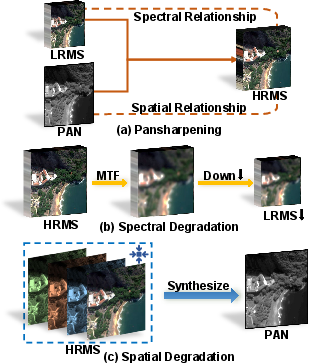

Figure 2: Pansharpening paradigms: (a) full-resolution training, (b) reduced-resolution training, (c) serial stage-wise training, (d) TRA-PAN with warm-up and random alternating training.

Implementation Details

The methodology employs various techniques to achieve efficient training and high-quality pansharpening:

- A Multi-Layer Perceptron (MLP) network is used during the DAM procedure to predict spatial degradations.

- The augmented LRMS and PAN images facilitate robust training by mitigating overfitting risks.

- Noise-robust optimization is integral during the RAO stage, with dynamic training strategies ensuring effective cross-resolution learning.
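To make the role of the MLP in the DAM procedure more concrete, the sketch below shows one plausible form: a small per-pixel network that maps the spectral bands of an HRMS estimate to a single PAN intensity. The text does not specify the architecture, so the layer sizes, the ReLU activation, and the names `init_mlp` and `mlp_pan` are all assumptions, and only the forward pass is shown.

```python
import numpy as np

def init_mlp(in_bands: int, hidden: int = 16, seed: int = 0) -> dict:
    """Randomly initialise a two-layer MLP (hypothetical sizes)."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.normal(0.0, 0.1, (in_bands, hidden)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0.0, 0.1, (hidden, 1)),
        "b2": np.zeros(1),
    }

def mlp_pan(hrms: np.ndarray, params: dict) -> np.ndarray:
    """Apply the MLP independently to every pixel: (C, H, W) -> (H, W)."""
    c, h, w = hrms.shape
    pixels = hrms.reshape(c, -1).T                      # (H*W, C)
    hidden = np.maximum(pixels @ params["w1"] + params["b1"], 0.0)  # ReLU
    pan = hidden @ params["w2"] + params["b2"]          # (H*W, 1)
    return pan.reshape(h, w)

# Example on a random 8-band image (hypothetical size).
params = init_mlp(in_bands=8)
pan_hat = mlp_pan(np.random.default_rng(1).random((8, 32, 32)), params)
```

Training such a network would compare `pan_hat` with the observed PAN image; the loss and optimizer are left out because the text does not describe them.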

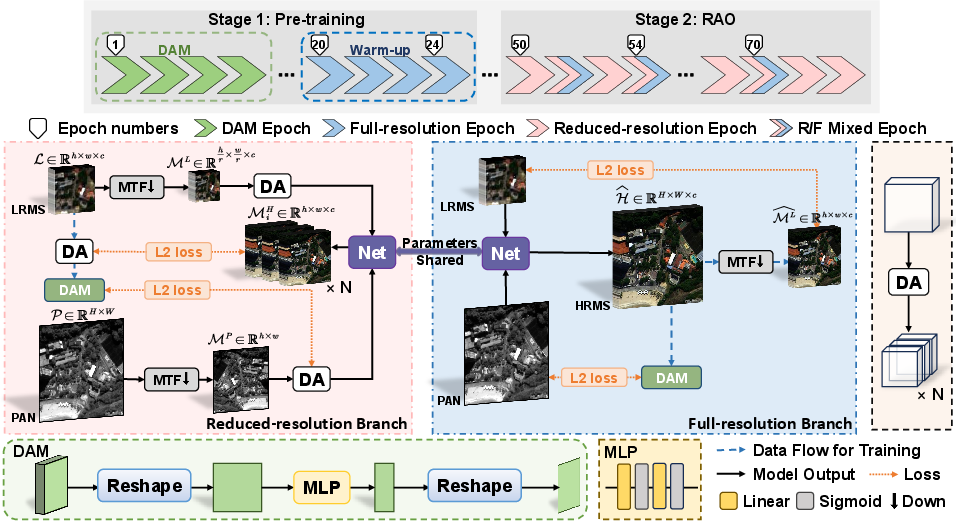

Figure 3: Flowchart of the TRA-PAN framework. The progress bar at the top illustrates the entire training process. First, the DAM procedure of the pre-training stage is run, and its network parameters θ_D are carried forward to the RAO stage. Once the DAM procedure is complete, the warm-up procedure begins, using only the full-resolution branch. Finally, the process enters the RAO stage, where random alternating training takes place. Note that the whole procedure takes only a single pair of LRMS and PAN images as input, yet it can yield promising outcomes, especially on full-resolution data.

Results and Discussion

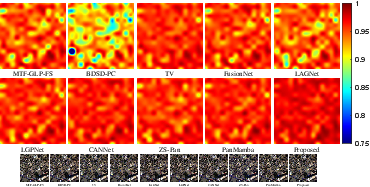

The TRA-PAN framework demonstrates superior performance over state-of-the-art methods across multiple datasets, including WorldView-3 (WV3), QuickBird (QB), and GaoFen-2 (GF2). The method achieved improvements in HQNR (a composite metric of spectral and spatial fidelity) over existing methods, highlighting its efficacy.
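For reference, HQNR is commonly defined in the pansharpening literature as the product of a spectral term and a spatial term. The text here does not restate the formula, so the form below is the standard definition rather than a quotation from the paper; the exponents are usually set to 1.

$$
\mathrm{HQNR} = (1 - D_\lambda)^{\alpha}\,(1 - D_s)^{\beta}
$$

Here $D_\lambda$ measures spectral distortion and $D_s$ measures spatial distortion, both in $[0, 1]$, so HQNR reaches its ideal value of 1 only when both distortions vanish.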

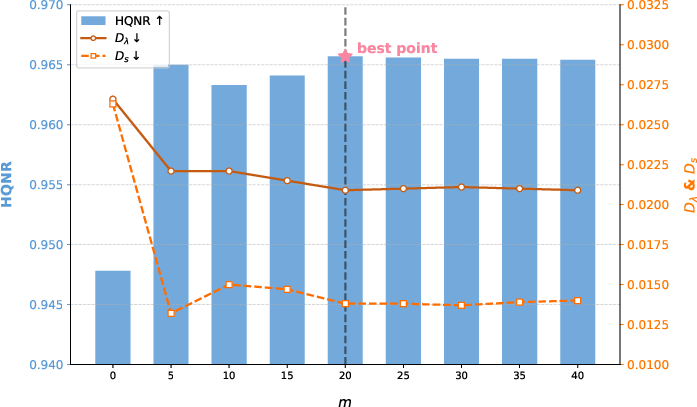

Experiments indicate that the framework's success hinges on:

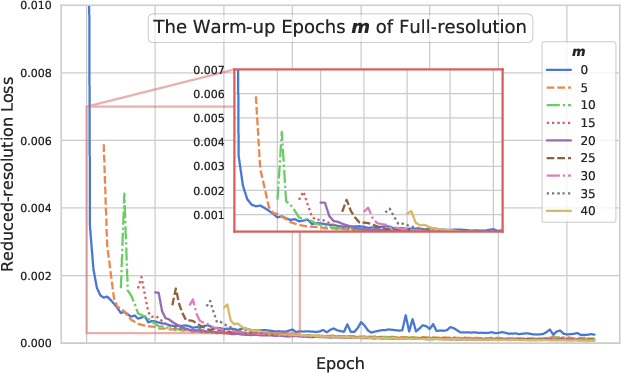

- The effectiveness of the warm-up procedure, which accelerates network convergence.

- The balance between reduced- and full-resolution training, effectively handled by the random alternation strategy.

These components collectively enable the generation of high-quality HRMS images from minimal training data, showcasing the framework's practical applicability in real-world, data-scarce scenarios.

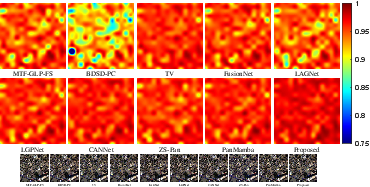

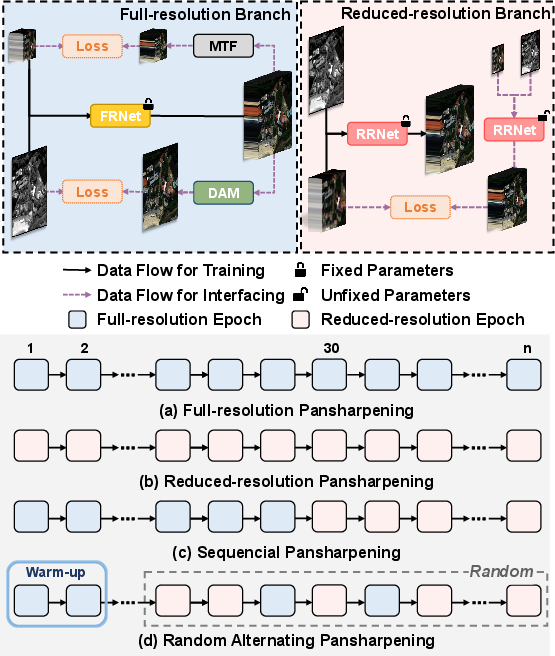

Figure 4: The HQNR maps (top) and visual results (bottom) of all compared approaches on the WV3 full-resolution dataset.

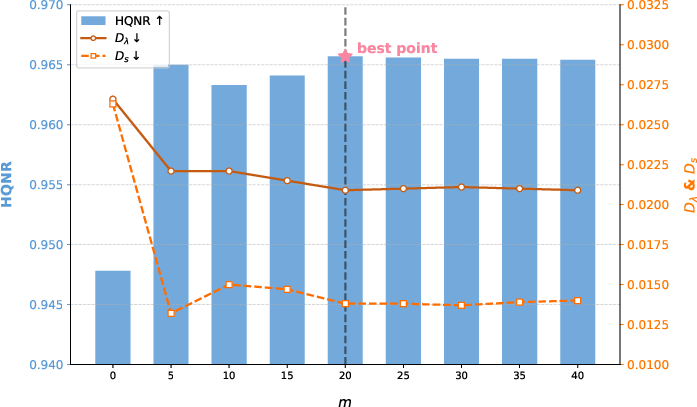

Figure 5: Effect of warm-up epochs on HQNR during full-resolution training.

Figure 6: Warm-up epochs vs. the loss of fusion network during reduced-resolution training.

Conclusion

TRA-PAN offers a viable solution to pansharpening by combining reduced- and full-resolution training strategies, which keeps it robust when little data is available. The framework outperforms existing methods by improving the spectral and spatial coherence of the fusion results. While alternating between resolutions slightly increases training time, the gains in performance and in adaptability to one-shot settings are significant. The approach sets the stage for further exploration of flexible training schemes that adapt to varying data availability and resolution conditions, potentially leading to advances in zero-shot and unsupervised learning paradigms in remote sensing and beyond.