- The paper proposes MIA-Mind, a novel attention mechanism that jointly models channel and spatial dependencies to enhance feature representation.

- It employs a cross-attentive fusion strategy using global feature extraction, interactive attention generation, and dynamic reweighting to optimize performance across tasks.

- Experimental results demonstrate improved accuracy and efficiency in classification, segmentation, and anomaly detection, underscoring its practical impact.

MIA-Mind: A Multidimensional Interactive Attention Mechanism Based on MindSpore

The paper "MIA-Mind: A Multidimensional Interactive Attention Mechanism Based on MindSpore" introduces an attention mechanism that addresses a limitation of earlier designs, which model channel attention and spatial attention independently. Built on the MindSpore framework, MIA-Mind uses a cross-attentive fusion strategy to model spatial and channel dependencies jointly and thereby strengthen feature representation.

Introduction

Attention mechanisms have become integral to deep learning models for their ability to prioritize critical features. Traditional attention approaches typically model either spatial saliency or channel importance separately, which can constrain feature expressiveness due to overlooked interdependencies. MIA-Mind, a lightweight and modular attention mechanism, refines feature recalibration by modeling spatial and channel features jointly. Implemented within the MindSpore ecosystem, MIA-Mind aims to provide significant performance improvements with minimal additional computational burden.


Methodology

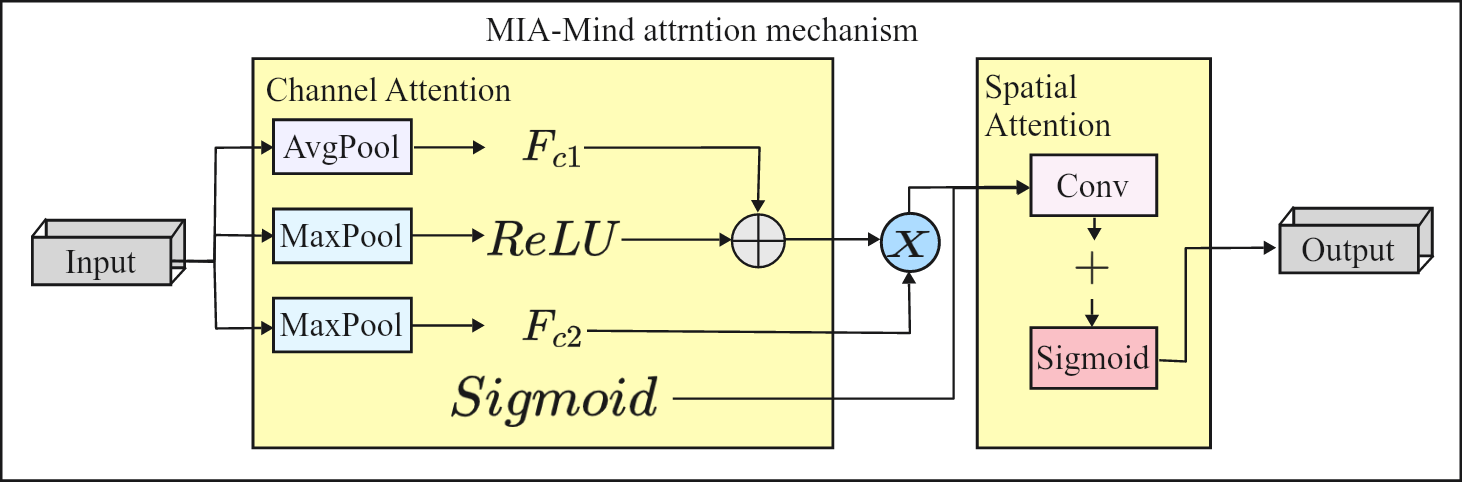

MIA-Mind's architecture comprises three critical modules:

- Global Feature Extraction: This module generates initial descriptors reflecting global channel and spatial context. Channel descriptors are obtained through global average pooling (GAP), while spatial descriptors result from channel-wise averaging.

- Interactive Attention Generation: This module computes joint attention maps through cross-multiplicative operations, allowing both channel-wise relevance and spatial focus to inform one another, optimizing context-aware feature recalibration.

- Dynamic Reweighting: The computed attention maps recalibrate input features via element-wise multiplication, ensuring semantic features are emphasized in critical spatial positions.
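The three modules above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function name `mia_attention_sketch`, the sigmoid gates, and the absence of any learned layers are all assumptions made for brevity, and the summary does not specify how the paper parameterizes the attention branches. The sketch only shows how a per-channel descriptor and a per-position descriptor can be combined by broadcasting multiplication into one joint attention map.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, used here as an assumed gating nonlinearity."""
    return 1.0 / (1.0 + np.exp(-z))


def mia_attention_sketch(x):
    """Hypothetical joint channel/spatial attention on a batch of shape (N, C, H, W)."""
    # 1. Global feature extraction.
    # Channel descriptor: global average pooling over the spatial axes.
    channel_desc = x.mean(axis=(2, 3), keepdims=True)  # (N, C, 1, 1)
    # Spatial descriptor: average over the channel axis.
    spatial_desc = x.mean(axis=1, keepdims=True)       # (N, 1, H, W)

    # 2. Interactive attention generation.
    # Assumption: each descriptor is squashed to (0, 1) by a sigmoid, then the
    # two gates are multiplied; broadcasting yields a full (N, C, H, W) map.
    channel_gate = sigmoid(channel_desc)
    spatial_gate = sigmoid(spatial_desc)
    joint_attention = channel_gate * spatial_gate

    # 3. Dynamic reweighting: element-wise recalibration of the input.
    return x * joint_attention, joint_attention


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 8, 8))
y, attn = mia_attention_sketch(x)
print(y.shape, attn.shape)
```

Because the joint map is a product of a `(N, C, 1, 1)` gate and a `(N, 1, H, W)` gate, a position is emphasized only when both its channel and its location score highly, which is one plausible reading of "cross-multiplicative" fusion.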

MIA-Mind builds on MindSpore's modular design, including its dynamic and static graph modes and optimizations such as operator fusion. Because it is implemented as a MindSpore `nn.Cell`, it can be integrated into existing networks and trained like any other layer.

Experimental Evaluation

The experiments evaluate MIA-Mind on three datasets, each representing a different task:

- CIFAR-10 (Classification): Reached 82.9% accuracy (83.1% precision, 82.9% recall, 82.8% F1-score), which the paper presents as evidence of better feature discrimination on natural images.

- ISBI2012 (Segmentation): Reached a Dice coefficient of 87.6% with 78.7% accuracy, which the paper presents as evidence of improved boundary delineation in medical imaging.

- CIC-IDS2017 (Anomaly Detection): Reached 91.9% accuracy with 98.9% precision but only 74.5% recall (F1-score 84.9%). Assuming anomalies are the positive class, flagged samples are almost always true anomalies, while roughly a quarter of anomalies go undetected.

The results, summarized in the table below, underline MIA-Mind's consistent performance across domains without task-specific design modifications.

| Task | Dataset | Metric | Score |
| --- | --- | --- | --- |
| Image Classification | CIFAR-10 | Accuracy | 82.9% |
| | | Precision | 83.1% |
| | | Recall | 82.9% |
| | | F1-score | 82.8% |
| Medical Image Segmentation | ISBI2012 | Accuracy | 78.7% |
| | | Dice coefficient | 87.6% |
| Anomaly Detection | CIC-IDS2017 | Accuracy | 91.9% |
| | | Precision | 98.9% |
| | | Recall | 74.5% |
| | | F1-score | 84.9% |
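For readers who want to relate the reported metrics to one another, the sketch below computes an F1-score from precision and recall and a Dice coefficient from two binary masks. The helper names `f1_score` and `dice_coefficient` and the toy masks are illustrative assumptions, not taken from the paper. Plugging in the rounded CIC-IDS2017 precision and recall gives an F1 of roughly 85%, which agrees with the reported 84.9% to within input rounding, assuming a single positive class. The same check is not expected to match exactly for CIFAR-10 if its scores are averaged over classes.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def dice_coefficient(pred, target):
    """Dice = 2 * |A and B| / (|A| + |B|) for flat binary masks of 0/1 values."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Two empty masks are treated as a perfect match by convention.
    return 1.0 if total == 0 else 2 * intersection / total


# Rounded precision and recall from the CIC-IDS2017 rows of the table.
anomaly_f1 = f1_score(0.989, 0.745)
print(f"F1 from rounded precision/recall: {anomaly_f1:.4f}")

# Toy masks (hypothetical data) to illustrate the Dice computation.
pred_mask = [1, 1, 0, 0, 1]
true_mask = [1, 0, 0, 1, 1]
print(f"Dice on toy masks: {dice_coefficient(pred_mask, true_mask):.4f}")
```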

Discussion

By modeling spatial saliency and channel importance jointly, MIA-Mind captures feature dependencies that separate attention branches overlook. The MindSpore framework contributes execution efficiency through optimized graph and tensor operations, which supports deployment in resource-constrained environments. Two limitations are noted: the mechanism has not been validated on large-scale distributed systems, and its attention fusion strategy is static. Future work will involve scaling MIA-Mind to broader datasets and environments and exploring adaptive fusion techniques.

Conclusion

MIA-Mind is an efficient mechanism for integrating multidimensional attention that leverages MindSpore's optimization capabilities. Results across classification, segmentation, and anomaly detection indicate that it improves feature representation with low computational overhead. Future research will focus on adaptability and scalability to meet the demands of complex, real-world applications.