- The paper shows that Affect Control Theory can be used to turn discrepancies between an agent's identity and the impressions it perceives into synthetic emotions for artificial agents.

- The study's experiments reveal that high-frequency emotional displays notably enhance the perceived emotional agency of robots.

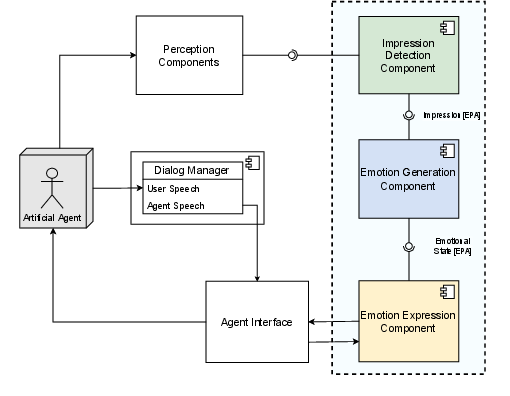

- The framework integrates impression detection, emotion generation, and emotion expression to improve dynamic human-robot interaction.

EmoACT: Embedding Emotions in Artificial Agents with Affect Control Theory

Introduction

The integration of emotions into robots and artificial agents has garnered interest due to its potential to enhance human-robot interaction (HRI). Emotions facilitate more natural and transparent interactions, akin to human-human communication. The paper "EmoACT: a Framework to Embed Emotions into Artificial Agents Based on Affect Control Theory" presents a framework leveraging Affect Control Theory (ACT) to embed synthetic emotions into humanoid robots. This essay provides a comprehensive overview of the paper’s theoretical background, proposed architecture, and experimental evaluation, focusing on its methodological rigor and empirical findings.

Affect Control Theory in HRI

ACT suggests that emotions arise from discrepancies between an individual's identity and the impression that the individual perceives others to hold during an interaction. The resulting emotions are located in a three-dimensional space known as EPA: Evaluation, Potency, and Activity. Unlike Cognitive Appraisal Theories, ACT explicitly links emotions to social interaction, which aligns well with the goals of HRI. By implementing ACT in robotic systems, the paper explores whether artificial agents can embody emotions in a way that affects human perception.
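
The idea of an identity-impression discrepancy in EPA space can be illustrated with a minimal Python sketch. The squared-distance measure follows the usual ACT notion of deflection. Everything else is an assumption made for illustration: the emotion prototypes and their EPA values are invented, and the rule `impression + weight * (impression - identity)` is a toy stand-in for the emotion equations, not the paper's method.

```python
from typing import Dict, Tuple

# An EPA profile: (Evaluation, Potency, Activity).
EPA = Tuple[float, float, float]

# Hypothetical emotion prototypes. The values are illustrative only and are
# NOT taken from the paper or from any ACT sentiment dictionary.
EMOTION_PROTOTYPES: Dict[str, EPA] = {
    "happy": (3.0, 2.0, 2.0),
    "calm": (2.0, 1.0, -2.0),
    "sad": (-2.0, -2.0, -2.0),
    "angry": (-2.0, 2.0, 2.0),
}


def deflection(identity: EPA, impression: EPA) -> float:
    """Sum of squared differences between two EPA profiles."""
    return sum((f - t) ** 2 for f, t in zip(identity, impression))


def emotion_point(identity: EPA, impression: EPA, weight: float = 1.0) -> EPA:
    """Toy rule (an assumption): shift the impression further in the
    direction in which it departs from the identity."""
    e, p, a = (t + weight * (t - f) for f, t in zip(identity, impression))
    return (e, p, a)


def nearest_emotion(point: EPA) -> str:
    """Label of the prototype closest to the given EPA point."""
    return min(
        EMOTION_PROTOTYPES,
        key=lambda name: deflection(EMOTION_PROTOTYPES[name], point),
    )


def synthetic_emotion(identity: EPA, impression: EPA) -> str:
    """Map an identity-impression pair to an emotion label."""
    return nearest_emotion(emotion_point(identity, impression))


if __name__ == "__main__":
    robot_identity: EPA = (2.0, 1.0, 1.0)  # hypothetical positive identity
    for perceived in [(2.5, 1.5, 1.5), (-1.0, 0.0, 0.0)]:
        print(
            perceived,
            deflection(robot_identity, perceived),
            synthetic_emotion(robot_identity, perceived),
        )
```

In this sketch, an impression slightly more positive than the identity lands near the "happy" prototype, while a clearly negative impression lands near "sad". A real implementation would replace both the prototypes and the toy rule with empirically estimated values.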

EmoACT Framework

The EmoACT framework is designed to be platform-independent and comprises three key modules: Impression Detection, Emotion Generation, and Emotion Expression.
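
One way to picture how the three modules fit together is as a pipeline of interchangeable functions. The Python sketch below is a hypothetical arrangement, not the paper's implementation: the class name `EmotionPipeline`, the keyword-based detector, the thresholds, and the `display:` command format are all assumptions. It treats platform independence as keeping the platform-specific code confined to the expression step.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# An EPA profile: (Evaluation, Potency, Activity).
EPA = Tuple[float, float, float]


@dataclass
class EmotionPipeline:
    """Hypothetical wiring of the three modules named in the text."""

    identity: EPA
    detect_impression: Callable[[str], EPA]       # Impression Detection
    generate_emotion: Callable[[EPA, EPA], str]   # Emotion Generation
    express_emotion: Callable[[str], str]         # Emotion Expression

    def step(self, utterance: str) -> str:
        """Run one interaction turn and return a platform command."""
        impression = self.detect_impression(utterance)
        emotion = self.generate_emotion(self.identity, impression)
        return self.express_emotion(emotion)


def toy_detect_impression(utterance: str) -> EPA:
    """Toy keyword detector (assumption); a real system would use a
    proper affective model of the user's words and behavior."""
    text = utterance.lower()
    if any(word in text for word in ("great", "thanks", "love")):
        return (2.5, 1.0, 1.0)
    if any(word in text for word in ("bad", "boring", "hate")):
        return (-2.0, 0.0, 0.0)
    return (0.0, 0.0, 0.0)


def toy_generate_emotion(identity: EPA, impression: EPA) -> str:
    """Toy rule (assumption): compare only the Evaluation dimension."""
    gap = impression[0] - identity[0]
    if gap >= 0:
        return "happy"
    if gap < -2:
        return "sad"
    return "neutral"


def toy_express_emotion(emotion: str) -> str:
    """Platform-specific step; here it only builds a command string."""
    return f"display:{emotion}"


if __name__ == "__main__":
    pipeline = EmotionPipeline(
        identity=(2.0, 1.0, 1.0),
        detect_impression=toy_detect_impression,
        generate_emotion=toy_generate_emotion,
        express_emotion=toy_express_emotion,
    )
    for line in ("That was a great idea", "This story is boring", "Go on"):
        print(line, "->", pipeline.step(line))
```

Because each module is passed in as a function, porting the sketch to a different robot would only require replacing `toy_express_emotion` with code that drives that robot's face, voice, or gestures.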

Experimental Design

Two experiments were conducted to evaluate the EmoACT framework's effectiveness:

- Experiment 1: Compared a non-emotional robot with one that displays emotions at a low frequency.

- Experiment 2: Compared a non-emotional robot with one exhibiting high-frequency emotional expressions.

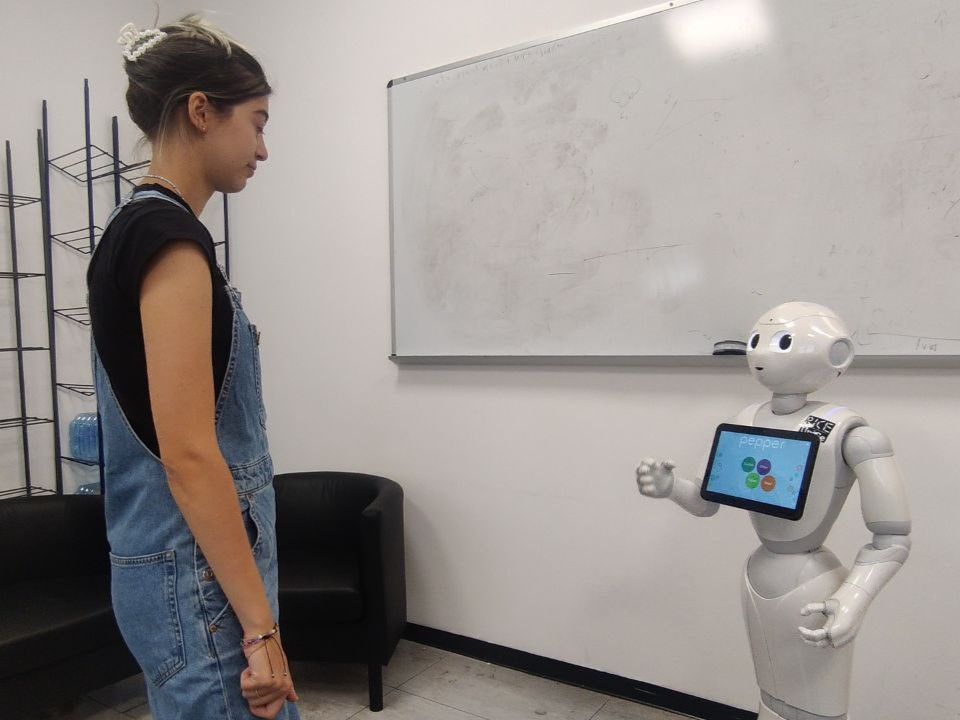

Participants interacted with the robot in collaborative storytelling scenarios, where the robot's emotional expressiveness was tested under varying conditions.

Figure 2: Experiment interaction.

Results

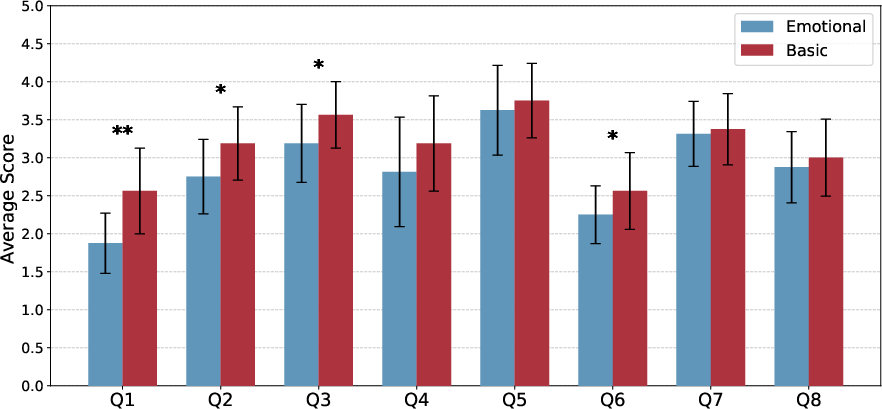

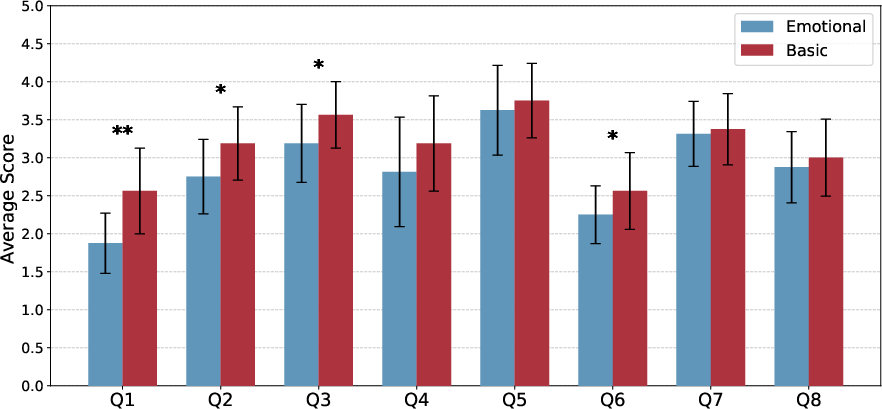

The experiments indicate that ACT-based emotional expressions improve users' perceptions of the robot's emotional and cognitive capabilities. The high-frequency emotional display significantly enhanced perceived emotional agency compared with the non-emotional control condition. Improvements in anthropomorphism, animacy, and intelligence were less pronounced. Taken together, the results suggest that the frequency of emotional displays plays a crucial role in conveying emotion successfully.

Figure 3: Average score and confidence interval of the Agency Experience questions for the emotional and basic robot in Experiment 2.
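
An average score with a confidence interval, as plotted in Figure 3, can be computed along the following lines. This Python sketch is generic: it uses a normal approximation, which is an assumption because the paper's exact procedure is not described here, and the ratings in the usage example are placeholder values, not data from the study.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev
from typing import Sequence, Tuple


def mean_with_ci(
    scores: Sequence[float], confidence: float = 0.95
) -> Tuple[float, float, float]:
    """Return (mean, lower bound, upper bound) for questionnaire scores.

    Uses a normal approximation; with small samples a t-distribution
    would give a somewhat wider interval.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores to estimate spread")
    center = mean(scores)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * stdev(scores) / sqrt(n)
    return center, center - half_width, center + half_width


if __name__ == "__main__":
    # Placeholder ratings for illustration only (NOT the paper's data).
    placeholder_ratings = [3, 4, 5, 4, 4]
    avg, low, high = mean_with_ci(placeholder_ratings)
    print(f"mean={avg:.2f}, 95% CI=({low:.2f}, {high:.2f})")
```

Comparing two conditions then amounts to calling the function once per condition and checking how far the intervals overlap, although a formal significance test is still needed to support a claim of a significant difference.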

The paper's findings suggest that emotions can enhance how robots are perceived, but that their effective portrayal depends on the frequency and type of emotional cues used. The robot with high-frequency emotional displays was perceived as more emotionally intelligent, which points to the importance of dynamic expressiveness in developing social robots.

Discussion and Conclusion

The research shows promising results for embedding synthetic emotions using ACT and offers a novel approach to HRI. EmoACT enhances the perceived emotional agency of robots and highlights how much the frequency of emotional expression matters for emotion recognition. Future work should explore integrating other psychological theories, including personality traits, to enrich the framework. Despite some limitations, such as the focus on positive identities and instantaneous emotion generation, the framework demonstrates the potential of ACT to improve human-robot interaction.

In conclusion, this paper contributes significantly to the field of social robotics by demonstrating that ACT can be effectively employed to embed synthetic emotions into artificial agents, thus advancing the understanding of emotion-driven human-robot interaction.