- The paper introduces AgentAda, a novel LLM-powered analytics agent with a dynamic, skill-informed framework that tailors data insight discovery.

- It employs a three-stage pipeline—question generation, skill matching with a hybrid RAG approach, and robust code generation—to enhance analysis quality.

- AgentAda outperforms existing tools on the AdaBench benchmark, and human evaluators preferred its deeper, goal-aligned insights 48.78% of the time.

AgentAda: Skill-Adaptive Data Analytics for Tailored Insight Discovery

Introduction

"AgentAda: Skill-Adaptive Data Analytics for Tailored Insight Discovery" presents AgentAda, an LLM-powered analytics agent. The paper targets a limitation of existing LLM-based analytics tools, which often struggle with complex workflows and fall back on basic analytical techniques. AgentAda addresses this with a dynamic, skill-informed framework that draws on a curated library of analytical skills to make data analysis more structured and goal-oriented.

Approach and System Design

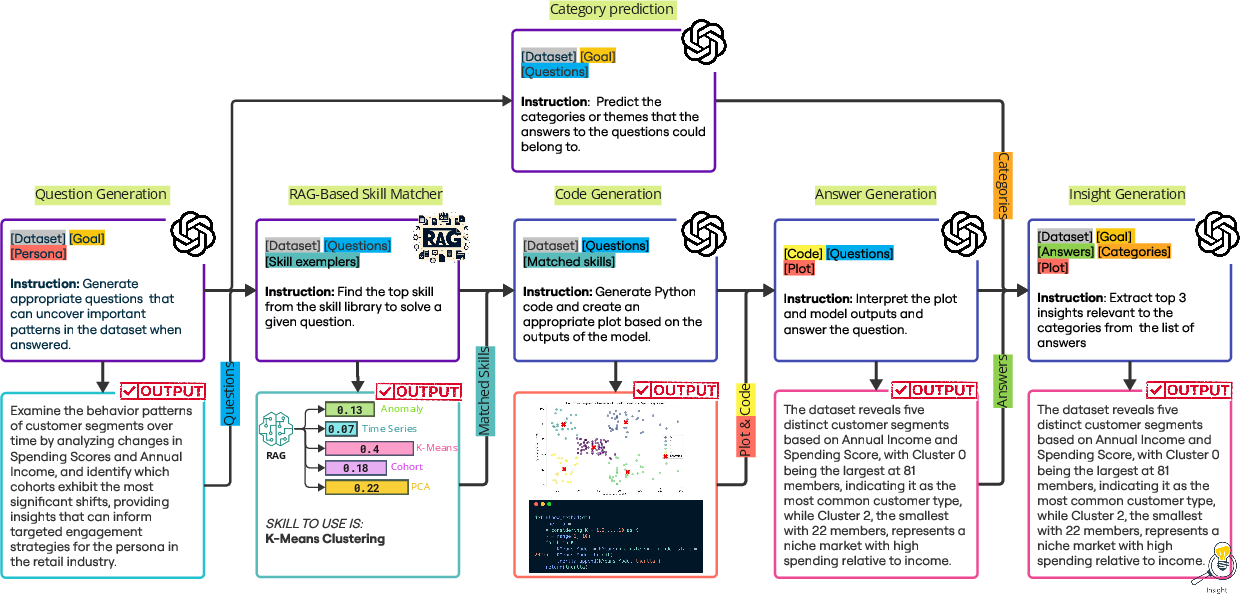

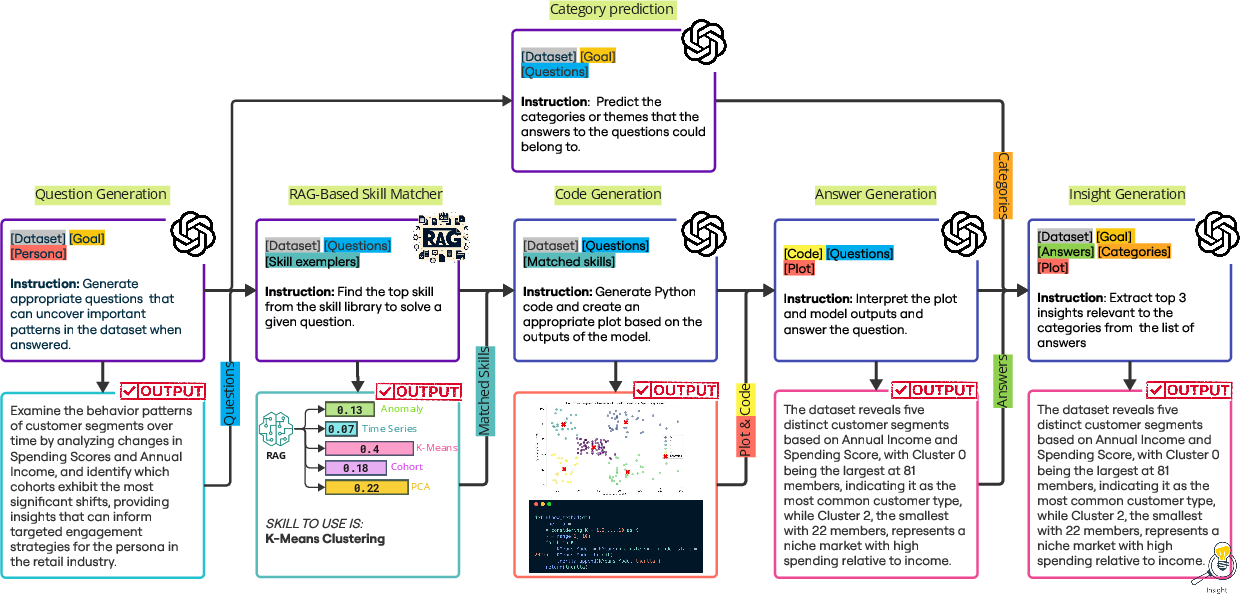

AgentAda's architecture comprises three pivotal components: question generation, skill matching, and code generation.

- Question Generation:

- Skill Matcher:

- Code Generation:

  - Once a skill is matched to a question, AgentAda uses a code generation cascade to produce executable code tailored to that skill's documentation, so that the analysis follows the method the skill prescribes.

  - The code generation module self-corrects: when generated code fails, the error message is included in the next regeneration attempt, which improves robustness and reliability.
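The self-correction step can be illustrated with a small retry loop. This is a minimal sketch under stated assumptions, not AgentAda's actual implementation: the function `generate_with_self_correction`, the prompt wording, the `max_attempts` parameter, and the `fake_model` stand-in for an LLM call are all hypothetical.

```python
import traceback
from typing import Callable, Optional


def generate_with_self_correction(
    question: str,
    skill_doc: str,
    generate_code: Callable[[str], str],
    max_attempts: int = 3,
) -> Optional[dict]:
    """Request code, run it, and append any error to the next prompt."""
    prompt = f"Skill documentation:\n{skill_doc}\n\nQuestion:\n{question}\n"
    for attempt in range(1, max_attempts + 1):
        code = generate_code(prompt)
        namespace: dict = {}
        try:
            # Illustration only: real systems should sandbox generated code.
            exec(code, namespace)
            return {
                "code": code,
                "result": namespace.get("result"),
                "attempts": attempt,
            }
        except Exception:
            error = traceback.format_exc(limit=1)
            # Feed the failing code and its error back into the next attempt.
            prompt += (
                f"\nPrevious attempt:\n{code}\n"
                f"It failed with:\n{error}\nPlease fix the code.\n"
            )
    return None  # every attempt failed


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: broken code first, then a fix."""
    if "It failed with" in prompt:
        return "result = sum([1, 2, 3]) / 3"
    return "result = sum([1, 2, 3]) / count"  # NameError: count is undefined


outcome = generate_with_self_correction(
    "What is the mean of the values?",
    "mean: arithmetic average of a numeric column",
    fake_model,
)
print(outcome["attempts"], outcome["result"])
```

In this toy run the first attempt raises a `NameError`, the error text is added to the prompt, and the second attempt succeeds. A real agent would replace `fake_model` with a model call and run the code in an isolated environment.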

Benchmarking and Evaluation

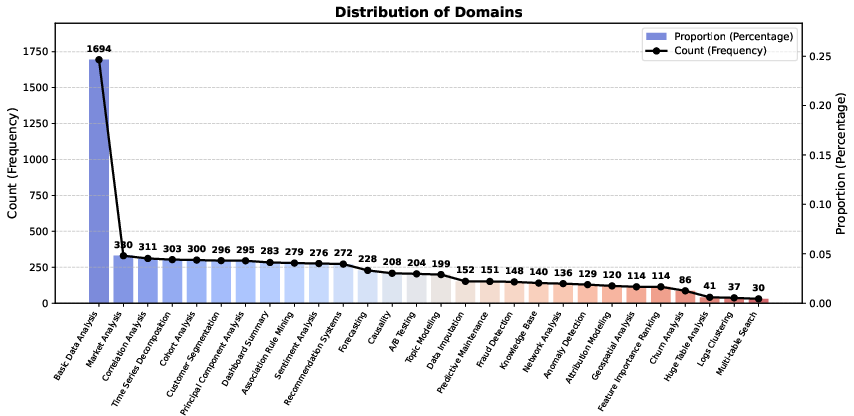

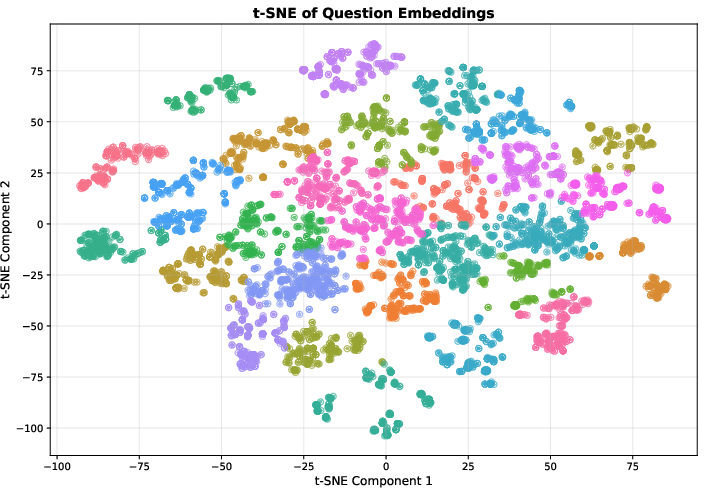

The paper also introduces AdaBench, a benchmark of 700 examples spanning 49 domains and 28 task types. This diversity allows AgentAda's capabilities to be evaluated across a wide range of analytical settings.

- Human Evaluation:

  - In the paper's human evaluation experiments, AgentAda's insights were judged to have greater depth and relevance than those of existing tools.

  - Evaluators preferred AgentAda-generated insights 48.78% of the time, significantly surpassing the unskilled baseline variant.

- LLM-as-a-Judge Framework:

  - A novel evaluation strategy named SCORER (Structured Calibration Of Ratings via Expert Refinement) aligns LLM-evaluated insights with human judgments by optimizing the LLM's prompts against human ratings rather than by fine-tuning the model.

  - SCORER is intended to make the evaluation of insight quality both scalable and reliable.
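The idea of calibrating a judge prompt against human ratings can be sketched as a simple selection over candidate prompts. This is not the SCORER procedure itself, whose details are not given here. It is a minimal illustration that assumes a fixed pool of candidate prompts and mean absolute error as the disagreement measure. The names `select_judge_prompt`, `mean_abs_error`, and `fake_judge`, along with the sample insights and ratings, are hypothetical.

```python
from typing import Callable, Optional, Sequence, Tuple


def mean_abs_error(predicted: Sequence[float], human: Sequence[float]) -> float:
    """Average absolute gap between judge scores and human ratings."""
    return sum(abs(p - h) for p, h in zip(predicted, human)) / len(human)


def select_judge_prompt(
    candidate_prompts: Sequence[str],
    insights: Sequence[str],
    human_ratings: Sequence[float],
    judge: Callable[[str, str], float],
) -> Tuple[Optional[str], float]:
    """Return the candidate prompt whose scores best match the human ratings."""
    best_prompt, best_error = None, float("inf")
    for prompt in candidate_prompts:
        predicted = [judge(prompt, insight) for insight in insights]
        error = mean_abs_error(predicted, human_ratings)
        if error < best_error:
            best_prompt, best_error = prompt, error
    return best_prompt, best_error


def fake_judge(prompt: str, insight: str) -> float:
    """Hypothetical stand-in for an LLM judge with prompt-dependent behavior."""
    if "depth" in prompt:
        return float(min(5, len(insight.split())))  # longer insight, higher score
    return 3.0  # a vague prompt yields the same middling score for everything


insights = ["sales rose", "sales rose 12% after the March price cut"]
human_ratings = [2.0, 5.0]  # made-up ratings for illustration only
prompts = [
    "Rate this insight from 1 to 5.",
    "Rate the depth of this insight from 1 to 5.",
]

best_prompt, best_error = select_judge_prompt(
    prompts, insights, human_ratings, fake_judge
)
print(best_prompt, best_error)
```

The model weights never change in this setup. Only the prompt is adjusted until the judge's scores track the human ratings, which is the property the section attributes to SCORER.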

Key Results and Insights

AgentAda outperformed existing agents such as Poirot and Pandas AI in critical areas, including depth of analysis and goal relevance. Adding skill guidance to data analytics tasks produced deeper insights and more coherent analyses, a result supported by both benchmark scores and qualitative feedback.

Figure 3: AgentAda's pipeline for automated data-driven insights.

Impact and Future Directions

AgentAda's main contribution is a structured approach to LLM-powered data analytics that enables goal-aligned, insightful, and advanced analysis across varied domains. Its LLM-as-a-judge framework also opens a path toward scalable evaluation of insight quality.

Future work could expand AgentAda's scope beyond structured data to unstructured data, multi-table analysis, and large-scale datasets, extending what LLMs can do in real-world applications.

Conclusion

The research showcases a significant leap in utilizing LLMs for advanced data analytics tasks, transitioning from basic statistical analysis to end-to-end, skill-informed data processing. AgentAda exemplifies the integration of LLMs with curated skill libraries and structured workflows to produce scalable, meaningful insights, setting the stage for future innovations in AI-driven data analytics.