Analysis of "ERATTA: Extreme RAG for Table To Answers with LLMs"

The paper "ERATTA: Extreme RAG for Table To Answers with LLMs" combines LLMs with Retrieval Augmented Generation (RAG) to answer questions over extensive and dynamic data tables. The approach targets enterprise-level data processing, where response efficiency and accuracy are paramount. The proposed system authenticates users, fetches data, and prompts for responses through a structured multi-LLM strategy, resolving queries in under ten seconds.

System Architecture and Methodology

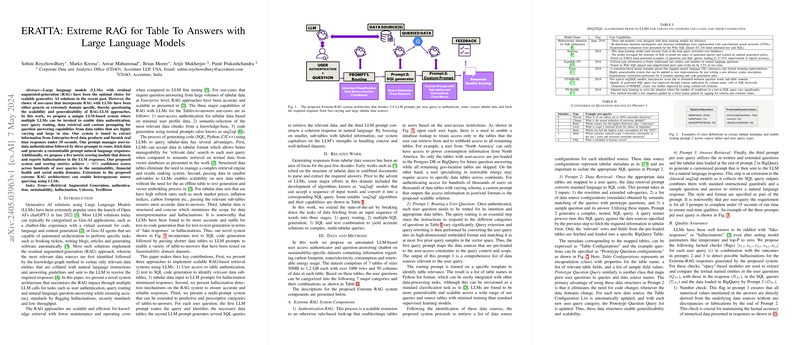

The innovative system architecture integrates multiple LLMs to perform sequential tasks: user authentication, query routing, data retrieval, and response generation. The paper details a three-prompt system that operates as follows:

- Authentication RAG: This component determines user access to specific tables based on authentication rules, ensuring that only relevant data sources are pre-loaded and analyzed per user query.

- Prompt 1 - Query Routing: Each incoming query is analyzed for its intent and appropriately routed. This step ensures that responses are relevant to the specific data points required, aligning user queries with designated data tables.

- Prompt 2 - Data Retrieval: The system converts the query from text to SQL and executes the automatically generated SQL to extract the pertinent data subset. This makes query processing across large, heterogeneous data tables more efficient and removes the need for manual intervention when the data domain changes.

- Prompt 3 - Response Generation: This final stage generates natural language responses by employing LLMs to interpret SQL output alongside standard instructions, ultimately ensuring answers are both contextually and semantically precise.
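
The flow described in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the `ACCESS_RULES` mapping, the prompt wording, the `emissions` table, and the `stub_llm` function (which stands in for real LLM calls so the example runs offline) are all hypothetical.

```python
import sqlite3
from typing import Callable, Dict, List

# Hypothetical access rules: which tables each user may query.
ACCESS_RULES: Dict[str, List[str]] = {"alice": ["emissions"], "bob": []}


def allowed_tables(user: str) -> List[str]:
    """Authentication step: return the tables this user is permitted to see."""
    return ACCESS_RULES.get(user, [])


def answer(user: str, question: str, conn: sqlite3.Connection,
           llm: Callable[[str], str]) -> str:
    tables = allowed_tables(user)
    if not tables:
        return "Access denied."
    # Prompt 1: route the question to one of the permitted tables.
    table = llm(f"ROUTE\nTables: {', '.join(tables)}\nQuestion: {question}").strip()
    if table not in tables:
        return "No permitted table matches this question."
    # Prompt 2: text-to-SQL, then run the generated query.
    sql = llm(f"SQL\nTable: {table}\nQuestion: {question}").strip()
    rows = conn.execute(sql).fetchall()
    # Prompt 3: turn the SQL output into a natural language response.
    return llm(f"ANSWER\nQuestion: {question}\nRows: {rows}")


def stub_llm(prompt: str) -> str:
    """Stand-in for a real LLM: returns canned output per prompt type (assumption)."""
    kind = prompt.split("\n", 1)[0]
    if kind == "ROUTE":
        return "emissions"
    if kind == "SQL":
        return "SELECT SUM(tonnes) FROM emissions WHERE year = 2023"
    return "The query returned " + prompt.rsplit("Rows: ", 1)[1]


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory table so the sketch is runnable."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE emissions (year INTEGER, tonnes REAL)")
    conn.executemany("INSERT INTO emissions VALUES (?, ?)",
                     [(2022, 25.0), (2023, 10.0), (2023, 20.0)])
    return conn
```

Calling `answer("alice", "What were total emissions in 2023?", make_demo_db(), stub_llm)` walks through all three prompts, while the same call for `"bob"` stops at the authentication step. In any real deployment the generated SQL would need to run with read-only permissions, since it is produced by a model rather than written by hand.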

Hallucination Detection Mechanism

A noteworthy aspect of the system is its hallucination detection module, which evaluates the reliability and consistency of LLM-generated responses. The module rigorously screens outputs using five key metrics: number check, entity check, query check, regurgitation check, and increase/decrease modifier check. With these checks in place, the system achieves confidence scores above 90% across diverse domains such as sustainability, finance, and social media.
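
To make two of these checks concrete, here is a rough Python sketch. The summary above does not spell out how each check is defined, so the definitions here are assumptions: the number check passes when every number in the response appears in either the question or the SQL output, and the modifier check passes when direction words in the response agree with the actual change in value. The word lists and function names are hypothetical.

```python
import re
from typing import Iterable, Set

NUMBER = re.compile(r"\d+(?:\.\d+)?")

# Hypothetical direction vocabularies; a real system would need broader coverage.
UP = {"increase", "increased", "rose", "grew", "higher"}
DOWN = {"decrease", "decreased", "fell", "dropped", "lower"}


def numbers_in(text: str) -> Set[float]:
    """Extract unsigned numeric literals from free text."""
    return {float(m) for m in NUMBER.findall(text)}


def number_check(response: str, question: str, rows: Iterable[tuple]) -> bool:
    """Assumed definition: every number in the response is grounded in the
    question or in the SQL result rows."""
    grounded = numbers_in(question)
    for row in rows:
        for value in row:
            if isinstance(value, (int, float)) and not isinstance(value, bool):
                grounded.add(abs(float(value)))
    return numbers_in(response) <= grounded


def modifier_check(response: str, old: float, new: float) -> bool:
    """Assumed definition: direction words must not contradict the real change."""
    words = set(re.findall(r"[a-z]+", response.lower()))
    says_up, says_down = bool(words & UP), bool(words & DOWN)
    if new > old:
        return not says_down
    if new < old:
        return not says_up
    return not (says_up or says_down)
```

For example, `number_check("Emissions were 35 tonnes in 2023.", "What were emissions in 2023?", [(30.0,)])` fails because 35 appears in neither the question nor the query result, and `modifier_check("Emissions decreased.", 20.0, 30.0)` fails because the value actually went up.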

Implications and Future Directions

The practical implications of this research are significant, particularly in fields requiring rapid access to comprehensive datasets, such as financial analytics, healthcare informatics, and real-time social media monitoring. The integration of LLMs in processing complex, multi-tabular queries underscores the potential to generalize this methodology across different data domains without specific training for each new dataset.

The proposed system is ripe for further augmentation, such as expanding its capabilities to incorporate real-time predictions and prescriptive analytics through extensions of prompt 2. This future direction indicates a potential shift towards dynamic scenario planning where LLMs could be leveraged for predictive insights seamlessly interwoven with natural language responses.

Conclusion

In conclusion, the ERATTA system showcases an effective melding of LLMs with RAG methodology to provide rapid, accurate question-answer capabilities across extensive datasets. The work underscores the need for scalable, versatile generative AI systems adept at navigating and extracting insights from large-scale data environments. As AI models evolve, the extension of such frameworks to more complex scenarios will be an exciting frontier, continuing to bridge the gap between large-scale data and actionable intelligence.