- The paper introduces SALT, a flexible semi-automatic tool that enhances LiDAR point cloud annotation using zero-shot techniques with Vision Foundation Models.

- It employs a novel data alignment strategy and 4D-consistent spatiotemporal processing to maintain semantic integrity across diverse scenes and sensor setups.

- Experimental results show an 18.4% improvement in Panoptic Quality (PQ) over state-of-the-art zero-shot methods on SemanticKITTI, and an approximately 83% reduction in manual annotation time compared to a traditional annotation tool.

The paper presents SALT, a semi-automatic labeling tool for LiDAR point clouds designed to improve annotation efficiency and adaptability across diverse scenes and LiDAR types. Unlike traditional annotation methods that rely heavily on camera data or human input, SALT uses a novel data alignment strategy that converts raw LiDAR data into pseudo-images that Vision Foundation Models (VFMs) such as SAM2 can segment zero-shot. The following sections examine SALT's methodology, experimental results, and potential impact on the AI and robotics communities.

Methodology

Overview and Data Alignment

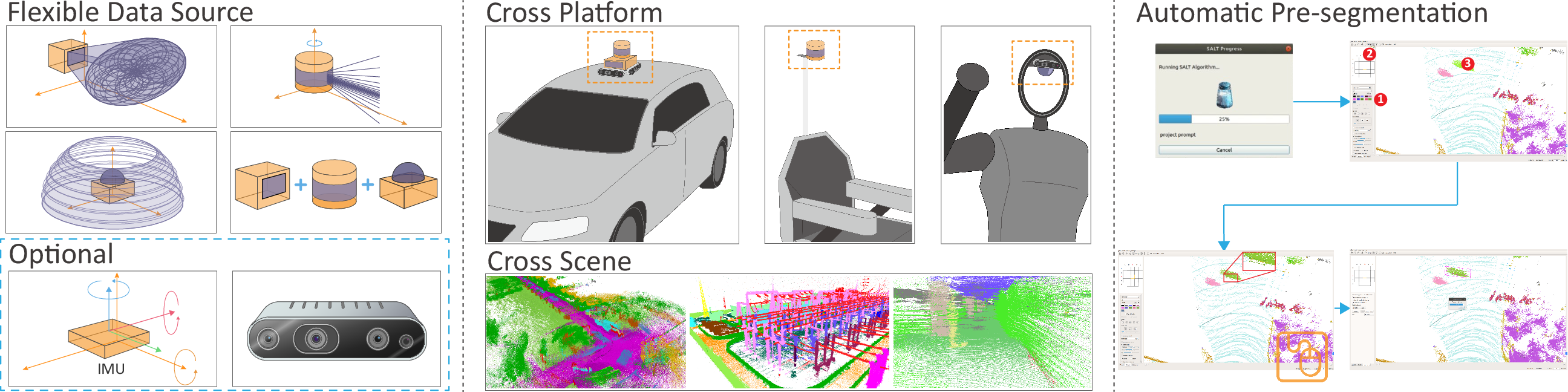

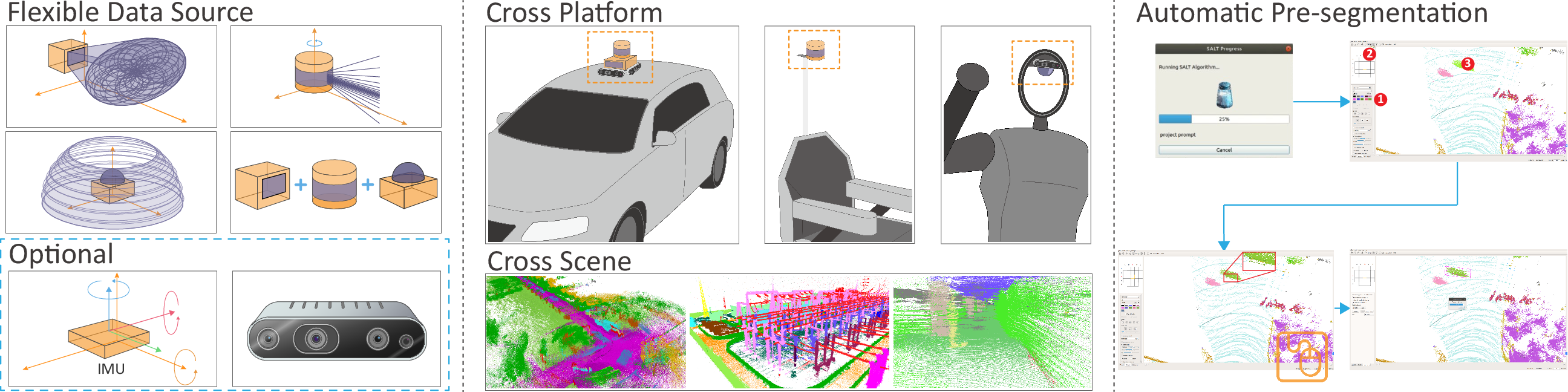

SALT's approach is built on a data alignment paradigm. The tool transforms raw LiDAR data into pseudo-images whose distribution matches the data VFMs were trained on, so that the pseudo-images fall within the VFMs' learned decision boundaries. This alignment does not require extensive camera calibration, and it enables accurate zero-shot cross-domain segmentation. The process is illustrated in Figure 1.

Figure 1: Overview of SALT: Flexible data sources, cross-platform adaptability, and automatic presegmentation workflow.
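
The core idea of turning a point cloud into an image a VFM can read can be sketched as a projection into a virtual camera. This is a minimal sketch, assuming a single pinhole pseudo-camera with intrinsics `K` and a 4x4 world-to-camera transform; the function names `render_pseudo_image` and `lift_mask_to_points`, the z-buffer scheme, and the pinhole model itself are illustrative assumptions rather than SALT's published implementation. The returned index map records which 3D point produced each pixel, so a 2D mask predicted on the pseudo-image can be lifted back onto the point cloud.

```python
import numpy as np


def render_pseudo_image(points, colors, K, T_cam_from_world, width, height):
    """Render colored 3D points into a hypothetical pinhole pseudo-camera.

    Returns an RGB image and an index map holding, for each pixel, the index of
    the nearest point that projects there (-1 for empty pixels).
    """
    n = points.shape[0]
    # Move points into the pseudo-camera frame with a homogeneous transform.
    homo = np.hstack([points, np.ones((n, 1))])
    cam = (T_cam_from_world @ homo.T).T[:, :3]

    # Discard points behind (or on) the image plane.
    idx = np.nonzero(cam[:, 2] > 1e-6)[0]
    cam = cam[idx]

    # Pinhole projection: [u*z, v*z, z]^T = K [x, y, z]^T.
    uvw = (K @ cam.T).T
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(np.int64)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(np.int64)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, depth, idx = u[inside], v[inside], cam[inside, 2], idx[inside]

    # Z-buffer: sort by pixel, then by depth, and keep the nearest point per pixel.
    pix = v * width + u
    order = np.lexsort((depth, pix))
    _, first = np.unique(pix[order], return_index=True)
    keep = order[first]

    image = np.zeros((height * width, 3), dtype=np.uint8)
    index_map = np.full(height * width, -1, dtype=np.int64)
    image[pix[keep]] = colors[idx[keep]]
    index_map[pix[keep]] = idx[keep]
    return image.reshape(height, width, 3), index_map.reshape(height, width)


def lift_mask_to_points(mask, index_map):
    """Map a boolean 2D mask (e.g. one VFM segment) to the 3D point indices it covers."""
    hits = index_map[mask]
    return np.unique(hits[hits >= 0])
```

Sorting with `np.lexsort` and taking the first occurrence per pixel keeps the z-buffer deterministic; a plain fancy-index assignment with repeated pixel indices would not guarantee which point wins.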

Spatiotemporal Processing and 4D Consistency

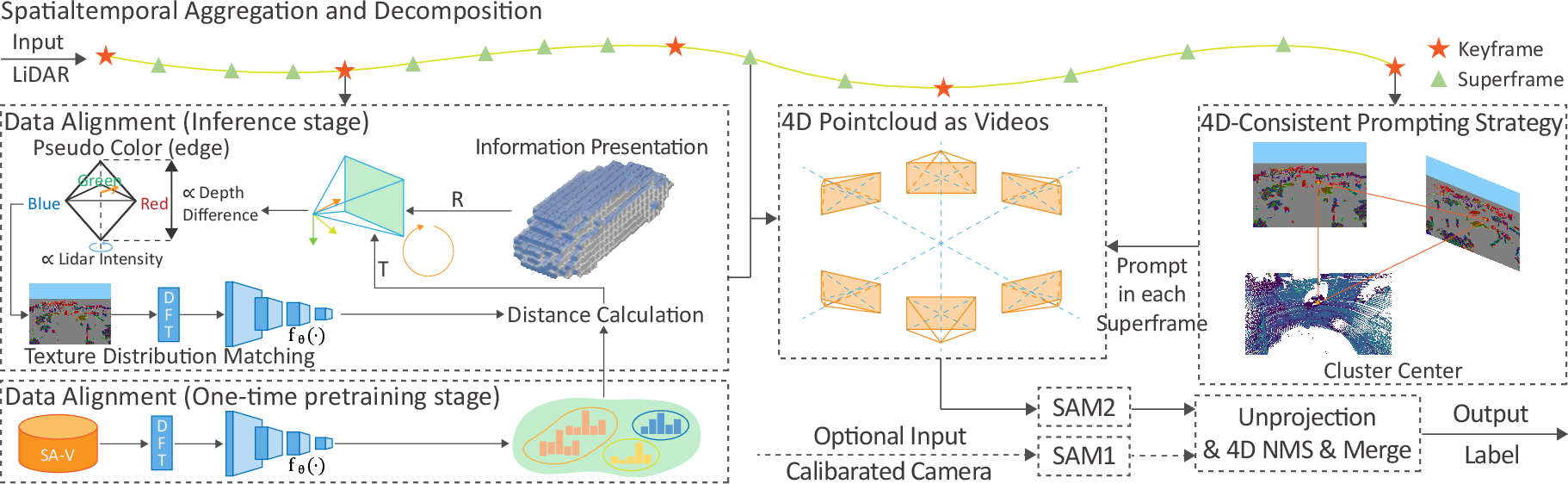

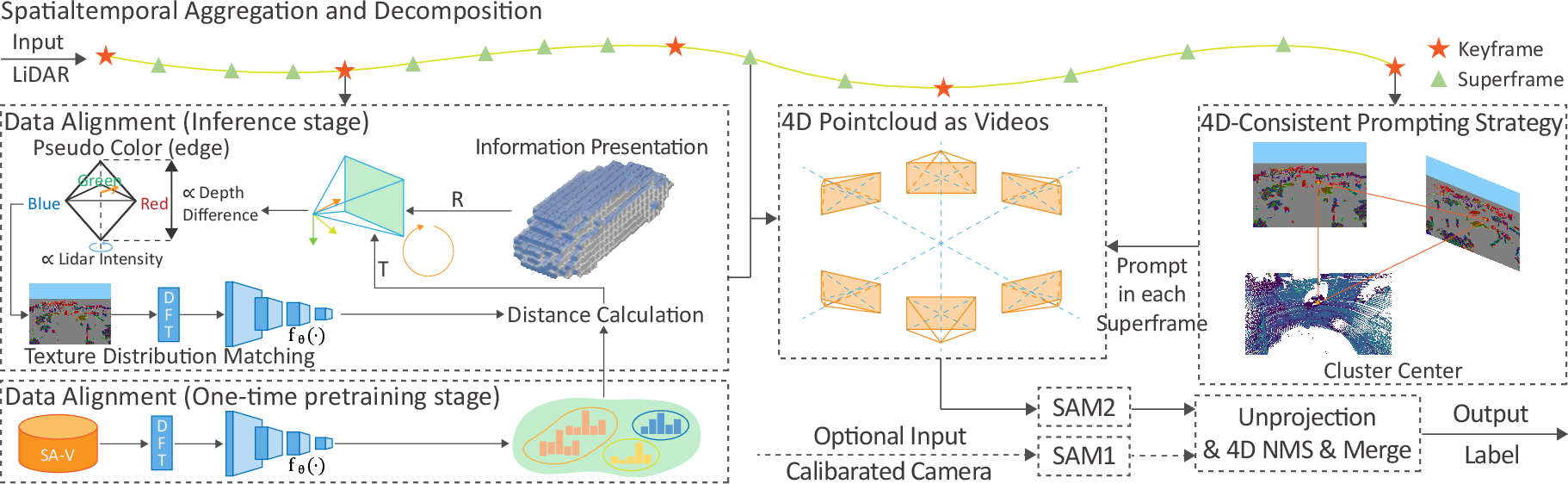

SALT applies spatiotemporal aggregation to exploit the temporal information in 4D LiDAR sequences, supported by a pseudo-color generation mechanism that maintains texture consistency (Figure 2). This design preserves semantic and spatial information throughout the dataset.

Figure 2: Pipeline of SALT. Integration of spatiotemporal aggregation, pseudo-camera alignment, and consistent prompting strategies.
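
Aggregation and consistent coloring can be sketched together. This is a minimal sketch, assuming per-scan world-from-sensor poses are already known (for example from odometry); `aggregate_scans` and `pseudo_color` are hypothetical names, and coloring points by their normalized world coordinates is a simple stand-in chosen only to illustrate texture consistency, not SALT's actual pseudo-color scheme.

```python
import numpy as np


def aggregate_scans(scans, poses):
    """Stack per-frame LiDAR scans in a shared world frame.

    scans: list of (N_t, 3) arrays in each scan's sensor frame.
    poses: list of 4x4 world-from-sensor transforms (assumed known).
    Returns the stacked world points and, per point, its source frame index.
    """
    world_pts, frame_ids = [], []
    for t, (pts, T_world_from_sensor) in enumerate(zip(scans, poses)):
        homo = np.hstack([pts, np.ones((len(pts), 1))])
        world_pts.append((T_world_from_sensor @ homo.T).T[:, :3])
        frame_ids.append(np.full(len(pts), t, dtype=np.int64))
    return np.vstack(world_pts), np.concatenate(frame_ids)


def pseudo_color(world_pts, lo, hi):
    """Assign each point an RGB color from its world coordinates (hypothetical scheme).

    The color depends only on where a point sits in the world frame, so a static
    surface receives the same color in every frame that observes it.
    lo, hi: (3,) scene bounds used to normalize x, y, z into [0, 255].
    """
    norm = (world_pts - lo) / np.maximum(hi - lo, 1e-9)
    return np.round(np.clip(norm, 0.0, 1.0) * 255).astype(np.uint8)
```

Keeping the frame index alongside each aggregated point lets later stages trace a 4D segment back to the individual scans it came from.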

The final presegmentation is produced with a 4D-consistent prompting strategy combined with 4D Non-Maximum Suppression (NMS), which refines the temporal and spatial coherence of the segmentation.
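
The suppression step can be illustrated with standard greedy NMS applied to point sets instead of 2D boxes. This is a minimal sketch, assuming each candidate instance is a set of point indices in the aggregated 4D cloud with a confidence score; the name `nms_4d`, the point-set IoU criterion, and the 0.5 default threshold are assumptions, and SALT's exact suppression rule may differ.

```python
def nms_4d(masks, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression over 4D instance masks.

    masks: list of sets of point indices into the aggregated 4D cloud.
    scores: confidence per mask; higher is better.
    Returns indices of the kept masks, highest score first.
    """
    order = sorted(range(len(masks)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        overlaps = (
            len(masks[i] & masks[j]) / max(len(masks[i] | masks[j]), 1)
            for j in kept
        )
        # Keep a mask only if it does not overlap any already-kept mask too much.
        if all(iou <= iou_threshold for iou in overlaps):
            kept.append(i)
    return kept
```

Because the masks live in the aggregated cloud, two detections of the same object from different frames overlap in point space and one of them is suppressed, which is one way temporal duplicates can be removed.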

Experimental Results

SALT was evaluated on several benchmarks, including SemanticKITTI and nuScenes, and showed significant improvements over state-of-the-art zero-shot methods. On SemanticKITTI, SALT surpassed existing methods by 18.4% in Panoptic Quality (PQ) without requiring additional camera information.
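
For reference, Panoptic Quality matches a predicted segment to a ground-truth segment when their IoU exceeds 0.5 (which makes the matching unique) and then computes PQ = sum of matched IoUs / (|TP| + 0.5 |FP| + 0.5 |FN|). The sketch below computes this for a single class with segments given as sets of point indices; the function name is hypothetical, and the official benchmarks additionally average over classes and apply their own conventions (such as ignored labels), which are omitted here.

```python
def panoptic_quality(pred, gt):
    """Single-class Panoptic Quality with segments as sets of point indices."""
    matched_pred, matched_gt = set(), set()
    iou_sum = 0.0
    for i, p in enumerate(pred):
        for j, g in enumerate(gt):
            if j in matched_gt:
                continue
            iou = len(p & g) / max(len(p | g), 1)
            # IoU > 0.5 guarantees at most one match per segment.
            if iou > 0.5:
                matched_pred.add(i)
                matched_gt.add(j)
                iou_sum += iou
                break
    tp = len(matched_gt)
    fp = len(pred) - len(matched_pred)
    fn = len(gt) - tp
    denom = tp + 0.5 * fp + 0.5 * fn
    return iou_sum / denom if denom > 0 else 0.0
```

For example, one match with IoU 0.75, one unmatched prediction, and one missed ground-truth segment give PQ = 0.75 / 2 = 0.375.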

Cross-Scene Adaptability

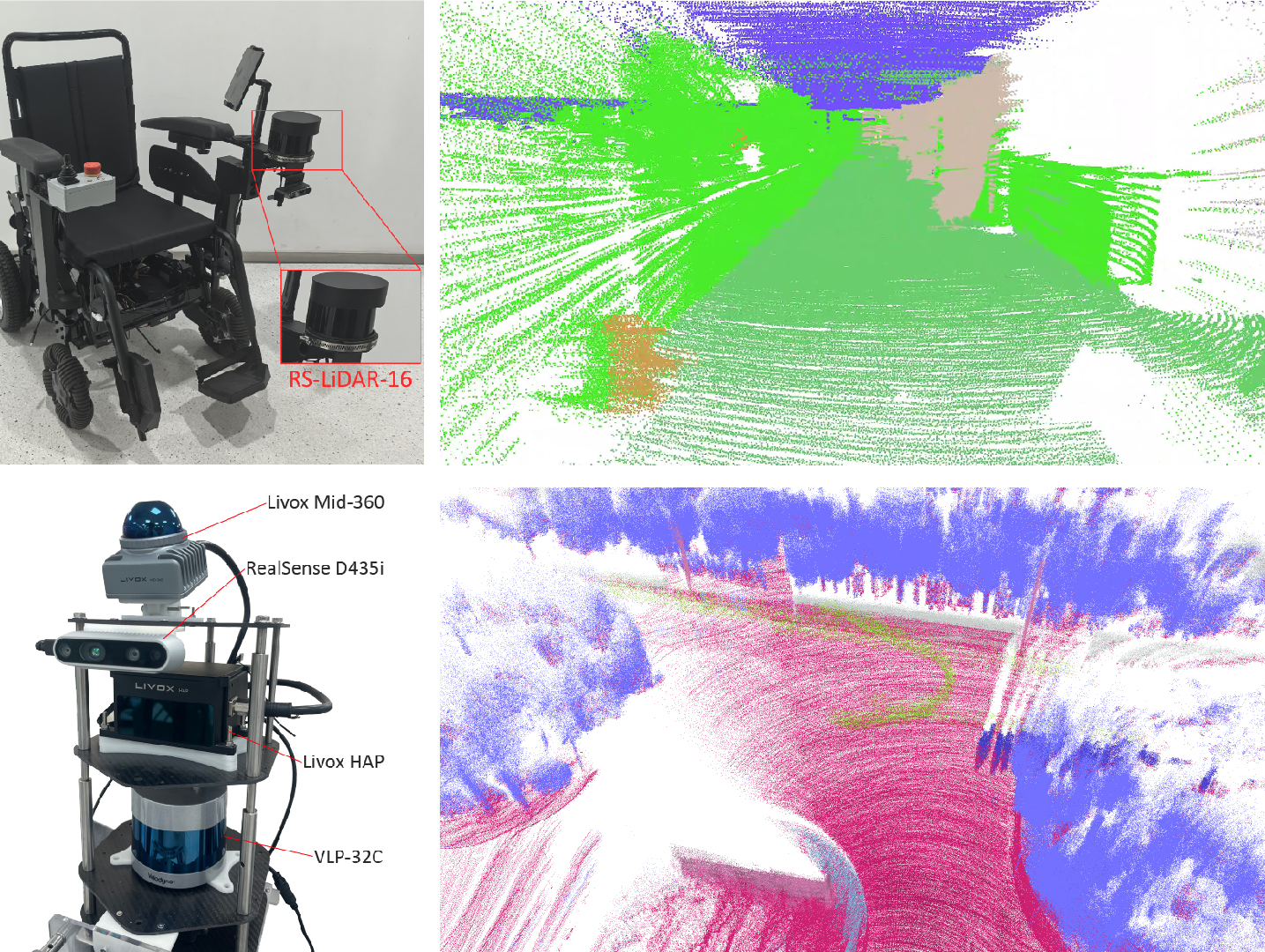

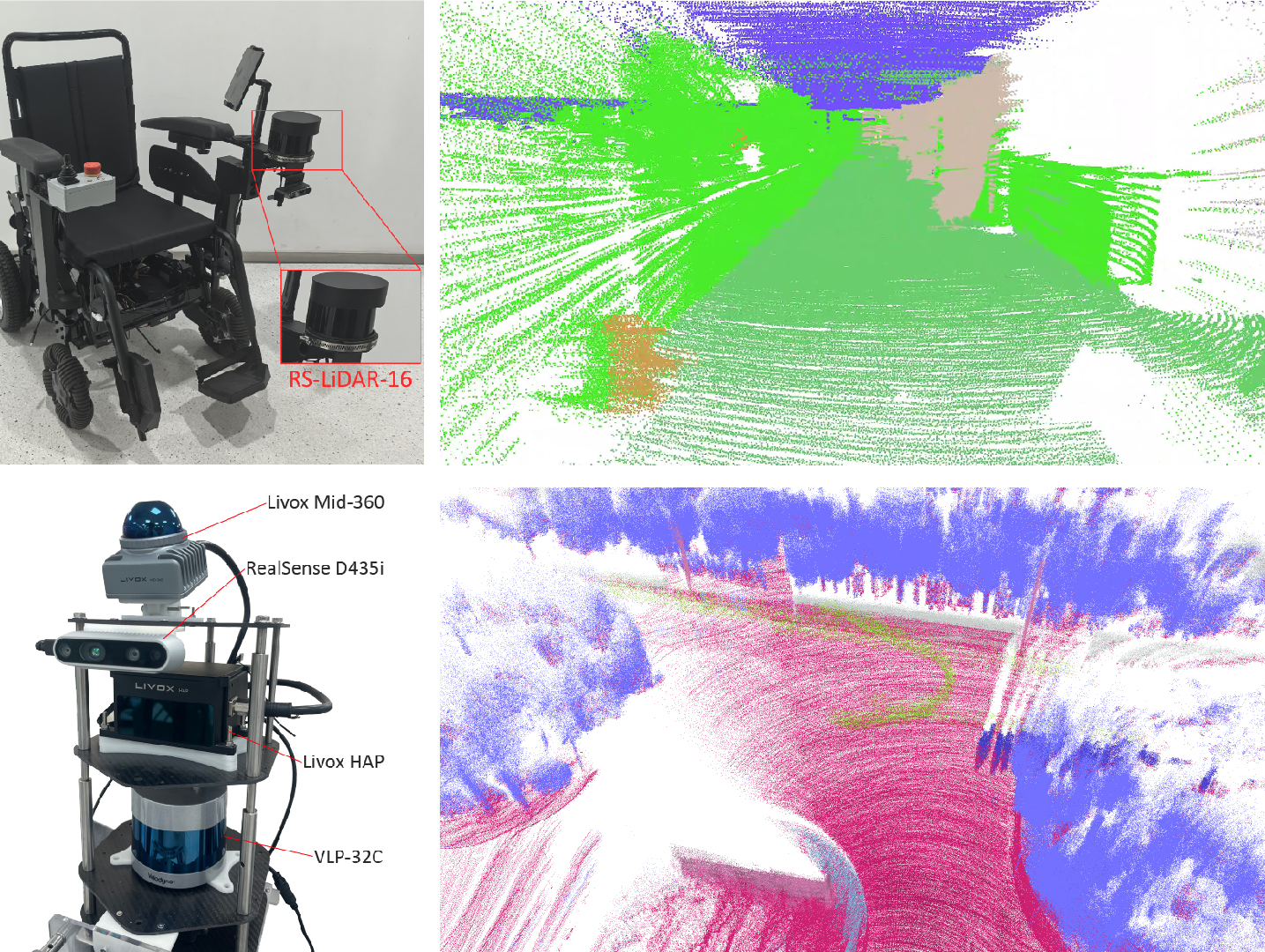

To test robustness across LiDAR setups and environments, SALT was used to annotate datasets from customized platforms equipped with low-resolution LiDARs and combinations of multiple LiDAR sensors (Figure 3). The tool maintained consistent performance across these setups, suggesting it can be deployed across varied sensor configurations and operating conditions.

Figure 3: Platform, sensors, and accumulated point clouds showing SALT's adaptability to different LiDAR setups.

Efficiency and User Friendliness

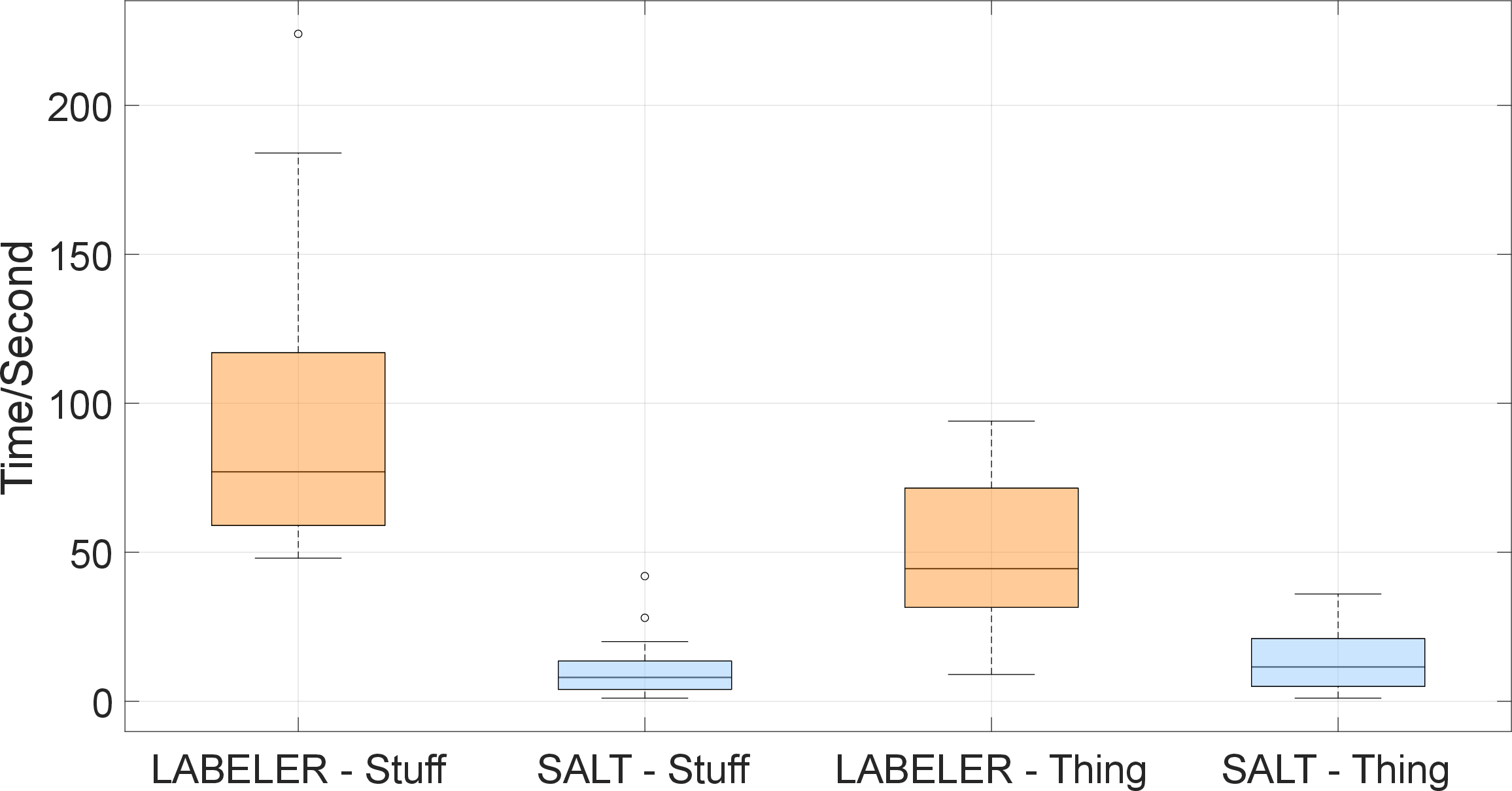

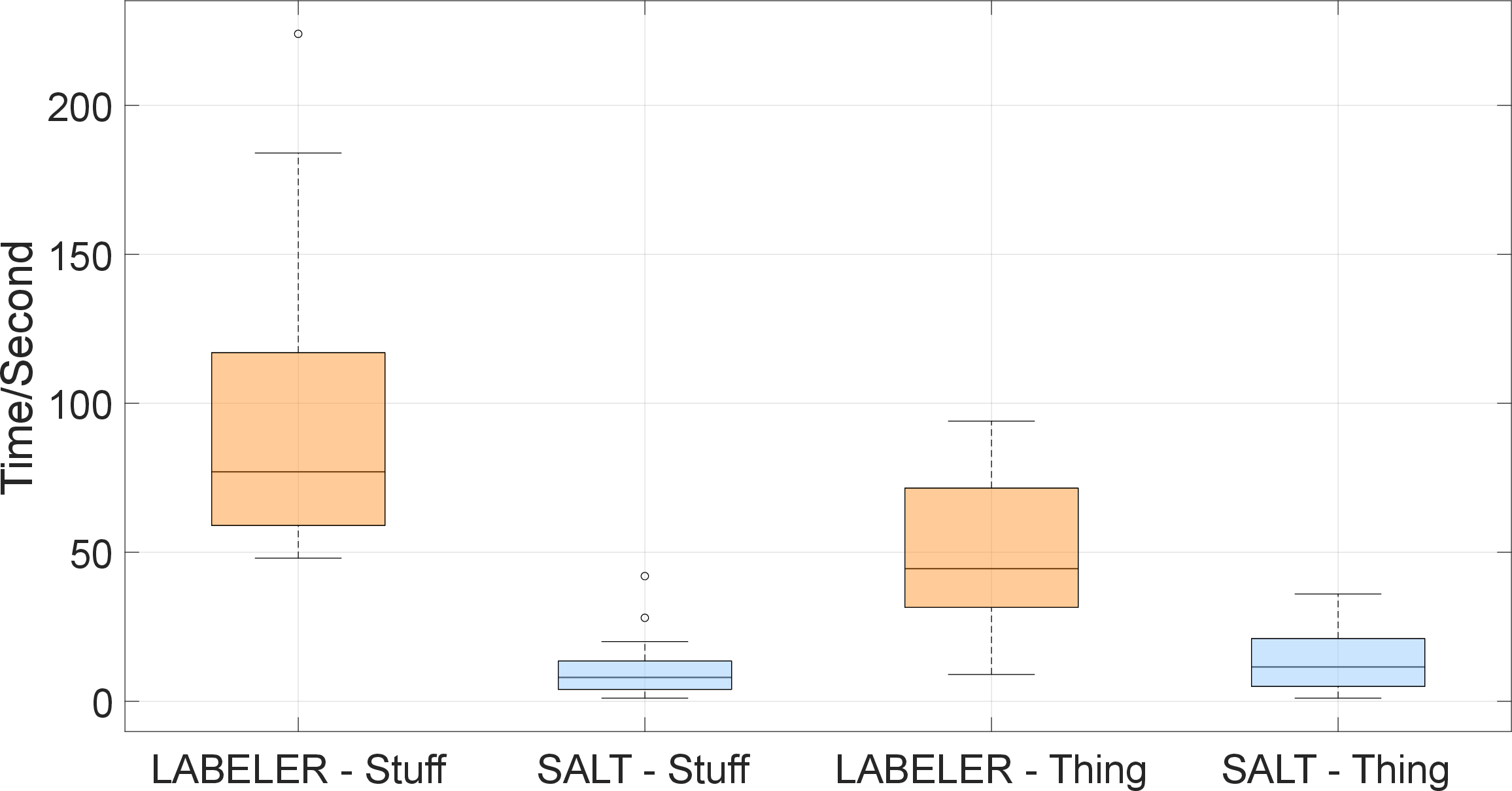

A user study comparing SALT with a traditional annotation tool, LABELER (Figure 4), showed that SALT cuts manual annotation time by approximately 83%. This gain is attributed to the high-quality pre-annotations SALT provides, which require minimal subsequent human correction.

Figure 4: User test results comparing annotation times between SALT and LABELER.
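
A time reduction translates into a throughput gain of 1 / (1 - r). The helper names below are hypothetical, and the 60-minute baseline in the check is an illustrative number, not a figure from the user study.

```python
def time_reduction(baseline_minutes, tool_minutes):
    """Fraction of annotation time saved relative to the baseline tool."""
    return 1.0 - tool_minutes / baseline_minutes


def speedup_from_reduction(reduction):
    """Throughput gain implied by a fractional time reduction r: 1 / (1 - r)."""
    return 1.0 / (1.0 - reduction)
```

If the reduction held uniformly, an 83% cut would leave 17% of the original effort, so an annotator could label roughly 5.9 times as much data in the same time.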

Conclusion

SALT represents a significant advance in LiDAR point cloud annotation by integrating zero-shot segmentation, cross-platform adaptability, and efficient data processing. Its effectiveness across varied scene types and sensor configurations suggests it could shape future AI-driven annotation tools. Future work may refine the data alignment process and improve compatibility with emerging VFMs, extending SALT's zero-shot capabilities and improving tracking accuracy. The authors argue that open-sourcing SALT could help scale up LiDAR datasets, paving the way for the next generation of LiDAR foundation models.