GPT-PPG: A GPT-based Foundation Model for Photoplethysmography Signals (2503.08015v1)

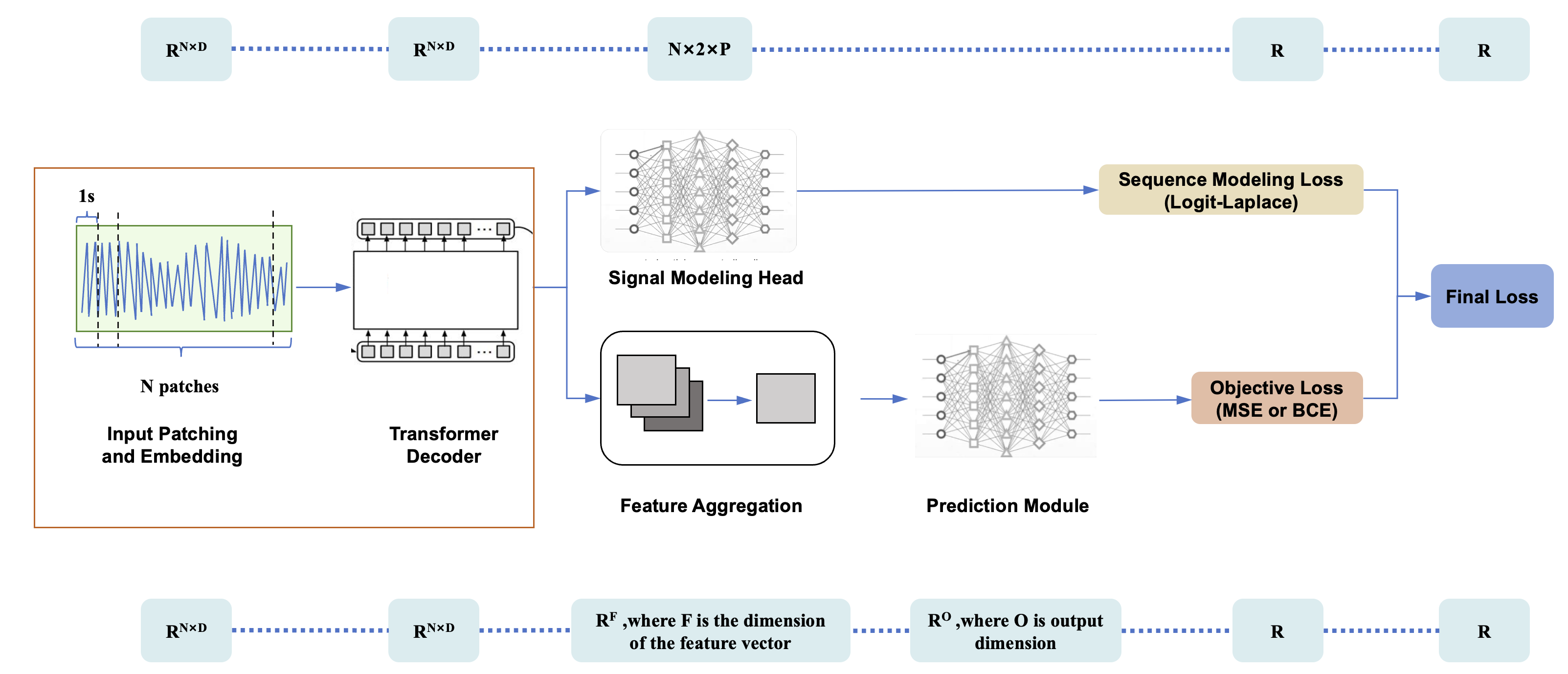

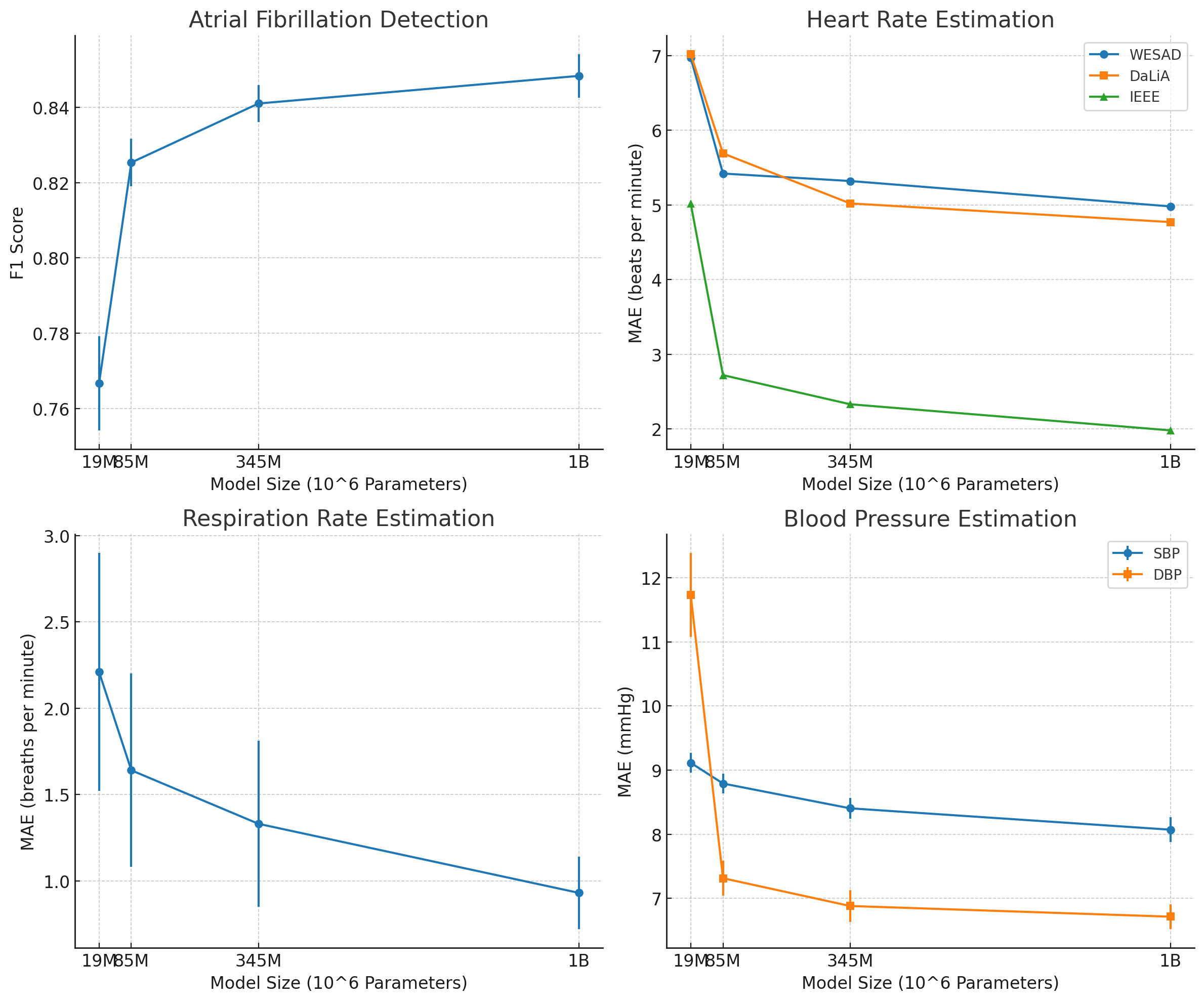

Abstract: This study introduces a novel application of a Generative Pre-trained Transformer (GPT) model tailored for photoplethysmography (PPG) signals, serving as a foundation model for various downstream tasks. Adapting the standard GPT architecture to suit the continuous characteristics of PPG signals, our approach demonstrates promising results. Our models are pre-trained on our extensive dataset that contains more than 200 million 30s PPG samples. We explored different supervised fine-tuning techniques to adapt our model to downstream tasks, resulting in performance comparable to or surpassing current state-of-the-art (SOTA) methods in tasks like atrial fibrillation detection. A standout feature of our GPT model is its inherent capability to perform generative tasks such as signal denoising effectively, without the need for further fine-tuning. This success is attributed to the generative nature of the GPT framework.
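
One common way to feed a continuous signal to a GPT-style model is to cut it into fixed-length patches and train the model to predict each patch from the ones before it. The sketch below illustrates that idea only; the abstract does not spell out the paper's exact tokenization. The 40 Hz sampling rate, the 1-second patch length, and the helper names `split_into_patches` and `next_patch_pairs` are assumptions made for illustration. Only the 30-second segment length comes from the abstract.

```python
import math
from typing import List, Tuple


def split_into_patches(signal: List[float], patch_len: int) -> List[List[float]]:
    """Split a 1-D signal into consecutive, non-overlapping patches.

    Trailing samples that do not fill a whole patch are dropped.
    """
    if patch_len <= 0:
        raise ValueError("patch_len must be positive")
    n_patches = len(signal) // patch_len
    return [signal[i * patch_len:(i + 1) * patch_len] for i in range(n_patches)]


def next_patch_pairs(patches: List[List[float]]) -> List[Tuple[List[float], List[float]]]:
    """Pair each patch with its successor, giving (input, target) pairs
    for a next-patch prediction objective."""
    return list(zip(patches[:-1], patches[1:]))


# Illustrative values: only SEGMENT_SECONDS is taken from the abstract.
SAMPLE_RATE_HZ = 40      # assumed sampling rate
SEGMENT_SECONDS = 30     # 30 s PPG samples, as stated in the abstract
PATCH_LEN = 40           # assumed patch length (1 s at the assumed rate)

# Synthetic stand-in for a PPG segment: a 1.2 Hz sine wave.
segment = [
    math.sin(2 * math.pi * 1.2 * n / SAMPLE_RATE_HZ)
    for n in range(SAMPLE_RATE_HZ * SEGMENT_SECONDS)
]

patches = split_into_patches(segment, PATCH_LEN)
pairs = next_patch_pairs(patches)
print(len(patches), len(patches[0]), len(pairs))  # 30 40 29
```

With these assumed settings, a 30-second segment becomes 30 patches, which yields 29 input/target pairs for next-patch prediction.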

Practical Applications

This section considers how the findings and methods of "GPT-PPG: A GPT-based Foundation Model for Photoplethysmography Signals" translate into practical use cases. The applications are grouped into "Immediate Applications" and "Long-Term Applications," with notes on sector and feasibility for each.

Immediate Applications

These applications can be deployed with existing technology and infrastructure.

Healthcare

- Atrial Fibrillation Detection:

  - Use Case: Integrate into wearable health monitors and home health applications to provide continuous, non-invasive monitoring for atrial fibrillation.

  - Feasibility Considerations: Requires integration with existing wearables and depends on robust data privacy measures.

- Heart Rate Estimation:

  - Use Case: Enhance fitness trackers and smartwatch platforms to offer more accurate heart rate monitoring and health insights during physical activity.

  - Feasibility Considerations: Current hardware capabilities are sufficient; requires collaboration with device manufacturers.

- Blood Pressure Estimation:

  - Use Case: Offer non-invasive blood pressure monitoring in clinical and remote health settings, reducing the need for frequent manual measurements.

  - Feasibility Considerations: Clinical use could face regulatory hurdles, but integration into consumer wearables is technologically feasible today.

- Signal Denoising:

  - Use Case: Improve the accuracy of existing PPG analysis pipelines by adding signal denoising, which is particularly beneficial for devices used in motion-prone environments.

  - Feasibility Considerations: Requires integration with data processing software and existing healthcare data systems.
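
To make the heart rate estimation use case above concrete, here is a minimal classical baseline: pick the candidate heart rate whose frequency carries the most spectral power in a PPG segment. This is not the GPT-PPG method; it is a simple reference point of the kind a learned model would be compared against. The function name `estimate_heart_rate_bpm`, the 40 Hz sampling rate, the 40 to 200 bpm search range, and the synthetic test signal are all assumptions made for illustration.

```python
import math
from typing import List


def estimate_heart_rate_bpm(
    ppg: List[float],
    sample_rate_hz: float,
    min_bpm: int = 40,
    max_bpm: int = 200,
) -> int:
    """Return the candidate heart rate (in bpm) with the highest spectral power.

    Evaluates the discrete Fourier transform at one frequency per integer
    bpm value instead of computing a full FFT.
    """
    mean = sum(ppg) / len(ppg)
    centered = [x - mean for x in ppg]  # remove the DC offset

    best_bpm, best_power = min_bpm, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        omega = 2 * math.pi * (bpm / 60.0) / sample_rate_hz  # radians per sample
        re = sum(x * math.cos(omega * n) for n, x in enumerate(centered))
        im = sum(x * math.sin(omega * n) for n, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 30 s segment at an assumed 40 Hz: a 1.2 Hz pulse (72 bpm)
# plus a weaker second harmonic, loosely mimicking a PPG waveform.
SAMPLE_RATE_HZ = 40
signal = [
    math.sin(2 * math.pi * 1.2 * n / SAMPLE_RATE_HZ)
    + 0.3 * math.sin(2 * math.pi * 2.4 * n / SAMPLE_RATE_HZ)
    for n in range(SAMPLE_RATE_HZ * 30)
]

estimate = estimate_heart_rate_bpm(signal, SAMPLE_RATE_HZ)
print(estimate)  # 72
```

A spectral-peak estimator like this works on clean signals but tends to lock onto motion artifacts during physical activity, which is the setting where the use case above expects a learned model to help.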

Long-Term Applications

These applications entail further research, scaling, or development before they can be realized.

Academia

- Cross-Modal Biosignal Research:

  - Use Case: Extend research capabilities by developing multimodal biosignal models combining PPG with ECG or other physiological data for comprehensive health diagnostics.

  - Feasibility Considerations: Needs multi-disciplinary research collaboration and large-scale data collection efforts.

Energy and Wearable Technology

- Efficient Wearable Devices:

  - Use Case: Explore energy-efficient implementations of AI models in wearable devices so that they can monitor health continuously without frequent recharging.

  - Feasibility Considerations: Requires advancements in low-power AI processing technologies.

Software and Data Systems

- Advanced Biometric Authentication:

  - Use Case: Develop biometric authentication systems leveraging PPG and AI models for secure identification technology in personal devices.

  - Feasibility Considerations: Depends on evolving security protocols and public acceptance of biometric data use.

General Assumptions and Dependencies

- Assumptions:

  - Availability of large-scale data for model training and validation.

  - Consumer willingness to adopt and trust AI-driven health monitoring solutions.

- Dependencies:

  - Continued advancements in AI interpretability and regulatory compliance.

  - Partnerships with healthcare providers and technology firms for implementation and scaling.

Together, these applications suggest that GPT-PPG could be useful beyond traditional healthcare settings, including in biosignal research, wearable hardware design, and biometric security.
