- The paper develops a brain-inspired deep learning framework that integrates deep Hopfield networks with Hadoop to achieve scalable semantic data linking.

- It employs a dual-hemisphere model mimicking parallel learning and sequential reasoning to reinforce or weaken semantic associations.

- Experiments on synthetic data show 100% recall accuracy when test patterns match the most recently reinforced memory, and adaptive forgetting as data patterns evolve.

Brain-Inspired Deep Learning for Semantic Data Linking with Hopfield Networks

Introduction

The paper "Hopfield Networks Meet Big Data: A Brain-Inspired Deep Learning Framework for Semantic Data Linking" (2503.03084) presents a distributed cognitive architecture that integrates deep Hopfield networks with big data processing frameworks to address semantic data linking and data cleaning at scale. The approach is motivated by the dual-hemisphere structure of the human brain, leveraging the right hemisphere for parallel learning and the left for sequential reasoning. The framework is implemented atop Hadoop's MapReduce and HDFS, enabling scalable, parallel processing of heterogeneous datasets. The core innovation is the use of deep Hopfield networks as associative memory, dynamically reinforcing or weakening semantic links based on evolving data usage patterns, thus mimicking biological memory recall and forgetting.

Biological and Computational Foundations

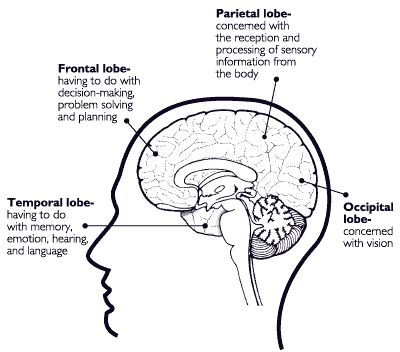

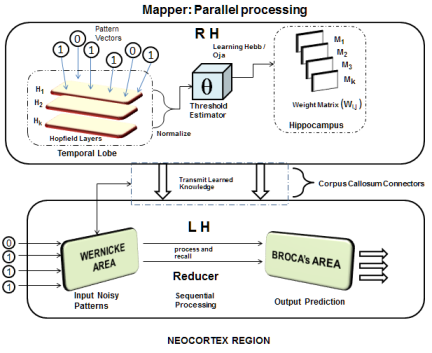

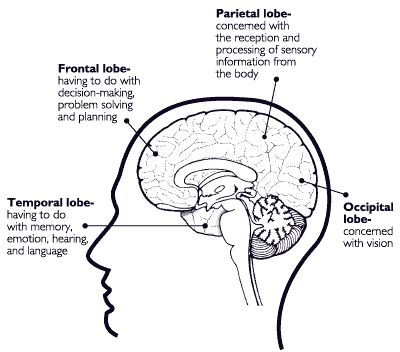

The architecture is grounded in the principles of Biologically Inspired Cognitive Architectures (BICA), particularly the hemispheric specialization of the human brain. The right hemisphere (RH) is modeled as the locus of learning and perception, while the left hemisphere (LH) is responsible for reasoning and recall. The mapping of brain regions to computational modules is explicit: the temporal lobe (RH) is implemented as a layer of Hopfield neurons, the hippocampus as the weight matrix storing associative patterns, and Wernicke's and Broca's areas (LH) as sequential processing and output modules.

Figure 1: Human brain representation, highlighting the functional specialization of lobes and their computational analogs in the proposed architecture.

The architecture's design is informed by neuropsychological evidence on parallel and sequential information processing, as well as the role of associative memory in cognition. The use of Hopfield networks is justified by their content-addressable memory and capacity for pattern completion, which are essential for robust semantic association in noisy, high-dimensional data environments.

System Architecture and Implementation

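The system is architected as a distributed pipeline whose components follow the brain mapping above:

- Right hemisphere (learning): Mapper processes learn usage patterns in parallel, with the temporal lobe modeled as a layer of Hopfield neurons.

- Hippocampus (memory): the Hopfield weight matrix stores the learned associative patterns.

- Left hemisphere (reasoning and recall): a Reducer synthesizes predictions from the distributed memory, with sequential processing and output modules corresponding to Wernicke's and Broca's areas.

- Hadoop: HDFS provides distributed storage and MapReduce provides distributed computation.

The implementation uses the NeuPy library for Hopfield network primitives.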
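Because the Hebbian weight matrix is a sum of per-pattern outer products, its construction splits naturally across mappers. The sketch below simulates that split in plain Python: the hypothetical `map_partial_weights` computes a partial sum for one chunk of usage patterns, and the hypothetical `reduce_weights` adds partial matrices. This is an assumption about how the weight matrix could be assembled, not the authors' Hadoop or NeuPy code (the paper's Reducer is described as synthesizing predictions), and the "map" phase here runs sequentially rather than on a cluster.

```python
from functools import reduce

Pattern = list[int]          # bipolar usage pattern, entries in {-1, +1} (assumed encoding)
Matrix = list[list[float]]


def map_partial_weights(chunk: list[Pattern], n: int) -> Matrix:
    """Hypothetical mapper: sum of off-diagonal outer products x x^T over one chunk."""
    partial = [[0.0] * n for _ in range(n)]
    for x in chunk:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    partial[i][j] += x[i] * x[j]
    return partial


def reduce_weights(a: Matrix, b: Matrix) -> Matrix:
    """Hypothetical reducer: element-wise sum of two partial matrices."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def distributed_hebbian(chunks: list[list[Pattern]], n: int) -> Matrix:
    """Map each chunk, reduce the partials, then apply the 1/n scaling."""
    partials = [map_partial_weights(chunk, n) for chunk in chunks]  # stands in for parallel mappers
    total = reduce(reduce_weights, partials)  # requires at least one chunk
    return [[w / n for w in row] for row in total]


patterns = [
    [1, 1, -1, -1],
    [1, -1, 1, -1],
    [-1, 1, 1, -1],
]
w_split = distributed_hebbian([patterns[:1], patterns[1:]], n=4)
w_single = distributed_hebbian([patterns], n=4)
print(w_split == w_single)  # True
```

Splitting the same patterns into one chunk or several yields an identical matrix, which is what makes this step safe to distribute.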

Learning and Memory Dynamics

The framework incorporates both Hebbian and Oja's learning rules for weight updates. Hebbian learning reinforces co-activation, while Oja's rule introduces normalization to prevent unbounded growth of weights. The system exhibits palimpsest memory: frequently co-occurring patterns are reinforced, while infrequent or outdated associations decay, enabling dynamic adaptation to changing data usage.
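The difference between the two rules is easiest to see on a single linear neuron. The sketch below trains one weight vector on a toy set of correlated 2-D inputs (made up for illustration) with the plain Hebbian rule Δw = ηyx and with Oja's rule Δw = ηy(x − yw), where y = w·x. It is a textbook illustration of the two rules, not the paper's Hopfield-matrix update; how the framework combines them is not reproduced here.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))


def hebbian_step(w: Vector, x: Vector, eta: float) -> Vector:
    """Plain Hebbian rule: dw = eta * y * x, with y = w . x (no bound on |w|)."""
    y = dot(w, x)
    return [wi + eta * y * xi for wi, xi in zip(w, x)]


def oja_step(w: Vector, x: Vector, eta: float) -> Vector:
    """Oja's rule: dw = eta * y * (x - y * w); the -eta * y^2 * w term keeps |w| near 1."""
    y = dot(w, x)
    return [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]


def train(step, w: Vector, data: list[Vector], eta: float, epochs: int) -> Vector:
    for _ in range(epochs):
        for x in data:
            w = step(w, x, eta)
    return w


# Toy, correlated 2-D inputs (illustrative only, not the paper's data).
data = [[1.0, 0.5], [0.8, 0.2], [-1.0, -0.6], [-0.9, -0.4]]
w_hebb = train(hebbian_step, [0.5, 0.5], data, eta=0.01, epochs=500)
w_oja = train(oja_step, [0.5, 0.5], data, eta=0.01, epochs=500)

hebb_norm = math.sqrt(dot(w_hebb, w_hebb))
oja_norm = math.sqrt(dot(w_oja, w_oja))
print(f"Hebbian |w| = {hebb_norm:.3g}")  # grows without bound as training continues
print(f"Oja     |w| = {oja_norm:.3f}")   # settles close to 1
```

The Hebbian norm keeps growing with every epoch, while Oja's normalization term holds the norm near 1, which is the property the framework relies on to prevent unbounded weight growth.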

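The Hopfield weights are set by the Hebbian storage rule:

$$W_{i,j} = \frac{1}{n} \sum_{p=1}^{k} x_i^{p} x_j^{p}$$

where $n$ is the number of neurons and $x_i^{p}$ is the state of neuron $i$ in the $p$-th of the $k$ stored patterns. During recall, neuron states are updated asynchronously (one neuron at a time) for biological fidelity.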
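A minimal discrete Hopfield sketch of the storage rule W_ij = (1/n) Σ_p x_i^p x_j^p and asynchronous recall, written in plain Python rather than with NeuPy (which the paper uses). It assumes bipolar states in {−1, +1}, zero self-connections (W_ii = 0, the usual convention), and a random but seeded update order; the two 8-neuron patterns are orthogonal toy examples, not data from the paper.

```python
import random

Pattern = list[int]          # bipolar states in {-1, +1} (assumed encoding)
Matrix = list[list[float]]


def store(patterns: list[Pattern]) -> Matrix:
    """Hebbian storage: W_ij = (1/n) * sum_p x_i^p x_j^p, with W_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j] / n
    return w


def recall(w: Matrix, probe: Pattern, max_sweeps: int = 20, seed: int = 0) -> Pattern:
    """Asynchronous recall: update one neuron at a time, in random order, until stable."""
    rng = random.Random(seed)
    s = list(probe)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))  # local field uses the latest states
            new_state = 1 if h >= 0 else -1
            if new_state != s[i]:
                s[i] = new_state
                changed = True
        if not changed:  # a full sweep with no flips means a fixed point
            break
    return s


a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
w = store([a, b])

probe = [-1, 1, 1, 1, -1, -1, -1, -1]  # pattern a with its first bit flipped
print(recall(w, probe) == a)  # True: the corrupted bit is restored
```

This is the content-addressable behavior the framework builds on: a partial or noisy cue settles into the nearest stored association.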

Experimental Evaluation

Experiments use synthetic data that simulate usage patterns across k datasets and p usage combinations. The system is evaluated on its ability to recover known associations and adapt to evolving patterns. Key metrics include:

- Recovery Accuracy: Measured by the number of correctly recalled associations (β) and new associations formed (γ) as the memory is exposed to new patterns.

- Cosine Similarity: Quantifies the alignment between test, result, and stored patterns.
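Cosine similarity is cos(u, v) = u·v / (‖u‖‖v‖). For bipolar patterns of length n it reduces to 1 − 2d/n, where d is the Hamming distance, so a single flipped bit in an 8-neuron pattern gives 0.75. The helper below and the three toy vectors are illustrative; how the paper constructs its test, result, and stored vectors is not specified here.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """cos(u, v) = (u . v) / (|u| |v|); 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_product = math.sqrt(sum(x * x for x in u) * sum(y * y for y in v))
    if norm_product == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm_product


stored = [1, 1, 1, 1, -1, -1, -1, -1]
test = [-1, 1, 1, 1, -1, -1, -1, -1]    # stored pattern with one bit flipped
result = [1, 1, 1, 1, -1, -1, -1, -1]   # a recall that restored the flipped bit

print(cosine_similarity(test, stored))    # 0.75
print(cosine_similarity(result, stored))  # 1.0
```

Comparing the test-to-stored and result-to-stored similarities shows how much of the gap the recall step closed.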

Results show that the model achieves 100% recovery accuracy when test patterns match the most recently reinforced memory. As new, dissimilar patterns are introduced, recovery accuracy for earlier associations decreases, reflecting the system's adaptive forgetting. Because associations are reinforced or weakened according to usage, the model favors retaining contextually relevant associations over stale ones, which is intended to improve semantic disambiguation and integration accuracy.
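The palimpsest behavior can be illustrated by adding a decay factor to Hebbian storage: each time a new pattern is stored, the existing weights are first multiplied by `decay`. This multiplicative decay is an assumption chosen for illustration, and the paper's exact forgetting mechanism may differ; the two 8-neuron patterns are toy data. The sketch checks whether each pattern is still a fixed point of the network, meaning it would be recalled exactly.

```python
Pattern = list[int]          # bipolar states in {-1, +1} (assumed encoding)
Matrix = list[list[float]]


def store_with_decay(patterns: list[Pattern], decay: float) -> Matrix:
    """Store patterns in order; before adding each one, scale all weights by `decay`.

    decay = 1.0 is ordinary Hebbian storage; decay < 1.0 makes older imprints fade.
    """
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                w[i][j] *= decay
                if i != j:
                    w[i][j] += x[i] * x[j] / n
    return w


def is_fixed_point(w: Matrix, s: Pattern) -> bool:
    """True if no neuron would flip under the sign update, i.e. s is recalled exactly."""
    n = len(s)
    for i in range(n):
        h = sum(w[i][j] * s[j] for j in range(n))
        if (1 if h >= 0 else -1) != s[i]:
            return False
    return True


old = [1, 1, 1, 1, -1, -1, -1, -1]
new = [-1, 1, 1, 1, 1, -1, -1, -1]  # a later pattern differing from `old` in two positions

for decay in (1.0, 0.5):
    w = store_with_decay([old, new], decay)
    print(decay, is_fixed_point(w, old), is_fixed_point(w, new))
# 1.0 True True
# 0.5 False True
```

Without decay both patterns stay stable; with decay 0.5 the older pattern is overwritten while the most recent one is still recalled exactly. With any decay of at most 0.5, the latest pattern's own term always outweighs the summed, decayed crosstalk from earlier patterns, so it is guaranteed to remain a fixed point.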

Theoretical and Practical Implications

The proposed framework advances the state of the art in several dimensions:

- Scalability: By leveraging MapReduce and HDFS, the system is capable of processing high-dimensional, large-scale datasets, a critical requirement for modern data integration tasks.

- Biological Plausibility: The dual-hemisphere architecture and palimpsest memory dynamics provide a computationally efficient and neuro-inspired approach to associative learning.

- Dynamic Adaptation: The system's ability to reinforce or weaken associations based on usage patterns enables robust handling of evolving data semantics, a key challenge in real-world data lakes and knowledge graphs.

The approach is particularly well-suited for applications in automated data cleaning, semantic integration, and knowledge graph construction, where context-sensitive association and disambiguation are essential.

Limitations and Future Directions

While the framework demonstrates strong performance on synthetic data and offers a compelling biologically inspired paradigm, several limitations remain:

- Pattern Capacity: Classical Hopfield networks have limited storage capacity (roughly 0.14n patterns for a network of n neurons). Scaling to very high-dimensional data may require continuous or modern Hopfield variants.

- Binary Representation: The reliance on binary input vectors may limit expressivity for complex, multi-valued semantic relationships.

- Real-World Validation: Further empirical validation on real-world, heterogeneous datasets is necessary to assess generalization and robustness.

Future work should explore integration with continuous-state Hopfield networks and transformer-based architectures to enhance memory capacity and capture long-range dependencies. Real-time adaptation and online learning in streaming data environments are also promising directions.

Conclusion

This work presents a distributed, brain-inspired deep learning framework for semantic data linking, integrating Hopfield networks with big data processing paradigms. The dual-hemisphere architecture, grounded in neurocognitive principles, enables scalable, adaptive, and context-sensitive association of data attributes. Experimental results validate the model's capacity for dynamic memory reinforcement and forgetting, mirroring biological cognition. The framework offers a promising foundation for future research in scalable, neuro-inspired data integration and semantic inference systems.